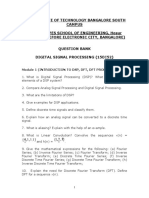

SIGNALS ANALYSIS

http://www.tutorialspoint.com/signals_and_systems/signals_analysis.htm

Copyright tutorialspoint.com

Analogy Between Vectors and Signals

There is a perfect analogy between vectors and signals.

Vector

A vector has both magnitude and direction. The name of a vector is denoted by boldface type and its magnitude by lightface type.

Example: $\mathbf{V}$ is a vector with magnitude $V$. Consider two vectors $\mathbf{V}_1$ and $\mathbf{V}_2$ as shown in the following diagram. Let the component of $\mathbf{V}_1$ along $\mathbf{V}_2$ be $C_{12}\mathbf{V}_2$. This component is obtained by dropping a perpendicular from the end of $\mathbf{V}_1$ onto the vector $\mathbf{V}_2$, as shown in the diagram:

The vector $\mathbf{V}_1$ can be expressed in terms of the vector $\mathbf{V}_2$ as

$$\mathbf{V}_1 = C_{12}\mathbf{V}_2 + \mathbf{V}_e$$

where $\mathbf{V}_e$ is the error vector.

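But this is not the only way of expressing the vector $\mathbf{V}_1$ in terms of $\mathbf{V}_2$. The alternative possibilities are:

$$\mathbf{V}_1 = C_1\mathbf{V}_2 + \mathbf{V}_{e1}$$

$$\mathbf{V}_1 = C_2\mathbf{V}_2 + \mathbf{V}_{e2}$$

Of these, the error vector is smallest for the perpendicular projection, that is, for the component $C_{12}\mathbf{V}_2$. If $C_{12} = 0$, the two vectors are said to be orthogonal.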

Dot Product of Two Vectors

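$$\mathbf{V}_1 \cdot \mathbf{V}_2 = V_1 V_2 \cos\theta$$

where $\theta$ is the angle between $\mathbf{V}_1$ and $\mathbf{V}_2$.

$$\mathbf{V}_1 \cdot \mathbf{V}_2 = \mathbf{V}_2 \cdot \mathbf{V}_1$$

The component of $\mathbf{V}_1$ along $\mathbf{V}_2$ is

$$V_1 \cos\theta = \frac{\mathbf{V}_1 \cdot \mathbf{V}_2}{V_2}$$

From the diagram, the component of $\mathbf{V}_1$ along $\mathbf{V}_2$ is $C_{12} V_2$, so

$$\frac{\mathbf{V}_1 \cdot \mathbf{V}_2}{V_2} = C_{12} V_2$$

$$\Rightarrow C_{12} = \frac{\mathbf{V}_1 \cdot \mathbf{V}_2}{V_2^2}$$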
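As a minimal sketch of the projection described above, the following Python snippet computes the coefficient of the component of one vector along another and checks that the remaining error vector is perpendicular to the second vector. The helper names (`dot`, `component_coefficient`) and the numeric values are illustrative assumptions, not part of the original text.

```python
def dot(a, b):
    """Dot product of two equal-length vectors given as lists."""
    return sum(x * y for x, y in zip(a, b))

def component_coefficient(v1, v2):
    """Return C12 such that C12 * v2 is the projection of v1 onto v2."""
    return dot(v1, v2) / dot(v2, v2)  # dot(v2, v2) is the squared magnitude of v2

# Illustrative vectors (assumed values)
v1 = [3.0, 4.0]
v2 = [2.0, 0.0]

c12 = component_coefficient(v1, v2)
v_e = [a - c12 * b for a, b in zip(v1, v2)]  # error vector V1 - C12 * V2

print(c12)           # 1.5
print(v_e)           # [0.0, 4.0]
print(dot(v_e, v2))  # 0.0, the error vector is perpendicular to v2
```

Any other coefficient would leave an error vector with a nonzero component along `v2`, and therefore a longer one.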

Signal

The concept of orthogonality can be applied to signals. Let us consider two signals $f_1(t)$ and $f_2(t)$. Similar to vectors, you can approximate $f_1(t)$ in terms of $f_2(t)$ as

$$f_1(t) = C_{12} f_2(t) + f_e(t) \quad \text{for } (t_1 < t < t_2)$$

$$\Rightarrow f_e(t) = f_1(t) - C_{12} f_2(t)$$

One possible way of minimizing the error is to minimize its average over the interval $t_1$ to $t_2$:

$$\frac{1}{t_2 - t_1}\int_{t_1}^{t_2} f_e(t)\,dt = \frac{1}{t_2 - t_1}\int_{t_1}^{t_2} [f_1(t) - C_{12} f_2(t)]\,dt$$

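However, this step does not reduce the error to an appreciable extent, because positive and negative errors can cancel in the average. This can be corrected by taking the square of the error function:

$$\varepsilon = \frac{1}{t_2 - t_1}\int_{t_1}^{t_2} [f_e(t)]^2\,dt = \frac{1}{t_2 - t_1}\int_{t_1}^{t_2} [f_1(t) - C_{12} f_2(t)]^2\,dt$$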

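Here $\varepsilon$ is the mean square value of the error signal. To find the value of $C_{12}$ that minimizes the error, set $\frac{d\varepsilon}{dC_{12}} = 0$:

$$\frac{d}{dC_{12}}\left[\frac{1}{t_2 - t_1}\int_{t_1}^{t_2} [f_1(t) - C_{12} f_2(t)]^2\,dt\right] = 0$$

$$\Rightarrow \frac{1}{t_2 - t_1}\int_{t_1}^{t_2}\left[\frac{d}{dC_{12}} f_1^2(t) - \frac{d}{dC_{12}}\,2 f_1(t) f_2(t) C_{12} + \frac{d}{dC_{12}} f_2^2(t) C_{12}^2\right]dt = 0$$

The derivatives of the terms that do not contain $C_{12}$ are zero.

$$\Rightarrow -\int_{t_1}^{t_2} 2 f_1(t) f_2(t)\,dt + 2 C_{12}\int_{t_1}^{t_2} f_2^2(t)\,dt = 0$$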

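Solving for $C_{12}$:

$$C_{12} = \frac{\int_{t_1}^{t_2} f_1(t) f_2(t)\,dt}{\int_{t_1}^{t_2} f_2^2(t)\,dt}$$

If the component $C_{12}$ is zero, then the two signals are said to be orthogonal.

Put $C_{12} = 0$ to get the condition for orthogonality:

$$0 = \frac{\int_{t_1}^{t_2} f_1(t) f_2(t)\,dt}{\int_{t_1}^{t_2} f_2^2(t)\,dt}$$

$$\Rightarrow \int_{t_1}^{t_2} f_1(t) f_2(t)\,dt = 0$$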
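The formula for $C_{12}$ and the orthogonality condition can be checked numerically. The sketch below is only an illustration under stated assumptions: it approximates a square wave by $\sin t$ over $(0, 2\pi)$ and tests whether $\sin t$ and $\cos t$ are orthogonal on the same interval. The signals, the interval, and the midpoint-rule helper `integrate` are choices made for this example, not taken from the original text.

```python
import math

def integrate(g, t1, t2, n=20000):
    """Midpoint-rule approximation of the integral of g over [t1, t2]."""
    h = (t2 - t1) / n
    return h * sum(g(t1 + (i + 0.5) * h) for i in range(n))

def c12(f1, f2, t1, t2):
    """Coefficient of the component of f1 along f2 over (t1, t2)."""
    numerator = integrate(lambda t: f1(t) * f2(t), t1, t2)
    denominator = integrate(lambda t: f2(t) ** 2, t1, t2)
    return numerator / denominator

def square_wave(t):
    """Assumed test signal: +1 on (0, pi), -1 on (pi, 2*pi)."""
    return 1.0 if t < math.pi else -1.0

t1, t2 = 0.0, 2 * math.pi

# Best approximation of the square wave by C12 * sin(t)
coeff = c12(square_wave, math.sin, t1, t2)

# Orthogonality check: integral of sin(t) * cos(t) over one period
inner = integrate(lambda t: math.sin(t) * math.cos(t), t1, t2)

print(coeff)  # close to 4 / pi
print(inner)  # close to 0, so sin and cos are orthogonal on this interval
```

For this choice of signals the integrals can also be done by hand: the numerator is 4 and the denominator is $\pi$, which gives $C_{12} = 4/\pi$.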

Orthogonal Vector Space

A complete set of orthogonal vectors is referred to as an orthogonal vector space. Consider a three-dimensional vector space as shown below:

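Consider a vector $\mathbf{A}$ at a point $(X_1, Y_1, Z_1)$. Consider three unit vectors $(\mathbf{V}_X, \mathbf{V}_Y, \mathbf{V}_Z)$ in the directions of the X, Y and Z axes respectively. Since these unit vectors are mutually orthogonal, they satisfy

$$\mathbf{V}_X \cdot \mathbf{V}_X = \mathbf{V}_Y \cdot \mathbf{V}_Y = \mathbf{V}_Z \cdot \mathbf{V}_Z = 1$$

$$\mathbf{V}_X \cdot \mathbf{V}_Y = \mathbf{V}_Y \cdot \mathbf{V}_Z = \mathbf{V}_Z \cdot \mathbf{V}_X = 0$$

You can write the above conditions as

$$\mathbf{V}_a \cdot \mathbf{V}_b = \begin{cases} 1 & a = b \\ 0 & a \neq b \end{cases}$$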

The vector $\mathbf{A}$ can be represented in terms of its components and unit vectors as

$$\mathbf{A} = X_1\mathbf{V}_X + Y_1\mathbf{V}_Y + Z_1\mathbf{V}_Z \qquad (1)$$

Any vector in this three-dimensional space can be represented in terms of these three unit vectors only.

If you consider an n-dimensional space, then any vector $\mathbf{A}$ in that space can be represented as

$$\mathbf{A} = X_1\mathbf{V}_X + Y_1\mathbf{V}_Y + Z_1\mathbf{V}_Z + \ldots + N_1\mathbf{V}_N \qquad (2)$$

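Since the magnitude of each unit vector is unity, for any vector $\mathbf{A}$:

The component of $\mathbf{A}$ along the X axis = $\mathbf{A} \cdot \mathbf{V}_X$

The component of $\mathbf{A}$ along the Y axis = $\mathbf{A} \cdot \mathbf{V}_Y$

The component of $\mathbf{A}$ along the Z axis = $\mathbf{A} \cdot \mathbf{V}_Z$

Similarly, for an n-dimensional space, the component of $\mathbf{A}$ along some G axis is

$$C_G = \mathbf{A} \cdot \mathbf{V}_G \qquad (3)$$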

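Substitute equation 2 in equation 3.

$$\begin{aligned}
C_G &= (X_1\mathbf{V}_X + Y_1\mathbf{V}_Y + Z_1\mathbf{V}_Z + \ldots + G_1\mathbf{V}_G + \ldots + N_1\mathbf{V}_N) \cdot \mathbf{V}_G \\
&= X_1\mathbf{V}_X \cdot \mathbf{V}_G + Y_1\mathbf{V}_Y \cdot \mathbf{V}_G + Z_1\mathbf{V}_Z \cdot \mathbf{V}_G + \ldots + G_1\mathbf{V}_G \cdot \mathbf{V}_G + \ldots + N_1\mathbf{V}_N \cdot \mathbf{V}_G \\
&= G_1 \quad \text{since } \mathbf{V}_G \cdot \mathbf{V}_G = 1
\end{aligned}$$

If $\mathbf{V}_G \cdot \mathbf{V}_G \neq 1$, i.e. $\mathbf{V}_G \cdot \mathbf{V}_G = K$, then

$$\mathbf{A} \cdot \mathbf{V}_G = G_1 \mathbf{V}_G \cdot \mathbf{V}_G = G_1 K$$

$$G_1 = \frac{\mathbf{A} \cdot \mathbf{V}_G}{K}$$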
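To illustrate the case where the basis vectors are orthogonal but not of unit length, here is a small Python sketch that recovers each component by dividing the dot product by $K$. The basis, the vector `A`, and the helper `dot` are assumed values chosen for the example.

```python
def dot(a, b):
    """Dot product of two equal-length vectors given as lists."""
    return sum(x * y for x, y in zip(a, b))

# Mutually orthogonal basis vectors that are NOT unit length (assumed values)
basis = [
    [2.0, 0.0, 0.0],
    [0.0, 3.0, 0.0],
    [0.0, 0.0, 0.5],
]

A = [4.0, -6.0, 1.0]

# G1 = (A . V_G) / K, with K = V_G . V_G for each basis vector
components = [dot(A, v) / dot(v, v) for v in basis]

# Rebuild A from its components to confirm the representation
rebuilt = [sum(c * v[i] for c, v in zip(components, basis)) for i in range(3)]

print(components)  # [2.0, -2.0, 2.0]
print(rebuilt)     # [4.0, -6.0, 1.0]
```

Dividing by $K$ is what compensates for the length of each basis vector. With unit vectors $K = 1$ and the component is just the dot product.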

Orthogonal Signal Space

Let us consider a set of n mutually orthogonal functions $x_1(t), x_2(t), \ldots, x_n(t)$ over the interval $t_1$ to $t_2$. As these functions are orthogonal to each other, any two signals $x_j(t)$, $x_k(t)$ have to satisfy the orthogonality condition, i.e.

$$\int_{t_1}^{t_2} x_j(t) x_k(t)\,dt = 0 \quad \text{where } j \neq k$$

$$\text{Let } \int_{t_1}^{t_2} x_k^2(t)\,dt = K_k$$

A function $f(t)$ can be approximated in this orthogonal signal space by adding its components along the mutually orthogonal signals, i.e.

$$f(t) = C_1 x_1(t) + C_2 x_2(t) + \ldots + C_n x_n(t) + f_e(t) = \sum_{r=1}^{n} C_r x_r(t) + f_e(t)$$

$$\Rightarrow f_e(t) = f(t) - \sum_{r=1}^{n} C_r x_r(t)$$

The mean square error is

$$\varepsilon = \frac{1}{t_2 - t_1}\int_{t_1}^{t_2} [f_e(t)]^2\,dt = \frac{1}{t_2 - t_1}\int_{t_1}^{t_2}\left[f(t) - \sum_{r=1}^{n} C_r x_r(t)\right]^2 dt$$

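The components that minimize the mean square error can be found from

$$\frac{d\varepsilon}{dC_1} = \frac{d\varepsilon}{dC_2} = \ldots = \frac{d\varepsilon}{dC_k} = 0$$

Let us consider $\frac{d\varepsilon}{dC_k} = 0$:

$$\frac{d}{dC_k}\left[\frac{1}{t_2 - t_1}\int_{t_1}^{t_2}\left[f(t) - \sum_{r=1}^{n} C_r x_r(t)\right]^2 dt\right] = 0$$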

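The derivatives of all terms that do not contain $C_k$ are zero, and the cross terms between different functions vanish on integration because the functions are mutually orthogonal. In the summation, only the $r = k$ term remains:

$$-2\int_{t_1}^{t_2} f(t) x_k(t)\,dt + 2 C_k \int_{t_1}^{t_2} x_k^2(t)\,dt = 0$$

$$\Rightarrow C_k = \frac{\int_{t_1}^{t_2} f(t) x_k(t)\,dt}{\int_{t_1}^{t_2} x_k^2(t)\,dt}$$

$$\Rightarrow \int_{t_1}^{t_2} f(t) x_k(t)\,dt = C_k K_k$$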
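The expression for $C_k$ can be tried on a concrete orthogonal set. The sketch below assumes the first three Legendre polynomials, which are mutually orthogonal on $(-1, 1)$, as the set $x_k(t)$ and takes $f(t) = t^2$. The choice of set, the function names, and the midpoint-rule helper `integrate` are assumptions made for illustration only.

```python
def integrate(g, t1, t2, n=20000):
    """Midpoint-rule approximation of the integral of g over [t1, t2]."""
    h = (t2 - t1) / n
    return h * sum(g(t1 + (i + 0.5) * h) for i in range(n))

# Assumed orthogonal set on (-1, 1): the first three Legendre polynomials
def p0(t):
    return 1.0

def p1(t):
    return t

def p2(t):
    return 0.5 * (3.0 * t * t - 1.0)

def f(t):
    """Assumed signal to be expanded."""
    return t * t

def coefficient(f, x_k, t1, t2):
    """C_k = (integral of f * x_k) / K_k, with K_k = integral of x_k squared."""
    k_k = integrate(lambda t: x_k(t) ** 2, t1, t2)
    return integrate(lambda t: f(t) * x_k(t), t1, t2) / k_k

t1, t2 = -1.0, 1.0
C = [coefficient(f, x_k, t1, t2) for x_k in (p0, p1, p2)]

print(C)  # approximately [1/3, 0, 2/3]
```

With these coefficients, $\tfrac{1}{3}\,p_0(t) + \tfrac{2}{3}\,p_2(t) = t^2$ exactly, so for this particular $f(t)$ the error function is zero.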

Mean Square Error

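The average of the square of the error function $f_e(t)$ is called the mean square error. It is denoted by $\varepsilon$ (epsilon).

$$\begin{aligned}
\varepsilon &= \frac{1}{t_2 - t_1}\int_{t_1}^{t_2} [f_e(t)]^2\,dt \\
&= \frac{1}{t_2 - t_1}\int_{t_1}^{t_2}\left[f(t) - \sum_{r=1}^{n} C_r x_r(t)\right]^2 dt \\
&= \frac{1}{t_2 - t_1}\left[\int_{t_1}^{t_2} f^2(t)\,dt + \sum_{r=1}^{n} C_r^2 \int_{t_1}^{t_2} x_r^2(t)\,dt - 2\sum_{r=1}^{n} C_r \int_{t_1}^{t_2} x_r(t) f(t)\,dt\right]
\end{aligned}$$

You know that $C_r^2 \int_{t_1}^{t_2} x_r^2(t)\,dt = C_r \int_{t_1}^{t_2} x_r(t) f(t)\,dt = C_r^2 K_r$, so

$$\begin{aligned}
\varepsilon &= \frac{1}{t_2 - t_1}\left[\int_{t_1}^{t_2} f^2(t)\,dt + \sum_{r=1}^{n} C_r^2 K_r - 2\sum_{r=1}^{n} C_r^2 K_r\right] \\
&= \frac{1}{t_2 - t_1}\left[\int_{t_1}^{t_2} f^2(t)\,dt - \sum_{r=1}^{n} C_r^2 K_r\right] \\
&= \frac{1}{t_2 - t_1}\left[\int_{t_1}^{t_2} f^2(t)\,dt - (C_1^2 K_1 + C_2^2 K_2 + \ldots + C_n^2 K_n)\right]
\end{aligned}$$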

The above equation is used to evaluate the mean square error.
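As a rough numerical check of this result, the sketch below evaluates the mean square error in two ways: from the closed-form expression in terms of $C_r$ and $K_r$, and by integrating the squared error function directly. It assumes a square wave approximated by $\sin t$, $\sin 2t$ and $\sin 3t$ over $(0, 2\pi)$. The signal, the orthogonal set, and all helper names are assumptions for this example.

```python
import math

def integrate(g, t1, t2, n=20000):
    """Midpoint-rule approximation of the integral of g over [t1, t2]."""
    h = (t2 - t1) / n
    return h * sum(g(t1 + (i + 0.5) * h) for i in range(n))

def square_wave(t):
    """Assumed test signal: +1 on (0, pi), -1 on (pi, 2*pi)."""
    return 1.0 if t < math.pi else -1.0

def make_sine(k):
    """Return the function sin(k * t), one member of the assumed orthogonal set."""
    return lambda t: math.sin(k * t)

t1, t2 = 0.0, 2 * math.pi
basis = [make_sine(k) for k in (1, 2, 3)]

# K_r = integral of x_r squared, C_r = (integral of f * x_r) / K_r
K = [integrate(lambda t, x=x: x(t) ** 2, t1, t2) for x in basis]
C = [integrate(lambda t, x=x: square_wave(t) * x(t), t1, t2) / k_r
     for x, k_r in zip(basis, K)]

def approximation(t):
    """Sum of C_r * x_r(t) over the orthogonal set."""
    return sum(c * x(t) for c, x in zip(C, basis))

# Mean square error from the closed-form expression
energy = integrate(lambda t: square_wave(t) ** 2, t1, t2)
mse_formula = (energy - sum(c * c * k_r for c, k_r in zip(C, K))) / (t2 - t1)

# Mean square error by integrating the squared error function directly
mse_direct = integrate(
    lambda t: (square_wave(t) - approximation(t)) ** 2, t1, t2
) / (t2 - t1)

print(mse_formula, mse_direct)  # the two values should agree closely
```

For this example the integrals can also be evaluated by hand, giving $\varepsilon = 1 - \frac{80}{9\pi^2}$. Adding more odd harmonics to the set would reduce the error further.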

Closed and Complete Set of Orthogonal Functions

Let us consider a set of n mutually orthogonal functions $x_1(t), x_2(t), \ldots, x_n(t)$ over the interval $t_1$ to $t_2$. This set is called a closed and complete set when there exists no nonzero function $f(t)$ satisfying the condition

$$\int_{t_1}^{t_2} f(t) x_k(t)\,dt = 0 \quad \text{for } k = 1, 2, \ldots$$

If a function $f(t)$ does satisfy this equation for $k = 1, 2, \ldots$, then $f(t)$ is said to be orthogonal to each and every function of the orthogonal set. The set is incomplete without $f(t)$, and it becomes a closed and complete set when $f(t)$ is included.

$f(t)$ can be approximated with this orthogonal set by adding the components along the mutually orthogonal signals, i.e.

$$f(t) = C_1 x_1(t) + C_2 x_2(t) + \ldots + C_n x_n(t) + f_e(t)$$

If the infinite series $C_1 x_1(t) + C_2 x_2(t) + \ldots + C_n x_n(t) + \ldots$ converges to $f(t)$, then the mean square error is zero.

Orthogonality in Complex Functions

If $f_1(t)$ and $f_2(t)$ are two complex functions, then $f_1(t)$ can be expressed in terms of $f_2(t)$ as

$$f_1(t) \approx C_{12} f_2(t) \quad \text{(with negligible error)}$$

where

$$C_{12} = \frac{\int_{t_1}^{t_2} f_1(t) f_2^*(t)\,dt}{\int_{t_1}^{t_2} |f_2(t)|^2\,dt}$$

and $f_2^*(t)$ is the complex conjugate of $f_2(t)$.

If $f_1(t)$ and $f_2(t)$ are orthogonal, then $C_{12} = 0$:

$$\frac{\int_{t_1}^{t_2} f_1(t) f_2^*(t)\,dt}{\int_{t_1}^{t_2} |f_2(t)|^2\,dt} = 0$$

$$\Rightarrow \int_{t_1}^{t_2} f_1(t) f_2^*(t)\,dt = 0$$

The above equation represents the orthogonality condition for complex functions.
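The complex case can be illustrated with complex exponentials. The sketch below assumes $f_1(t) = e^{jt}$ and $f_2(t) = e^{j2t}$ over $(0, 2\pi)$ and evaluates $C_{12}$ with the conjugate in the numerator. The signals, the interval, and the helper names are assumptions for this example.

```python
import cmath
import math

def integrate(g, t1, t2, n=20000):
    """Midpoint-rule approximation of the integral of g over [t1, t2]."""
    h = (t2 - t1) / n
    return h * sum(g(t1 + (i + 0.5) * h) for i in range(n))

def c12(f1, f2, t1, t2):
    """Component coefficient for complex signals, conjugating f2 in the numerator."""
    numerator = integrate(lambda t: f1(t) * f2(t).conjugate(), t1, t2)
    denominator = integrate(lambda t: abs(f2(t)) ** 2, t1, t2)
    return numerator / denominator

def e1(t):
    """Assumed signal exp(j * t)."""
    return cmath.exp(1j * t)

def e2(t):
    """Assumed signal exp(j * 2 * t)."""
    return cmath.exp(2j * t)

t1, t2 = 0.0, 2 * math.pi

print(abs(c12(e1, e2, t1, t2)))               # close to 0: the two signals are orthogonal
print(c12(lambda t: 3 * e2(t), e2, t1, t2))   # close to 3: a scaled copy of e2
```

Without the conjugate, the integral of $f_2(t) \cdot f_2(t)$ for a complex exponential over a full period would be zero, so the denominator and the numerator must use $|f_2(t)|^2$ and $f_2^*(t)$ respectively.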