MOS RAM

In 1957, Frosch and Derick manufactured the first silicon dioxide field-effect transistors at Bell

Labs, the first transistors in which drain and source were adjacent at the surface.[12] Subsequently,

in 1960, a team demonstrated a working MOSFET at Bell Labs.[13][14] This led to the development

of metal–oxide–semiconductor (MOS) memory by John Schmidt at Fairchild Semiconductor in

1964.[10][15] In addition to higher speeds, MOS semiconductor memory was cheaper and

consumed less power than magnetic core memory.[10] The development of silicon-gate MOS

integrated circuit (MOS IC) technology by Federico Faggin at Fairchild in 1968 enabled the

production of MOS memory chips.[16] MOS memory overtook magnetic core memory as the

dominant memory technology in the early 1970s.[10]

Integrated bipolar static random-access memory (SRAM) was invented by Robert H. Norman at

Fairchild Semiconductor in 1963.[17] It was followed by the development of MOS SRAM by John

Schmidt at Fairchild in 1964.[10] SRAM became an alternative to magnetic-core memory, but

required six MOS transistors for each bit of data.[18] Commercial use of SRAM began in 1965,

when IBM introduced the SP95 memory chip for the System/360 Model 95.[11]

Dynamic random-access memory (DRAM) allowed replacement of a 4- or 6-transistor latch

circuit by a single transistor for each memory bit, greatly increasing memory density at the cost

of volatility. Data was stored in the tiny capacitance of each transistor and had to be refreshed

every few milliseconds, before the charge could leak away.
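The need for refresh can be illustrated with a toy model. The following is a minimal sketch, not a description of any real device: it assumes the stored charge decays exponentially, and the time constant (`tau_ms`), the sense threshold, and all class and function names are hypothetical values chosen for illustration.

```python
import math


class LeakyCell:
    """Toy model of one DRAM bit: a capacitor whose charge leaks away."""

    def __init__(self, tau_ms=10.0, threshold=0.5):
        self.tau_ms = tau_ms        # assumed leakage time constant (illustrative)
        self.threshold = threshold  # assumed sense threshold, fraction of full charge
        self.charge = 0.0

    def write(self, bit):
        # Fully charge the capacitor for a 1, discharge it for a 0.
        self.charge = 1.0 if bit else 0.0

    def tick(self, dt_ms):
        # Exponential decay of the stored charge over dt_ms milliseconds.
        self.charge *= math.exp(-dt_ms / self.tau_ms)

    def read(self):
        return 1 if self.charge > self.threshold else 0

    def refresh(self):
        # Refresh = read the bit and write it back at full strength.
        self.write(self.read())


def survives(refresh_interval_ms, total_ms=64.0, step_ms=1.0):
    """Return True if a stored 1 is still readable after total_ms."""
    cell = LeakyCell()
    cell.write(1)
    elapsed = 0.0
    since_refresh = 0.0
    while elapsed < total_ms:
        cell.tick(step_ms)
        elapsed += step_ms
        since_refresh += step_ms
        if refresh_interval_ms is not None and since_refresh >= refresh_interval_ms:
            cell.refresh()
            since_refresh = 0.0
    return cell.read() == 1
```

Under these assumed parameters, a stored 1 decays below the threshold after about 7 ms, so `survives(4.0)` keeps the bit while `survives(8.0)` and `survives(None)` (no refresh at all) lose it.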

Toshiba's Toscal BC-1411 electronic calculator, which was introduced in 1965,[19][20][21] used a

form of capacitor bipolar DRAM, storing 180 bits of data in discrete memory cells consisting of

germanium bipolar transistors and capacitors.[20][21] Capacitors had also been used for earlier

memory schemes, such as the drum of the Atanasoff–Berry Computer, the Williams tube and the

Selectron tube. While it offered higher speeds than magnetic-core memory, bipolar DRAM could

not compete with the lower price of the then-dominant magnetic-core memory.[22]

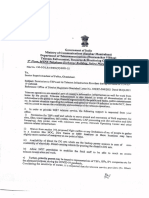

CMOS 1-megabit (Mbit) DRAM chip, one of the last models

developed by VEB Carl Zeiss Jena, in 1989

In 1966, Robert Dennard invented the modern DRAM architecture, in which there is a single MOS

transistor per capacitor.[18] While examining the characteristics of MOS technology, he found it

was capable of building capacitors, and that storing a charge or no charge on the MOS capacitor

could represent the 1 and 0 of a bit, while the MOS transistor could control writing the charge to

the capacitor. This led to his development of a single-transistor DRAM memory cell.[18] In 1967,

Dennard filed a patent under IBM for a single-transistor DRAM memory cell, based on MOS

technology.[23] The first commercial DRAM IC chip was the Intel 1103, which was manufactured

on an 8 μm MOS process with a capacity of 1 kbit, and was released in 1970.[10][24][25]
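The single-transistor cell described above, in which a capacitor holds the bit and a transistor controls access to it, can be sketched in a few lines. This is a purely logical illustration under stated assumptions: the names `word_line` and `bit_line` follow common DRAM terminology but do not appear in the text above, the class is hypothetical, and analog effects such as leakage and sensing are ignored.

```python
class OneTransistorCell:
    """Logical sketch of a one-transistor, one-capacitor memory cell."""

    def __init__(self):
        # Capacitor state: charged represents 1, uncharged represents 0.
        self.stored_charge = False

    def access(self, word_line, bit_line=None):
        """Access the cell.

        word_line: True switches the access transistor on (assumed naming).
        bit_line:  value to write (0 or 1), or None to read (assumed naming).
        Returns the stored bit, or None if the transistor is off.
        """
        if not word_line:
            # Transistor off: the capacitor is isolated and cannot be changed.
            return None
        if bit_line is not None:
            # Transistor on: the bit line charges or discharges the capacitor.
            self.stored_charge = bool(bit_line)
        return 1 if self.stored_charge else 0


cell = OneTransistorCell()
cell.access(word_line=True, bit_line=1)    # write a 1
cell.access(word_line=False, bit_line=0)   # ignored: transistor is off
stored = cell.access(word_line=True)       # read back the stored bit
```

Because the write with `word_line=False` is ignored, `stored` still holds the 1 written first, which mirrors how the transistor gates access to the capacitor.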

The earliest DRAMs were often synchronized with the CPU clock (clocked) and were used with

early microprocessors. In the mid-1970s, DRAMs moved to an asynchronous design, but in the

1990s returned to synchronous operation.[26][27] In 1992, Samsung released the KM48SL2000, which

had a capacity of 16 Mbit[28][29] and was mass-produced in 1993.[28] The first commercial DDR

SDRAM (double data rate SDRAM) memory chip was Samsung's 64 Mbit DDR SDRAM chip,

released in June 1998.[30] GDDR (graphics DDR) is a form of DDR SGRAM (synchronous

graphics RAM), which was first released by Samsung as a 16 Mbit memory chip in 1998.[31]