Multiple Regression

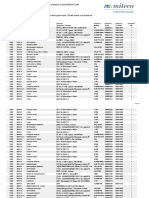

The document introduces multiple regression analysis, focusing on the relationship between one dependent variable and multiple independent variables. It provides an example of pie sales influenced by price and advertising, along with statistical outputs from Excel and Minitab, including regression equations and significance tests. Key metrics such as R-squared values and confidence intervals are discussed to evaluate the model's effectiveness and the significance of individual predictors.
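As a rough illustration of the kind of model described above, the sketch below fits a two-predictor least-squares regression and reports R-squared. The numbers are made up for illustration and are not the document's pie sales data; the variable names and the helper `fit_ols` are hypothetical, and NumPy's `lstsq` stands in for the Excel and Minitab output the document uses.

```python
import numpy as np

# Hypothetical observations, invented for this sketch (NOT the document's data).
price = np.array([5.0, 5.5, 6.0, 6.5, 7.0, 7.5, 8.0, 8.5])
advertising = np.array([3.0, 4.0, 3.5, 4.5, 3.0, 4.0, 3.5, 4.5])
noise = np.array([2.0, -1.0, 1.5, -2.5, 0.5, -0.5, 1.0, -1.0])
sales = 300.0 - 25.0 * price + 70.0 * advertising + noise


def fit_ols(y, *predictors):
    """Fit y = b0 + b1*x1 + ... by ordinary least squares; return (coef, r_squared)."""
    # Design matrix: a column of ones for the intercept, then one column per predictor.
    X = np.column_stack([np.ones_like(y), *predictors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    fitted = X @ coef
    ss_res = np.sum((y - fitted) ** 2)      # unexplained variation
    ss_tot = np.sum((y - y.mean()) ** 2)    # total variation
    return coef, 1.0 - ss_res / ss_tot


coef, r_squared = fit_ols(sales, price, advertising)
print("intercept, price slope, advertising slope:", coef)
print("R-squared:", r_squared)
```

Each slope is read as the estimated change in the dependent variable for a one-unit change in that predictor with the other predictor held fixed. Significance tests and confidence intervals for the coefficients need the standard errors as well, which this sketch does not compute.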

Uploaded by pavi.premsai.spam

Copyright: © All Rights Reserved
