0% found this document useful (0 votes)

22 views4 pagesHierarchical Clustering Case Study

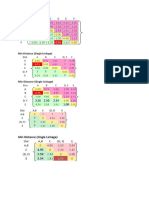

Hierarchical clustering is a technique that creates a tree structure of clusters without requiring a predefined number of clusters, commonly used in customer segmentation, image analysis, and bioinformatics. It can be approached in two ways: bottom-up (agglomerative) which merges clusters, and top-down (divisive) which splits clusters, both visualized through dendrograms. The method is beneficial for understanding hierarchical relationships in data.

Uploaded by

vishalprajapati6212Copyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PDF, TXT or read online on Scribd

0% found this document useful (0 votes)

22 views4 pagesHierarchical Clustering Case Study

Hierarchical clustering is a technique that creates a tree structure of clusters without requiring a predefined number of clusters, commonly used in customer segmentation, image analysis, and bioinformatics. It can be approached in two ways: bottom-up (agglomerative) which merges clusters, and top-down (divisive) which splits clusters, both visualized through dendrograms. The method is beneficial for understanding hierarchical relationships in data.

Uploaded by

vishalprajapati6212Copyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PDF, TXT or read online on Scribd

/ 4