Hierarchical Clustering in Machine Learning

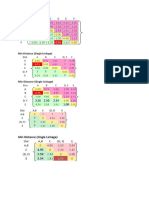

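Hierarchical clustering is an unsupervised machine learning algorithm that groups unlabeled data points into a hierarchy of nested clusters, usually visualized as a dendrogram. It has two main approaches: agglomerative (bottom-up), which starts with every point in its own cluster and repeatedly merges the closest pair, and divisive (top-down), which starts with a single cluster and repeatedly splits it. Agglomerative is the more commonly used of the two. The algorithm does not require the number of clusters to be chosen in advance, and it relies on a linkage method (for example single, complete, or average linkage) to measure the distance between clusters.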
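The agglomerative (bottom-up) approach described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the source document's implementation: the function name `agglomerative`, the sample `points`, and the restriction to single and complete linkage with Euclidean distance are all assumptions made for the example.

```python
from itertools import combinations
from math import dist  # Euclidean distance, Python 3.8+


def agglomerative(points, linkage="single"):
    """Bottom-up clustering sketch (hypothetical helper).

    Returns the merge history as (cluster_id_a, cluster_id_b, distance)
    tuples, which is the information a dendrogram is drawn from.
    """
    # Single linkage uses the closest pair of points between two clusters,
    # complete linkage uses the farthest pair.
    agg = {"single": min, "complete": max}[linkage]

    def cluster_distance(a, b):
        return agg(dist(p, q) for p in clusters[a] for q in clusters[b])

    # Start with every point in its own cluster.
    clusters = {i: [p] for i, p in enumerate(points)}
    merges = []
    next_id = len(points)

    while len(clusters) > 1:
        # Find the pair of clusters with the smallest linkage distance.
        a, b = min(combinations(clusters, 2),
                   key=lambda pair: cluster_distance(*pair))
        merges.append((a, b, cluster_distance(a, b)))
        # Merge the pair into a new cluster with a fresh id.
        clusters[next_id] = clusters.pop(a) + clusters.pop(b)
        next_id += 1

    return merges


# Illustrative data: two tight pairs that are far apart from each other.
points = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0)]
merges = agglomerative(points, linkage="single")
for a, b, d in merges:
    print(f"merge {a} + {b} at distance {d:.2f}")
```

No cluster count is passed in: the full merge history is produced first, and a specific number of clusters can be read off afterwards by stopping before the last merges. The brute-force pair search is fine for a small illustration but scales poorly, so a library implementation would be the practical choice for real data.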

Uploaded by karthikmarketing2004

© All Rights Reserved
