Complete Operating Systems Interview Preparation G (24 pages)

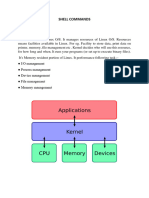

This guide offers a comprehensive overview of operating systems topics for interview preparation. It covers definitions, functions, types, structures, process management, CPU scheduling, synchronization, and deadlock. It includes real-life examples, interview questions, and explanations of key concepts such as process states, scheduling algorithms, and synchronization mechanisms. The document aims to equip candidates with the essential knowledge and practical insight needed for OS-related interviews.

Uploaded by ladaninand23

Copyright © All Rights Reserved