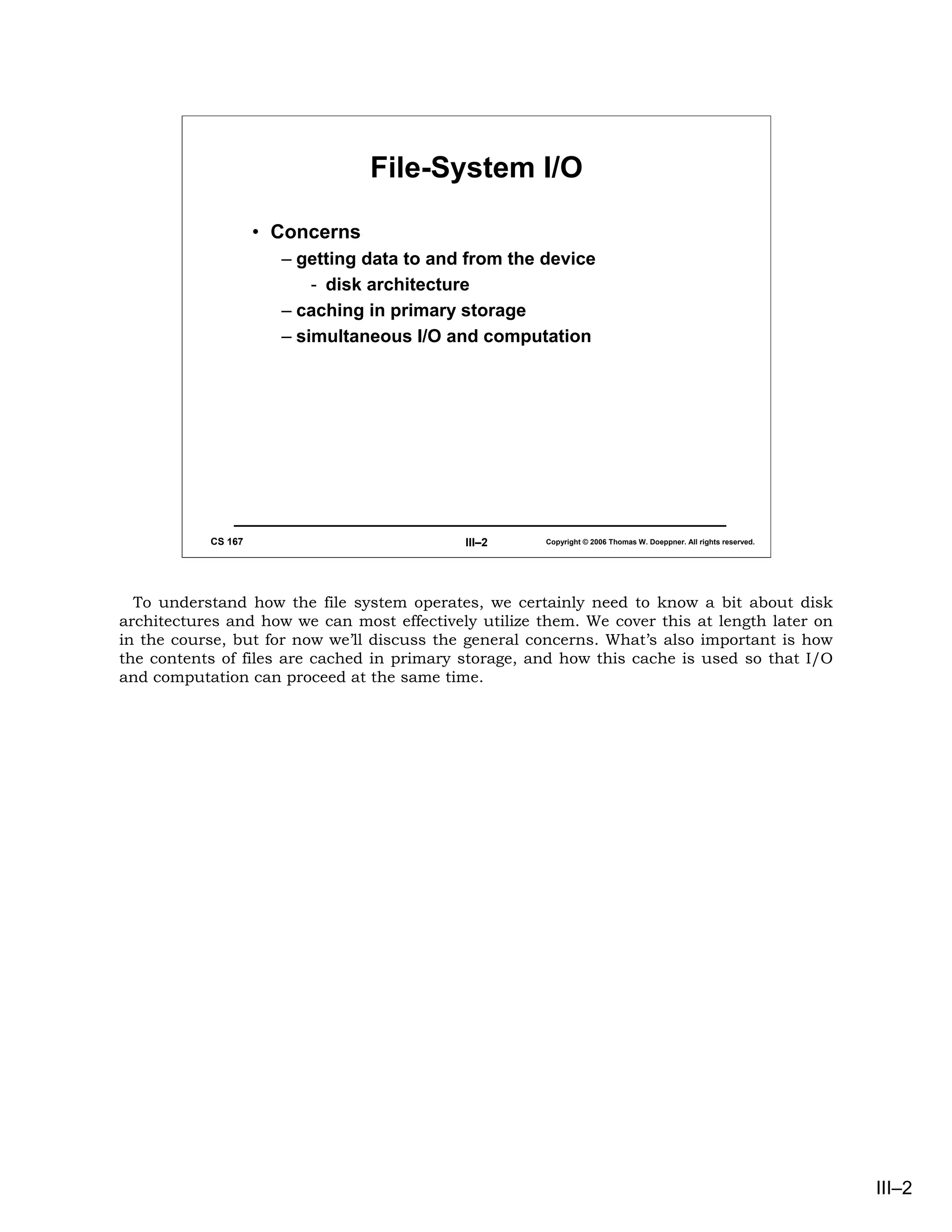

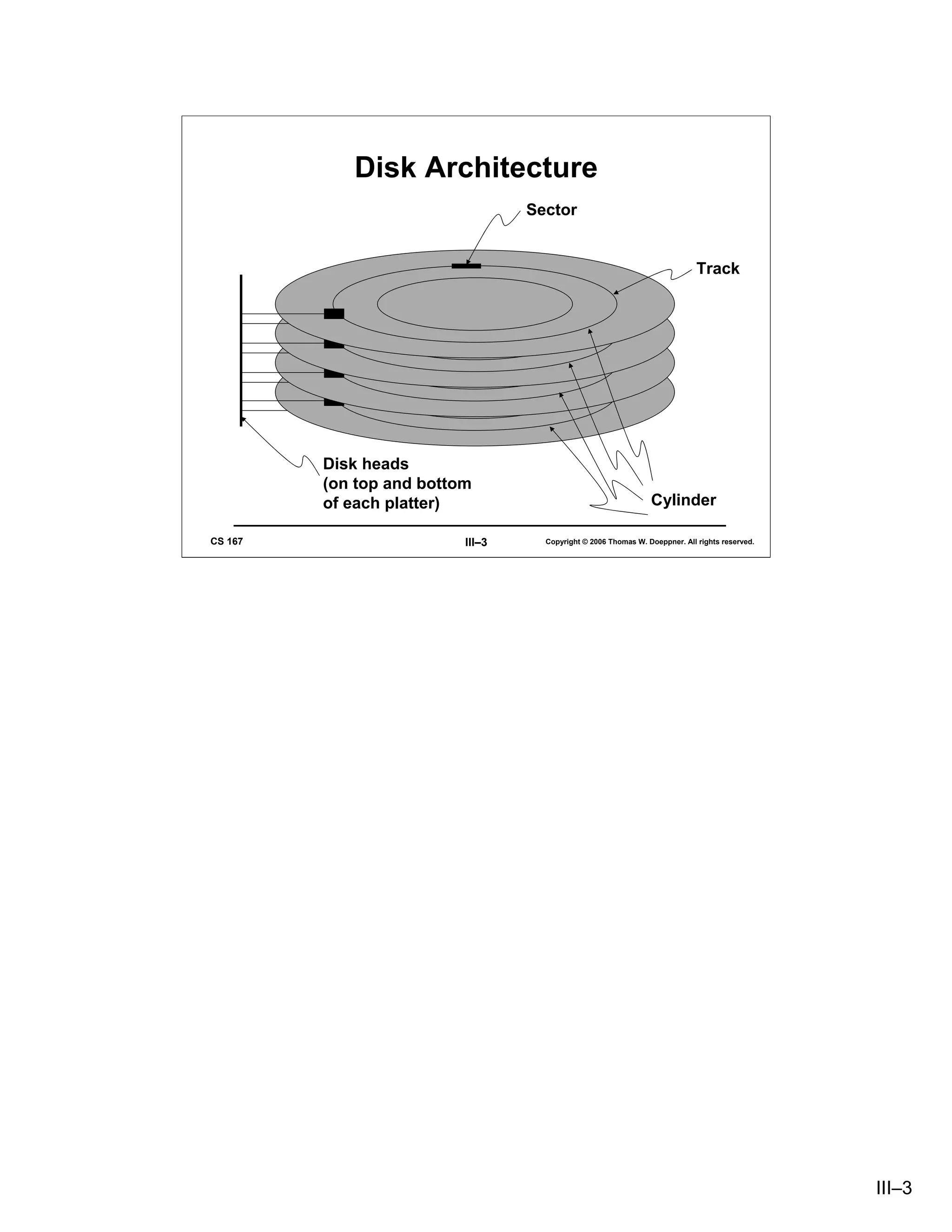

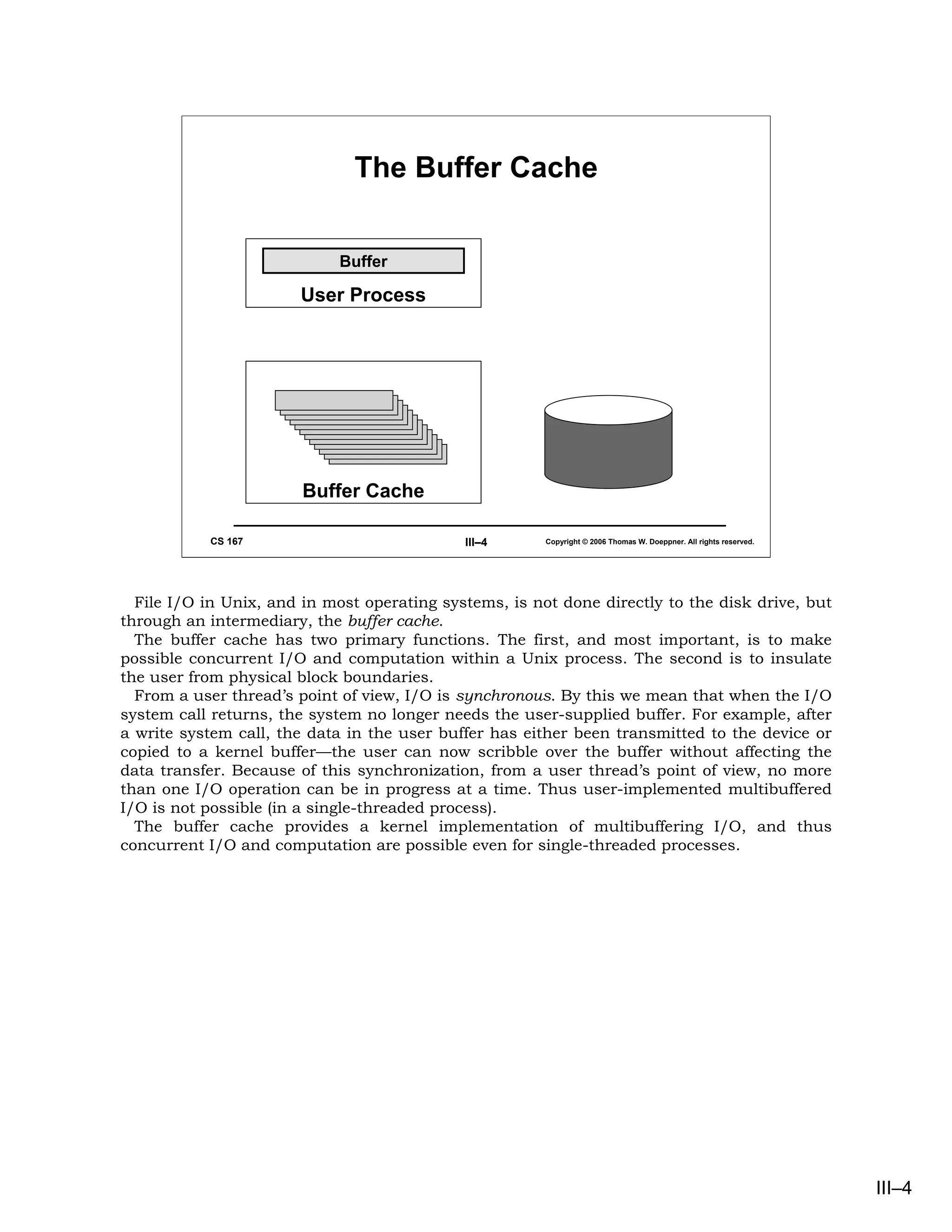

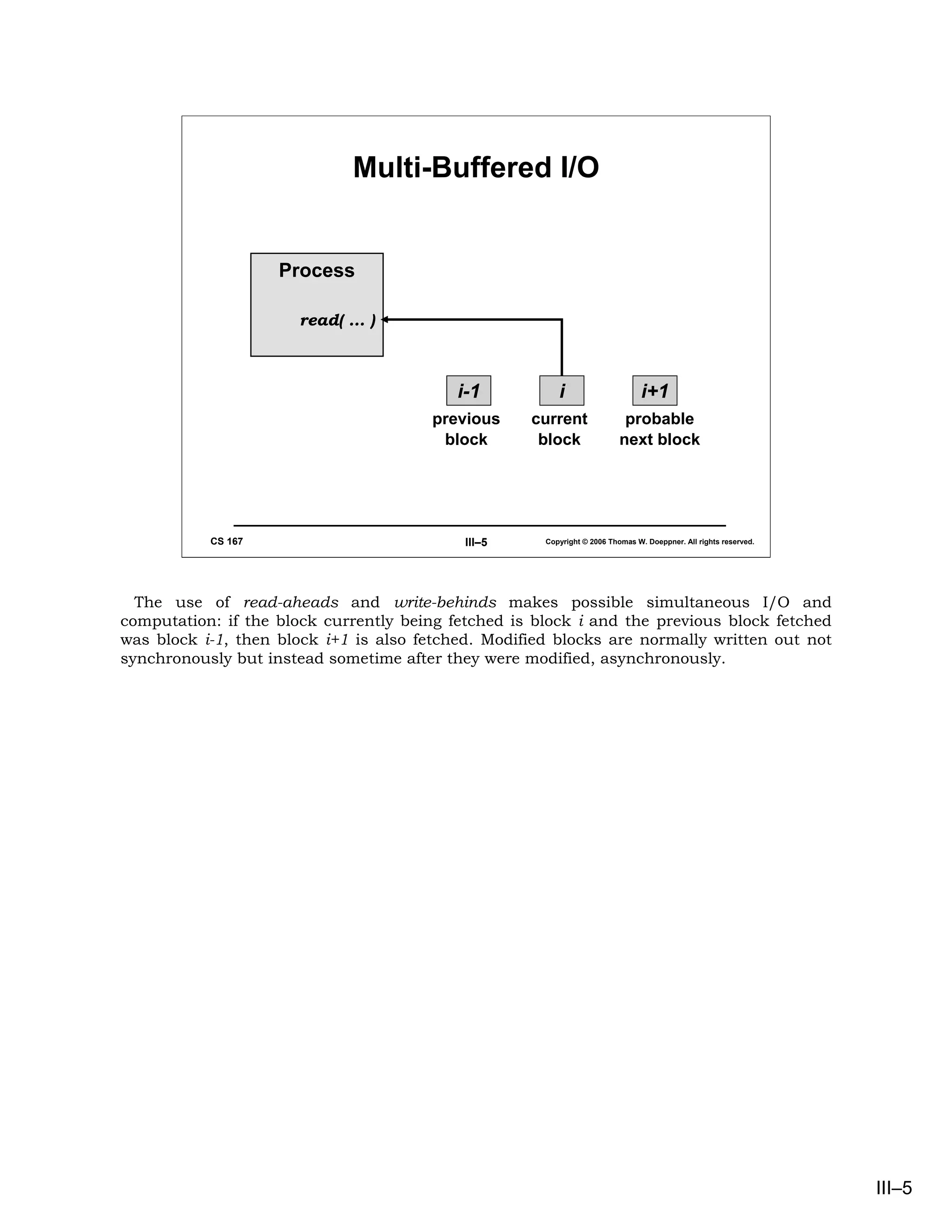

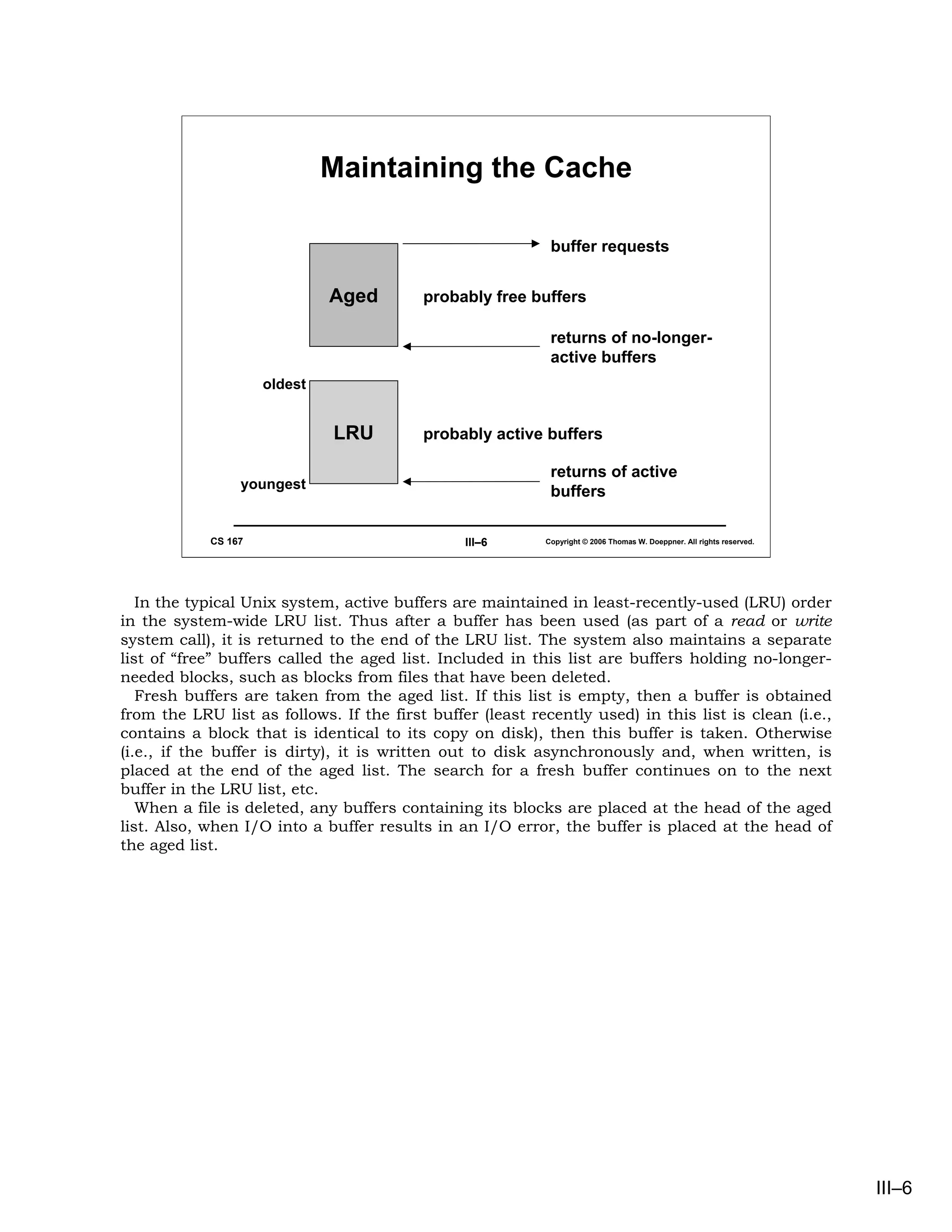

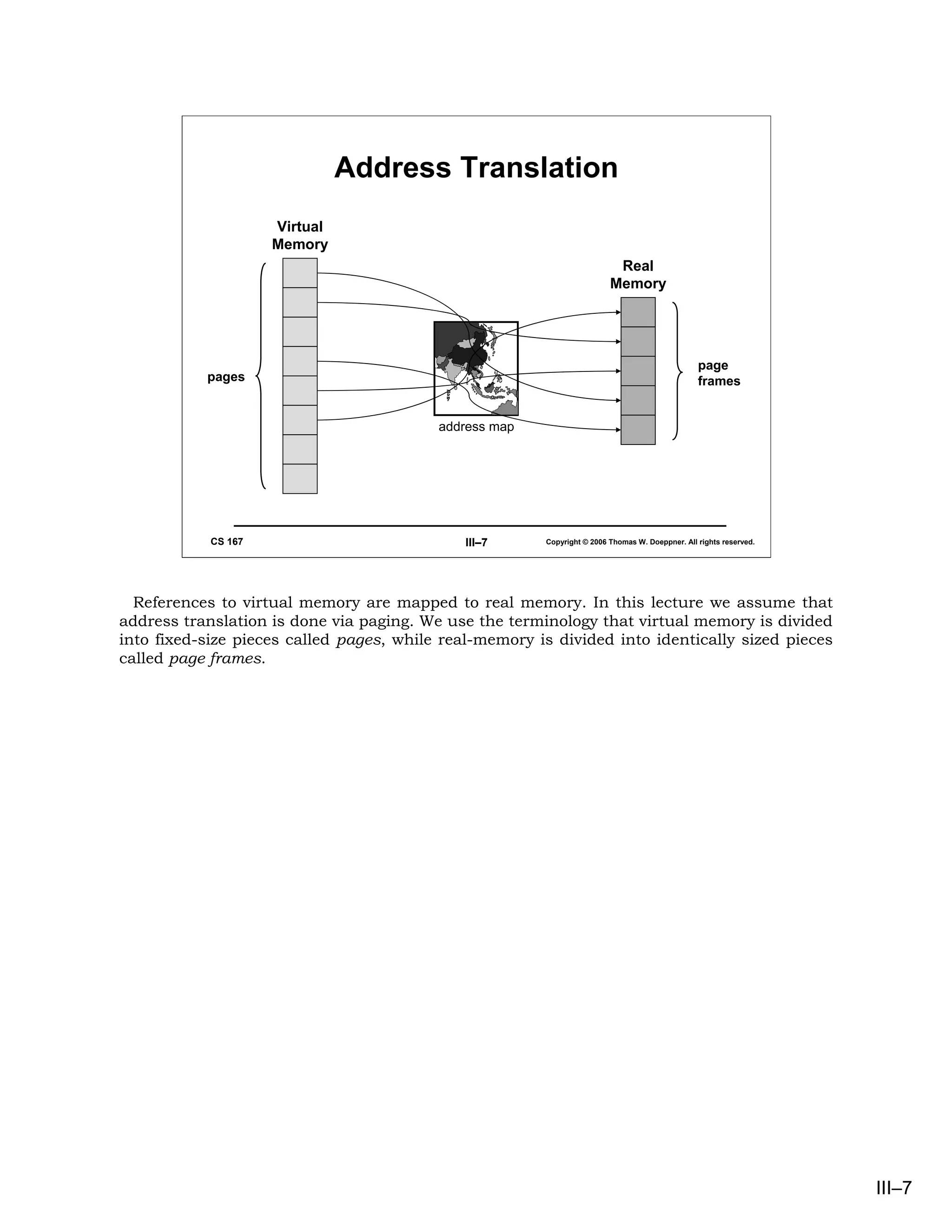

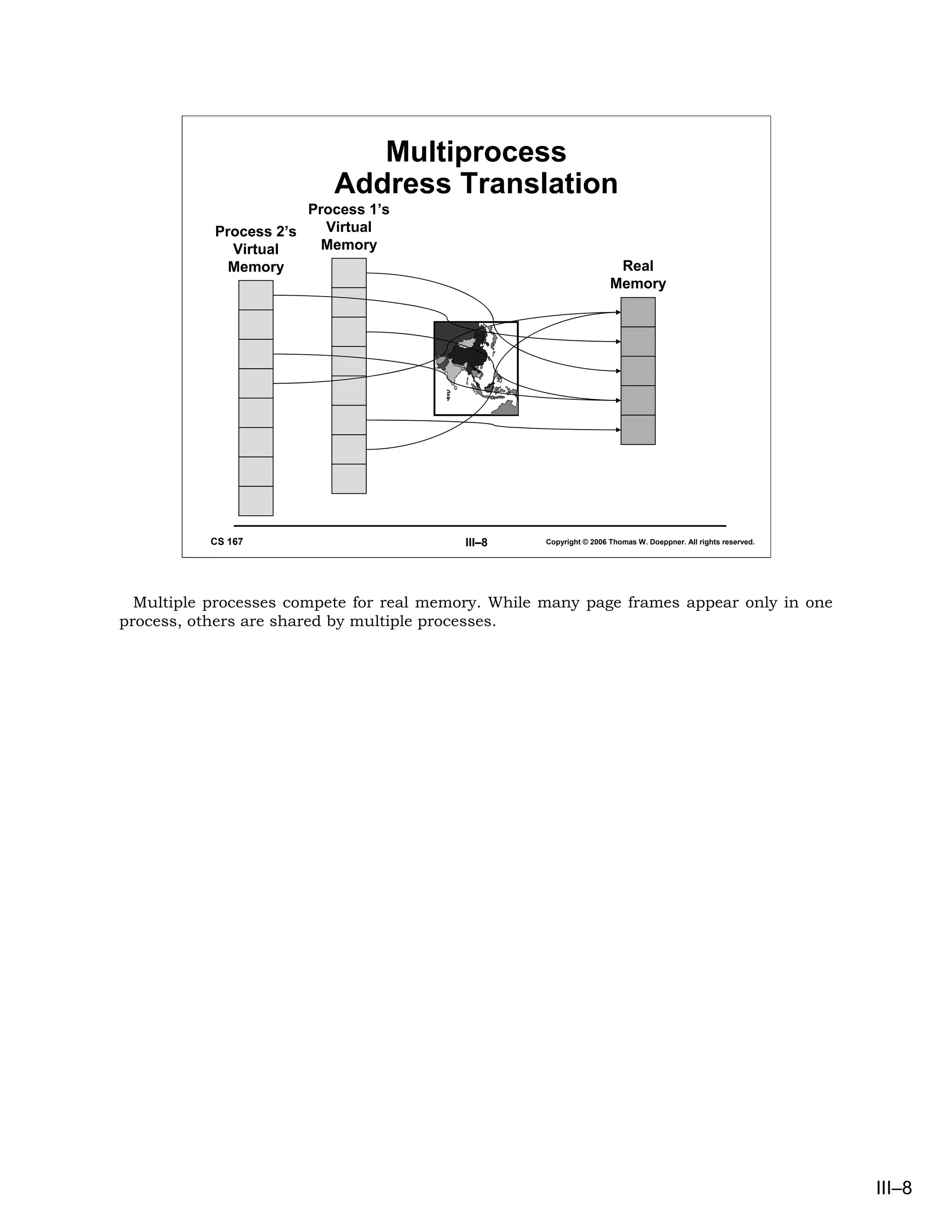

This document provides an overview of file system I/O in Unix systems. It discusses how file contents are cached in primary storage using a buffer cache to allow for concurrent I/O and computation. It describes disk architecture with sectors, tracks, and cylinders and how the buffer cache uses read-aheads and write-behinds to enable simultaneous I/O and processing. It also covers how the buffer cache maintains buffers in a least-recently-used order and how virtual memory addresses are translated to physical memory pages.
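The summary notes that the buffer cache keeps its buffers in least-recently-used order. The C fragment below is a minimal sketch of that replacement policy under clearly stated assumptions. The cache size NBUF and the names struct buf, cache_init, and cache_get are hypothetical. Recency is tracked with a per-buffer logical timestamp rather than the linked list a real kernel would more likely maintain. No disk I/O is performed.

```c
#include <assert.h>

#define NBUF 3  /* hypothetical number of buffers, kept tiny for illustration */

/* One cache buffer: which disk block it holds and when it was last used. */
struct buf {
    int blockno;              /* -1 marks an empty buffer */
    unsigned long lastused;   /* logical time of the most recent access */
};

static struct buf cache[NBUF];
static unsigned long now;     /* logical clock, advanced on every access */

/* Mark every buffer empty and reset the clock. */
void cache_init(void) {
    for (int i = 0; i < NBUF; i++) {
        cache[i].blockno = -1;
        cache[i].lastused = 0;
    }
    now = 0;
}

/* Look up blockno. Returns 1 on a hit, 0 on a miss. On a miss, the
   least-recently-used buffer (an empty one, if any) is reassigned to
   blockno; a real buffer cache would first write it back if dirty and
   then read the new block from disk, which this sketch omits. */
int cache_get(int blockno) {
    assert(blockno >= 0);     /* -1 is reserved for empty buffers */
    int victim = 0;
    now++;
    for (int i = 0; i < NBUF; i++) {
        if (cache[i].blockno == blockno) {
            cache[i].lastused = now;   /* hit: now the most recently used */
            return 1;
        }
        if (cache[i].lastused < cache[victim].lastused)
            victim = i;                /* oldest buffer seen so far */
    }
    cache[victim].blockno = blockno;
    cache[victim].lastused = now;
    return 0;
}
```

With three buffers, the access sequence 1, 2, 3, 1, 4 makes block 4 replace block 2, because block 1 was touched more recently. This sketch scans every buffer on each access. Keeping the buffers on a list ordered by recency, plus a hash table for lookup, would avoid that linear scan.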

Mapped Files

• Traditional File I/O

    char buf[BigEnough];
    fd = open(file, O_RDWR);
    for (i = 0; i < n_recs; i++) {
        read(fd, buf, sizeof(buf));
        use(buf);
    }

• Mapped File I/O

    char (*MappedFile)[BigEnough];  /* MappedFile[i] is the i-th BigEnough-byte record */
    fd = open(file, O_RDWR);
    MappedFile = mmap(..., fd, ...);
    for (i = 0; i < n_recs; i++)
        use(MappedFile[i]);

CS 167 III–11 Copyright © 2006 Thomas W. Doeppner. All rights reserved.

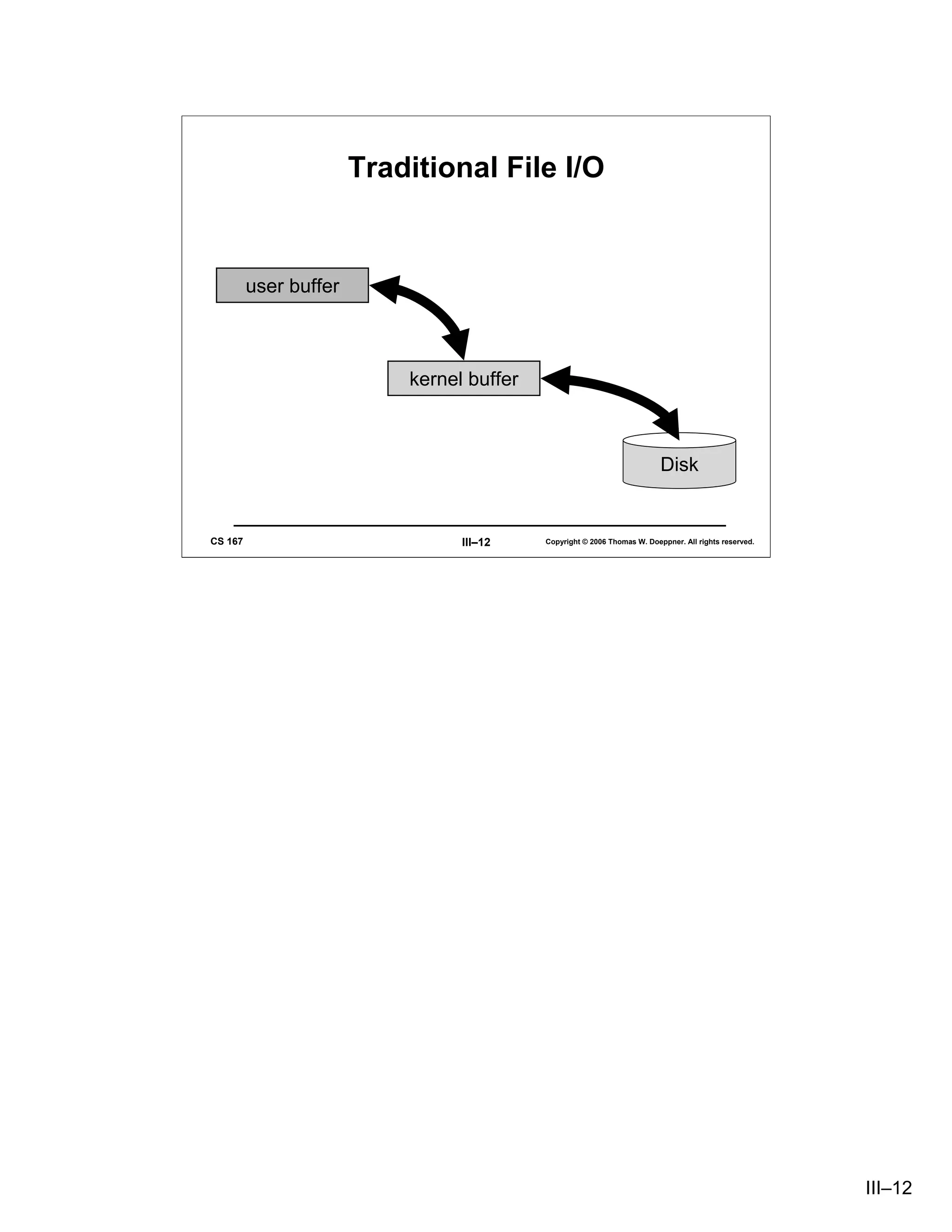

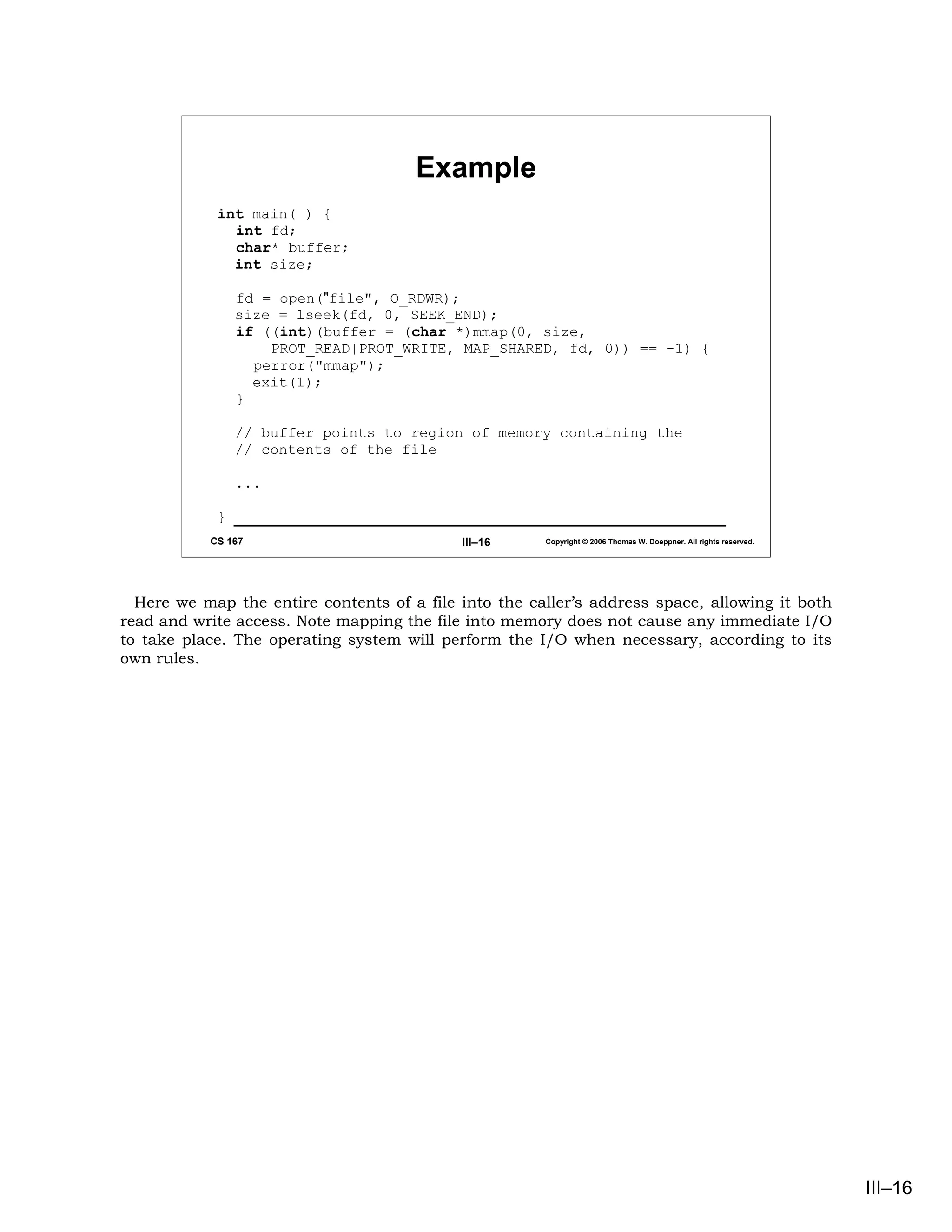

Traditional I/O involves explicit calls to read and write, which means that data is accessed via a buffer. In fact, two buffers are usually involved: data is copied between a user buffer and a kernel buffer, and transferred between the kernel buffer and the I/O device.
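To make the two-buffer path concrete, here is a hedged, runnable version of the slide's traditional loop. It assumes fixed-size records of REC_SIZE bytes, a hypothetical stand-in for BigEnough. The slide's use() is replaced by a hypothetical use_record() that simply sums a record's bytes. Each read() call copies one record from the kernel's buffer into the user buffer buf.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <sys/types.h>
#include <unistd.h>

#define REC_SIZE 64  /* hypothetical record size, standing in for BigEnough */

/* Hypothetical stand-in for the slide's use(): sums the bytes of one record. */
static unsigned long use_record(const unsigned char *rec) {
    unsigned long sum = 0;
    for (size_t j = 0; j < REC_SIZE; j++)
        sum += rec[j];
    return sum;
}

/* Traditional I/O: each read() copies one record out of the kernel's
   buffer into buf in user space. Returns the total of use_record() over
   n_recs records, or -1 on an open error, read error, EOF, or short read. */
long sum_records_read(const char *path, size_t n_recs) {
    assert(path != NULL);
    unsigned char buf[REC_SIZE];      /* the user-space buffer */
    long total = 0;
    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;
    for (size_t i = 0; i < n_recs; i++) {
        ssize_t n = read(fd, buf, sizeof(buf));
        if (n != (ssize_t)sizeof(buf)) {
            close(fd);
            return -1;
        }
        total += (long)use_record(buf);
    }
    close(fd);
    return total;
}
```

Every record is therefore copied across the user/kernel boundary once per read() call, in addition to being transferred between the device and the kernel buffer.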

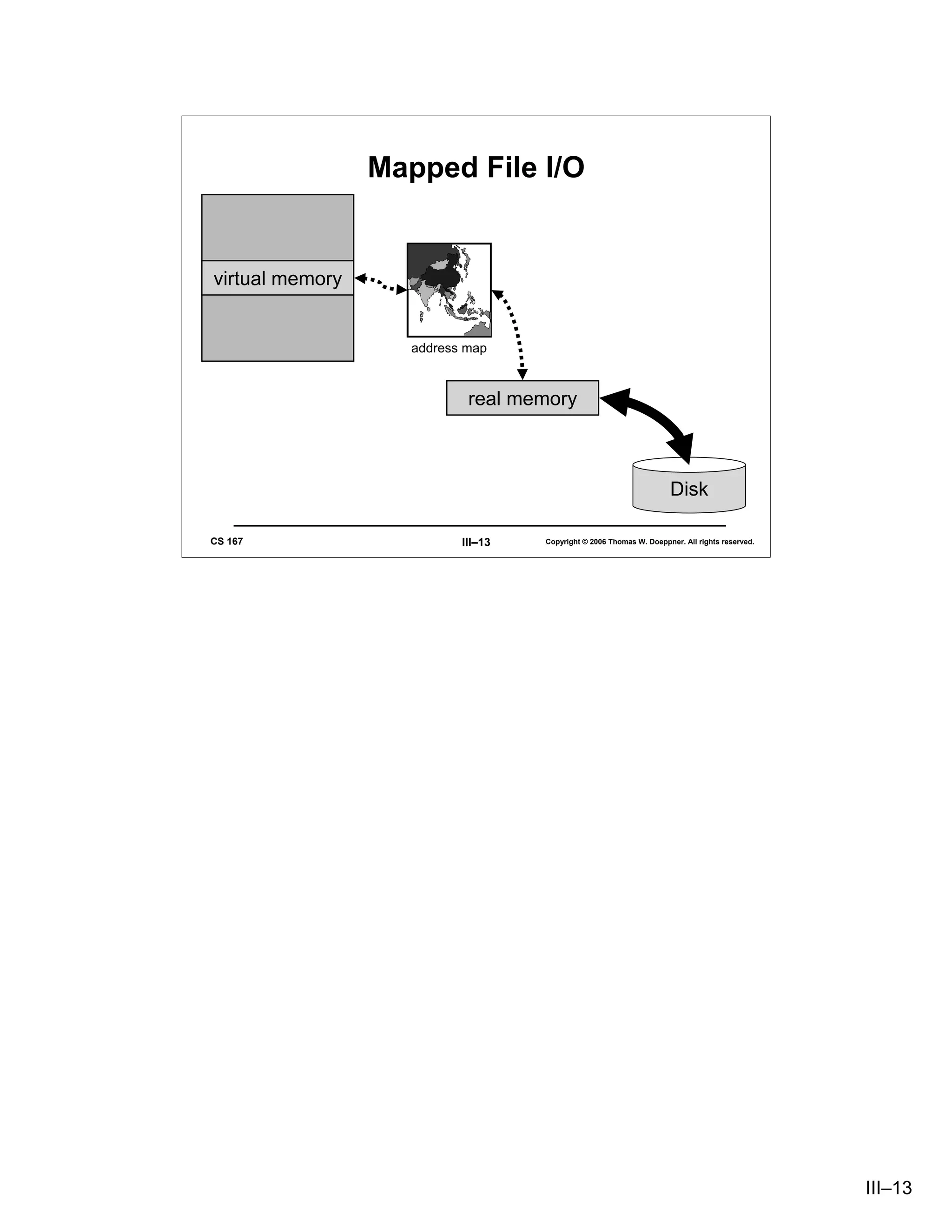

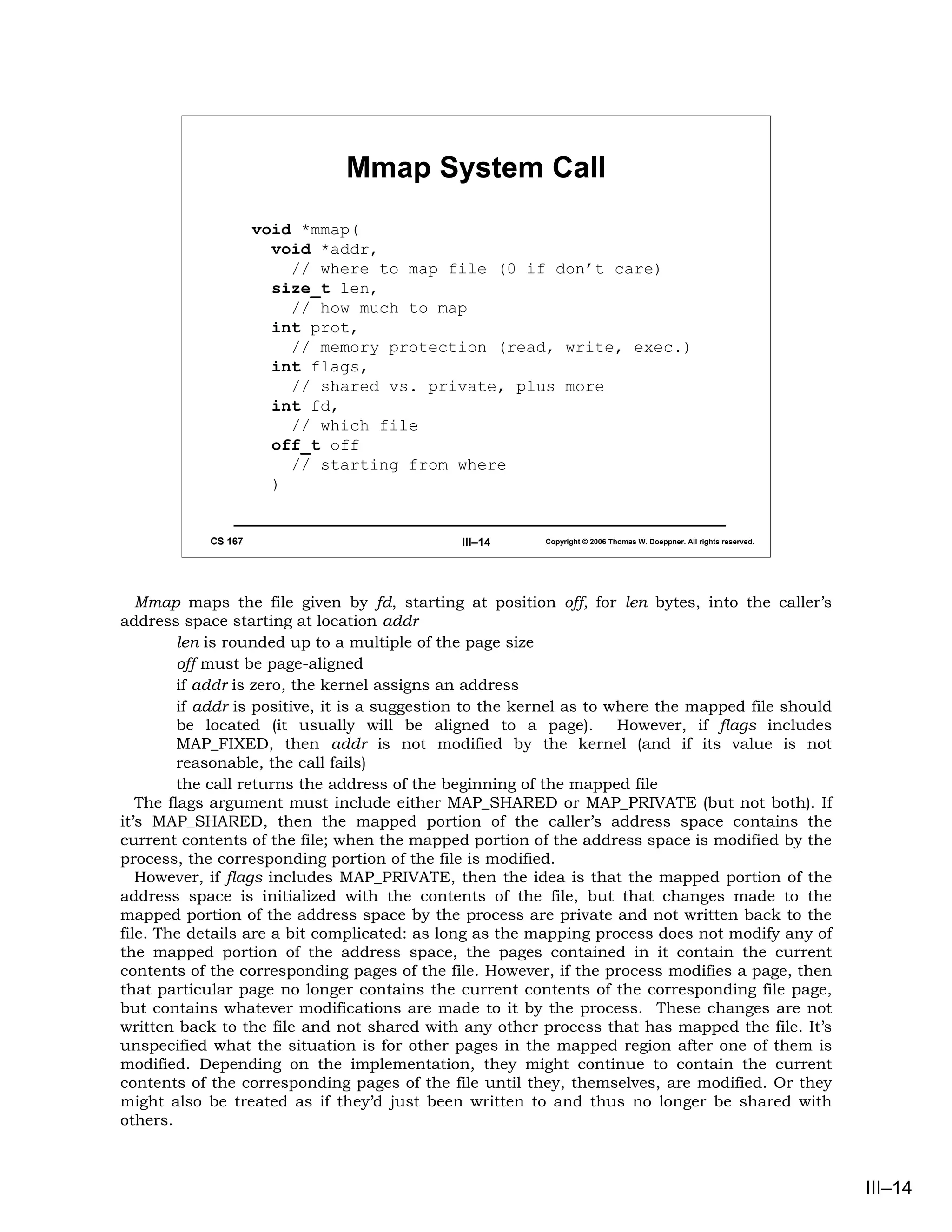

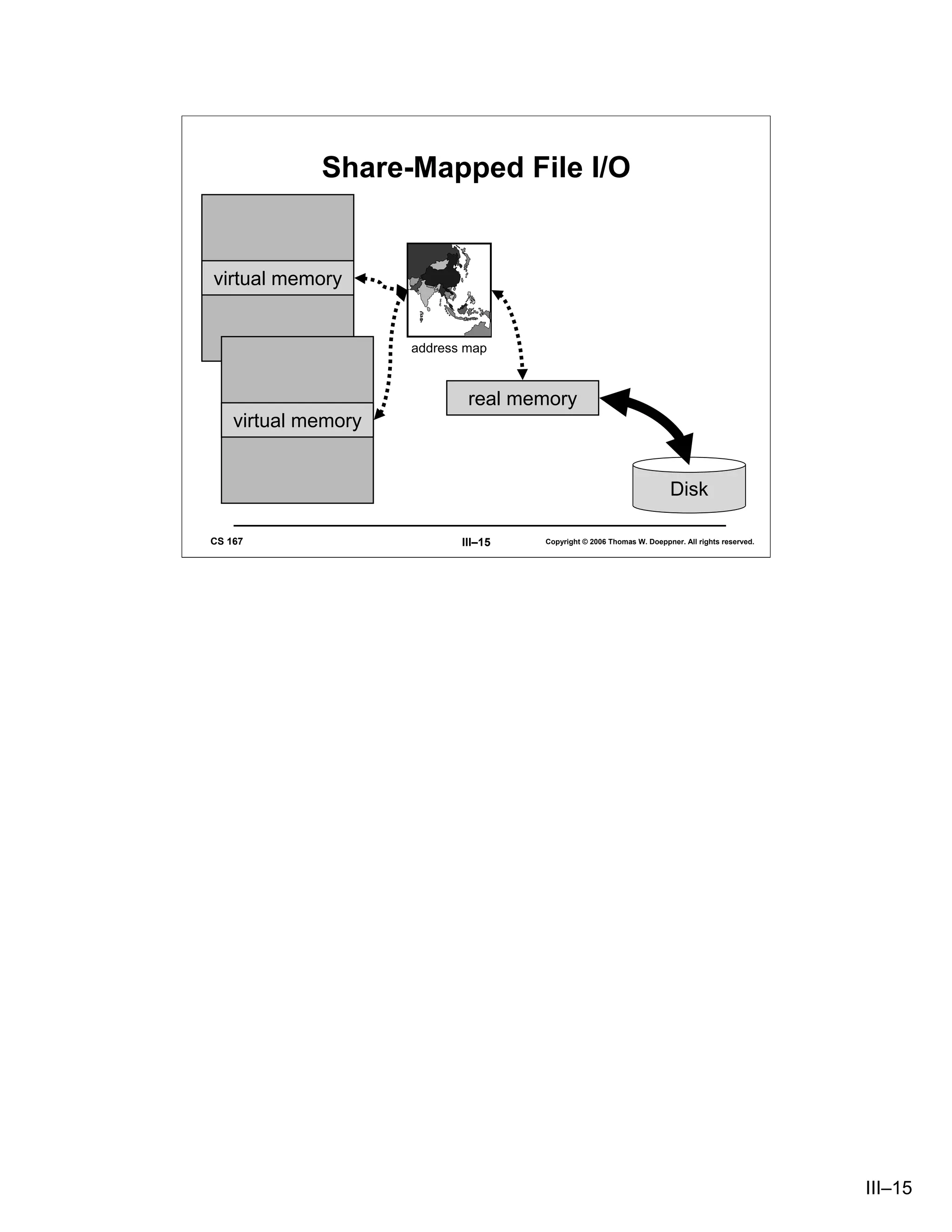

An alternative approach is to map a file into a process’s address space: the file provides the data for a portion of the address space, and the kernel’s virtual-memory system is responsible for the I/O. A major benefit of this approach is that data is transferred directly from the device into the pages where the user accesses it; there is no need for an extra system buffer or for a copy between kernel and user space.
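A corresponding sketch of the mapped loop follows. It again assumes REC_SIZE-byte records and the hypothetical use_record(). The mmap() arguments are one plausible choice, a read-only shared mapping of the whole record range at offset 0, and are not necessarily the ones the slide leaves out. The variable records is declared as a pointer to REC_SIZE-byte arrays so that records[i] names the i-th record directly, as MappedFile[i] does on the slide.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

#define REC_SIZE 64  /* hypothetical record size, standing in for BigEnough */

/* Hypothetical stand-in for the slide's use(): sums the bytes of one record. */
static unsigned long use_record(const unsigned char *rec) {
    unsigned long sum = 0;
    for (size_t j = 0; j < REC_SIZE; j++)
        sum += rec[j];
    return sum;
}

/* Mapped I/O: the file's pages are mapped into the address space, so
   records[i] is the i-th record and no read() or user-space copy occurs.
   Returns the total of use_record() over n_recs records, or -1 on error. */
long sum_records_mmap(const char *path, size_t n_recs) {
    assert(n_recs > 0);               /* mmap() rejects a zero-length mapping */
    size_t len = n_recs * REC_SIZE;
    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;

    /* Refuse to map past end of file: touching such pages raises SIGBUS. */
    struct stat st;
    if (fstat(fd, &st) < 0 || (size_t)st.st_size < len) {
        close(fd);
        return -1;
    }

    /* Assumed arguments: read-only, shared mapping starting at offset 0. */
    unsigned char (*records)[REC_SIZE] =
        mmap(NULL, len, PROT_READ, MAP_SHARED, fd, 0);
    close(fd);                        /* the mapping remains valid after close() */
    if (records == MAP_FAILED)
        return -1;

    long total = 0;
    for (size_t i = 0; i < n_recs; i++)
        total += (long)use_record(records[i]);   /* pages fault in on demand */
    munmap(records, len);
    return total;
}
```

Closing the descriptor right after mmap() is safe because the mapping keeps its own reference to the file. The fstat() check matters because accessing a page of the mapping that lies wholly beyond the end of the file raises SIGBUS. It does not return an error the way read() does.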

III–11](https://image.slidesharecdn.com/03unixintro2-090825003958-phpapp02/75/03unixintro2-11-2048.jpg)