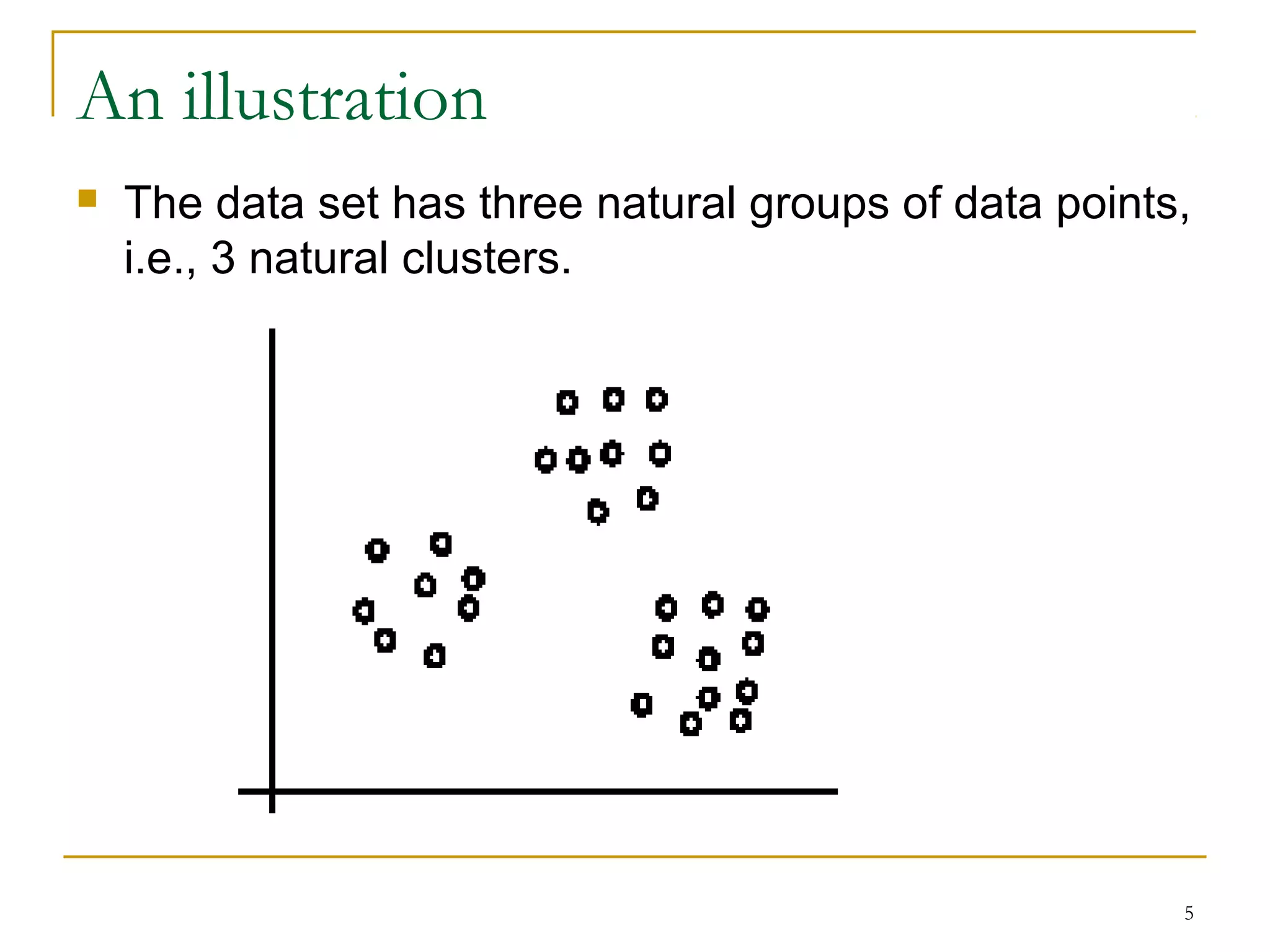

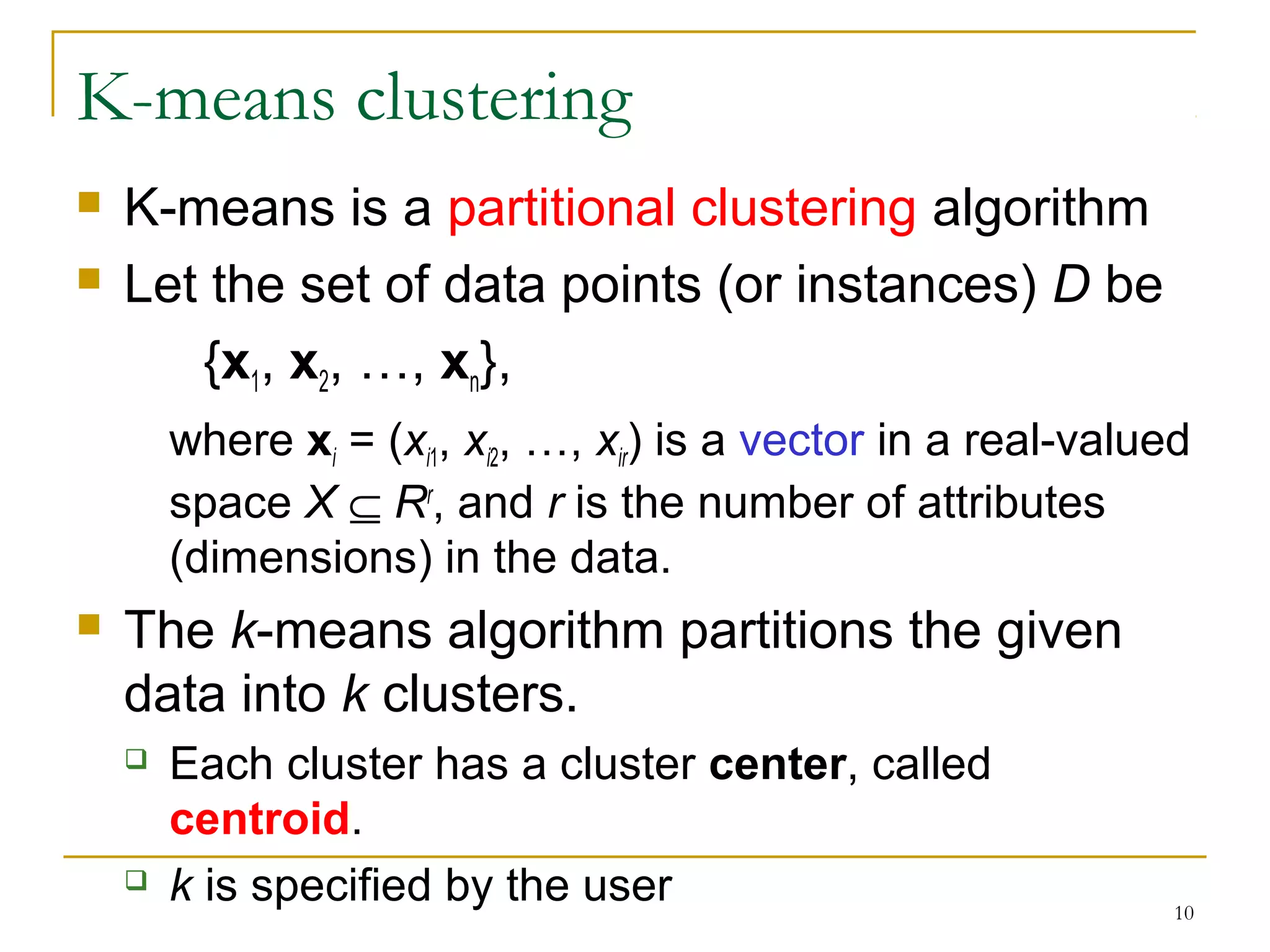

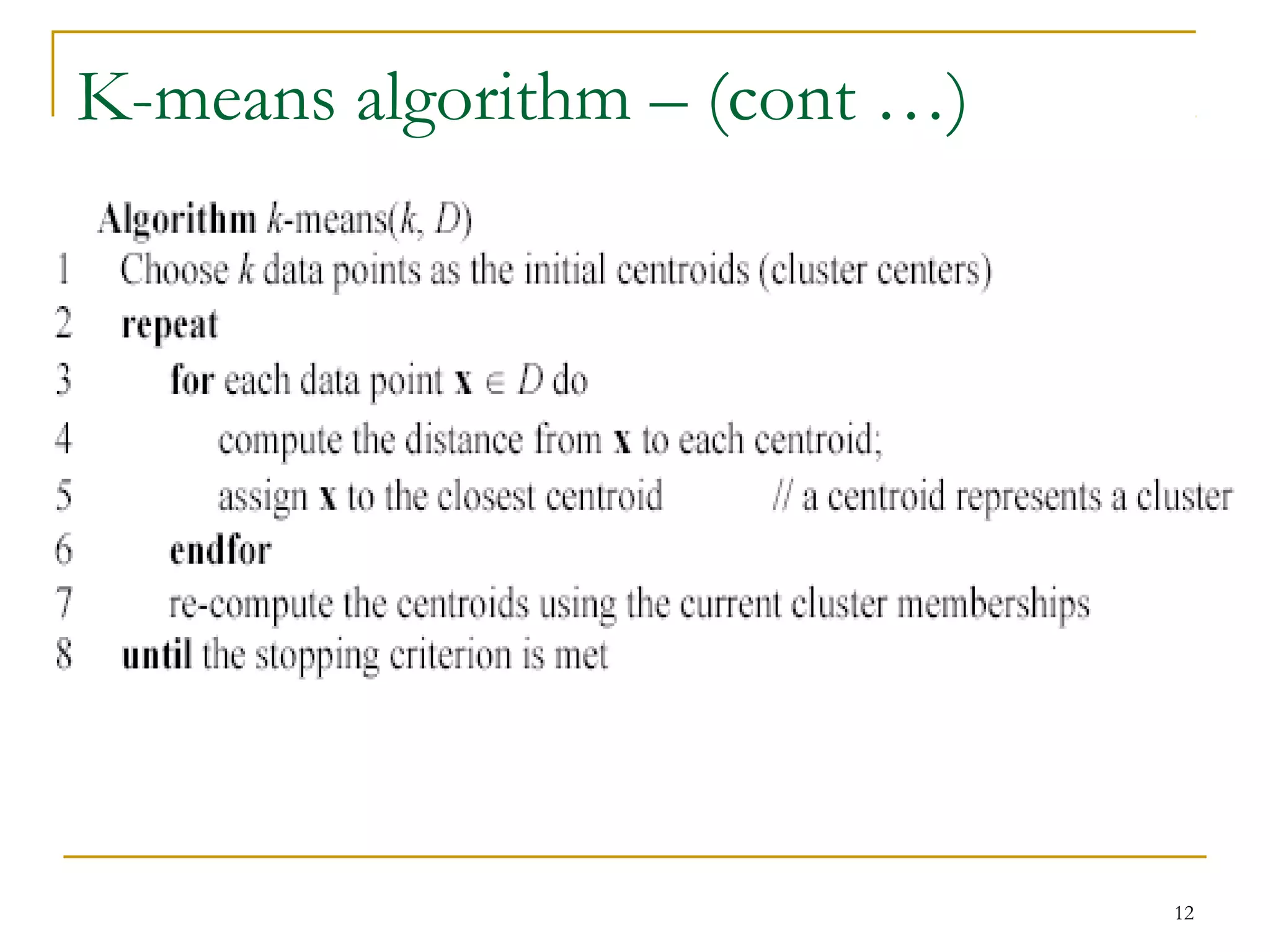

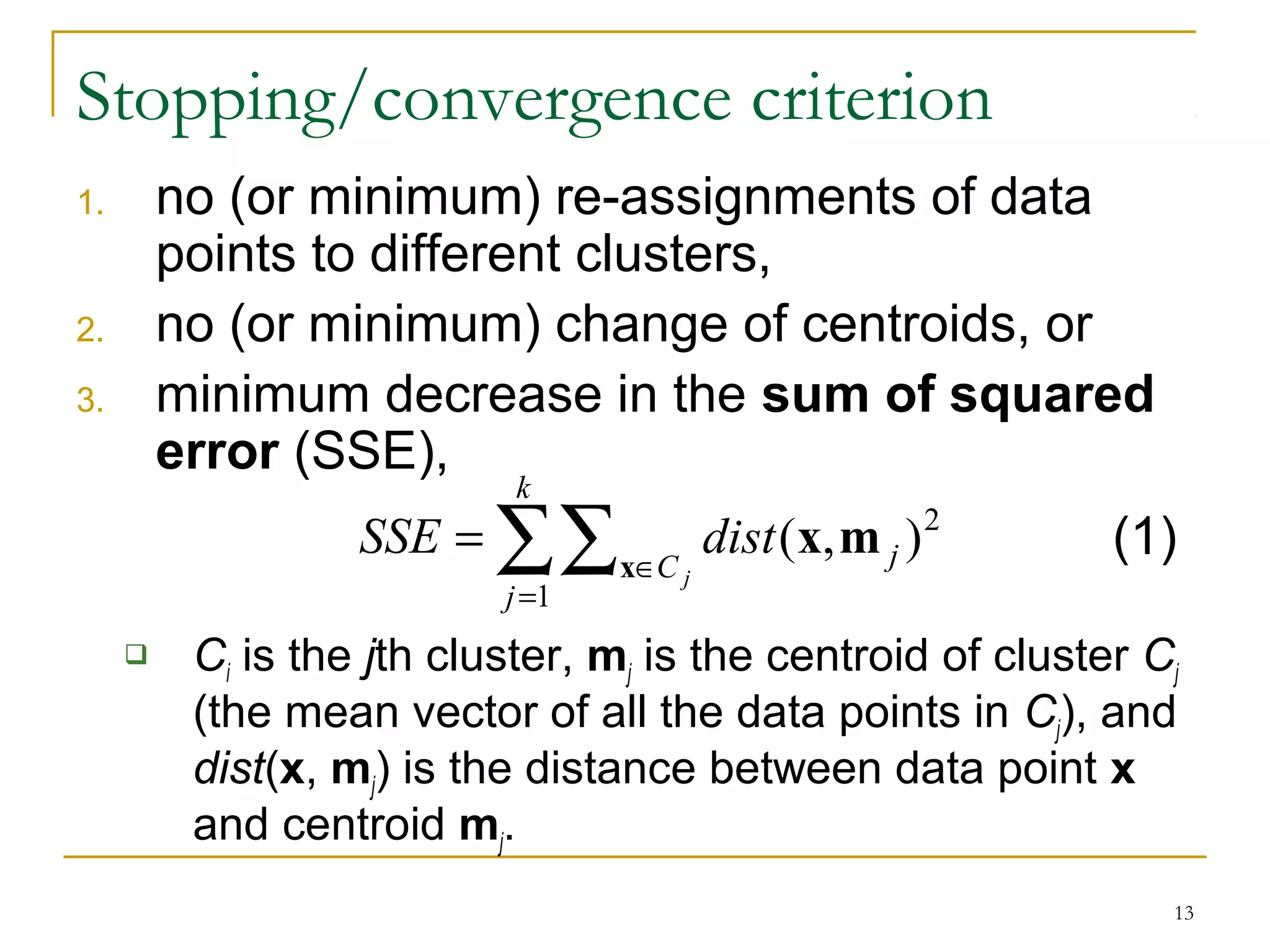

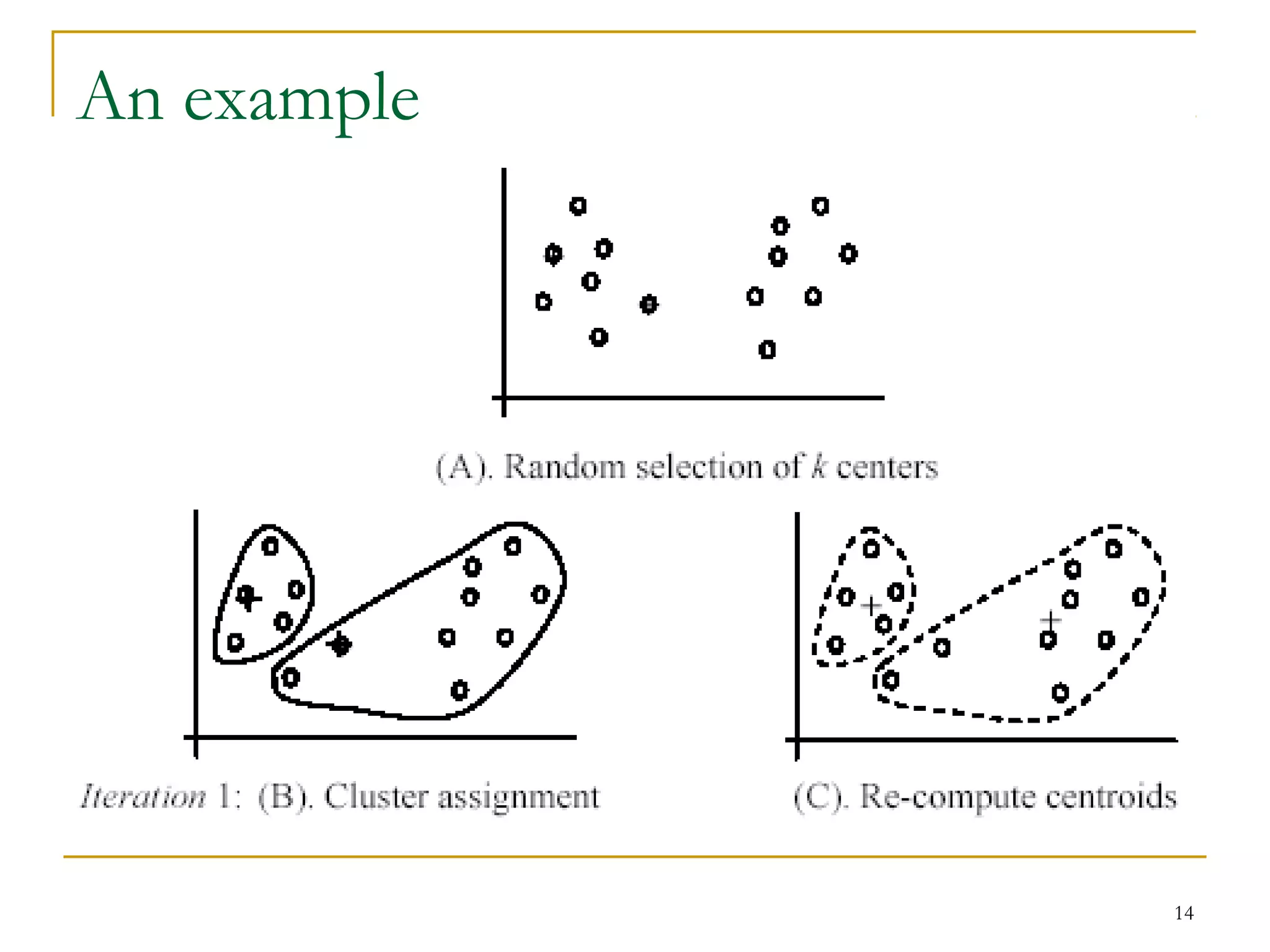

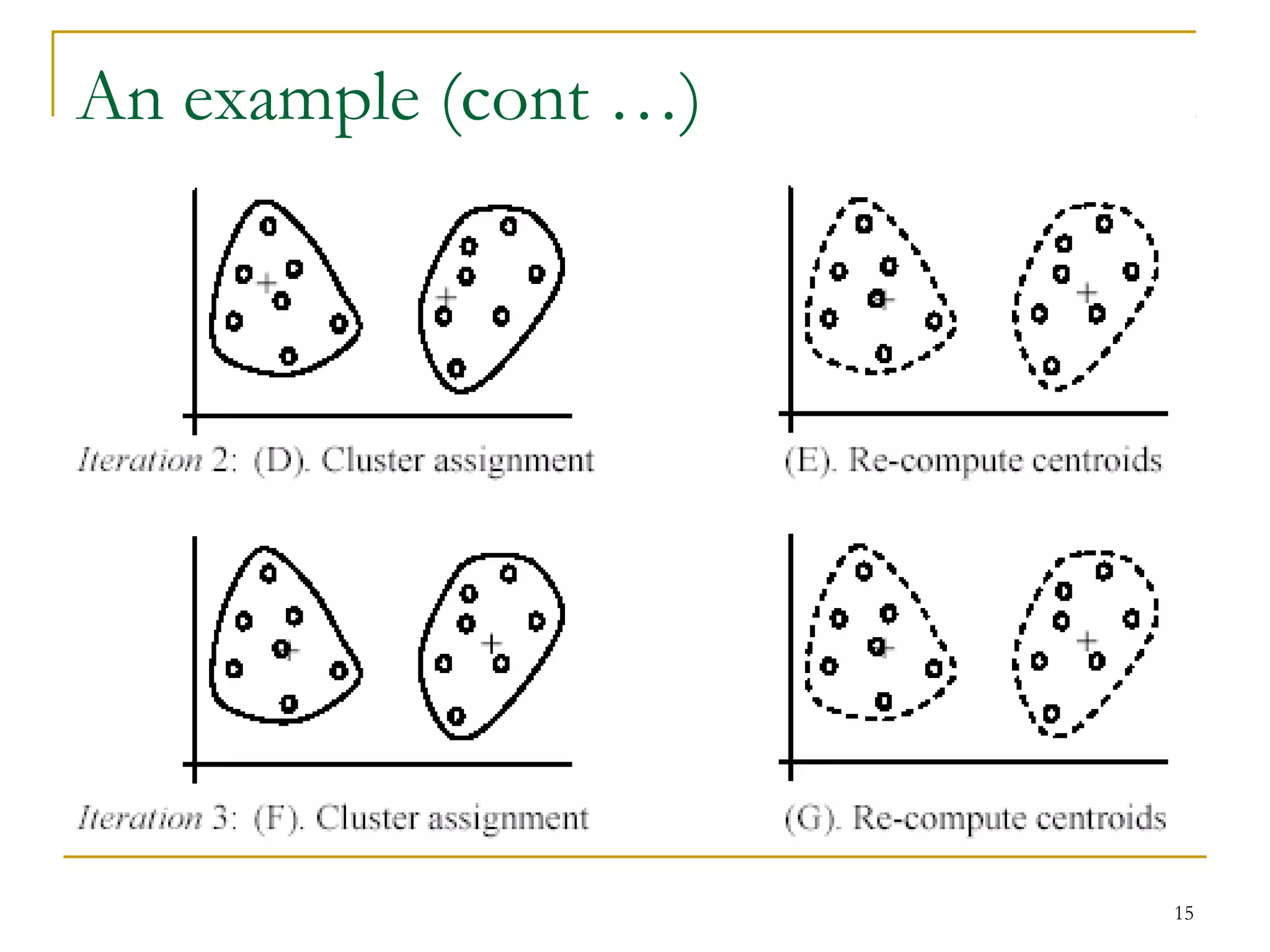

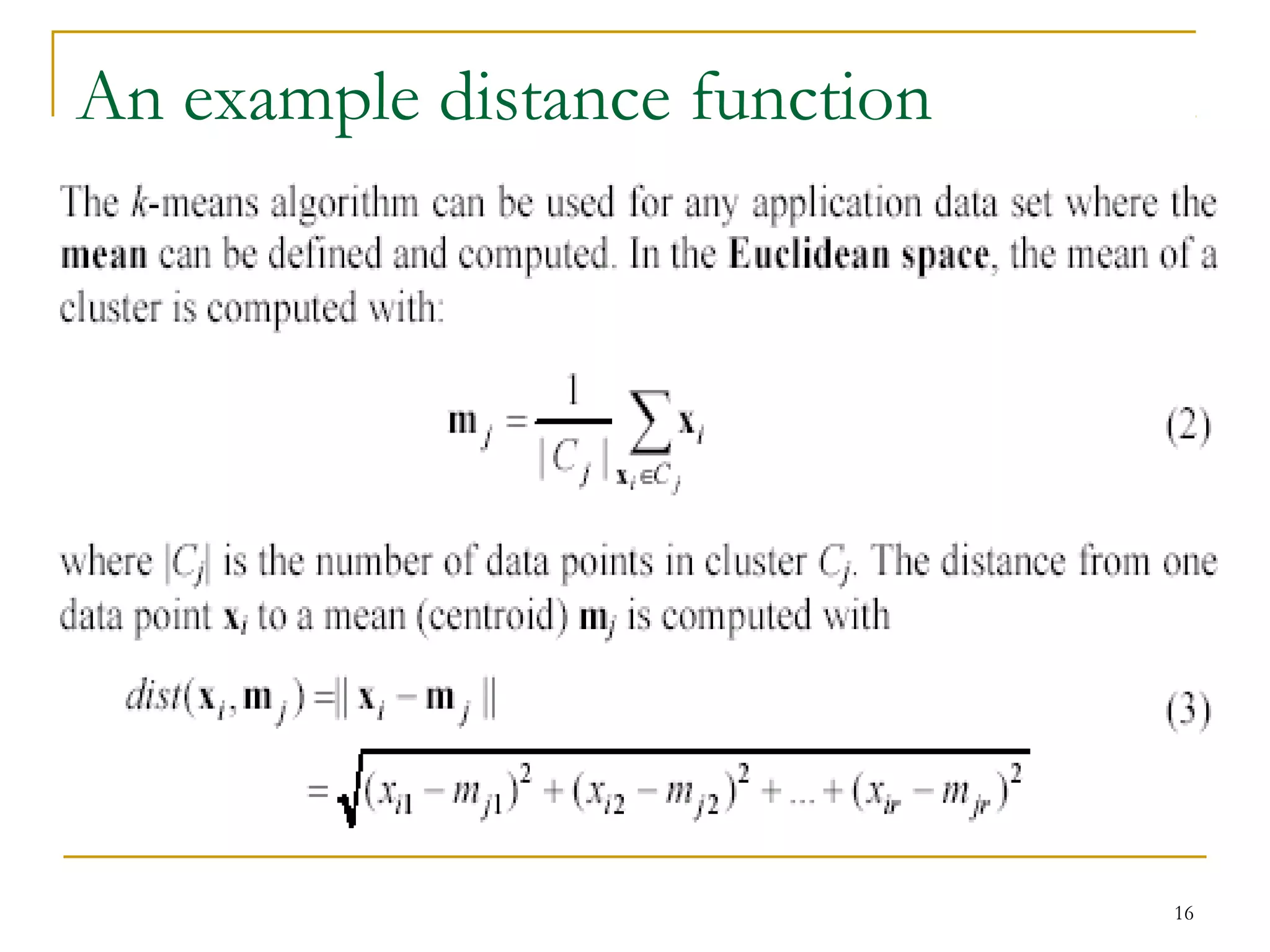

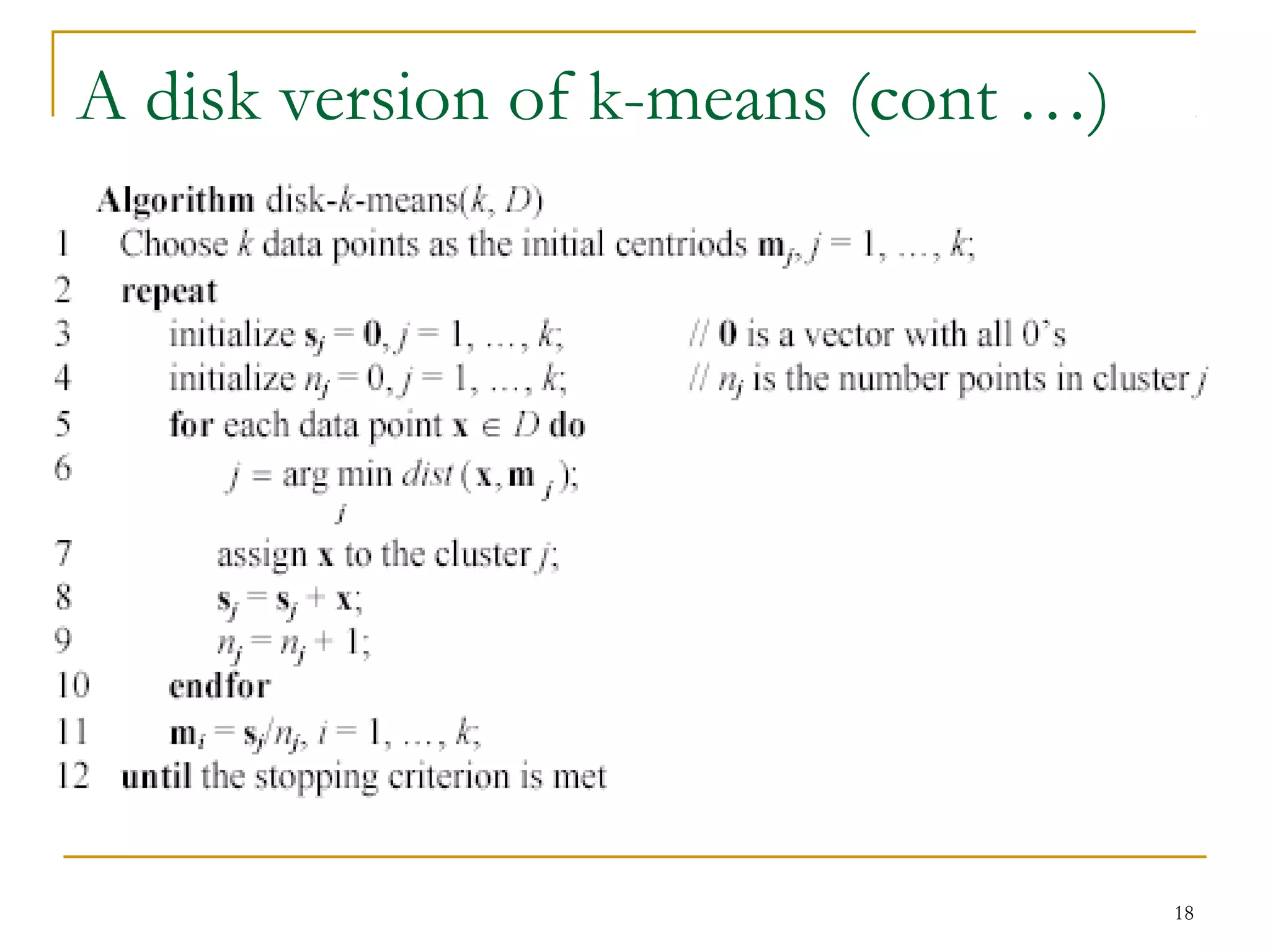

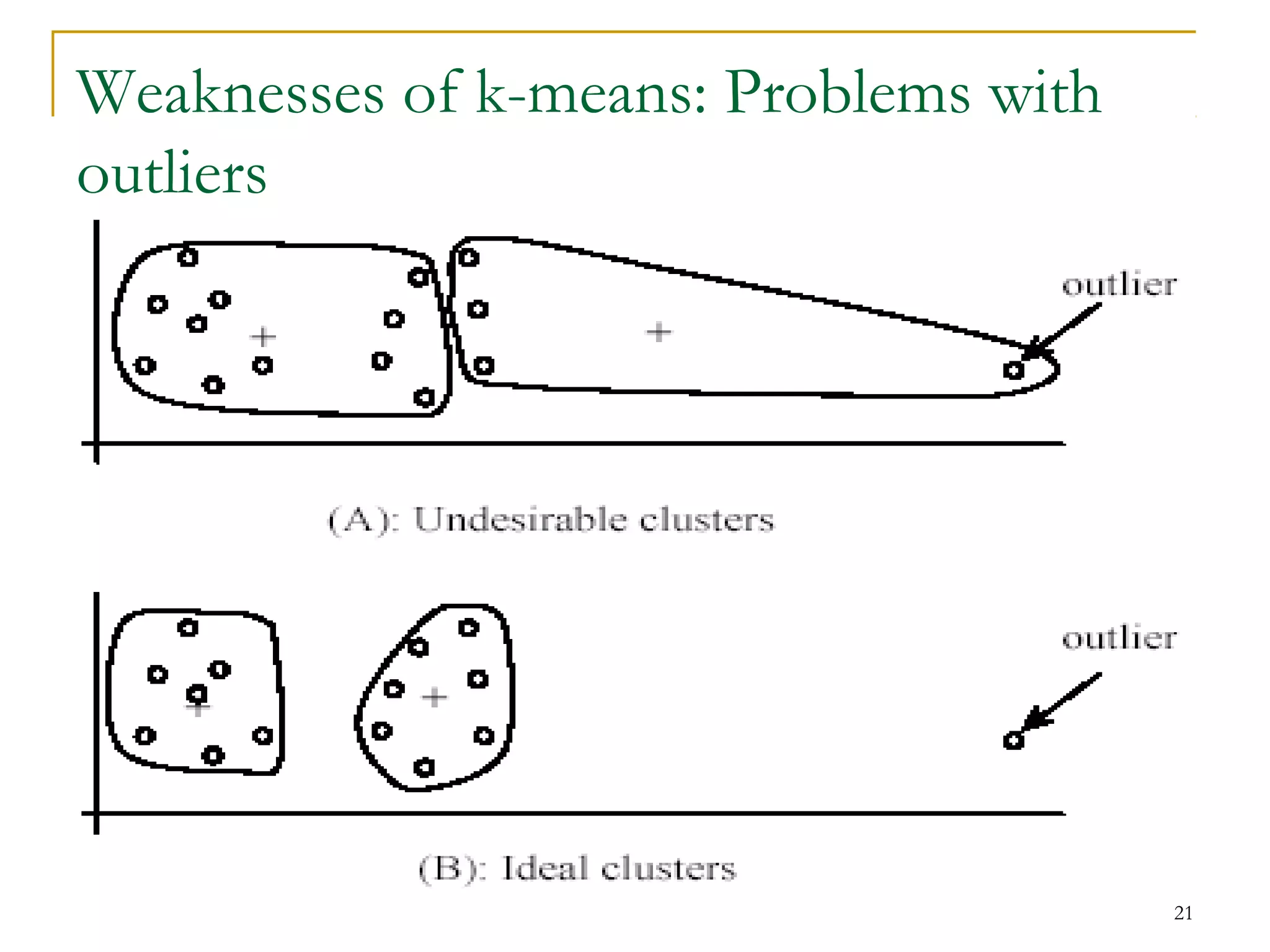

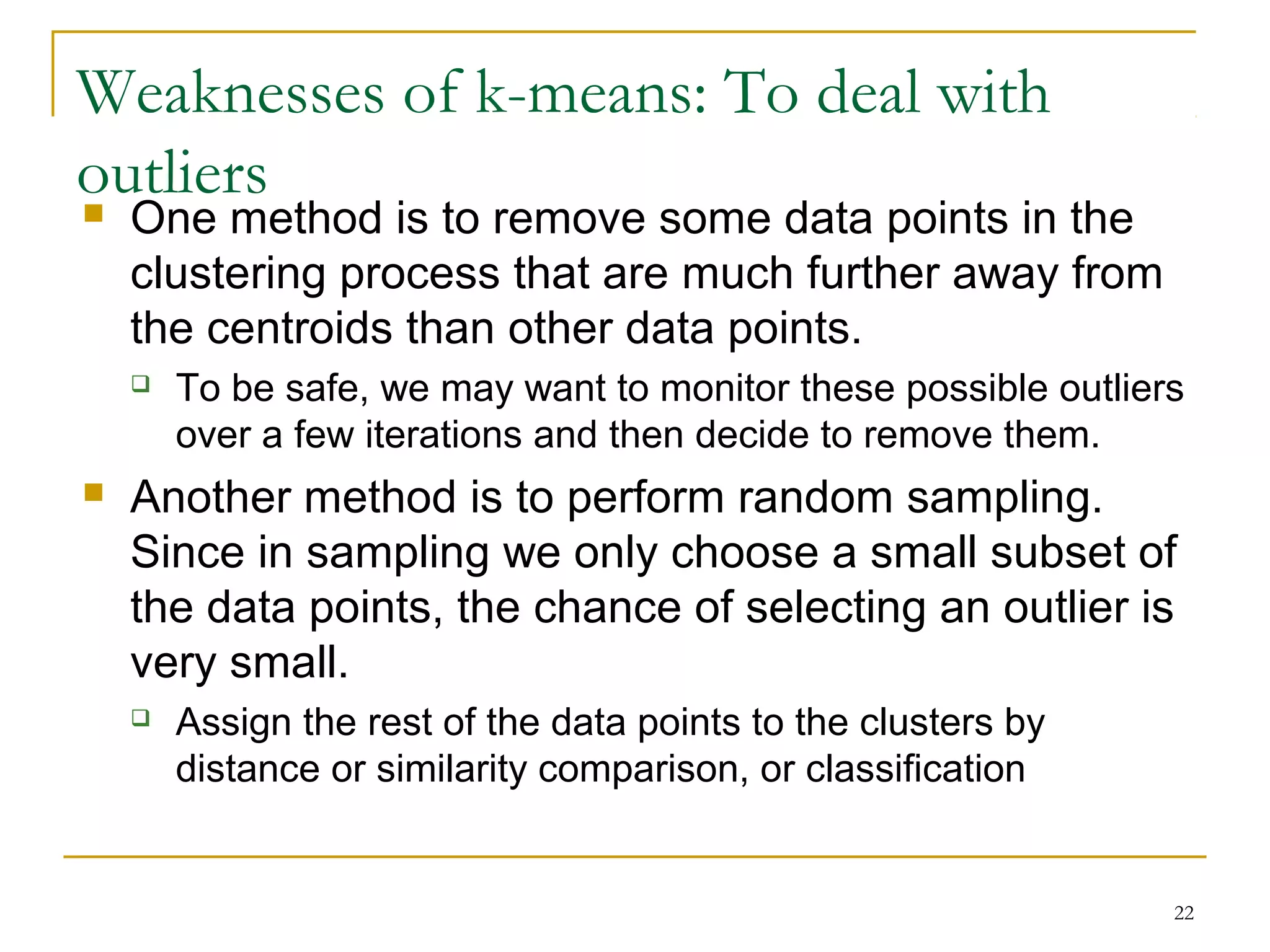

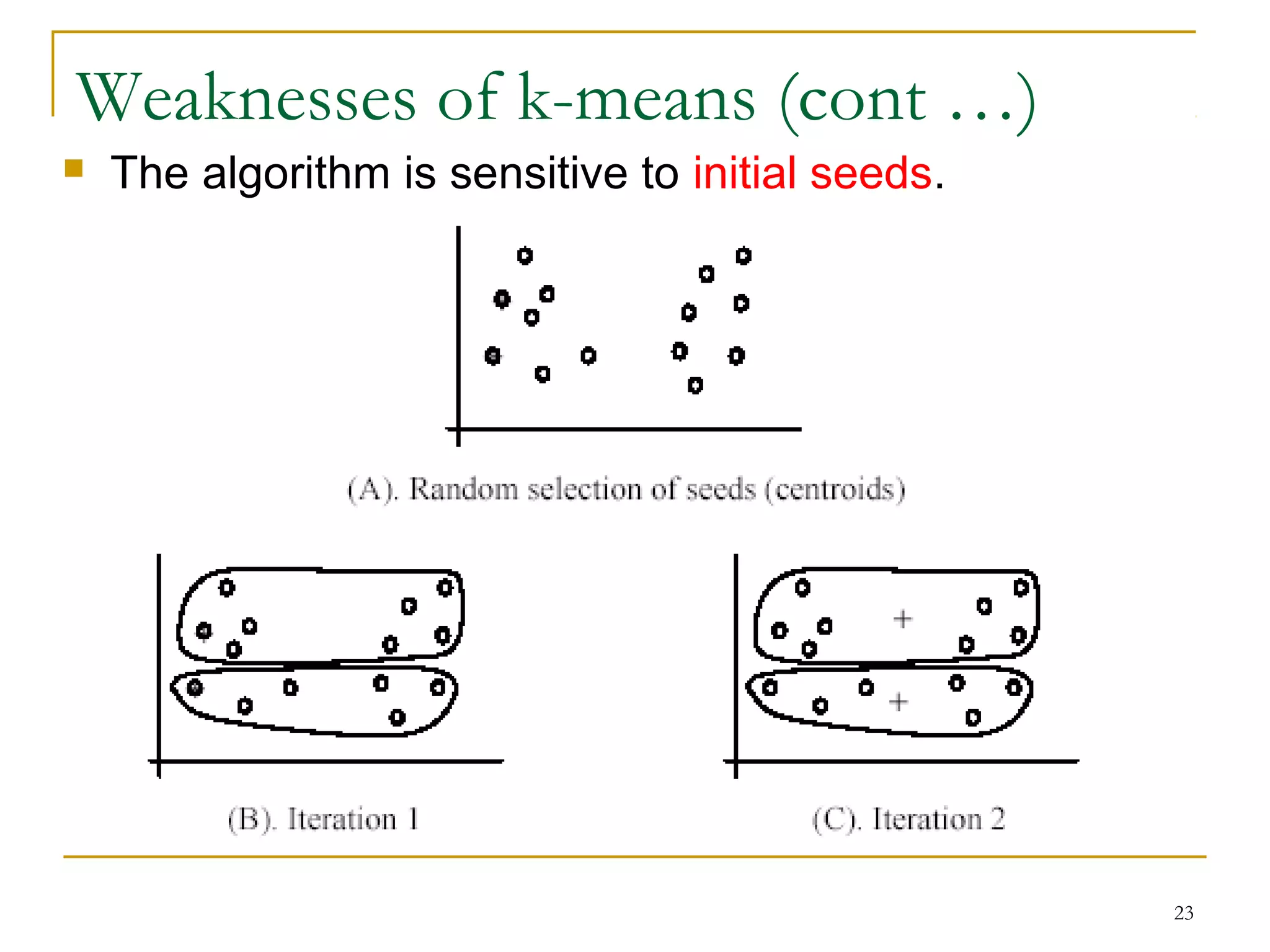

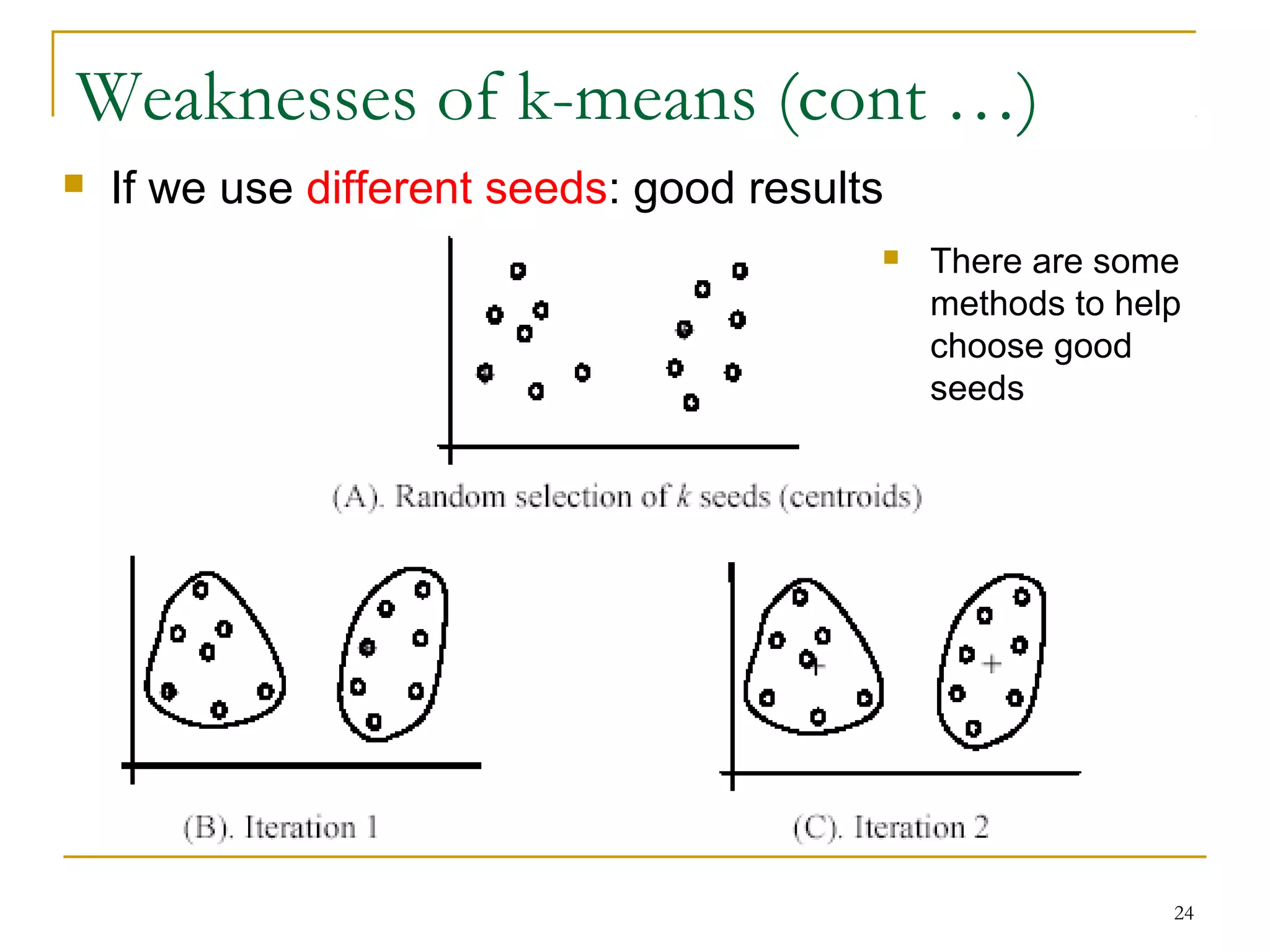

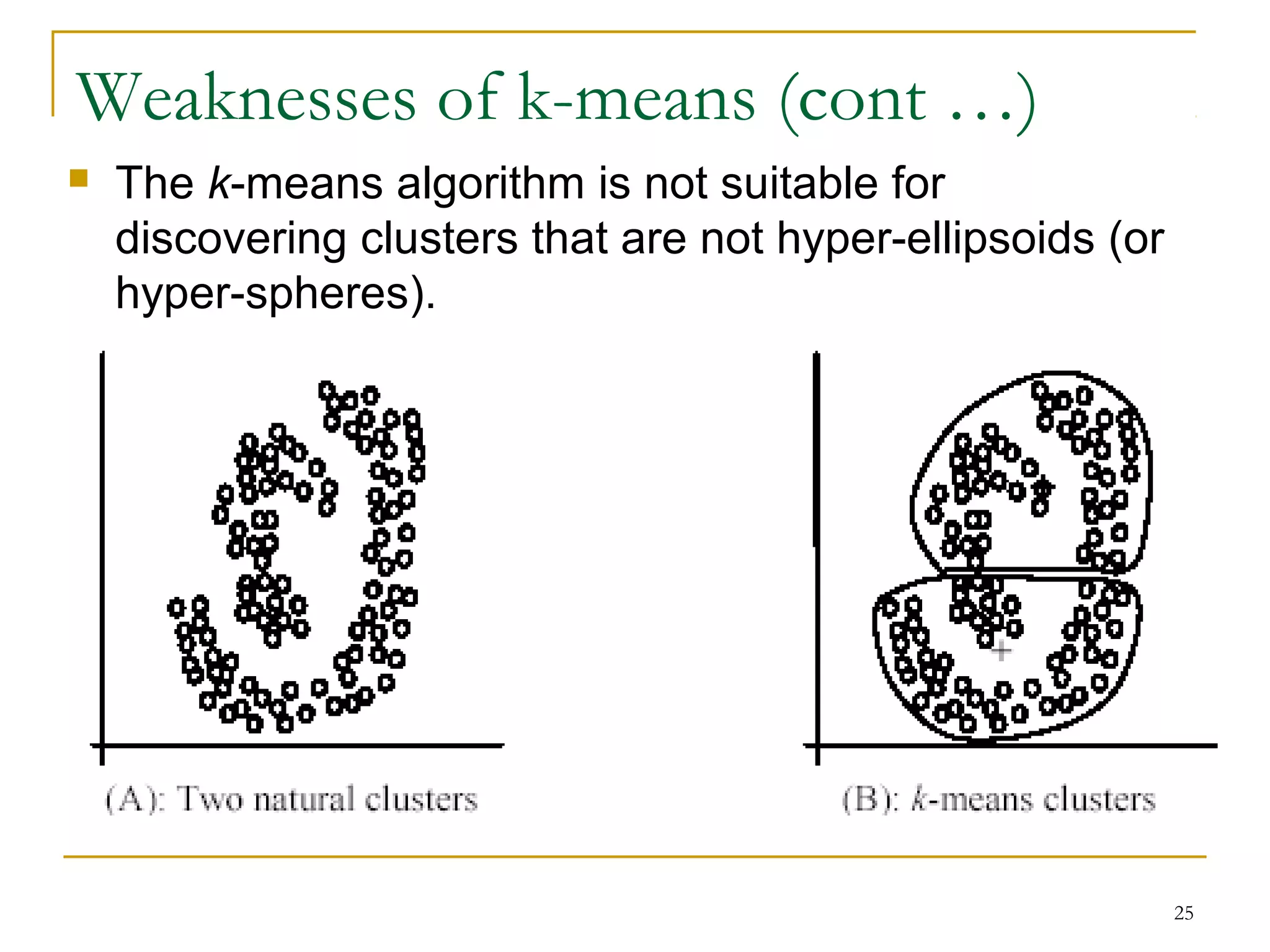

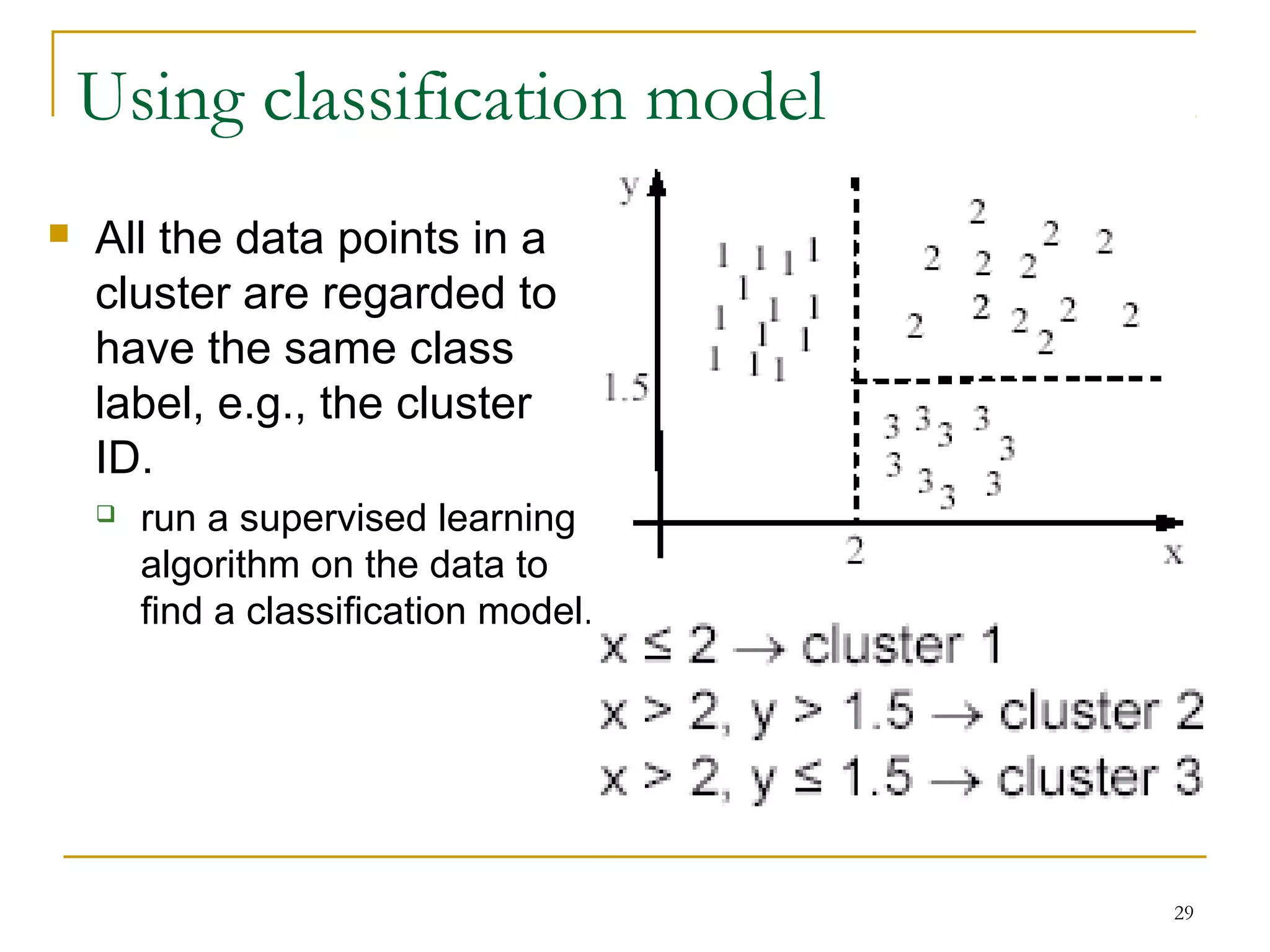

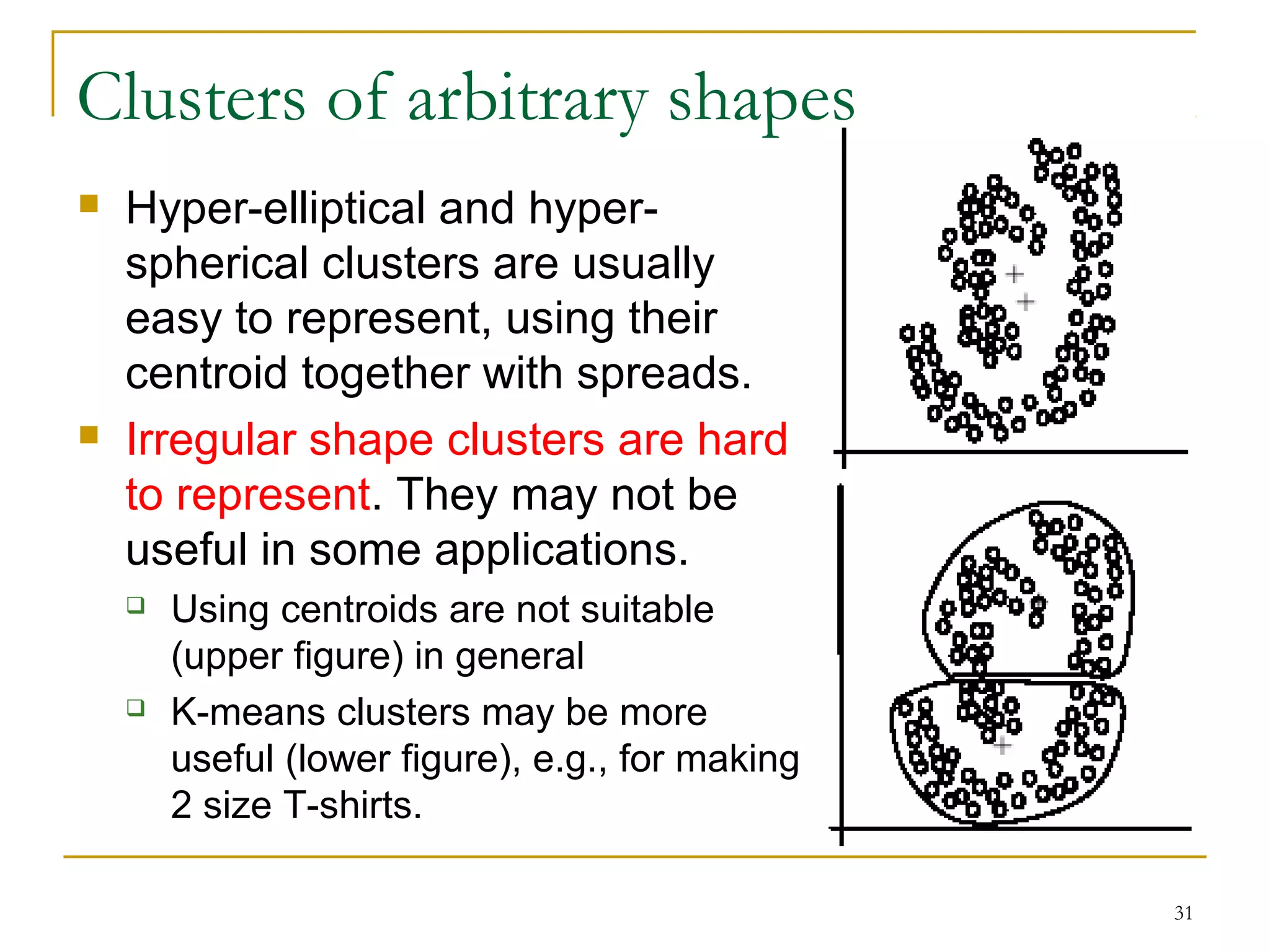

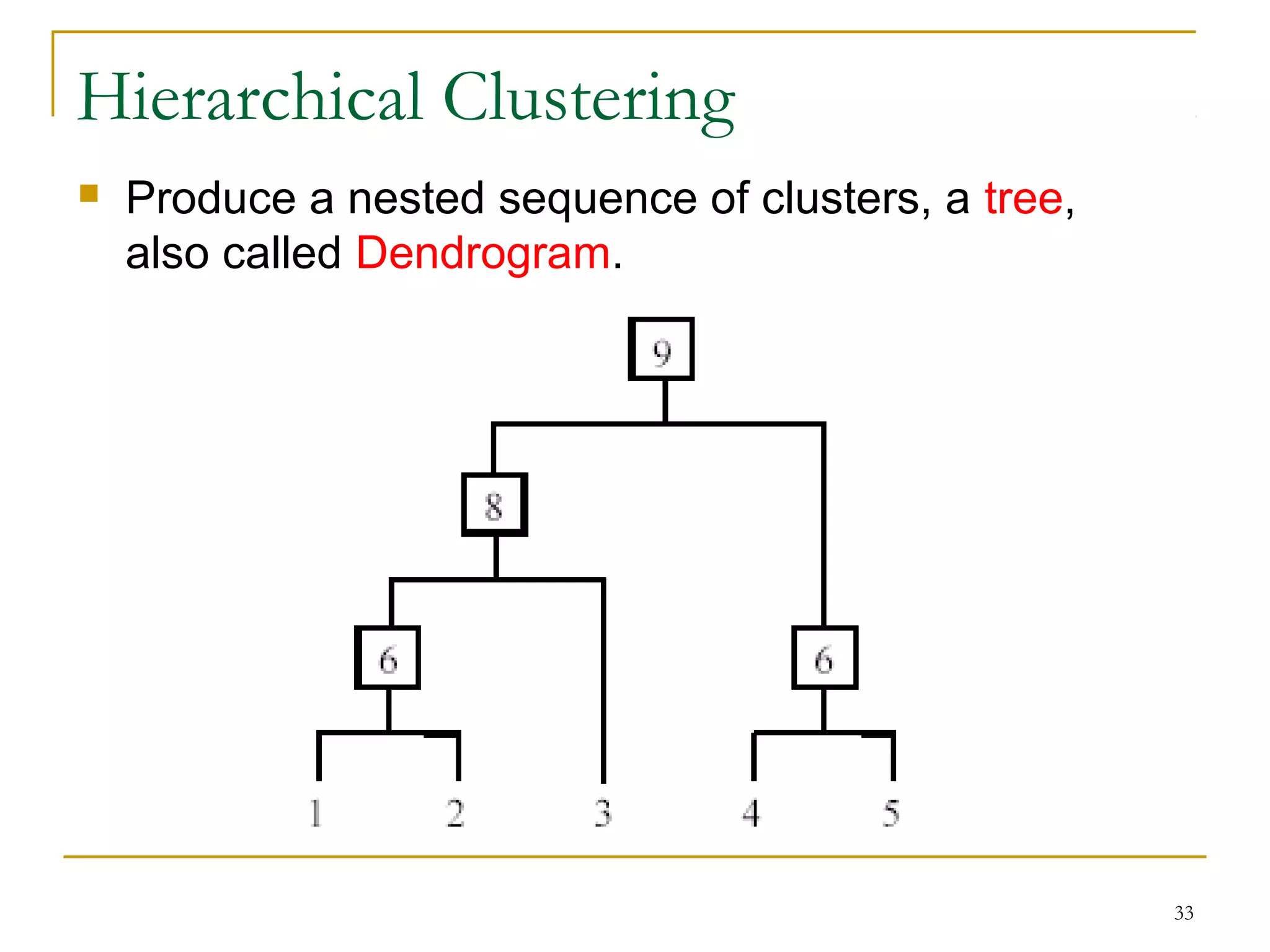

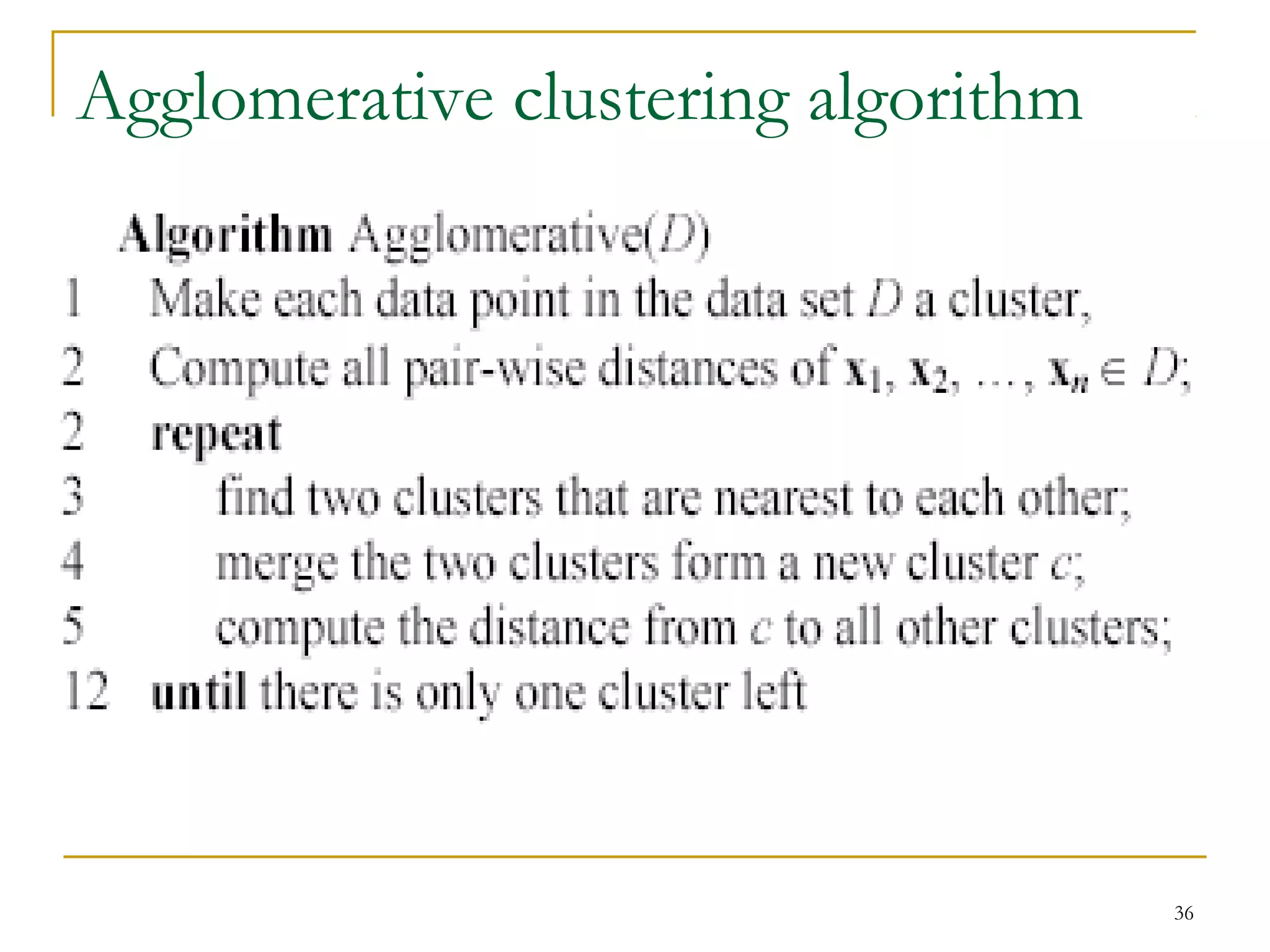

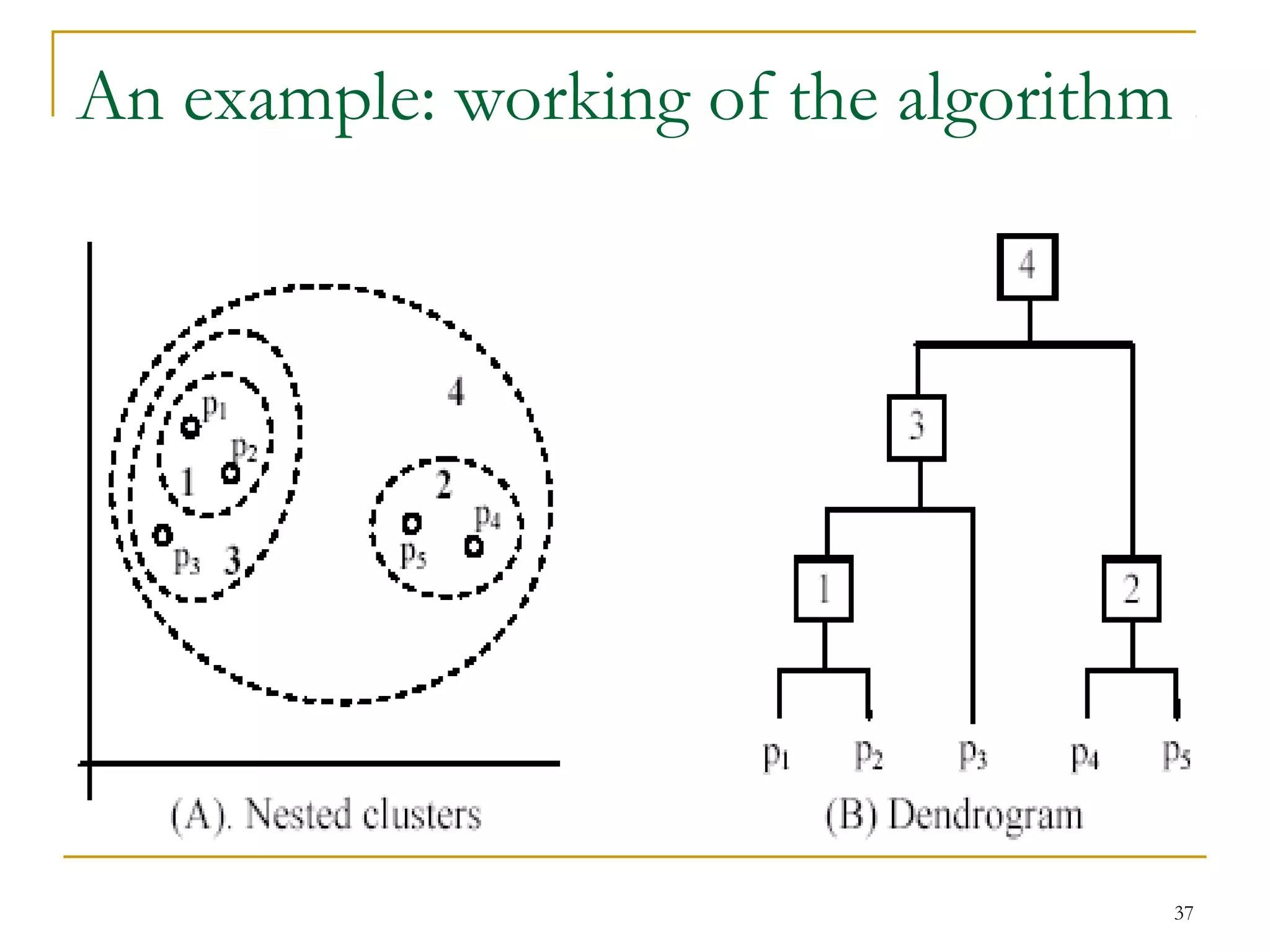

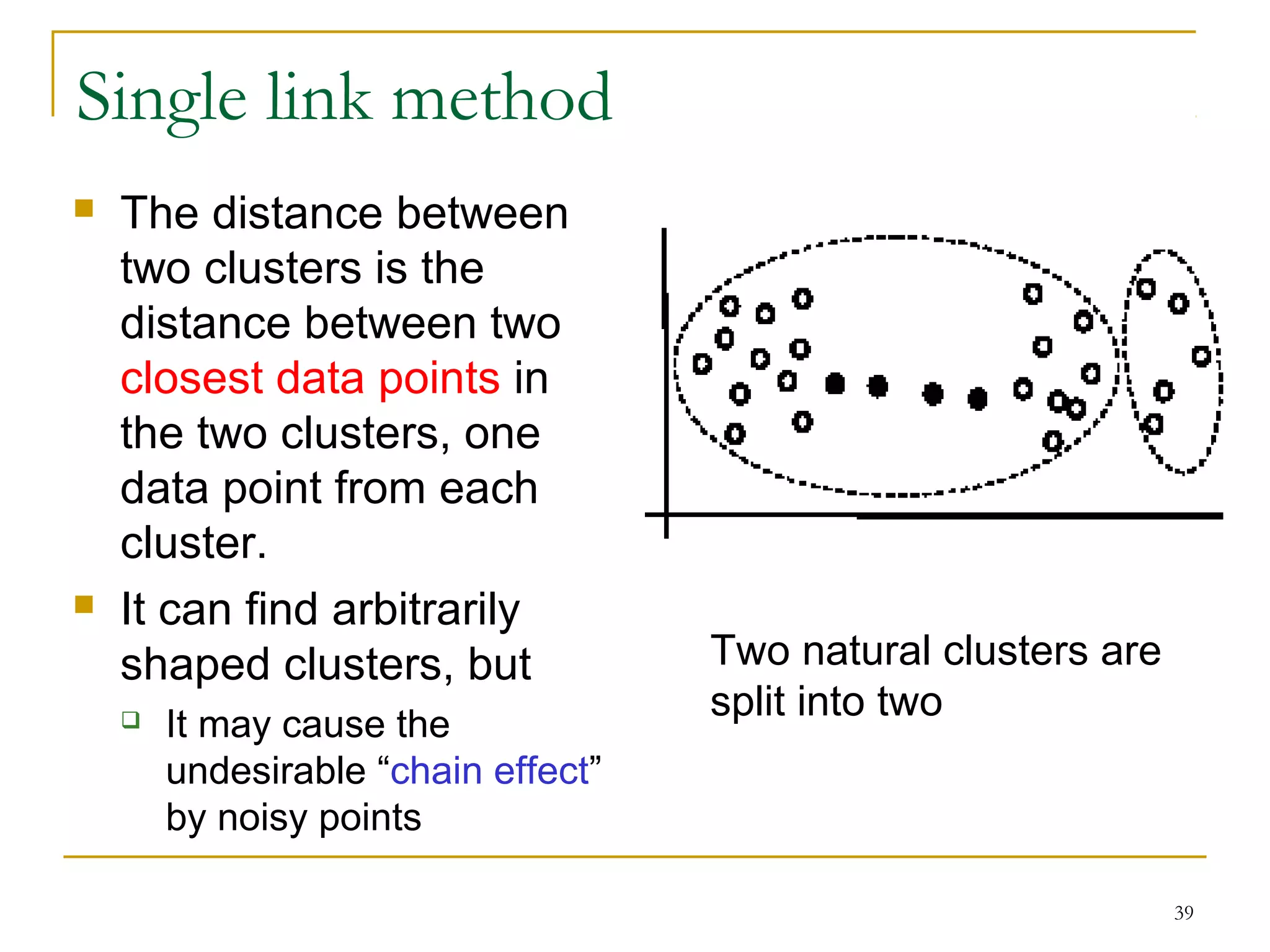

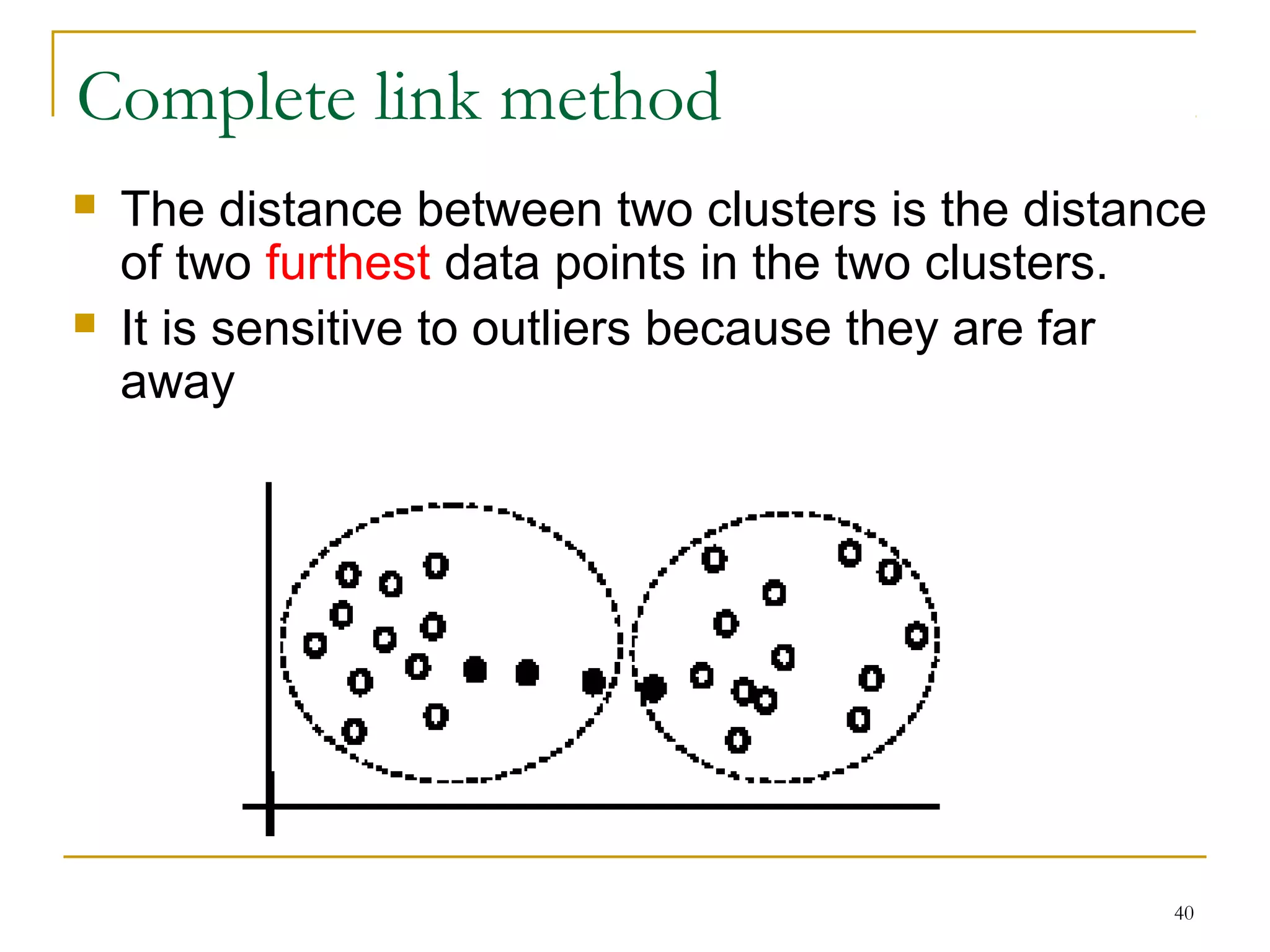

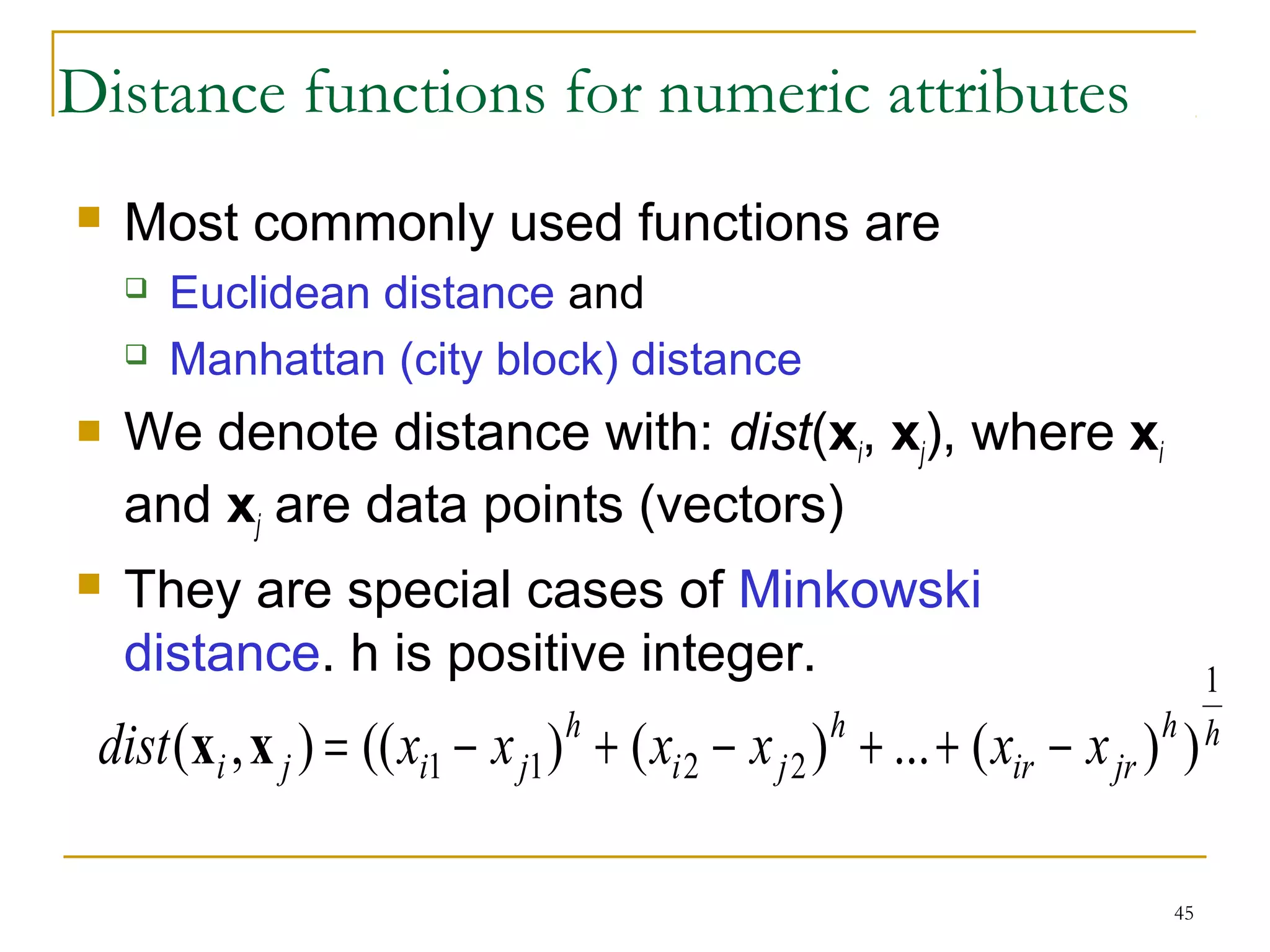

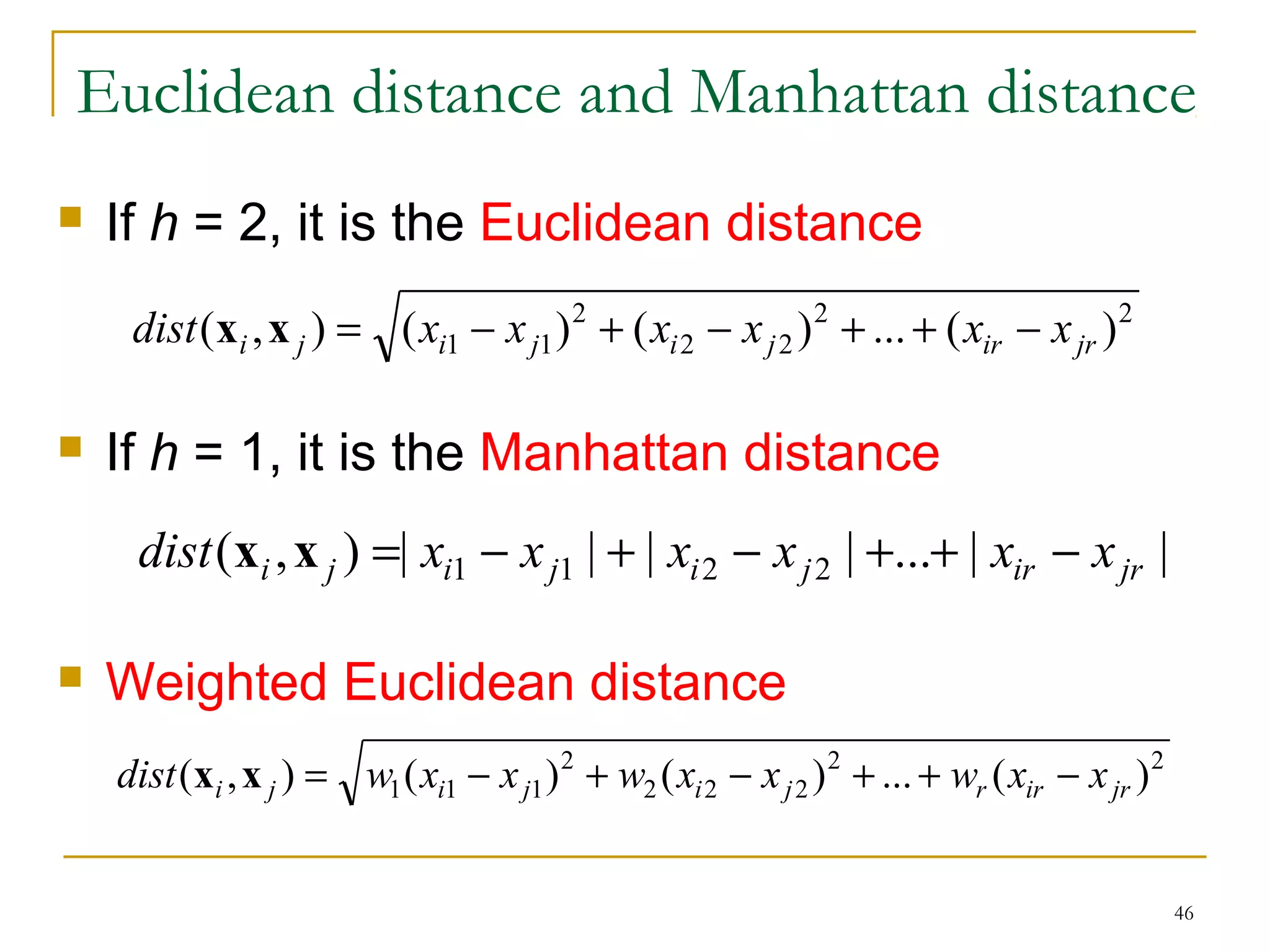

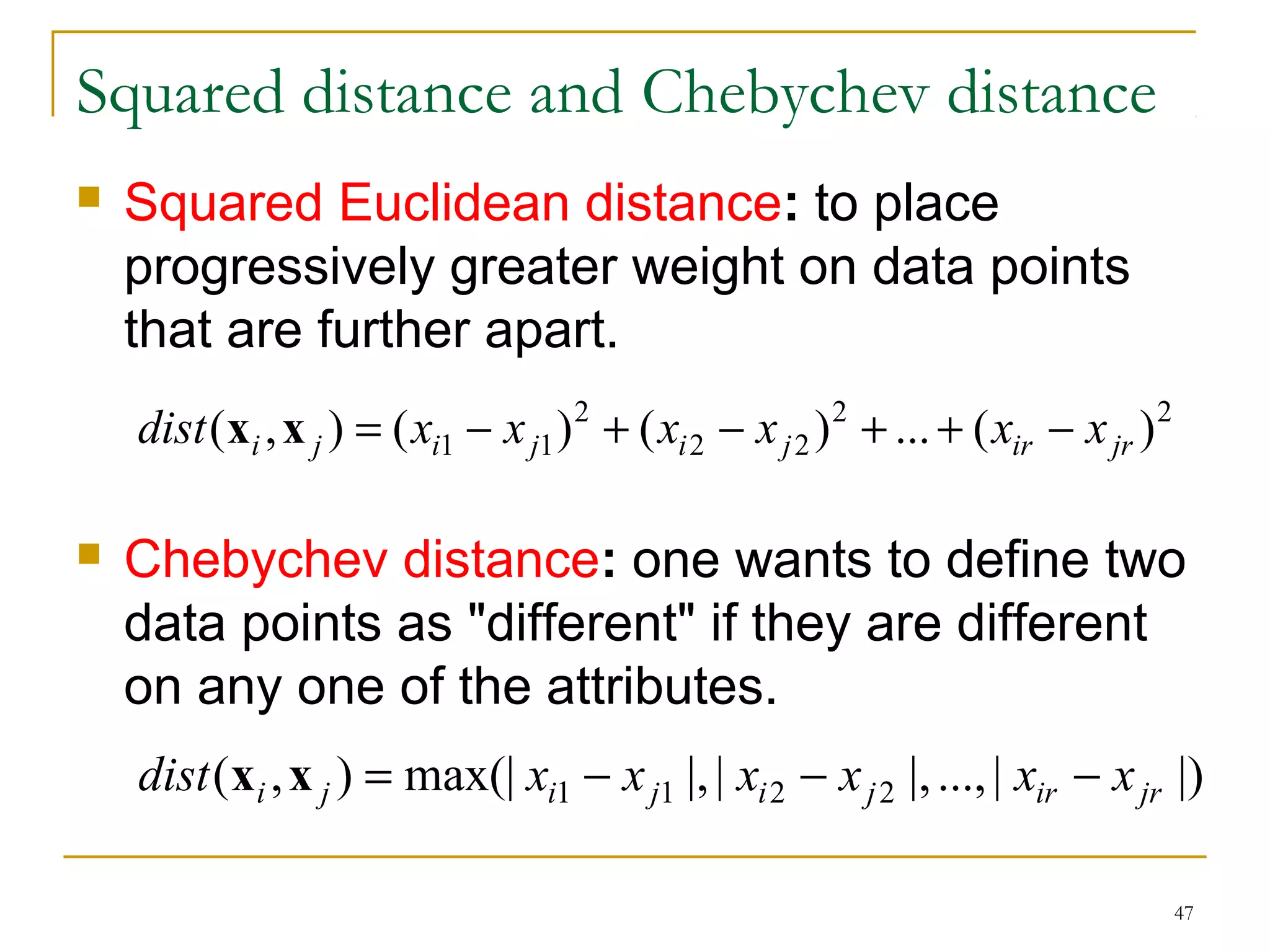

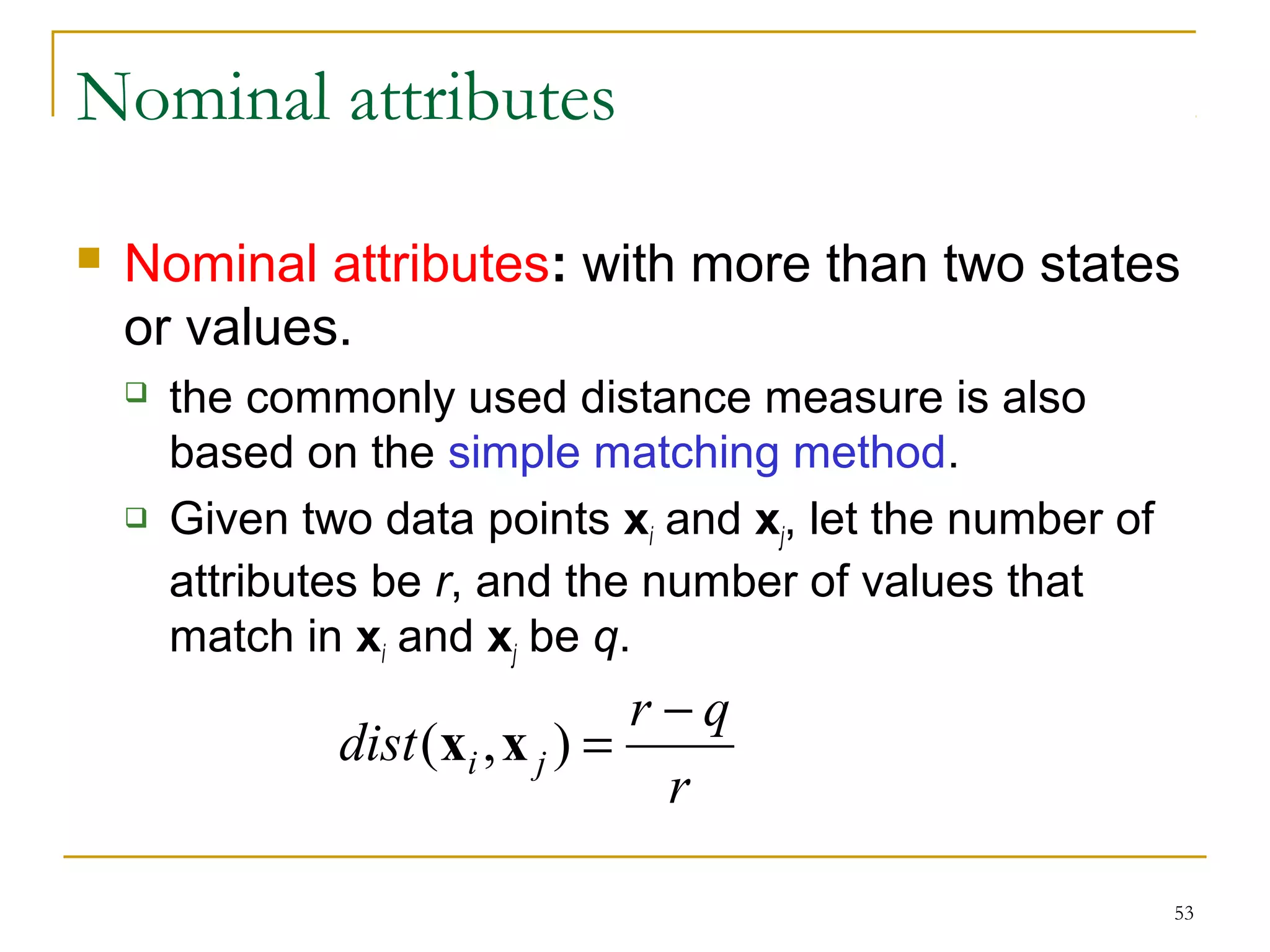

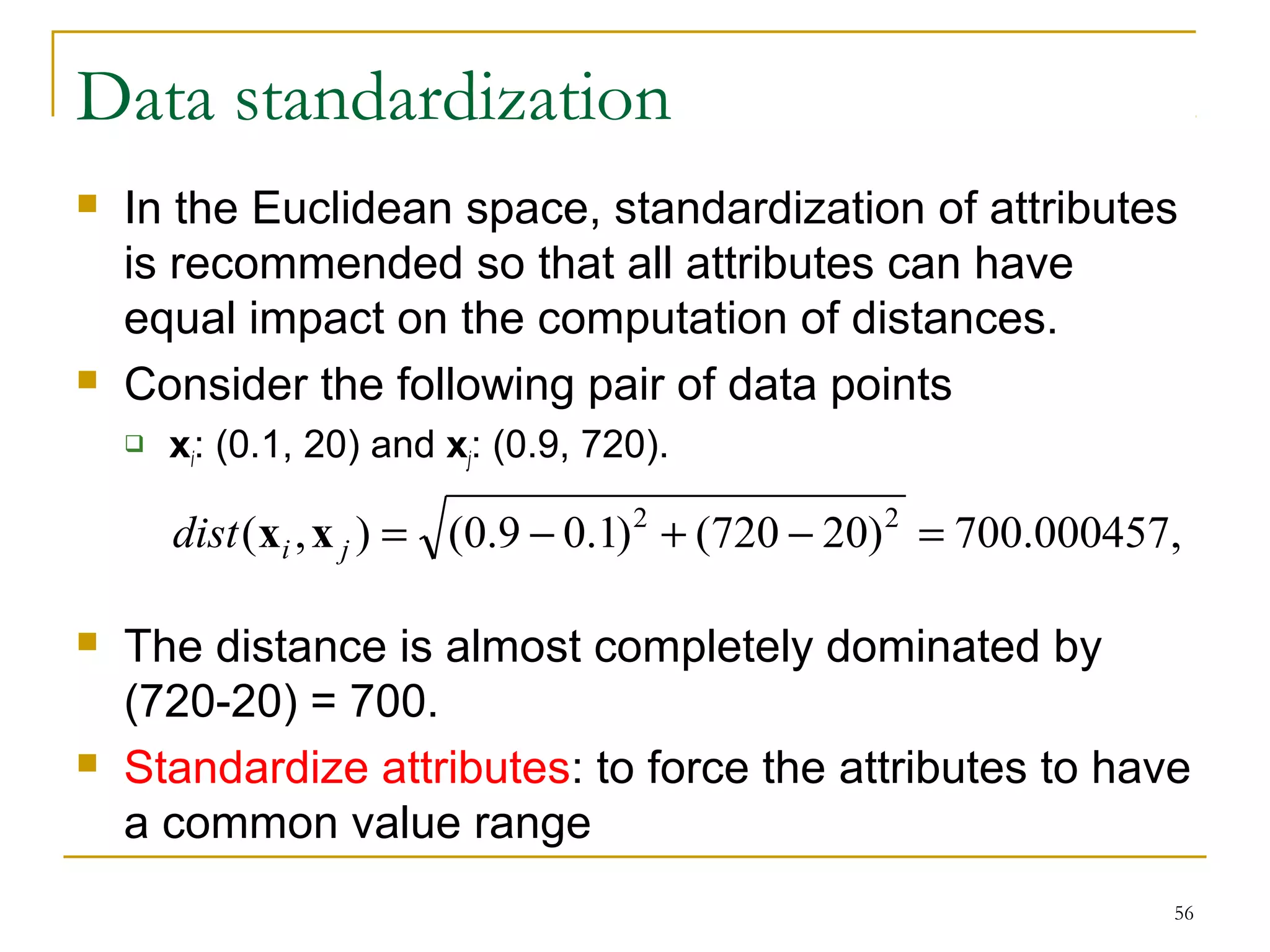

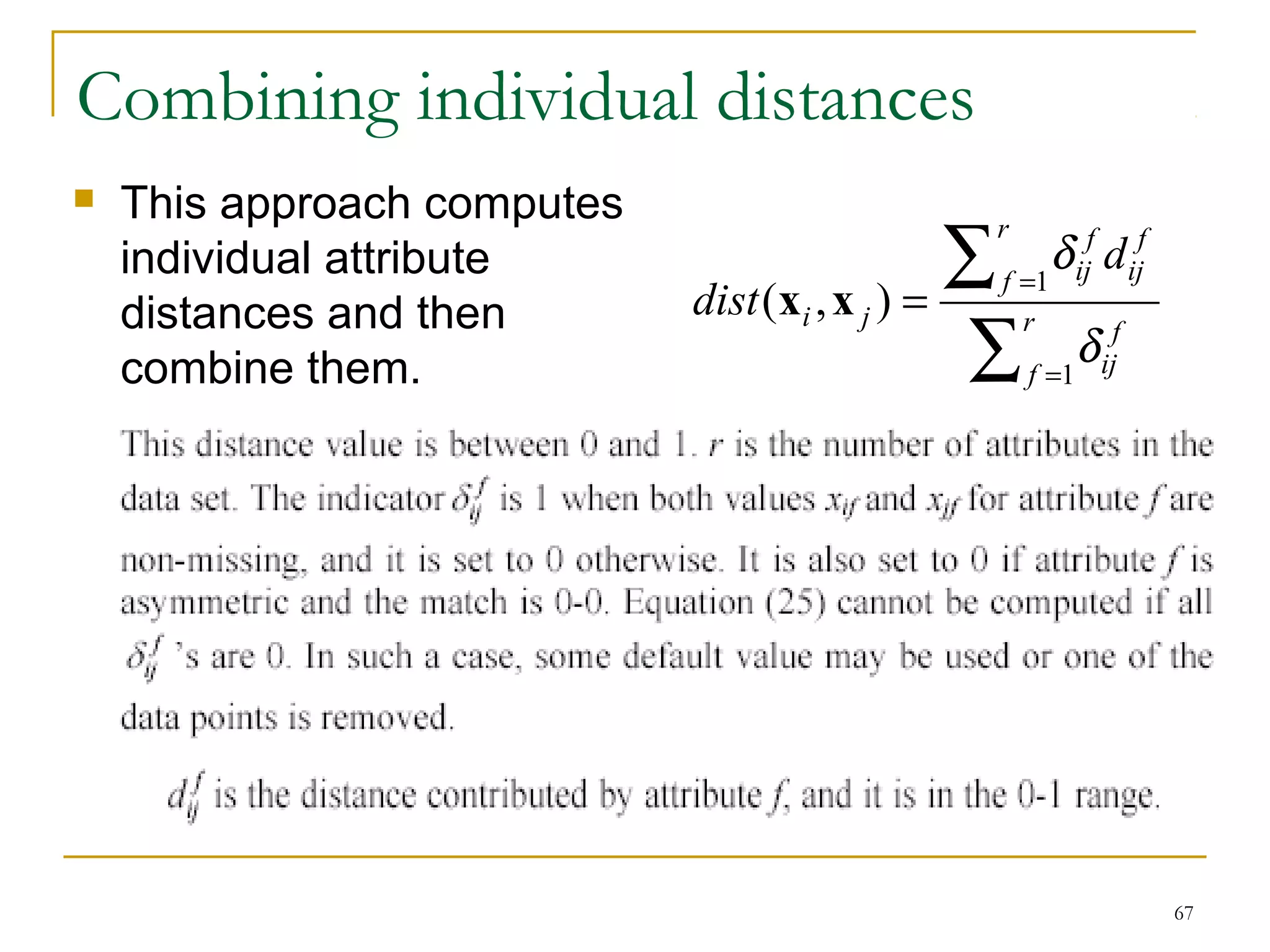

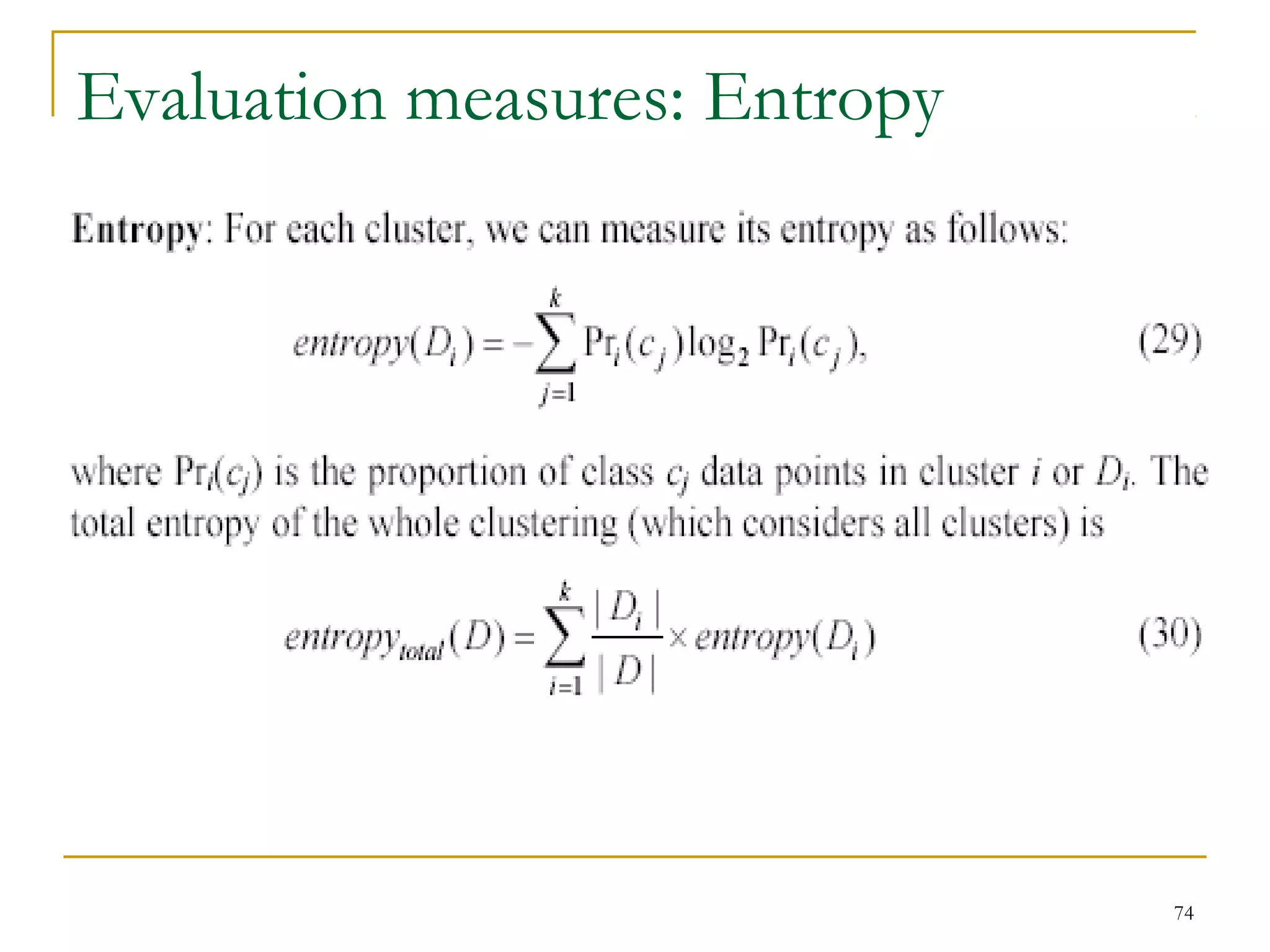

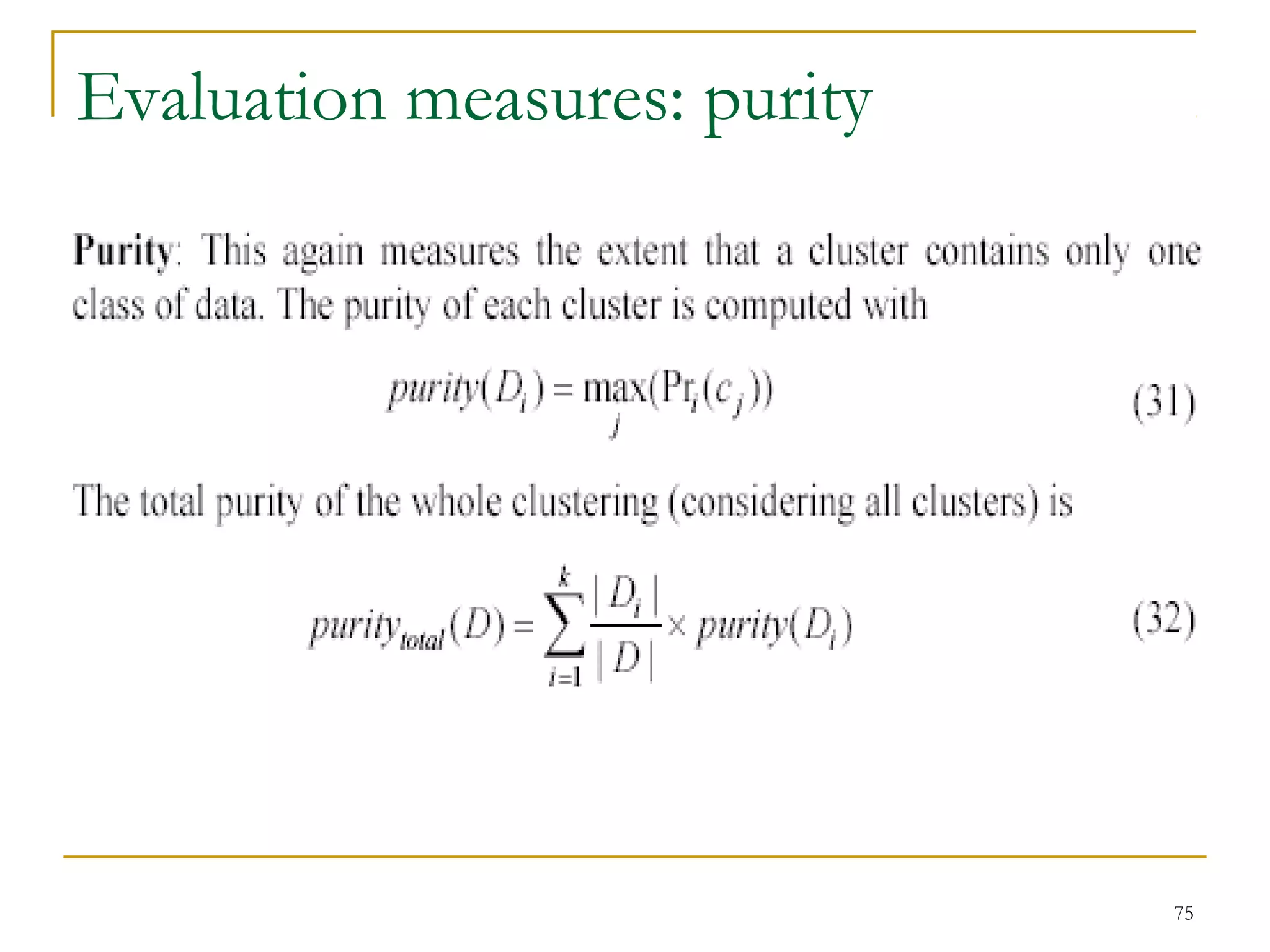

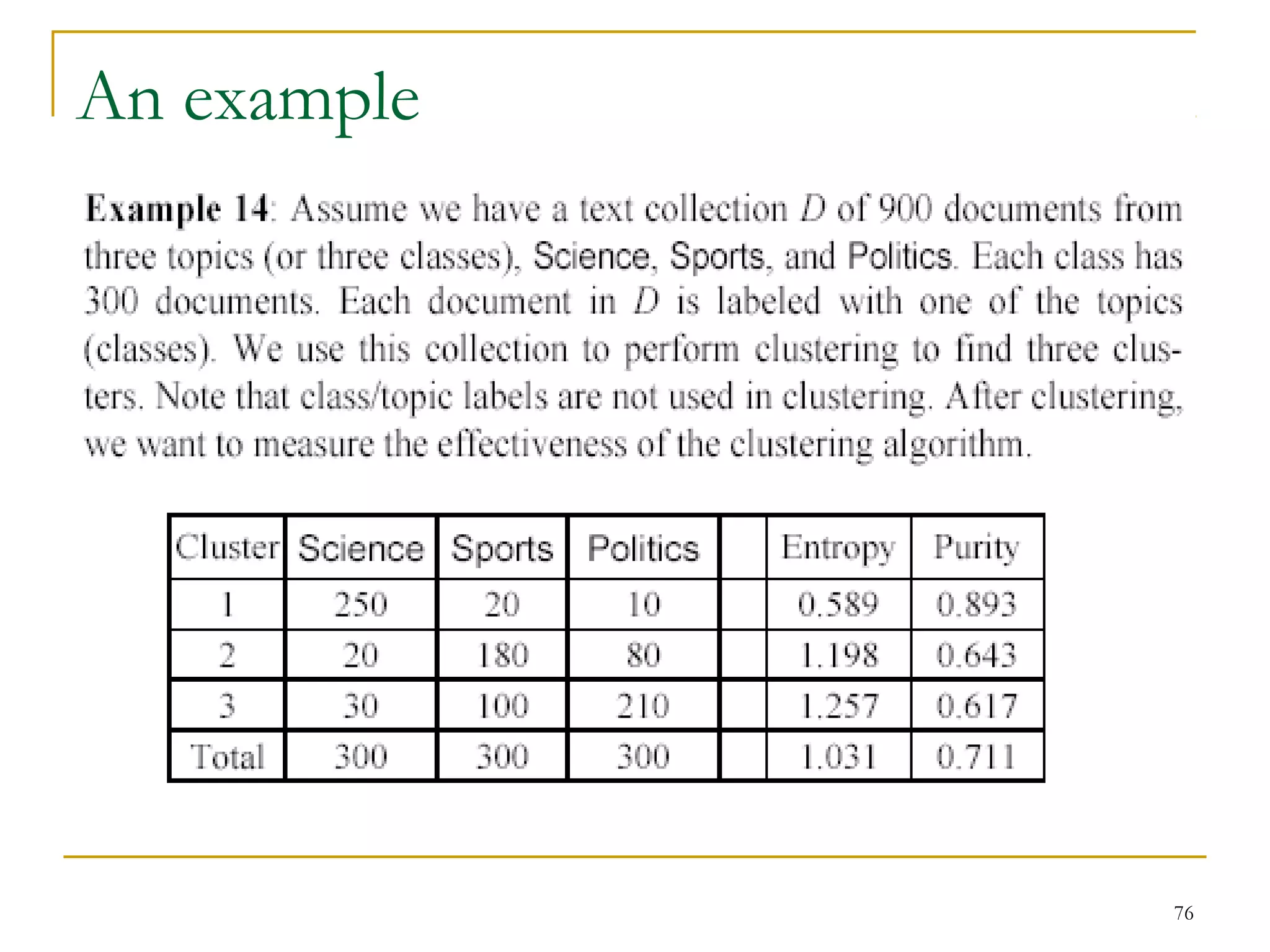

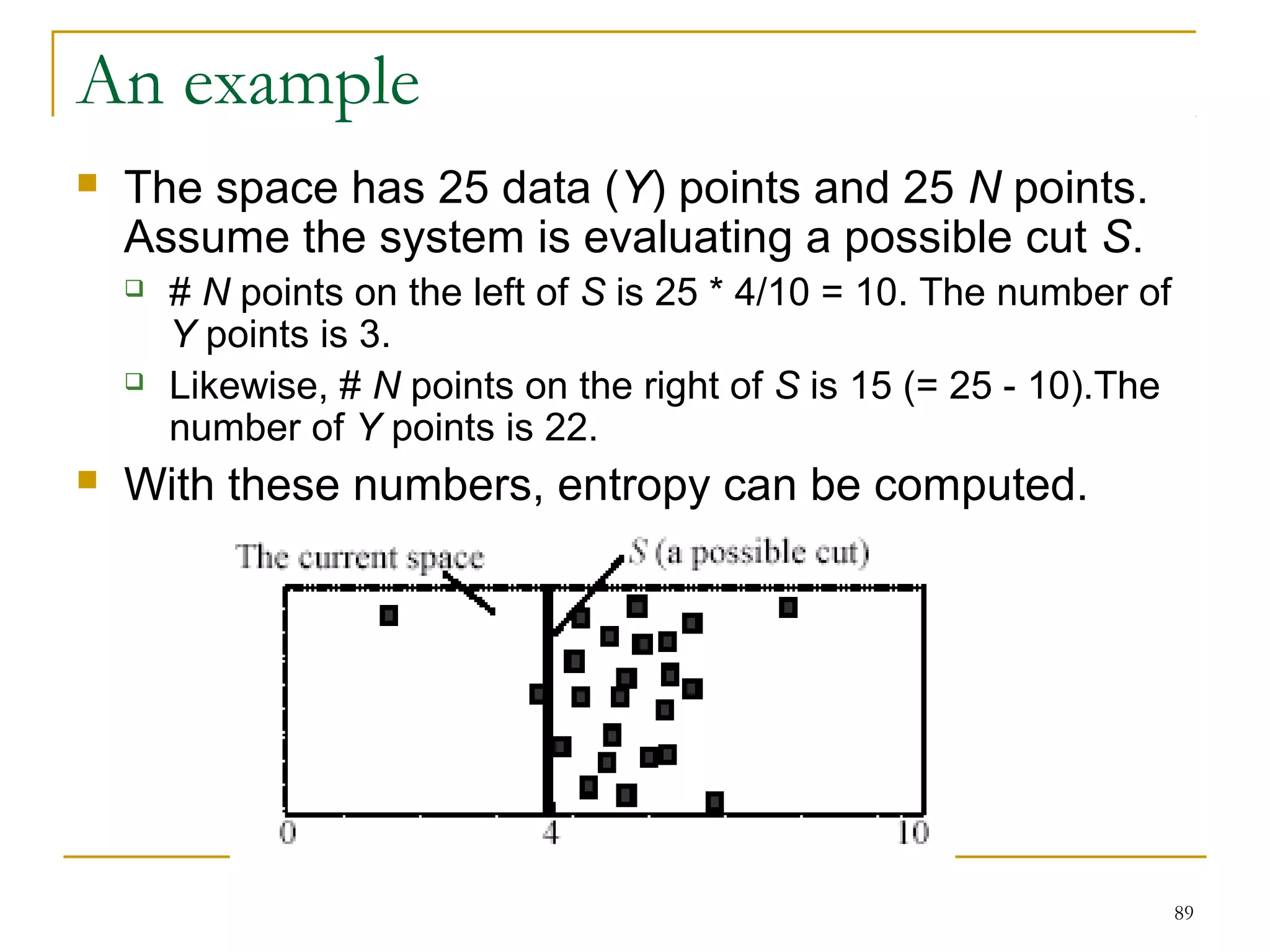

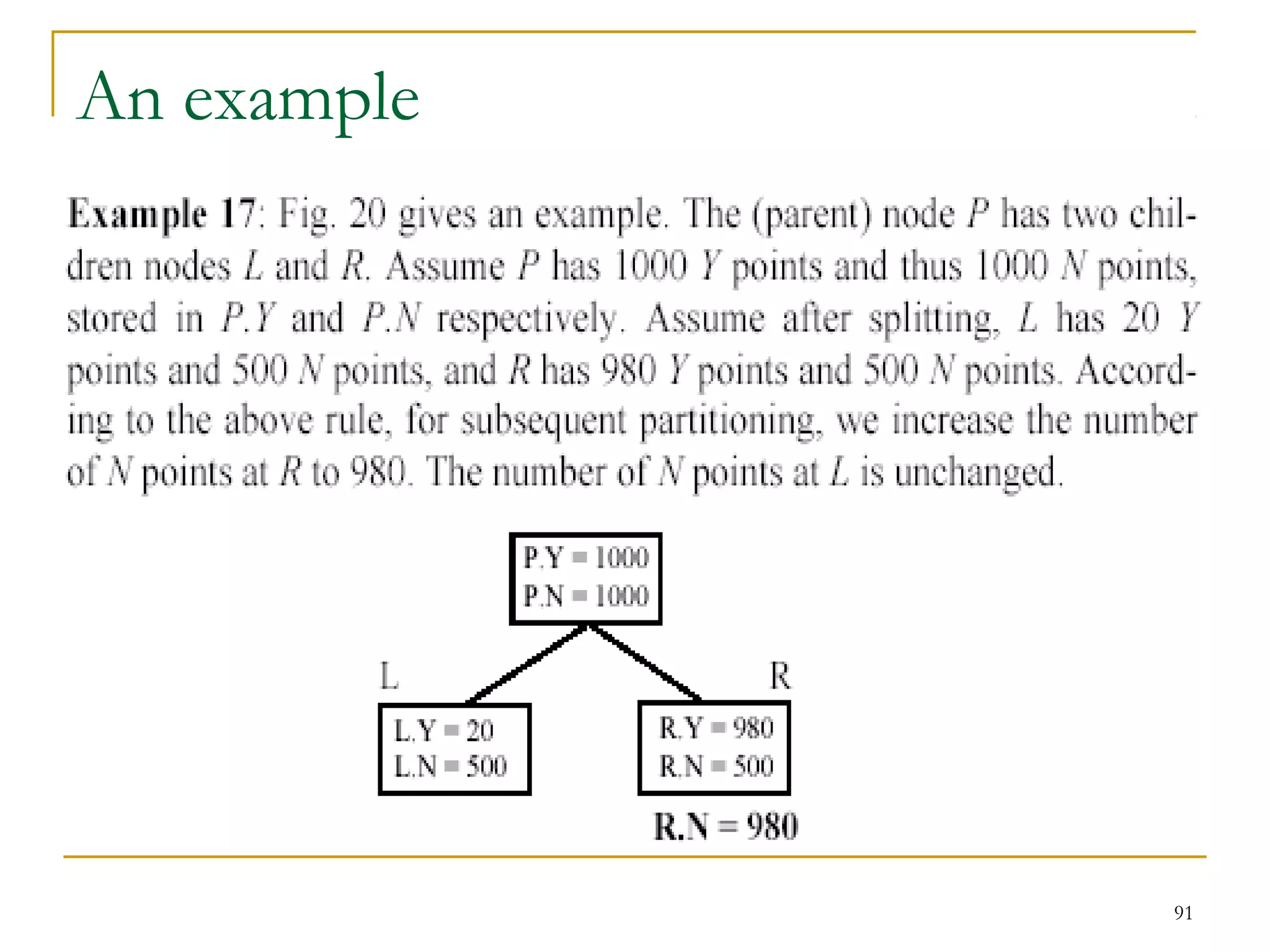

This document gives an overview of supervised and unsupervised learning, with a focus on clustering as an unsupervised learning technique. It introduces the basic concepts of clustering, in particular how clustering groups similar data points together without relying on labeled categories. It then covers two main clustering algorithms: k-means, a partitional clustering method, and hierarchical clustering. It also discusses cluster representation, distance functions, and the strengths and weaknesses of the different approaches. The document aims to introduce clustering and to compare it with supervised learning.
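To make the k-means idea concrete, here is a minimal sketch in plain Python. It is only an illustration, not the procedure from the slides: the function names (`squared_euclidean`, `kmeans`), the choice of squared Euclidean distance, the random choice of initial centroids, and the toy data are all assumptions made for this example. Note that the points carry no labels, which is what makes the task unsupervised.

```python
import random


def squared_euclidean(a, b):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, max_iters=100, seed=0):
    """Illustrative k-means: alternate assignment and centroid update.

    points: list of equal-length tuples of numbers (no labels needed).
    Returns (centroids, assignments), where assignments[i] is the index
    of the cluster that points[i] belongs to.
    """
    rng = random.Random(seed)
    # Assumption: start from k distinct data points chosen at random.
    centroids = rng.sample(points, k)
    assignments = None
    for _ in range(max_iters):
        # Assignment step: each point joins its nearest centroid.
        new_assignments = [
            min(range(k), key=lambda j: squared_euclidean(p, centroids[j]))
            for p in points
        ]
        # Stop once no point changes cluster.
        if new_assignments == assignments:
            break
        assignments = new_assignments
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, a in zip(points, assignments) if a == j]
            if members:  # keep the old centroid if a cluster is empty
                centroids[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return centroids, assignments


# Toy data: two visibly separated groups of 2-D points.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, assignments = kmeans(points, k=2)
print(assignments)
print(centroids)
```

The cluster indices themselves are arbitrary; what matters is which points end up sharing an index. On less cleanly separated data the result can depend on the initial centroids, which is one of the commonly cited weaknesses of k-means.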