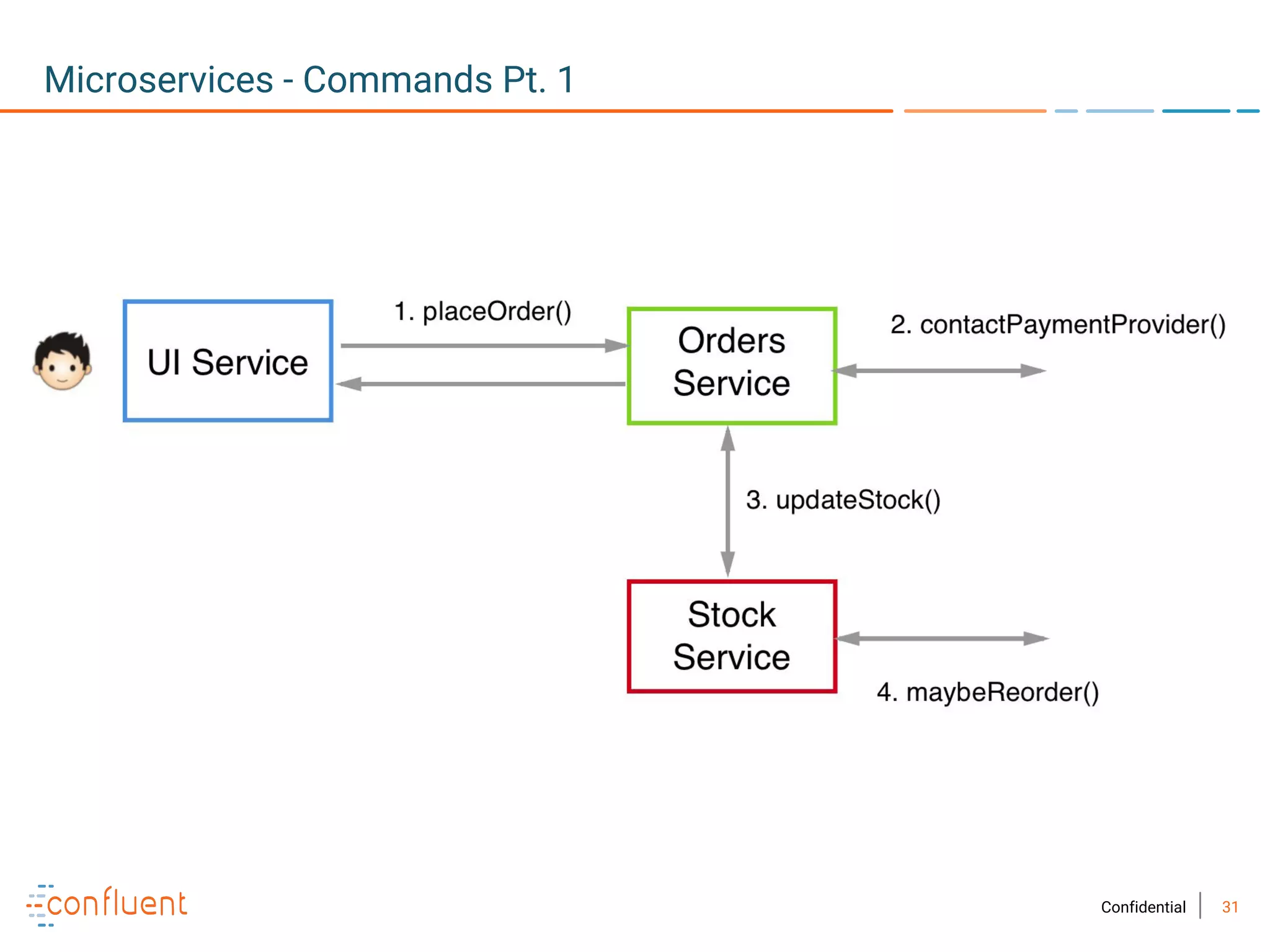

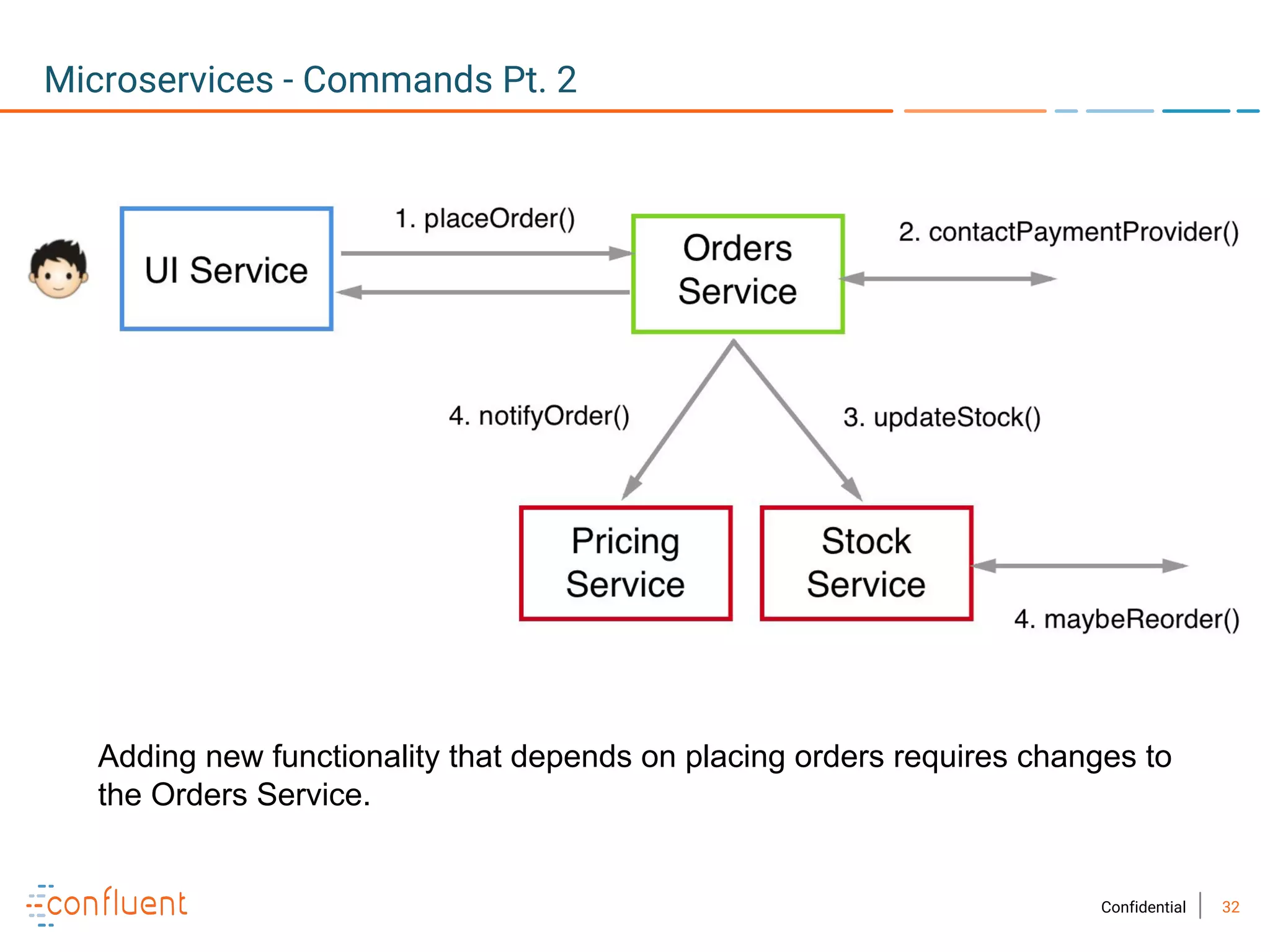

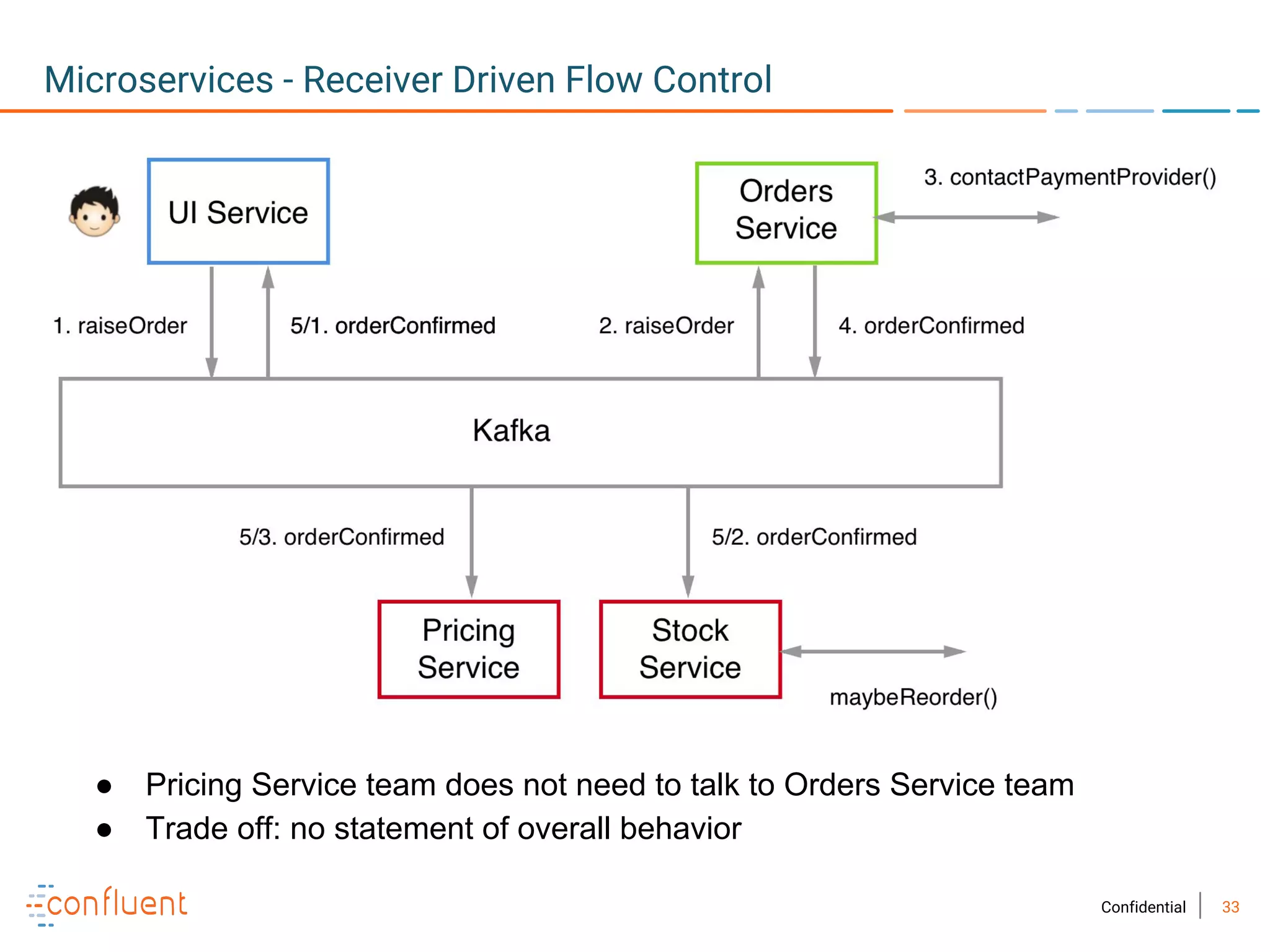

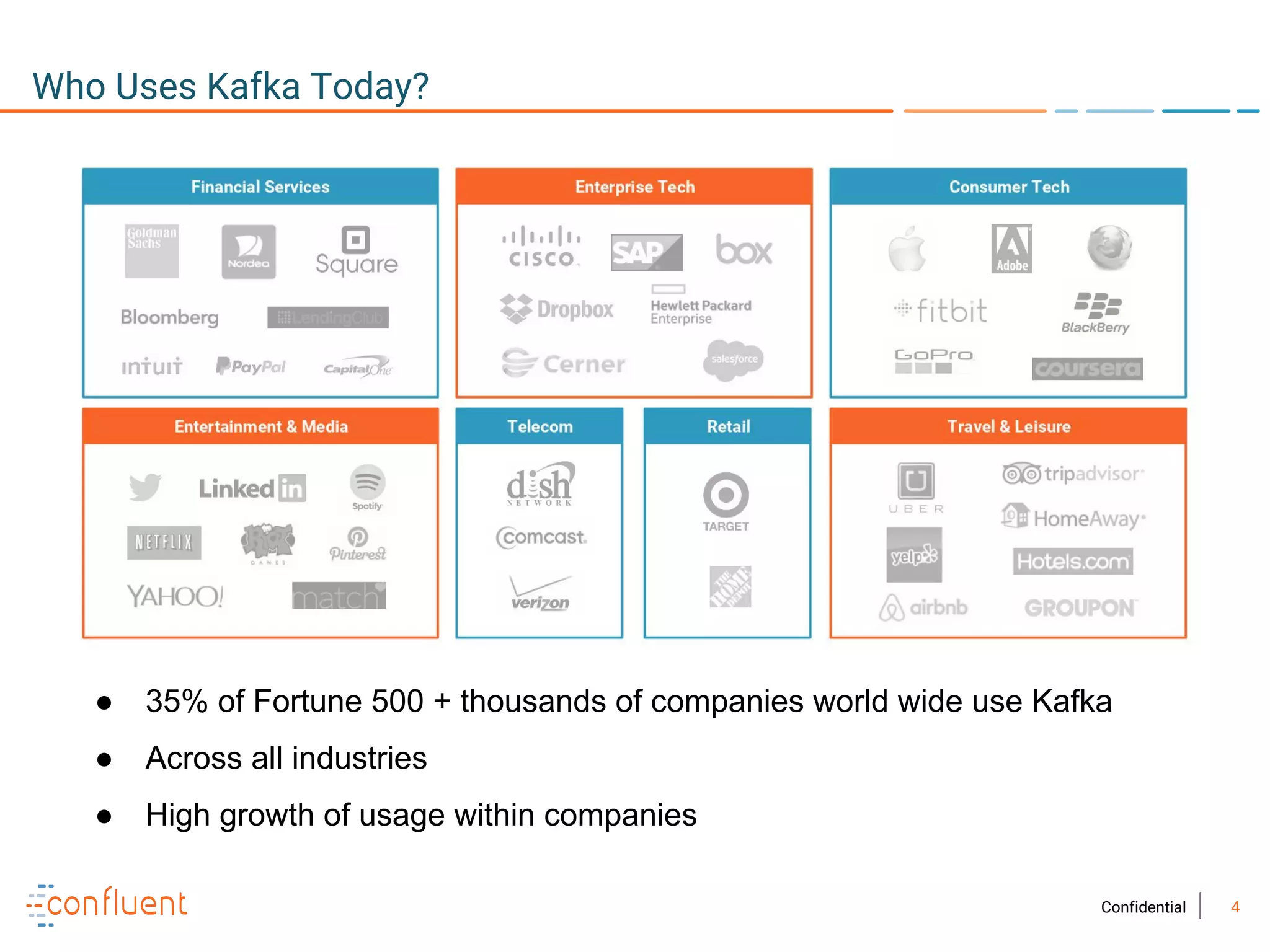

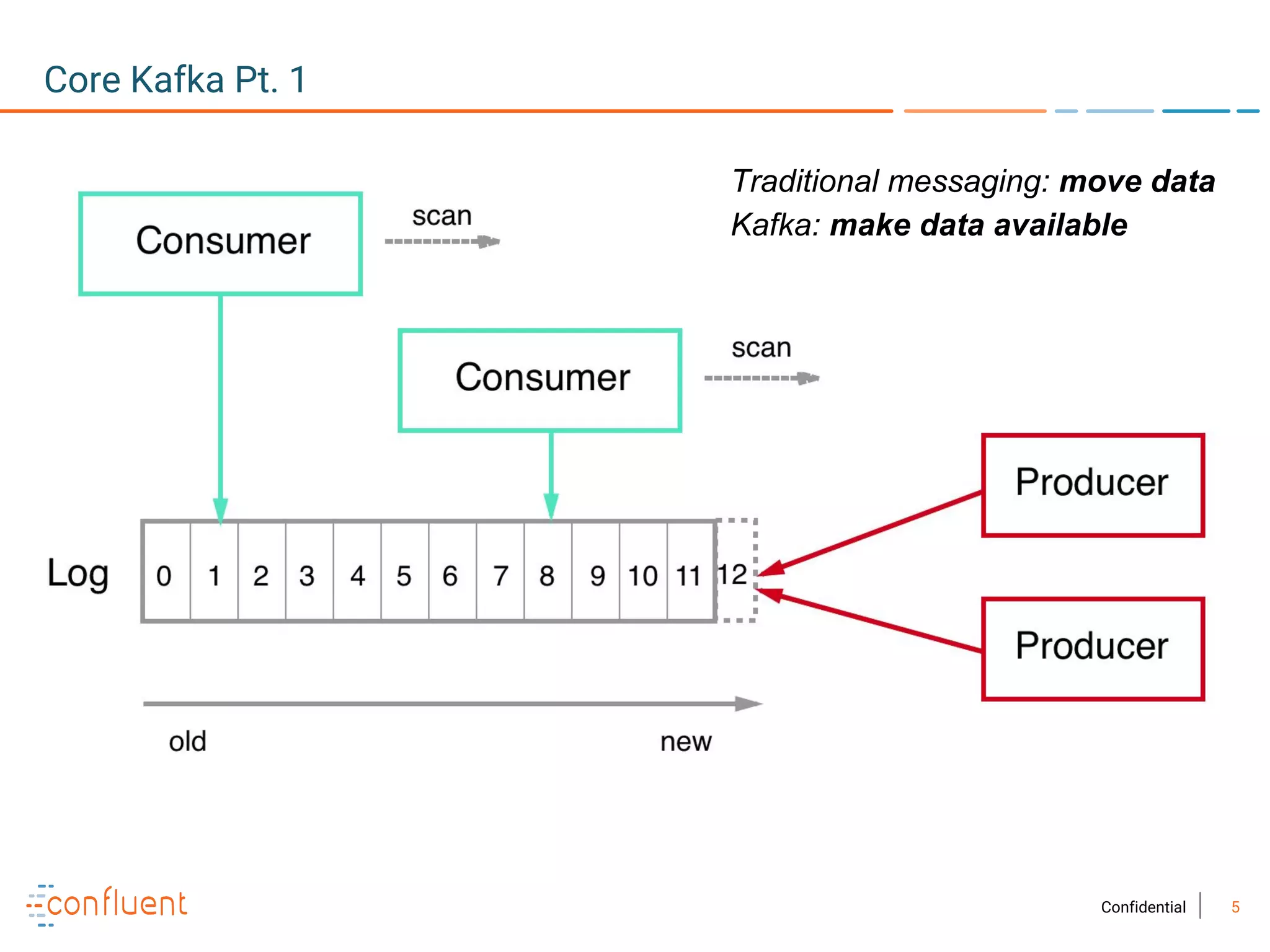

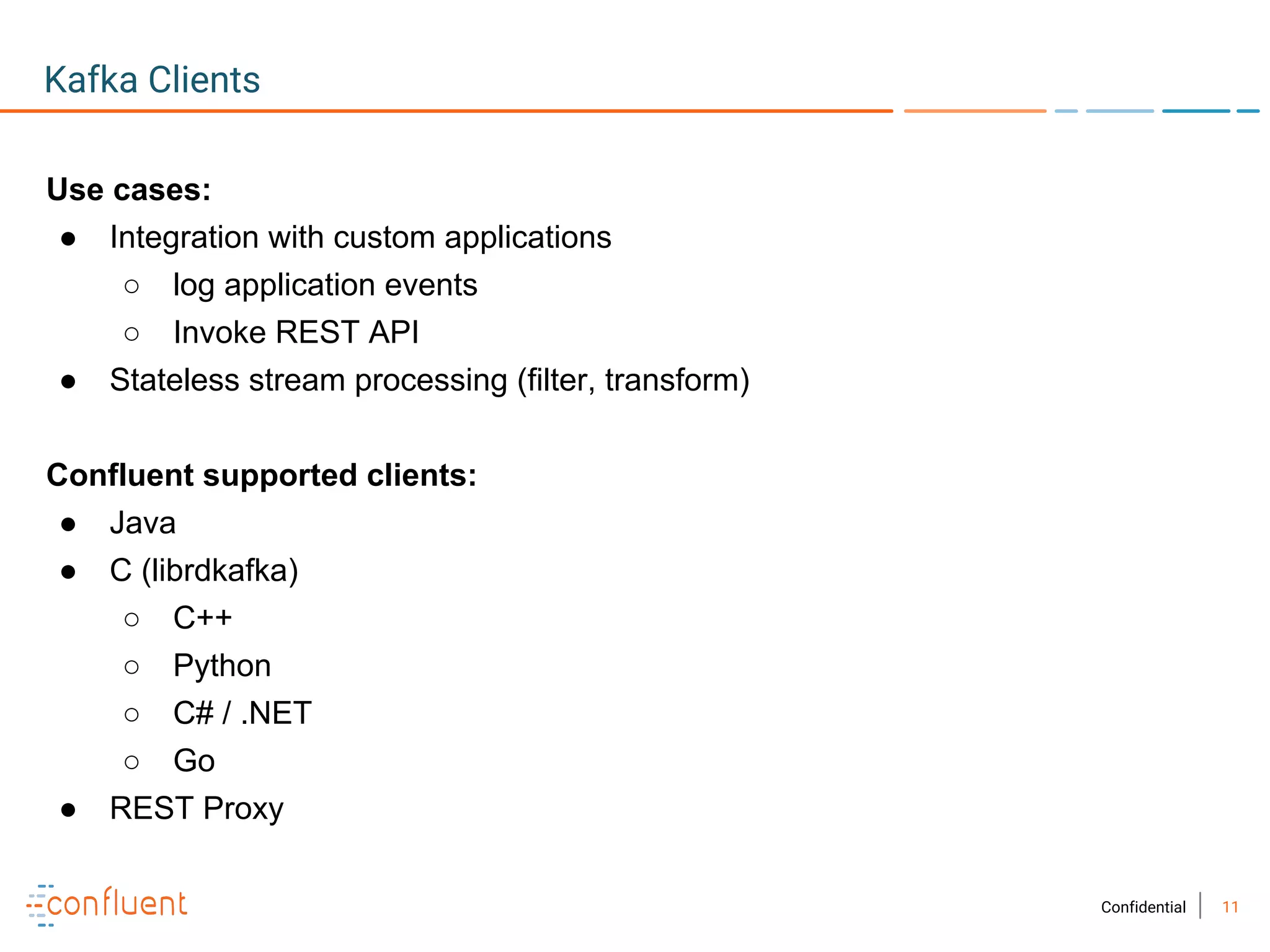

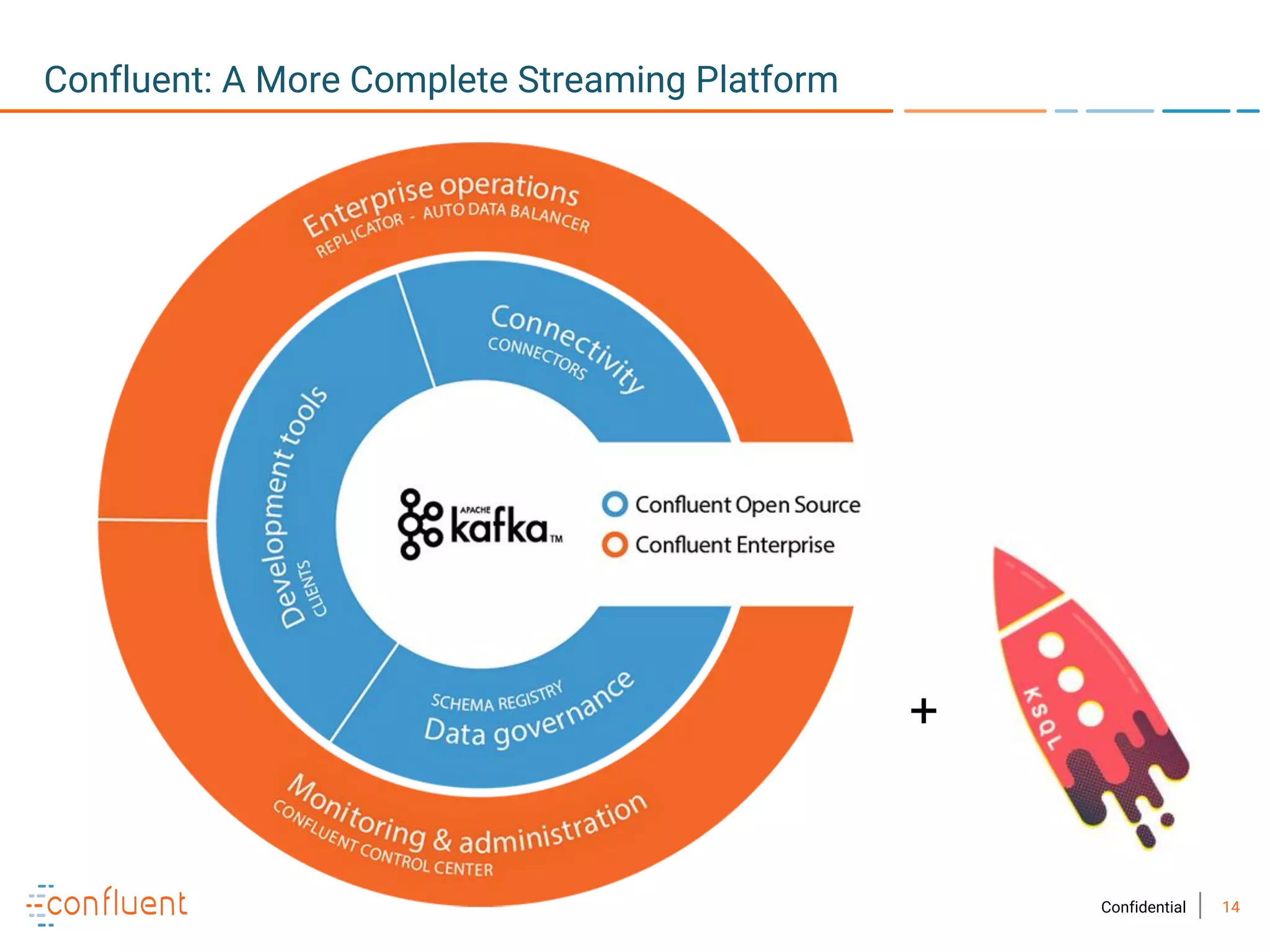

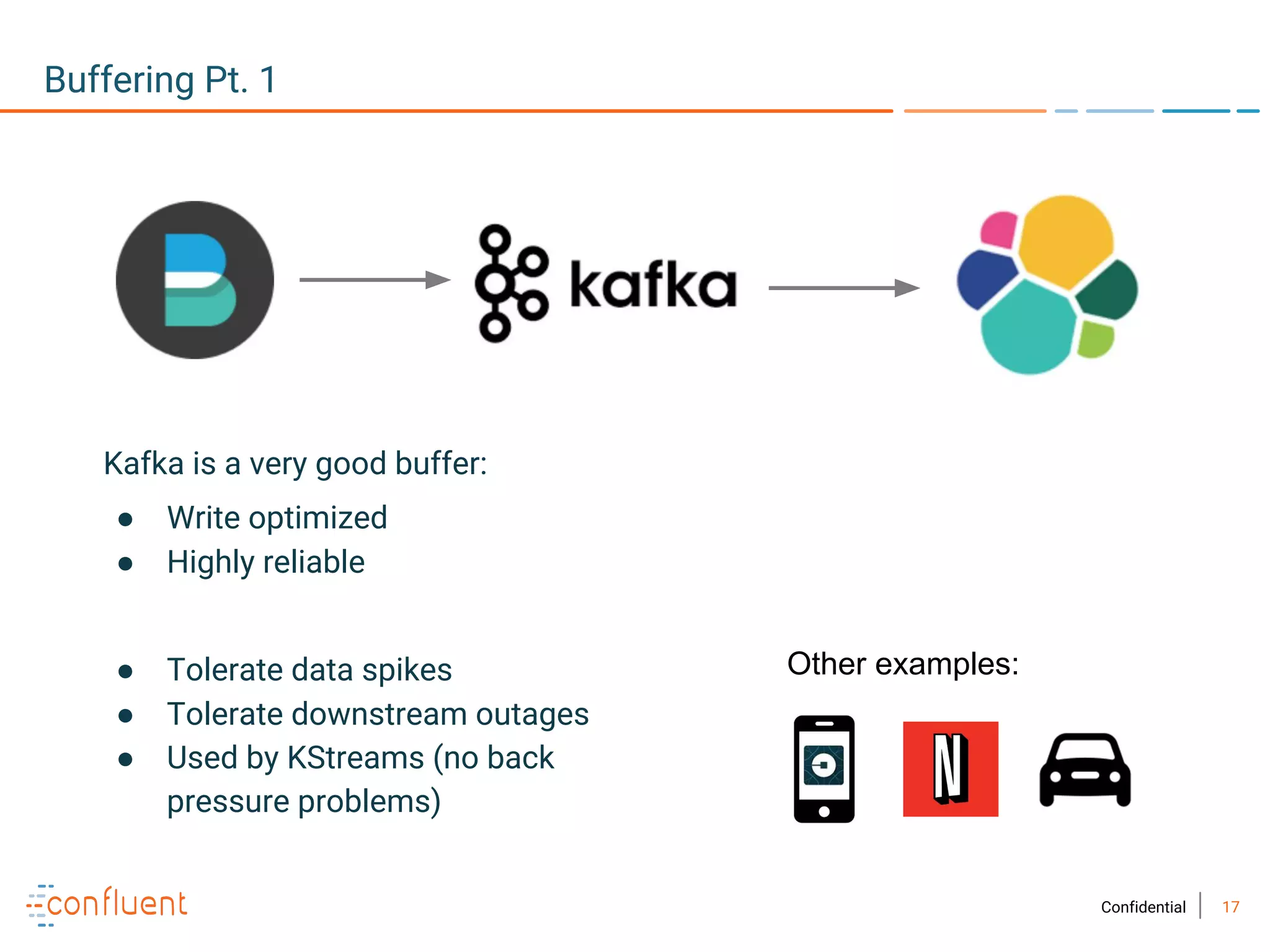

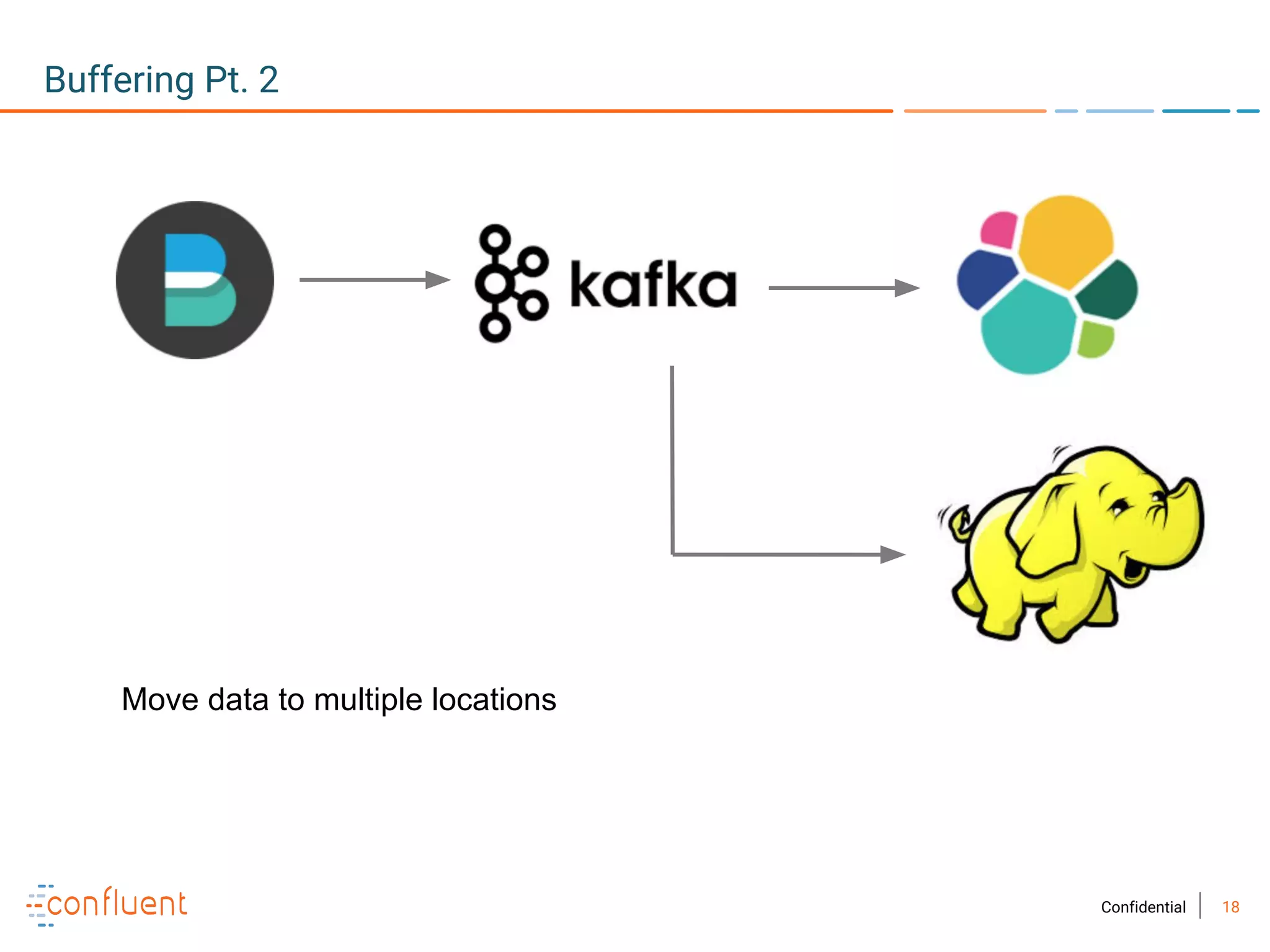

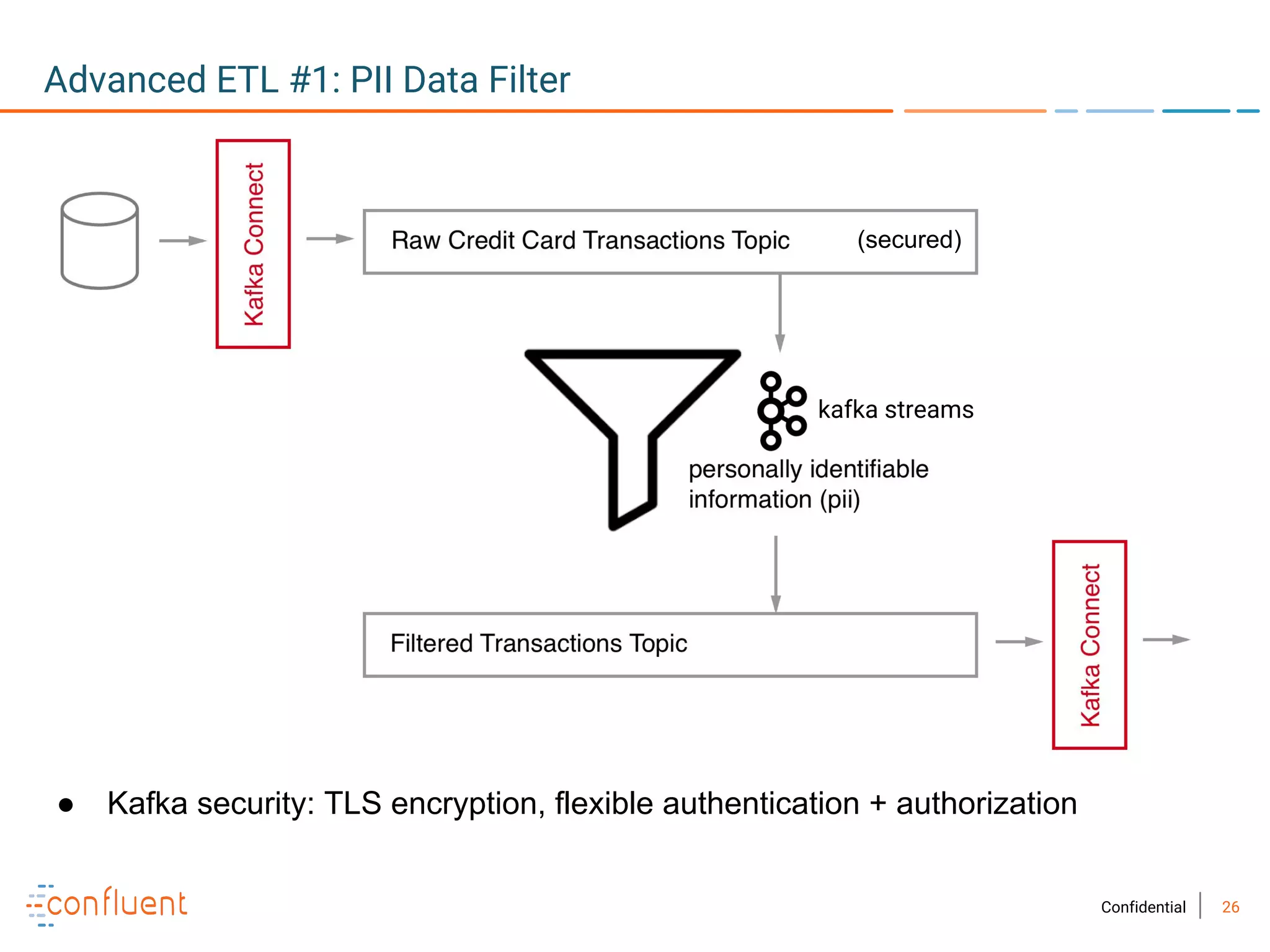

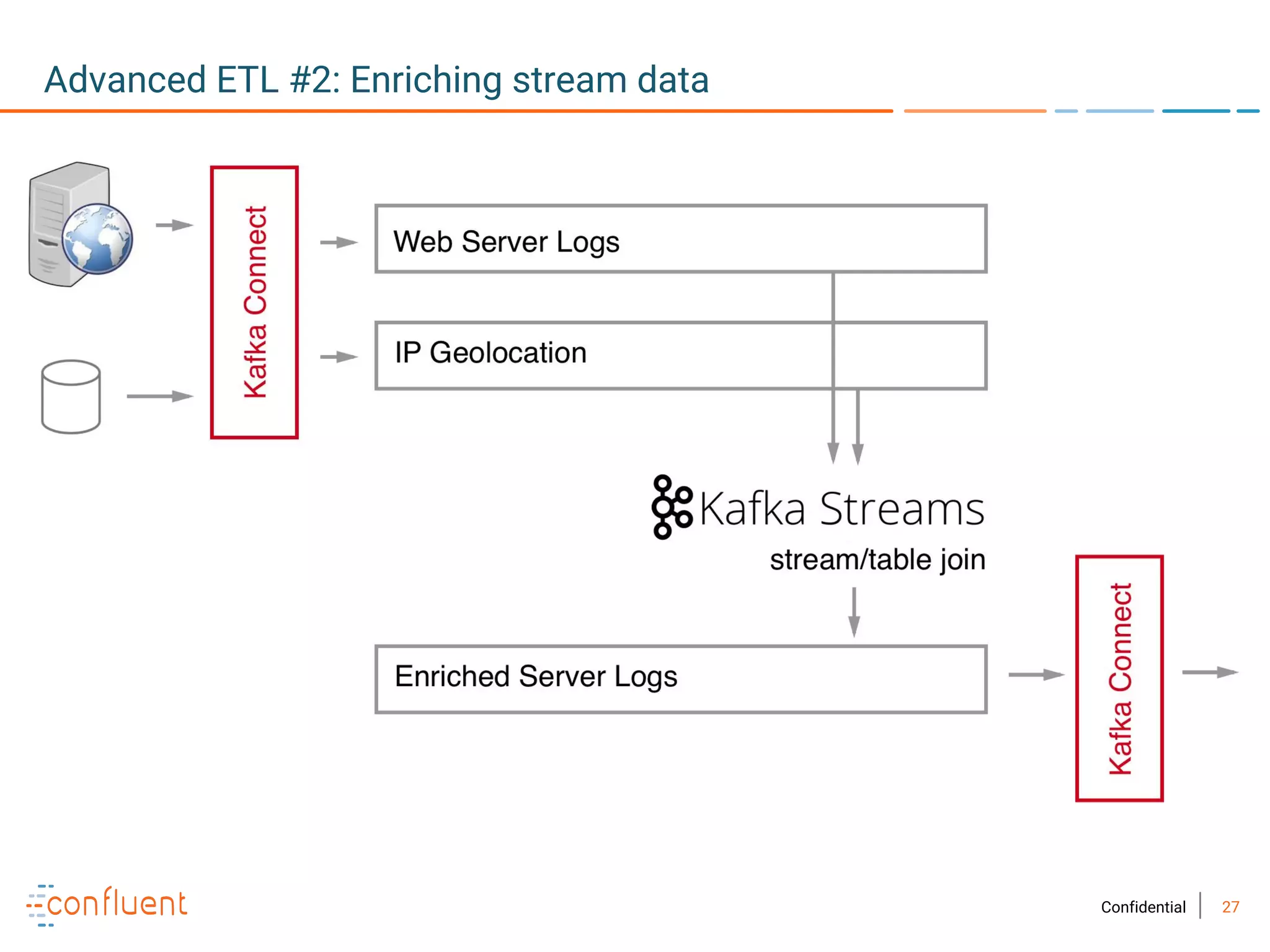

The document provides an overview of Apache Kafka, a streaming platform widely used by Fortune 500 companies for real-time data processing. It highlights Kafka's strengths in scalability, durability, and integration capabilities, as well as its applications in microservices and data integration. It also discusses specific use cases, Kafka clients, and how it can serve as a commit log for organizations.

![29Confidential

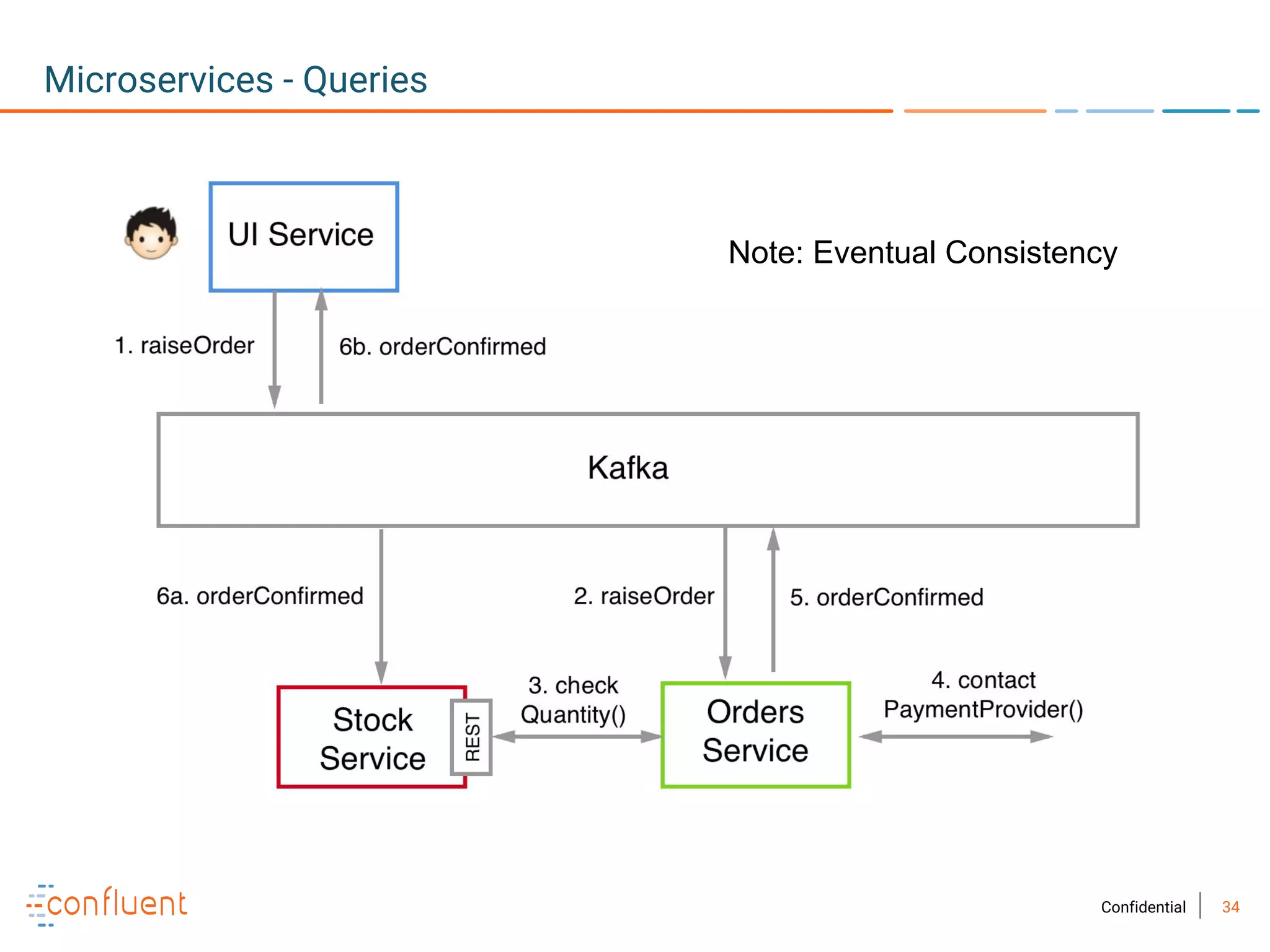

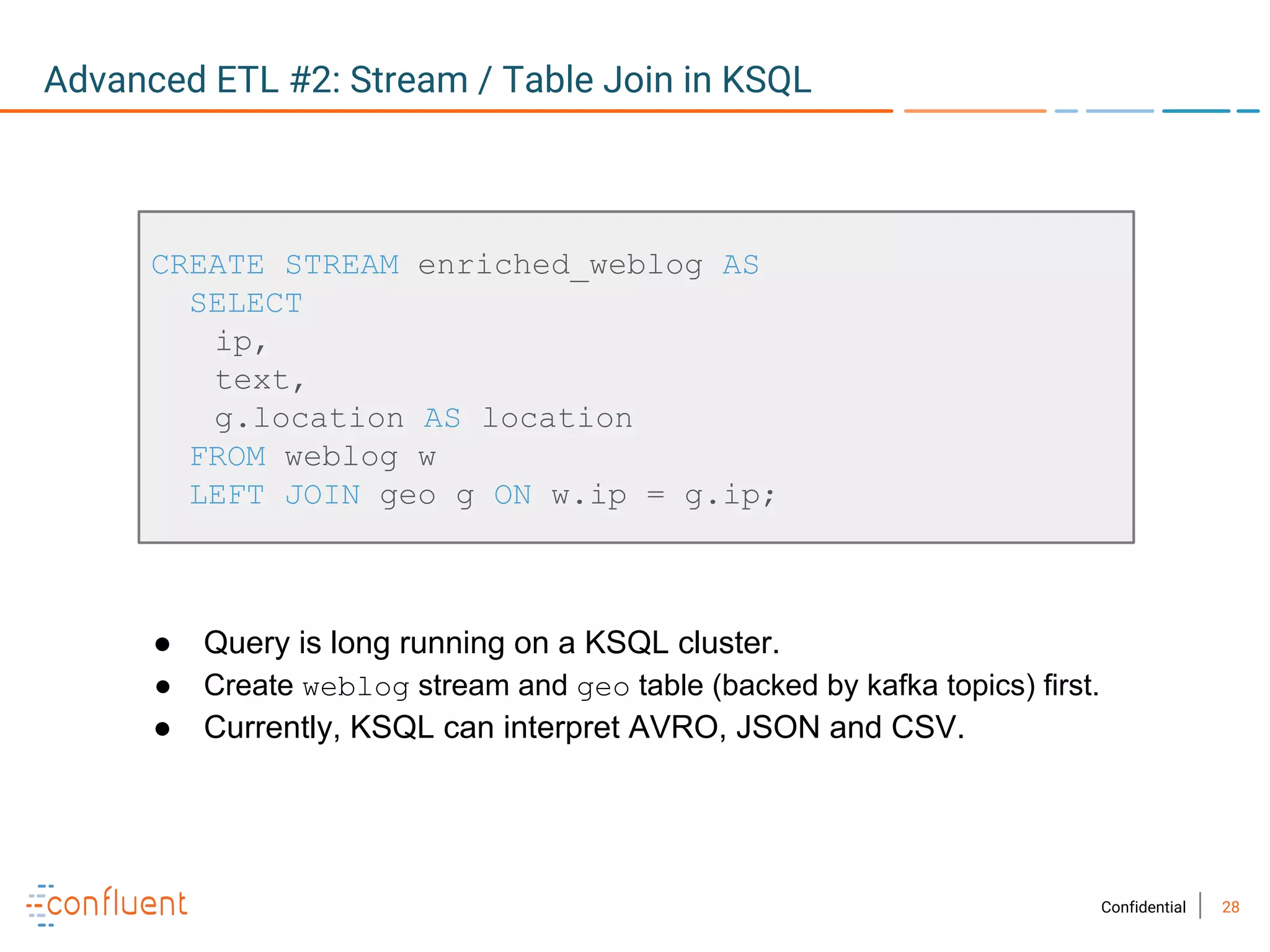

Stream Processing App #1: Anomaly Detection / Alerting

CREATE TABLE possible_fraud AS

SELECT card_number, count(*)

FROM authorization_attempts

WINDOW TUMBLING (SIZE 10 SECONDS)

GROUP BY card_number

HAVING count(*) > 3;

● Use Kafka Streams

and/or additional

input streams in

more sophisticated

algorithm

● possible_fraud

is a change log

stream where key is

[card_number,

window_start]

authorization_attempts

possible_fraud

SMS Gateway](https://image.slidesharecdn.com/tourofkafka-bangkokandsingapore-181105170304/75/A-Tour-of-Apache-Kafka-29-2048.jpg)
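To make the semantics of the windowed query on this slide concrete, here is a minimal Python sketch that reproduces the same logic over an in-memory list. It is not KSQL or Kafka Streams code: the function names, the `(card_number, timestamp_ms)` record shape, and the sample card numbers are all assumptions made for illustration. It also computes the result in one batch, whereas the real `possible_fraud` table would emit updates continuously as counts change.

```python
from collections import Counter

WINDOW_SIZE_MS = 10_000  # mirrors WINDOW TUMBLING (SIZE 10 SECONDS)
THRESHOLD = 3            # mirrors HAVING count(*) > 3


def window_start(timestamp_ms: int, size_ms: int = WINDOW_SIZE_MS) -> int:
    """Align a timestamp to the start of its tumbling window (epoch-aligned)."""
    return timestamp_ms - (timestamp_ms % size_ms)


def possible_fraud(attempts):
    """Count attempts per (card_number, window_start); keep counts above the threshold.

    `attempts` is an iterable of (card_number, timestamp_ms) pairs
    (a hypothetical record shape chosen for this sketch).
    """
    counts = Counter()
    for card_number, timestamp_ms in attempts:
        counts[(card_number, window_start(timestamp_ms))] += 1
    return {key: n for key, n in counts.items() if n > THRESHOLD}


# Hypothetical sample data: four attempts on one card inside the first window.
attempts = [
    ("4111-0001", 1_000),
    ("4111-0001", 2_500),
    ("4111-0001", 4_000),
    ("4111-0001", 9_999),   # still in window [0, 10000)
    ("4111-0001", 10_000),  # first event of the next window
    ("4222-0002", 3_000),
]

flagged = possible_fraud(attempts)
print(flagged)  # {('4111-0001', 0): 4}
```

The result is keyed by `(card_number, window_start)`, matching the slide's note about the key of `possible_fraud`. A downstream consumer such as the SMS gateway in the diagram would react to each flagged key.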