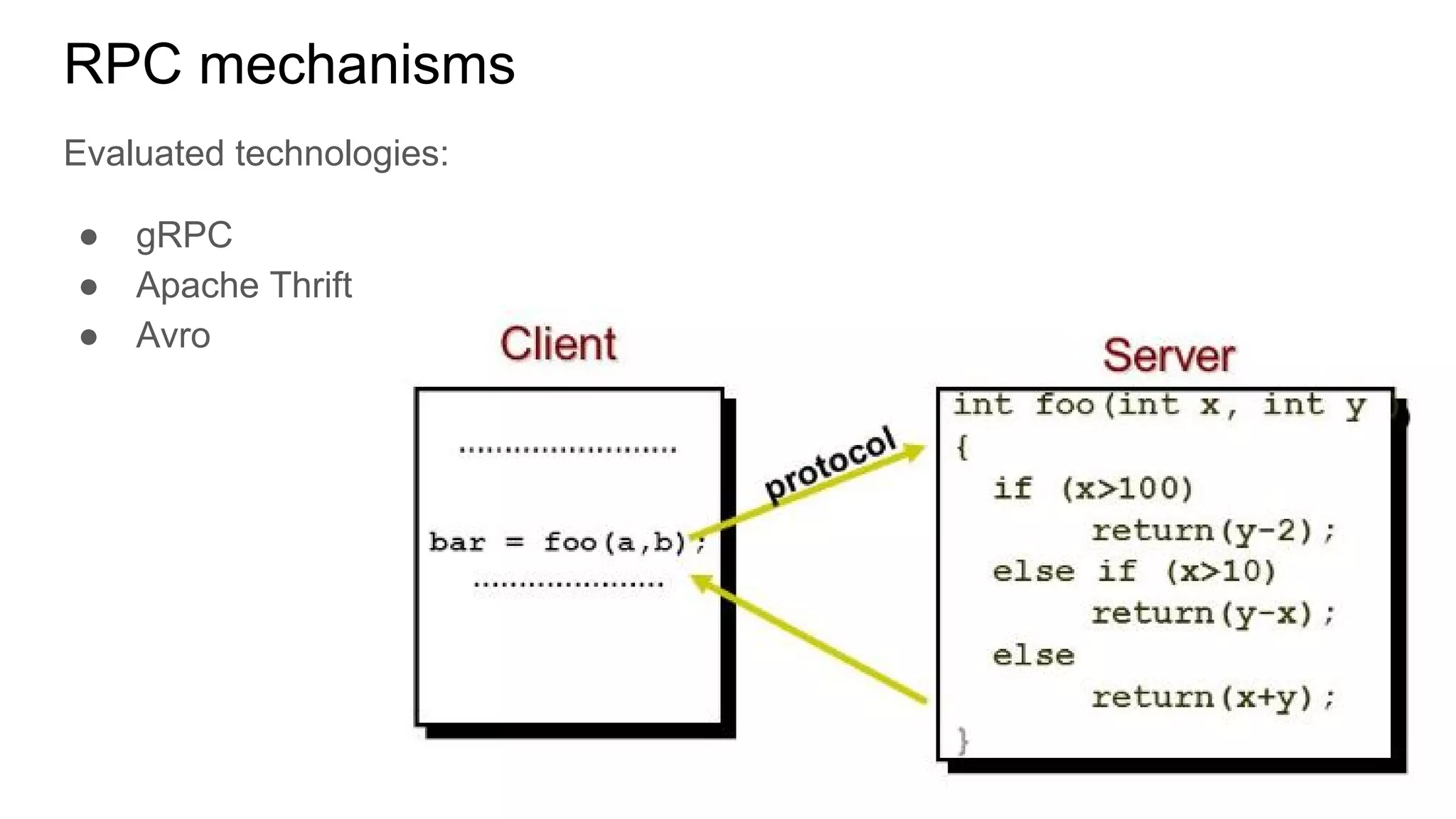

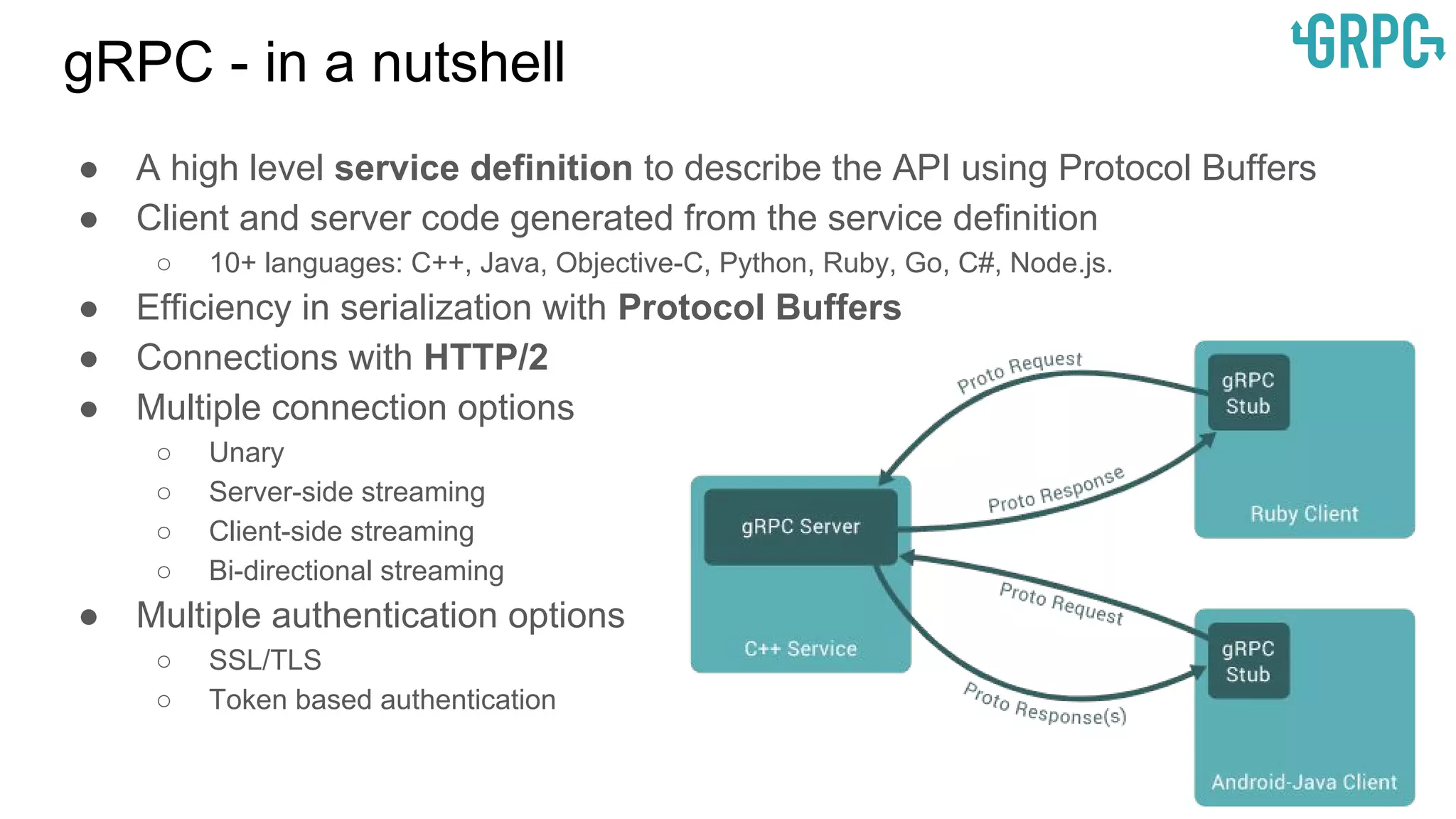

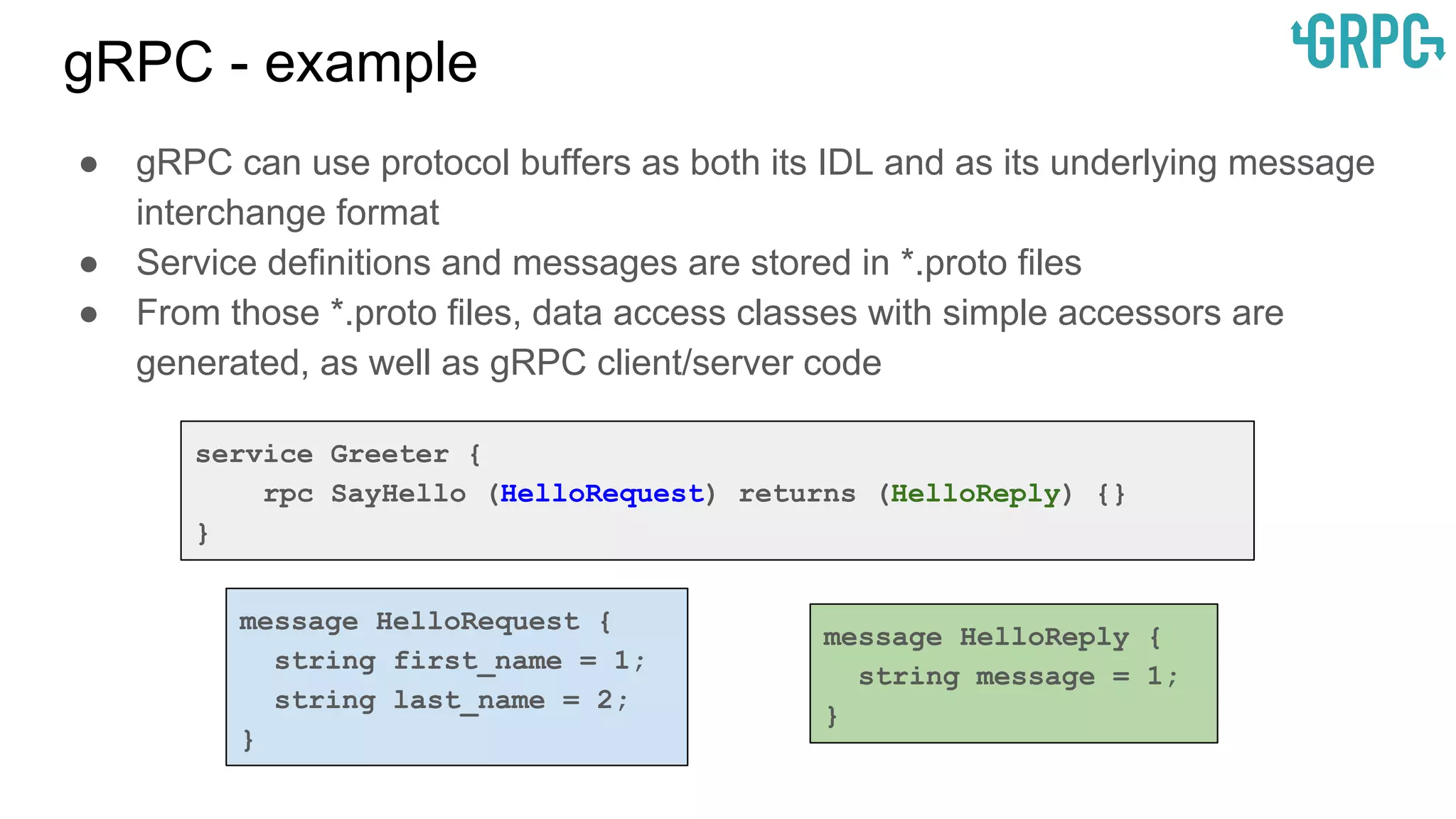

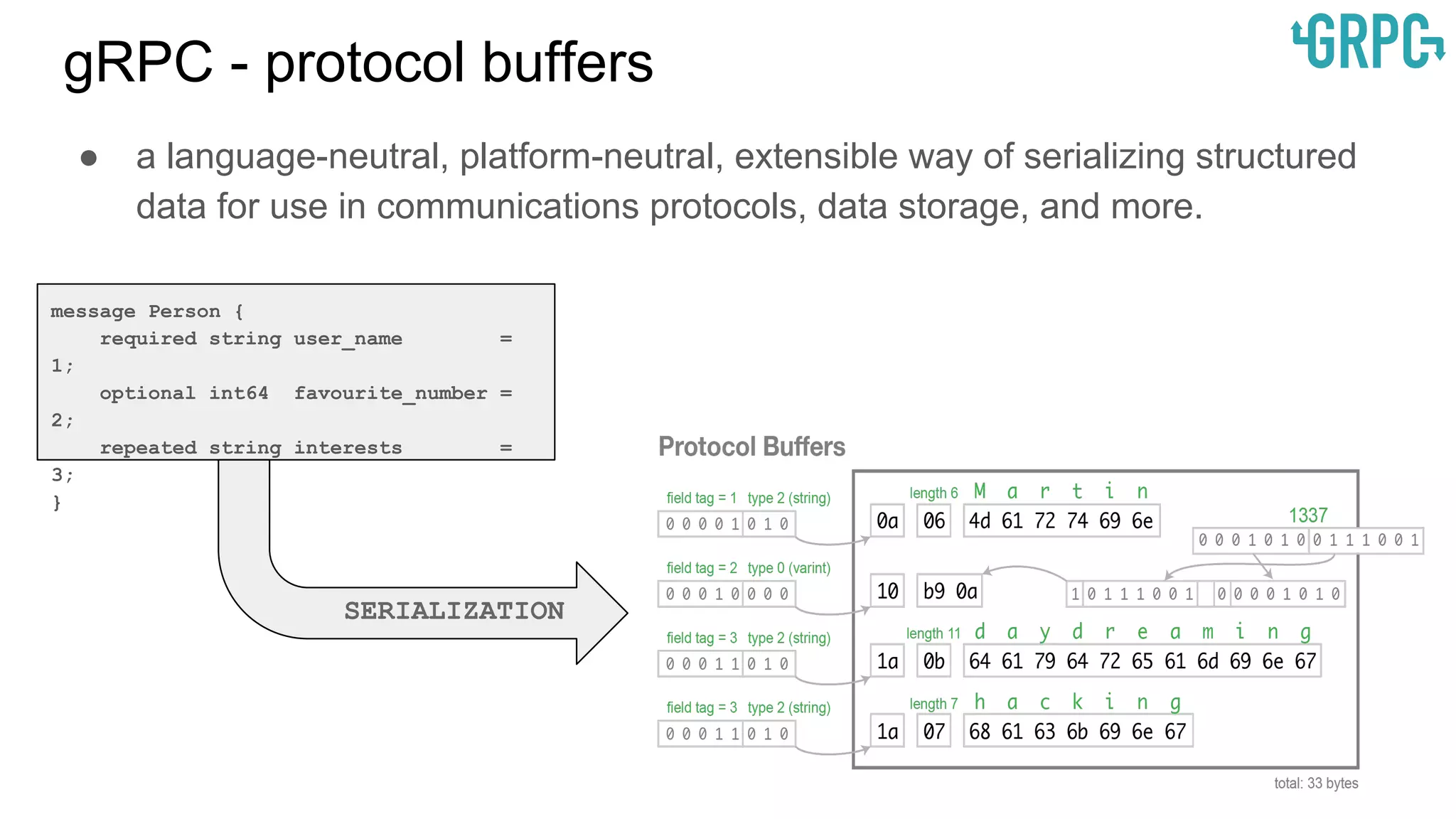

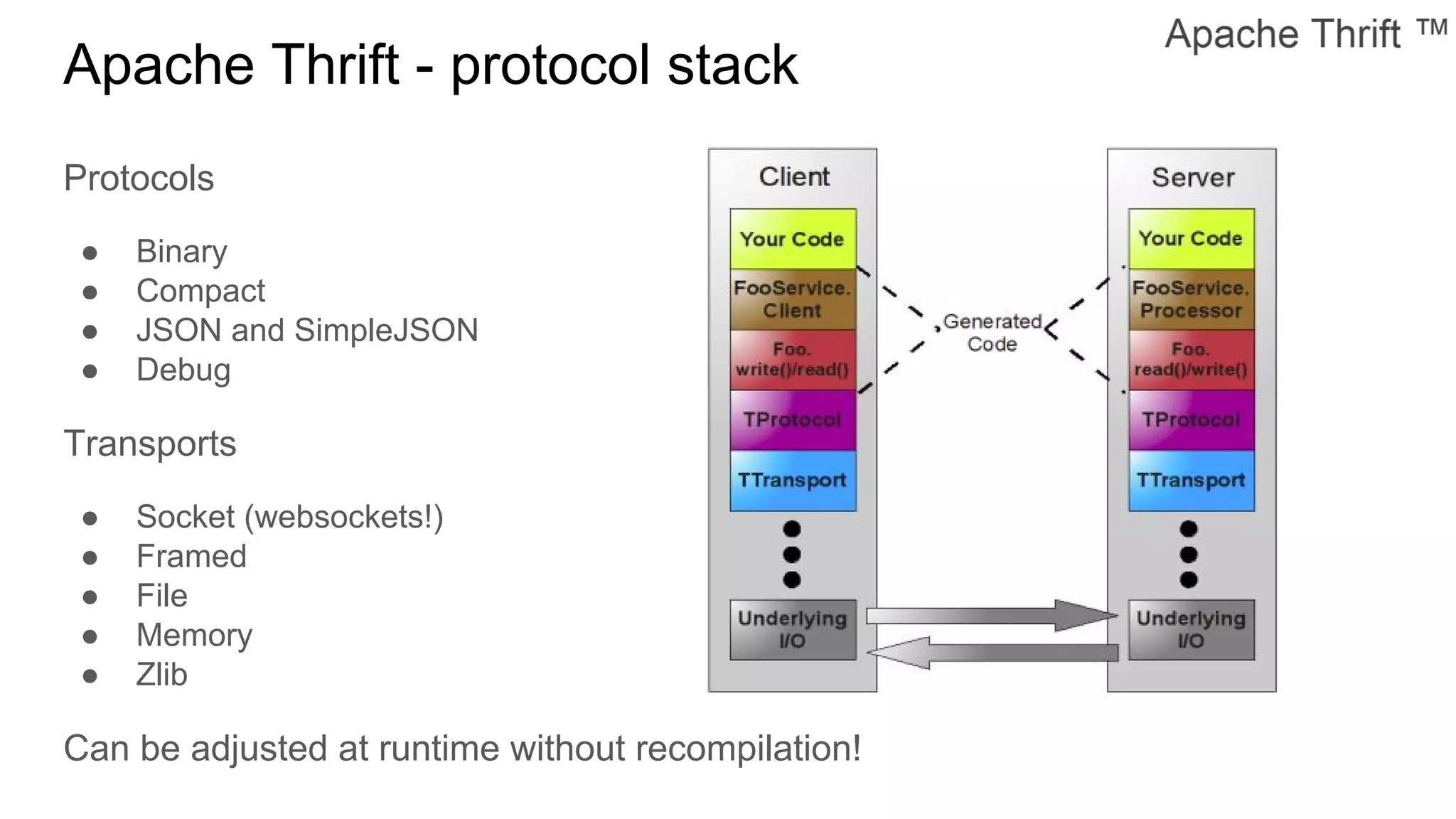

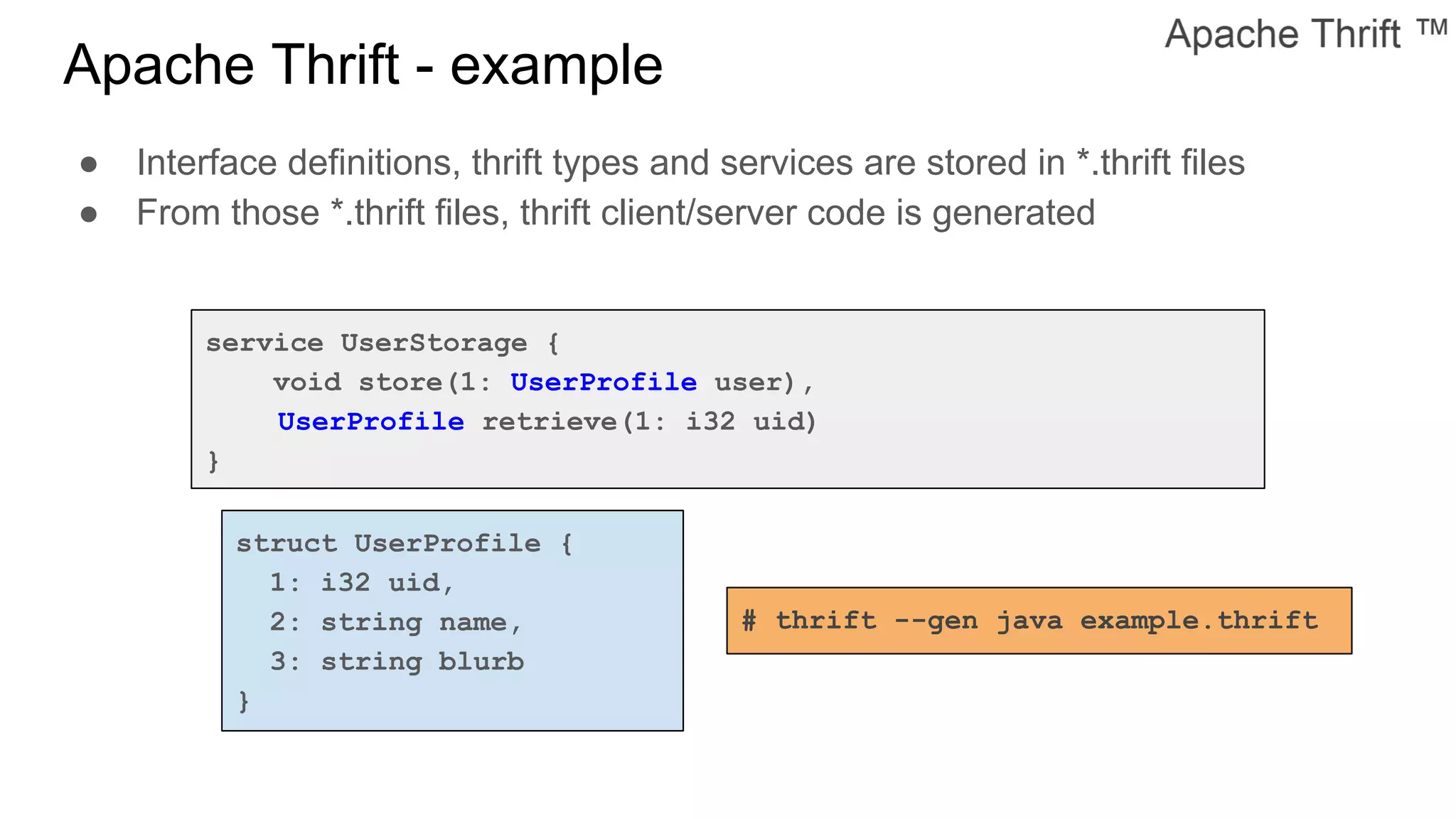

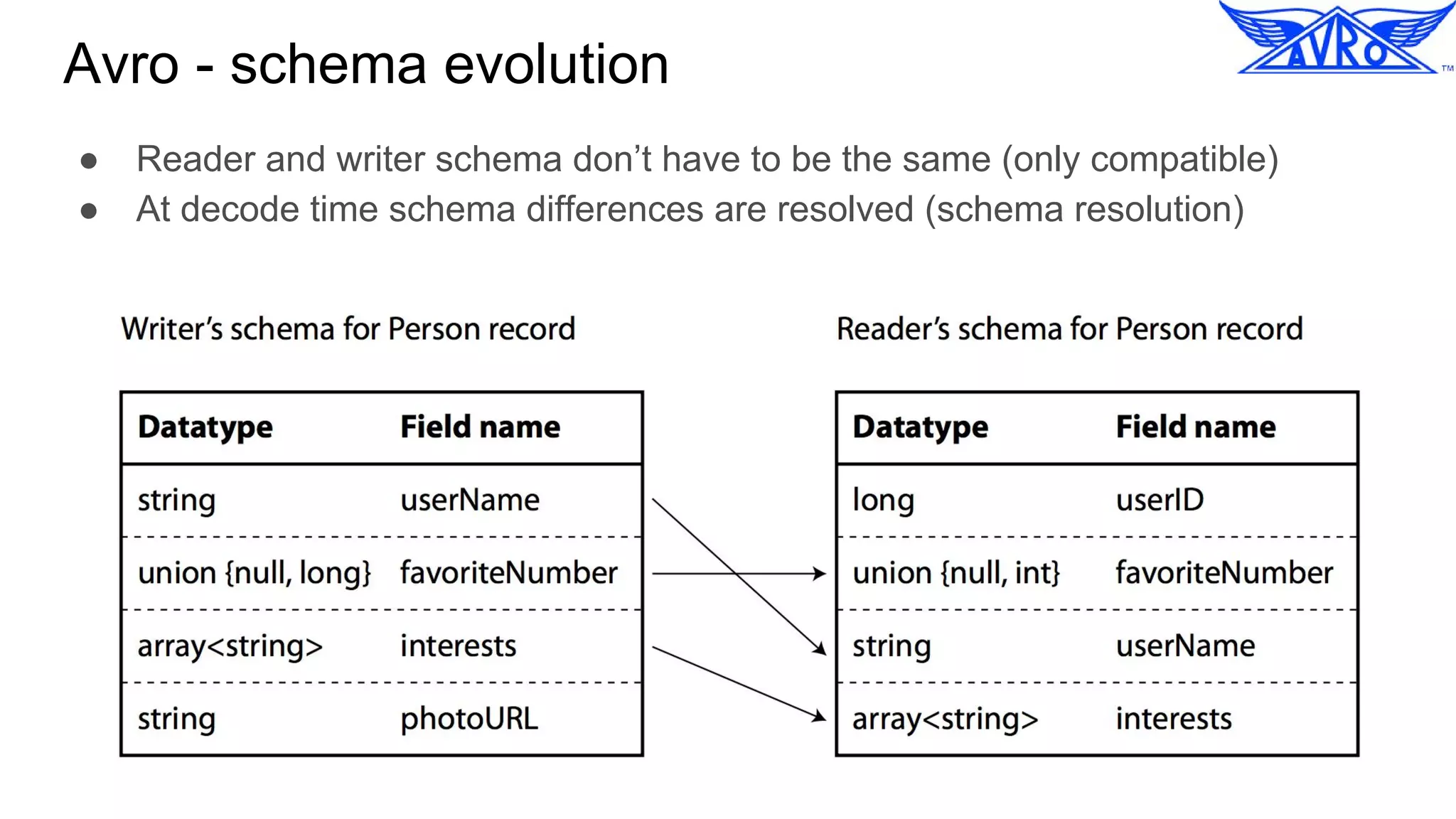

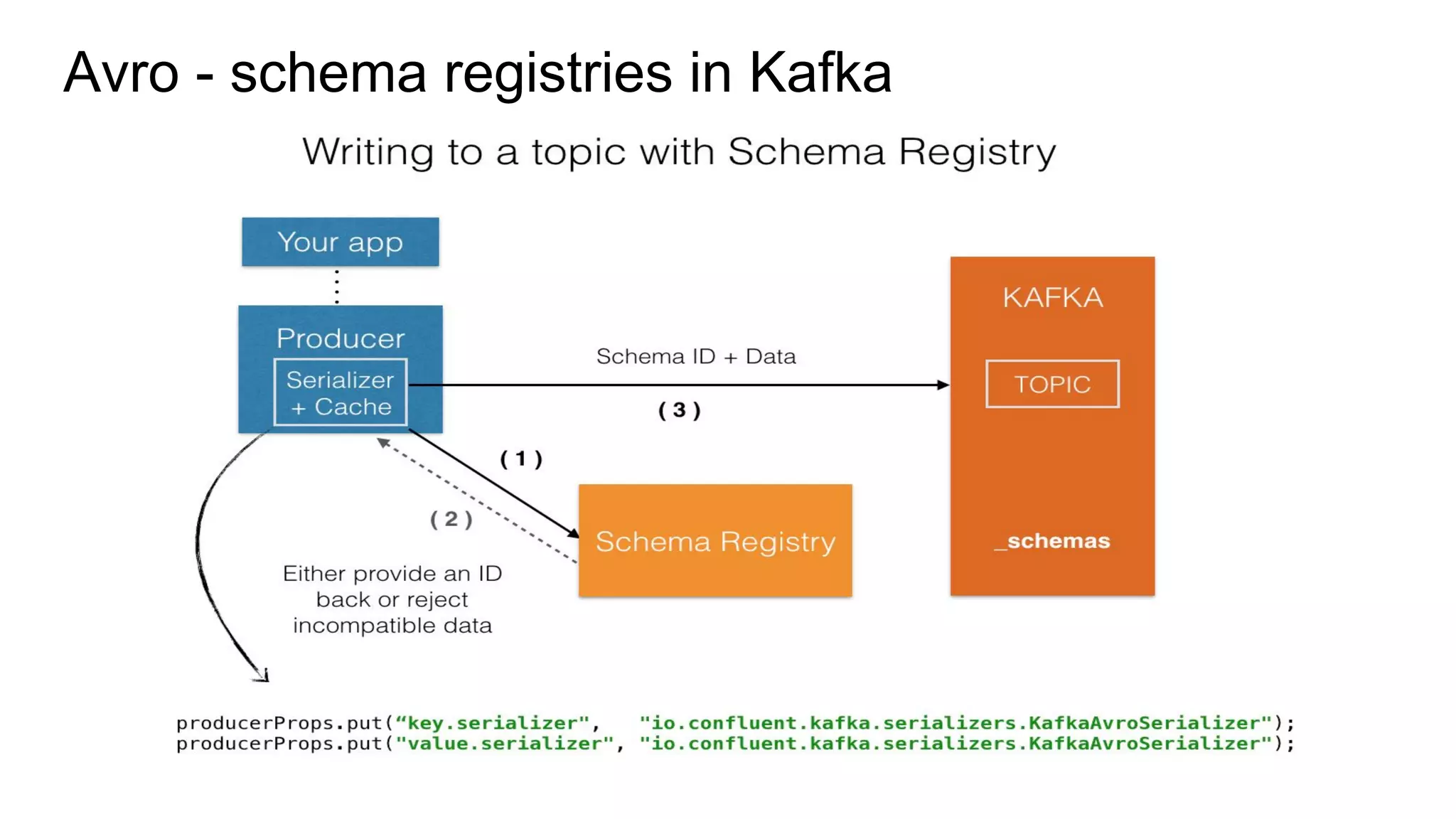

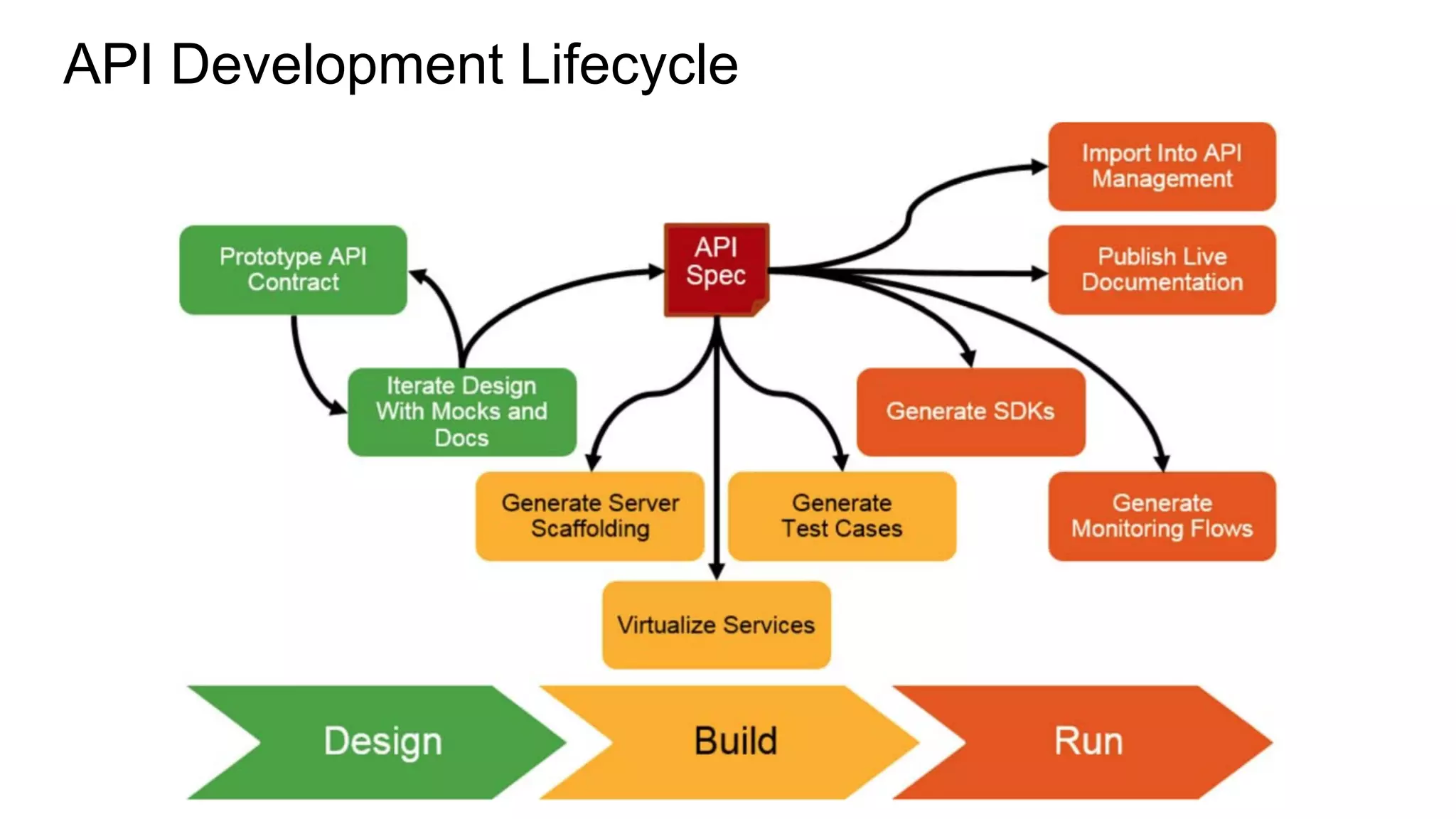

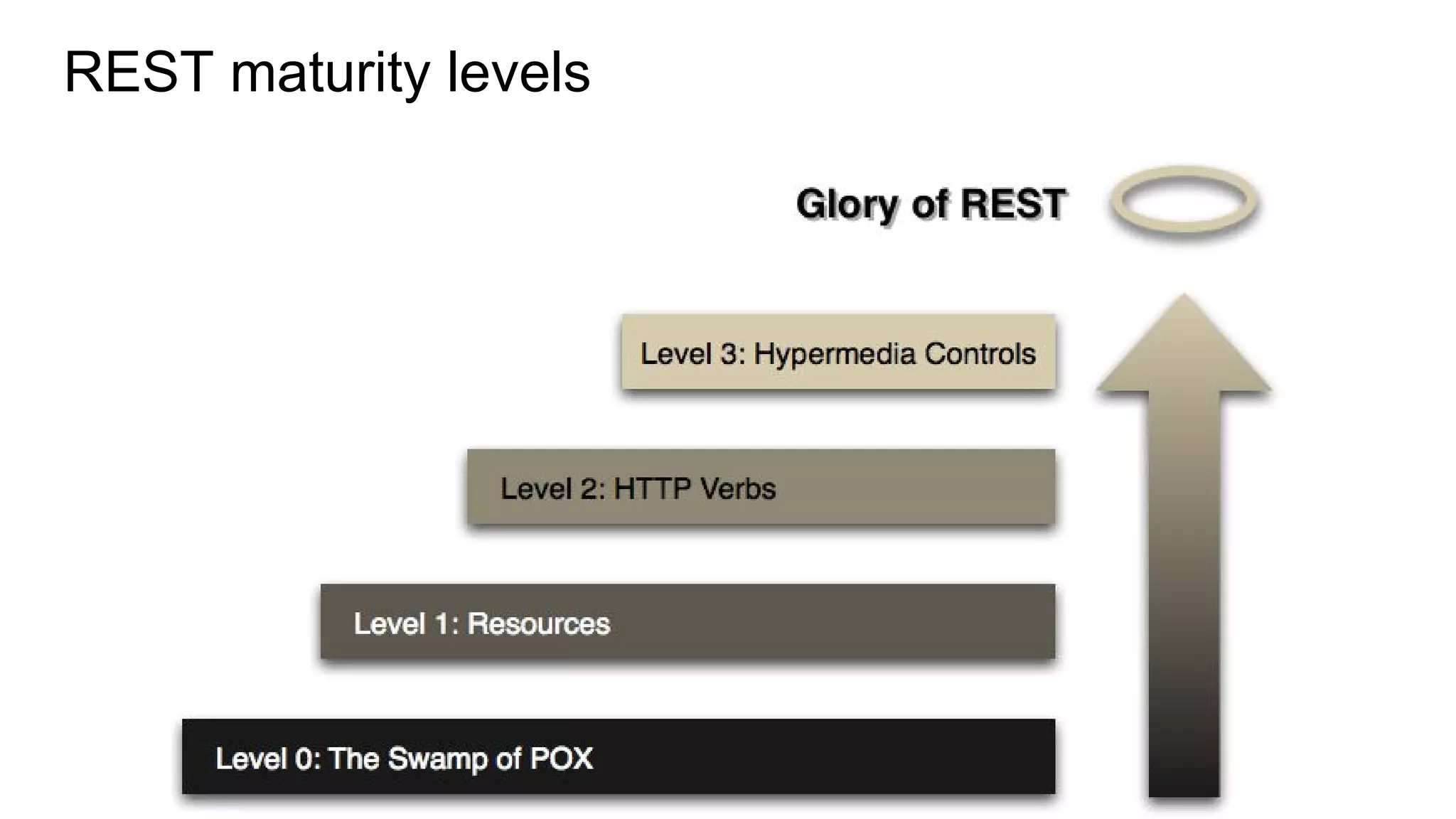

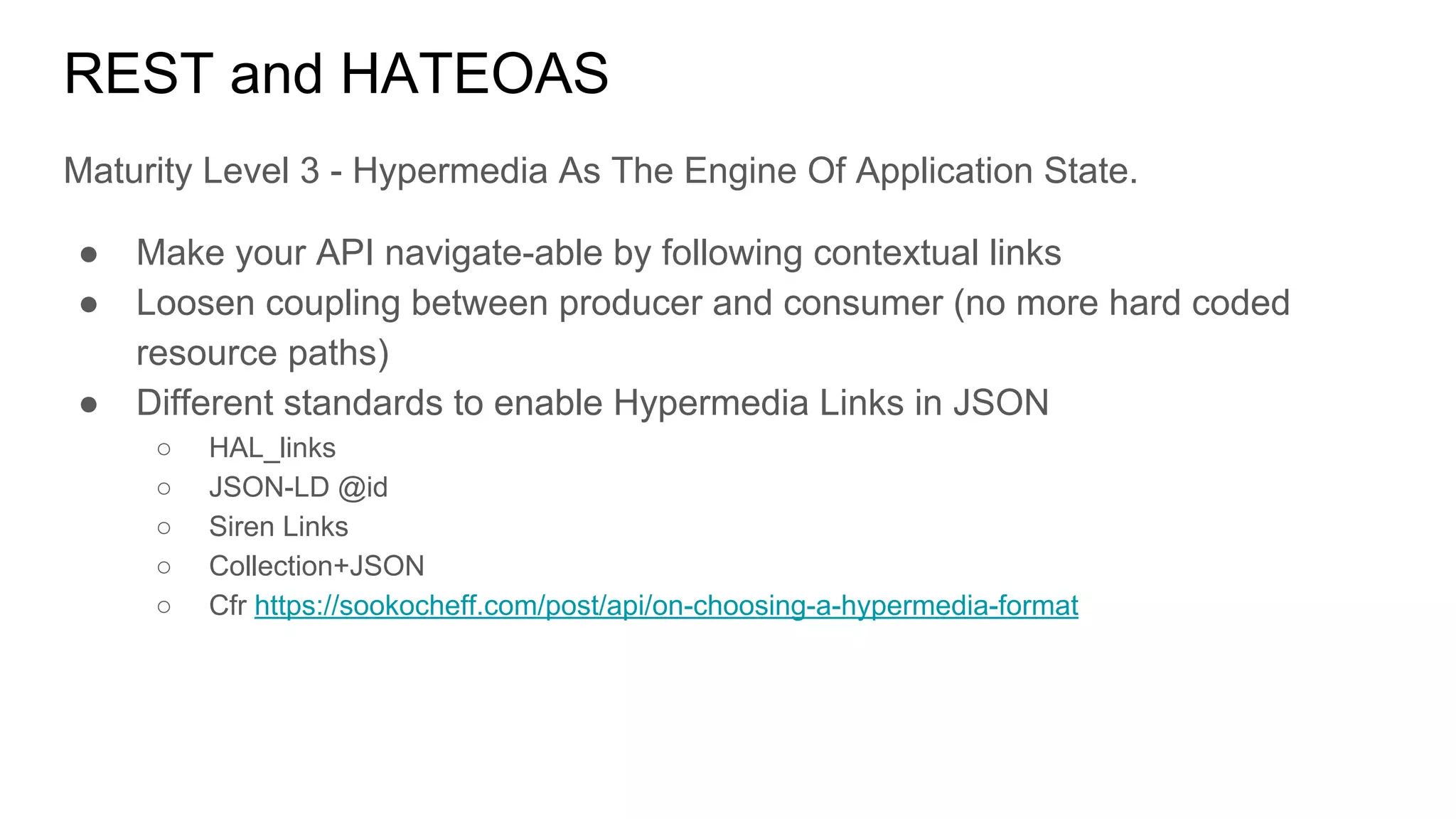

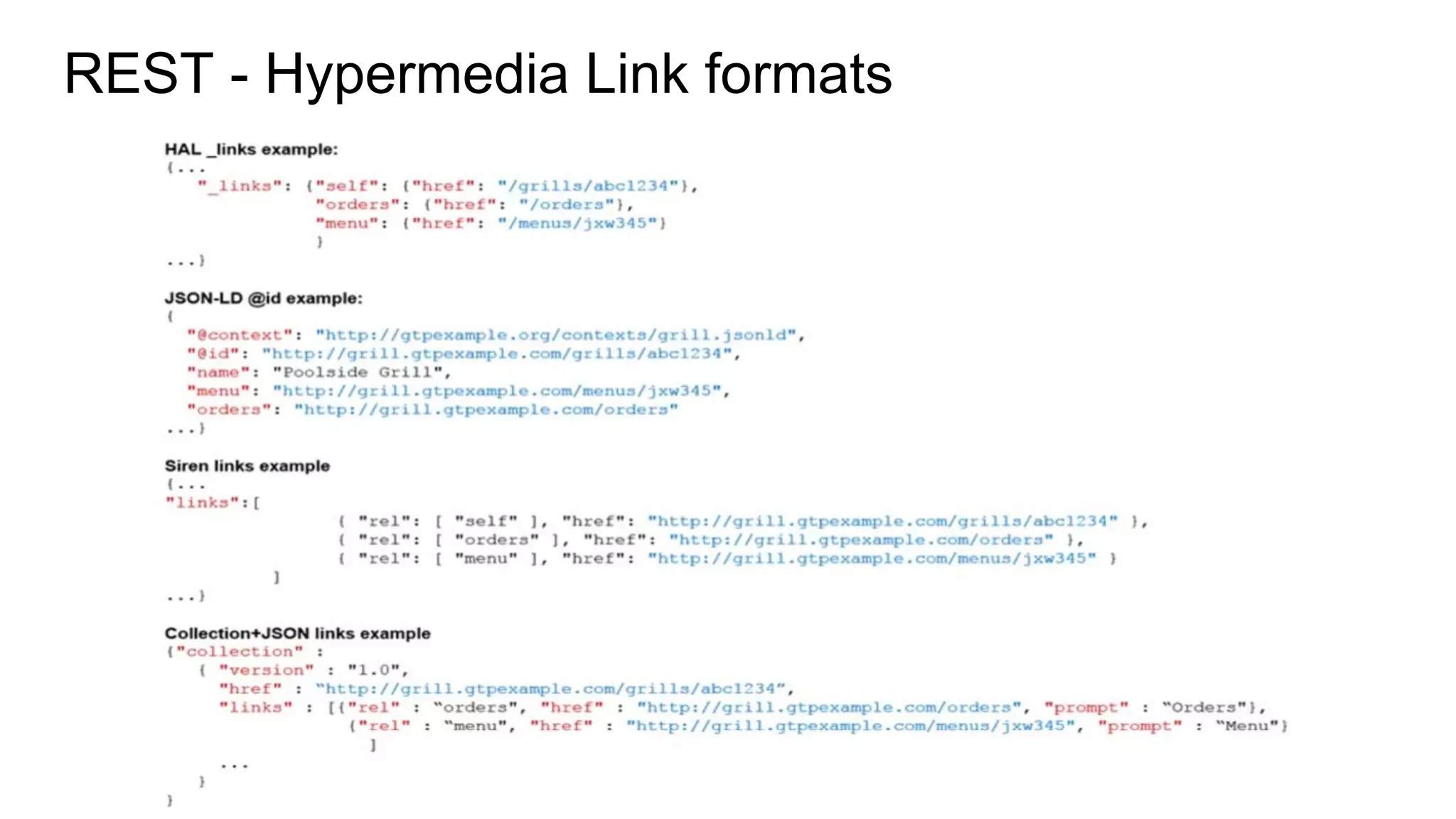

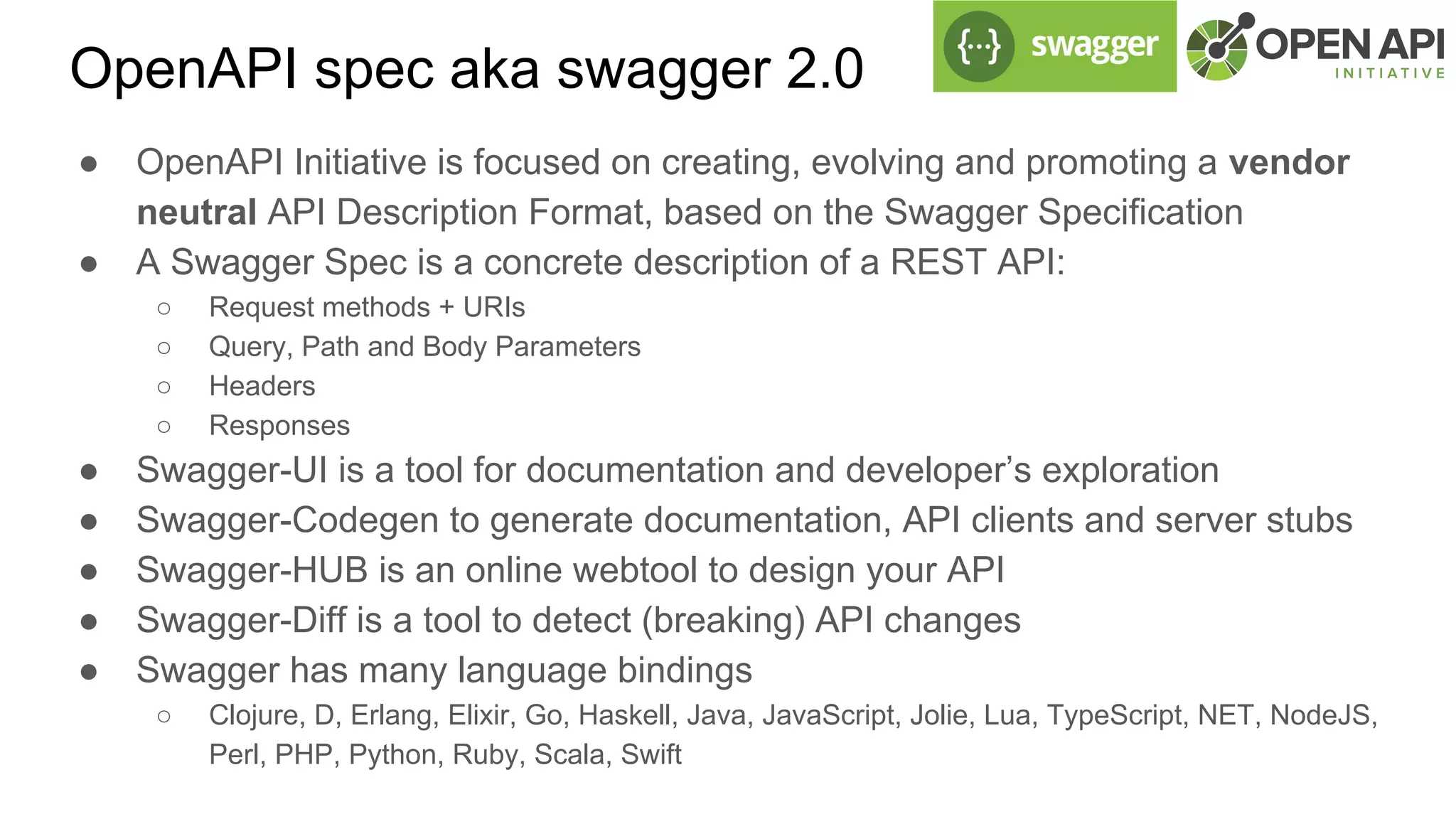

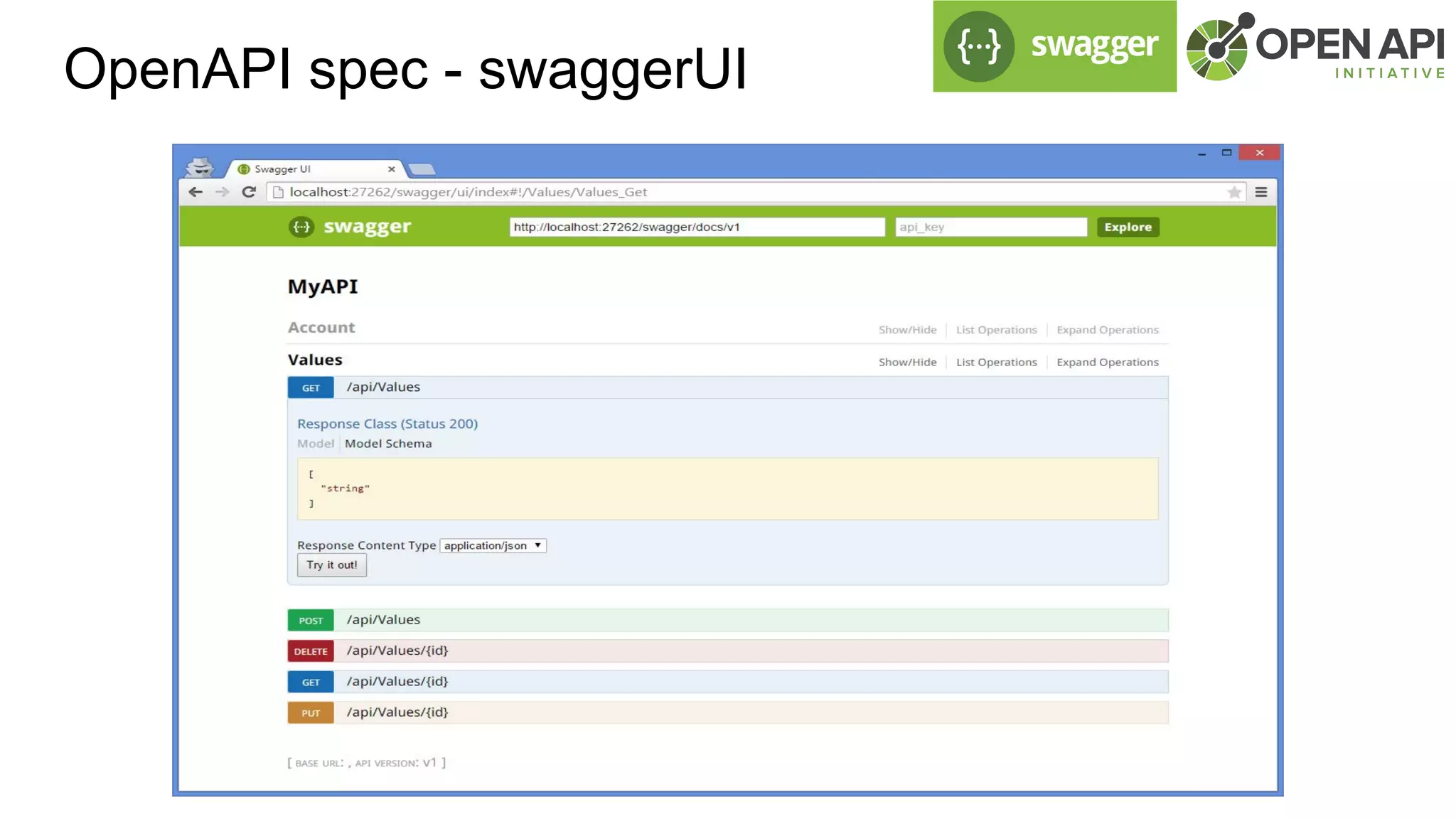

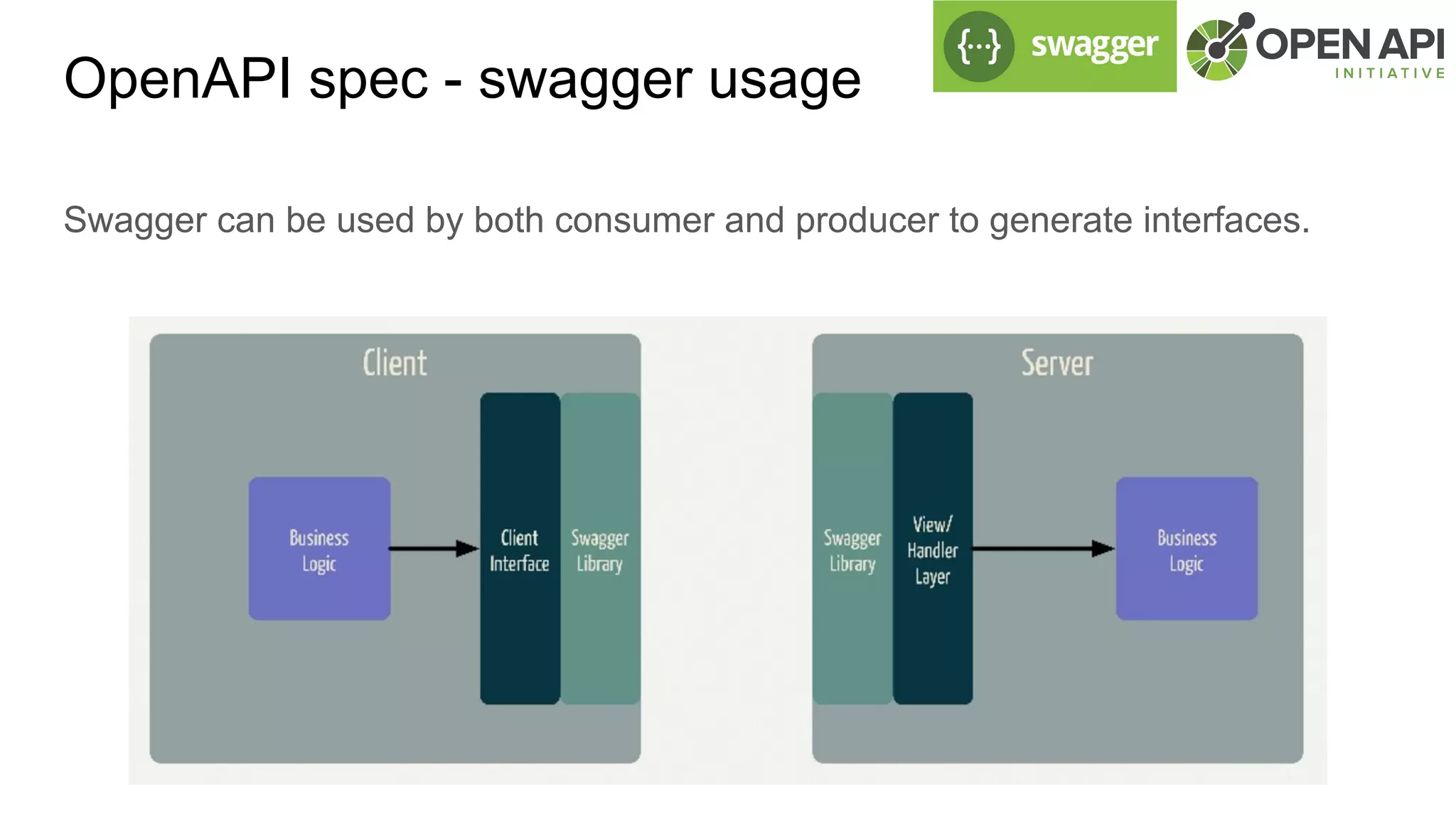

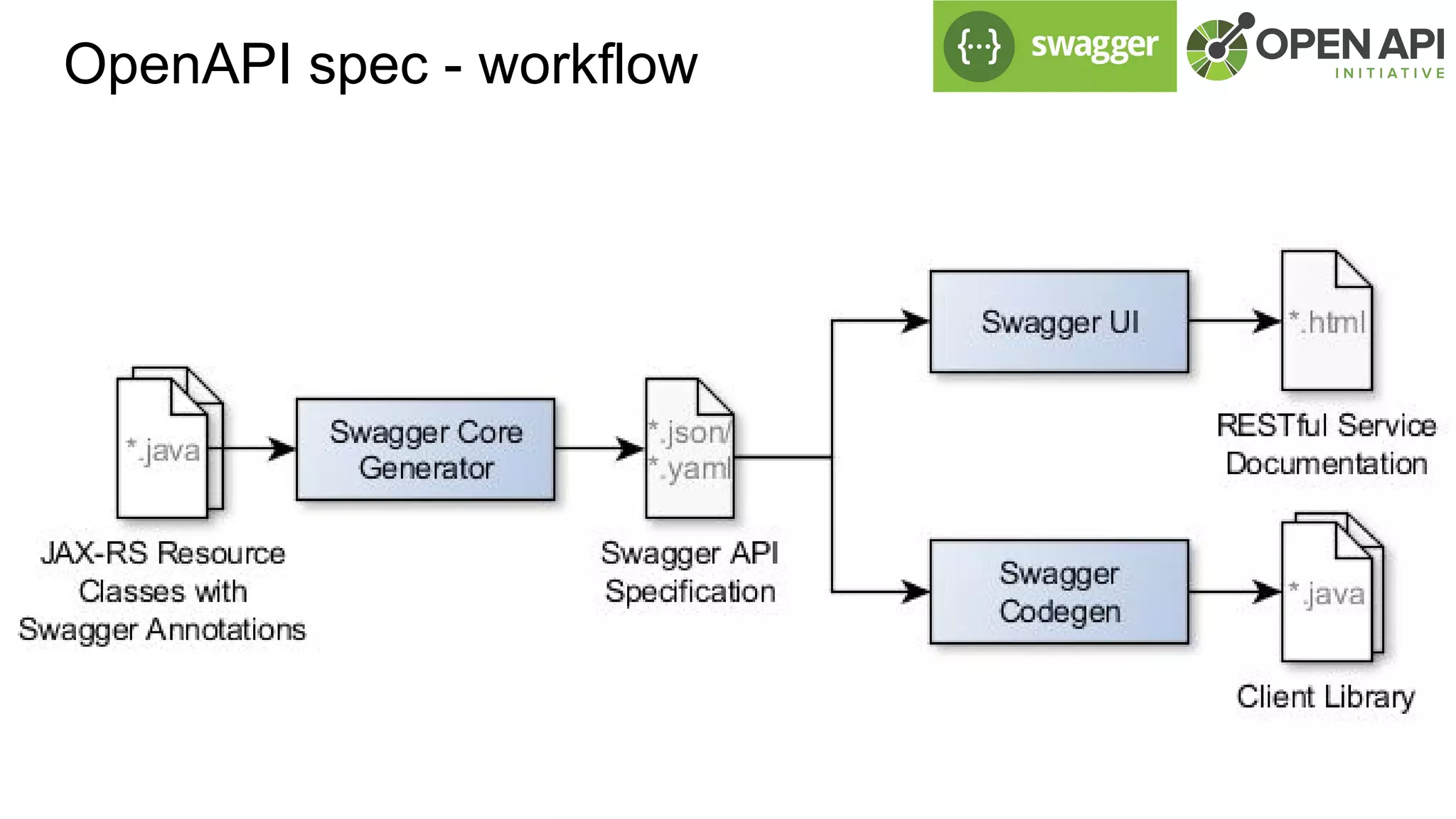

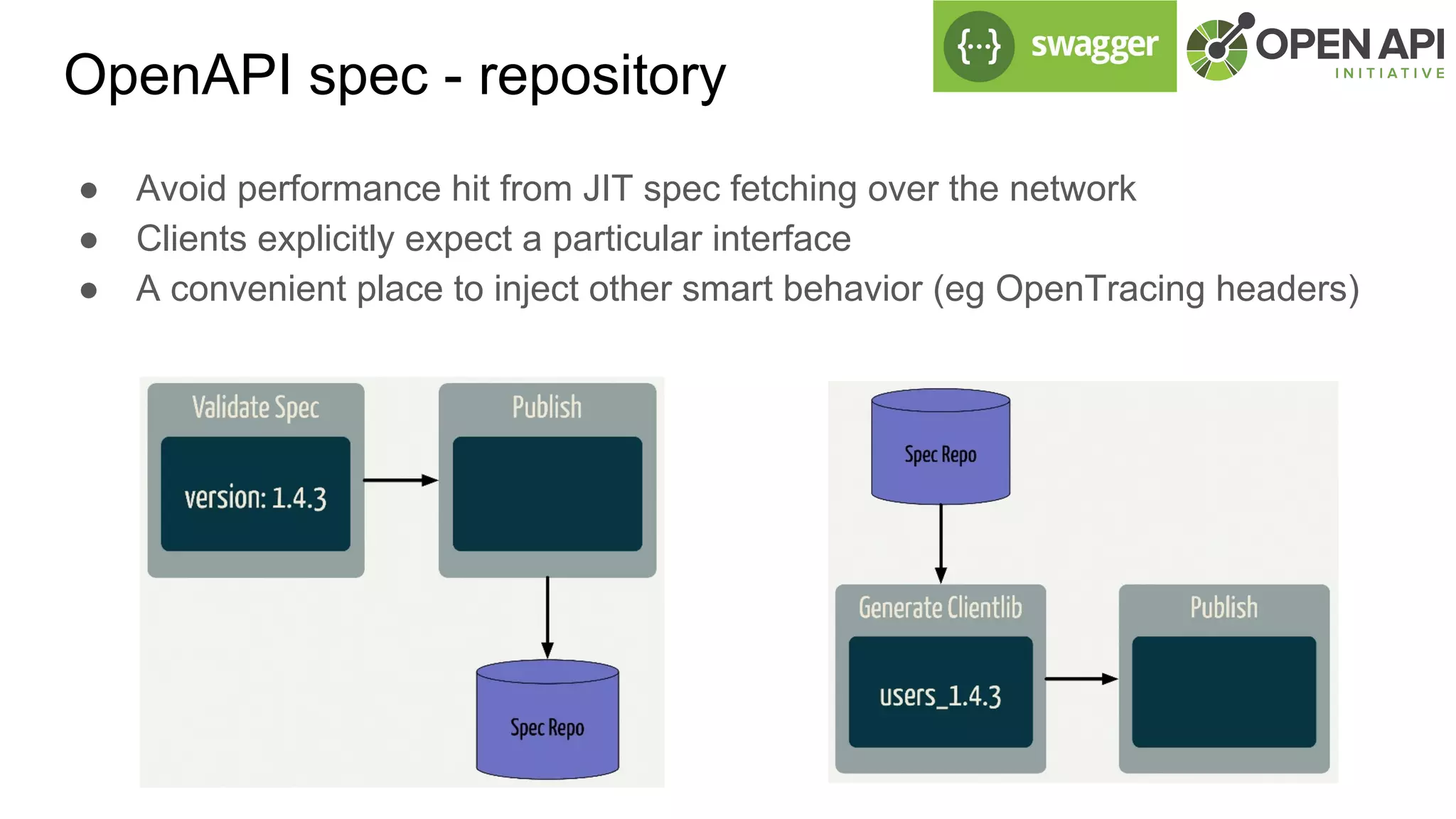

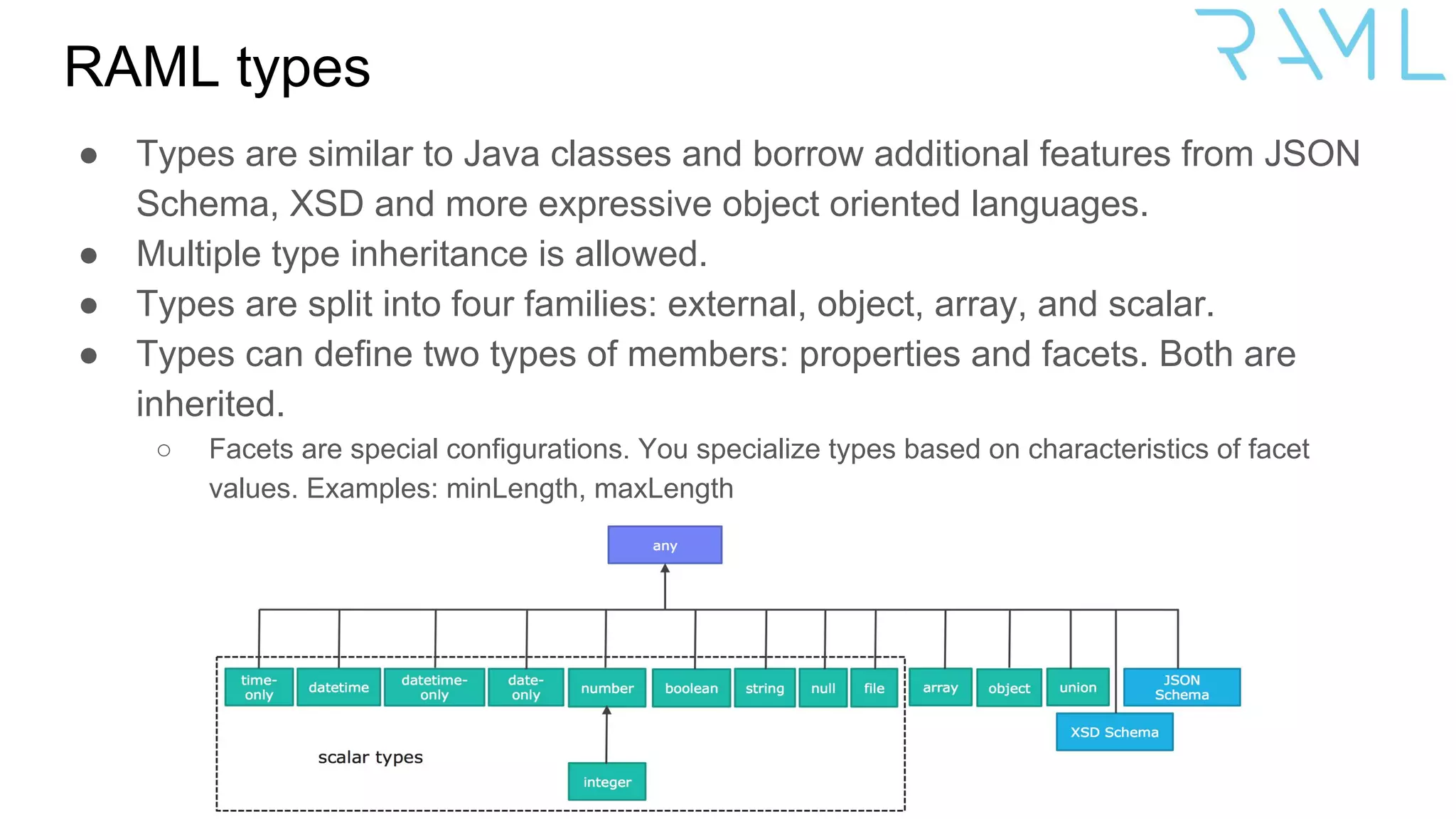

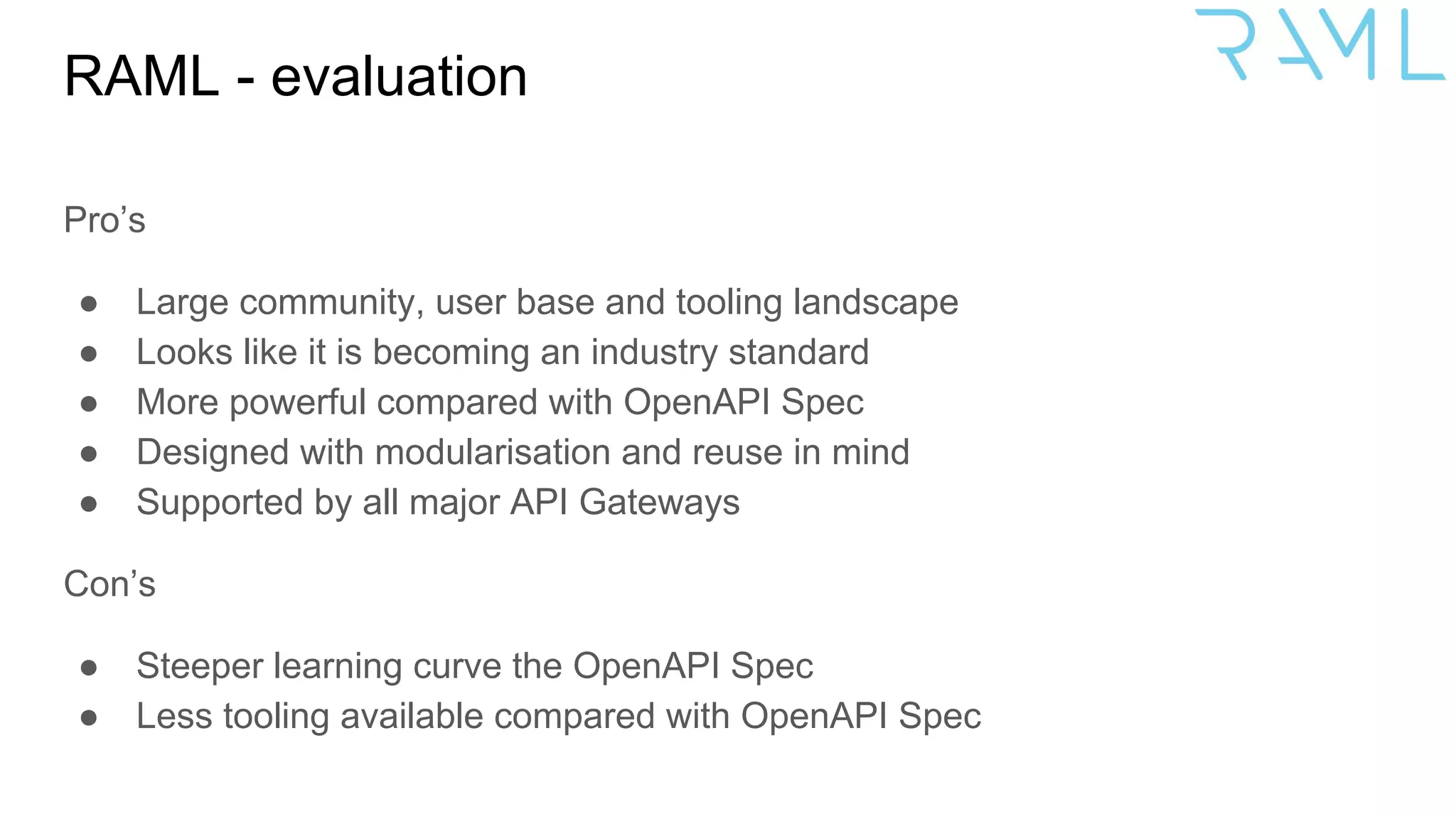

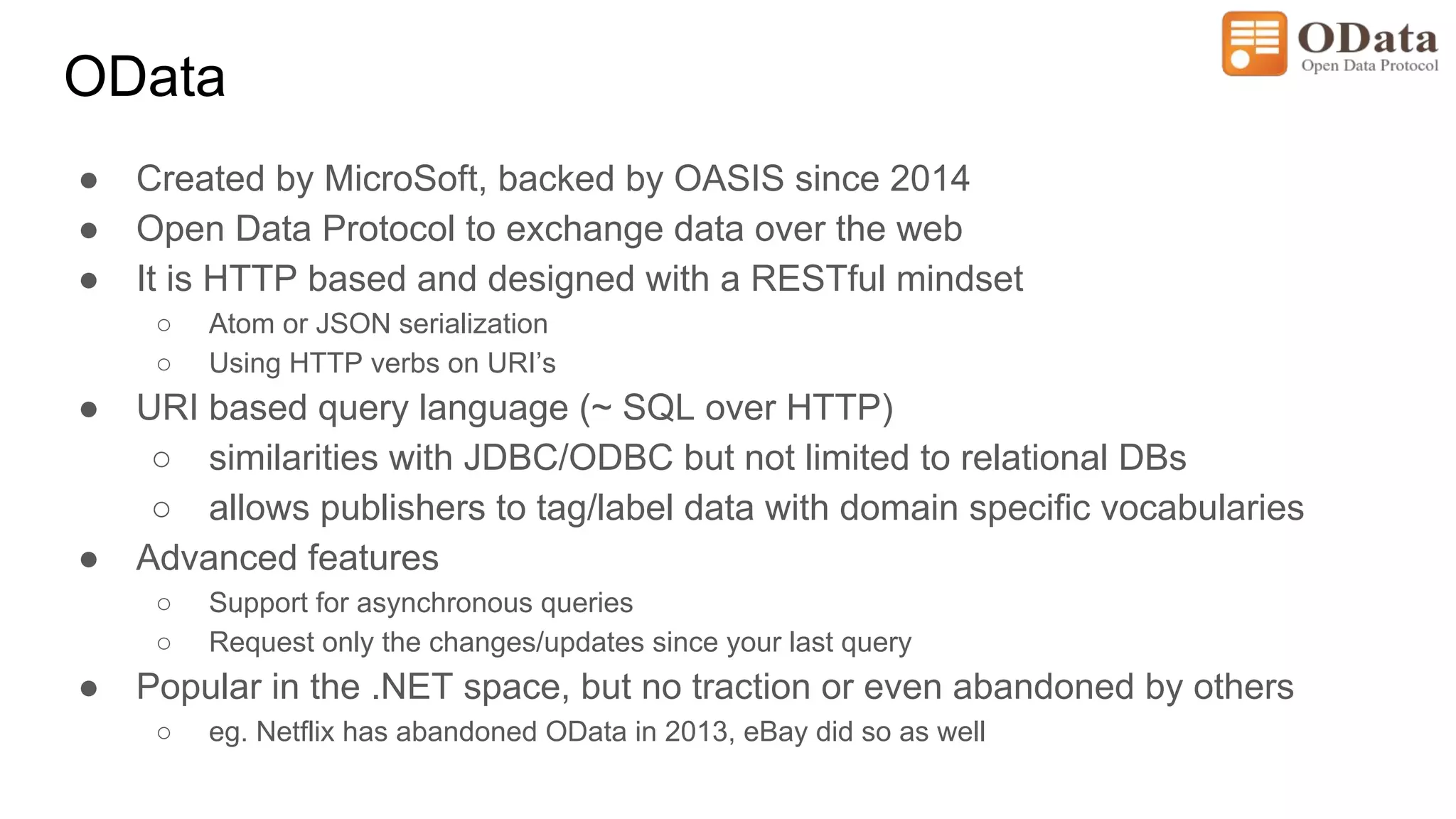

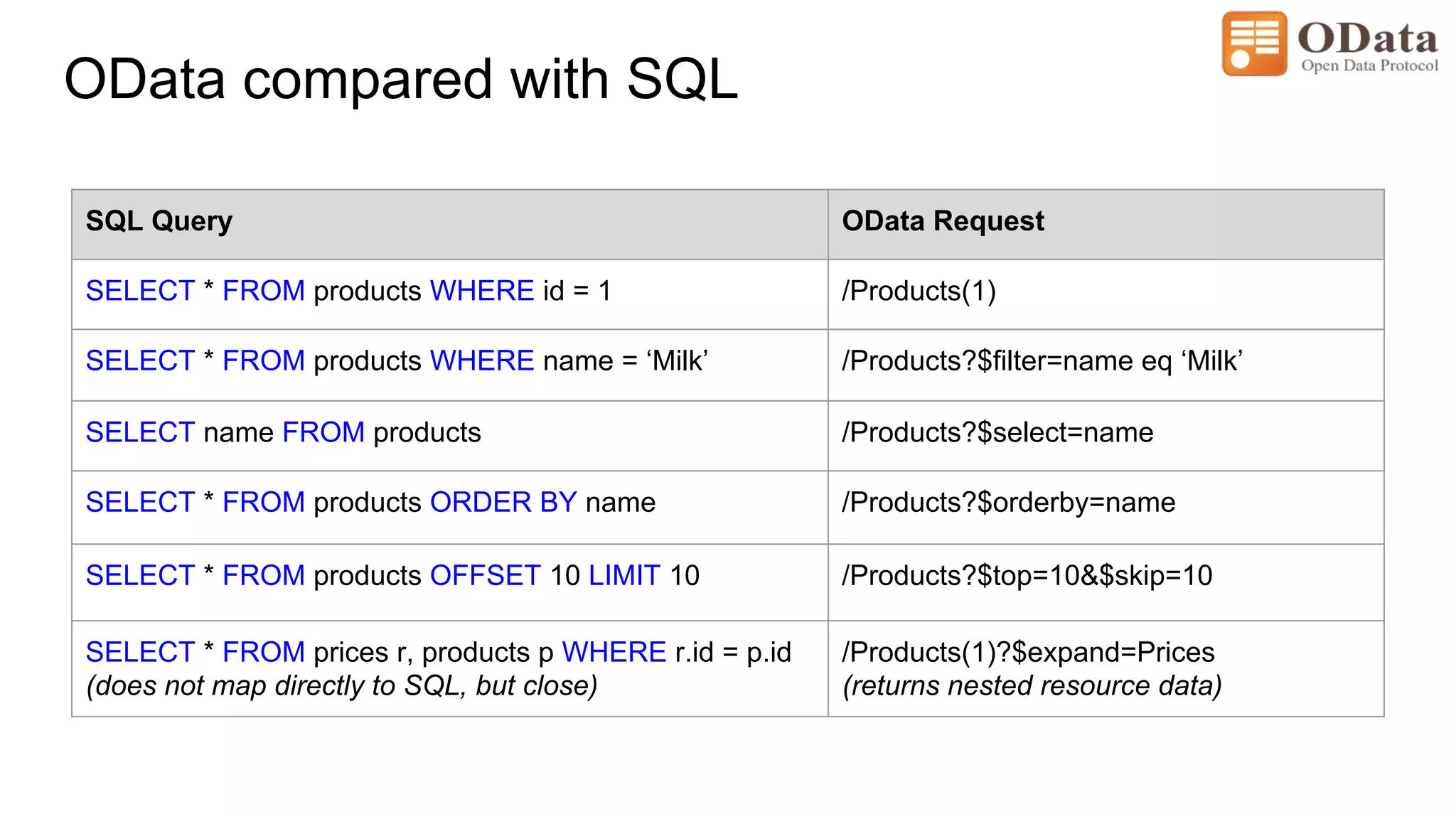

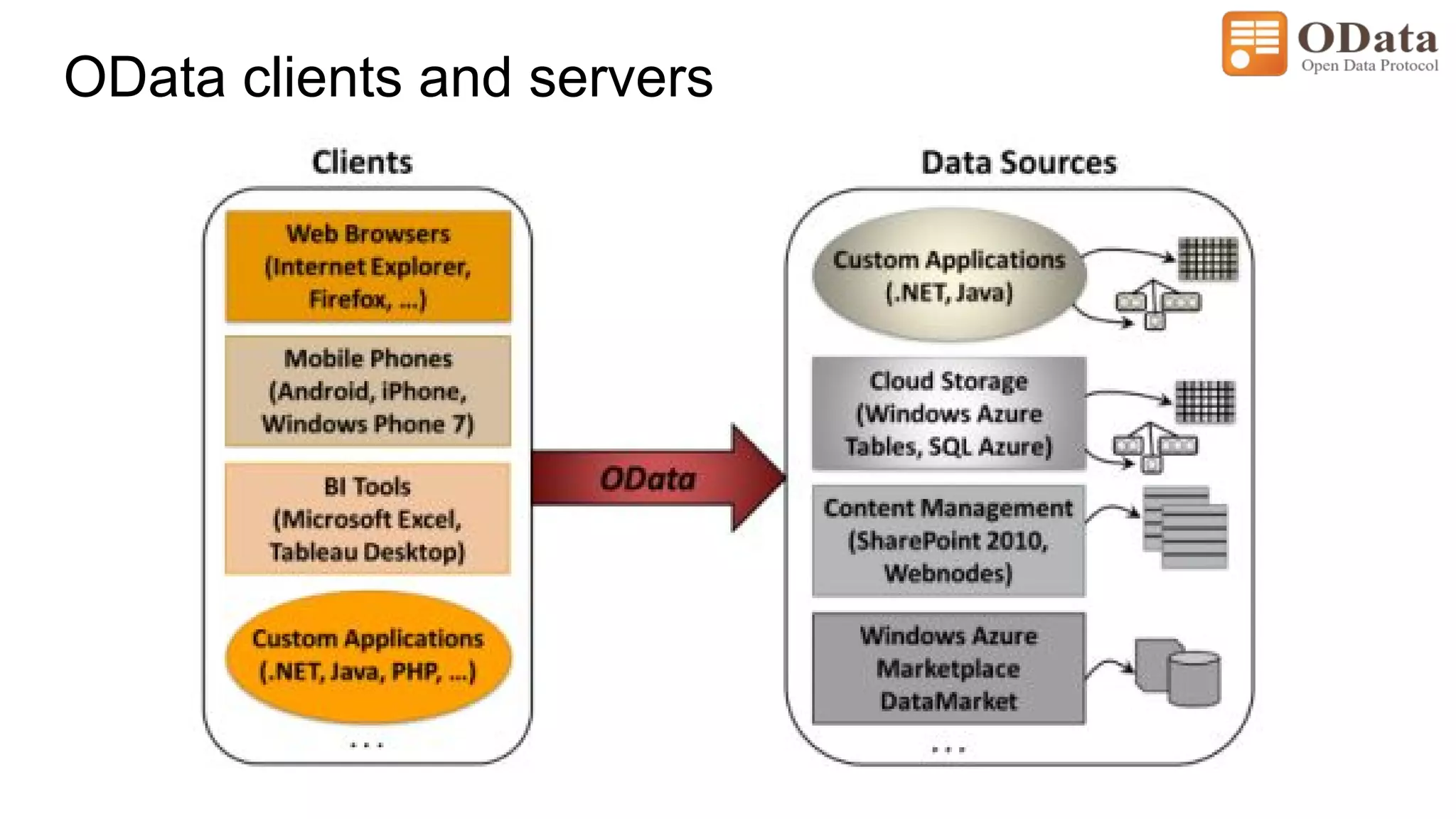

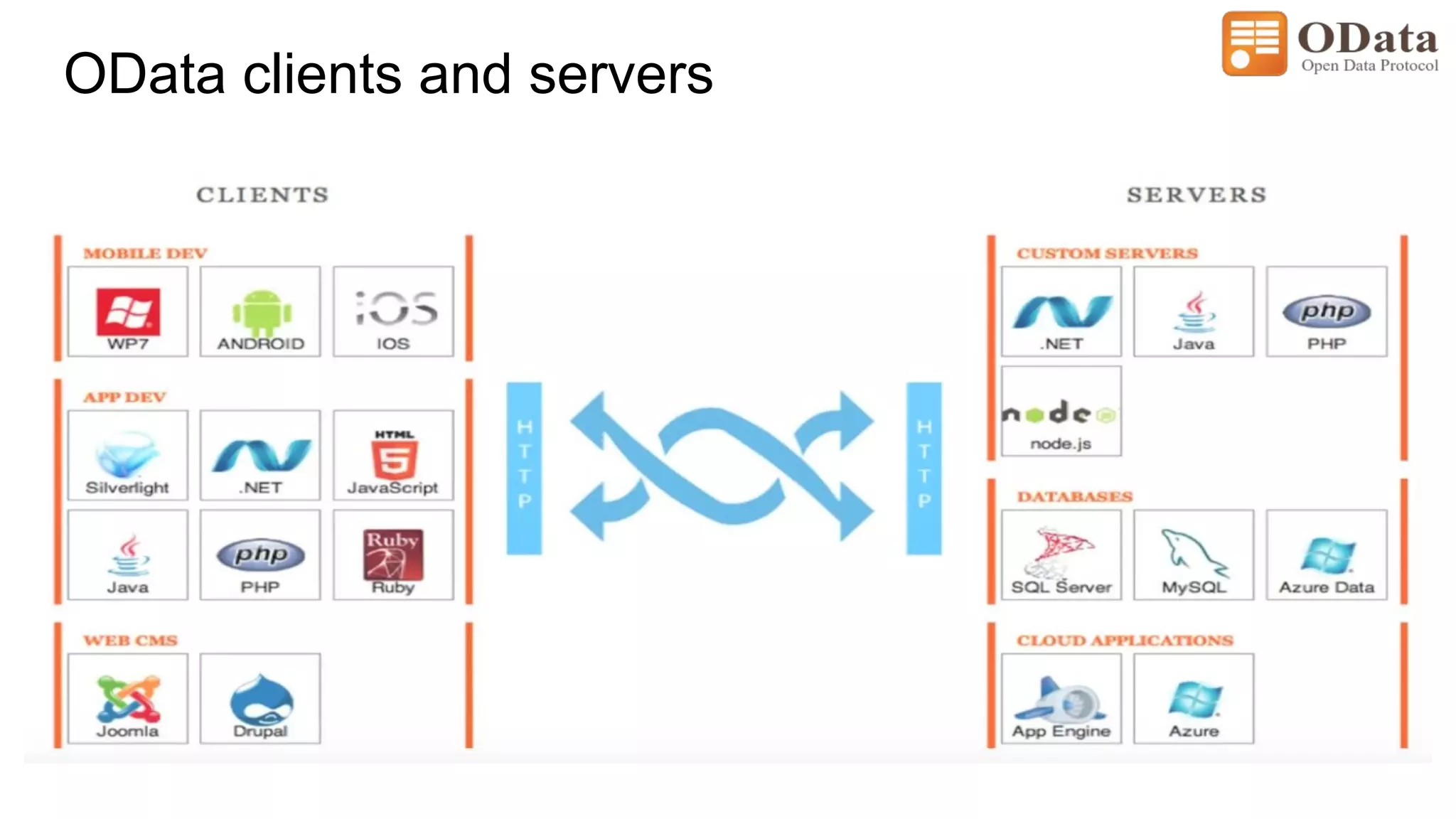

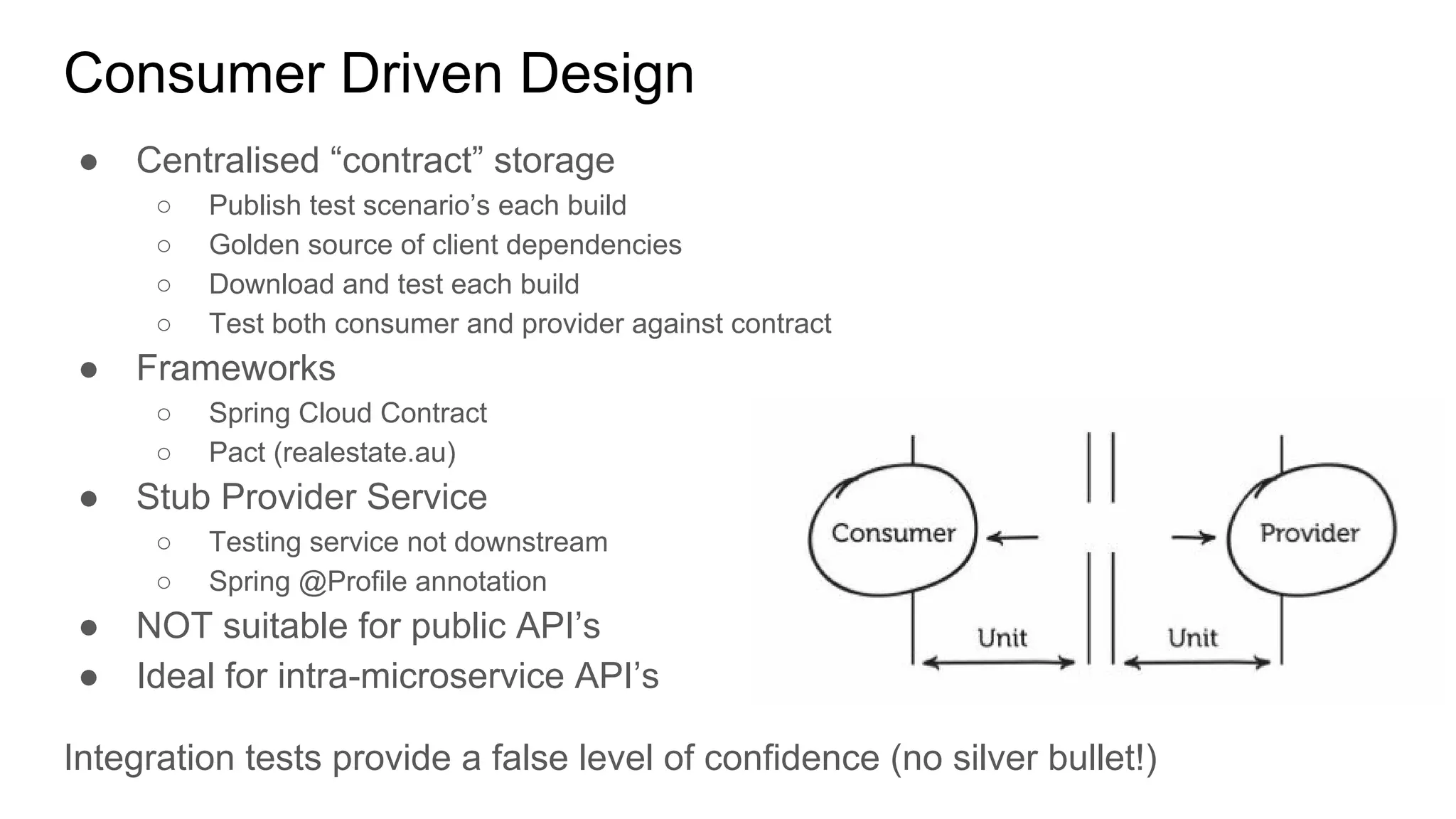

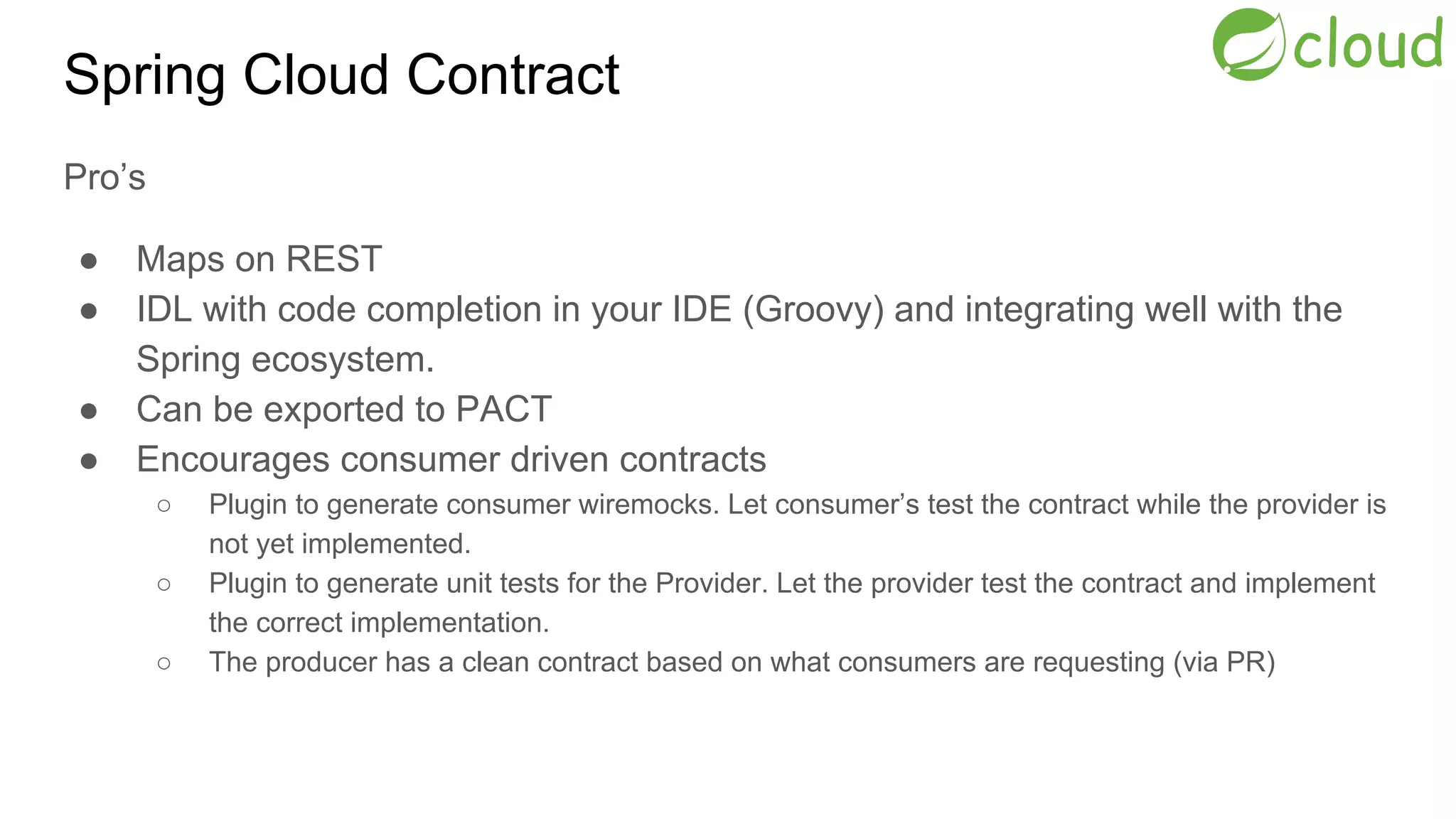

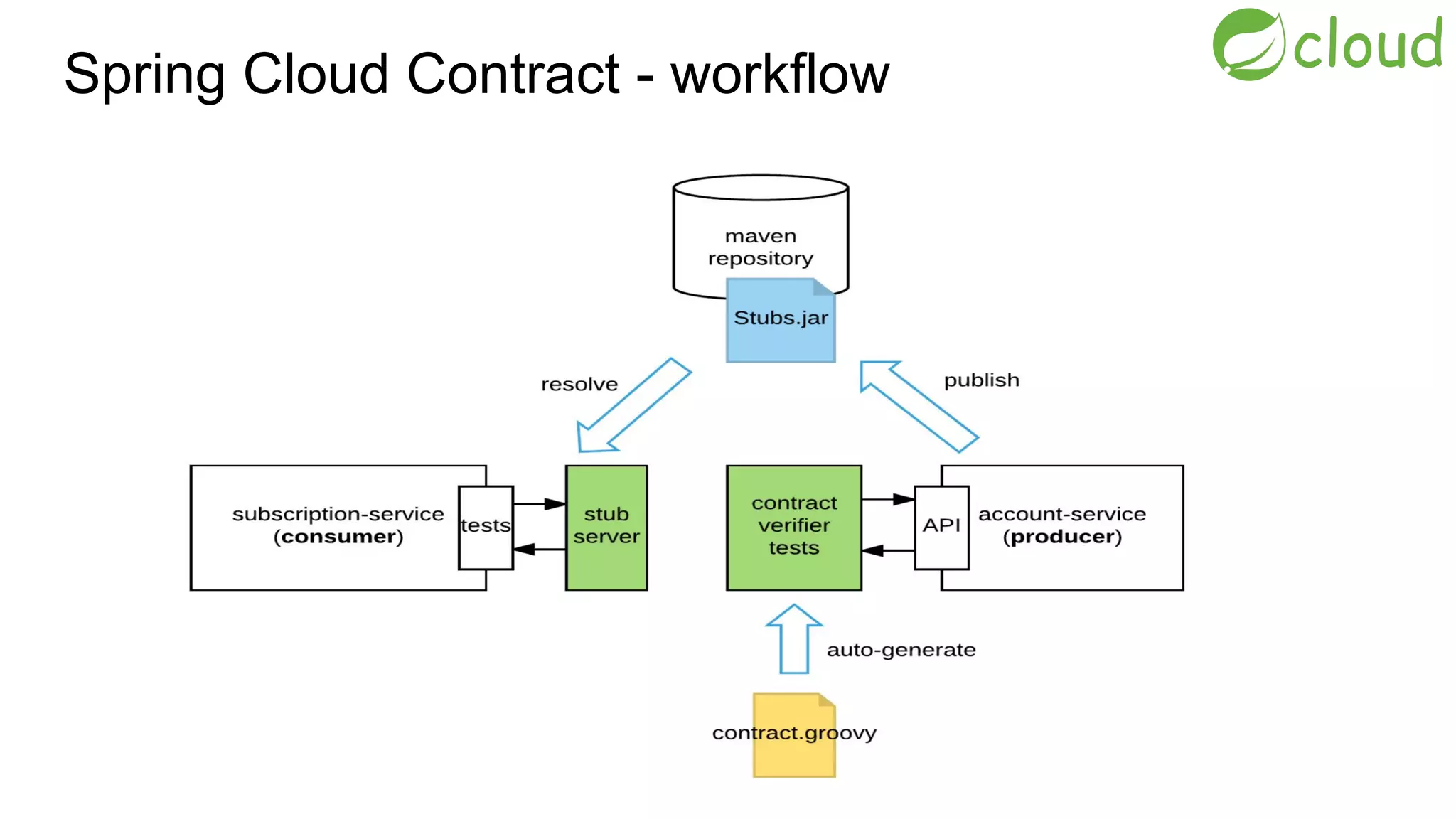

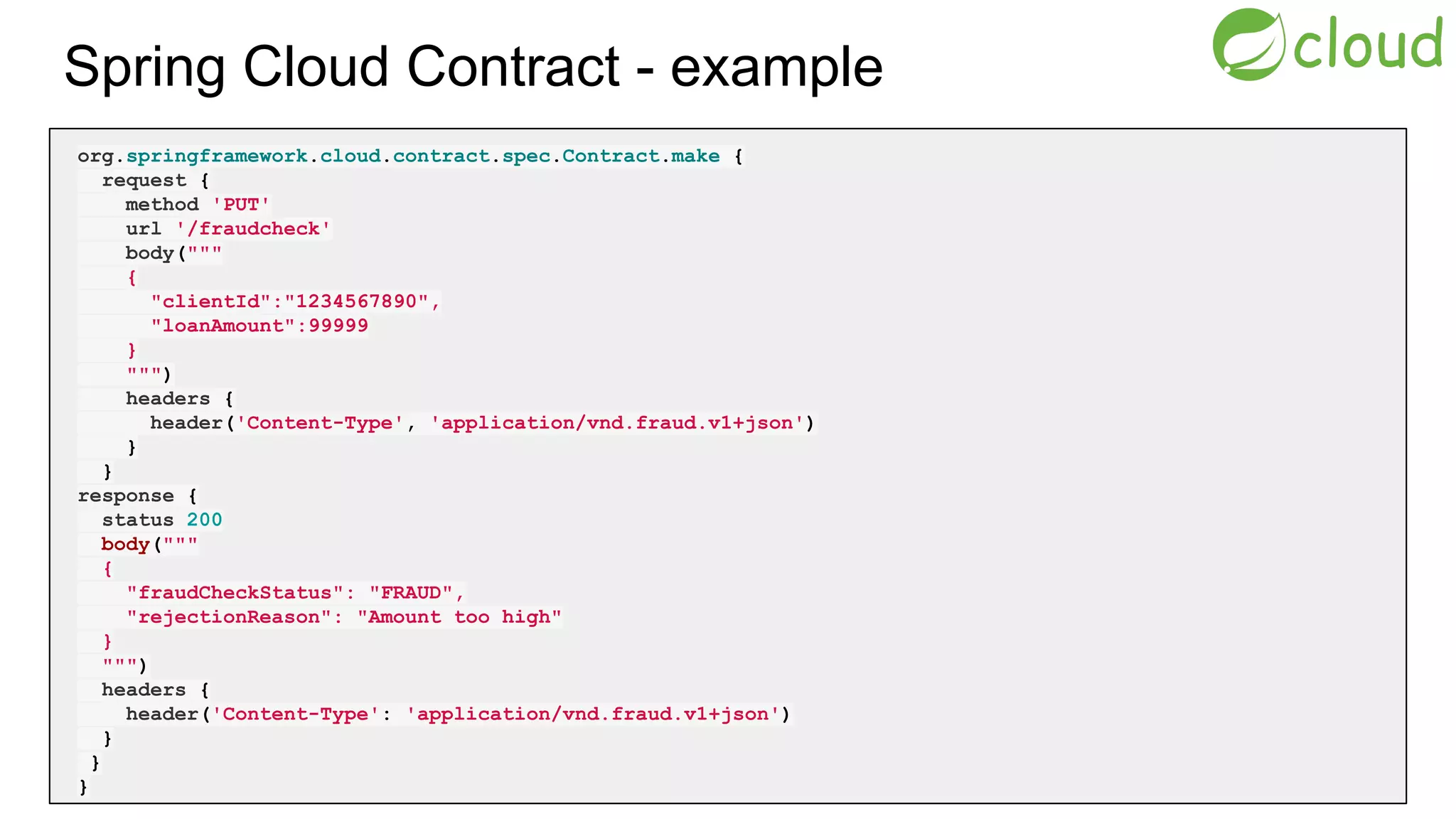

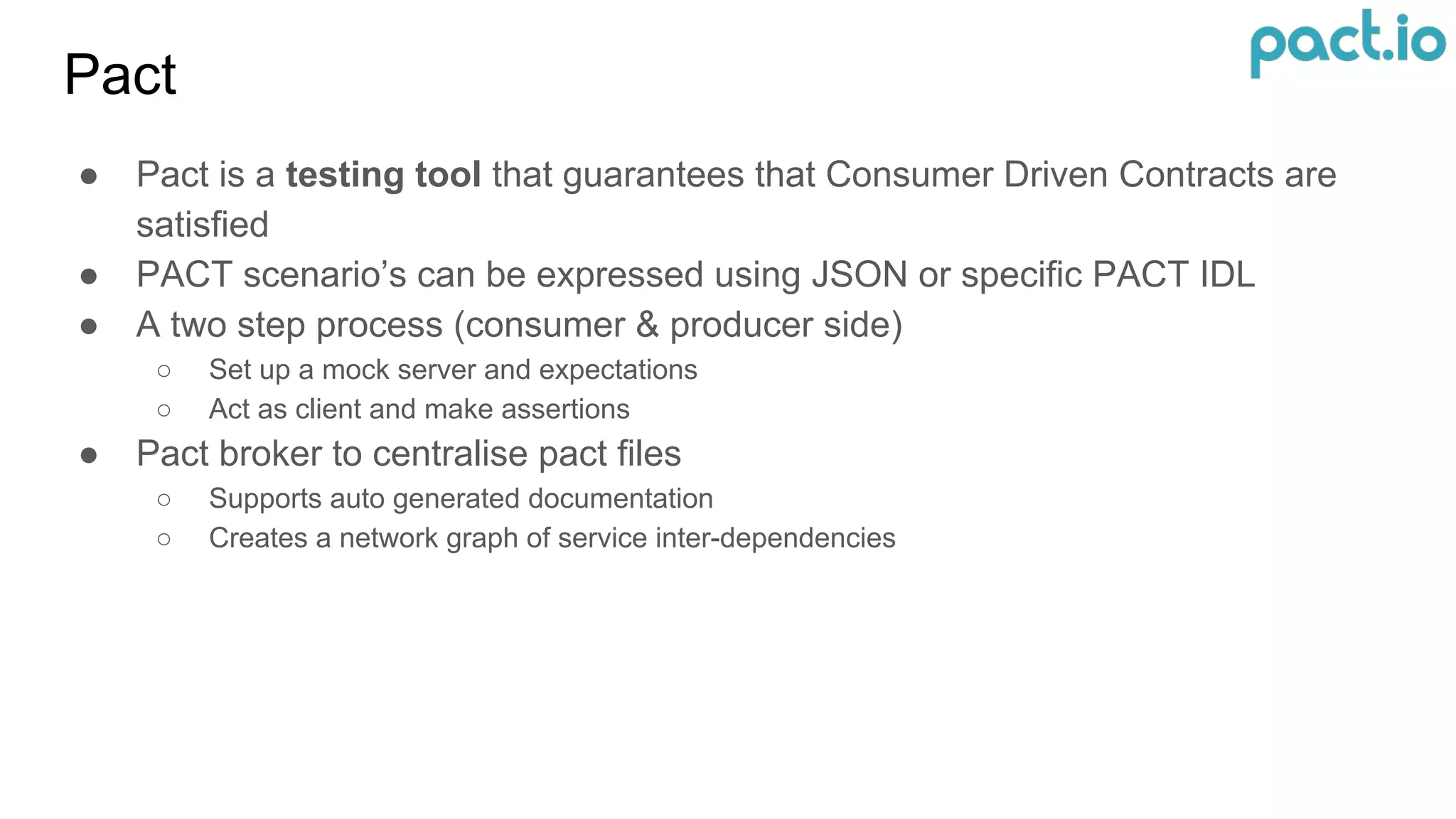

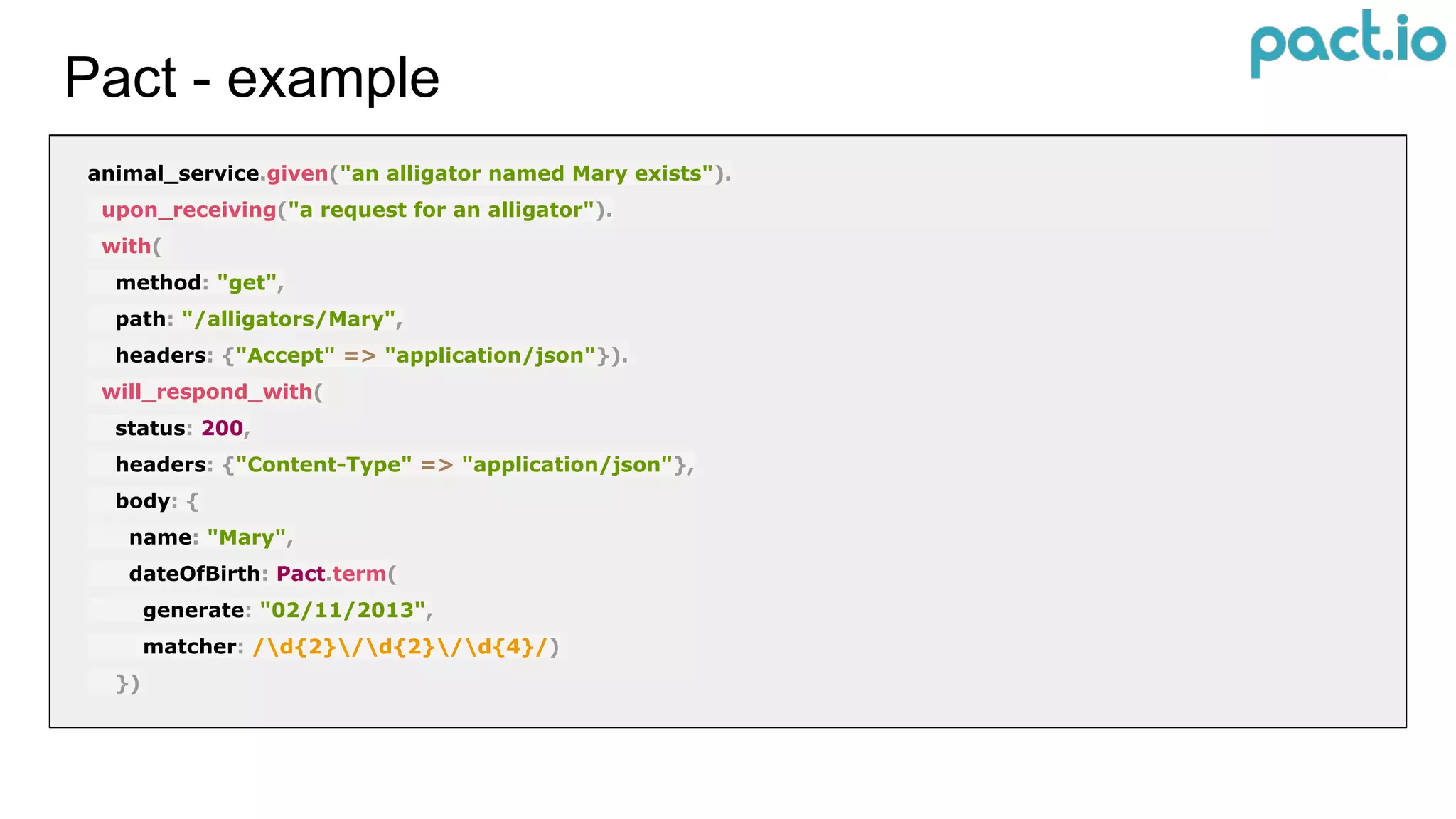

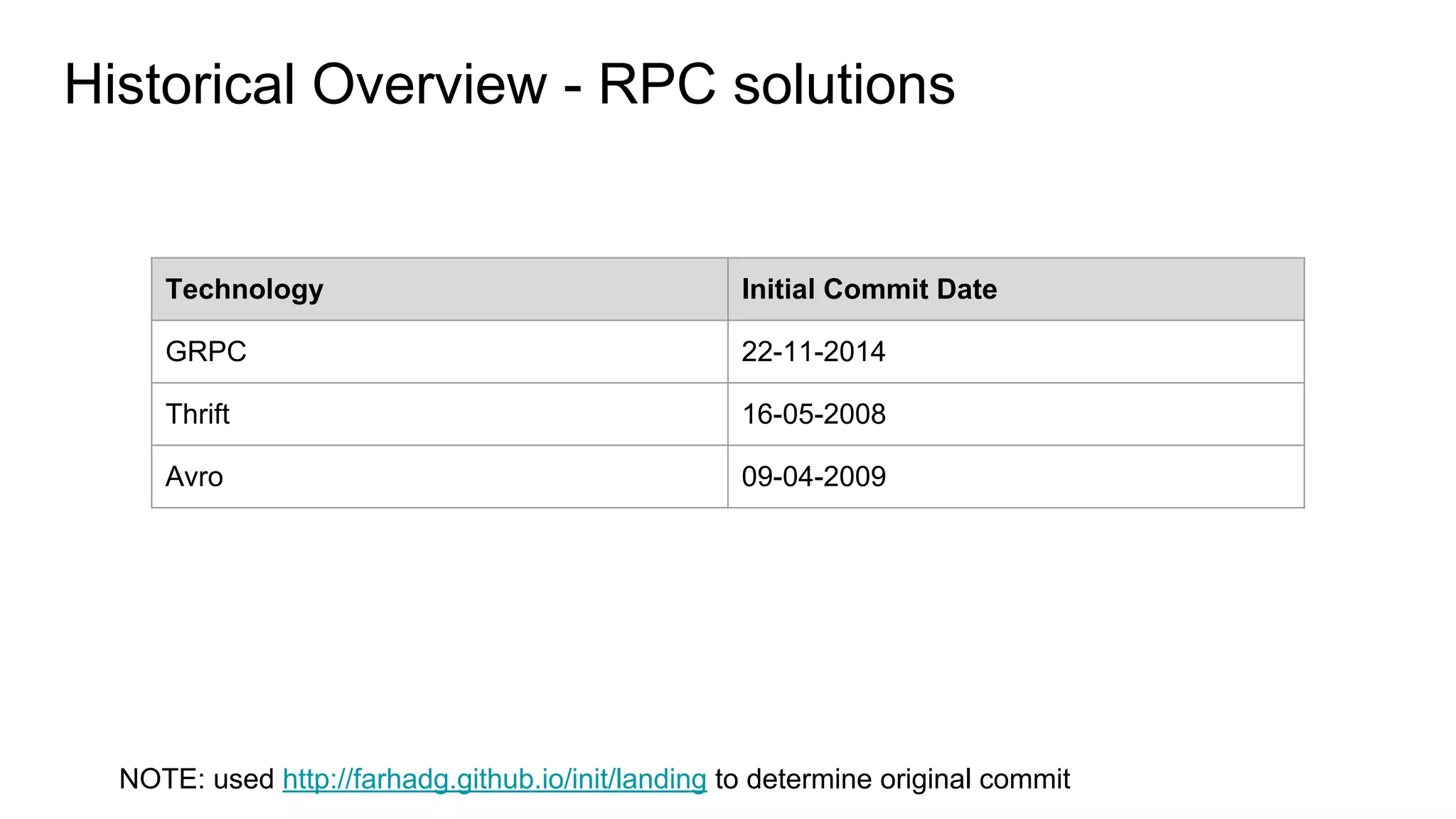

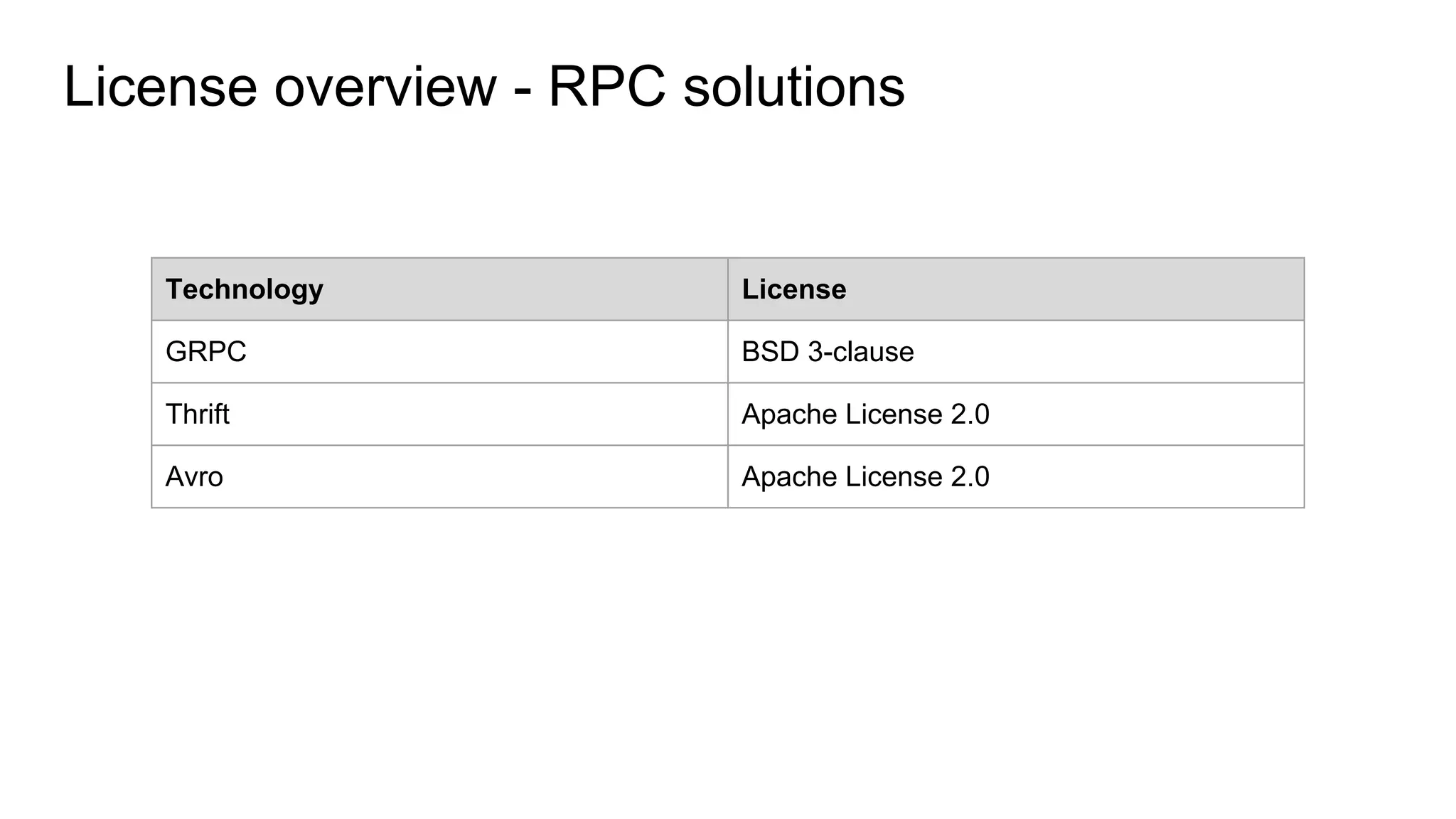

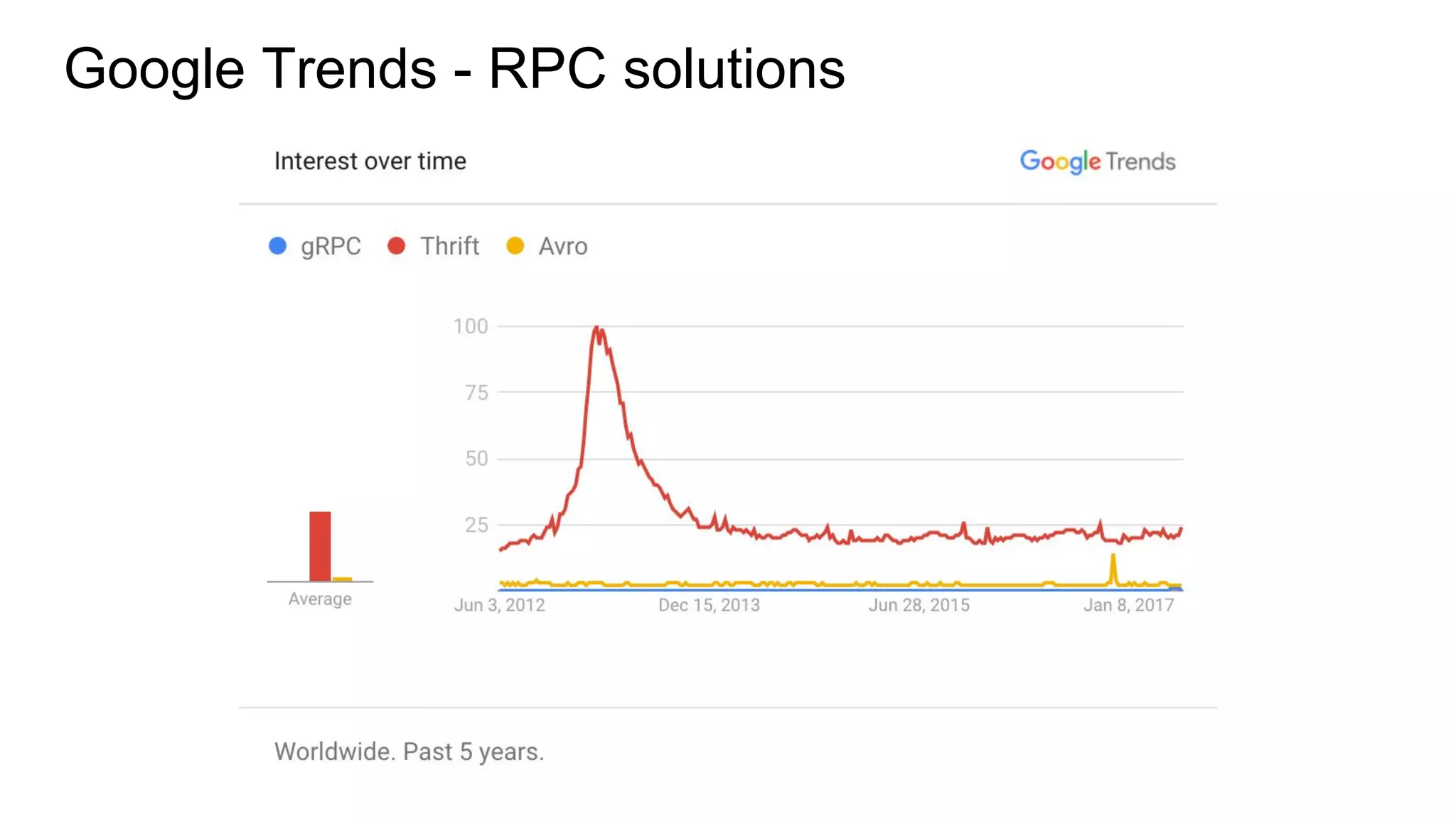

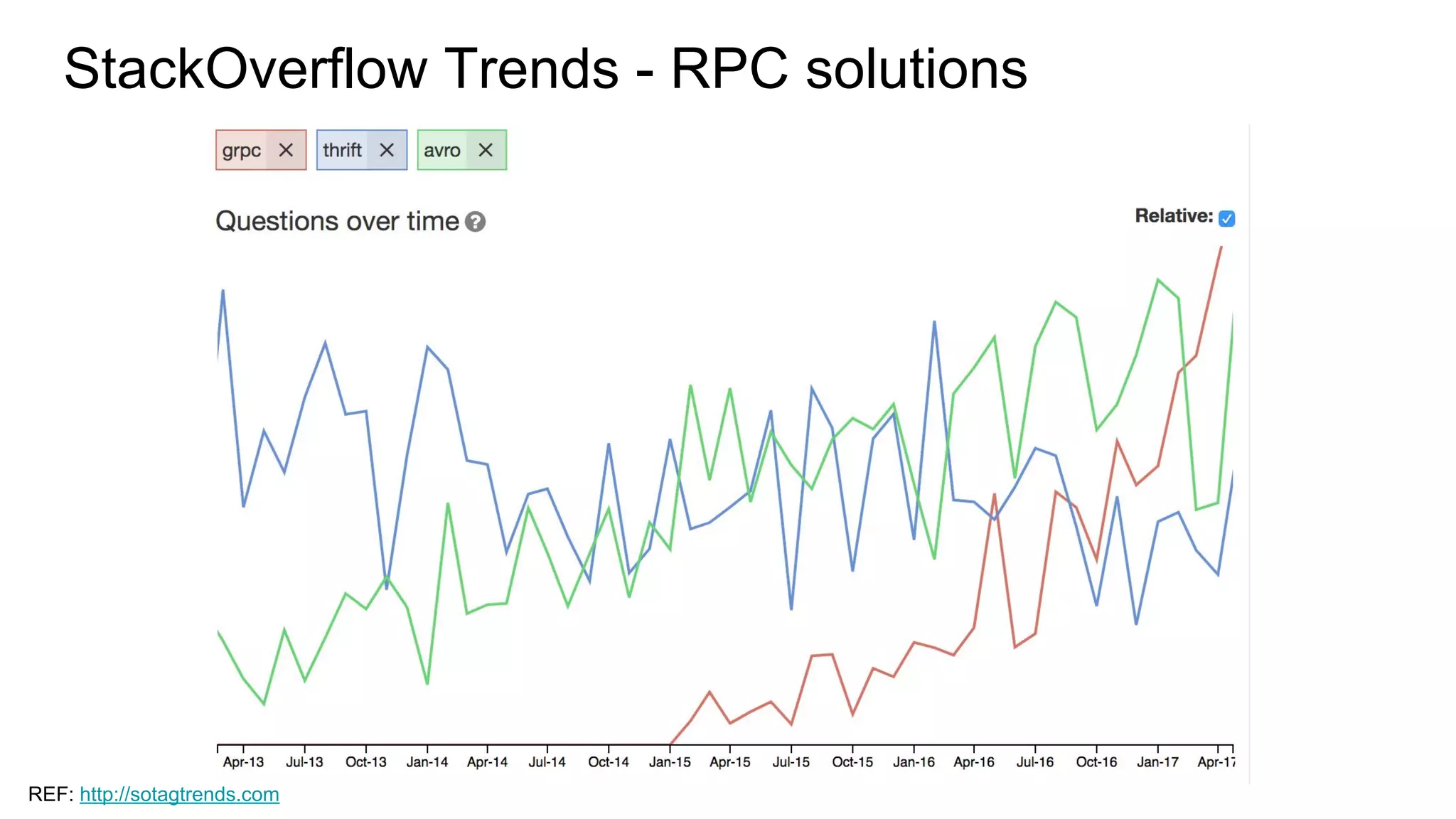

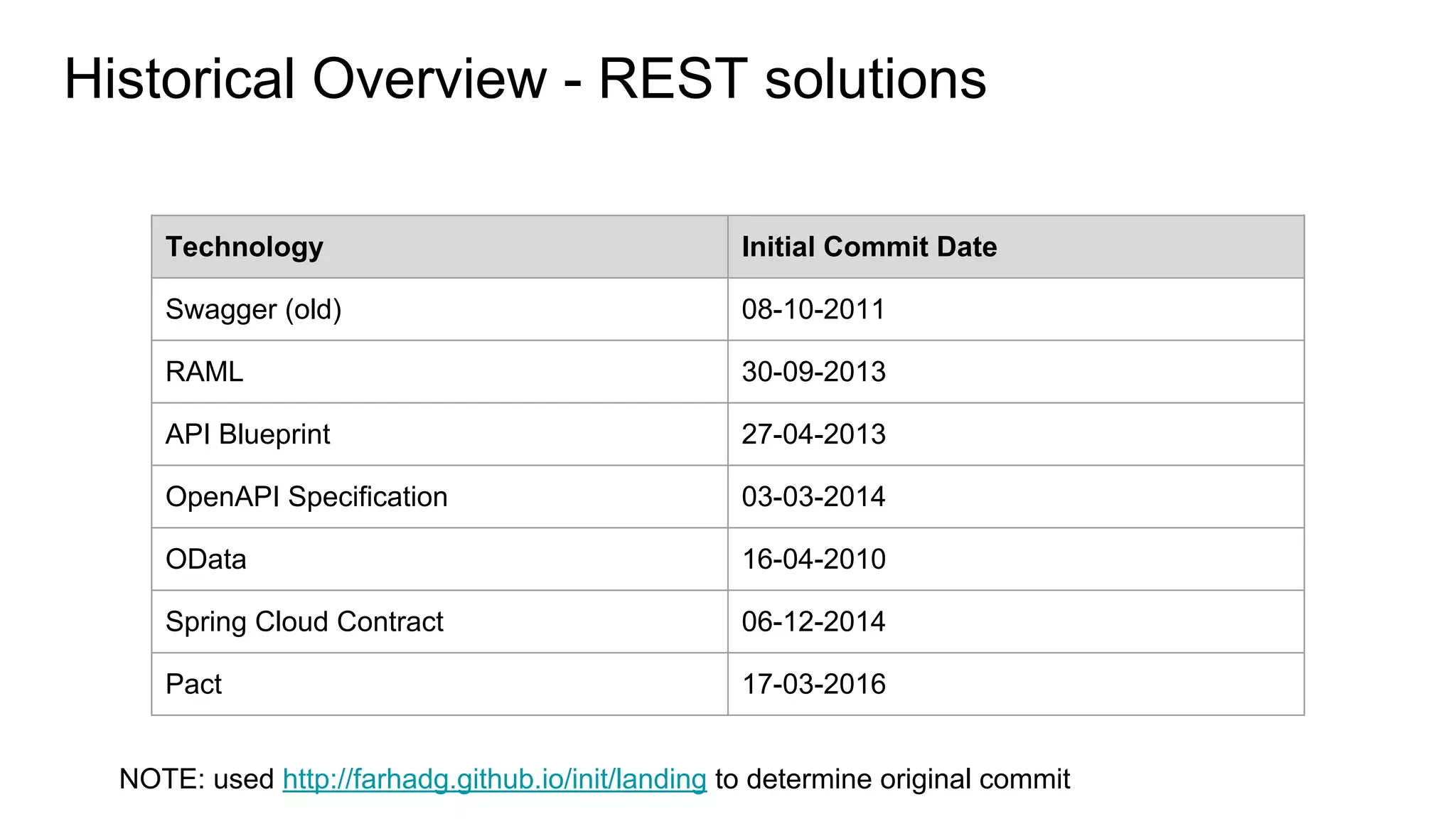

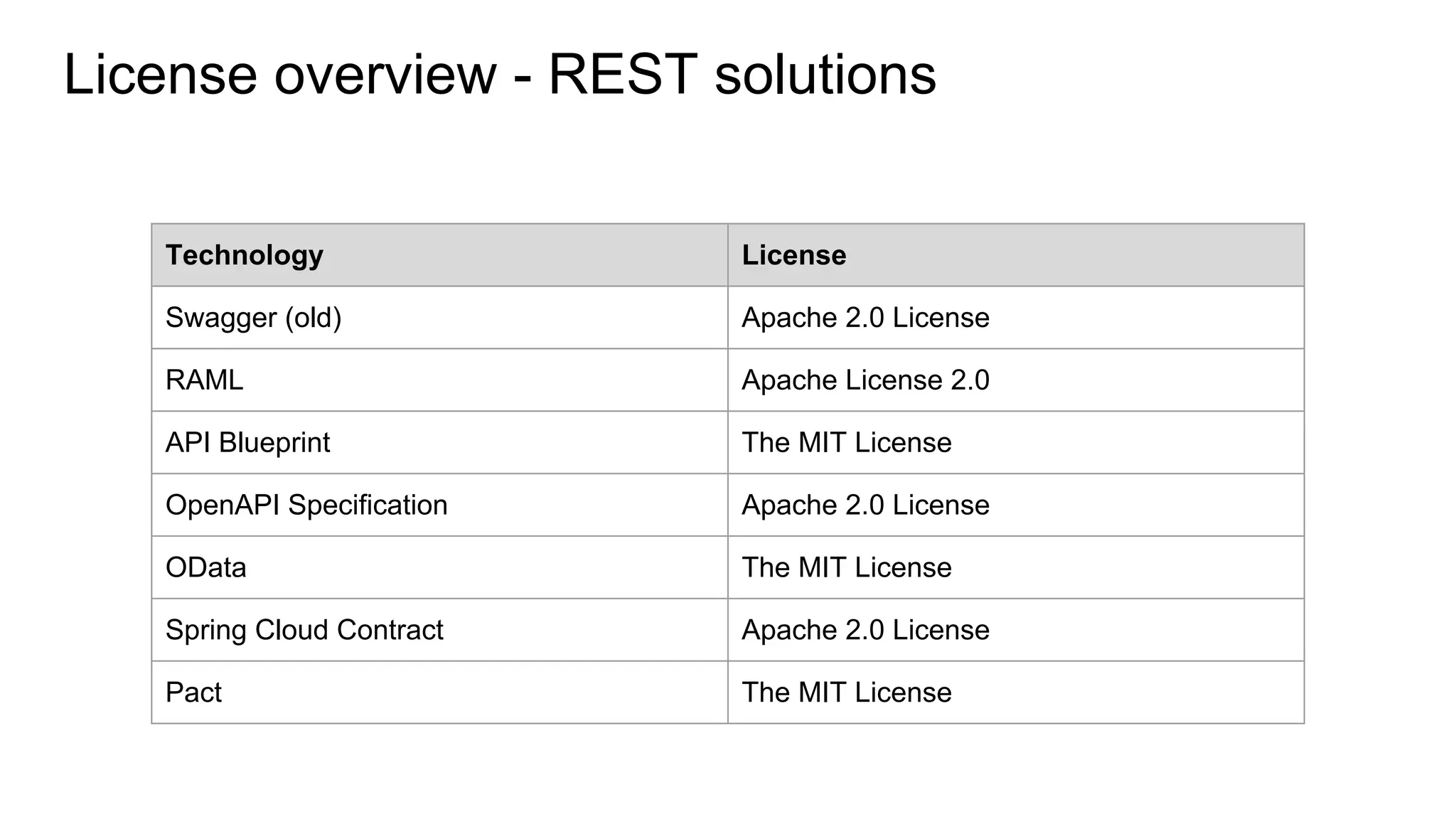

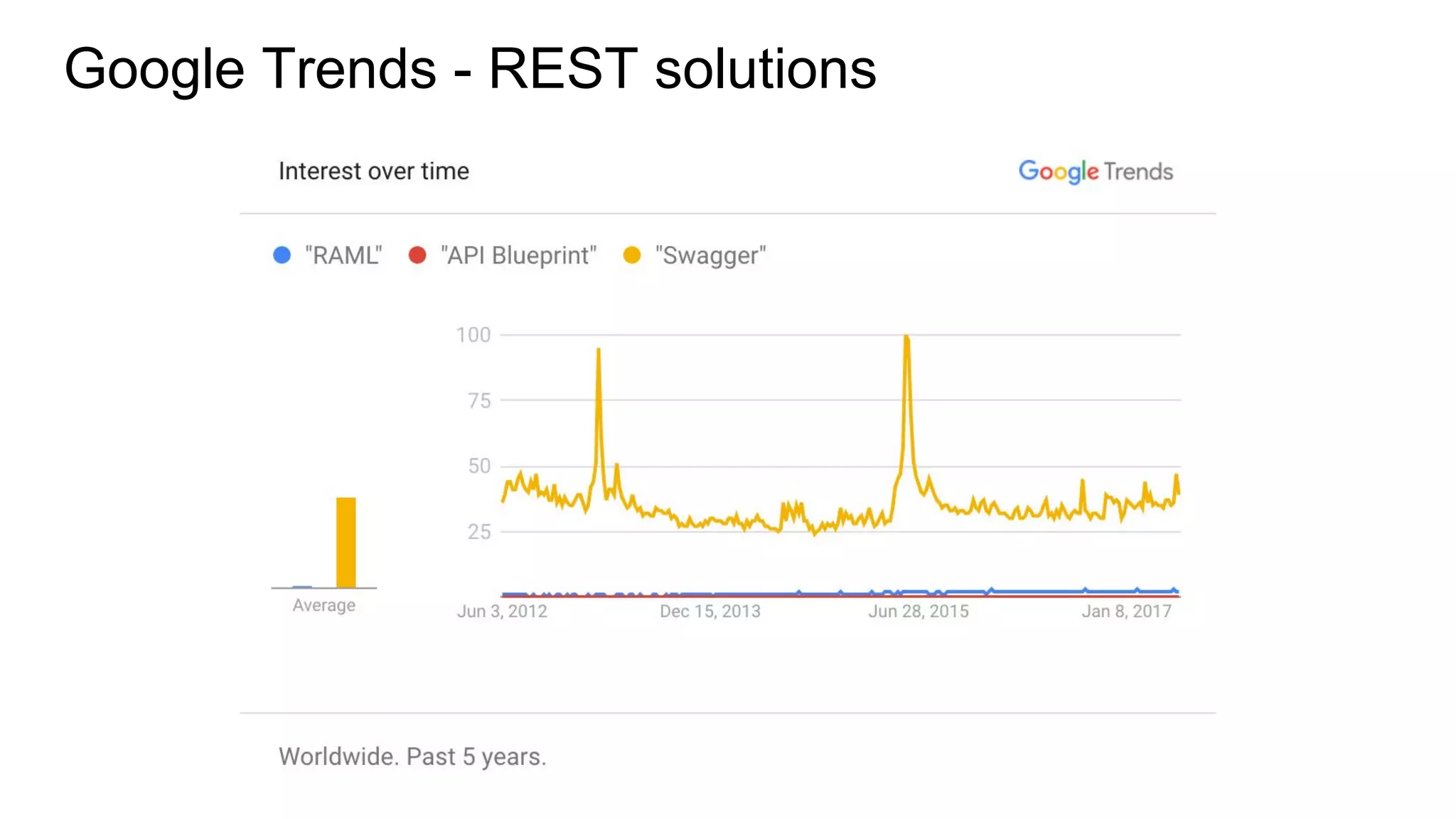

The document provides an overview of cloud-native API design and management. It covers API styles such as RPC and REST, along with tools for building APIs such as gRPC, Apache Thrift, and Avro. It discusses the challenges of API evolution, emphasizes the importance of consumer-driven contracts, and compares several API standards, including Swagger (now the OpenAPI Specification), RAML, and OData. It concludes by recommending REST combined with Swagger and Pact, citing their popularity and the consumer-driven contract testing that Pact enables.
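To make "consumer-driven contract" concrete, here is a minimal, hand-rolled sketch in plain Python. It is not Pact's API. The names `Interaction`, `Contract`, `verify`, and the example `order_service` handlers are hypothetical, and the provider is modeled as a simple in-process function rather than a real HTTP service. The idea it illustrates is that the consumer records only the requests it makes and the response fields it actually reads. The provider is then verified against that record, so a provider change breaks the build only if it breaks a real consumer.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical provider signature: (method, path) -> (status, JSON-like body)
Handler = Callable[[str, str], Tuple[int, Dict[str, object]]]


@dataclass
class Interaction:
    """One request the consumer makes, plus what it needs back."""
    description: str
    method: str
    path: str
    expected_status: int
    expected_body_keys: Set[str]  # only the fields the consumer actually reads


@dataclass
class Contract:
    """A contract written by (and for) one consumer of one provider."""
    consumer: str
    provider: str
    interactions: List[Interaction] = field(default_factory=list)


def verify(contract: Contract, handler: Handler) -> List[str]:
    """Replay every interaction against the provider; return failure messages."""
    failures: List[str] = []
    for i in contract.interactions:
        status, body = handler(i.method, i.path)
        if status != i.expected_status:
            failures.append(
                f"{i.description}: expected status {i.expected_status}, got {status}"
            )
            continue
        missing = i.expected_body_keys - set(body)
        if missing:
            failures.append(f"{i.description}: missing fields {sorted(missing)}")
    return failures


# Hypothetical provider, version 1: returns more fields than the consumer uses.
def order_service(method: str, path: str) -> Tuple[int, Dict[str, object]]:
    if method == "GET" and path == "/orders/42":
        return 200, {"id": 42, "status": "shipped", "total": 19.99}
    return 404, {}


# Hypothetical provider, version 2: renames "status" to "state".
def order_service_v2(method: str, path: str) -> Tuple[int, Dict[str, object]]:
    if method == "GET" and path == "/orders/42":
        return 200, {"id": 42, "state": "shipped", "total": 19.99}
    return 404, {}


contract = Contract(
    consumer="web-frontend",
    provider="order-service",
    interactions=[
        Interaction("fetch an existing order", "GET", "/orders/42", 200, {"id", "status"}),
    ],
)

if __name__ == "__main__":
    print(verify(contract, order_service))     # []
    print(verify(contract, order_service_v2))  # ["fetch an existing order: missing fields ['status']"]
```

Removing or renaming `total` would not fail this contract, because the consumer never declared that it reads `total`. That asymmetry is what lets providers evolve freely wherever no consumer depends on them. Pact follows the same principle but, as a real tool, generates contract files from consumer-side tests and replays them against a running provider.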