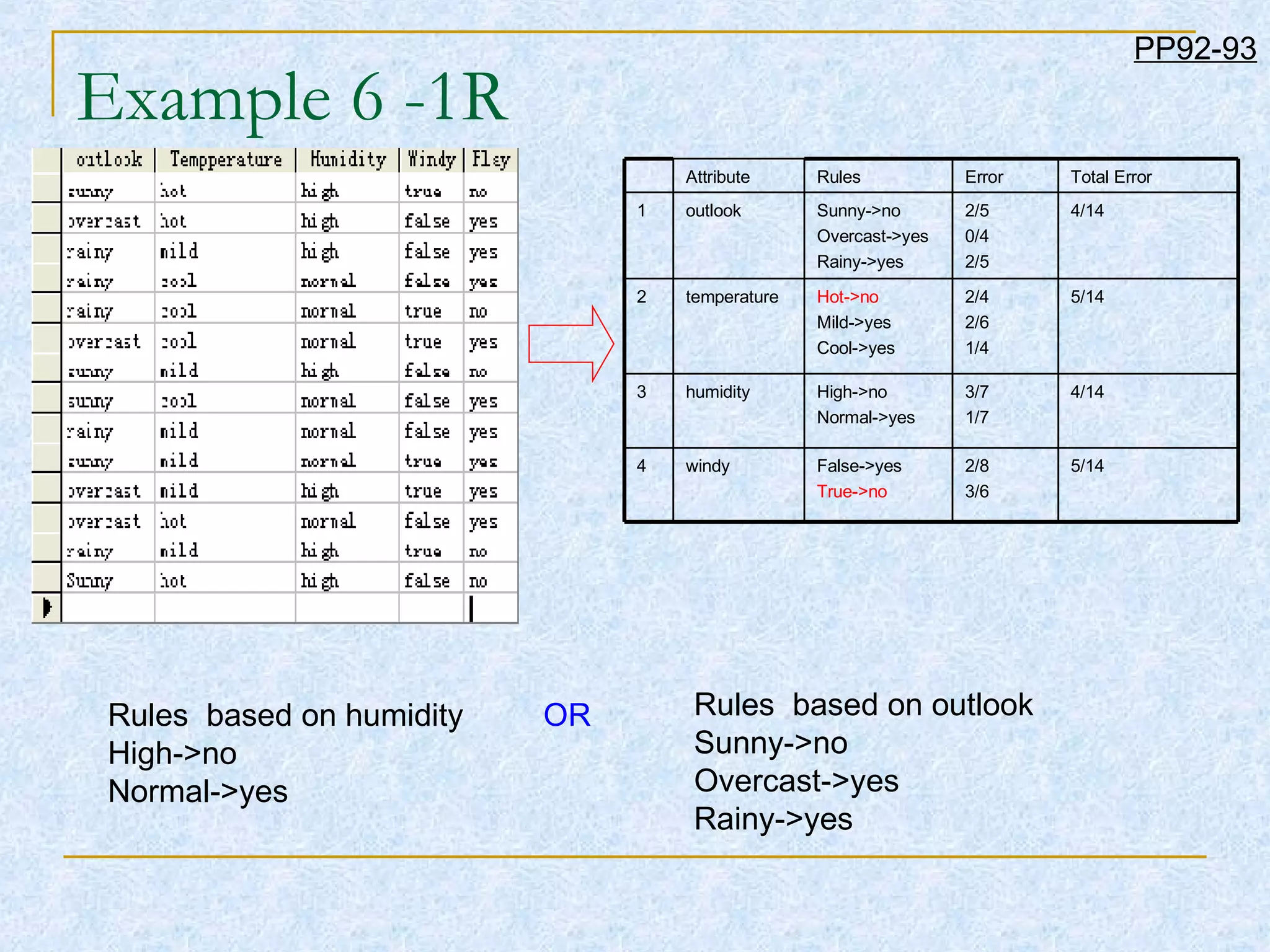

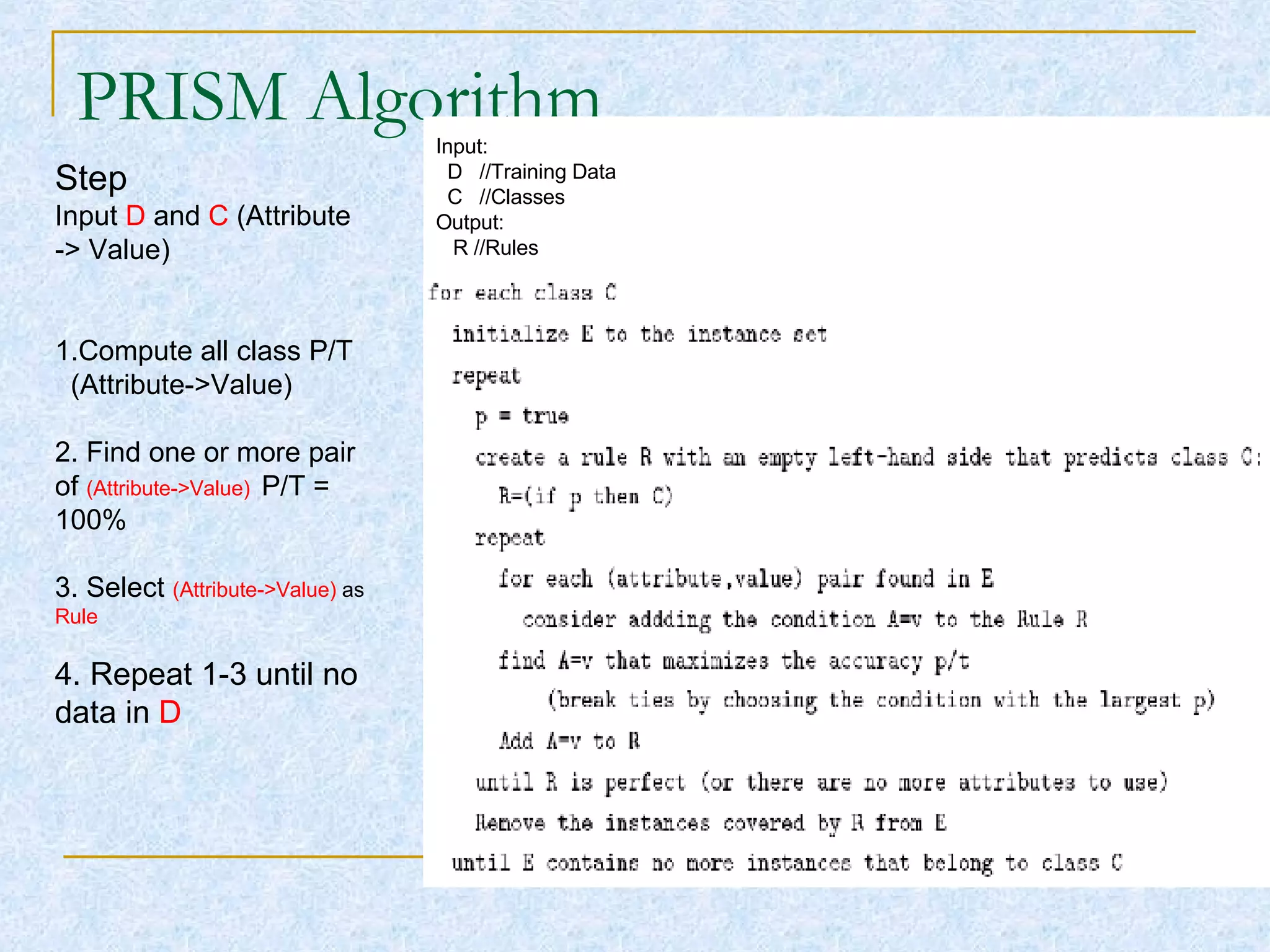

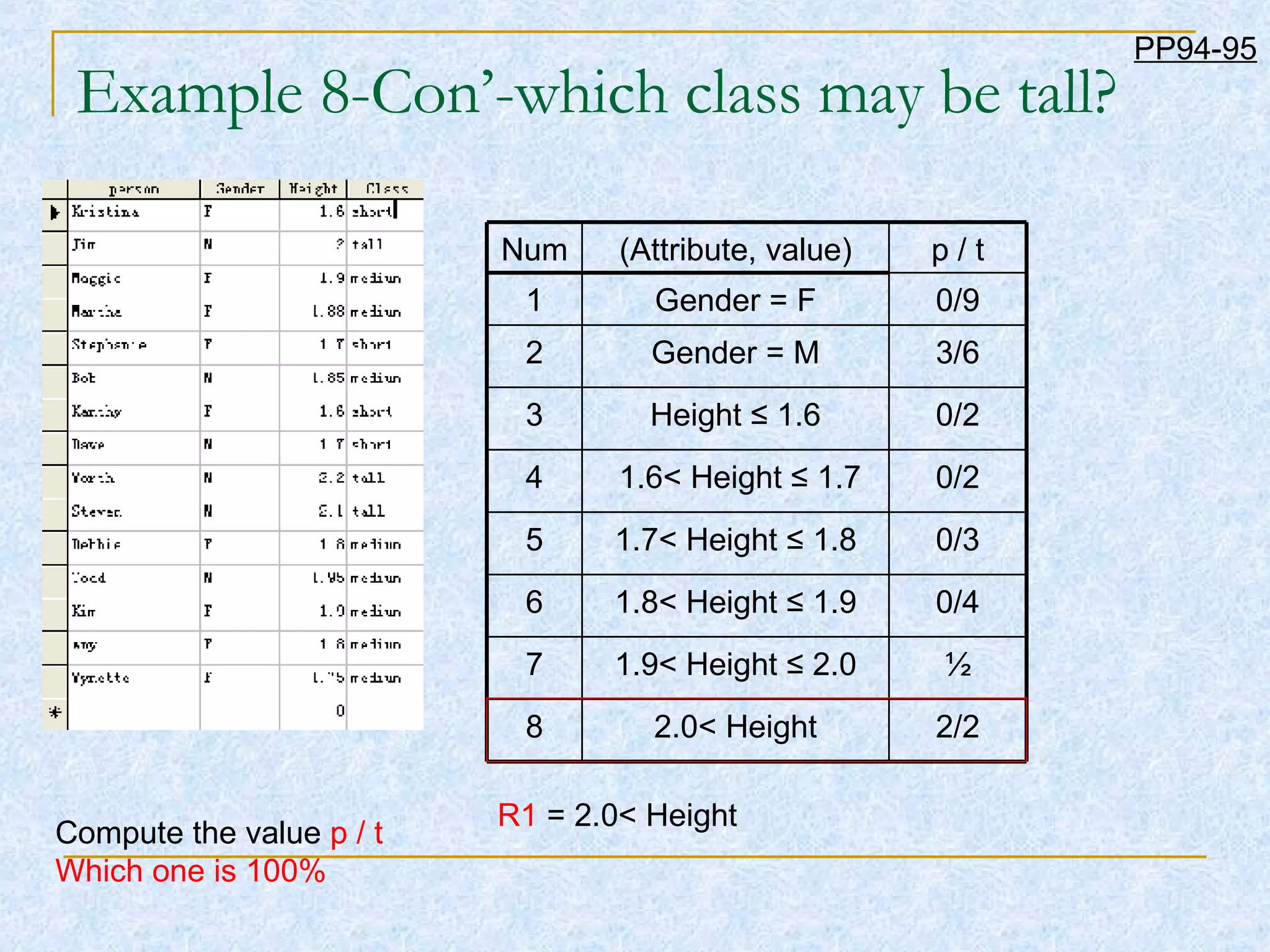

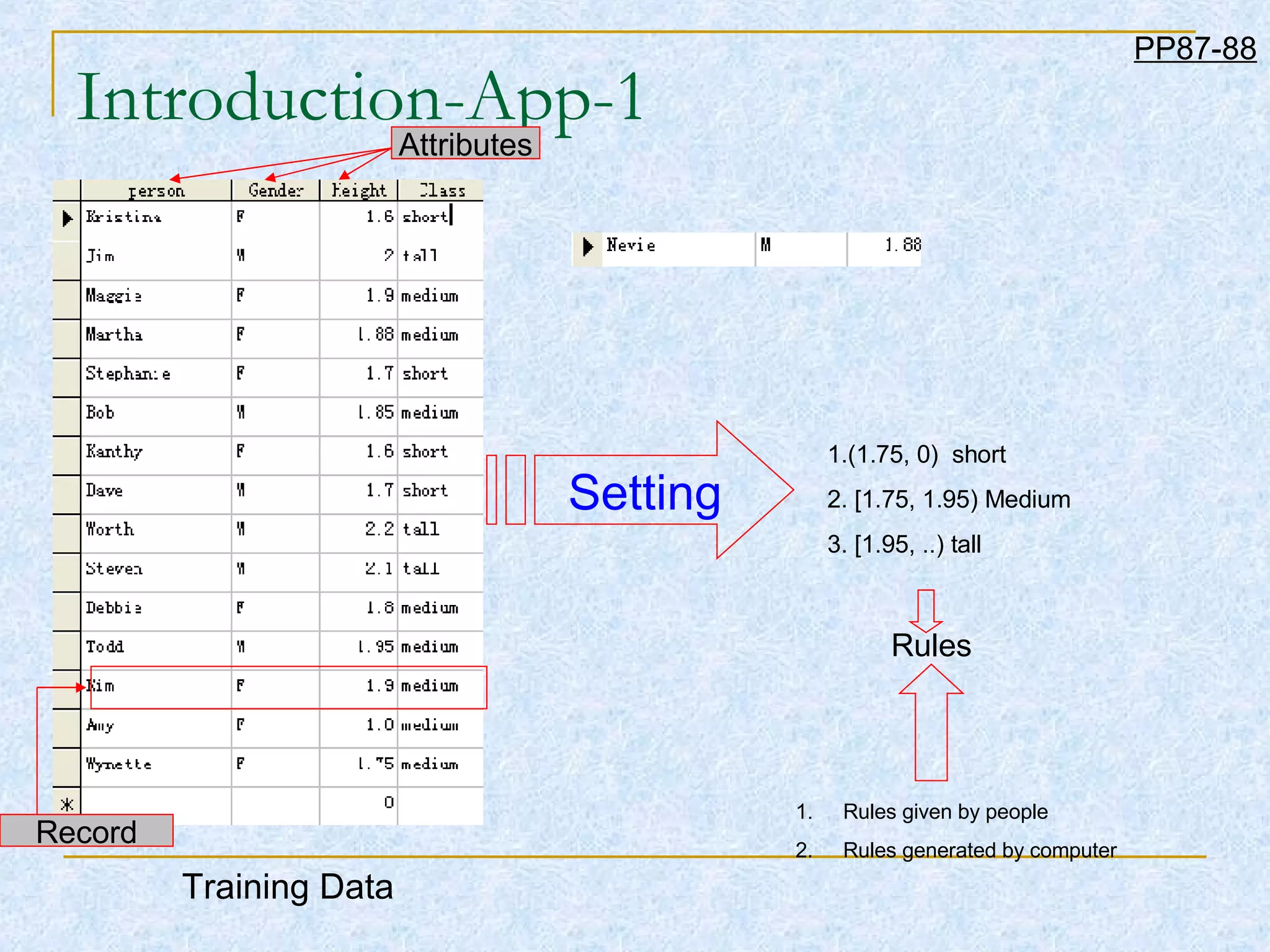

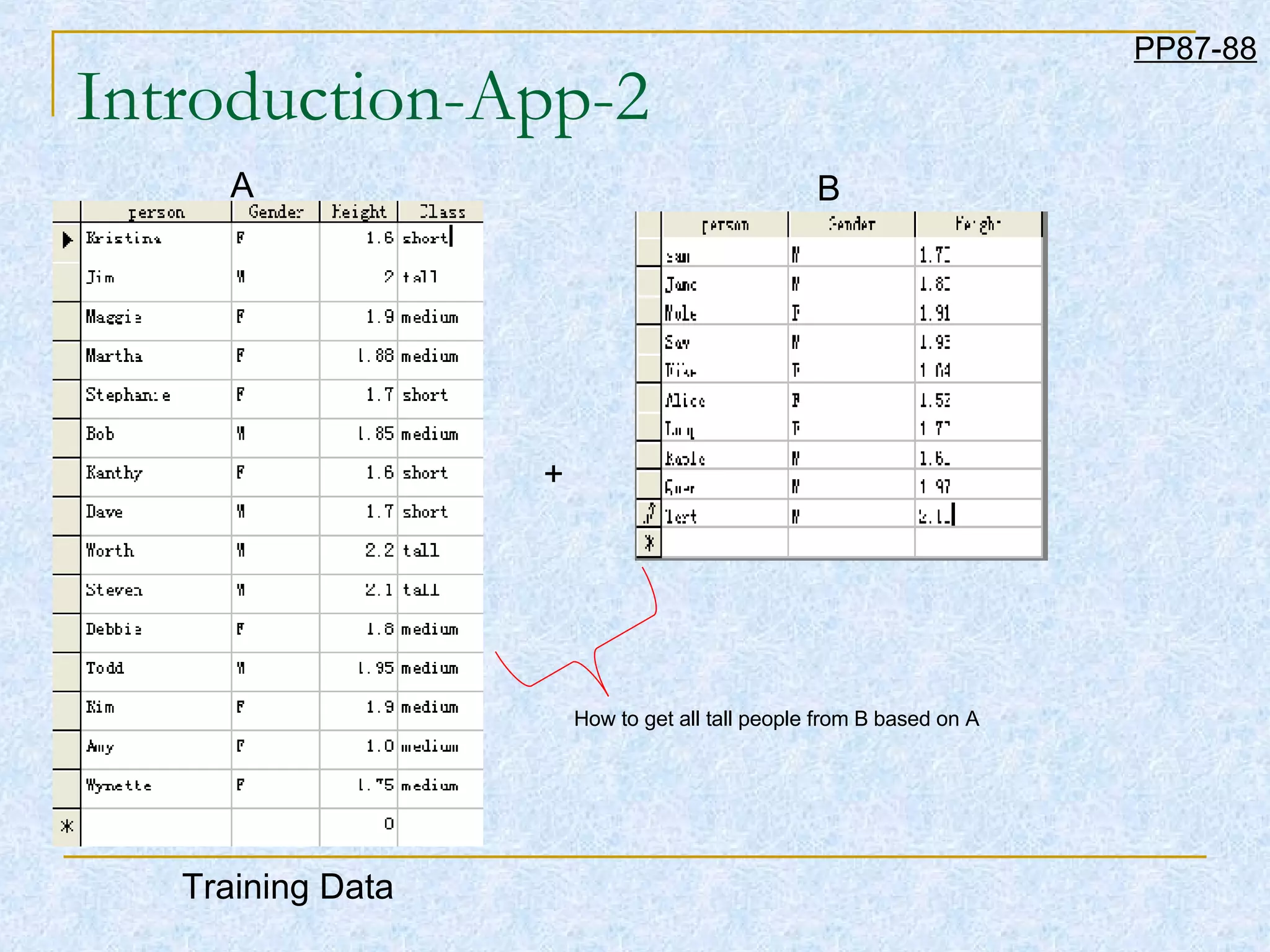

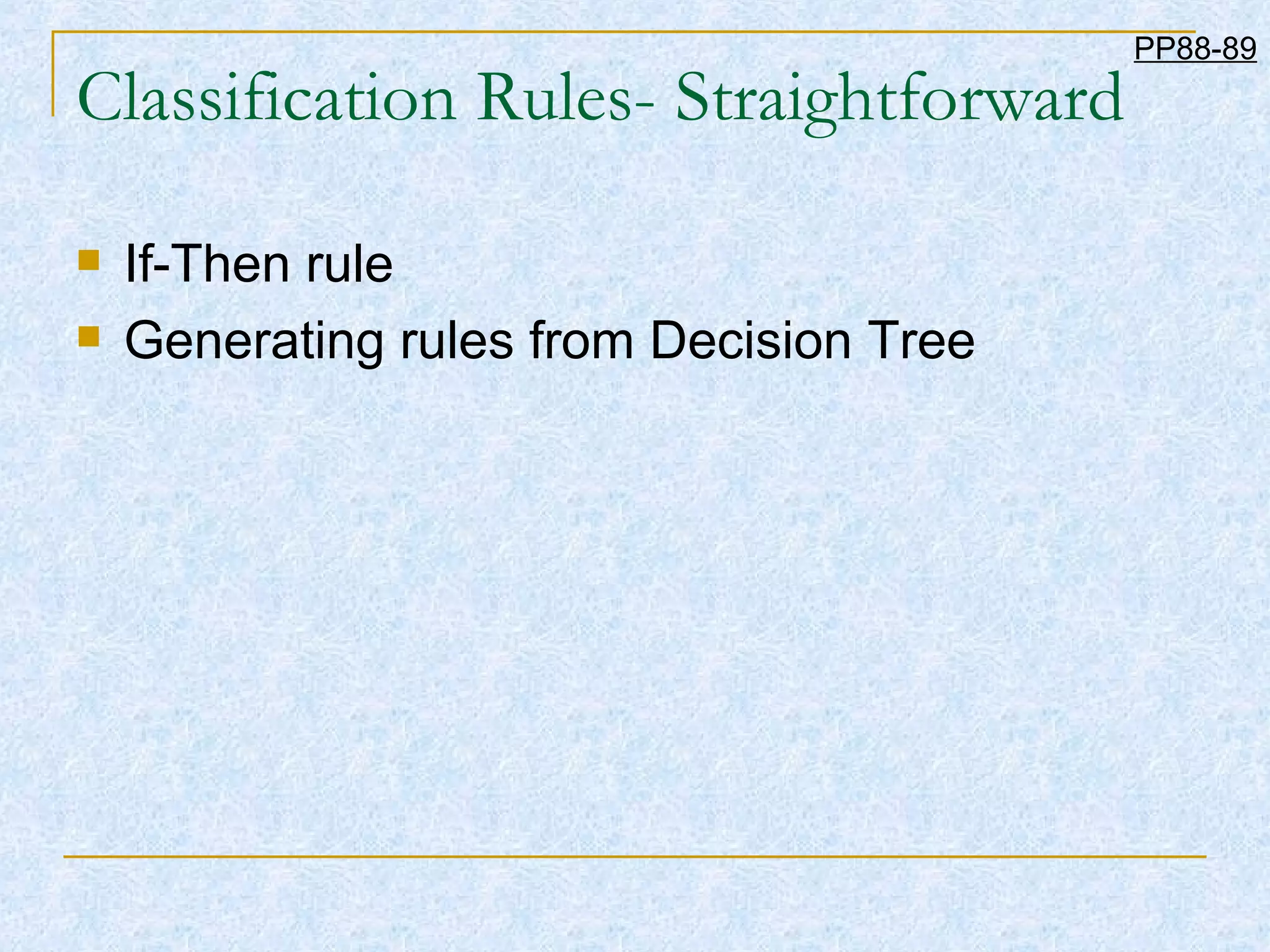

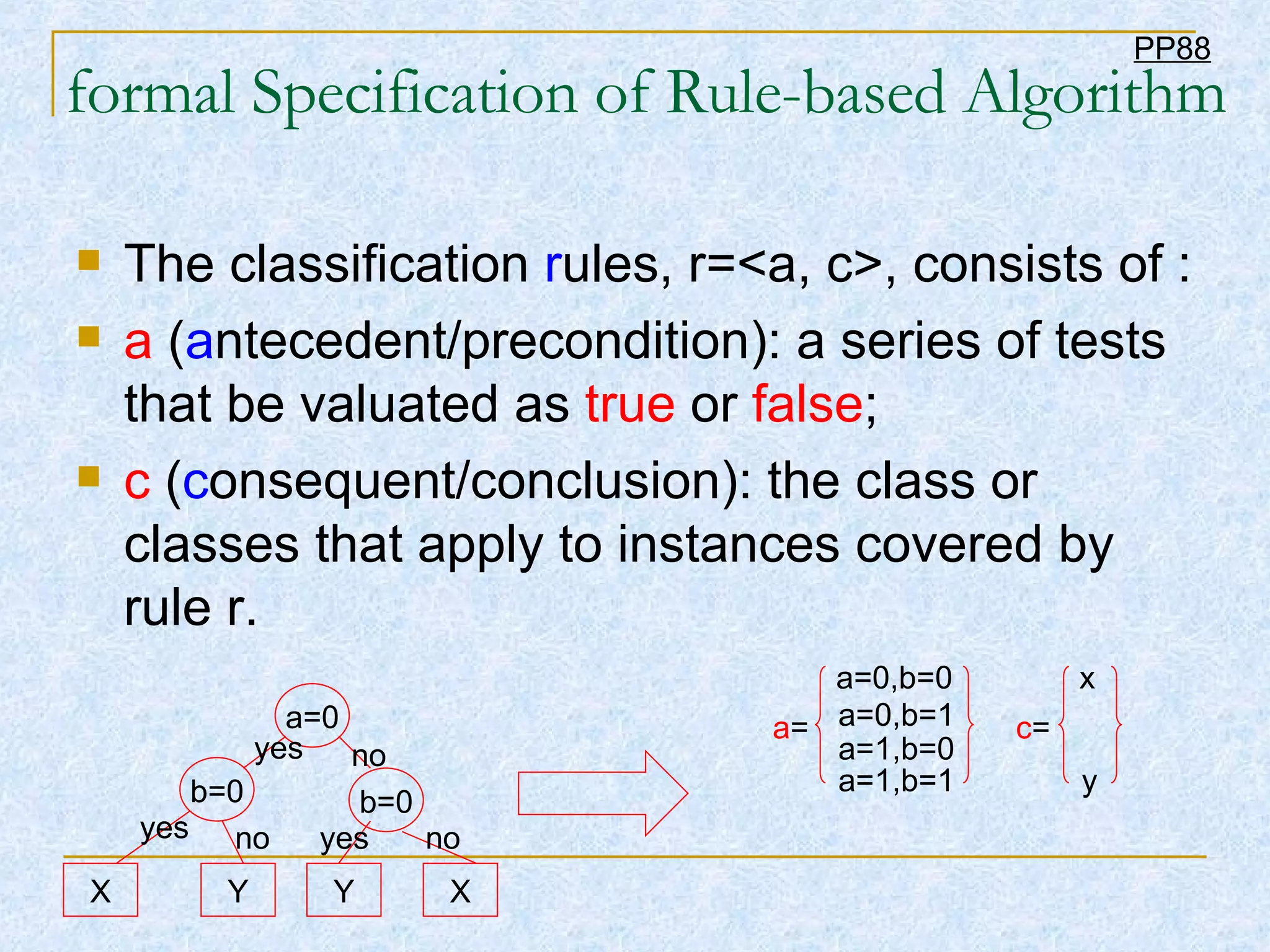

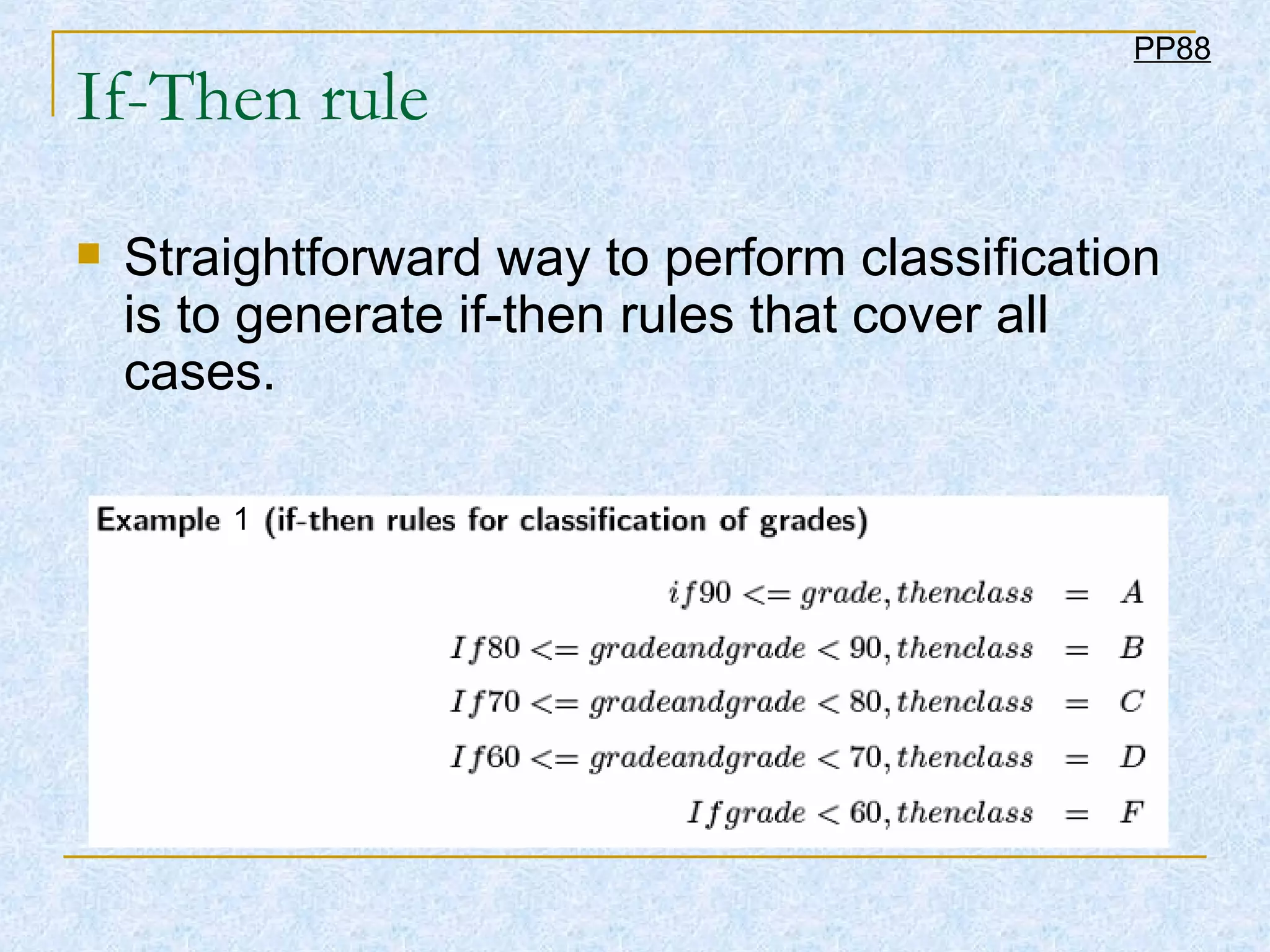

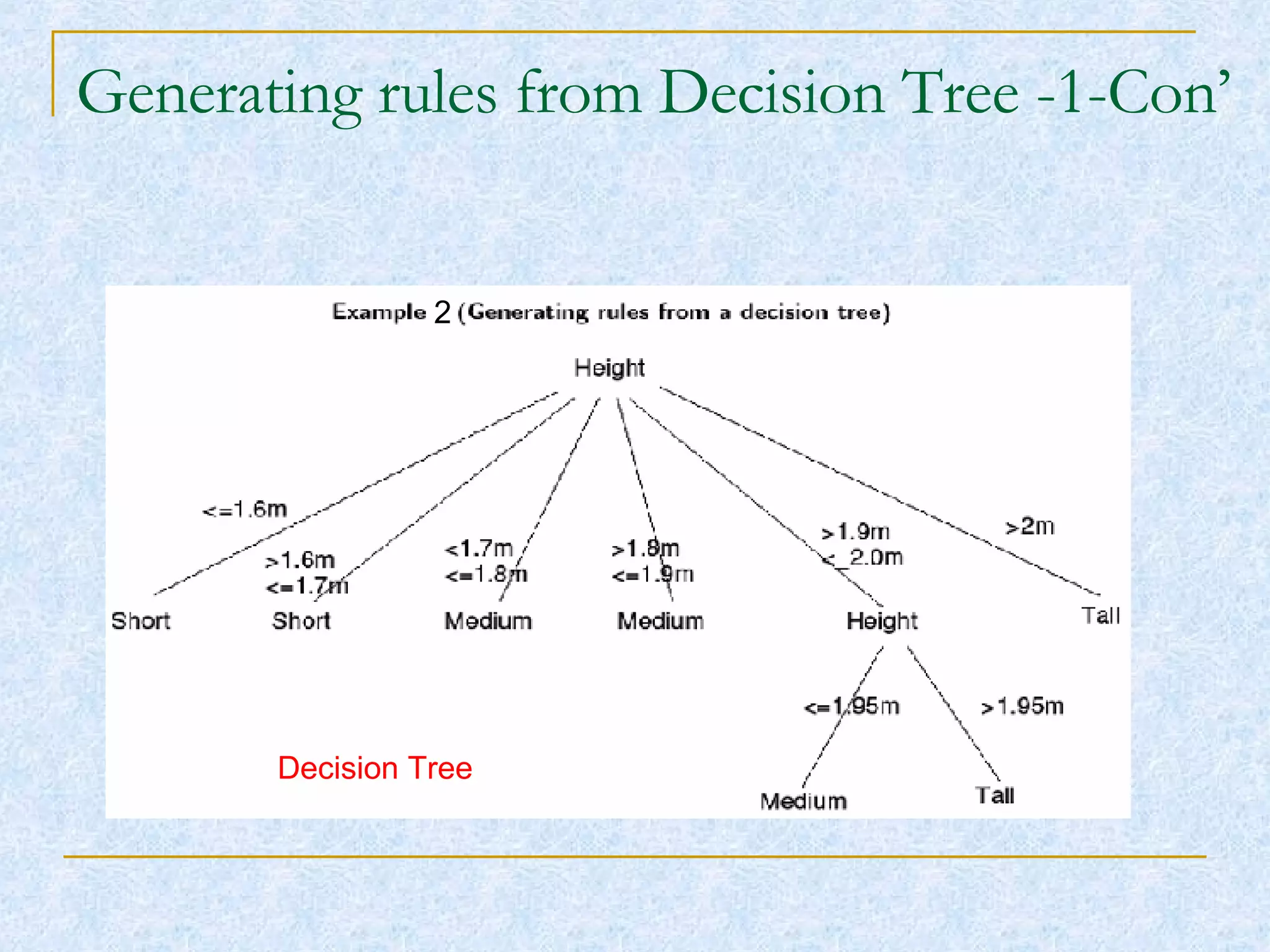

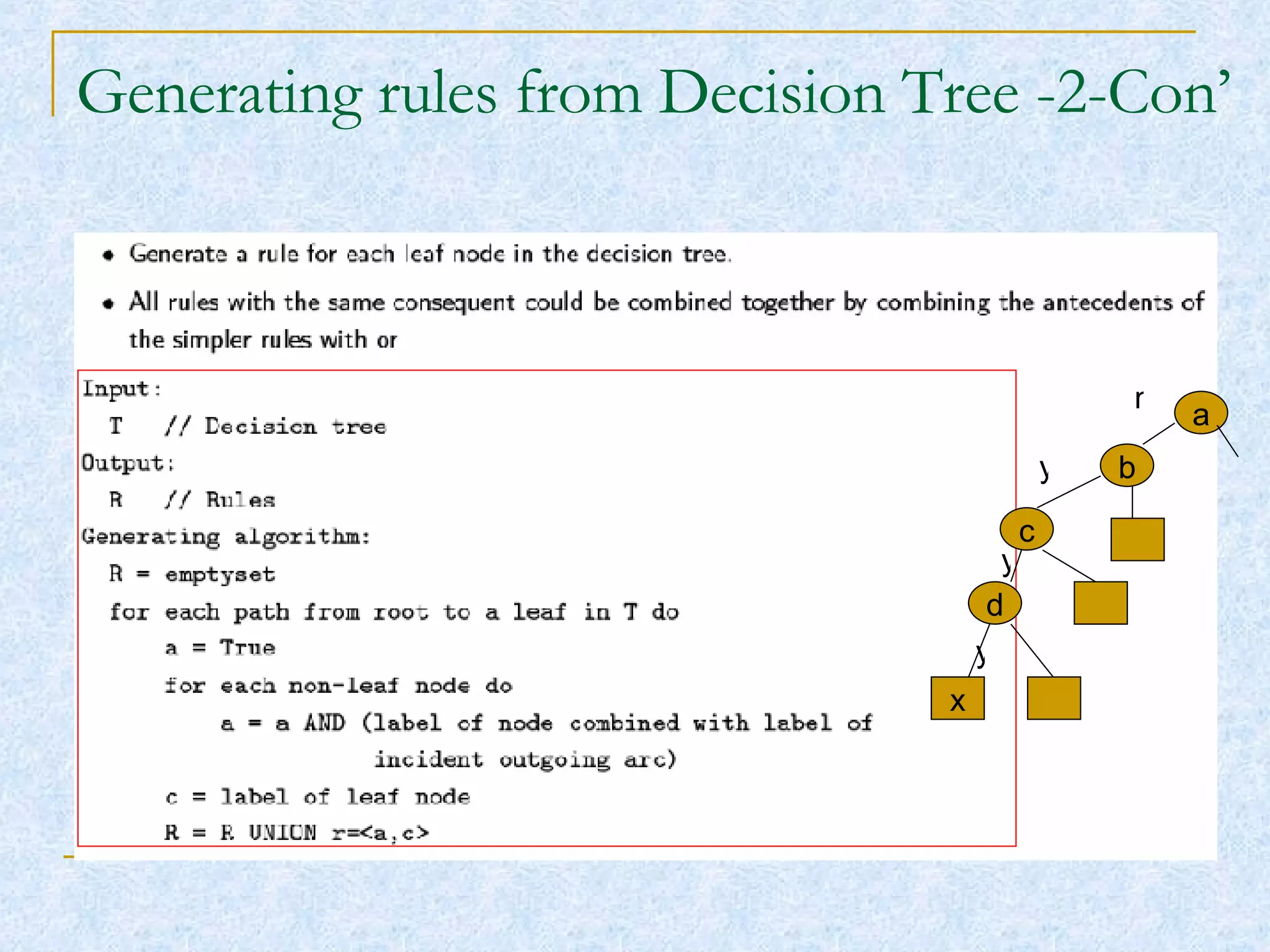

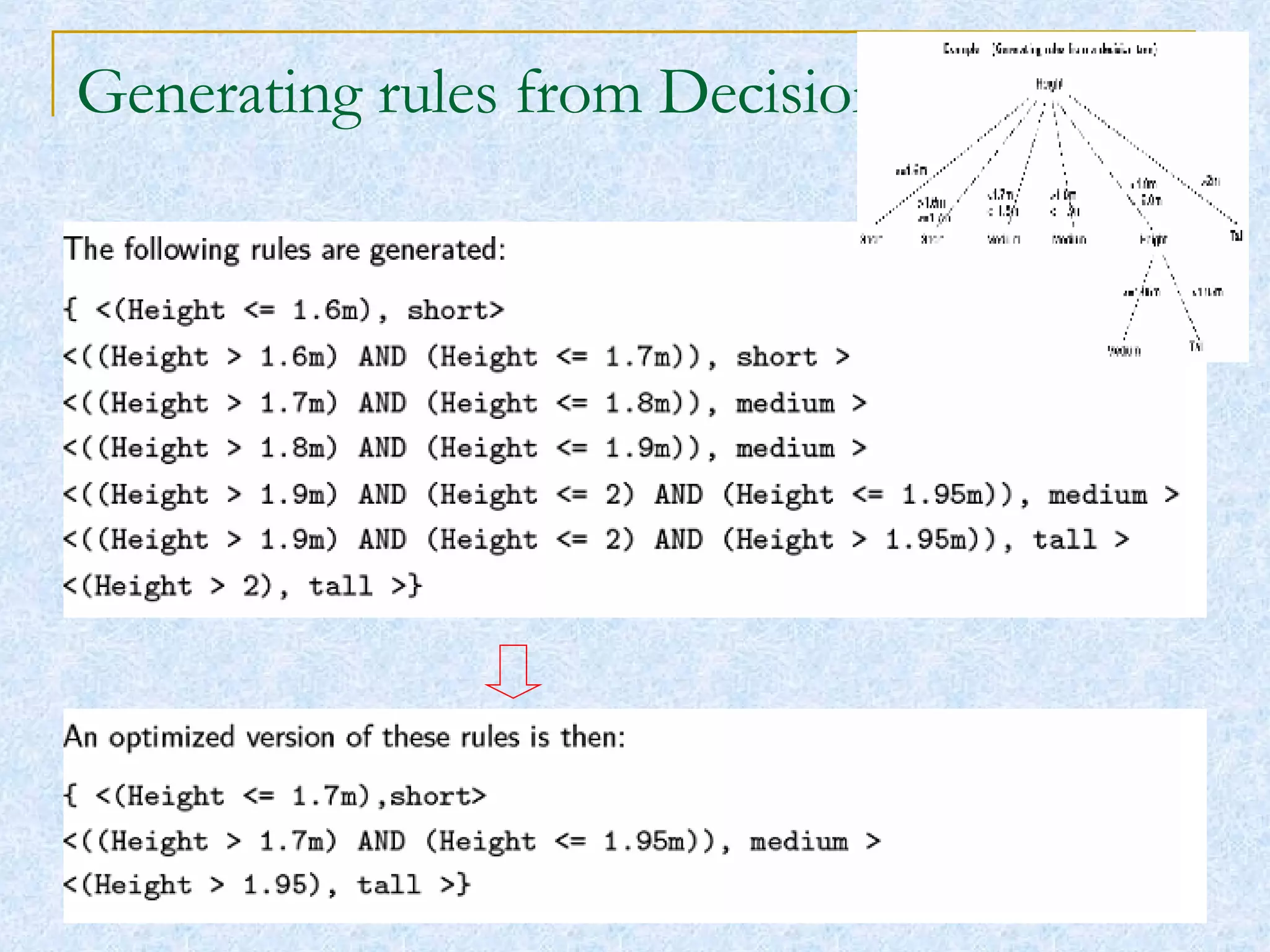

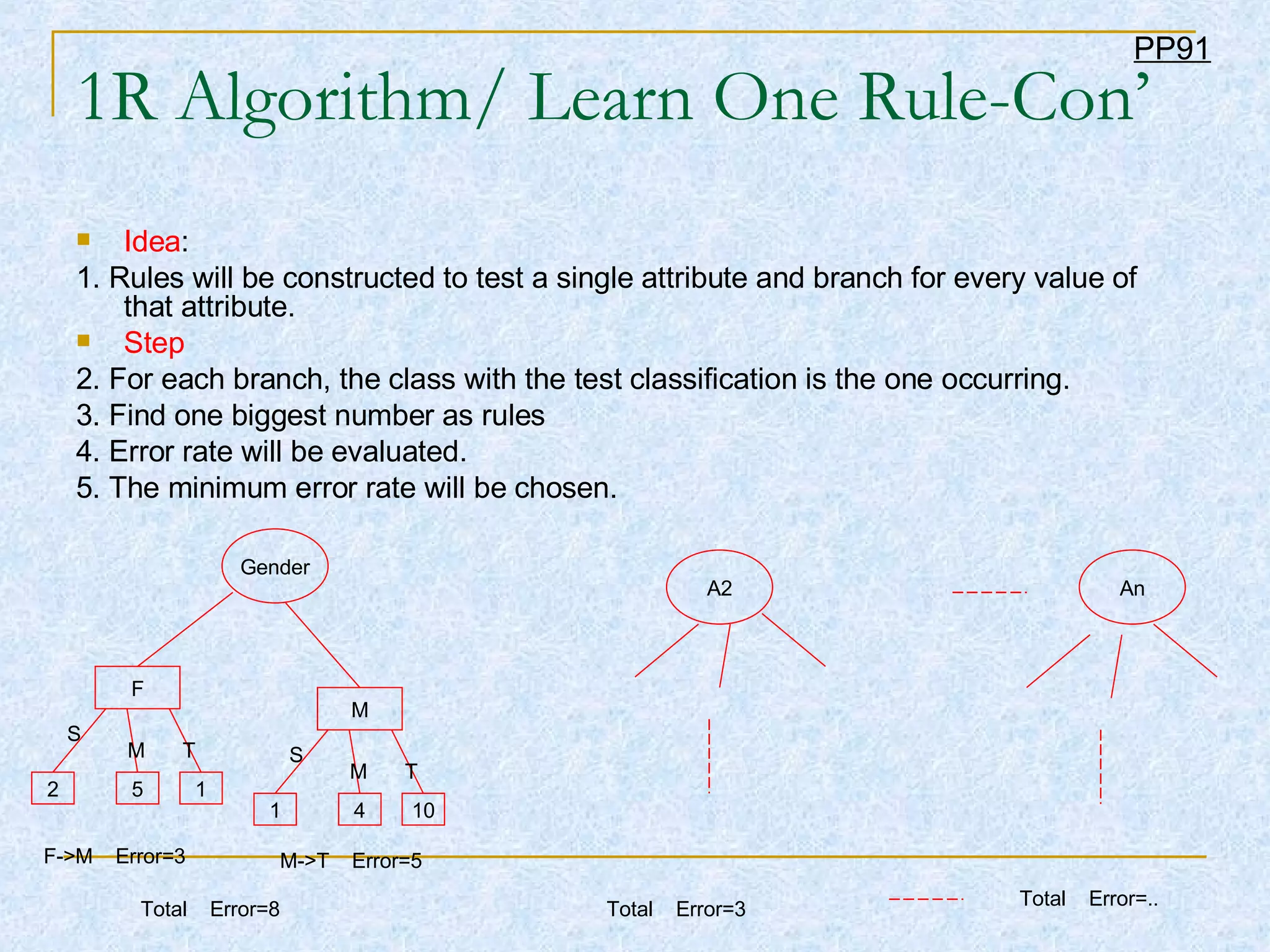

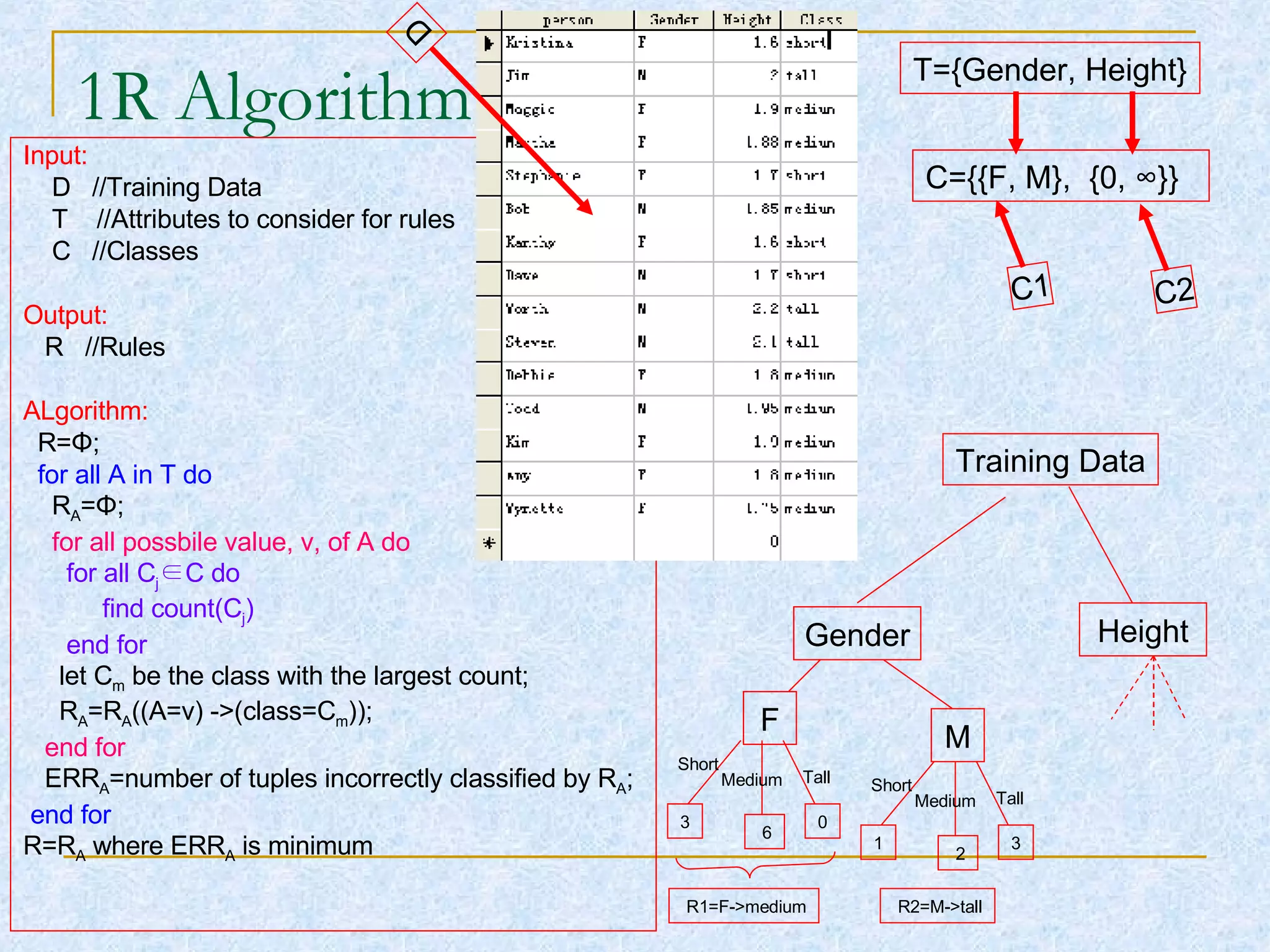

Chapter 8 discusses covering (rule-based) algorithms in data mining and details how classification rules are created with algorithms such as 1R and PRISM. These algorithms derive rules from training data that assign instances to specific classes, aiming to maximize classification accuracy. Applications span fields such as e-learning and healthcare, which shows how broadly these algorithms can be used to extract useful insights from data.
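To make the rule-generation idea concrete, here is a minimal sketch of 1R in Python. It assumes the usual formulation: for each attribute, every value predicts its majority class, and the attribute with the fewest training errors is kept. The function name `one_r` and the toy `rows` data are hypothetical and are not taken from the slides.

```python
from collections import Counter, defaultdict

def one_r(rows, attributes, target):
    """Return (best_attribute, rules, errors) under the 1R scheme."""
    best = None
    for attr in attributes:
        # Count class labels for each value of this attribute.
        counts = defaultdict(Counter)
        for row in rows:
            counts[row[attr]][row[target]] += 1
        # Each attribute value predicts its majority class.
        rules = {value: c.most_common(1)[0][0] for value, c in counts.items()}
        # Errors are the instances outside the majority class of their value.
        errors = sum(sum(c.values()) - c.most_common(1)[0][1]
                     for c in counts.values())
        # Keep the attribute with the fewest errors (first one wins ties).
        if best is None or errors < best[2]:
            best = (attr, rules, errors)
    return best

# Hypothetical toy training set, for illustration only.
rows = [
    {"outlook": "sunny", "windy": "no", "play": "yes"},
    {"outlook": "sunny", "windy": "yes", "play": "yes"},
    {"outlook": "sunny", "windy": "no", "play": "yes"},
    {"outlook": "rainy", "windy": "no", "play": "no"},
    {"outlook": "rainy", "windy": "yes", "play": "no"},
    {"outlook": "rainy", "windy": "yes", "play": "yes"},
]

attribute, rules, errors = one_r(rows, ["outlook", "windy"], "play")
print(attribute, rules, errors)
```

On this toy data, `outlook` misclassifies one instance and `windy` misclassifies two, so the sketch keeps the rules built on `outlook`.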

![Chapter 8 Covering (Rules-based) Algorithm Written by Shakhina Pulatova Presented by Zhao Xinyou [email_address] 2007.11.13 Data Mining Technology Some materials (Examples) are taken from Website.](https://image.slidesharecdn.com/covering-rulesbased-algorithm-119476484338059-1/75/Covering-Rules-based-Algorithm-2-2048.jpg)

![Example 5 – 1R-3-Con’ Rules based on height](https://image.slidesharecdn.com/covering-rulesbased-algorithm-119476484338059-1/75/Covering-Rules-based-Algorithm-23-2048.jpg)

| Option | Attribute | Rules | Error | Total Error |
| --- | --- | --- | --- | --- |
| 1 | Gender | F -> medium | 3/9 | 6/15 |
| | | M -> tall | 3/6 | |
| 2 | Height (Step=0.1) | (0, 1.6] -> short | 0/2 | 1/15 |
| | | (1.6, 1.7] -> short | 0/2 | |
| | | (1.7, 1.8] -> medium | 0/3 | |
| | | (1.8, 1.9] -> medium | 0/4 | |
| | | (1.9, 2.0] -> medium | 1/2 | |
| | | (2.0, ∞] -> tall | 0/2 | |
| … | ... | … | | |
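The final 1R step on the Example 5 slide, choosing the attribute with the lowest total error, can be sketched as follows. This is only an illustration: it assumes the per-rule error counts on the slide pair with the rules in the order they are listed, and the names `rule_errors` and `total_error` are hypothetical.

```python
from fractions import Fraction

# (misclassified, covered) per rule, transcribed from the Example 5 slide.
# Assumption: counts are matched to rules in the order they appear.
rule_errors = {
    "Gender": [(3, 9), (3, 6)],
    "Height (Step=0.1)": [(0, 2), (0, 2), (0, 3), (0, 4), (1, 2), (0, 2)],
}

def total_error(pairs):
    """Sum misclassified and covered instances over all rules of one attribute."""
    wrong = sum(errors for errors, _ in pairs)
    covered = sum(count for _, count in pairs)
    return wrong, covered

totals = {attr: total_error(pairs) for attr, pairs in rule_errors.items()}

# 1R keeps the attribute whose rule set has the lowest total error rate.
best = min(totals, key=lambda attr: Fraction(*totals[attr]))

for attr, (wrong, covered) in totals.items():
    print(f"{attr}: {wrong}/{covered}")
print("chosen attribute:", best)
```

The sums reproduce the slide's totals of 6/15 for gender and 1/15 for height, so under these assumptions the height rules are the ones 1R would keep.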