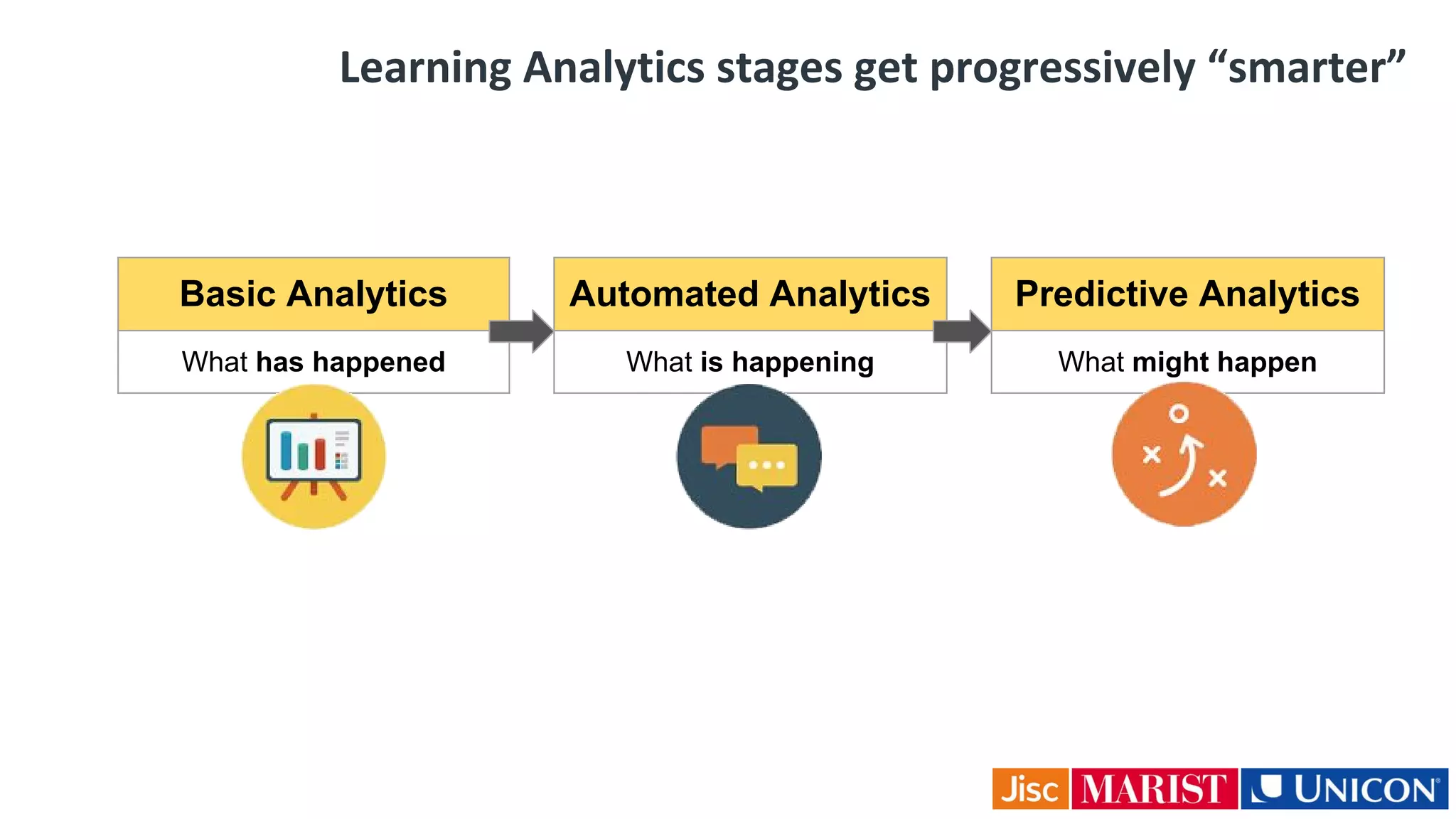

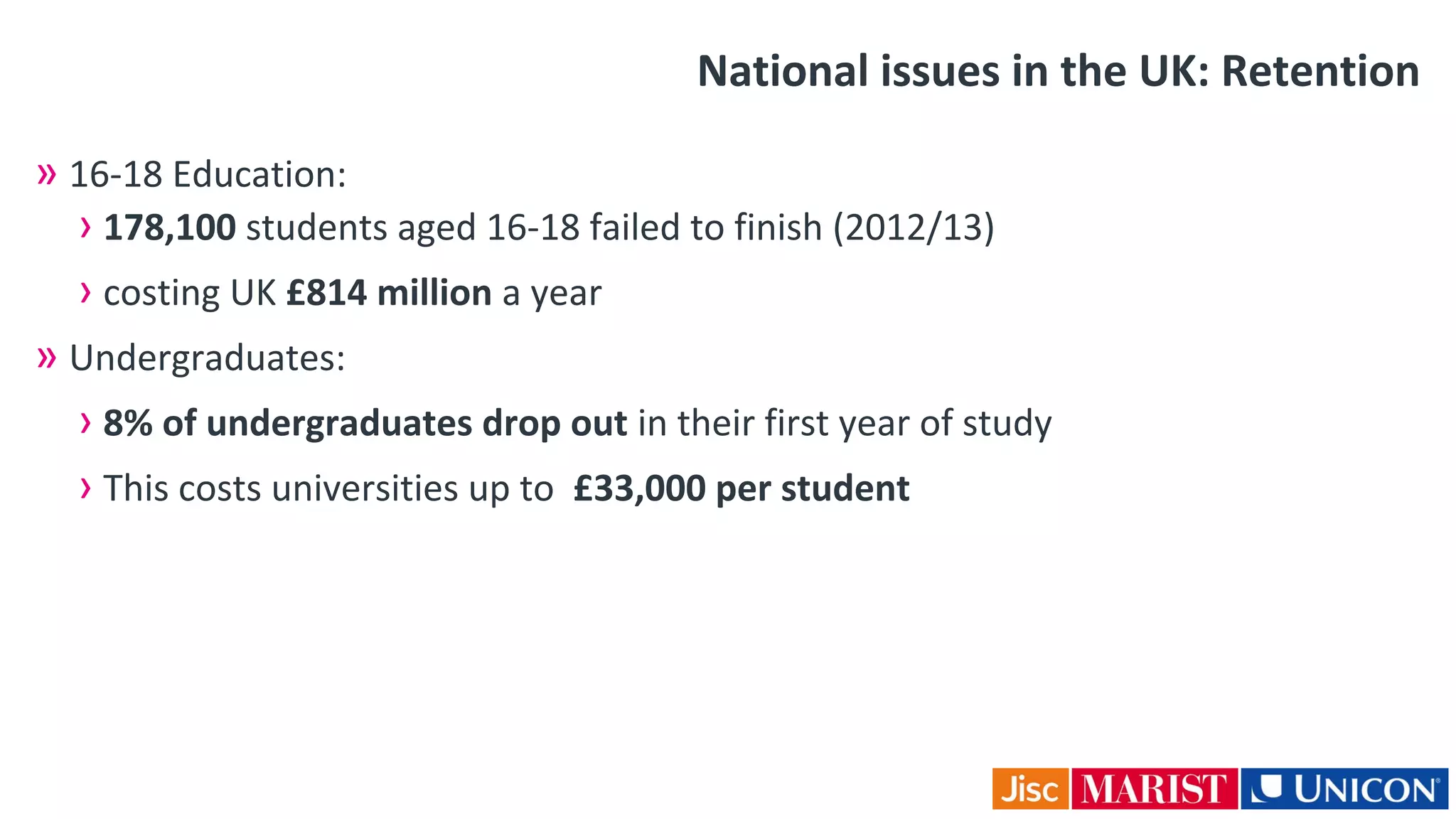

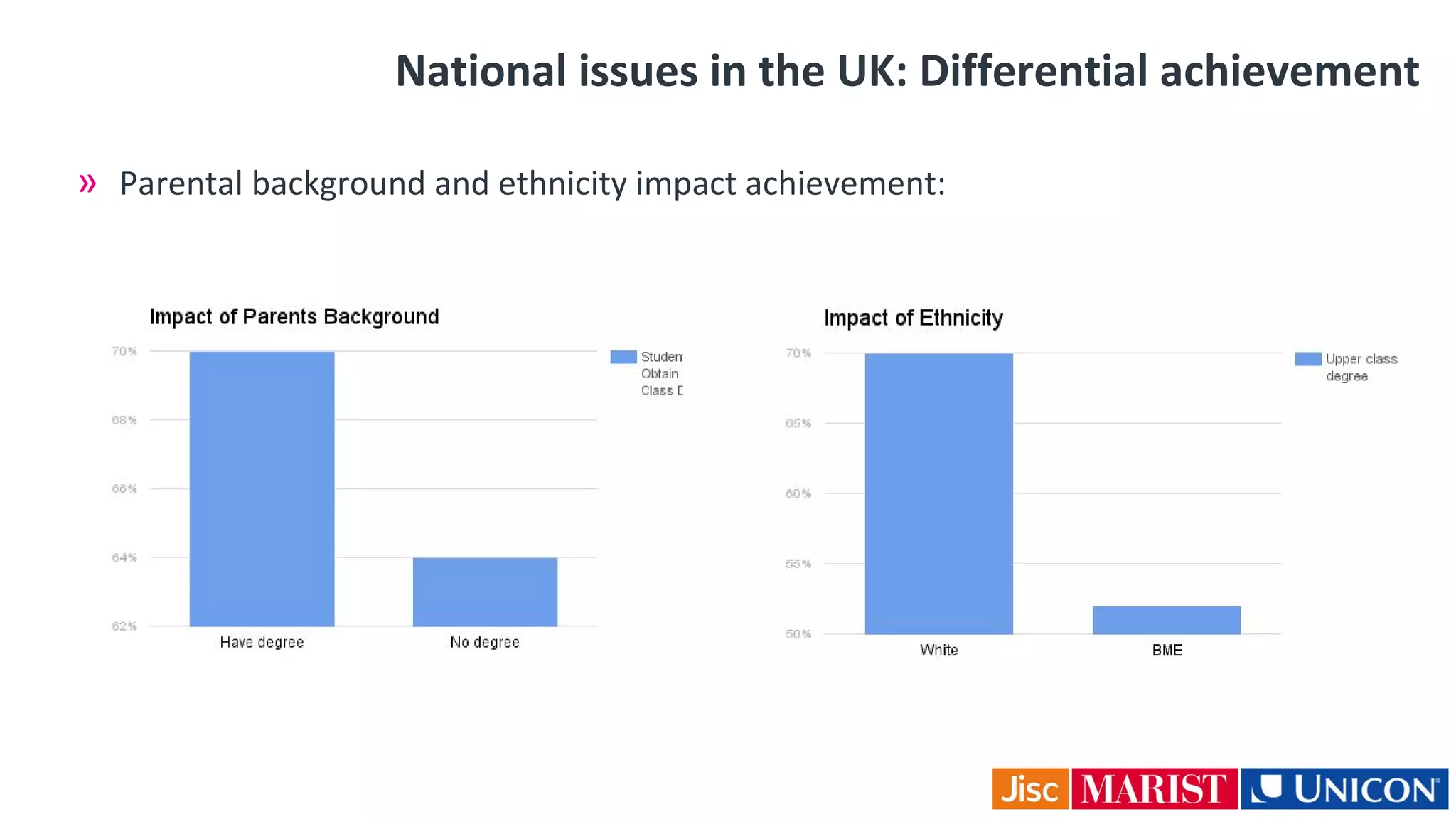

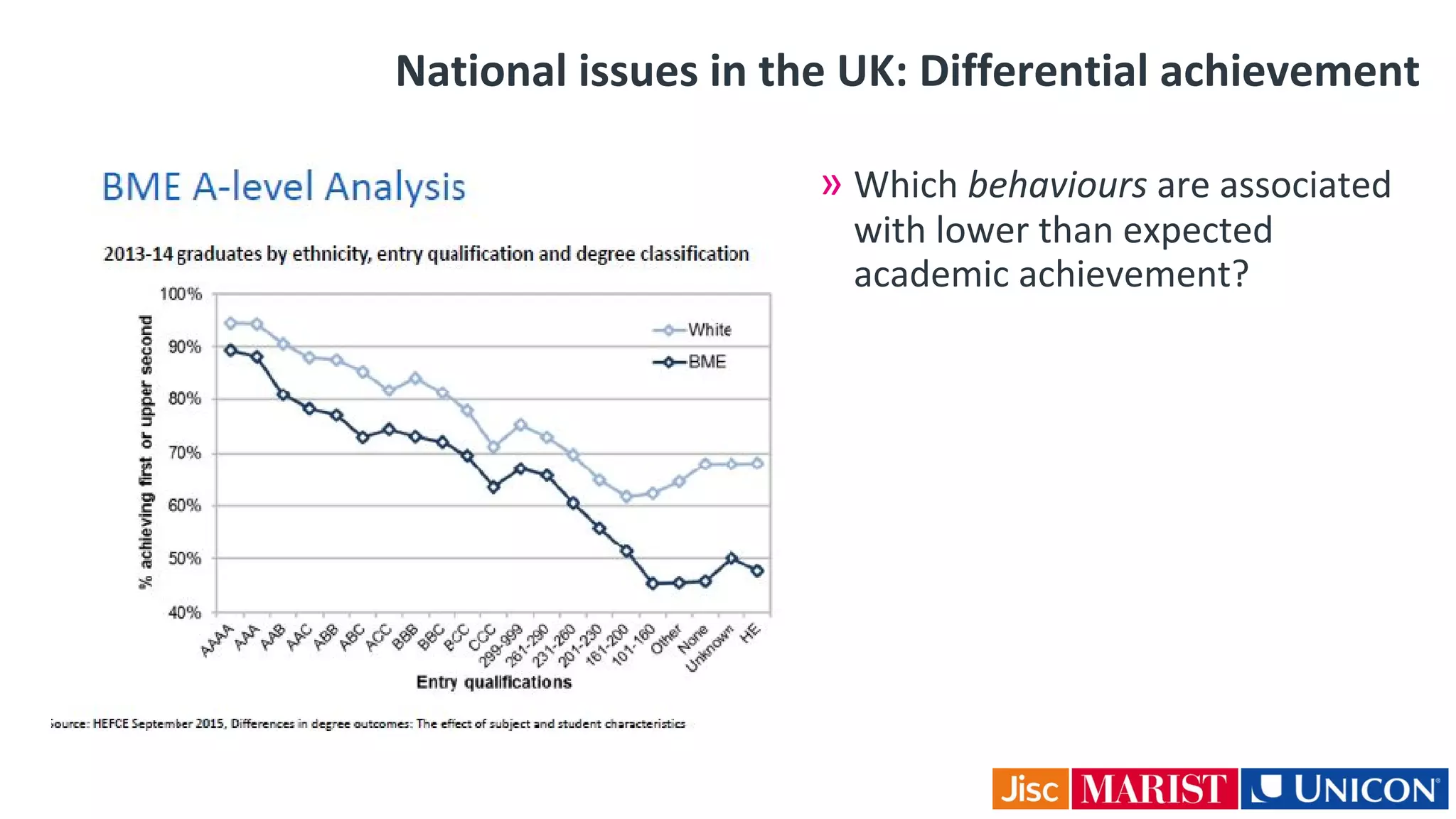

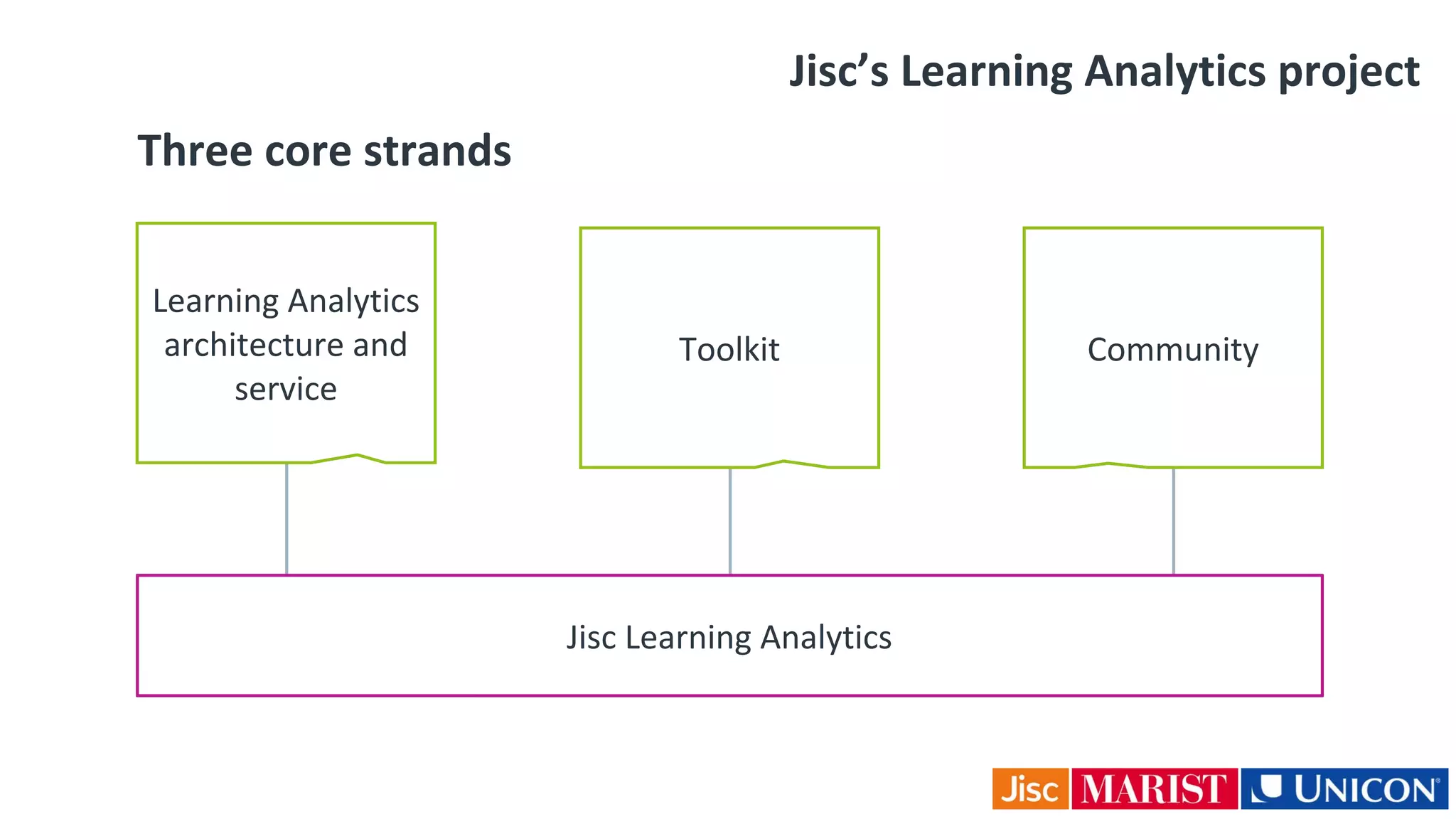

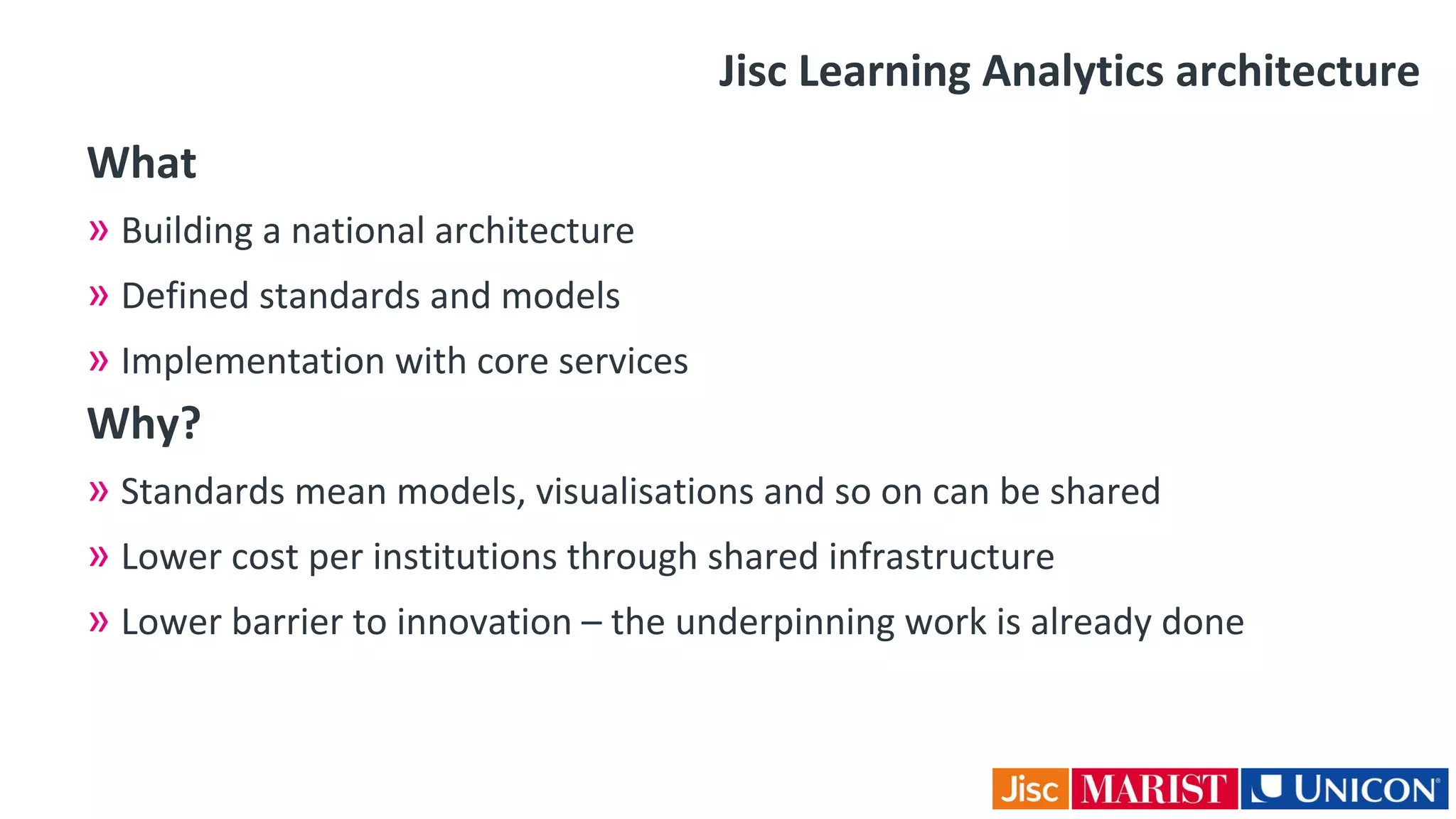

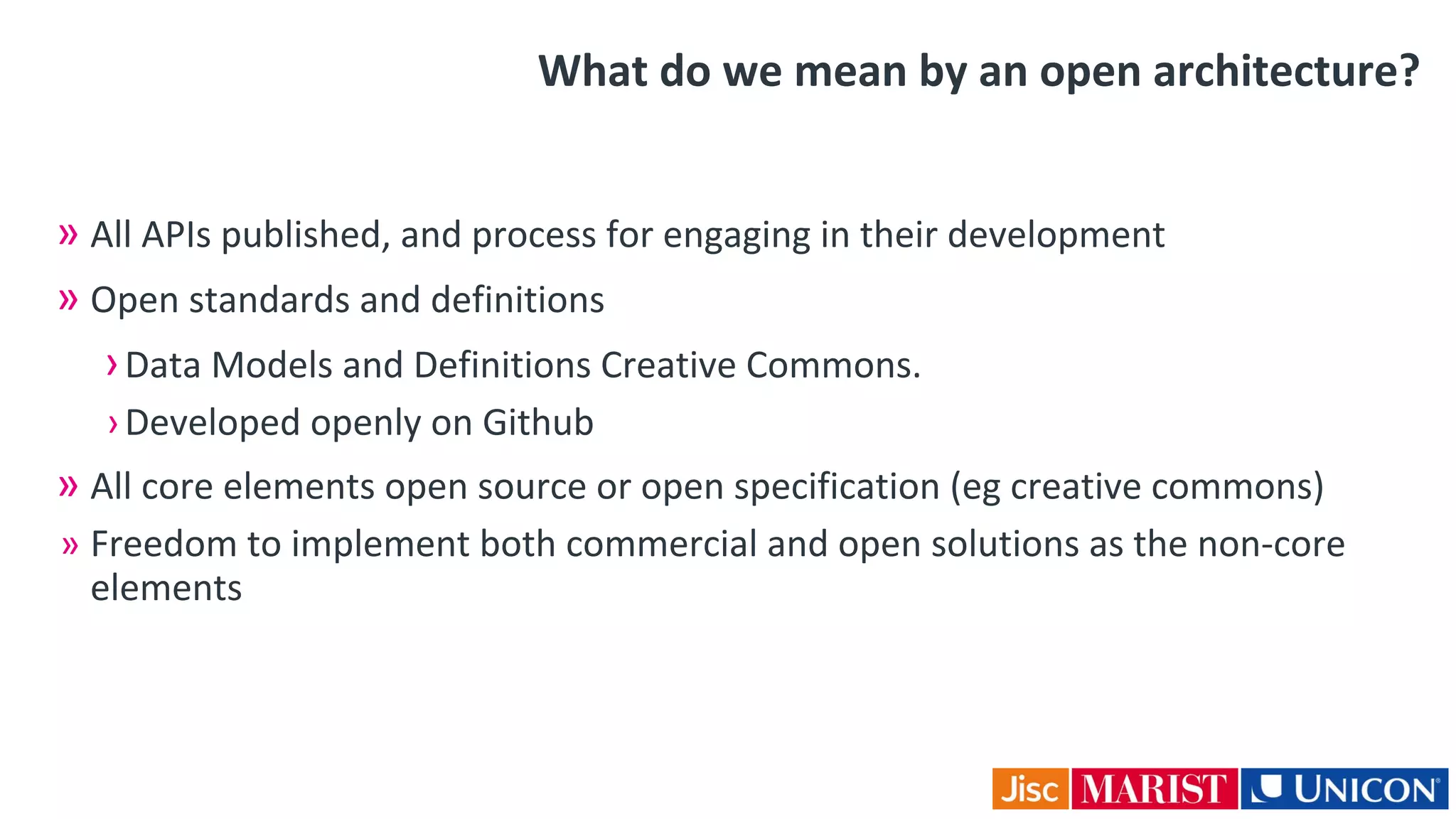

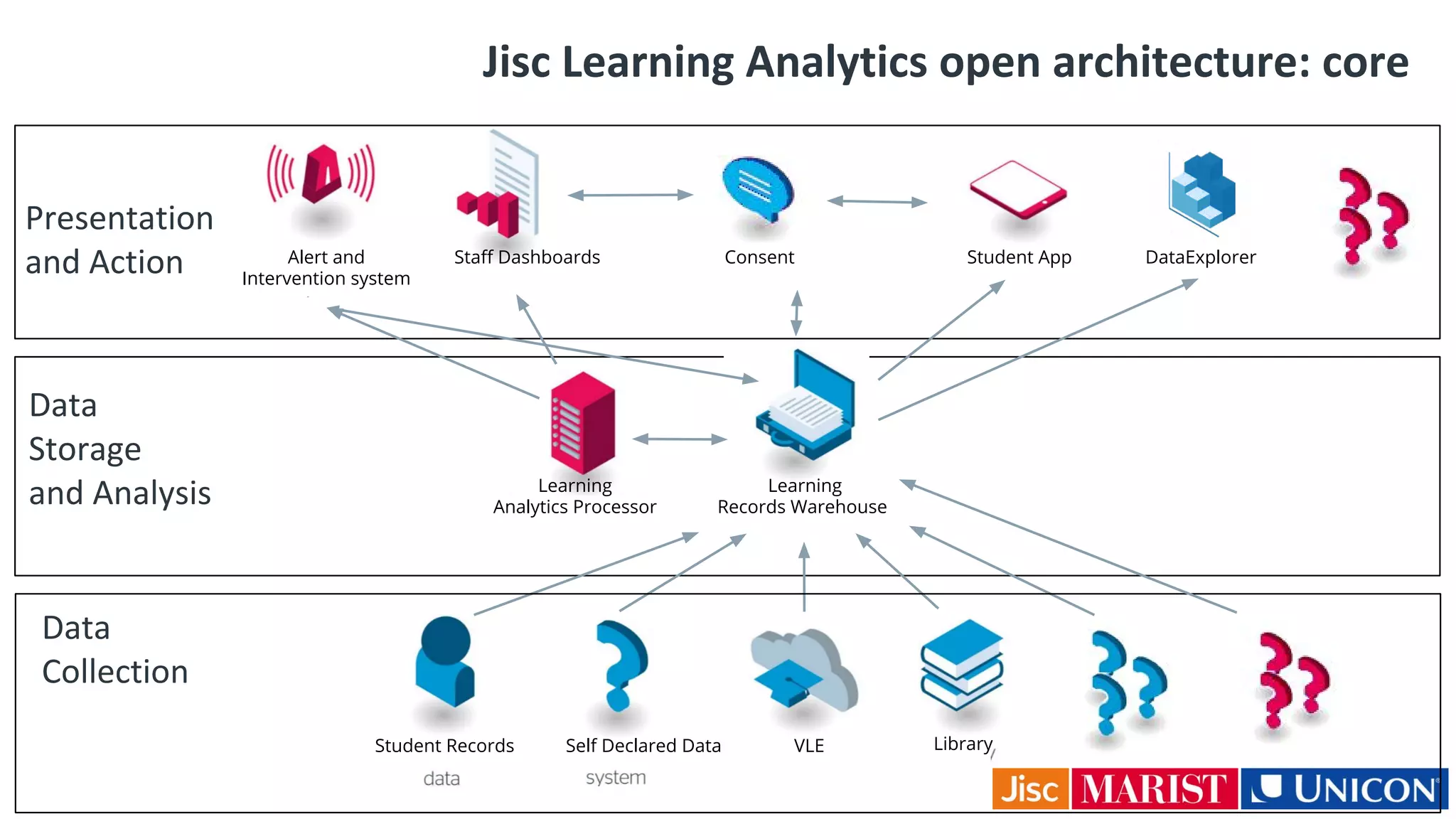

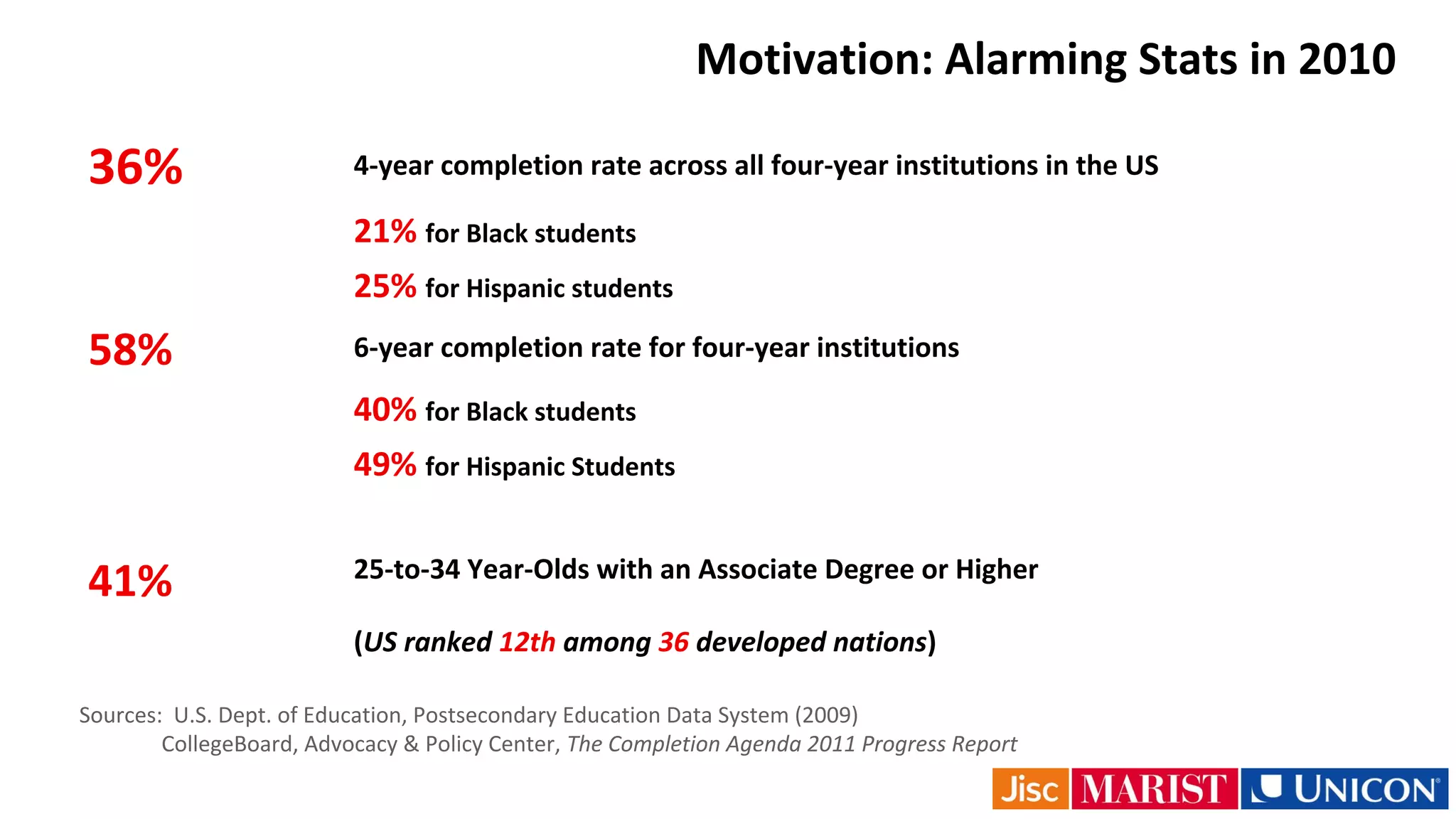

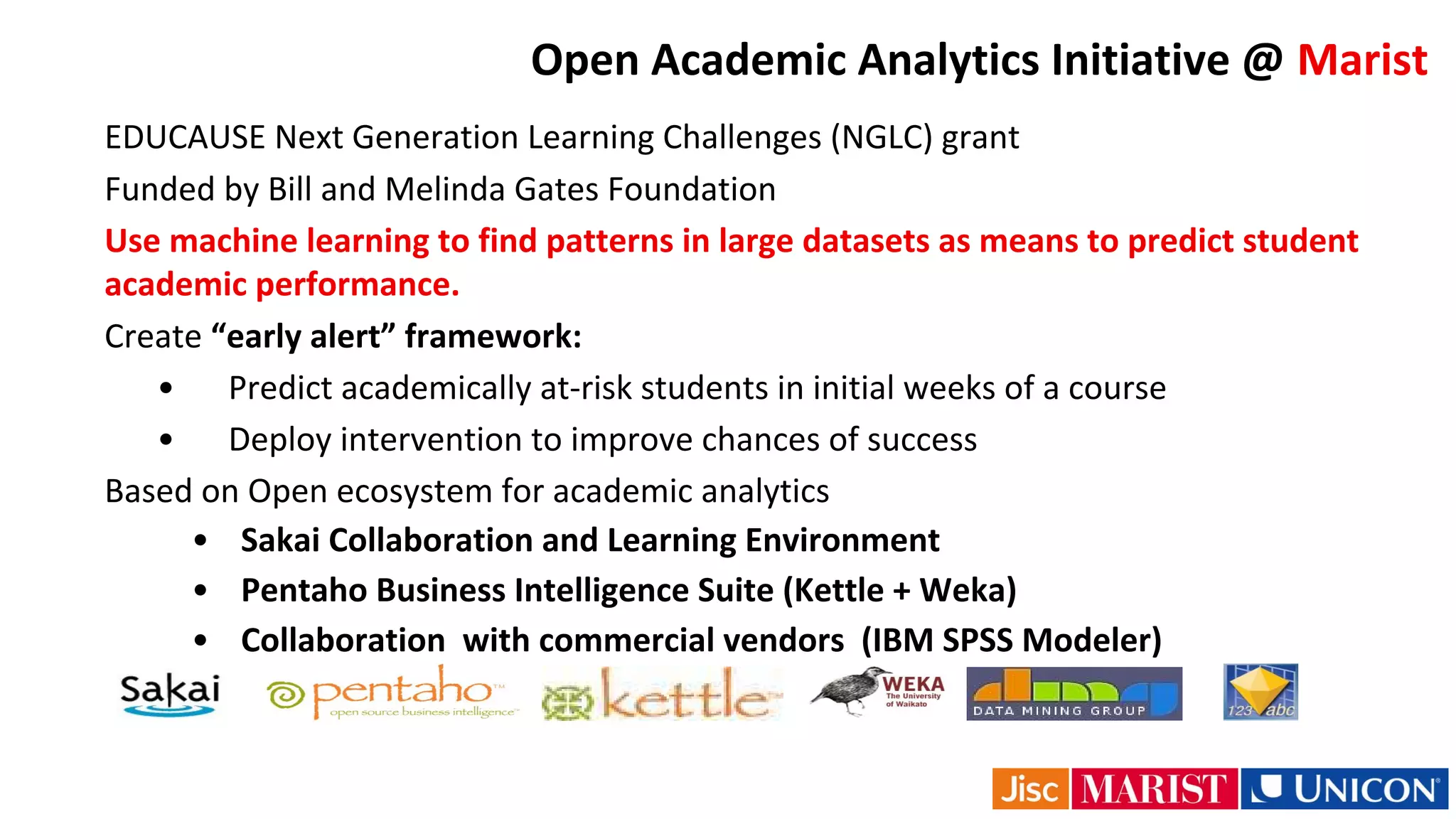

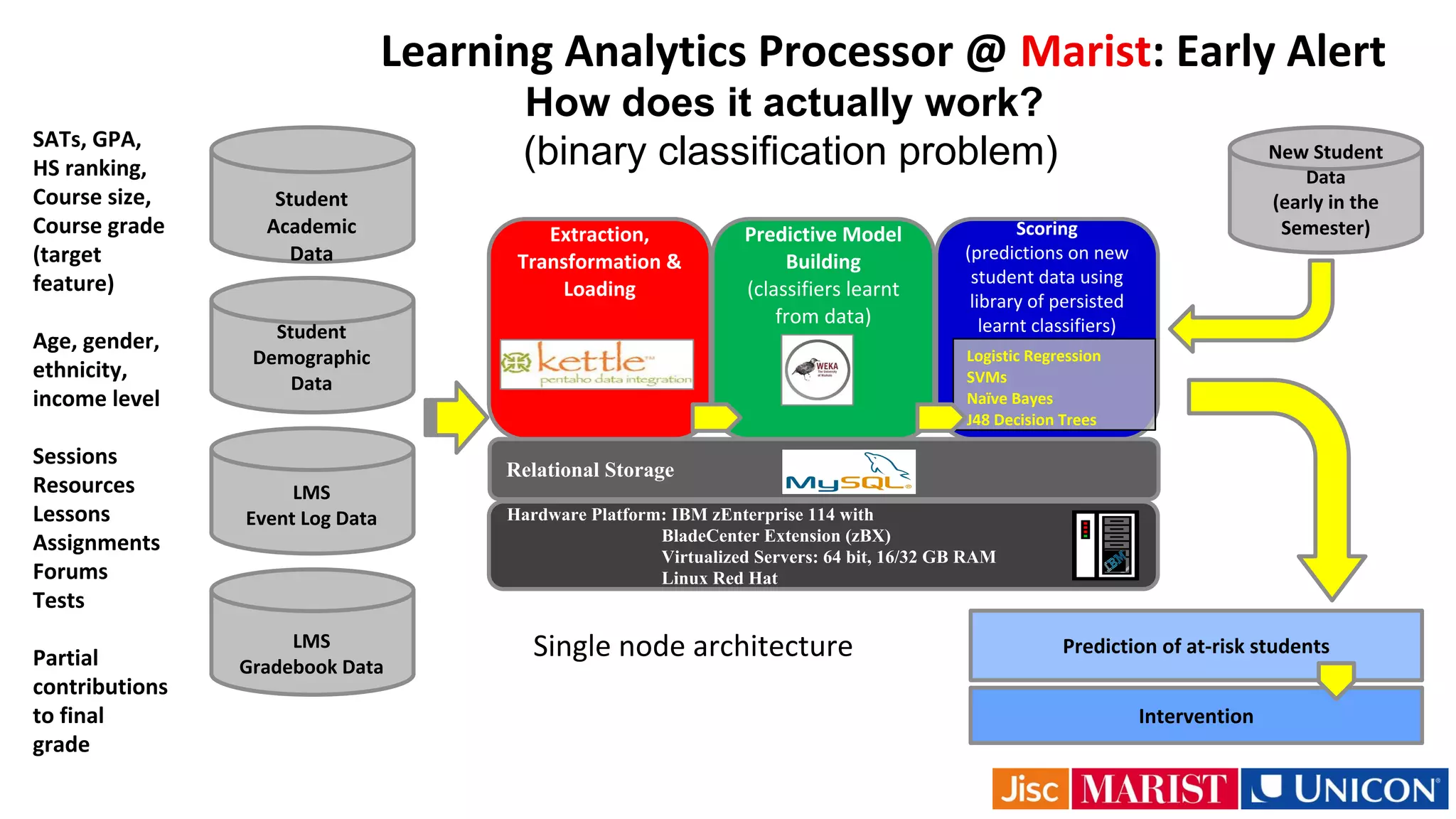

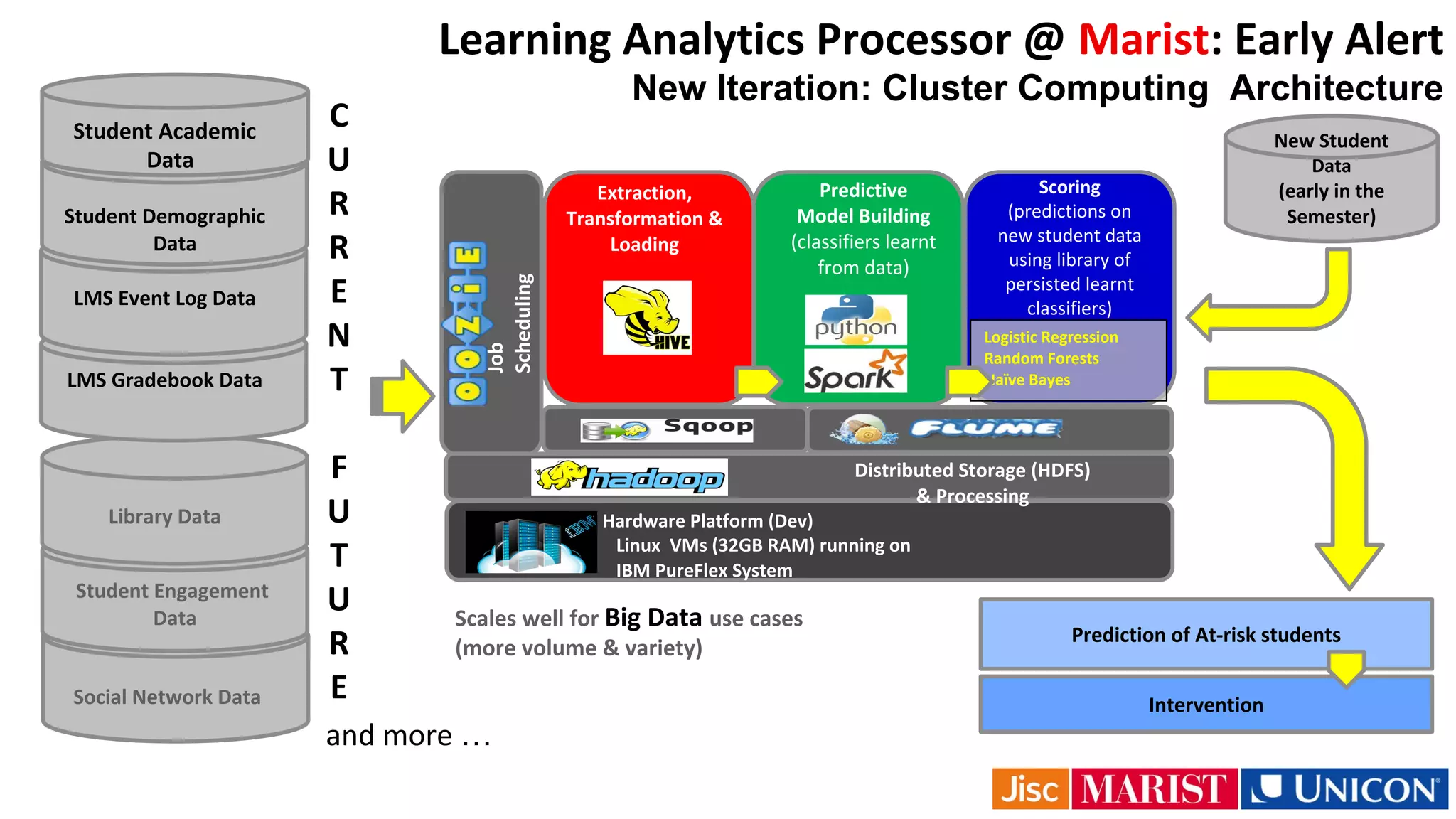

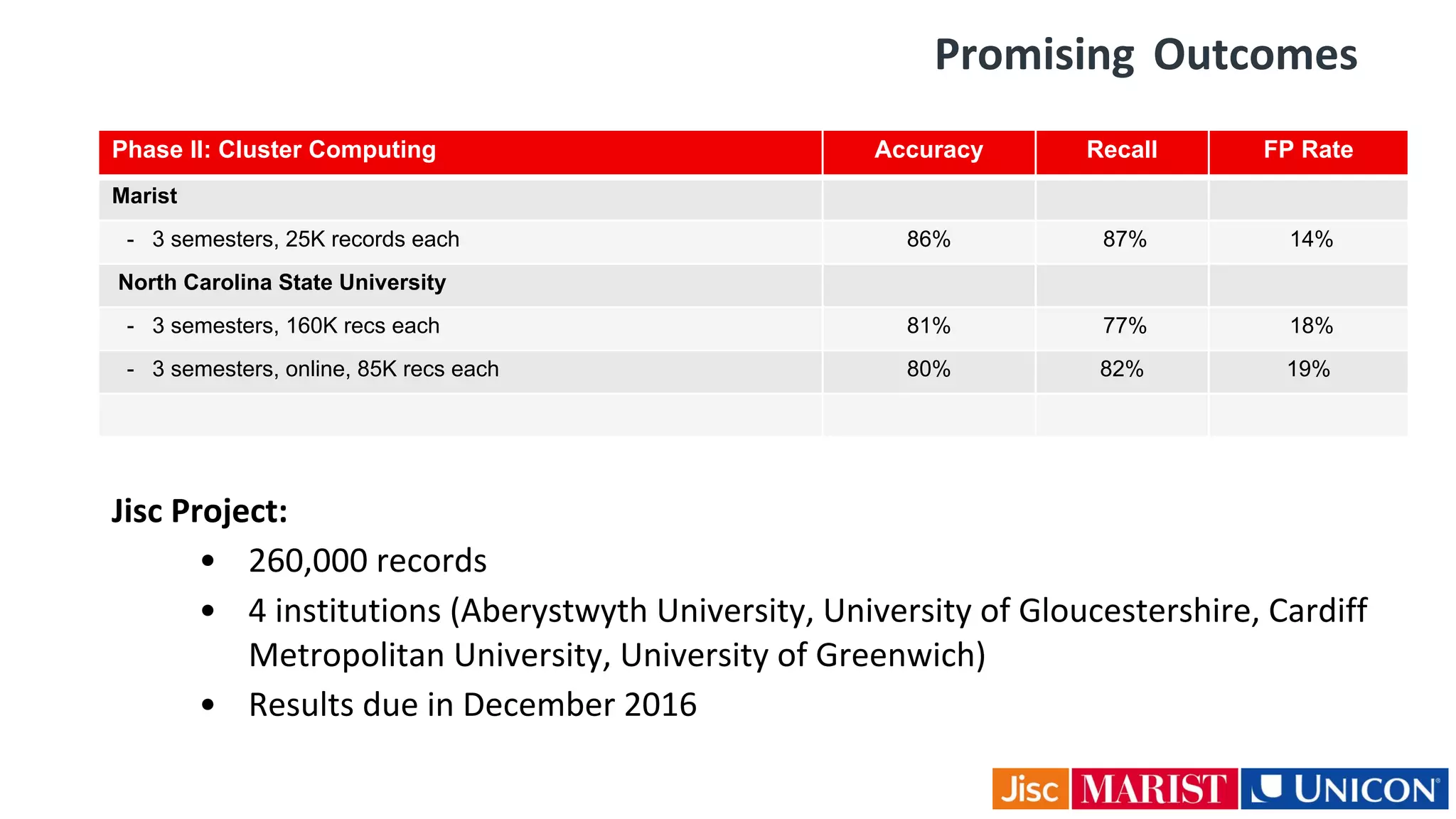

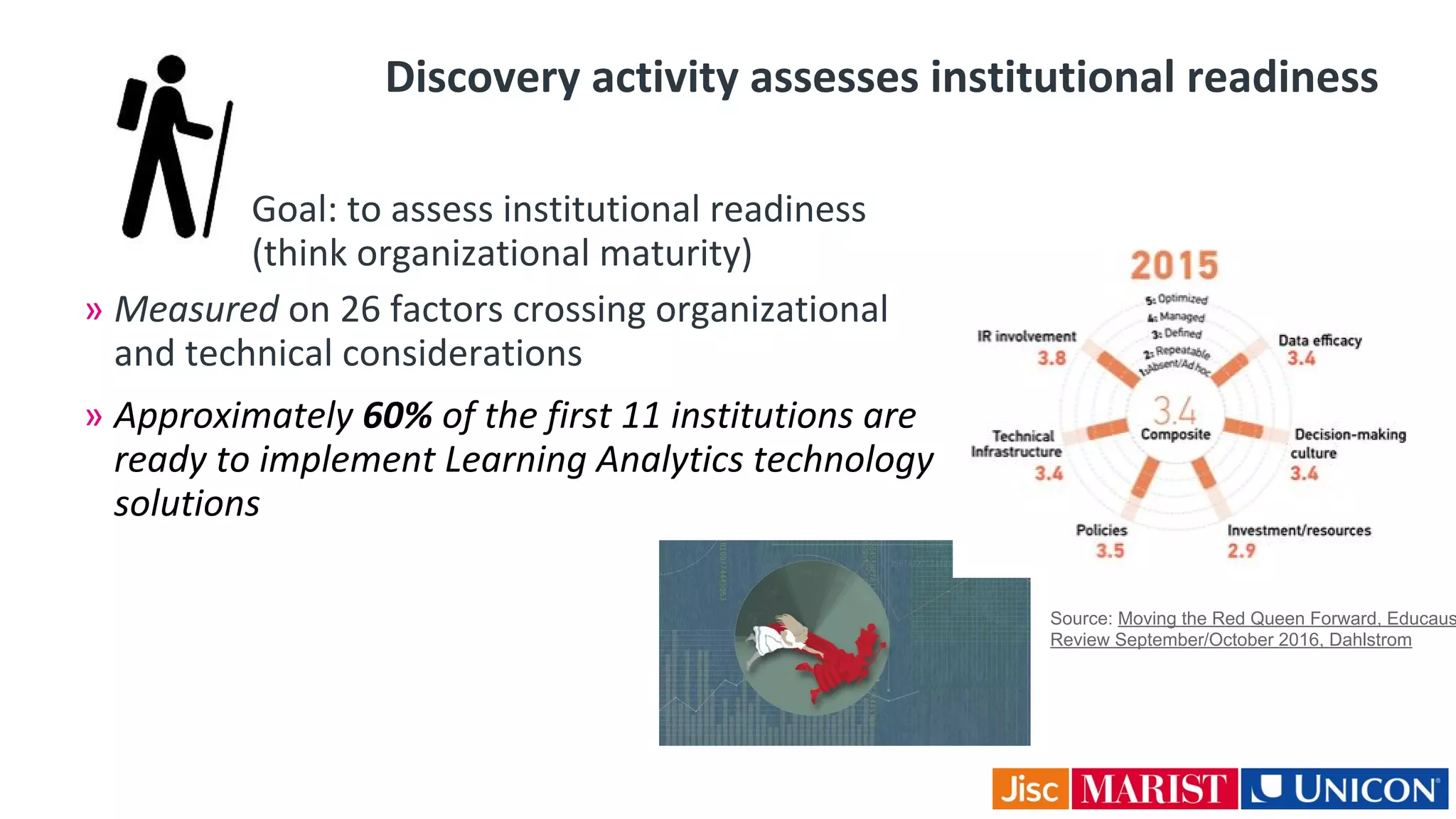

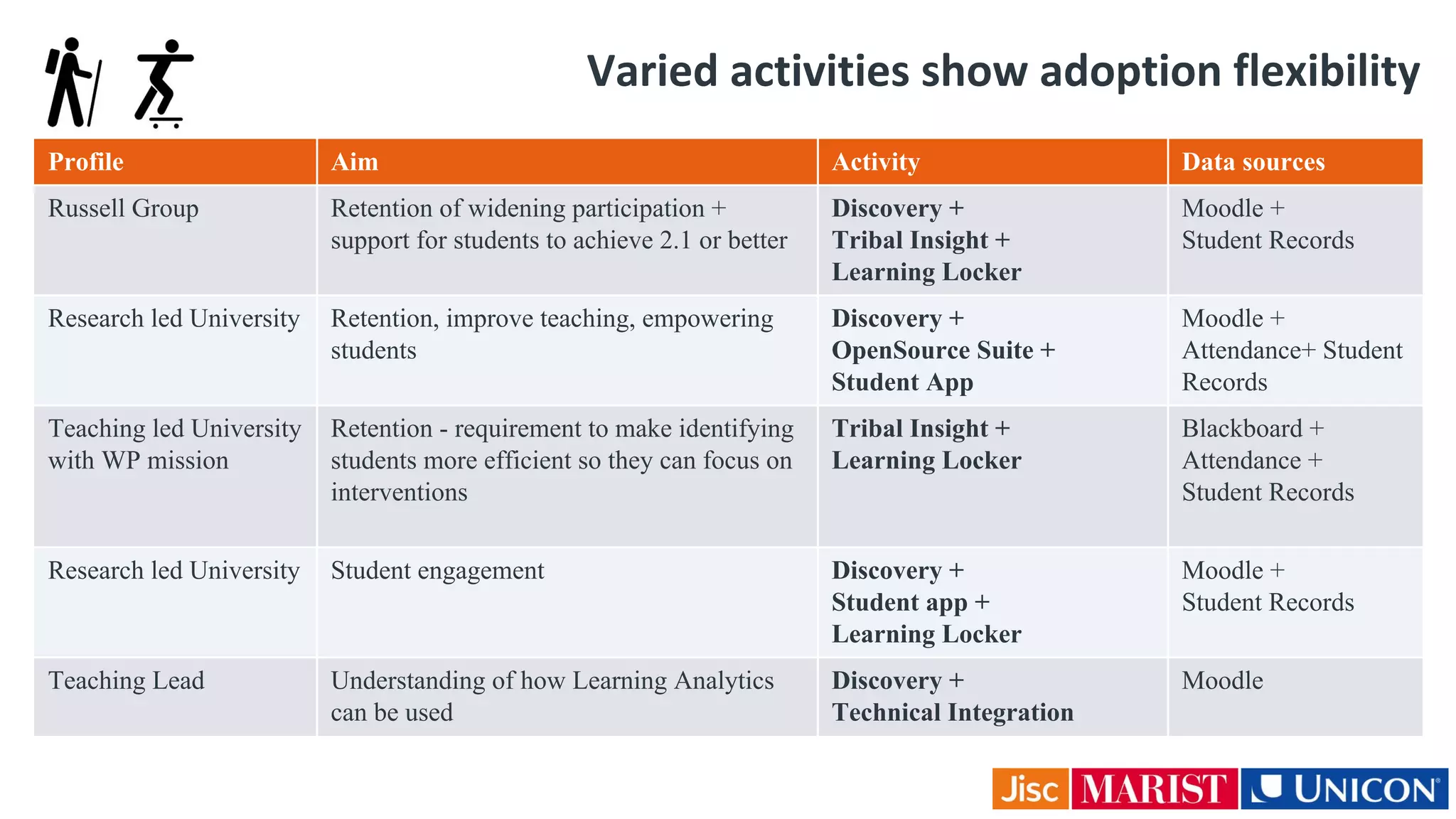

This document discusses deploying open learning analytics at national scale in the UK. It gives an overview of learning analytics, covering definitions and the stages of analytics, and takes a strategic view of national issues around retention, achievement, and teaching excellence. On the technical side, it describes Jisc's open architecture and predictive modeling framework. Implementation trends observed in the field emphasize change management, piloting solutions, attention to data quality, and keeping initial implementations simple.