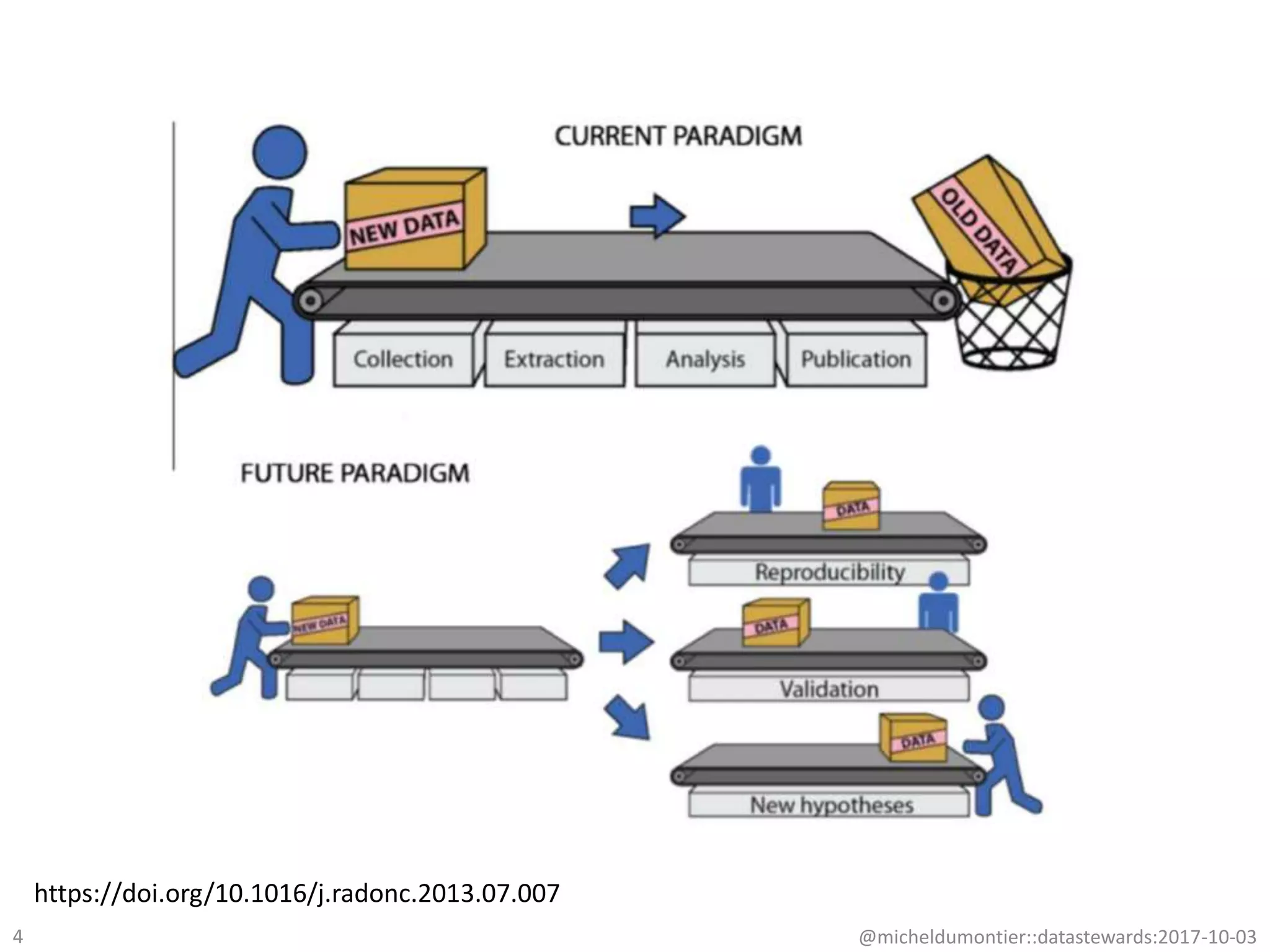

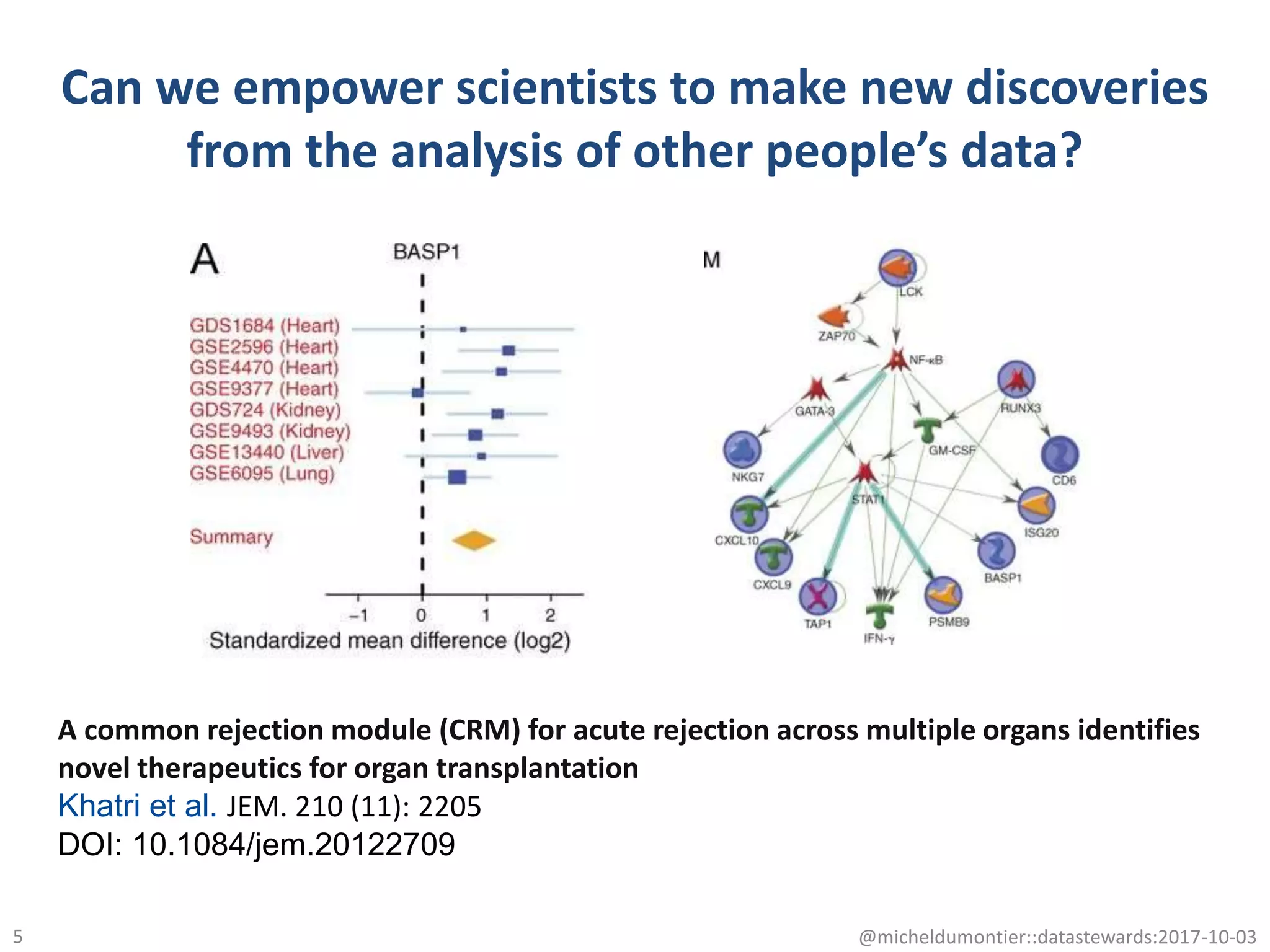

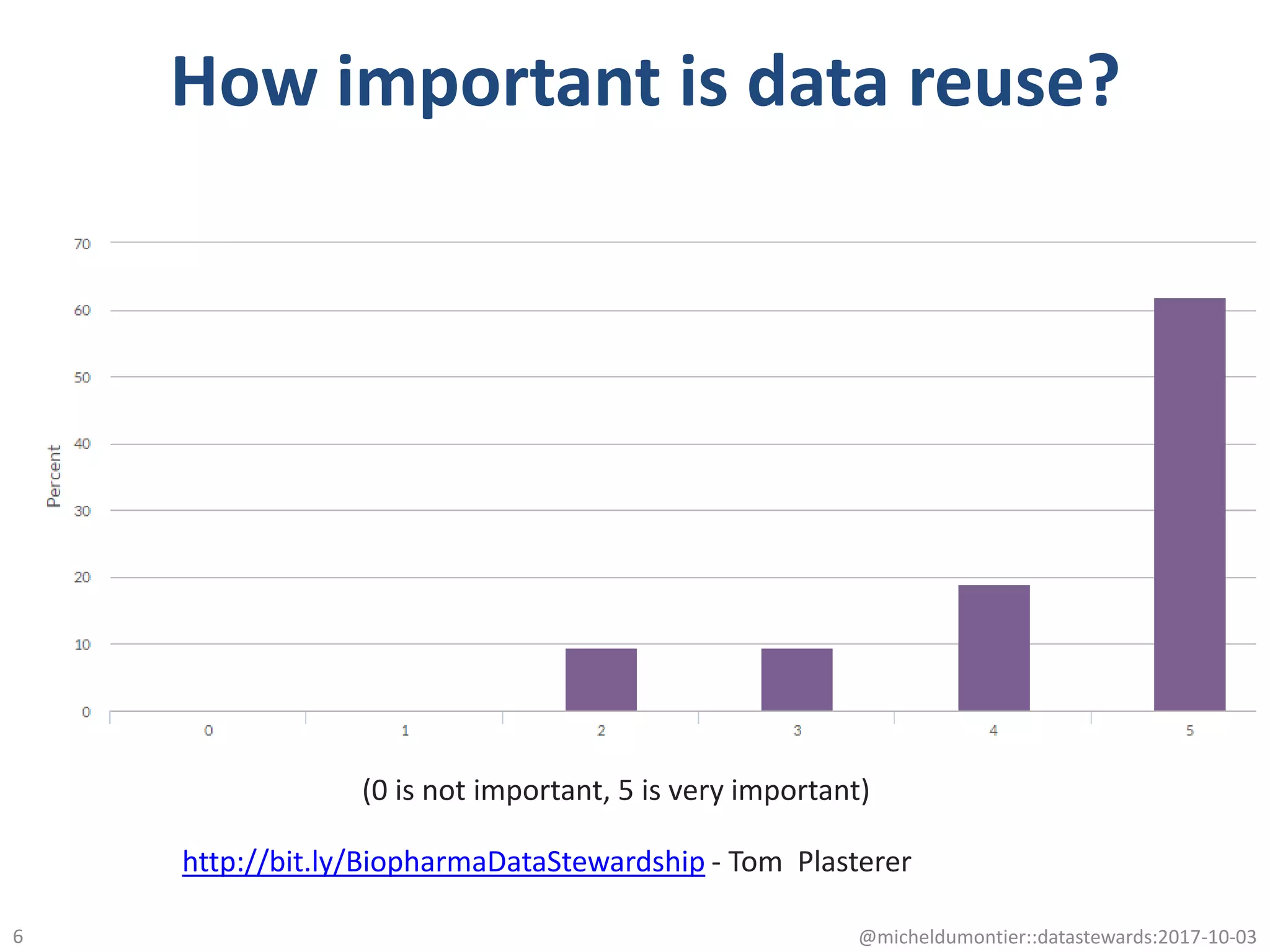

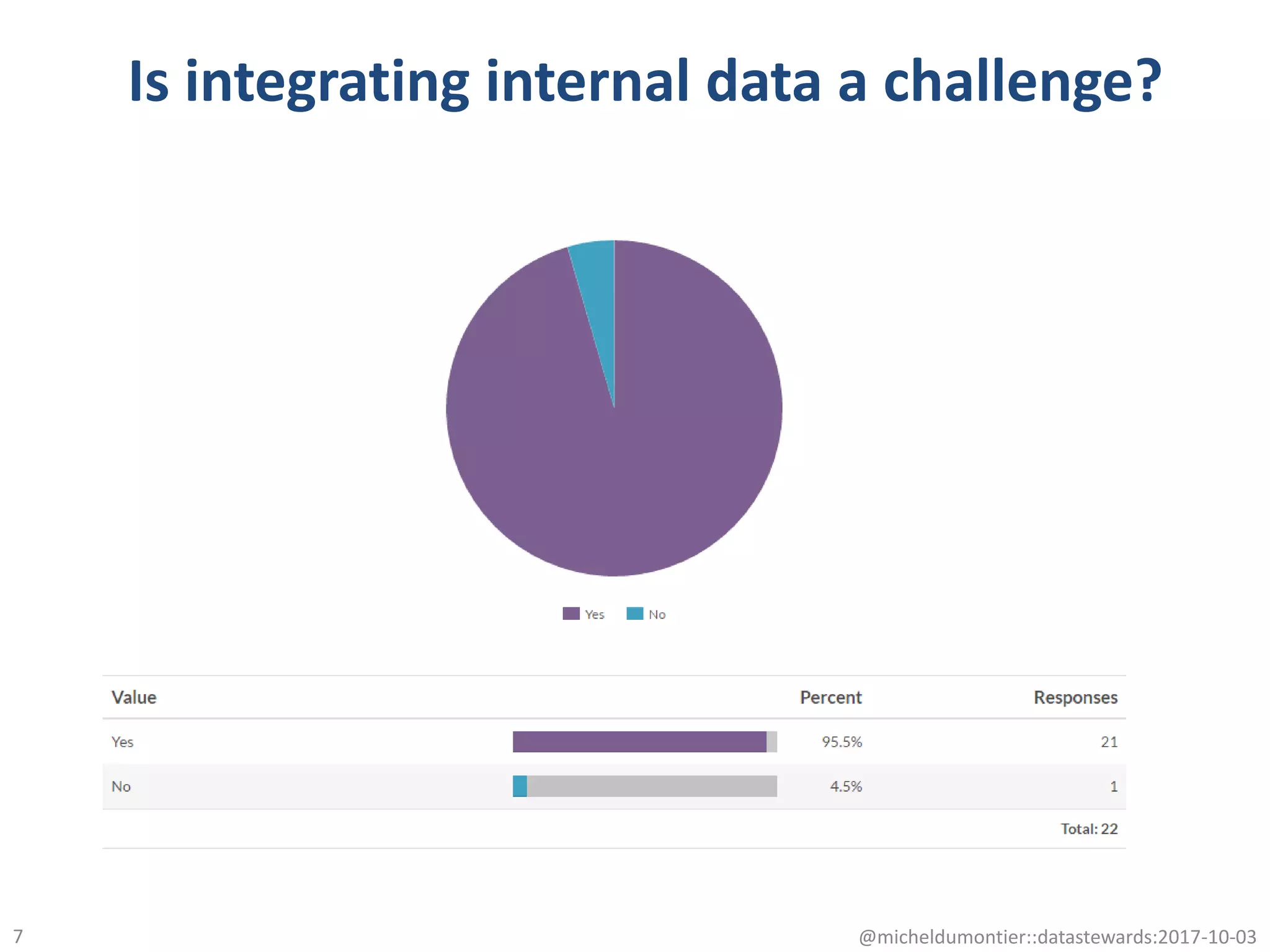

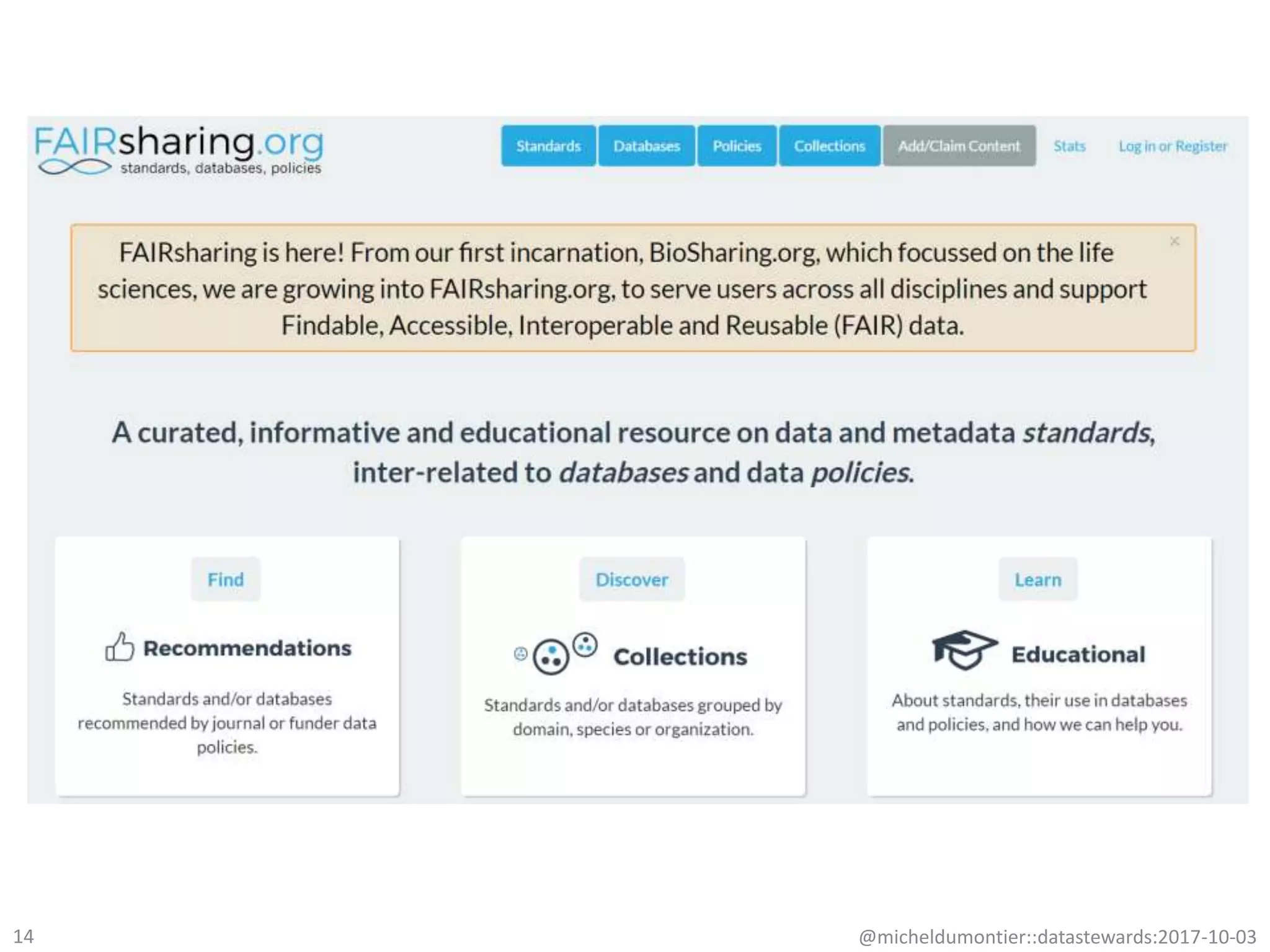

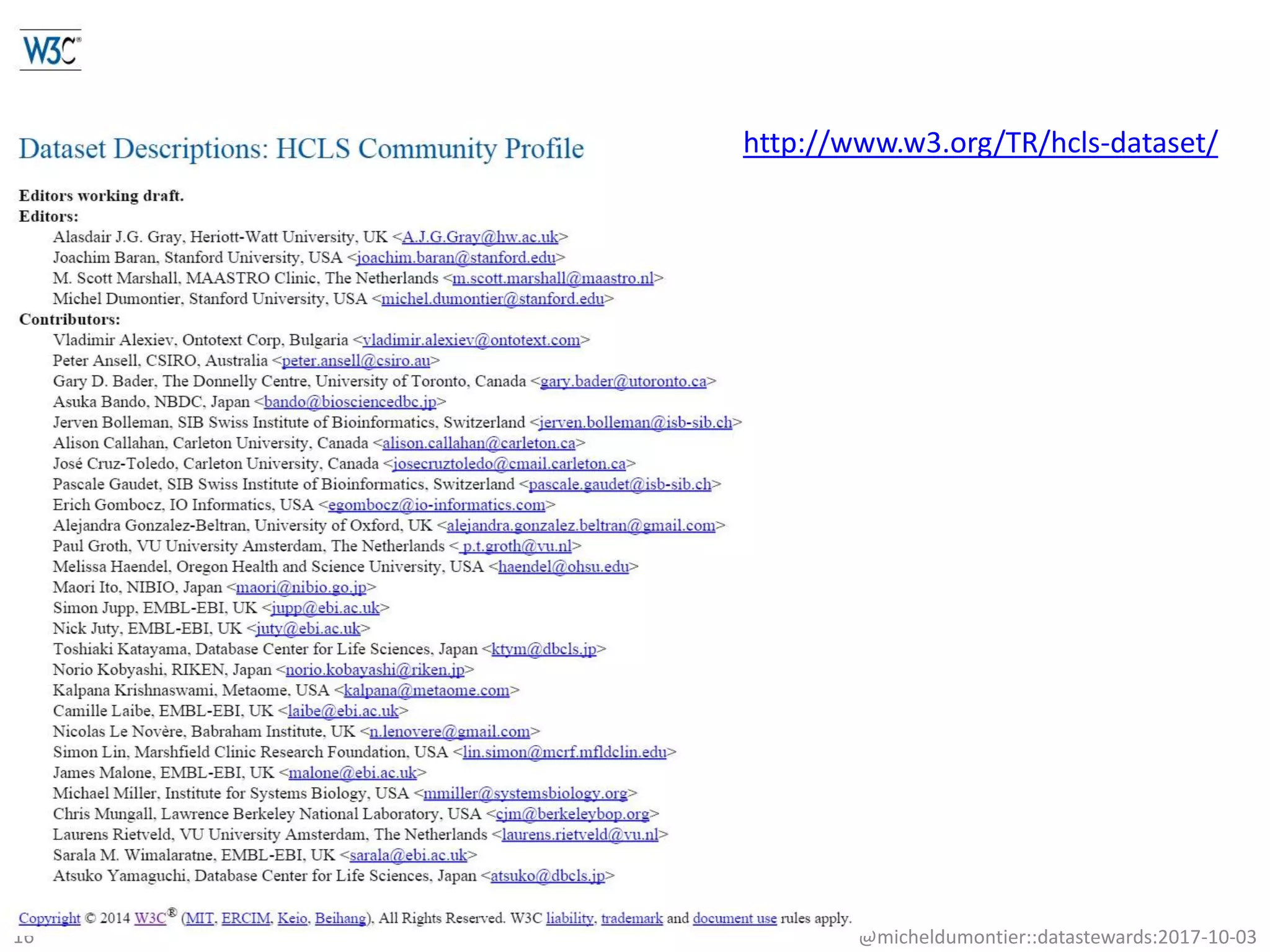

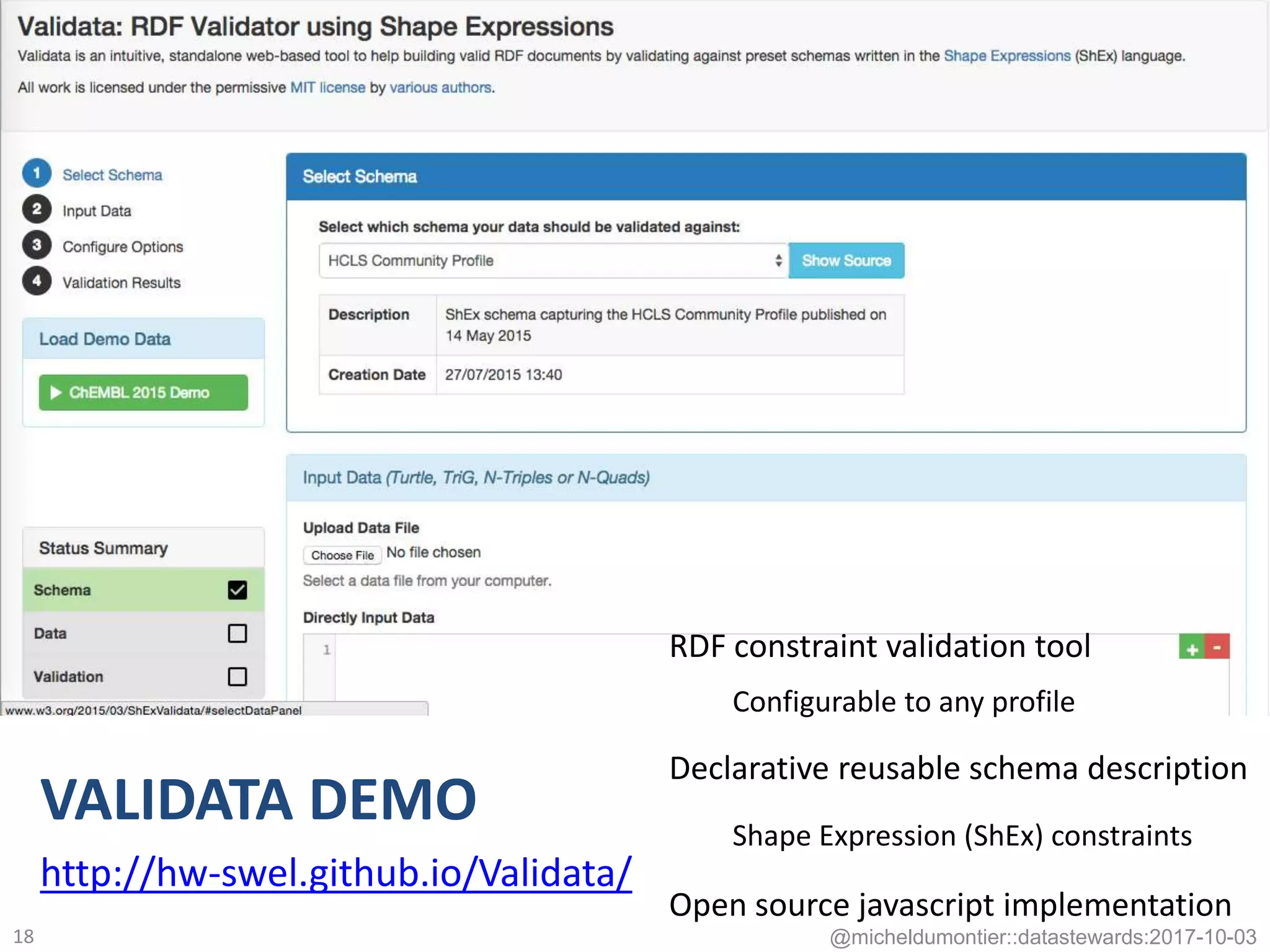

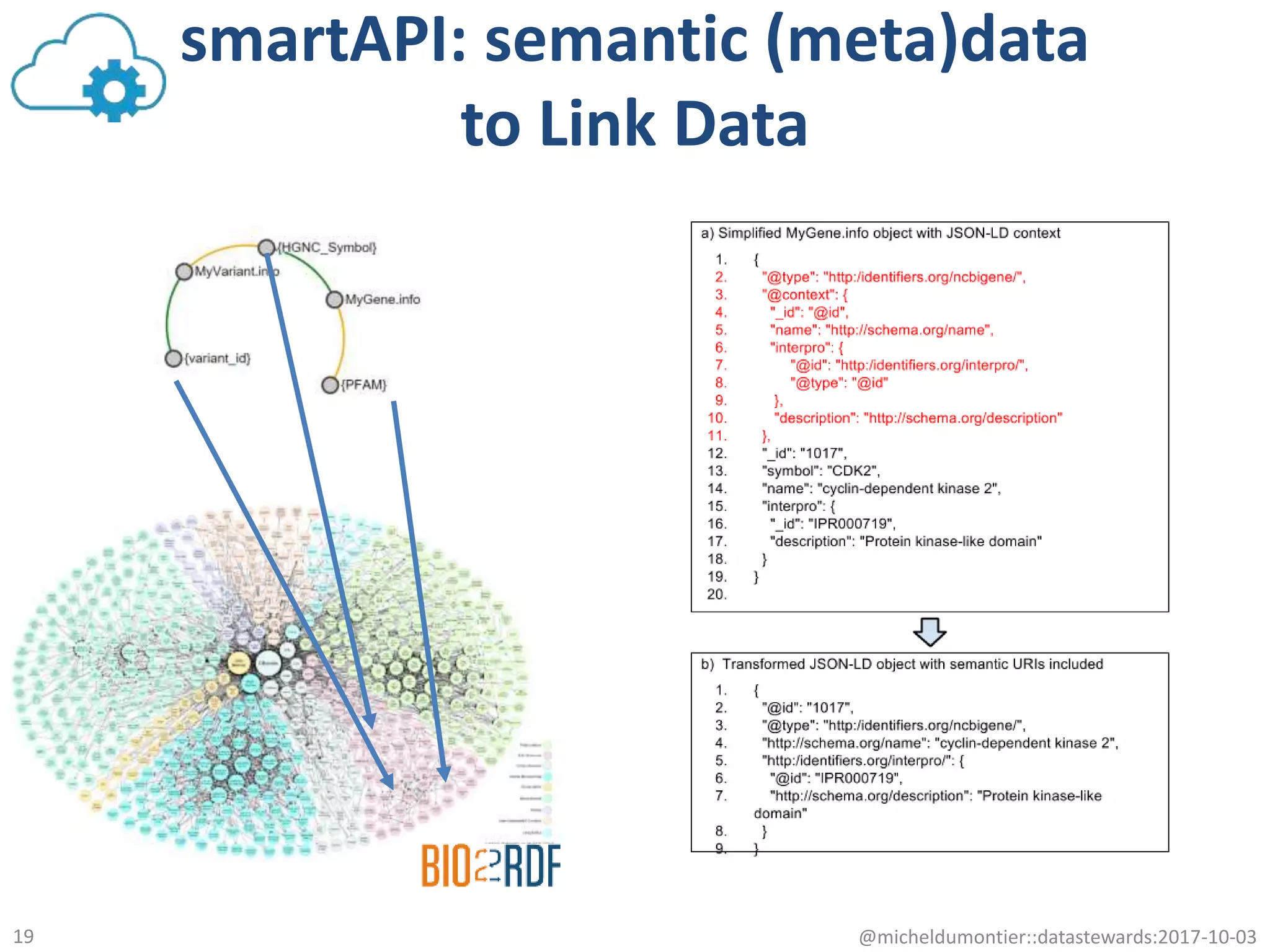

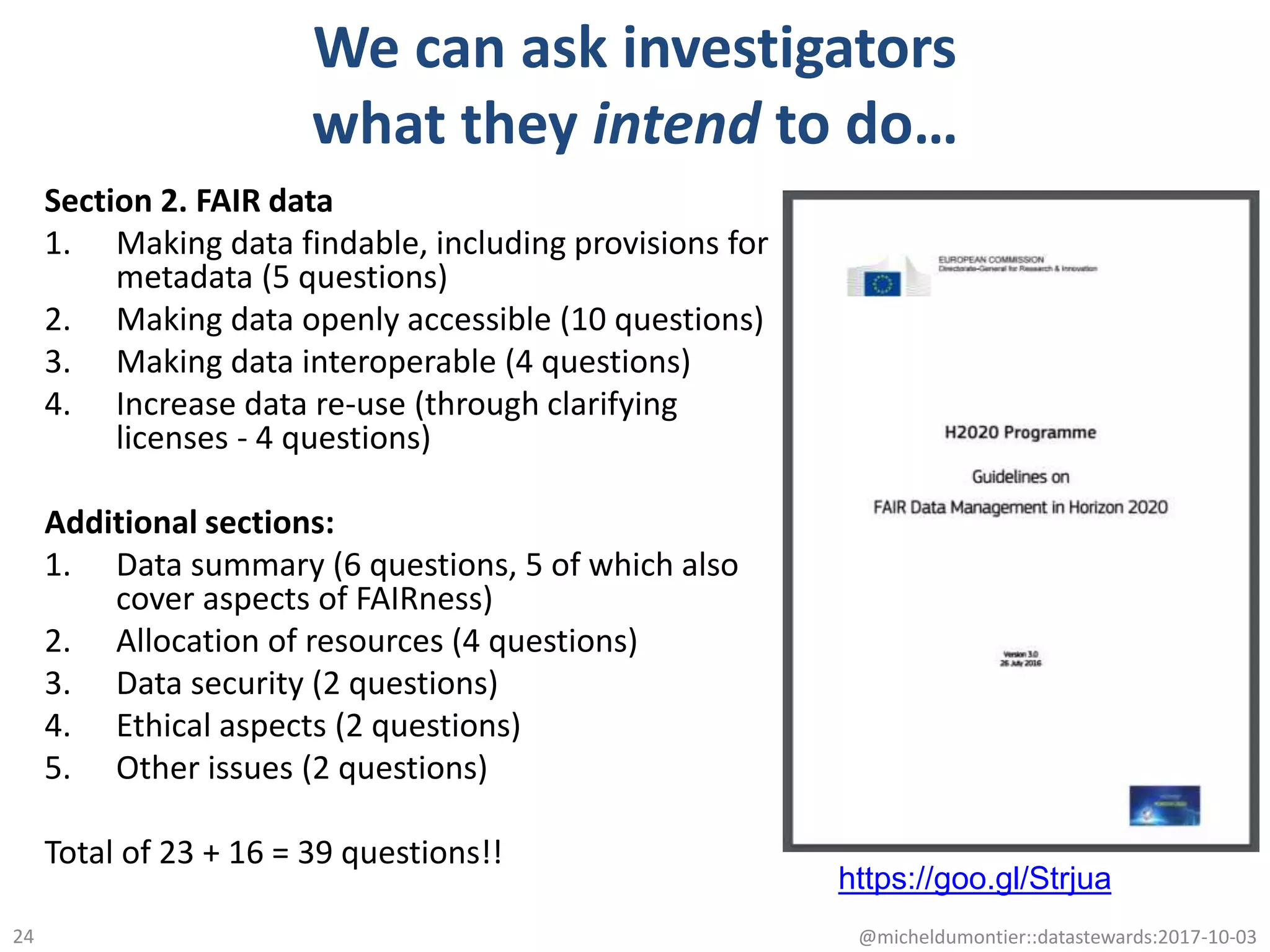

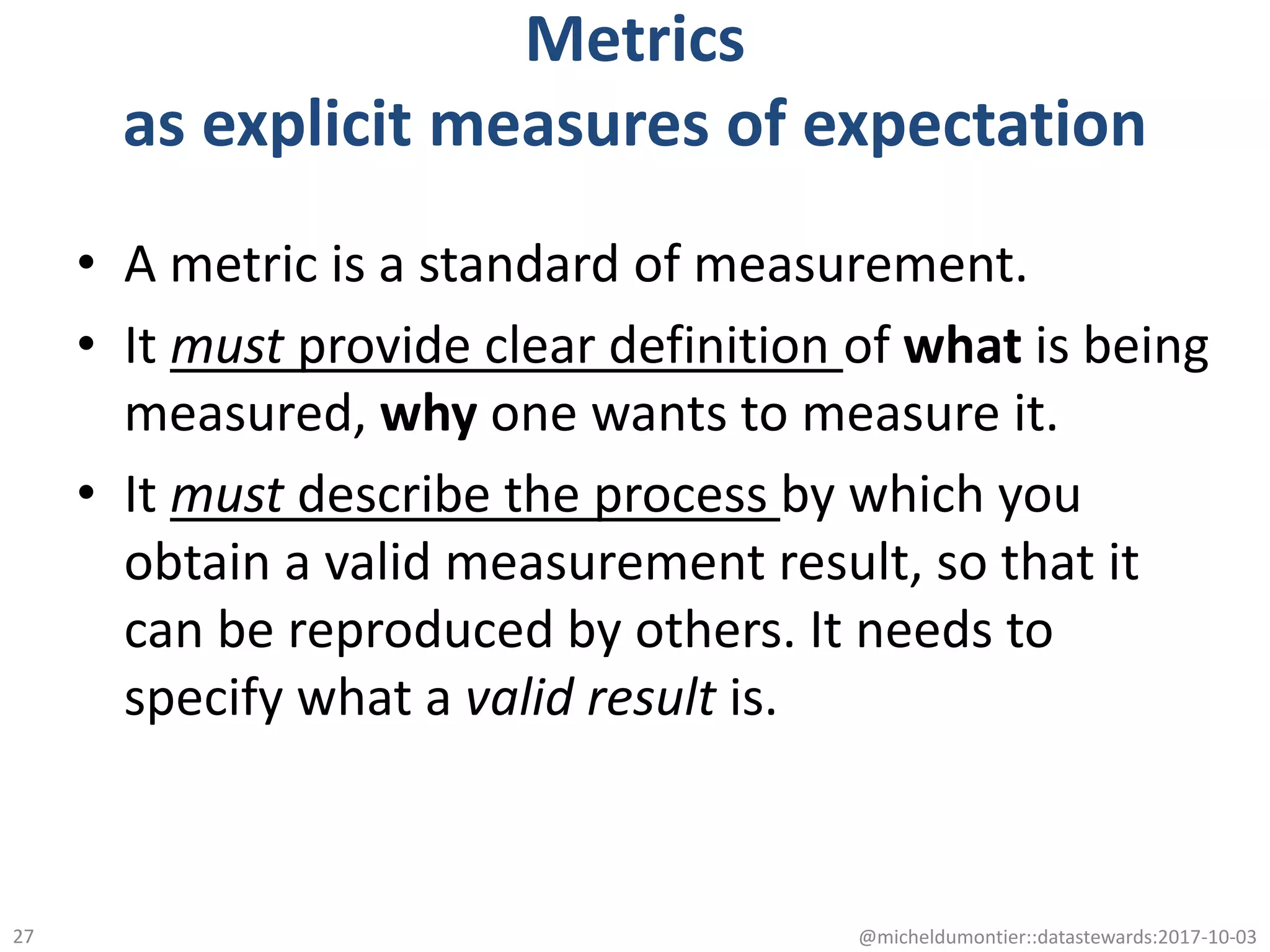

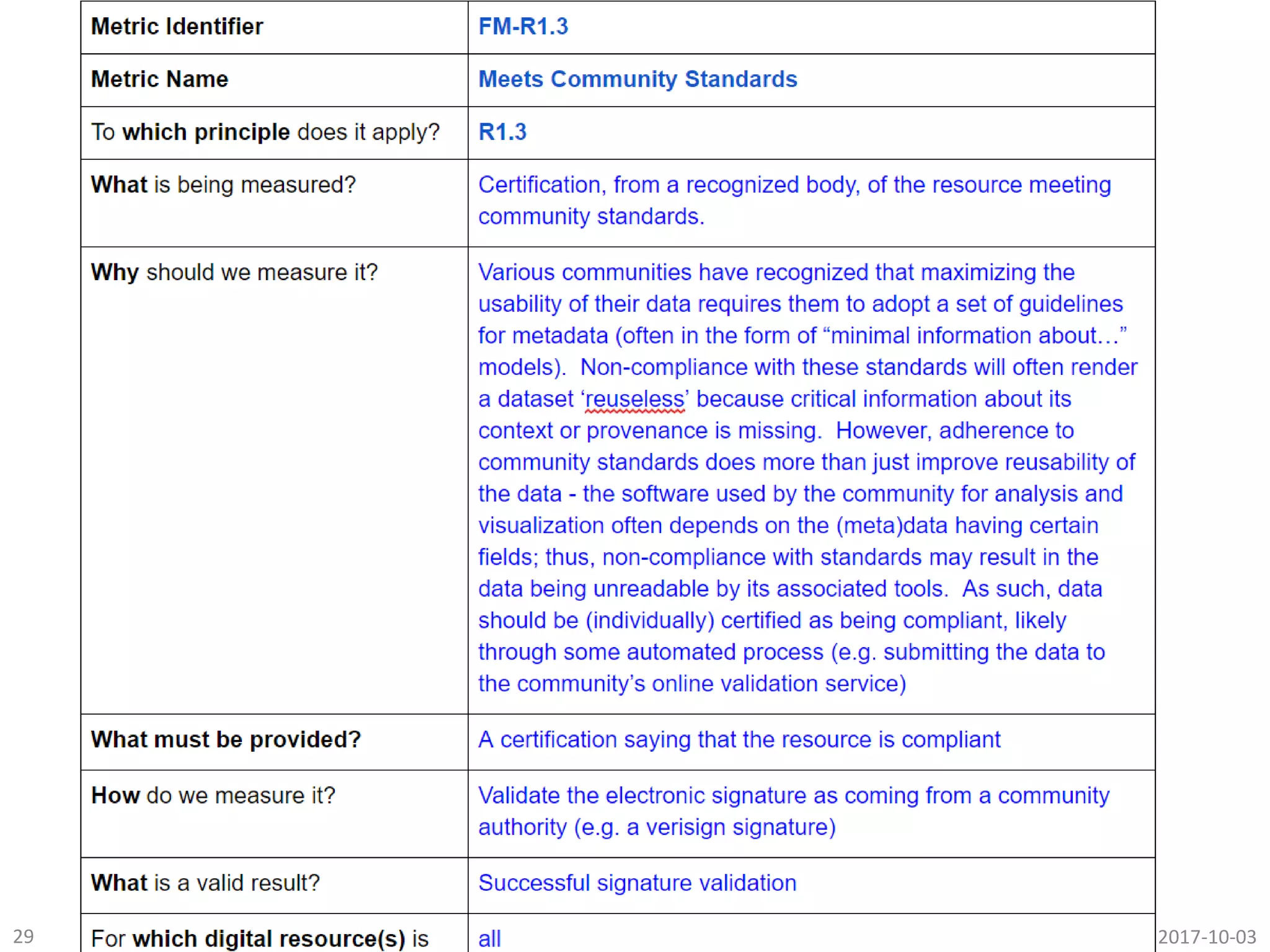

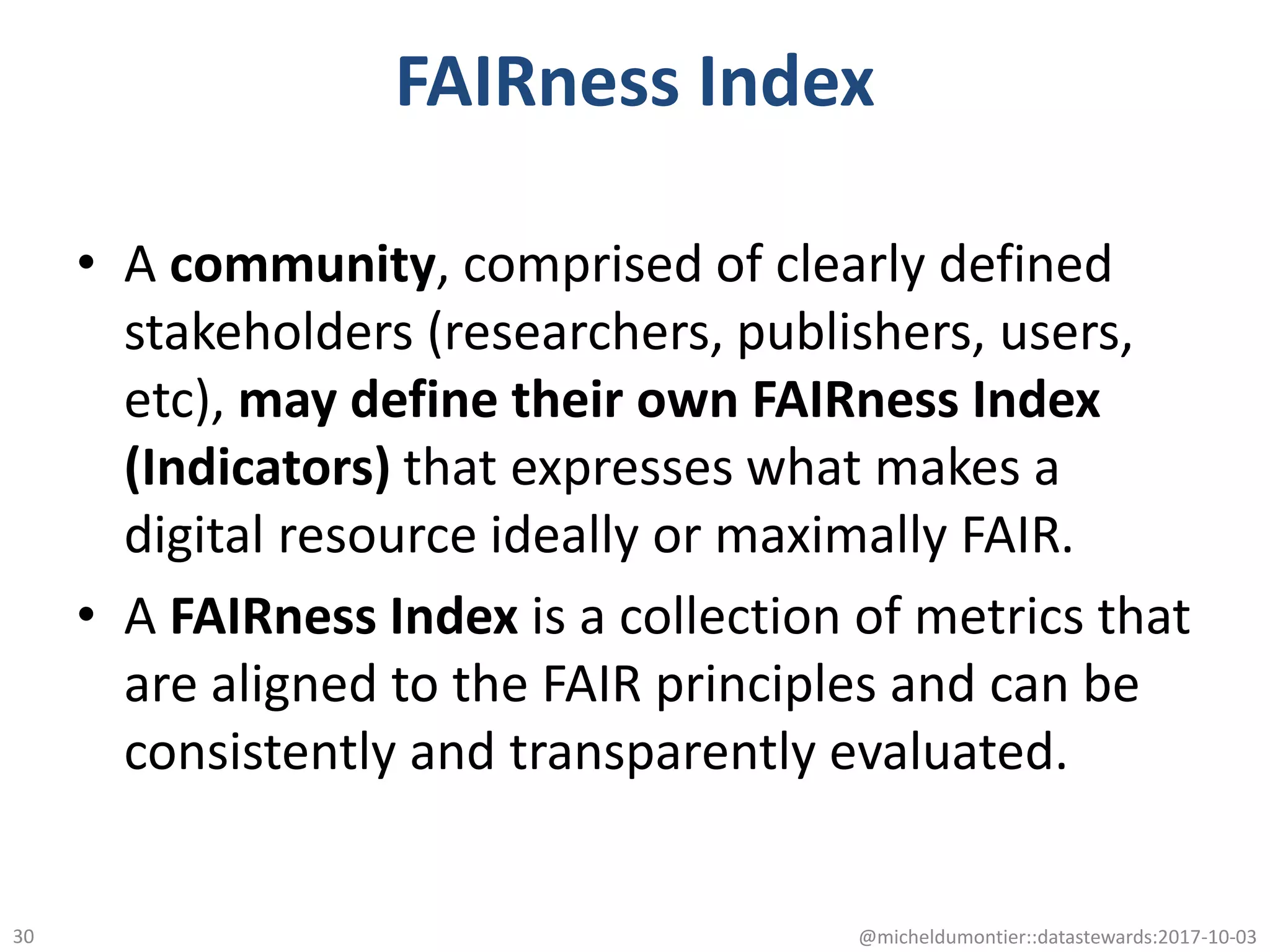

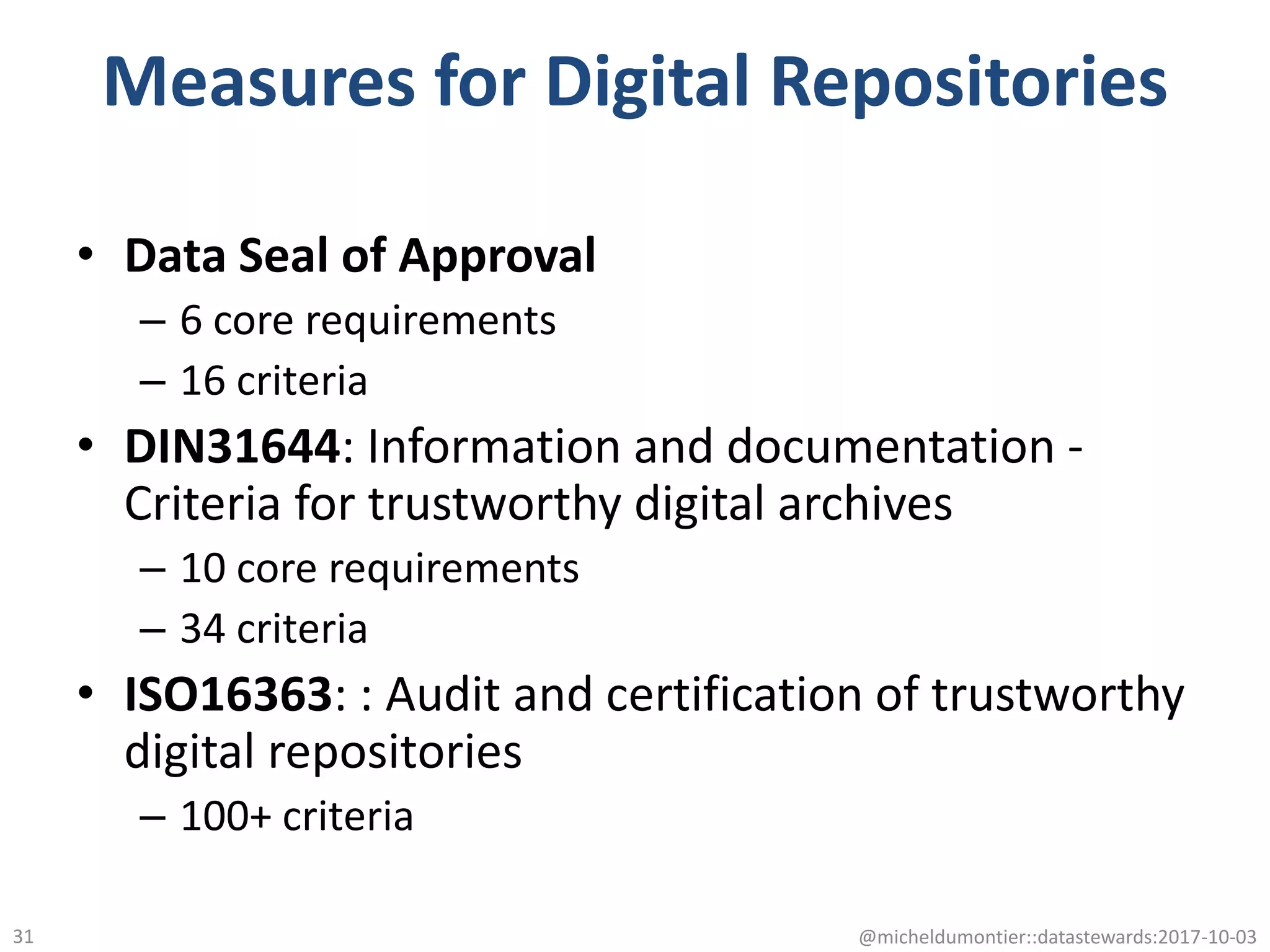

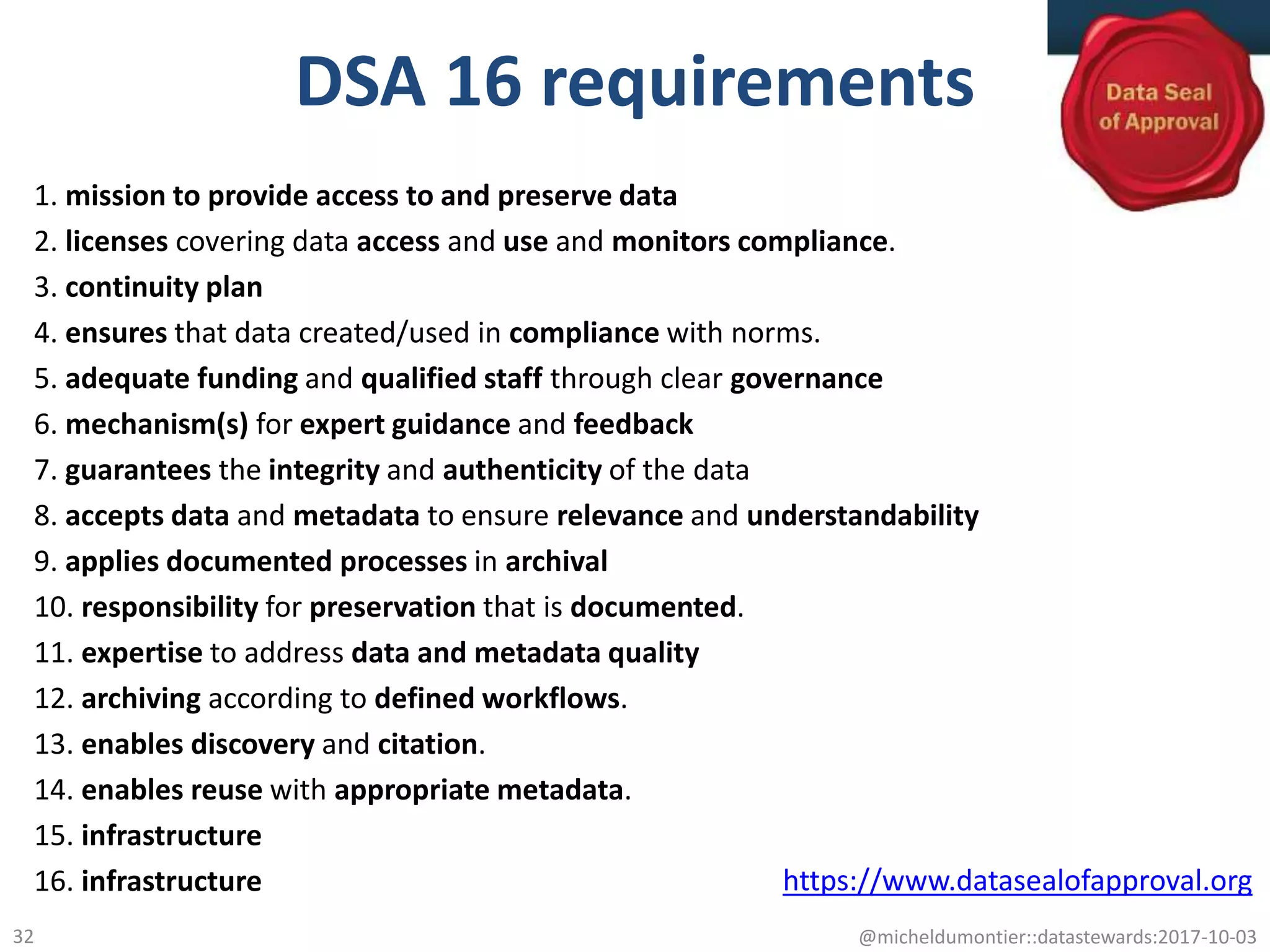

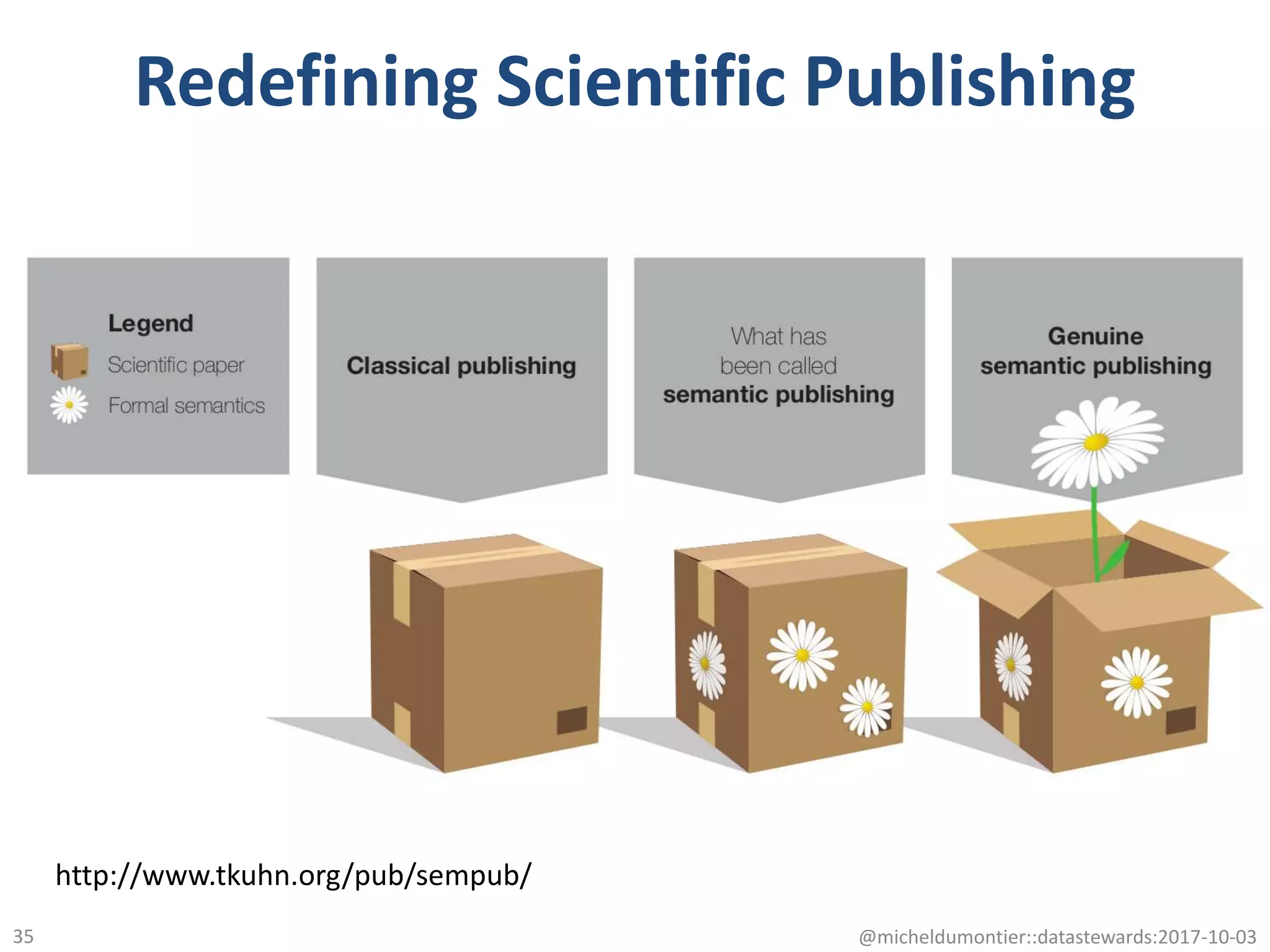

The document discusses the development and assessment of FAIR (Findable, Accessible, Interoperable, Reusable) digital resources, emphasizing the importance of reproducibility and data reuse in scientific research. It outlines a framework for evaluating the FAIRness of digital resources against community-defined metrics and principles. Key elements include building infrastructure for data management, promoting discoverability, and ensuring that data are interoperable and reusable.