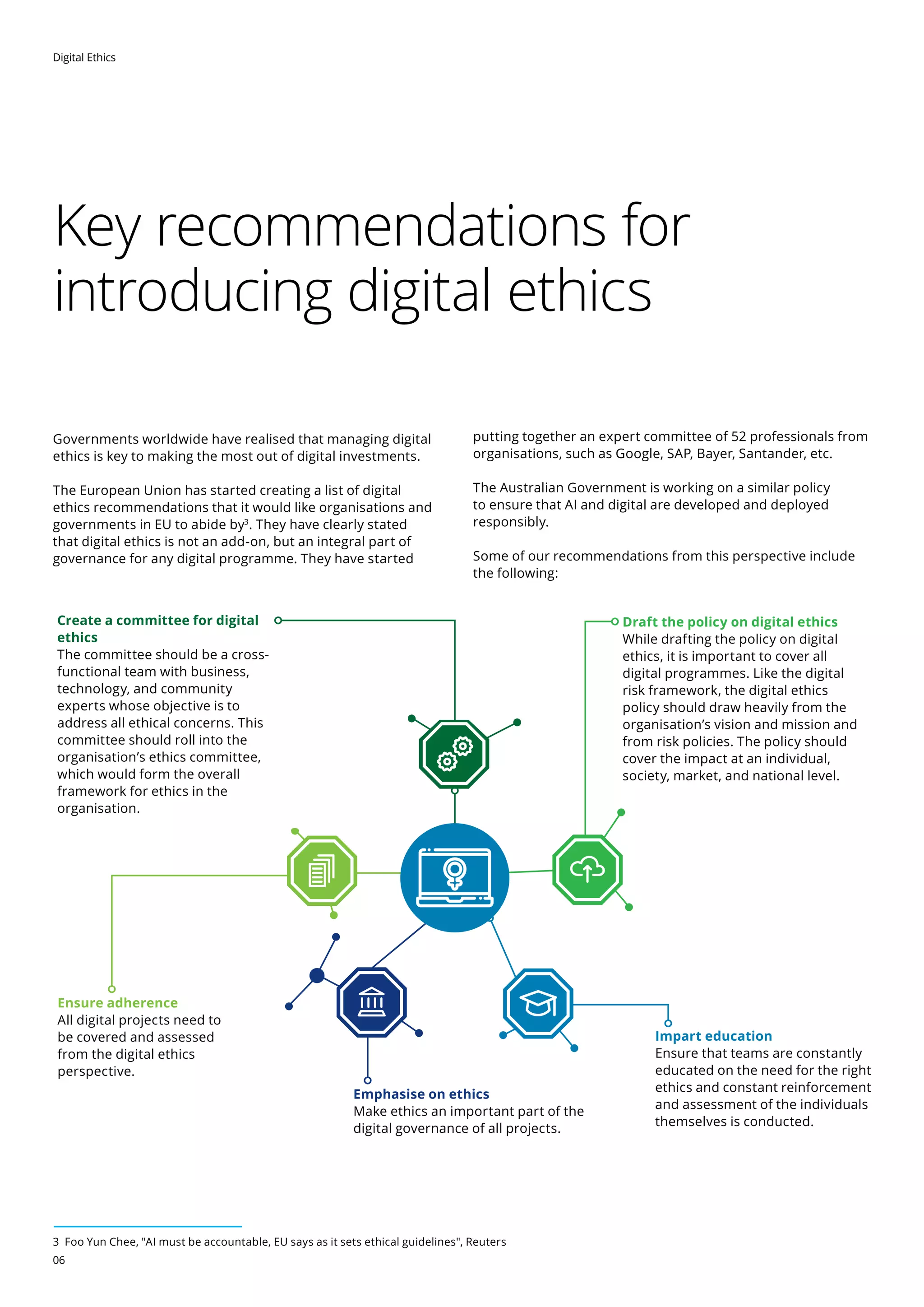

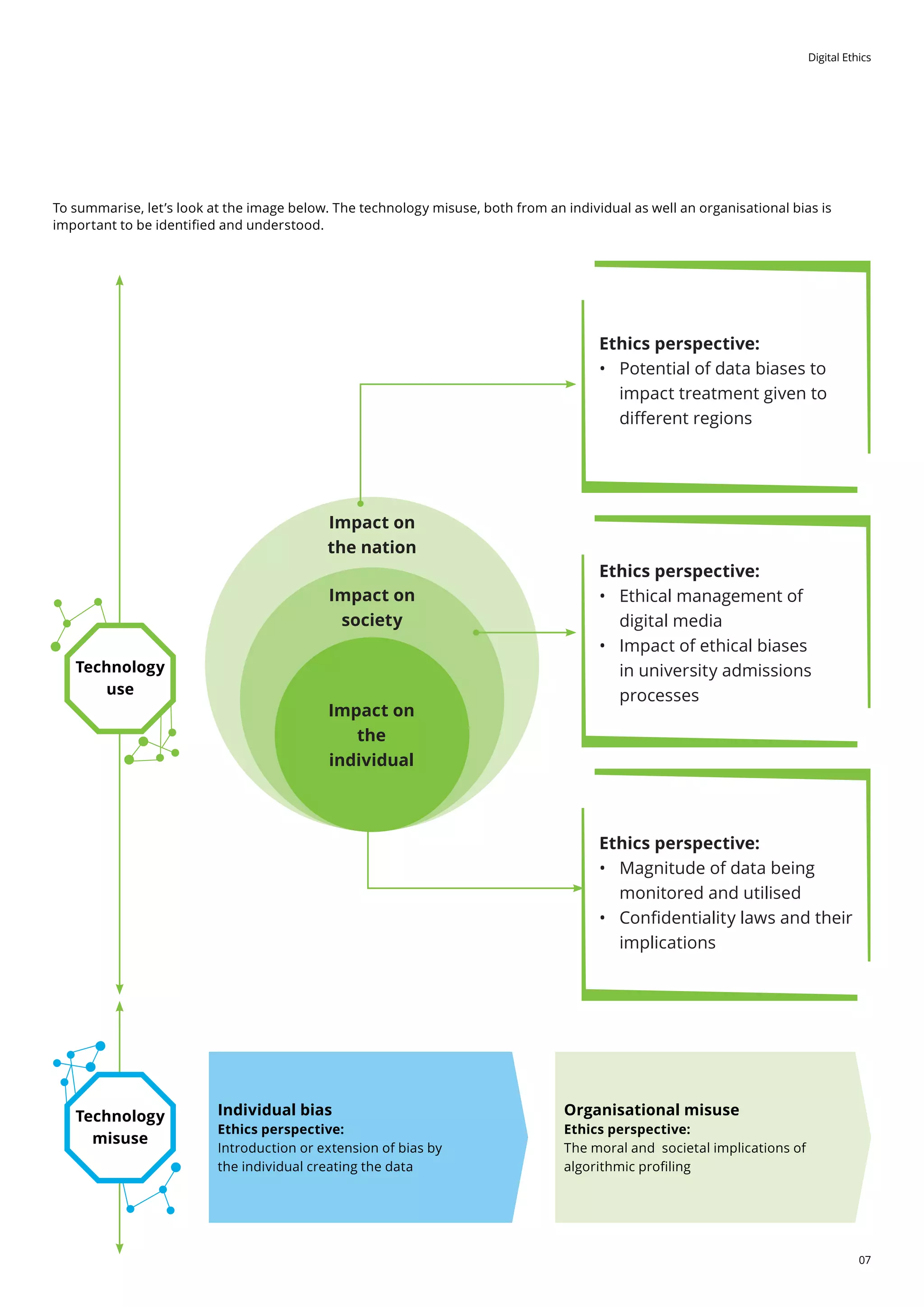

The document discusses the importance of digital ethics as organizations navigate the ethical implications of their use of data and technology, particularly the need to prevent bias in automated decision-making. It highlights the need for institutions to establish frameworks and committees that oversee ethical practices and address potential biases introduced by historical data. It also outlines key recommendations for introducing digital ethics, including creating dedicated committees, drafting comprehensive policies, ensuring adherence to those policies, and providing continuous education on ethical issues.