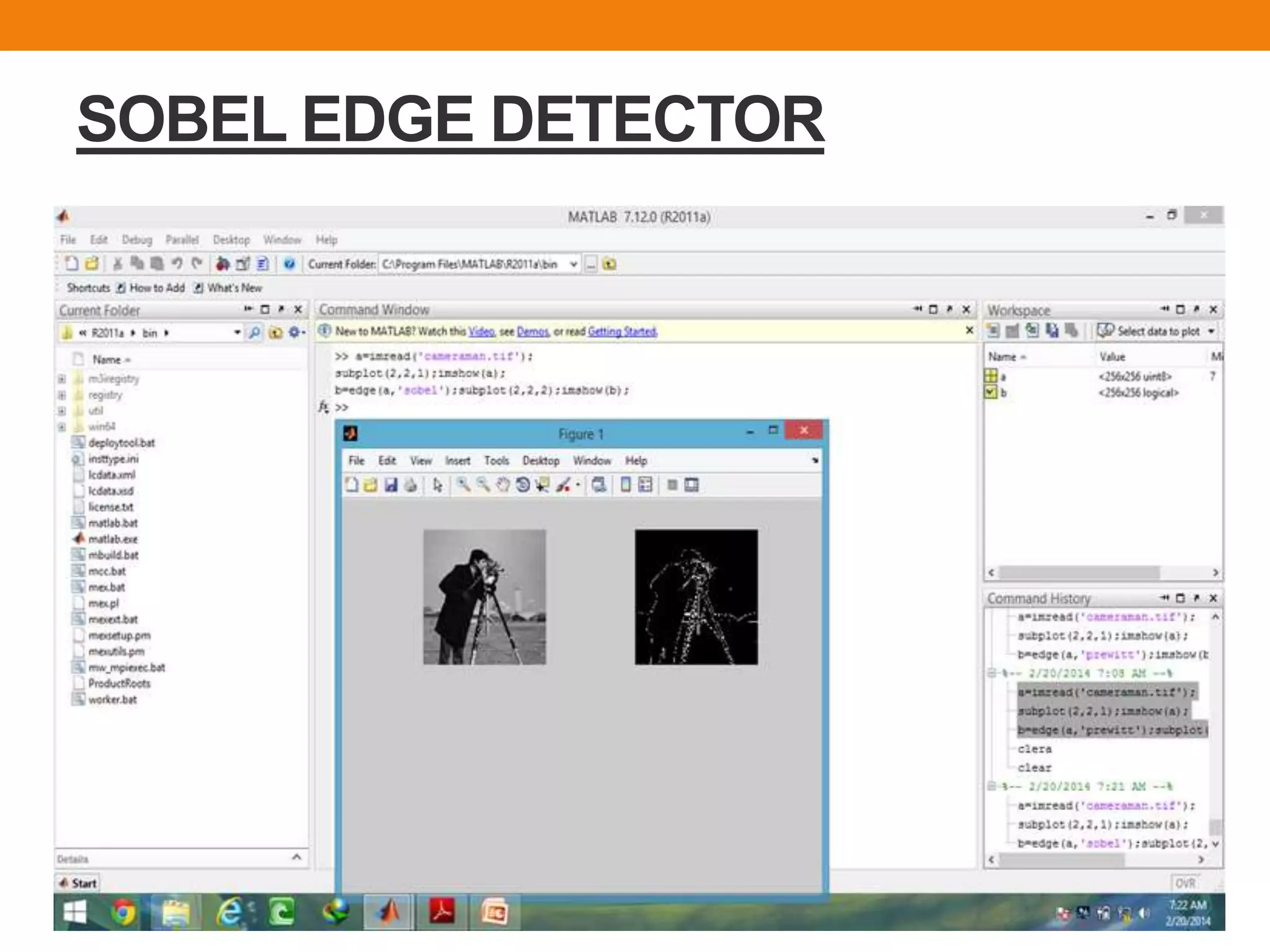

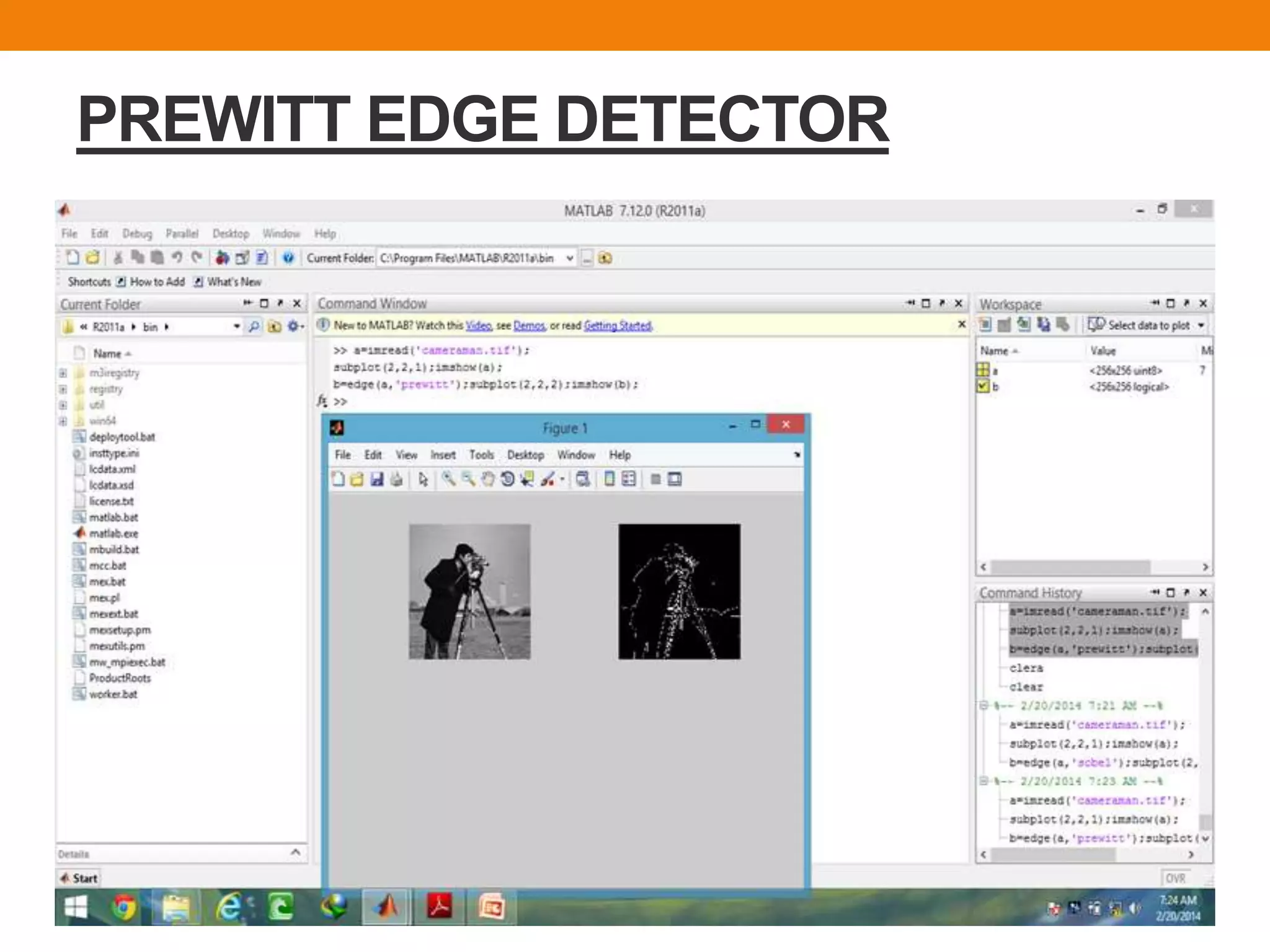

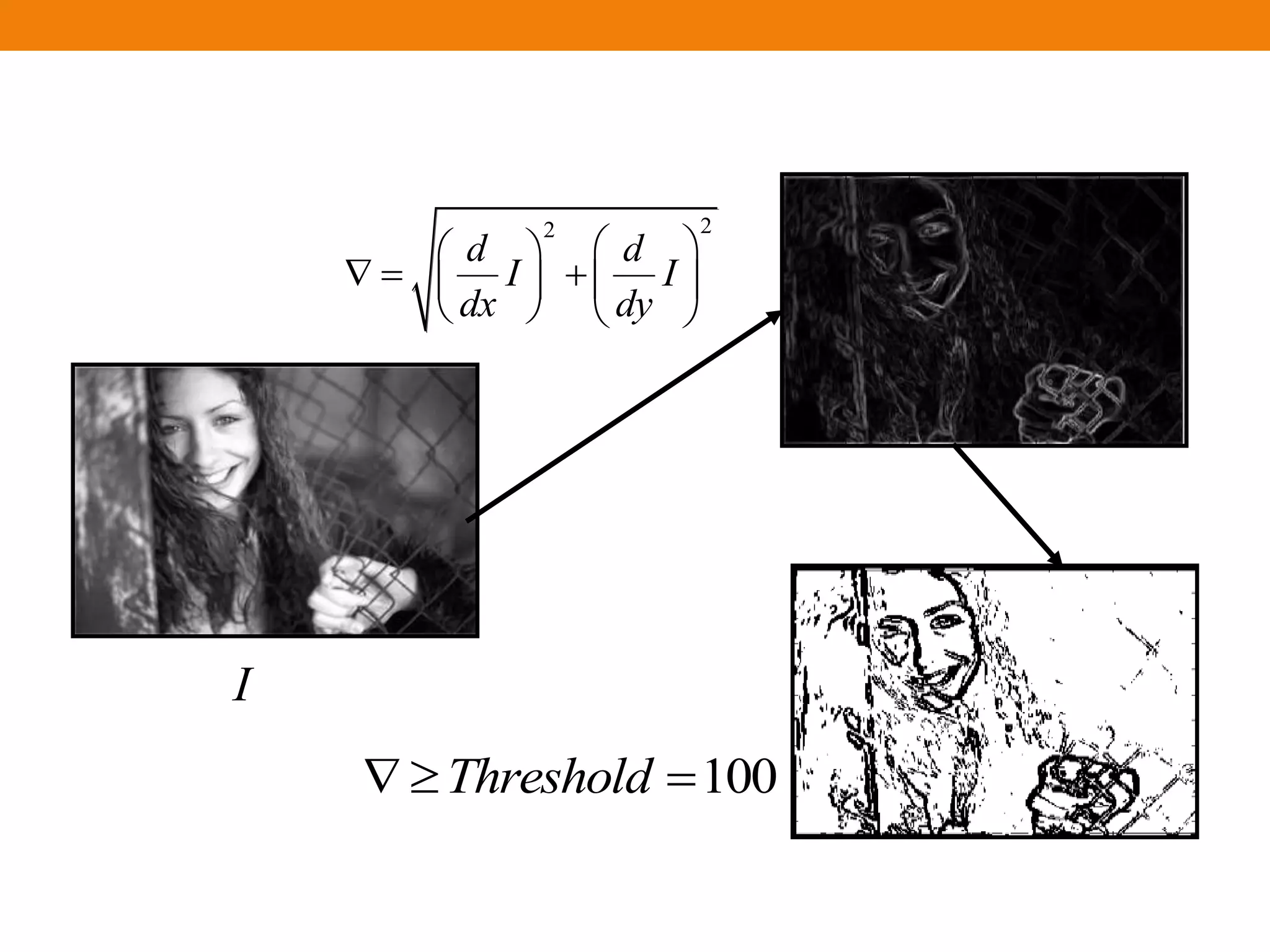

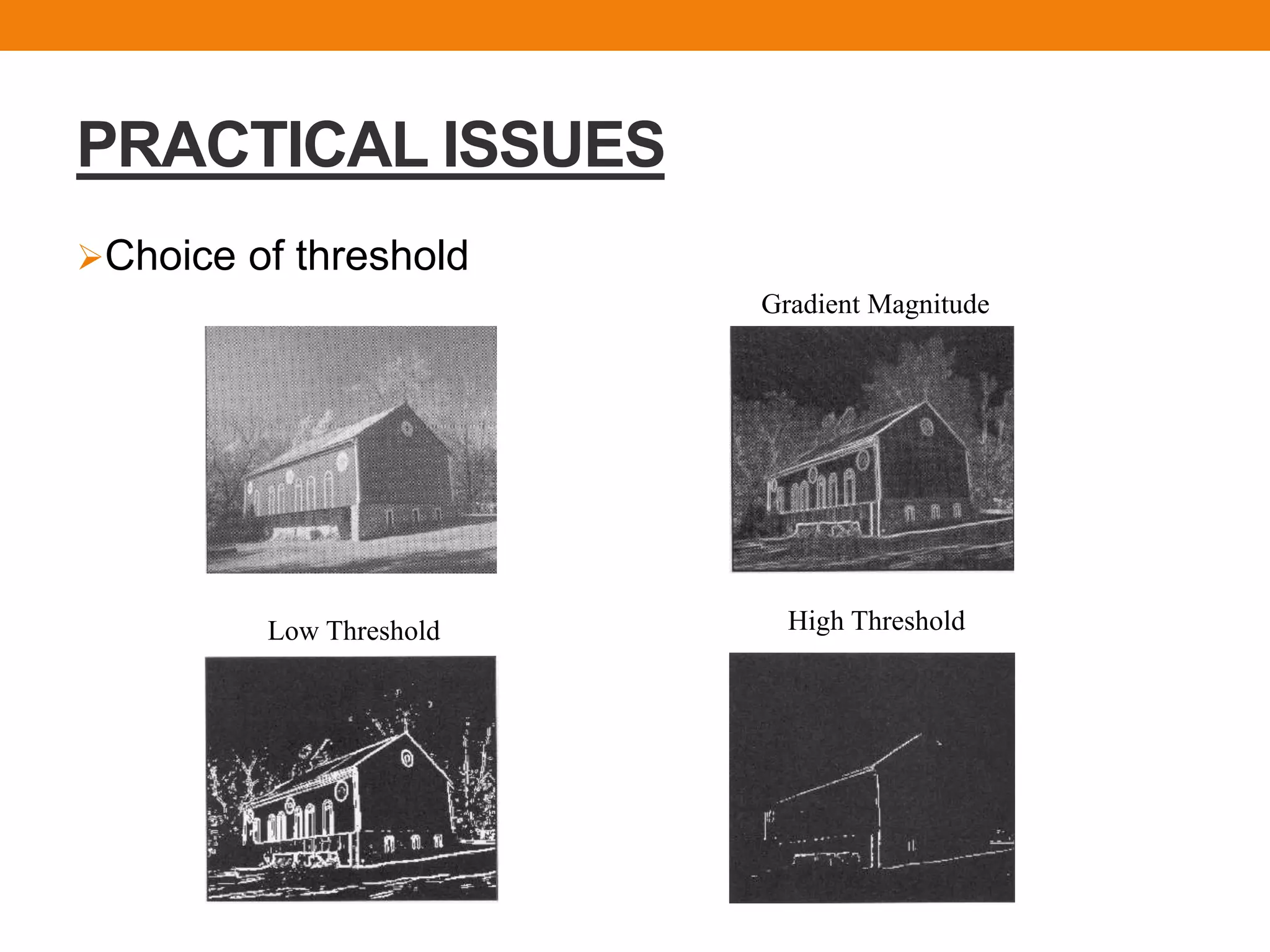

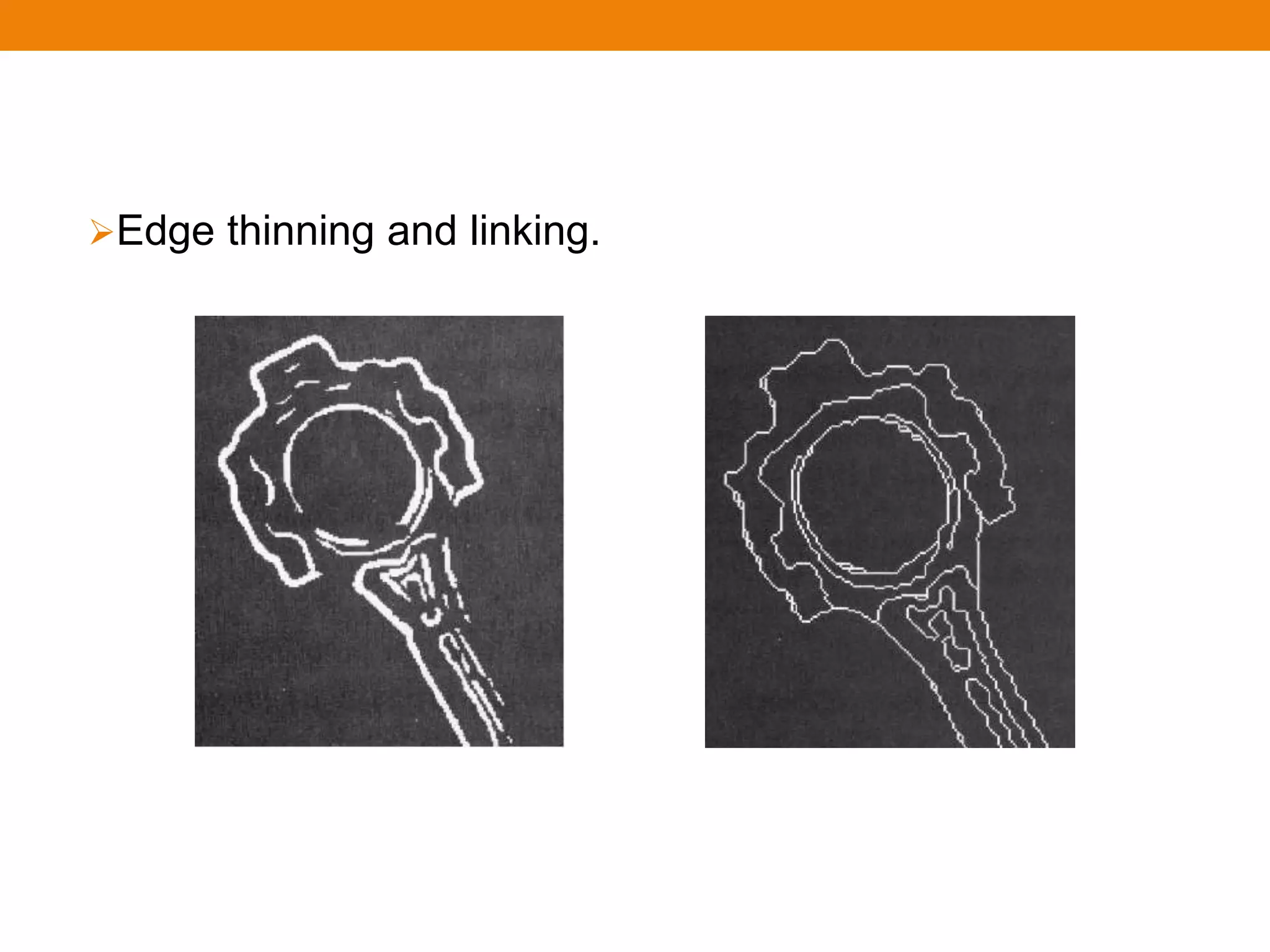

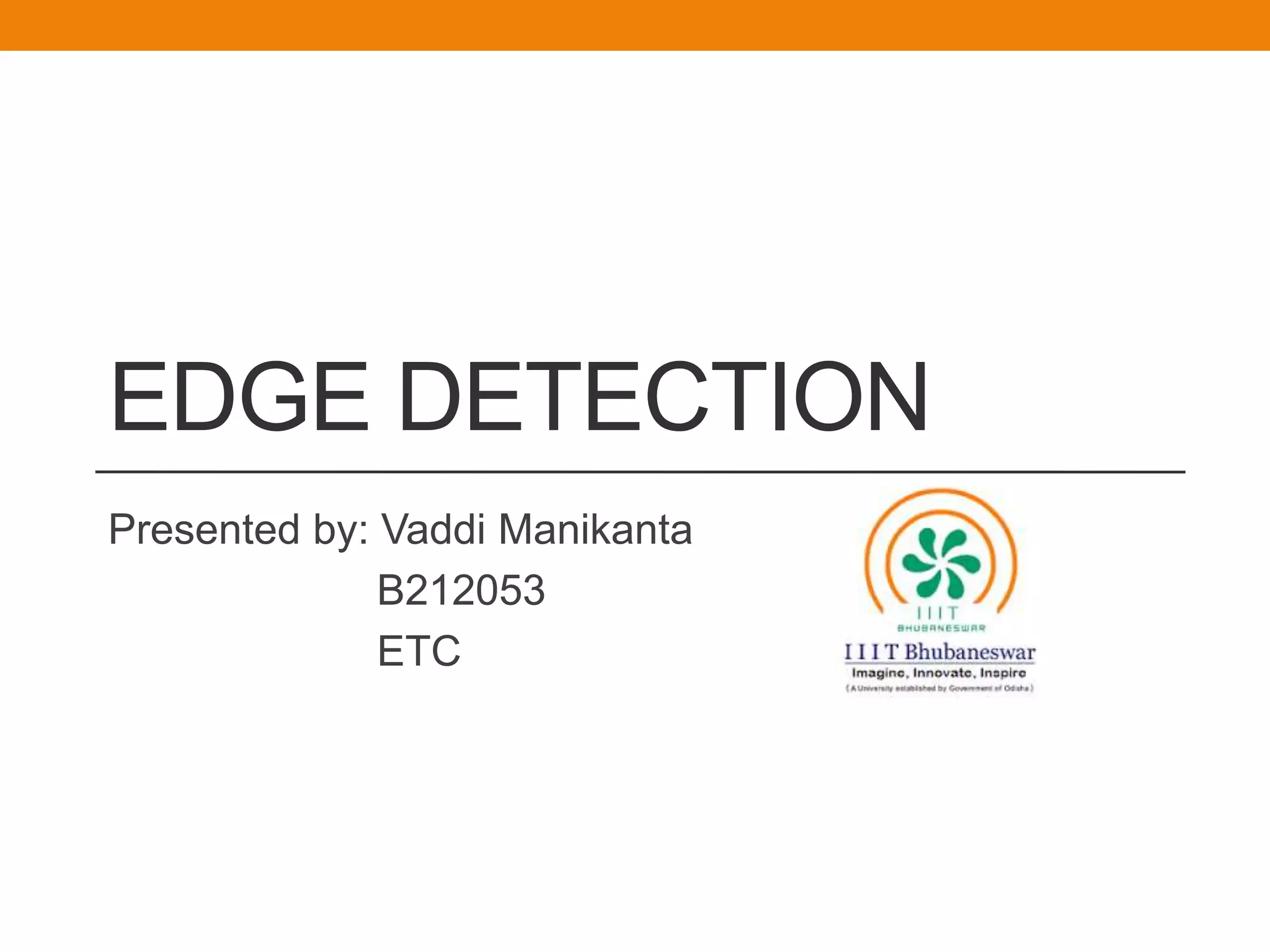

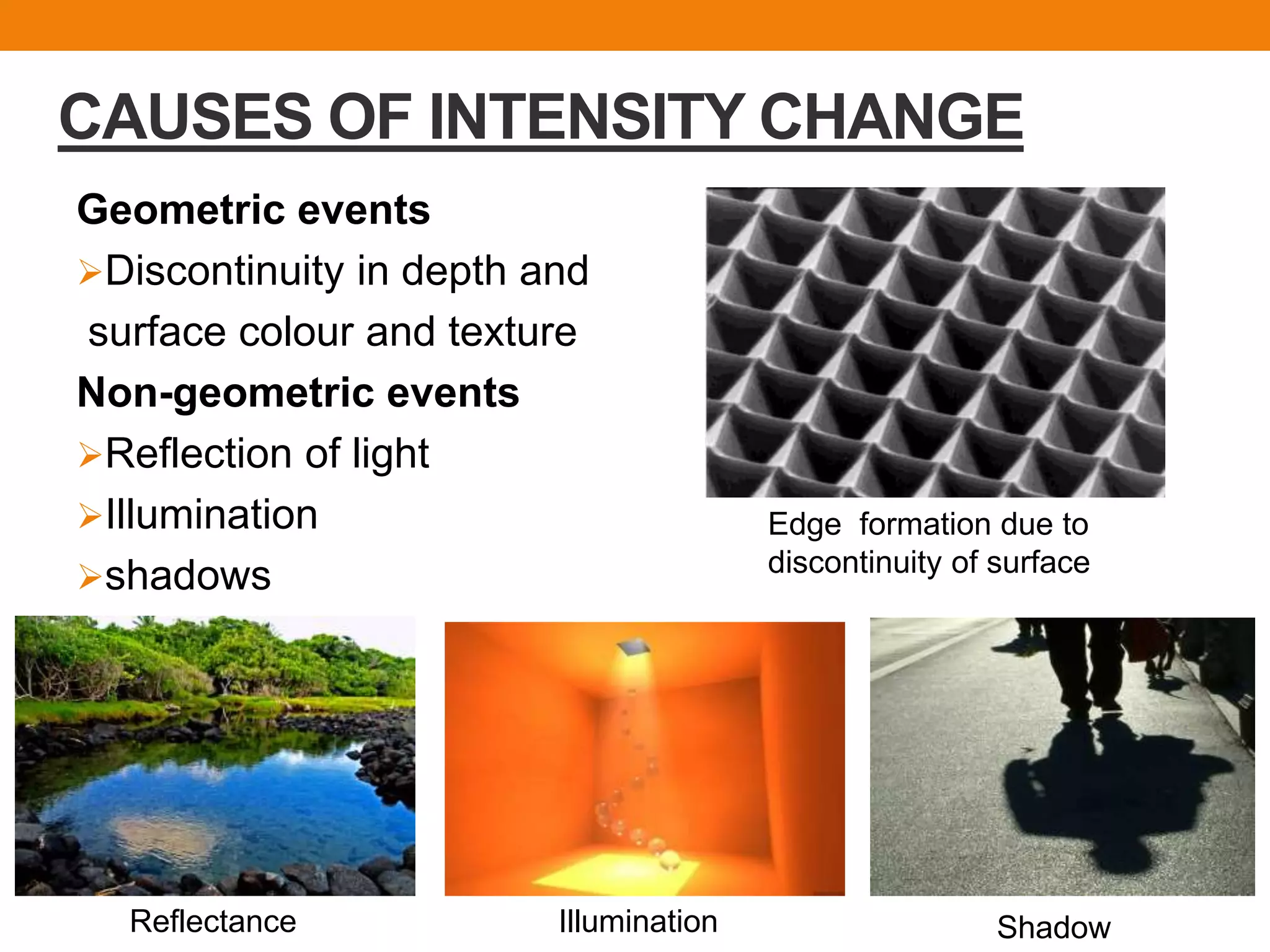

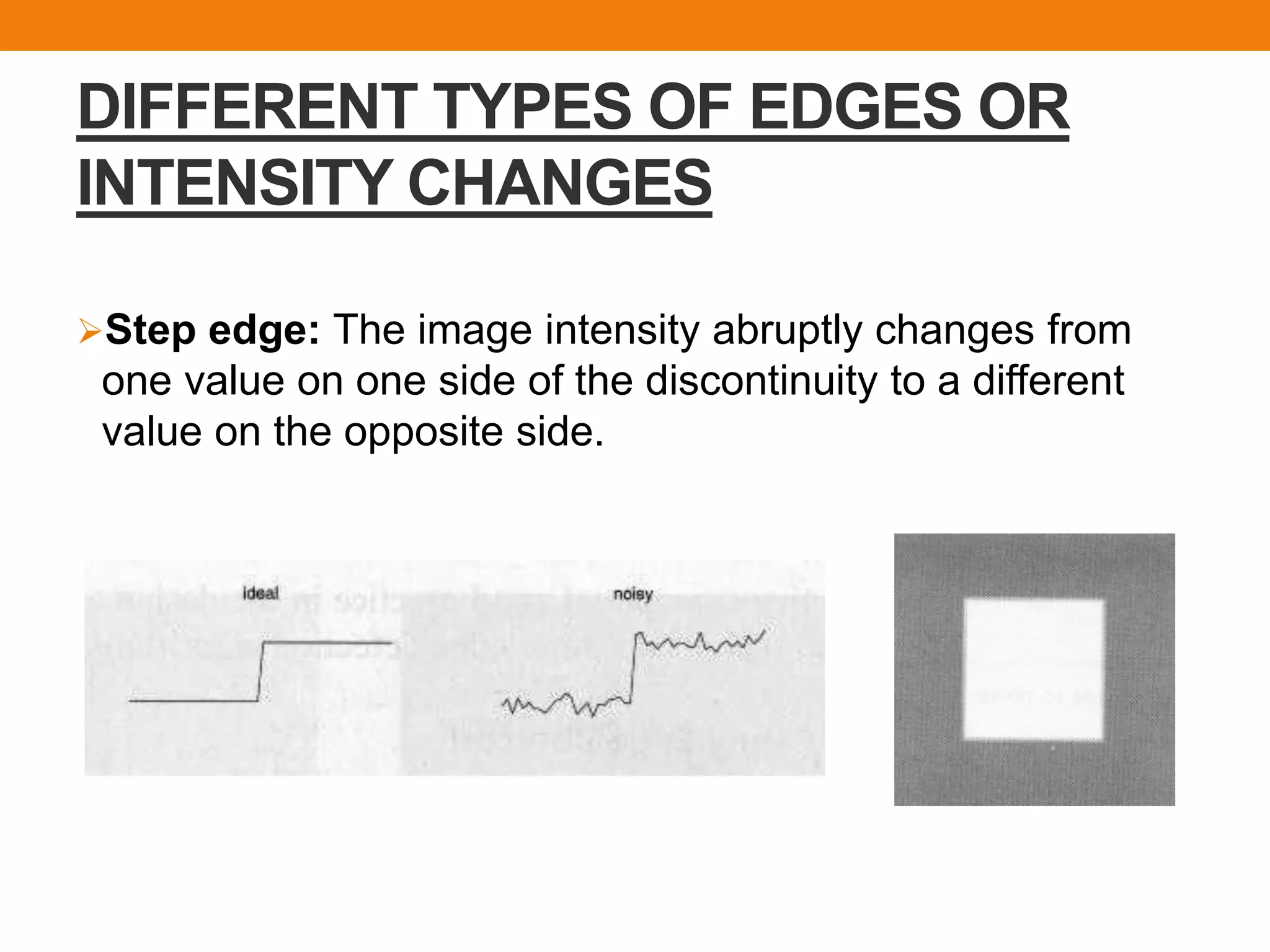

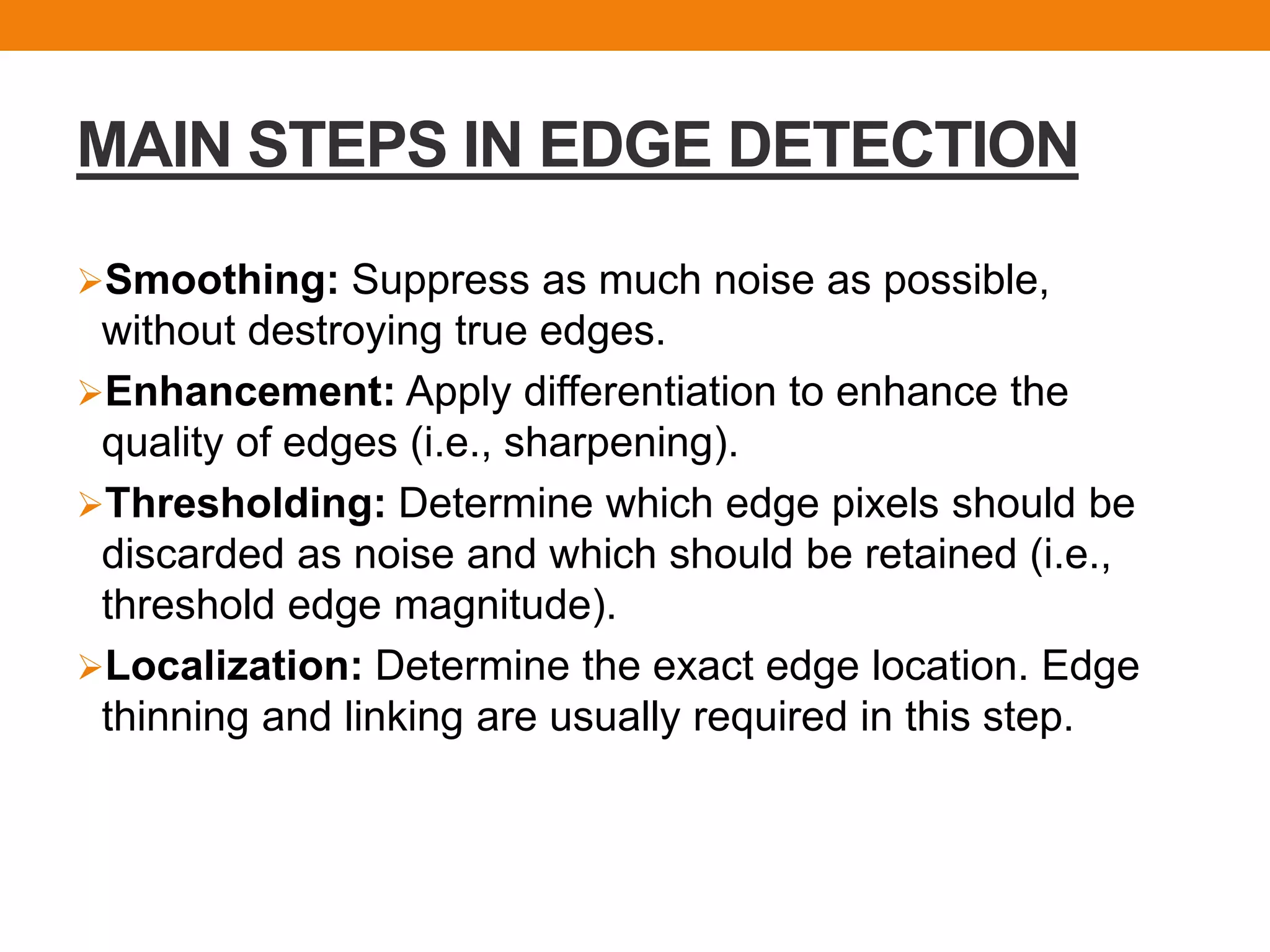

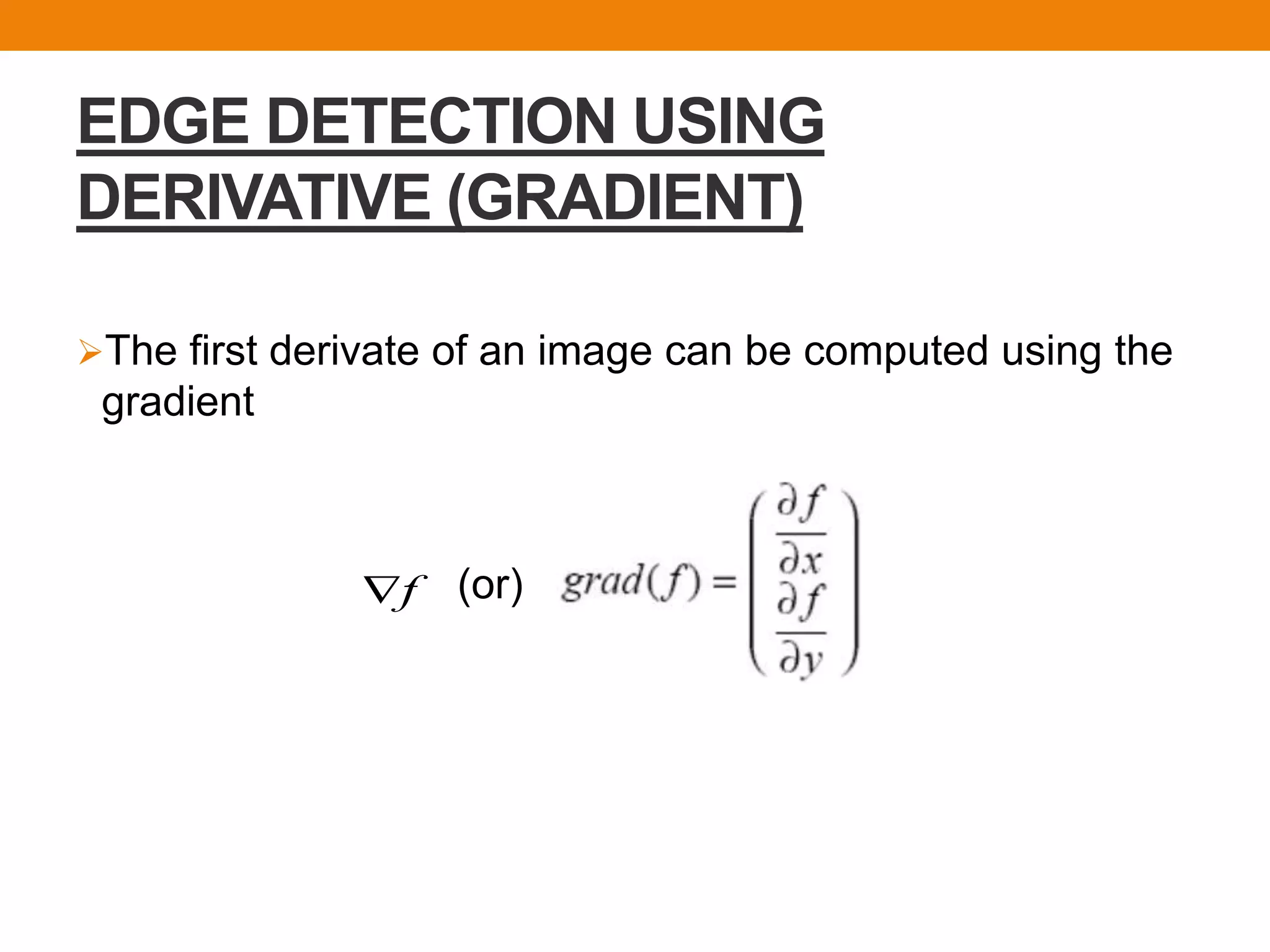

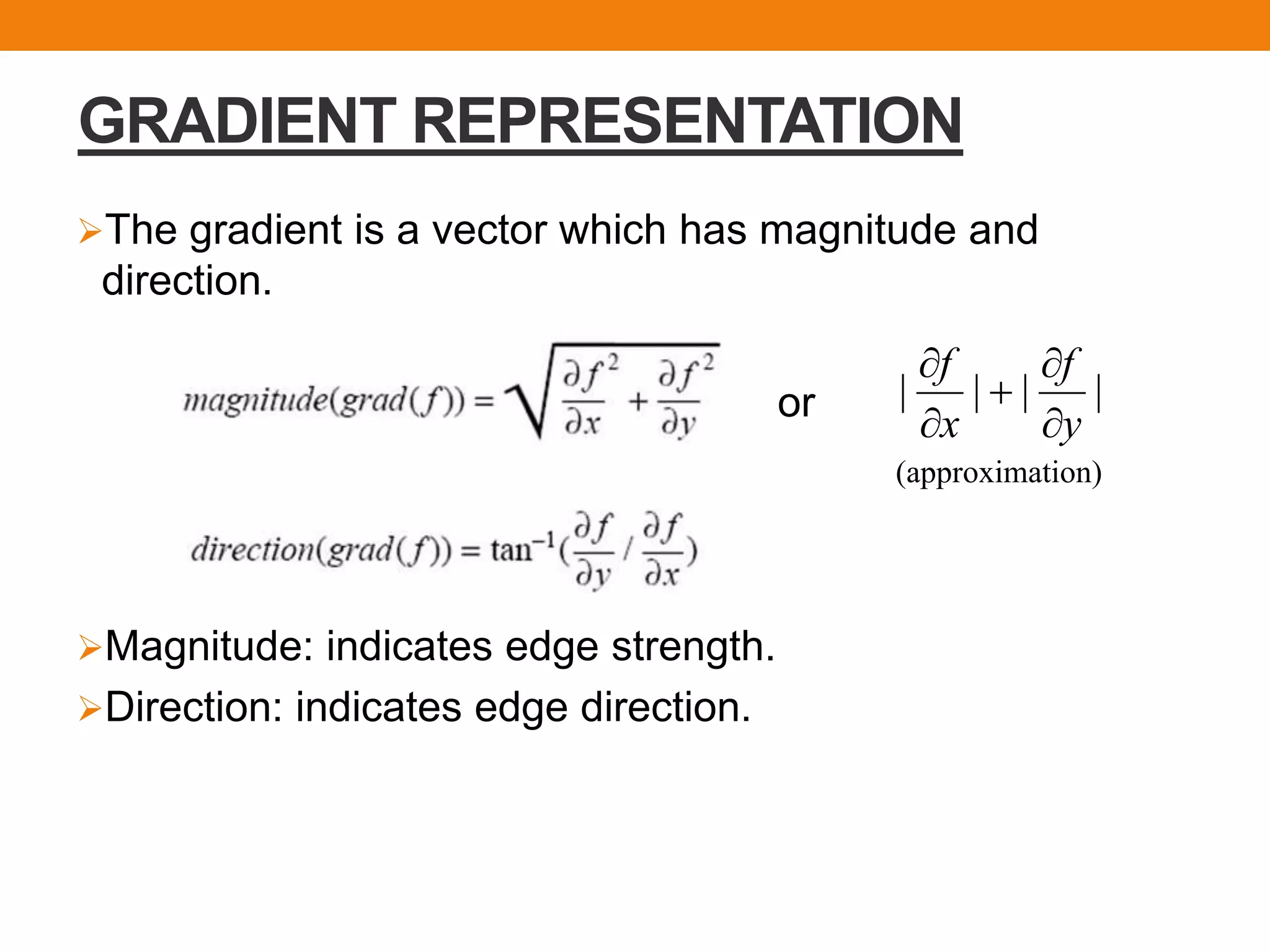

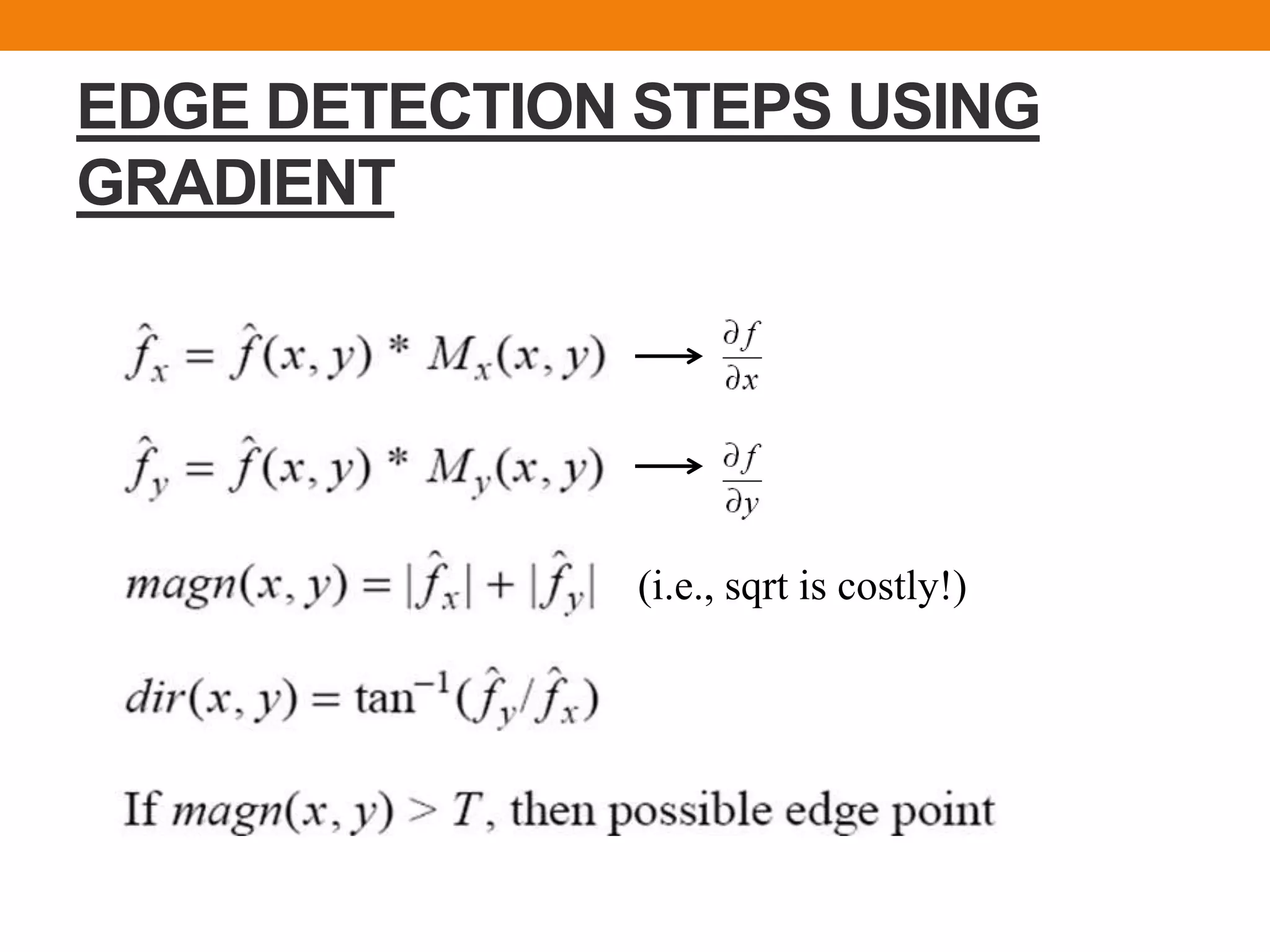

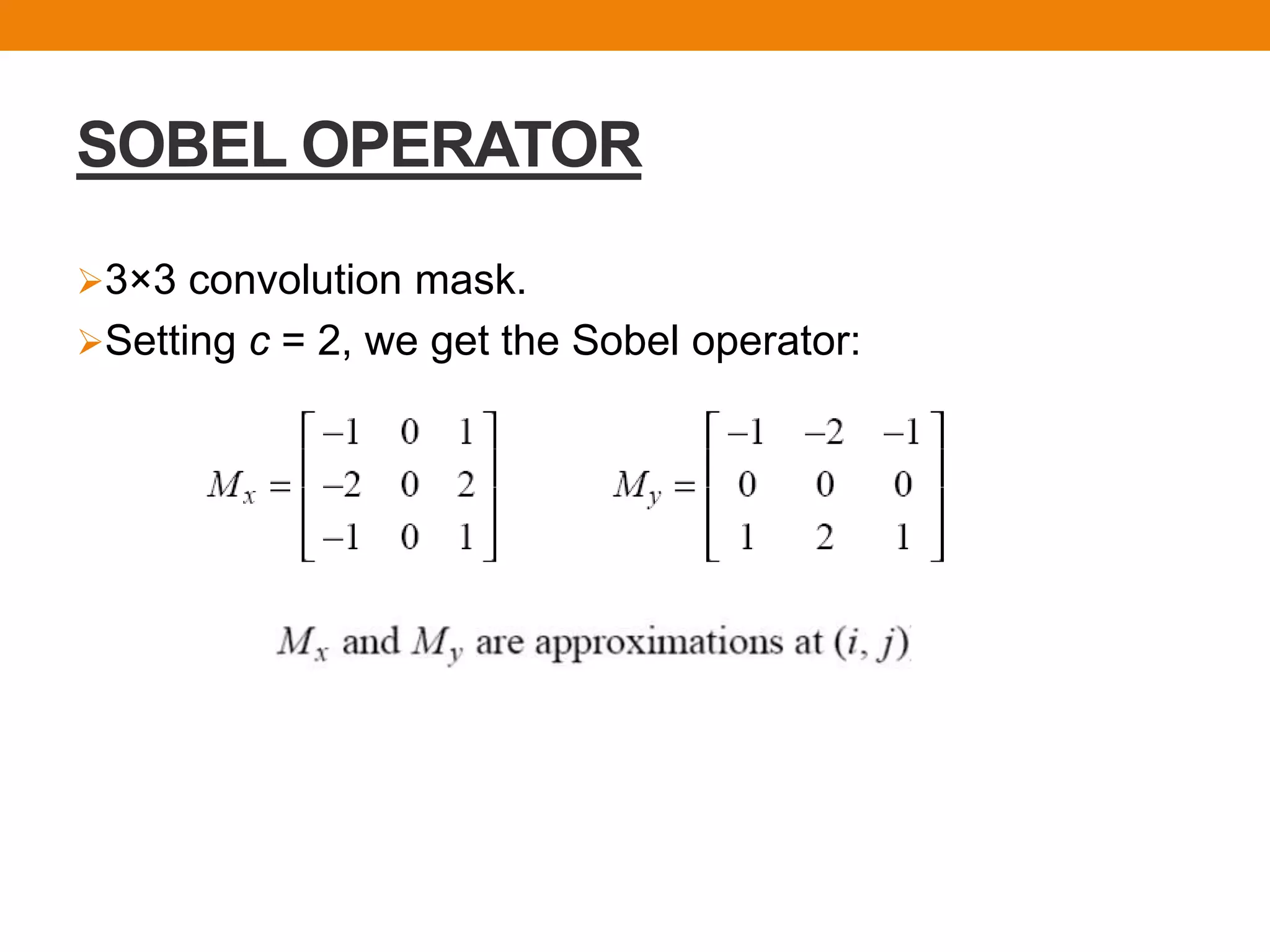

This document discusses edge detection in images. It defines edges as areas of abrupt change in pixel intensity that often correspond to object boundaries. Several edge detection techniques are covered, including gradient-based methods that use the Sobel and Prewitt operators to compute the gradient magnitude and direction at each pixel and identify edges. The key steps of edge detection are described as smoothing, enhancement, thresholding and localization. An example C program that applies the horizontal Sobel mask to an image is provided. Applications of edge detection include image enhancement, text detection and video surveillance.

![GENERAL APPROXIMATION

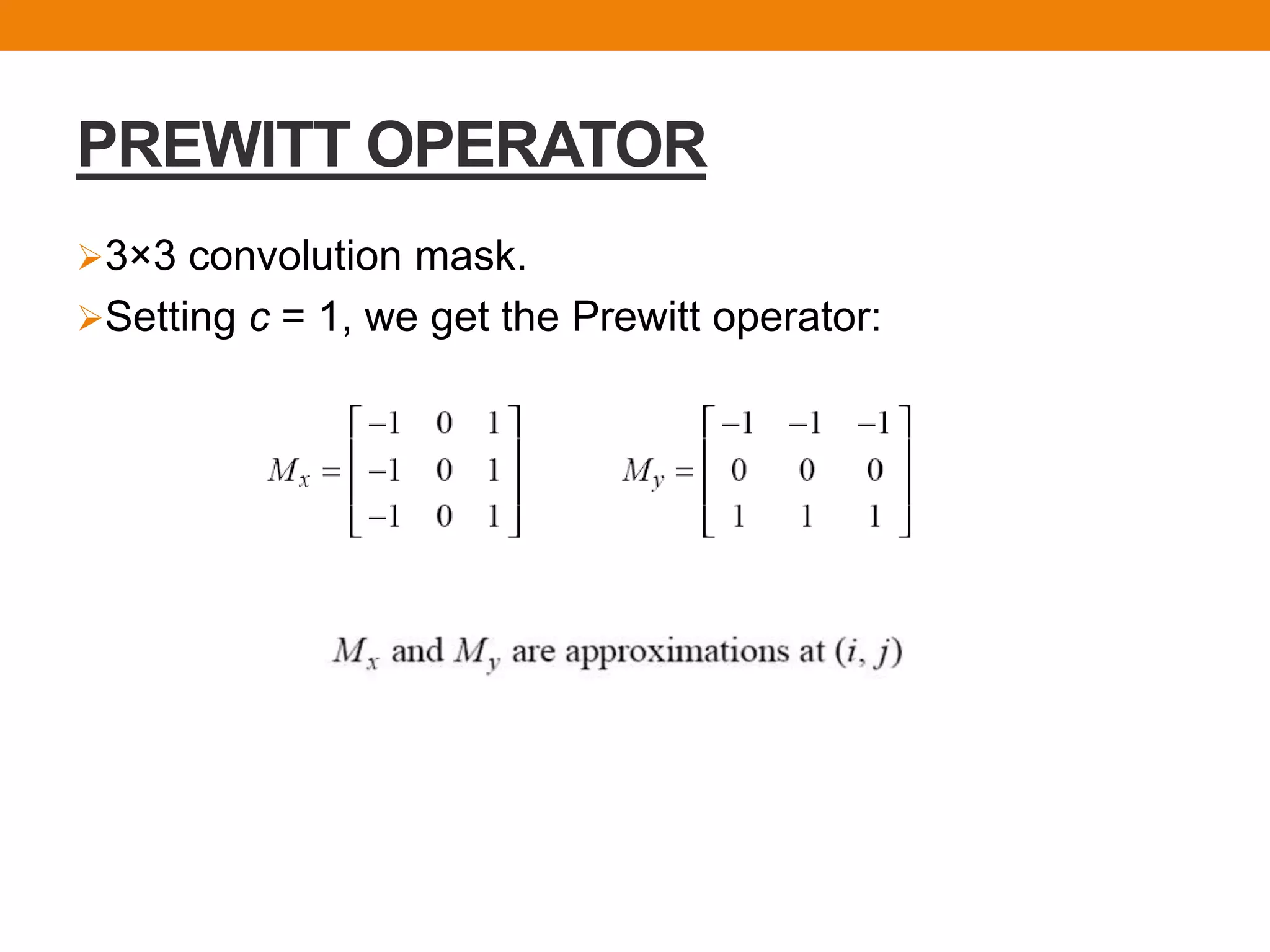

Consider the arrangement of pixels about the pixel [i, j]:

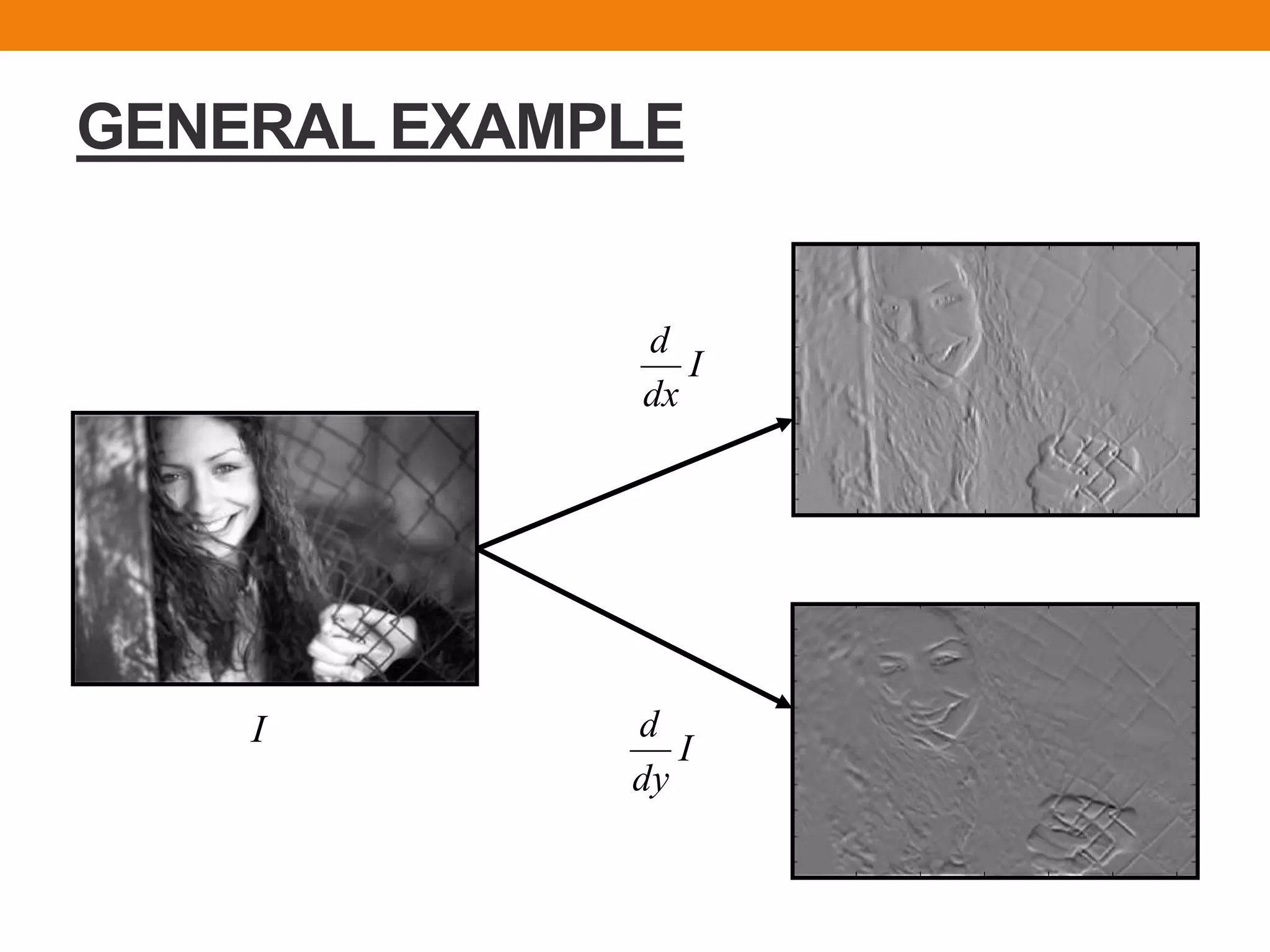

The partial derivatives , can be computed by:

The constant c implies the emphasis given to pixels

closer to the centre of the mask.

3 x 3 neighborhood:](https://image.slidesharecdn.com/manikantaedgedetection-151110182348-lva1-app6892/75/Edge-Detection-algorithm-and-code-13-2048.jpg)

EDGE DETECTOR USING SOBEL OPERATOR IN C LANGUAGE

```c
#include <stdio.h>
#include <stdlib.h>
#include <float.h>
/* mypgm.h (not shown) is assumed to declare what the code below uses:
   image1, image2, x_size1, y_size1, x_size2, y_size2, MAX_BRIGHTNESS,
   load_image_data() and save_image_data(). */
#include "mypgm.h"

/* Spatial filtering of image data */
/* Sobel filter (horizontal differentiation) */
/* Input: image1[y][x] ---- Output: image2[y][x] */
void sobel_filtering(void)
{
    /* Definition of Sobel filter in horizontal direction */
    int weight[3][3] = { { -1, 0, 1 },
                         { -2, 0, 2 },
                         { -1, 0, 1 } };
    double pixel_value;
    double min, max;
    int x, y, i, j; /* Loop variables */

    /* Minimum and maximum values calculation after filtering */
    printf("Now, filtering of input image is performed\n\n");
    min = DBL_MAX;
    max = -DBL_MAX;
    for (y = 1; y < y_size1 - 1; y++) {
        for (x = 1; x < x_size1 - 1; x++) {
            pixel_value = 0.0;
            for (j = -1; j <= 1; j++) {
                for (i = -1; i <= 1; i++) {
                    pixel_value += weight[j + 1][i + 1] * image1[y + j][x + i];
                }
            }
            if (pixel_value < min) min = pixel_value;
            if (pixel_value > max) max = pixel_value;
        }
    }
    /* A range below 1 means the filtered image is essentially uniform;
       stop rather than divide by (nearly) zero below */
    if ((int)(max - min) == 0) {
        printf("Nothing exists!!!\n\n");
        exit(1);
    }

    /* Initialization of image2[y][x]; border pixels stay 0 */
    x_size2 = x_size1;
    y_size2 = y_size1;
    for (y = 0; y < y_size2; y++) {
        for (x = 0; x < x_size2; x++) {
            image2[y][x] = 0;
        }
    }

    /* Generation of image2 after linear transformation:
       the filter output range [min, max] is mapped to [0, MAX_BRIGHTNESS] */
    for (y = 1; y < y_size1 - 1; y++) {
        for (x = 1; x < x_size1 - 1; x++) {
            pixel_value = 0.0;
            for (j = -1; j <= 1; j++) {
                for (i = -1; i <= 1; i++) {
                    pixel_value += weight[j + 1][i + 1] * image1[y + j][x + i];
                }
            }
            pixel_value = MAX_BRIGHTNESS * (pixel_value - min) / (max - min);
            image2[y][x] = (unsigned char)pixel_value;
        }
    }
}

int main(void)
{
    load_image_data(); /* Input of image1 */
    sobel_filtering(); /* Sobel filter is applied to image1 */
    save_image_data(); /* Output of image2 */
    return 0;
}
```