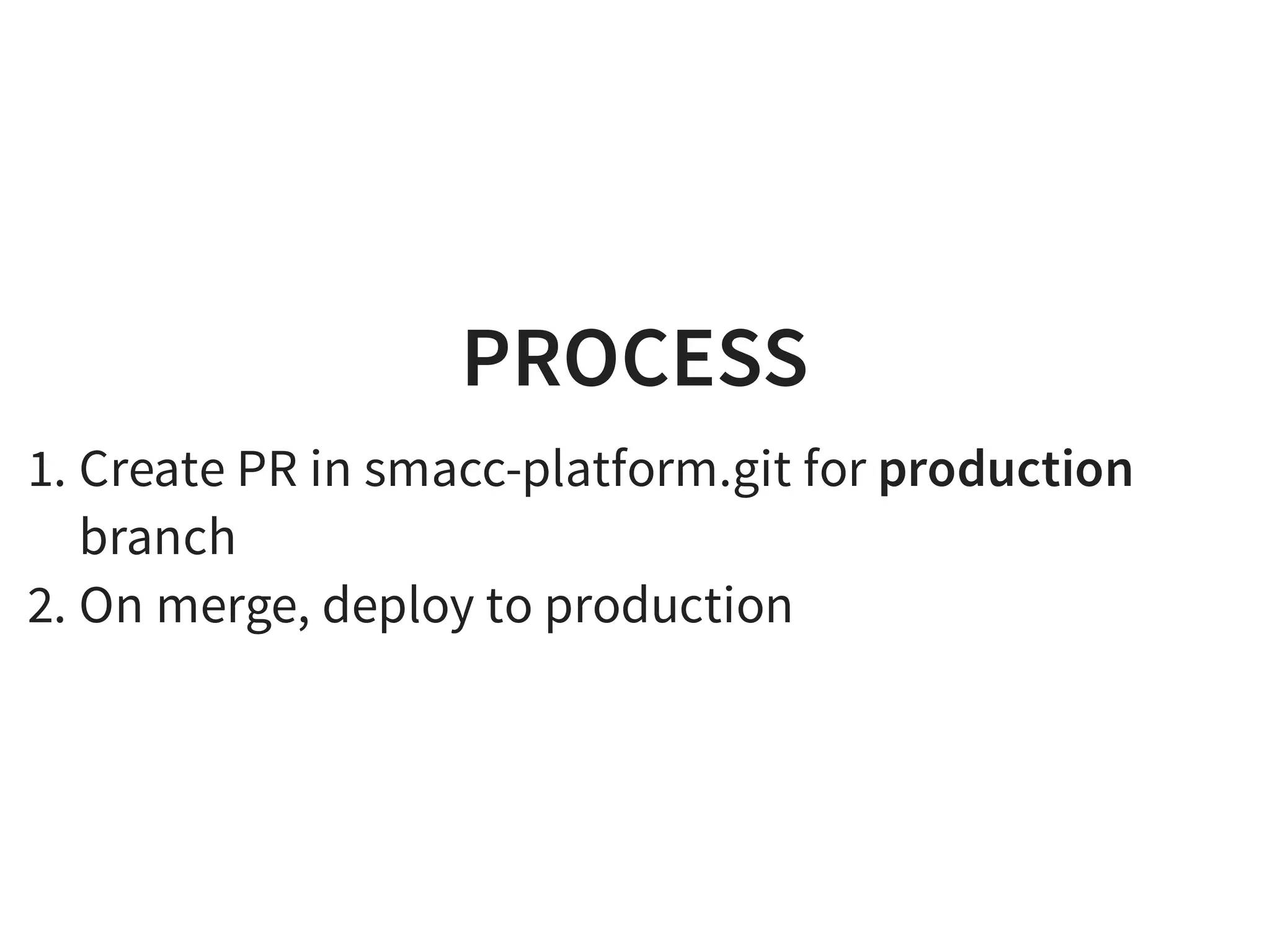

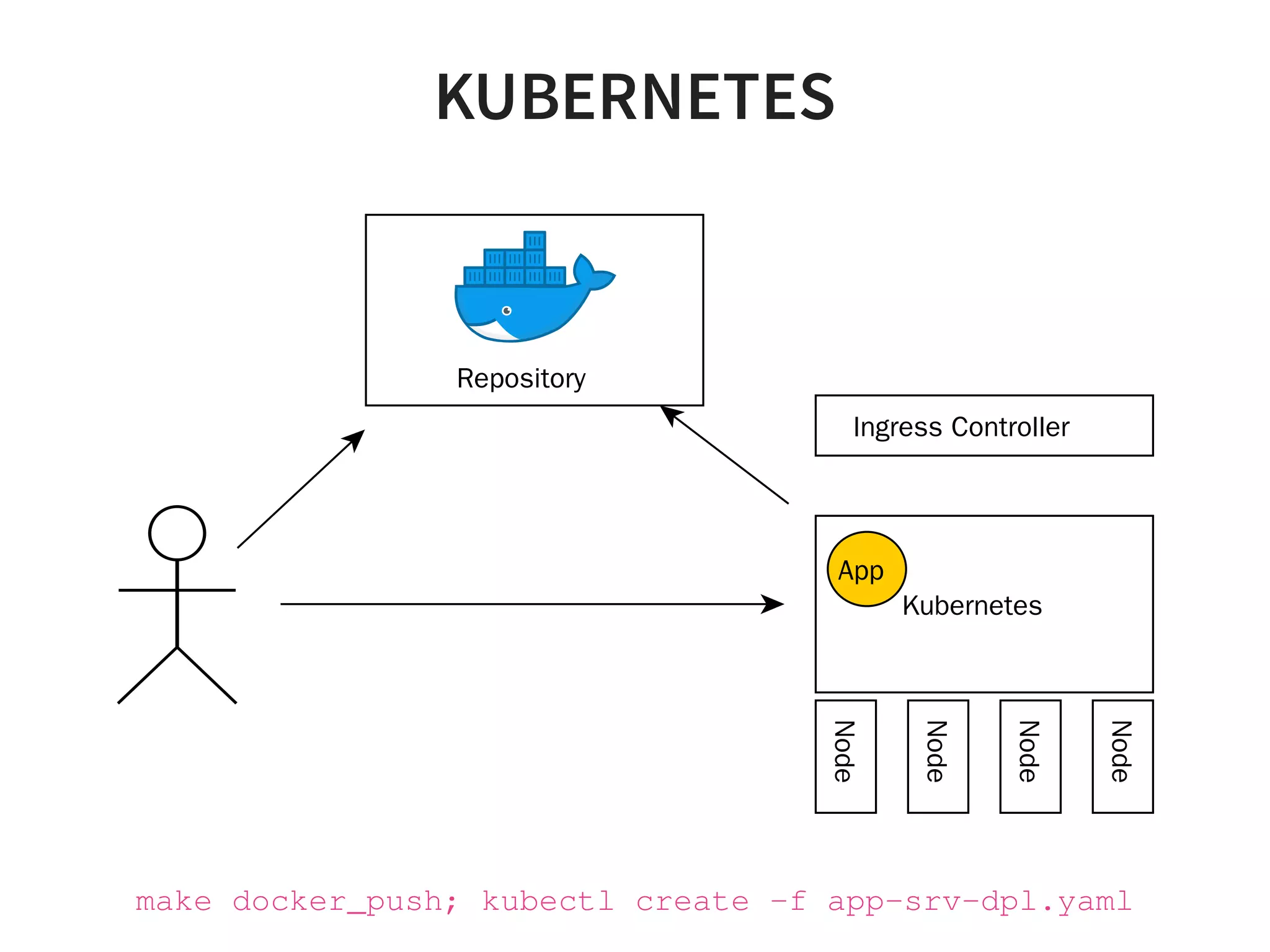

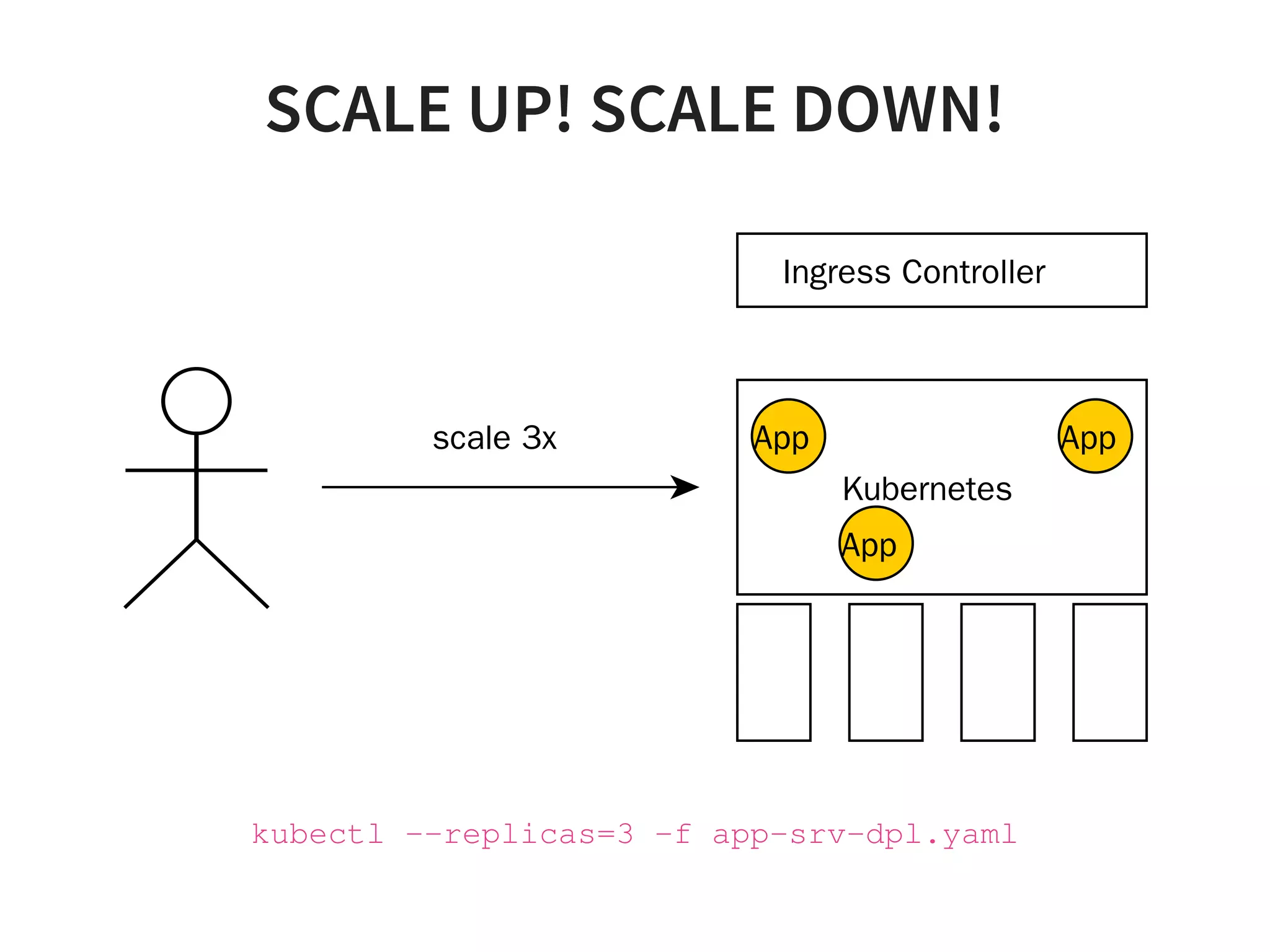

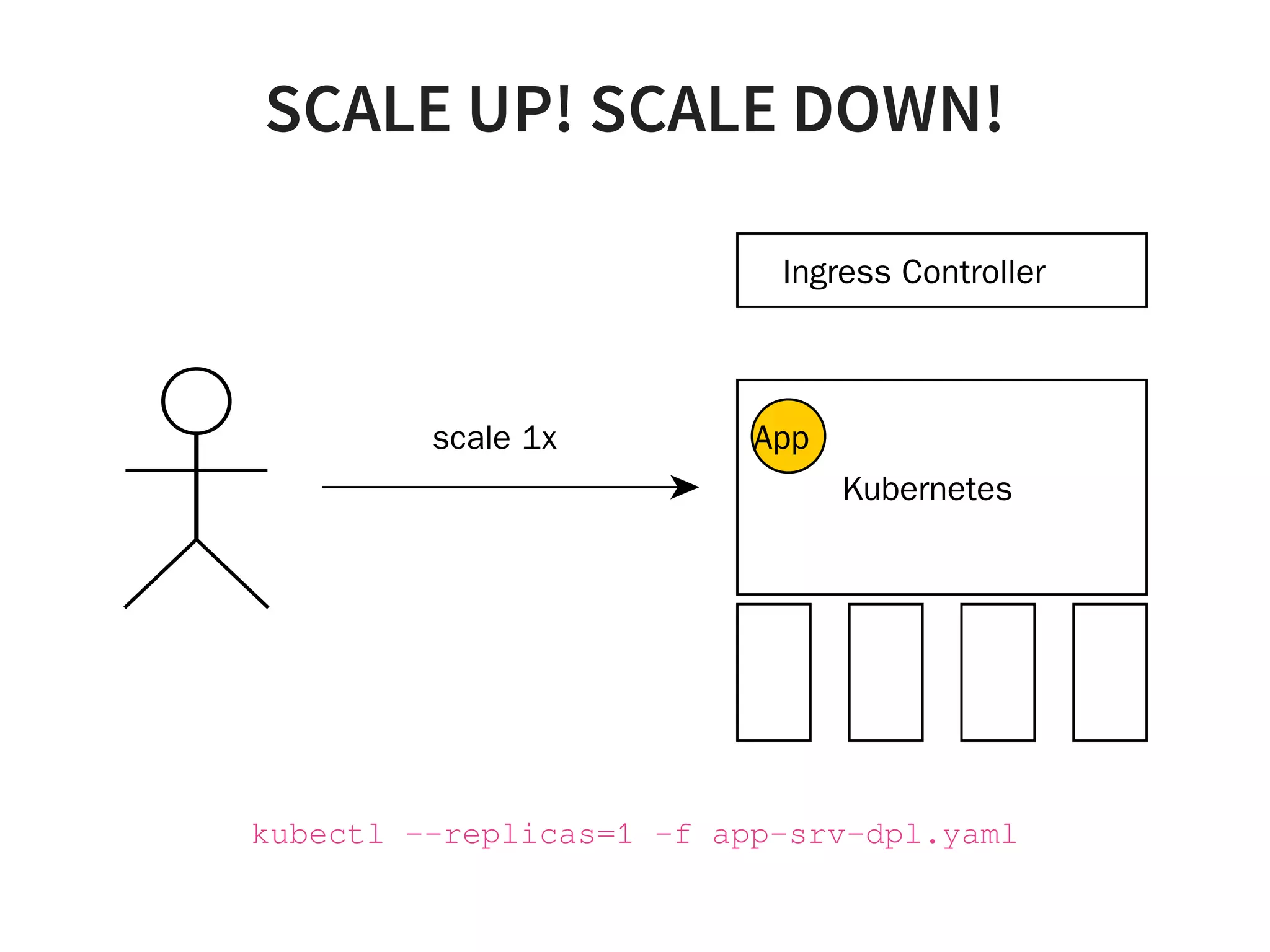

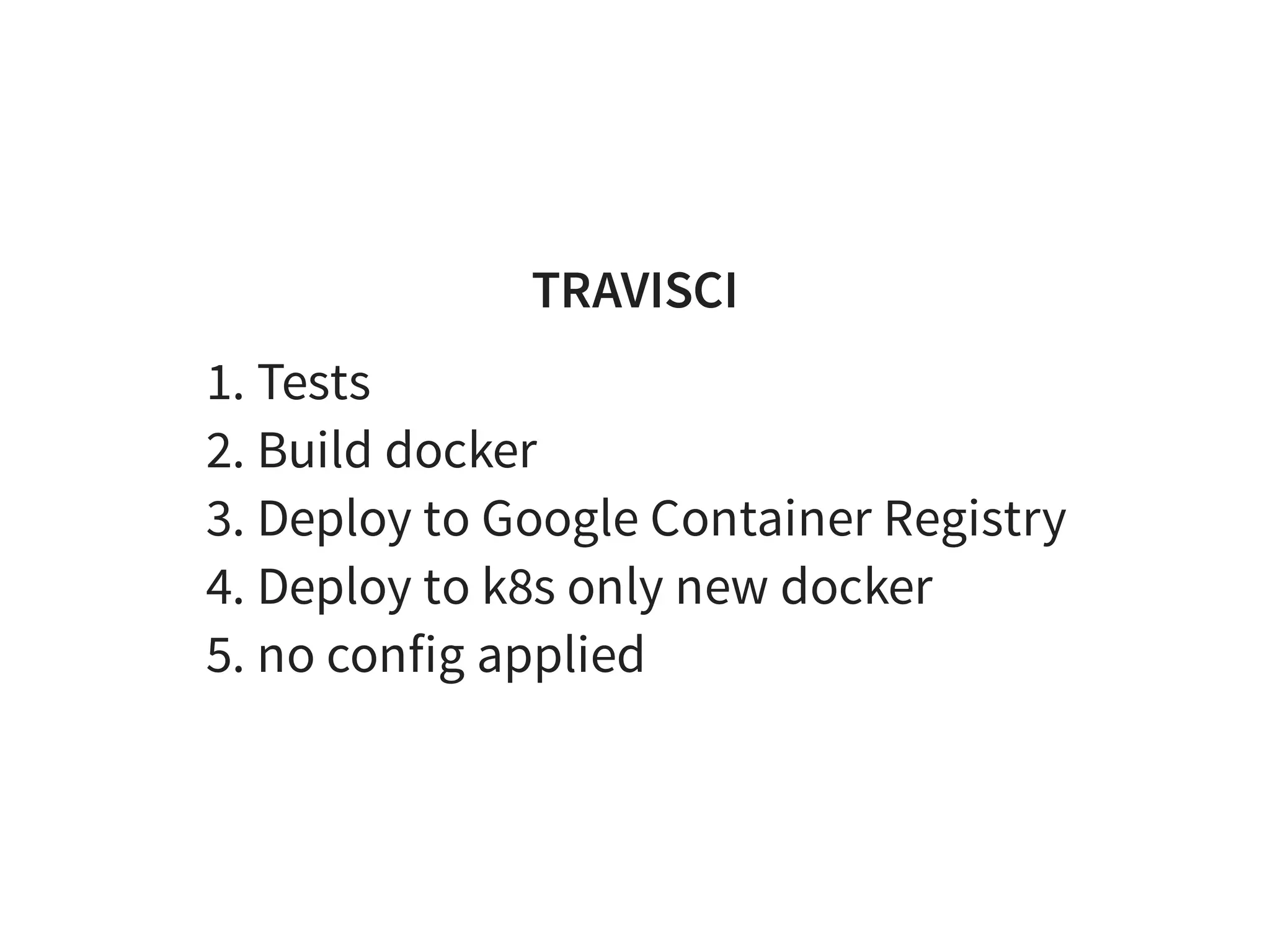

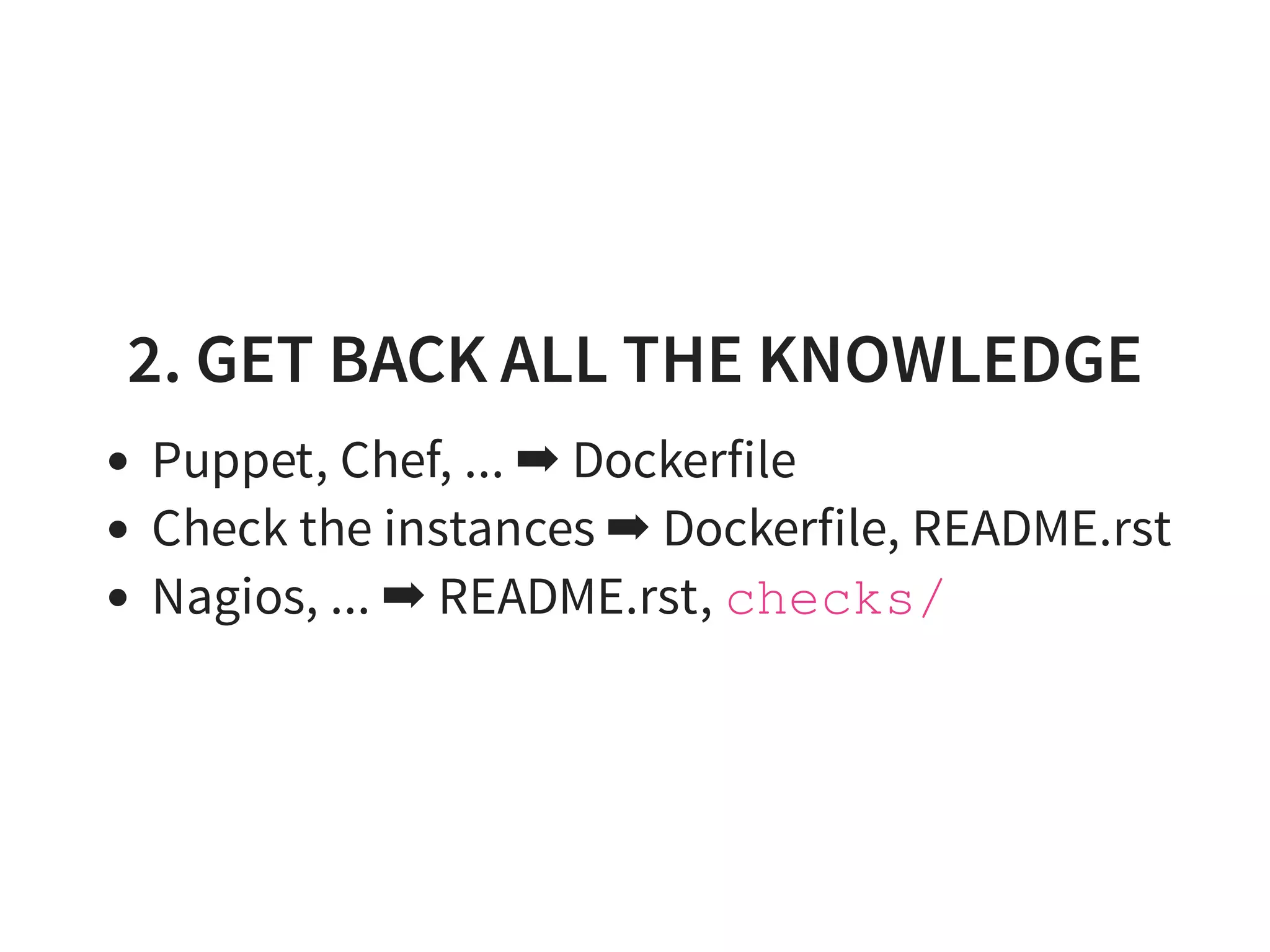

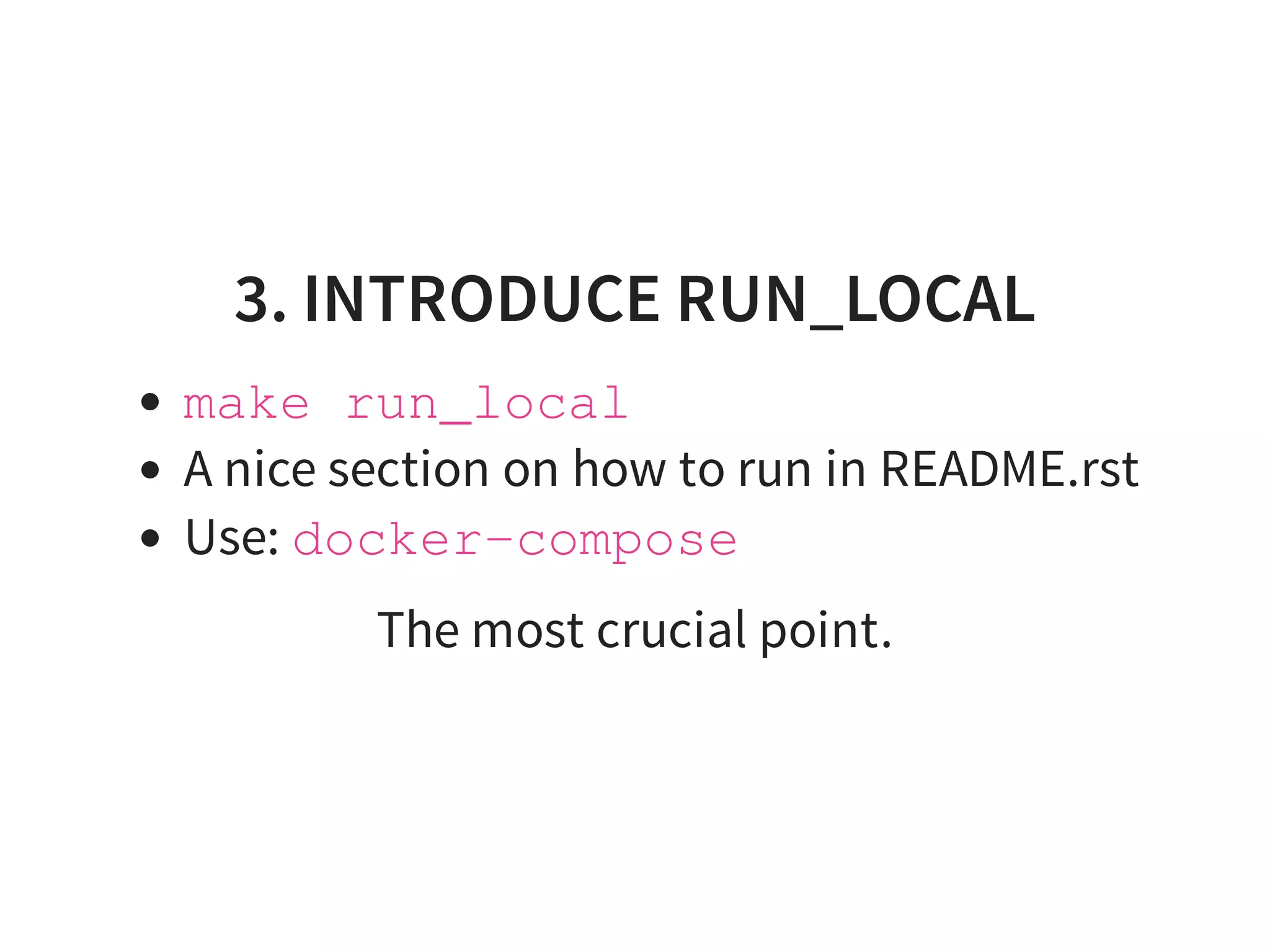

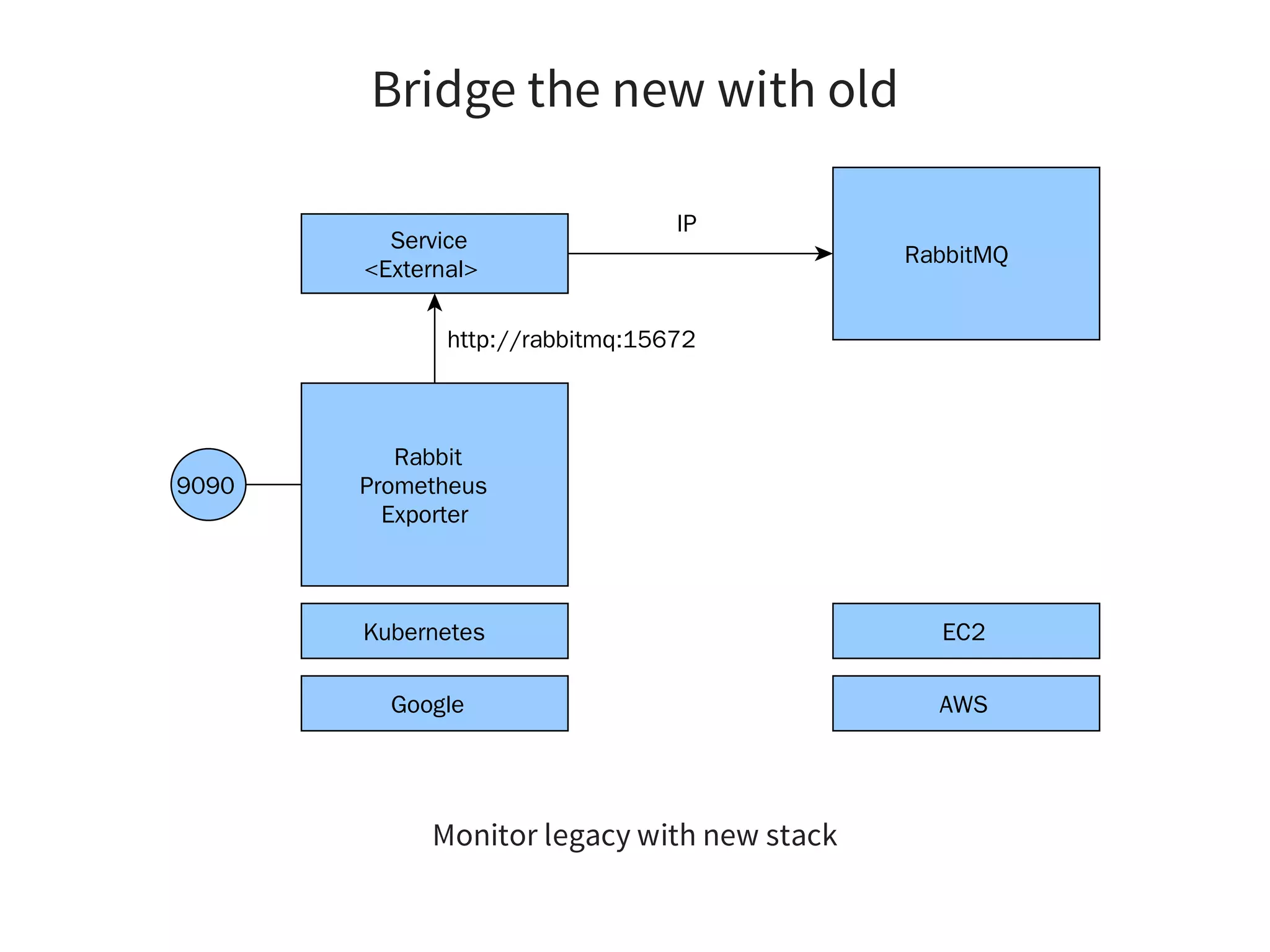

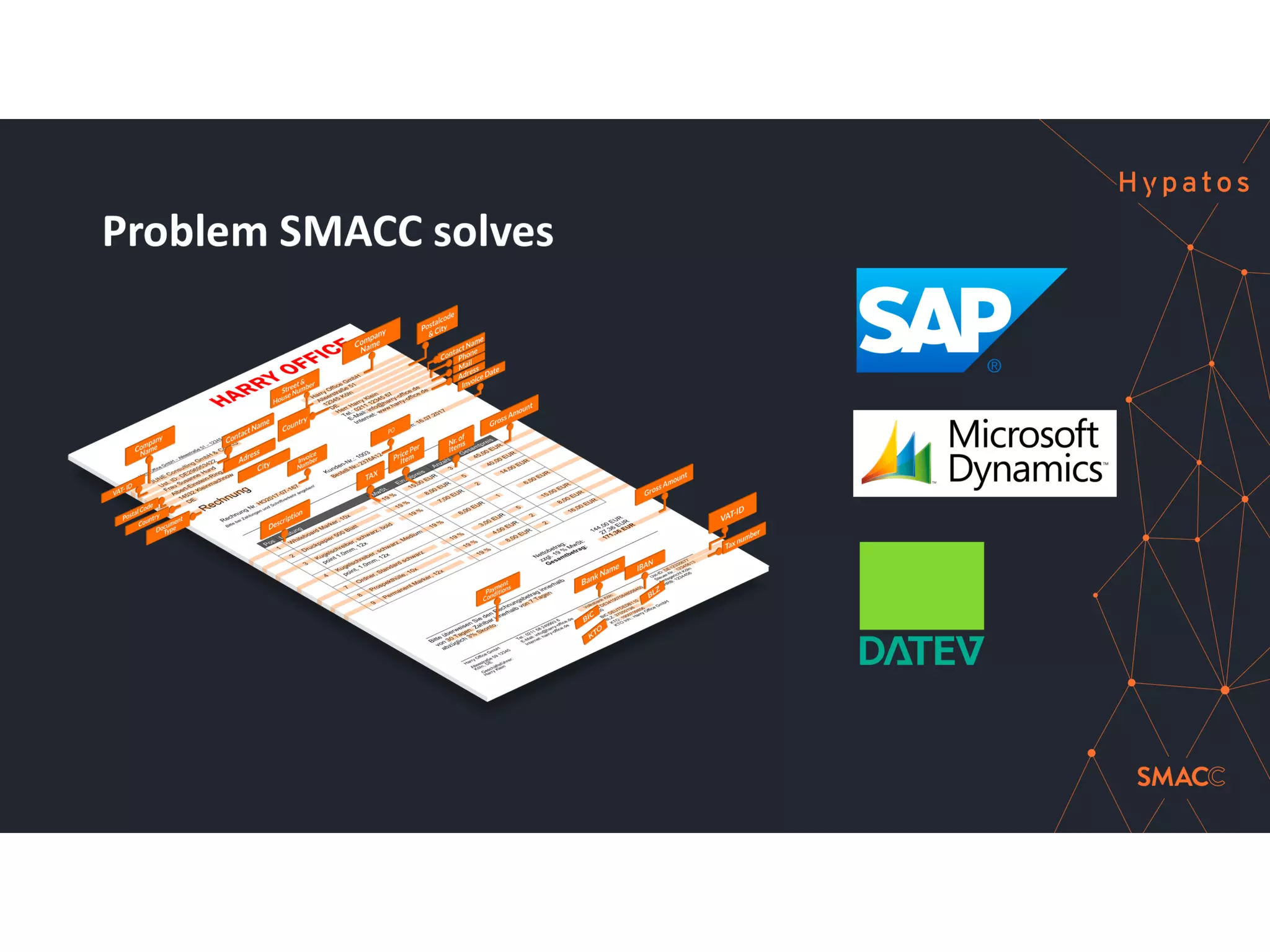

The document discusses effective platform building with Kubernetes. It emphasizes Kubernetes' role in simplifying infrastructure management for microservices, improving resource utilization, and reducing operational costs. It outlines practical approaches to deploying applications, managing rolling updates, and implementing a continuous deployment process with tools such as Terraform and GitHub. The author draws on experience at several companies and describes what worked and what did not during their transitions to Kubernetes-based architectures.
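Rolling updates of a Kubernetes Deployment are bounded by the strategy fields `maxSurge` and `maxUnavailable`. The Go sketch below is not taken from the talk. It computes the pod-count bounds for percentage-based values, following the documented rule that `maxSurge` is rounded up and `maxUnavailable` is rounded down. The replica counts are illustrative, and 25%/25% are the Deployment defaults.

```go
package main

import "fmt"

// rollingBounds returns the maximum number of pods that may exist and the
// minimum number that must stay available during a rolling update, given the
// desired replica count and percentage-based maxSurge / maxUnavailable values.
// Kubernetes rounds maxSurge up and maxUnavailable down when converting
// percentages to absolute pod counts.
func rollingBounds(replicas, surgePct, unavailPct int) (maxPods, minAvailable int) {
	surge := (replicas*surgePct + 99) / 100 // ceiling division
	unavail := replicas * unavailPct / 100  // floor division
	return replicas + surge, replicas - unavail
}

func main() {
	// Default strategy values: 25% surge, 25% unavailable.
	maxPods, minAvail := rollingBounds(10, 25, 25)
	fmt.Printf("replicas=10 maxPods=%d minAvailable=%d\n", maxPods, minAvail)
	// prints: replicas=10 maxPods=13 minAvailable=8
}
```

The rounding asymmetry is clearest with small Deployments. For 3 replicas, 25% is 0.75, so one extra pod is allowed during the rollout but no pod may become unavailable.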

![STORY: Lyke - [12.2016 - 07.2017]; SMACC - [10.2017 - present]](https://image.slidesharecdn.com/index-180917195221/75/Effective-Kubernetes-Is-Kubernetes-the-new-Linux-Is-the-new-Application-Server-4-2048.jpg)

![1. CLEAN UP: a single script for the repo, a Makefile [1]; resurrect the README. [1] With a zsh or bash auto-completion plug-in in your terminal.](https://image.slidesharecdn.com/index-180917195221/75/Effective-Kubernetes-Is-Kubernetes-the-new-Linux-Is-the-new-Application-Server-39-2048.jpg)

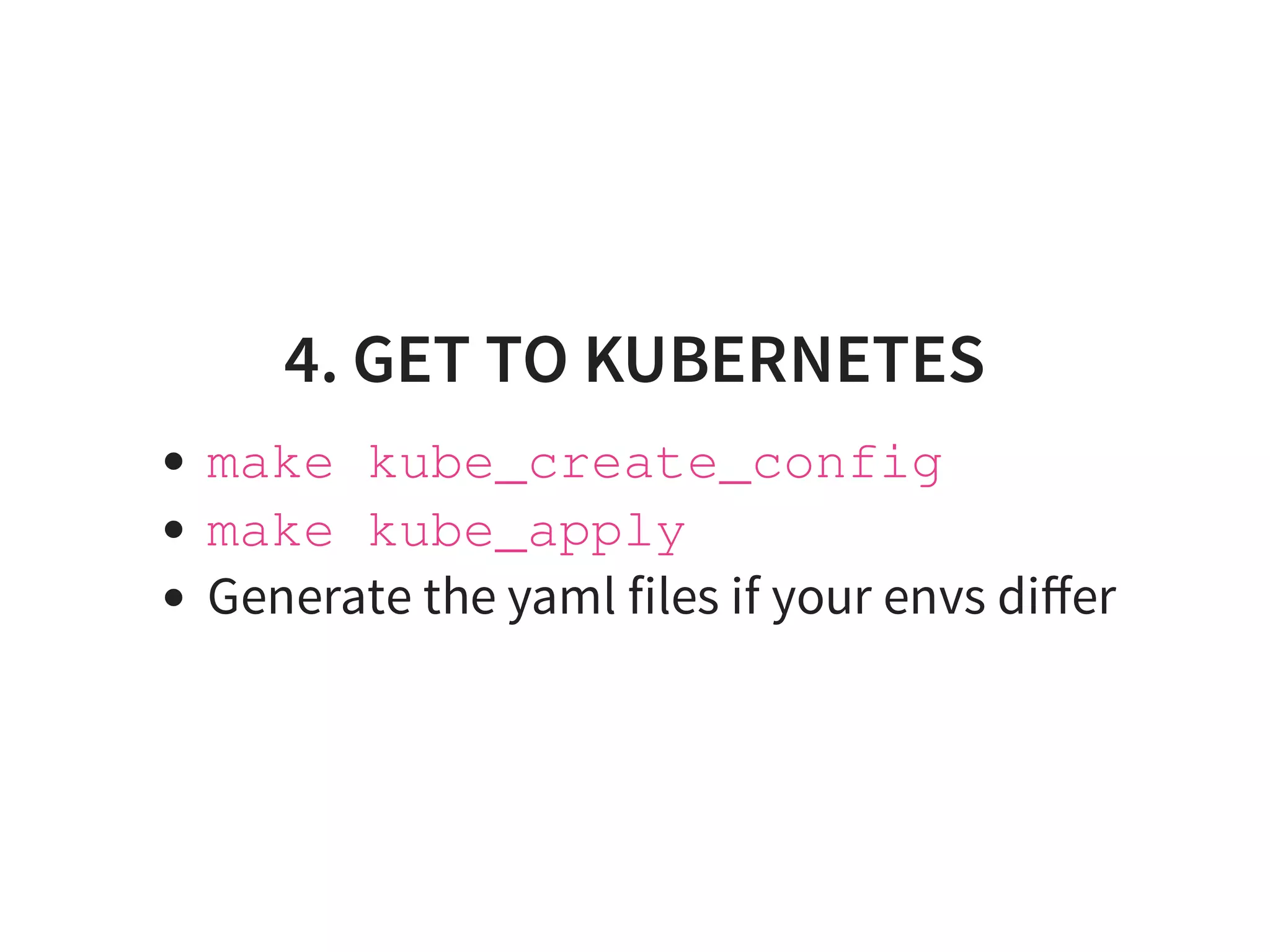

![6. KEEP IT RUNNING: bridge the new with the old. Use external services in Kubernetes. Optional: expose k8s to the legacy systems [1]. [1] By feeding K8s events to HashiCorp Consul.](https://image.slidesharecdn.com/index-180917195221/75/Effective-Kubernetes-Is-Kubernetes-the-new-Linux-Is-the-new-Application-Server-44-2048.jpg)

![WHAT DID NOT WORK: 1. Too many PoCs; they should be capped at 2 weeks. 2. Do it in smaller chunks. 3. Alert rules were too hard to write. 4. Pushback on k8s YAML [*]. [*] With coaching, I thought it would be OK.](https://image.slidesharecdn.com/index-180917195221/75/Effective-Kubernetes-Is-Kubernetes-the-new-Linux-Is-the-new-Application-Server-52-2048.jpg)

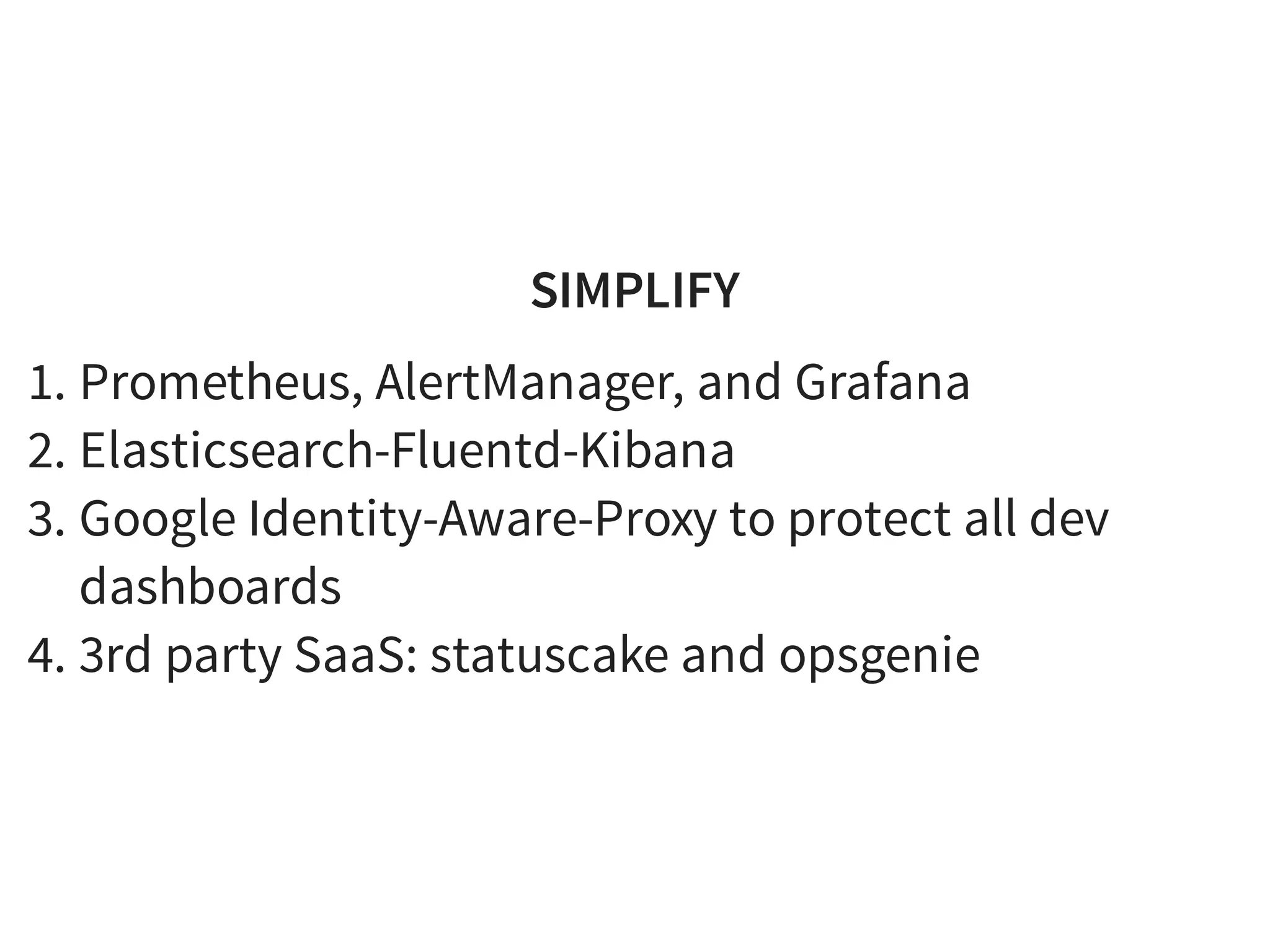

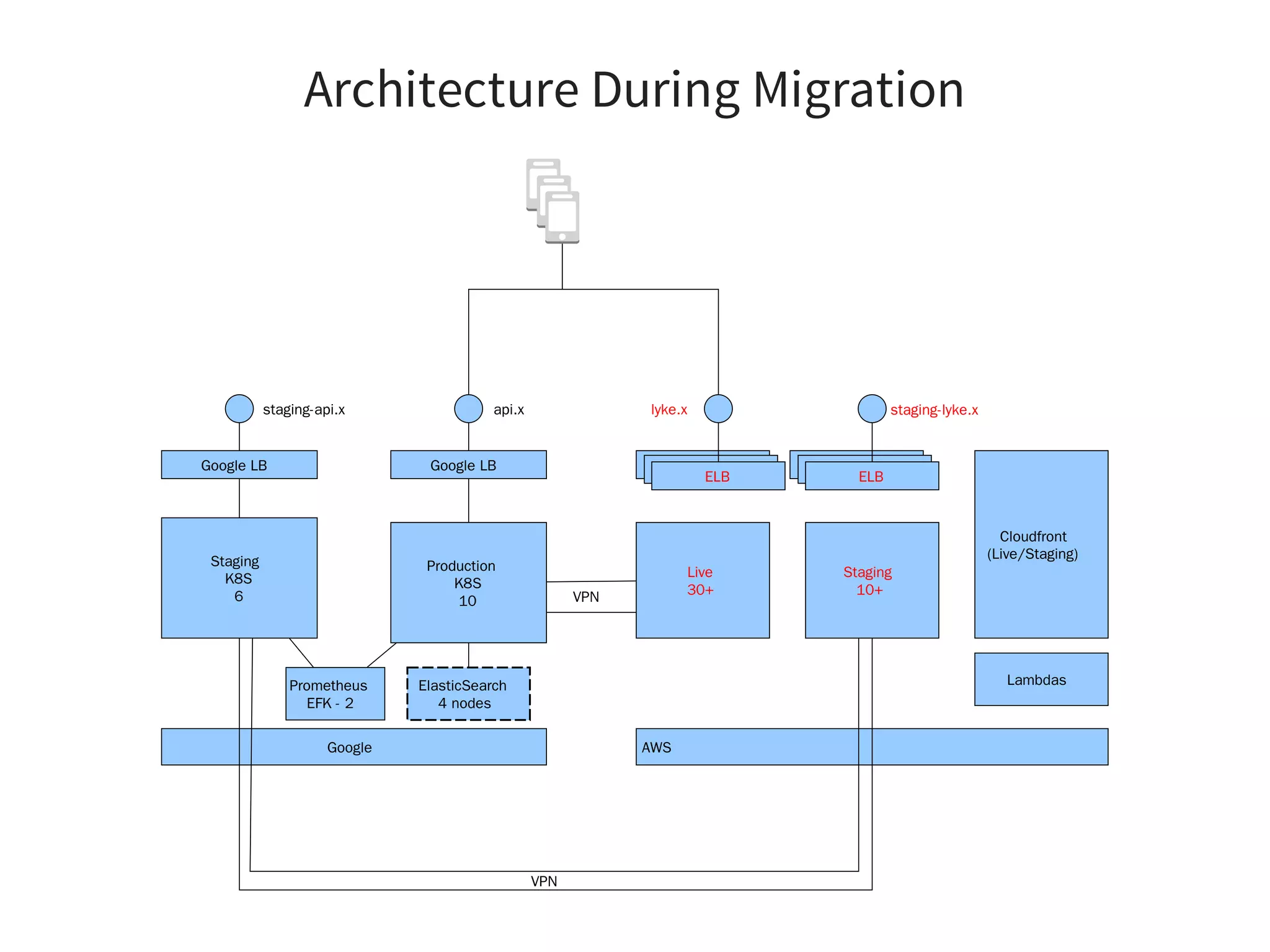

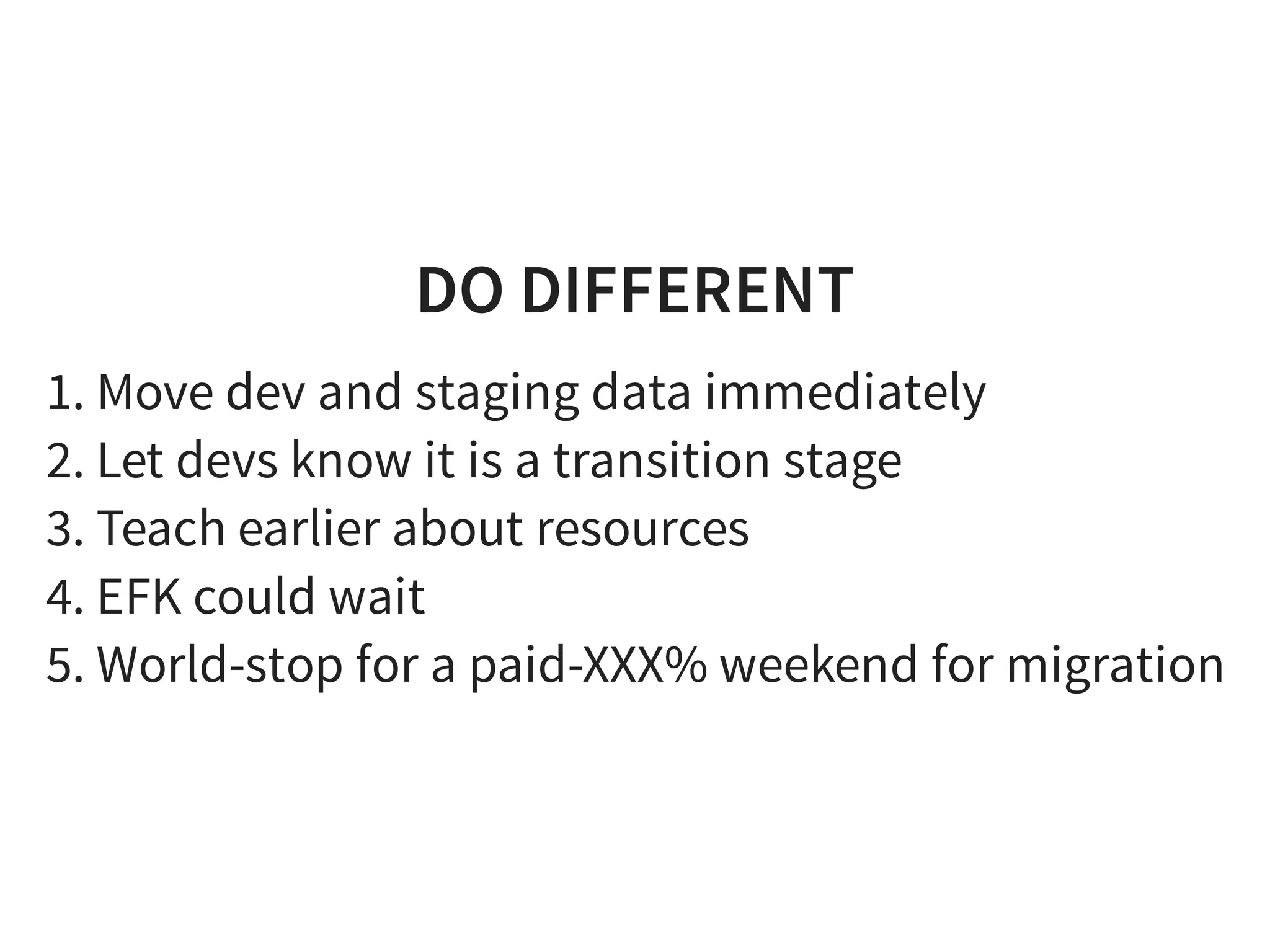

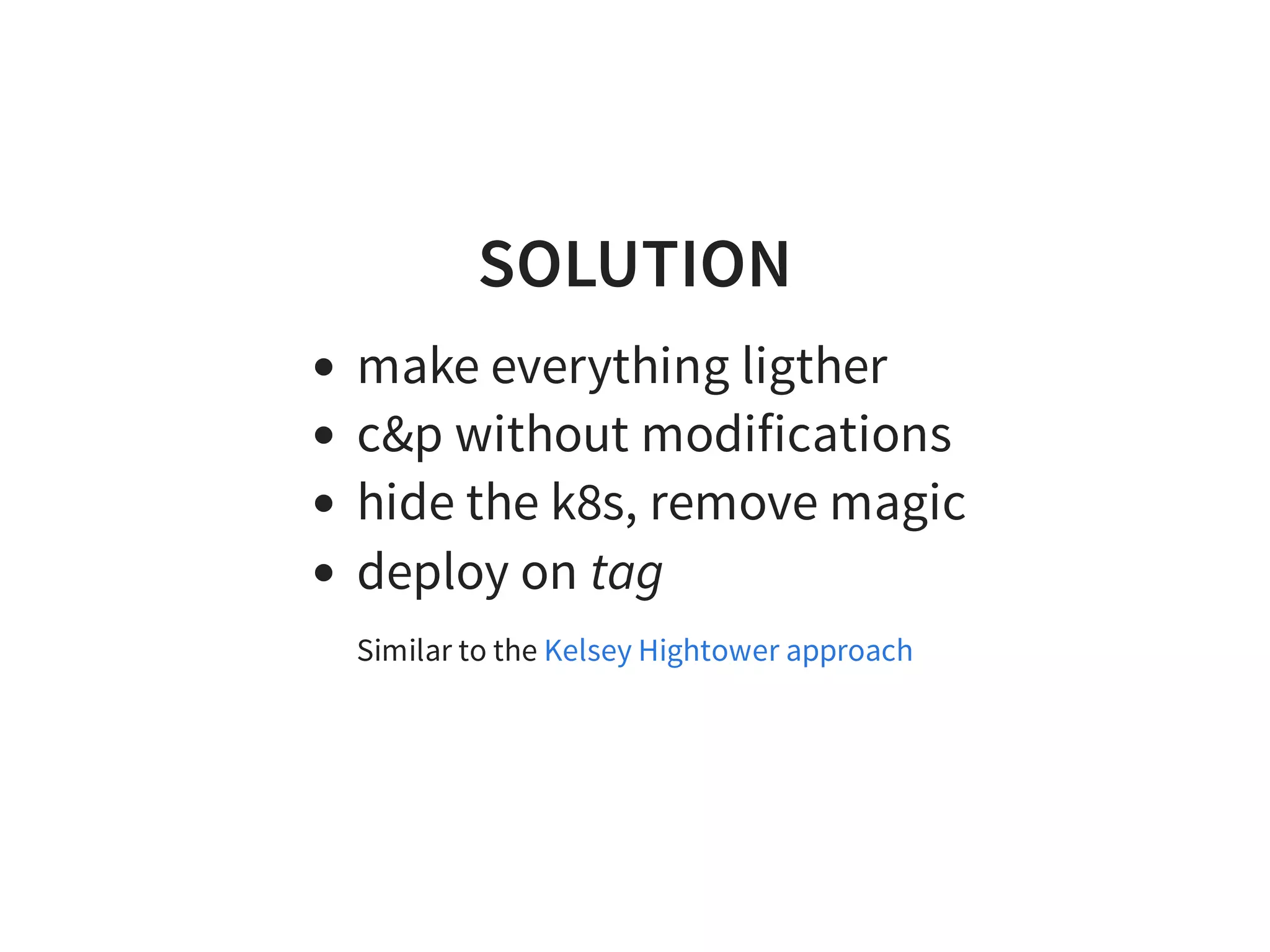

![Repo .travis.yml: language: go | go: '1.10' | services: docker | install: curl -sL https://${GITHUB_TOKEN}@raw.githubusercontent.com, if [ -f "tools/travis/install.sh" ]; then bash tools/travi | script: dep ensure, make lint, make test, if [ -z "${TRAVIS_TAG}" ]; then make snapshot; fi; | deploy: provider: script](https://image.slidesharecdn.com/index-180917195221/75/Effective-Kubernetes-Is-Kubernetes-the-new-Linux-Is-the-new-Application-Server-63-2048.jpg)
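In this `.travis.yml`, the `script` section always resolves dependencies and runs lint and tests. It builds a snapshot only when `TRAVIS_TAG` is empty, that is, for ordinary untagged builds, and leaves tagged builds to the `deploy` step, whose details are cut off on the slide. The Go sketch below restates that branching as a small, testable function. The function name `scriptSteps` is hypothetical, and the sketch only lists the commands without running them.

```go
package main

import (
	"fmt"
	"os"
)

// scriptSteps mirrors the `script` section of the .travis.yml above:
// dependency resolution, lint, and tests always run; `make snapshot` runs
// only when TRAVIS_TAG is empty, i.e. for ordinary (untagged) builds.
func scriptSteps(tag string) []string {
	steps := []string{"dep ensure", "make lint", "make test"}
	if tag == "" {
		steps = append(steps, "make snapshot")
	}
	return steps
}

func main() {
	// Travis CI sets TRAVIS_TAG to the tag name for tag builds and leaves it
	// empty otherwise.
	for _, step := range scriptSteps(os.Getenv("TRAVIS_TAG")) {
		fmt.Println(step)
	}
}
```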