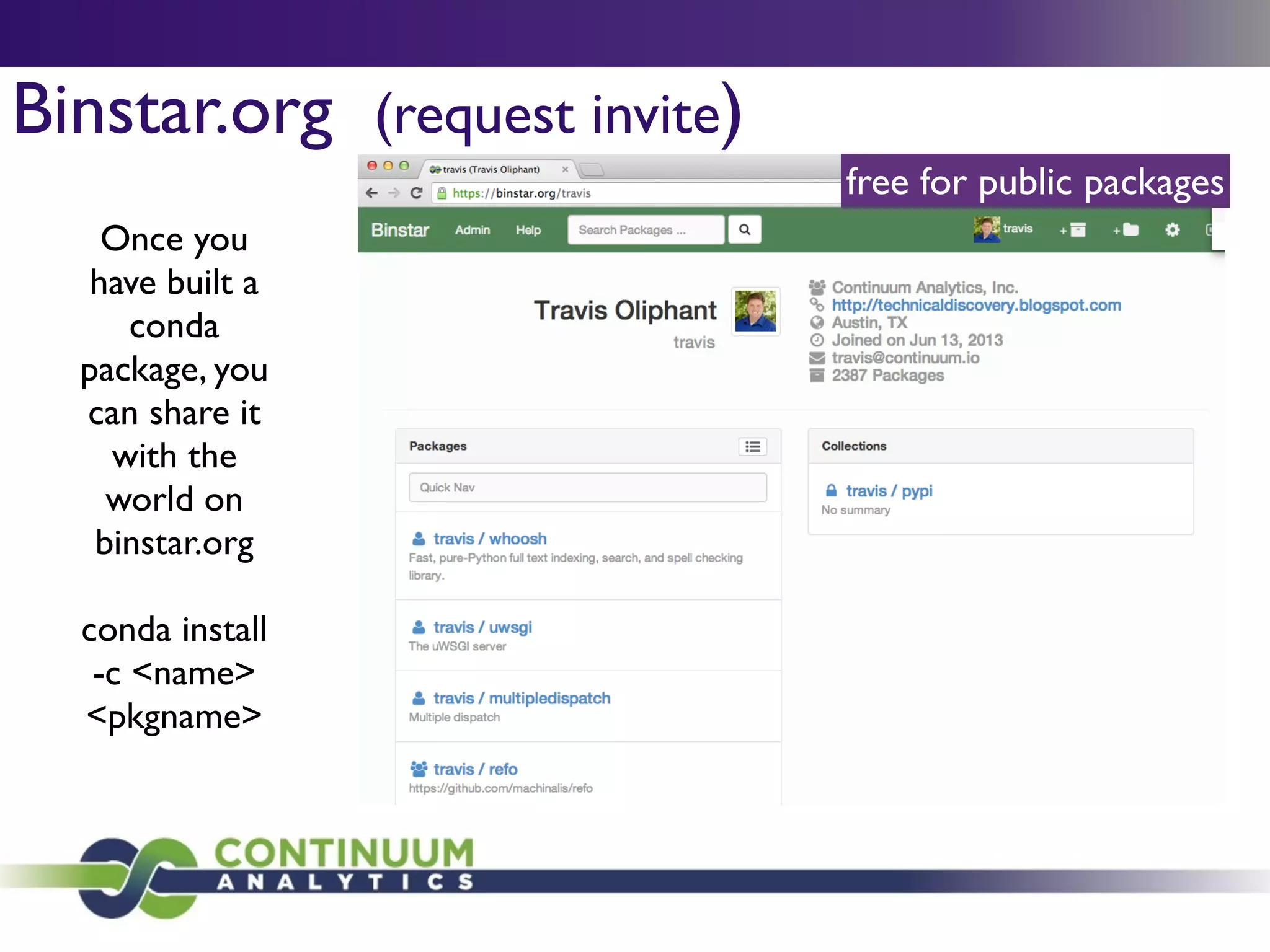

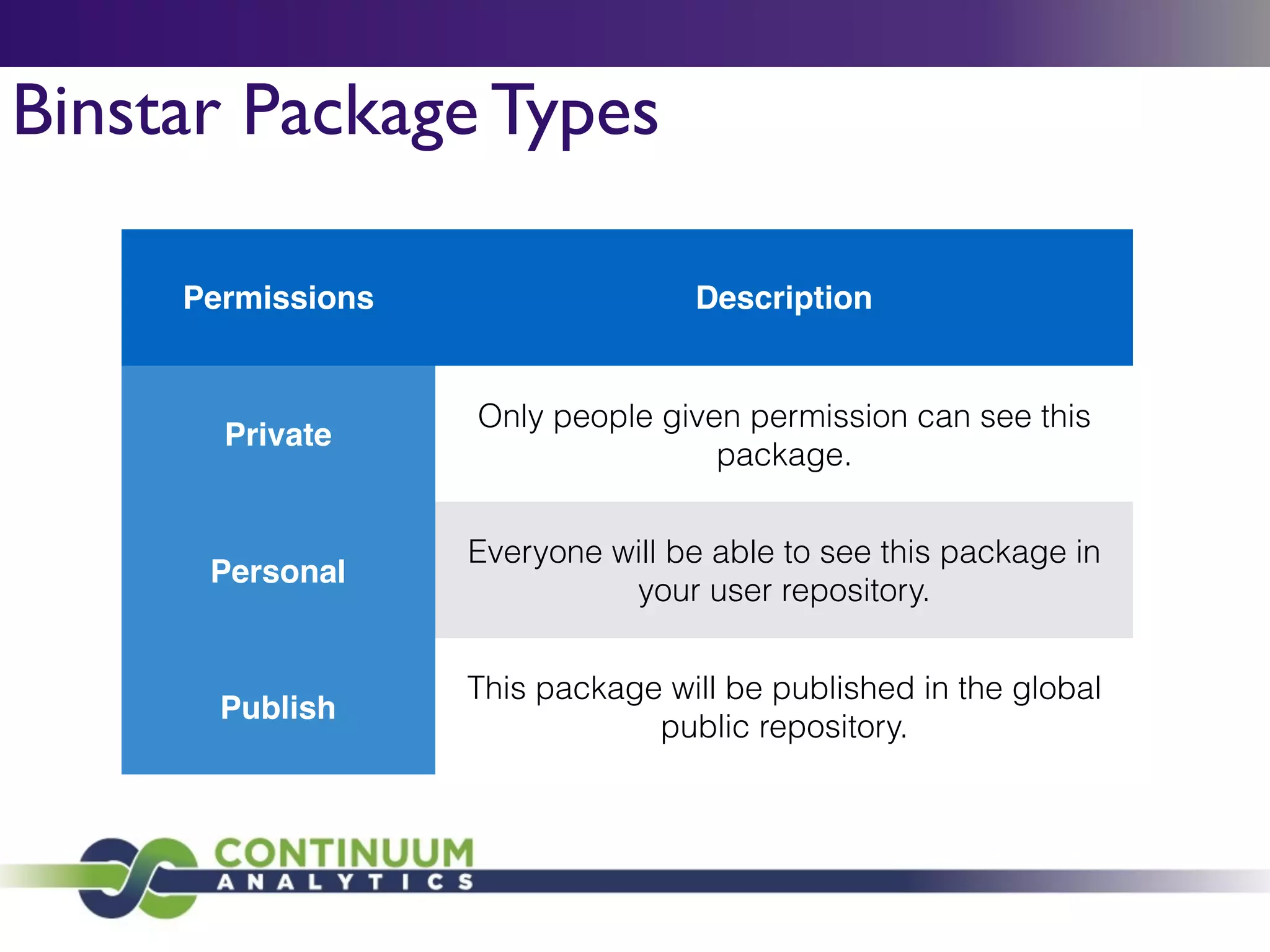

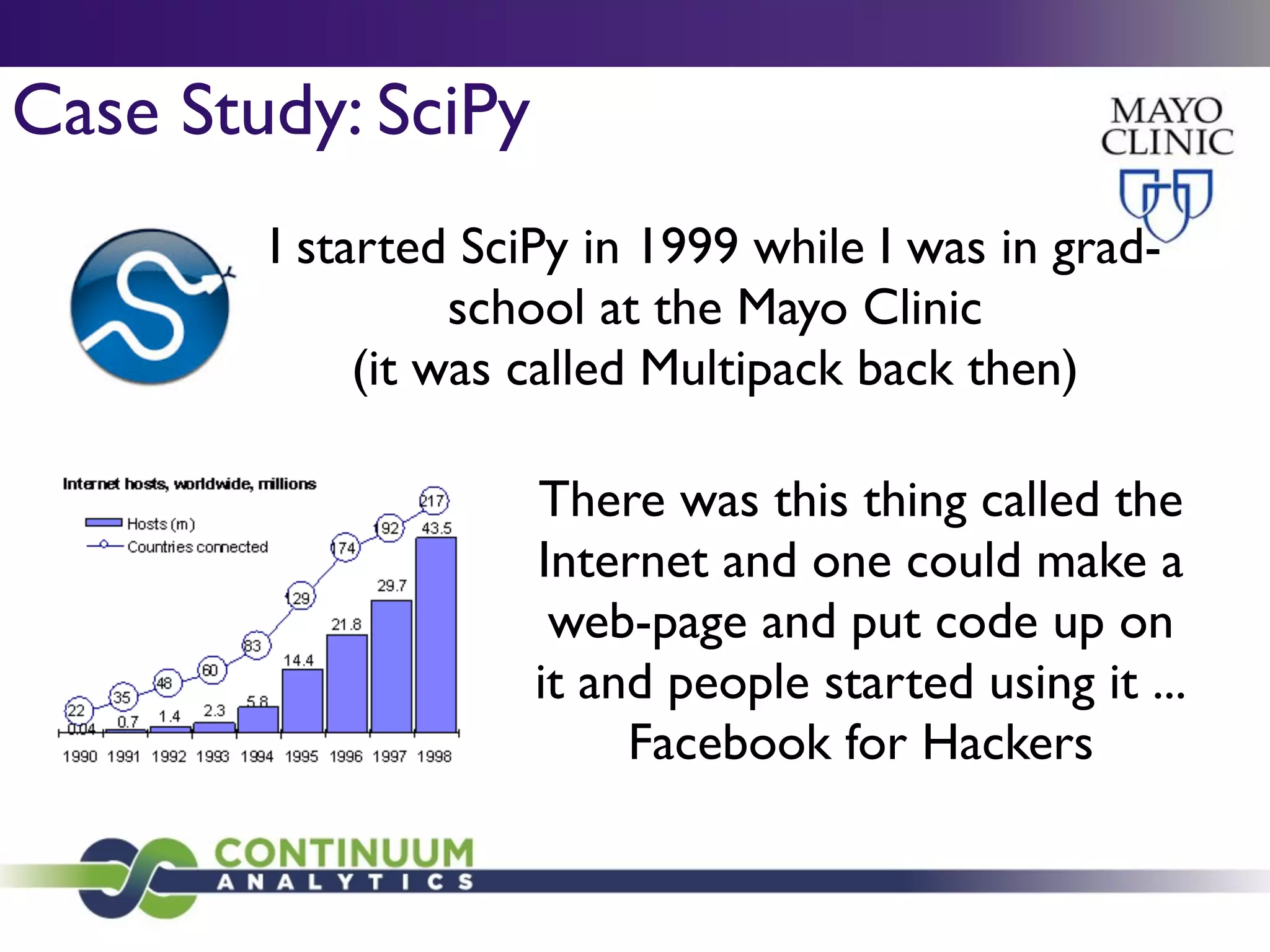

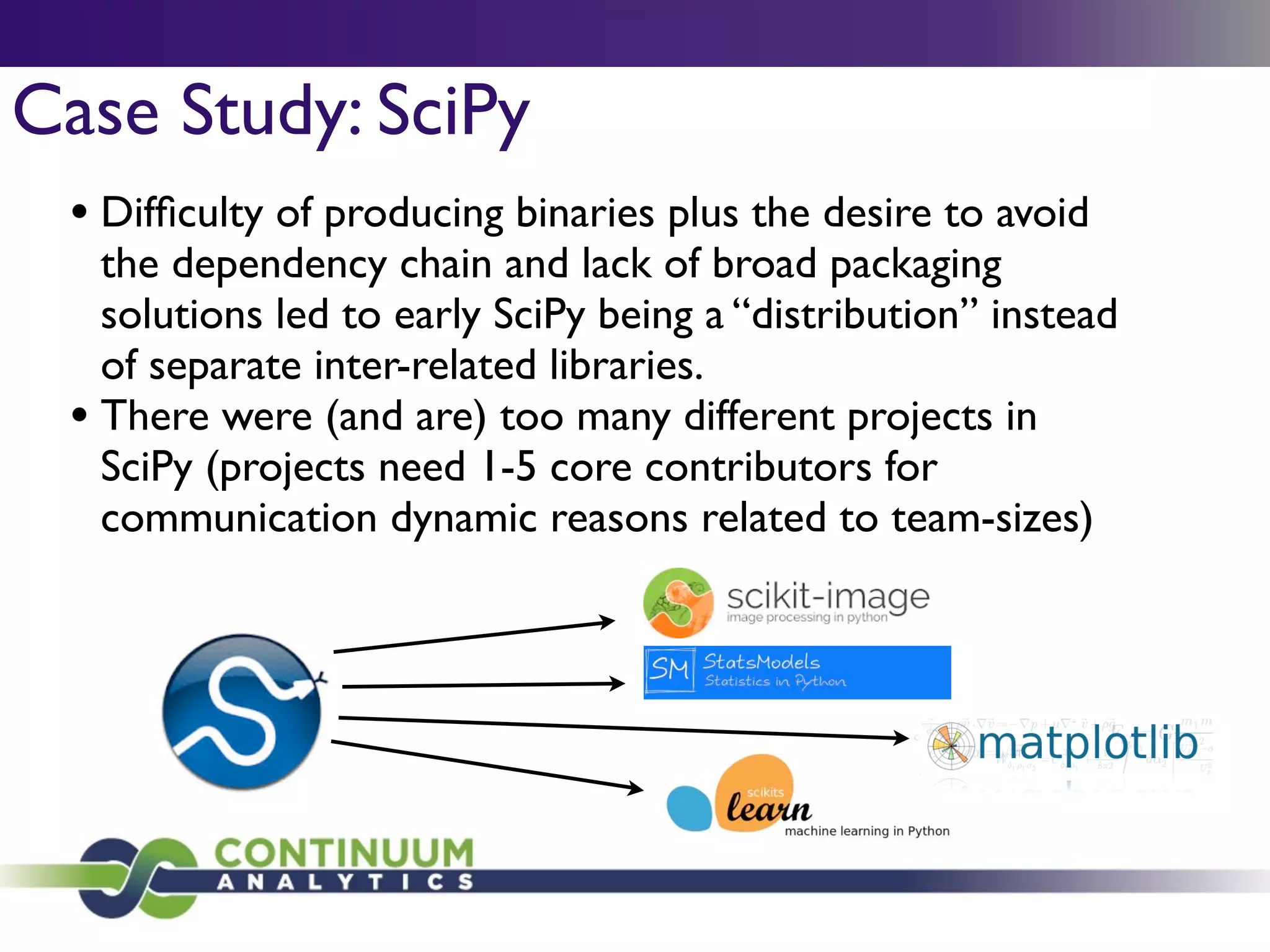

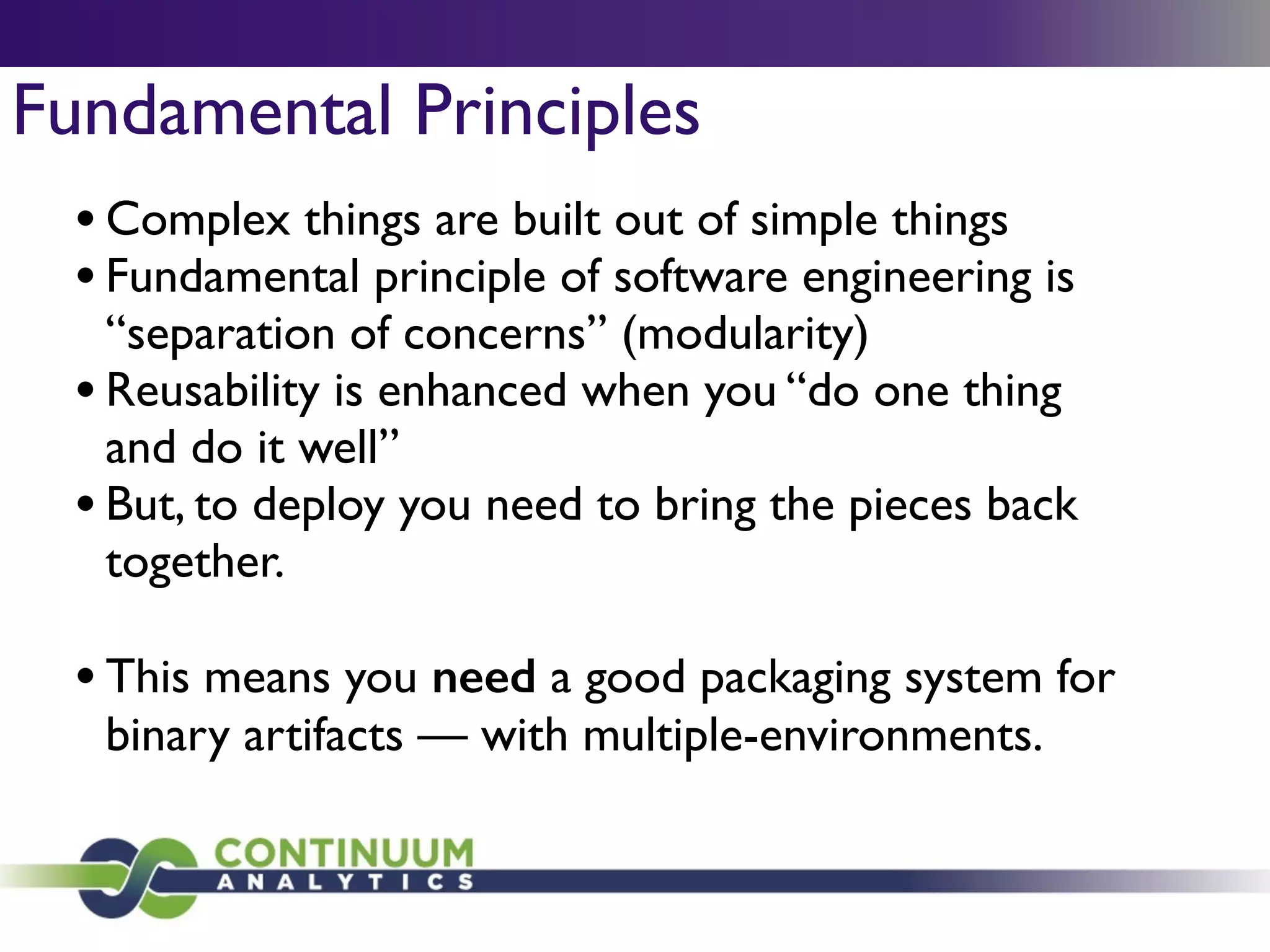

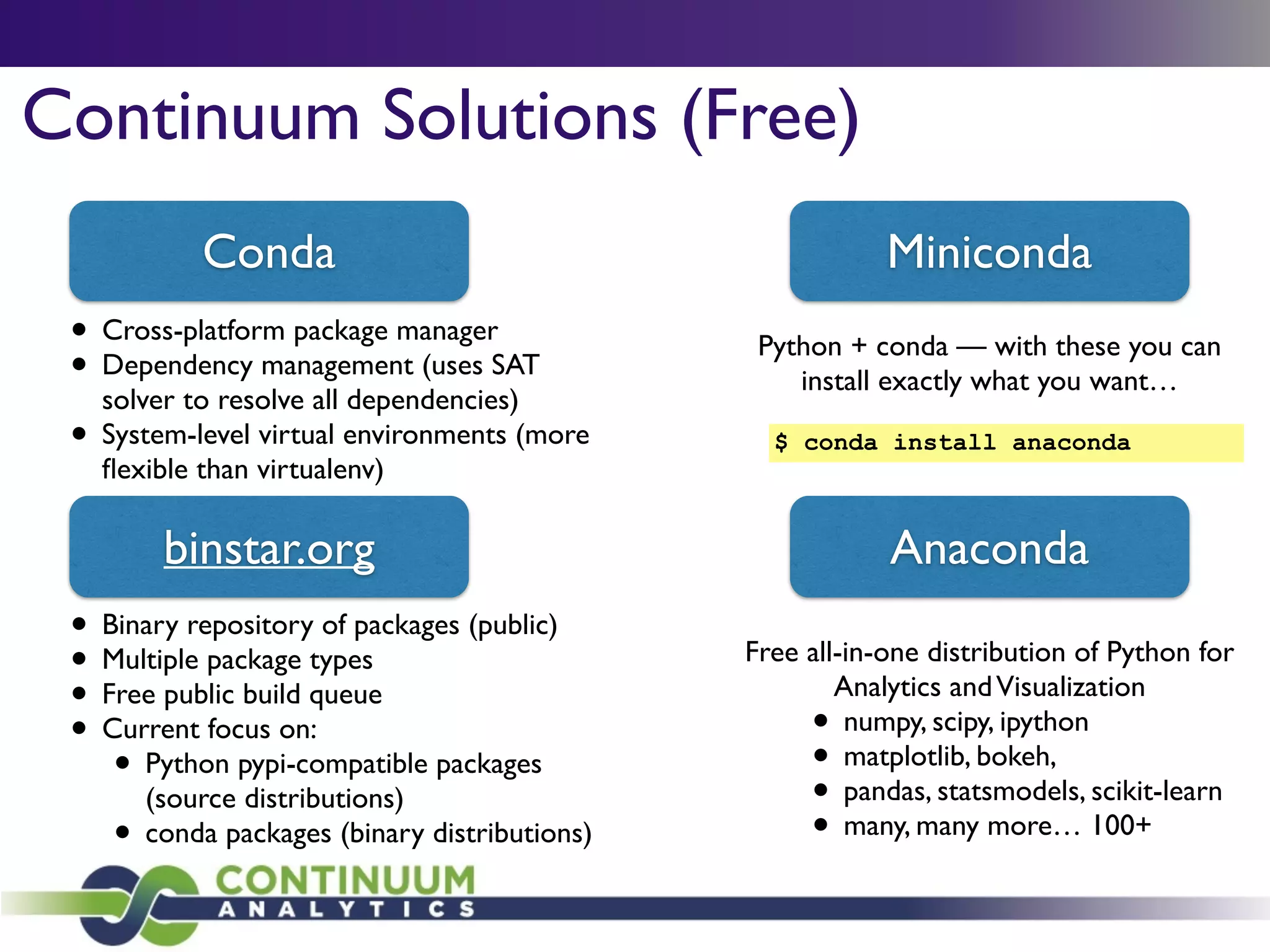

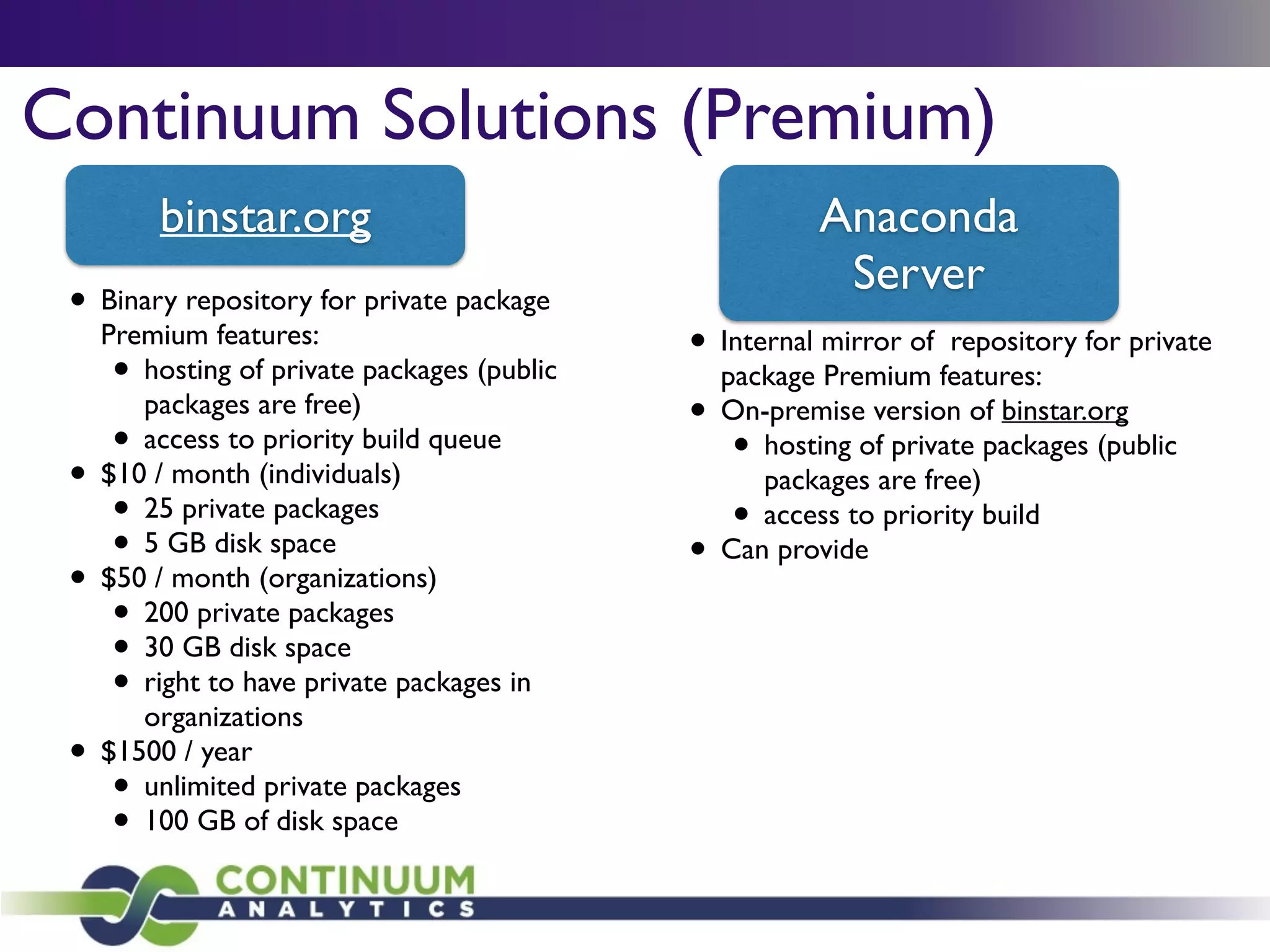

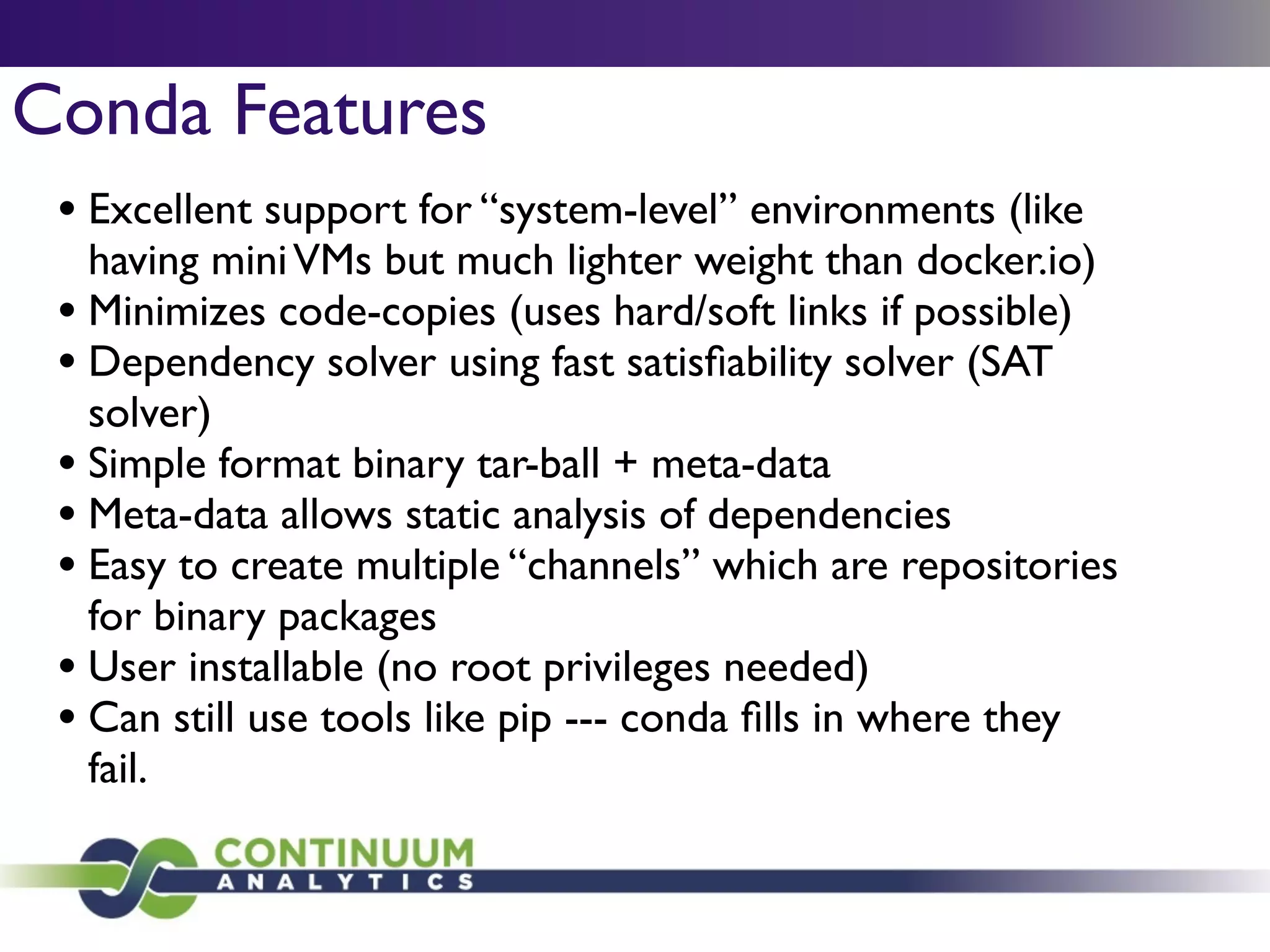

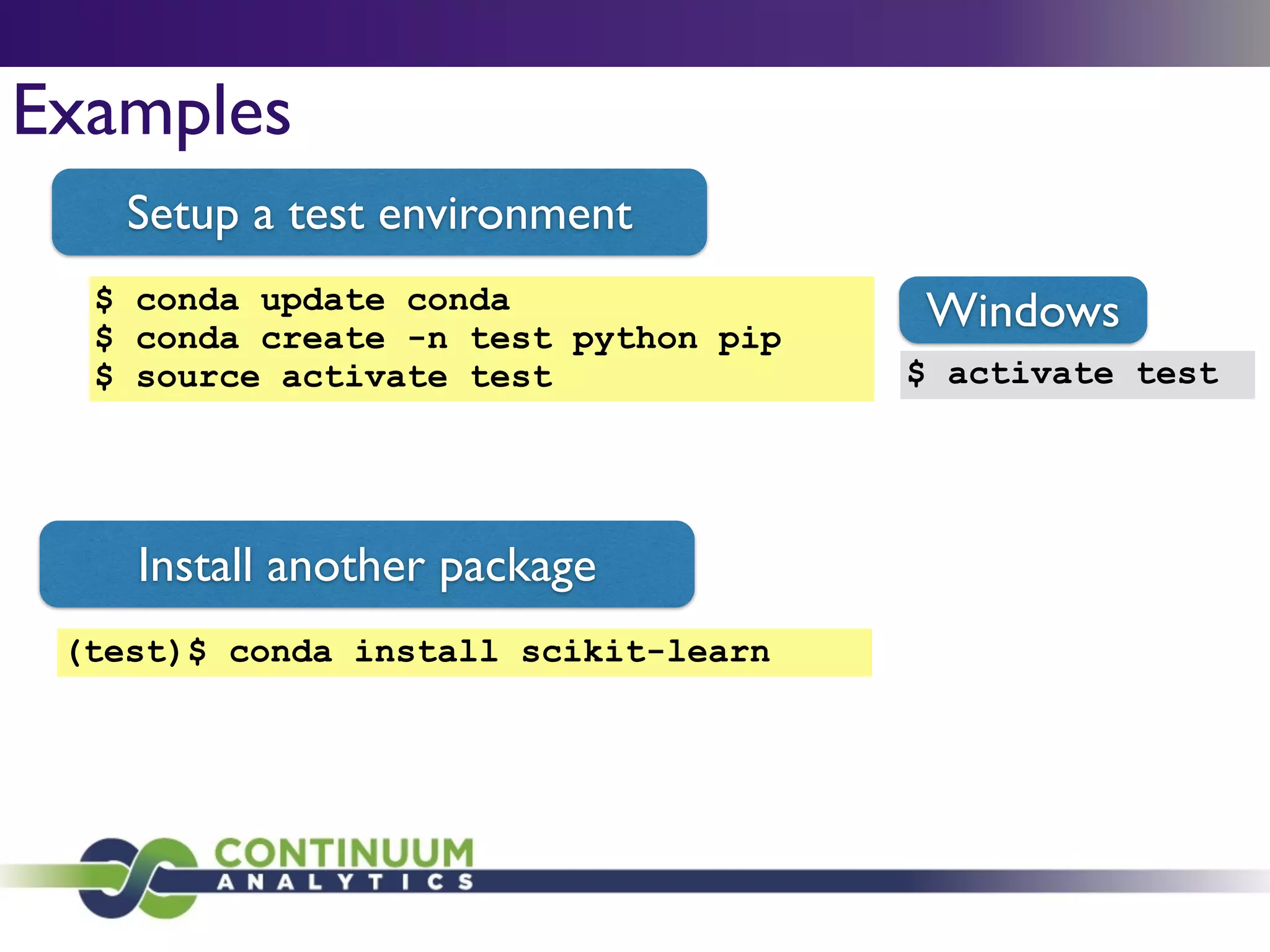

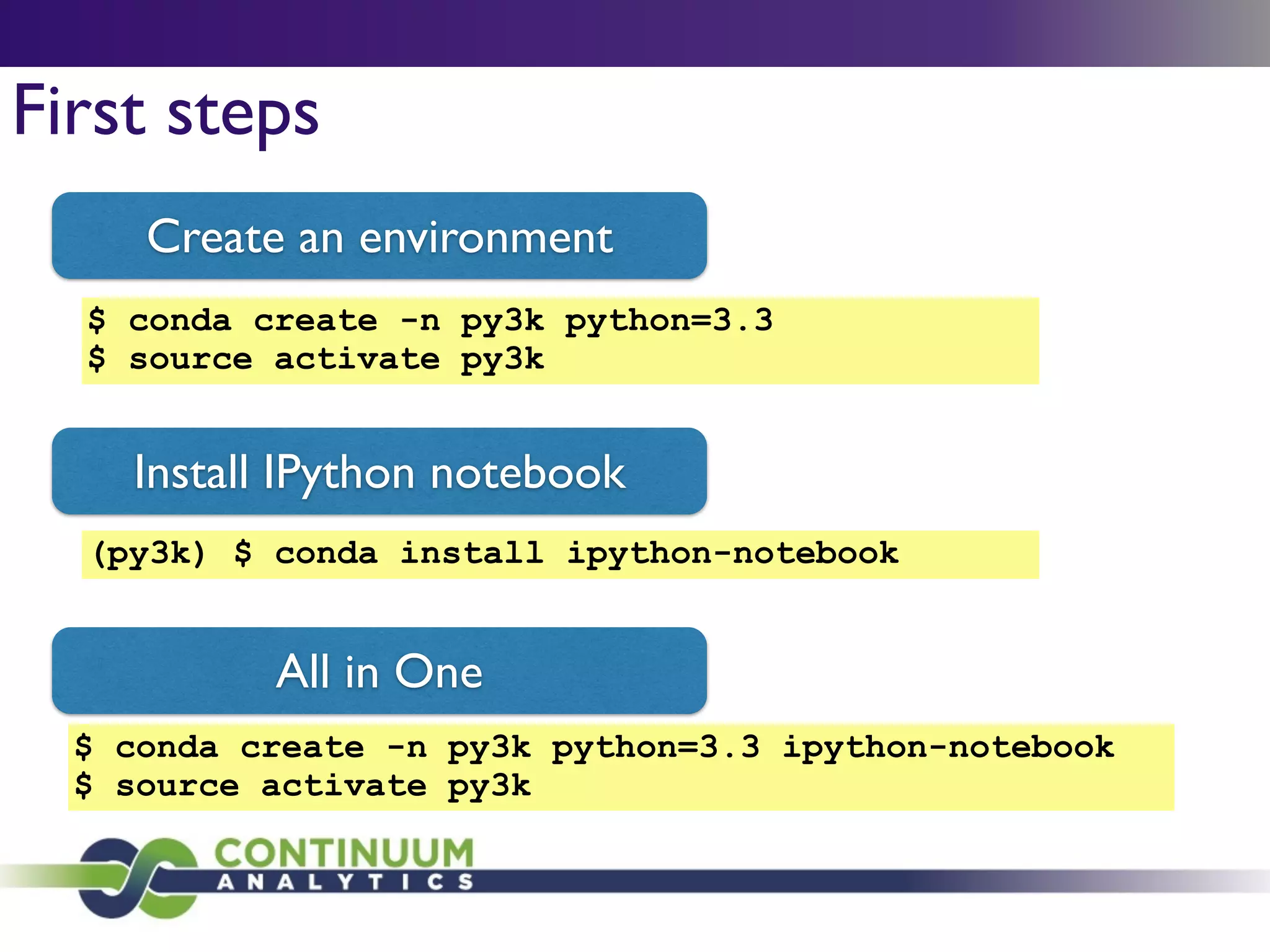

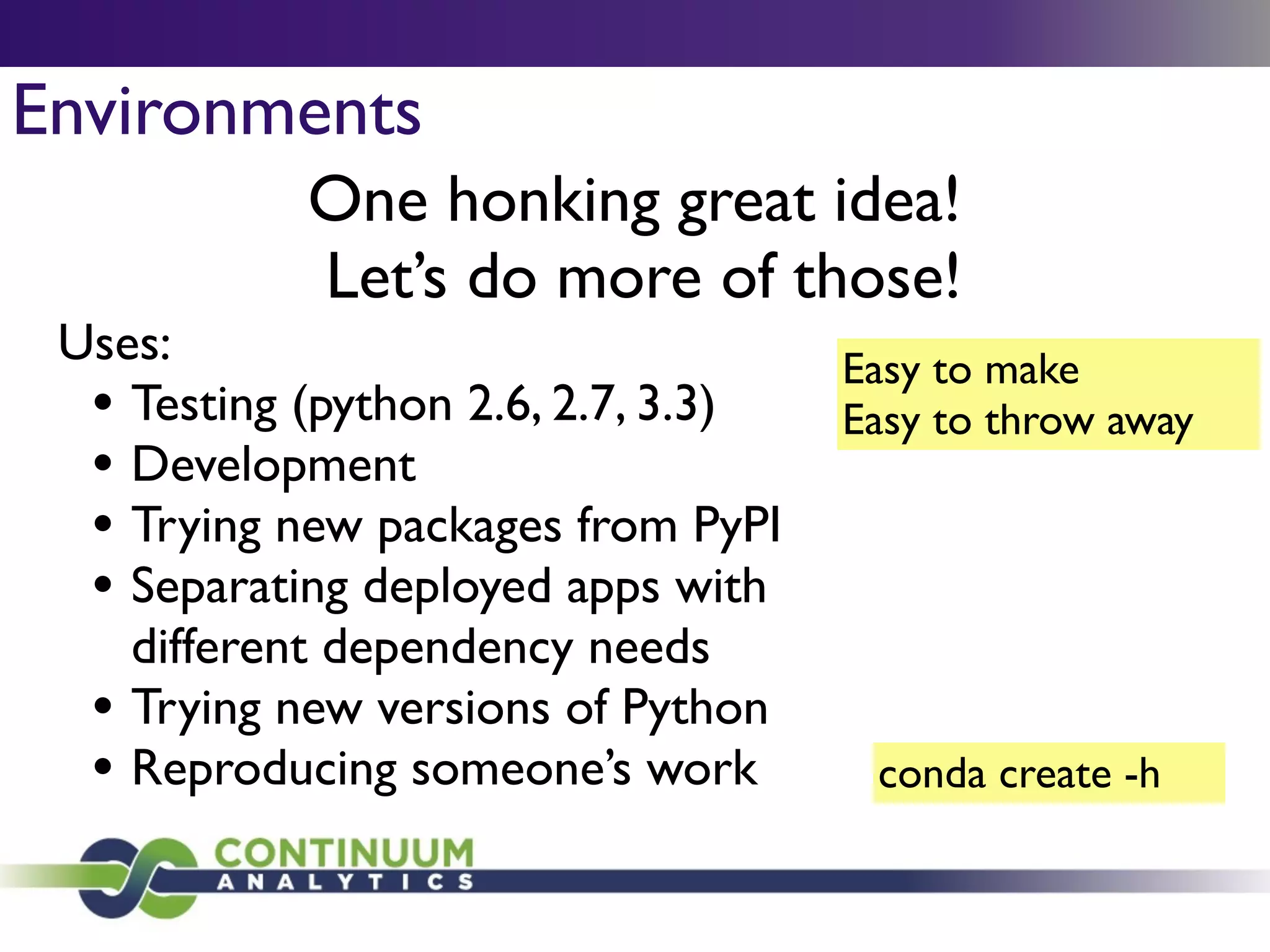

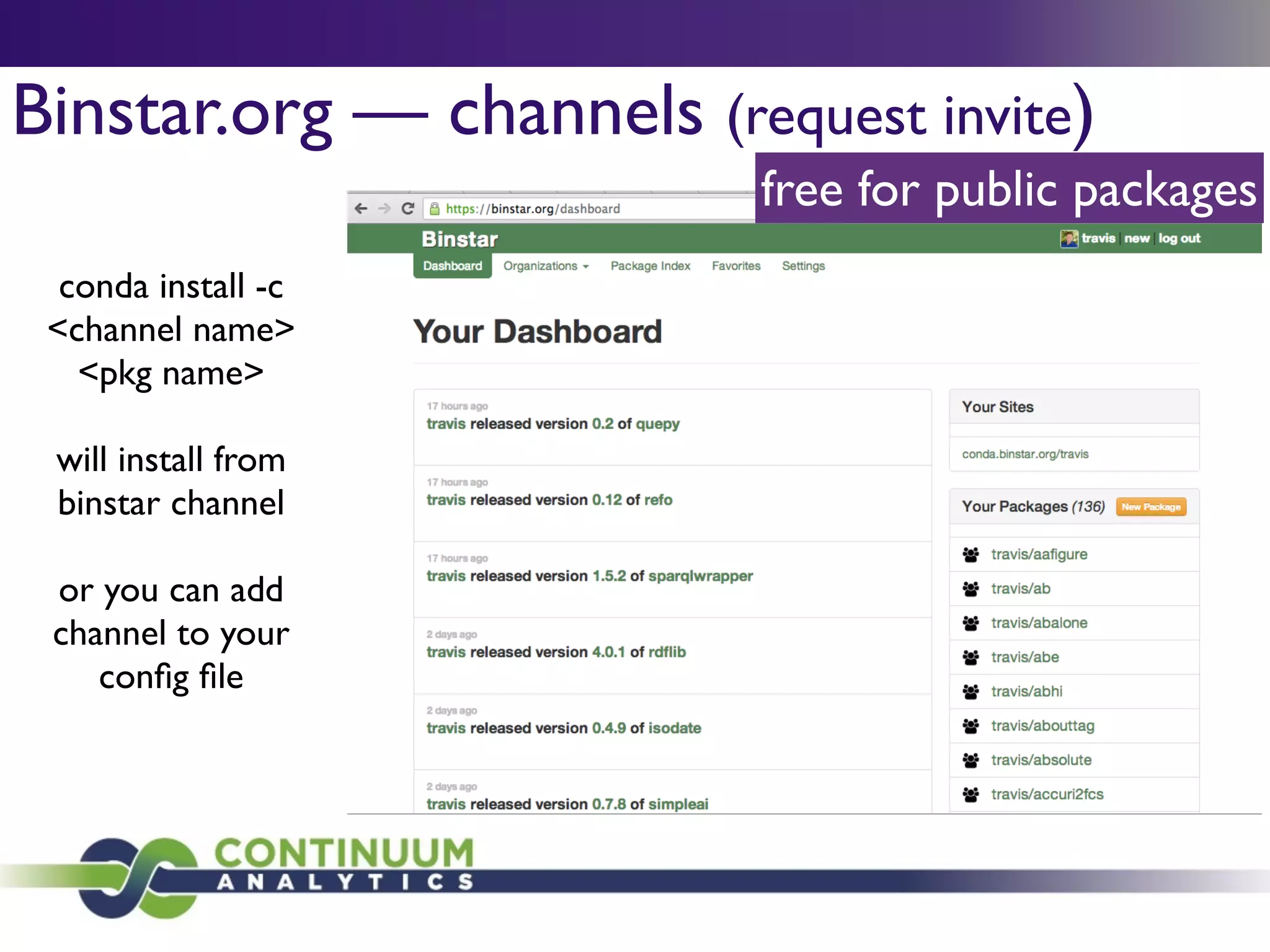

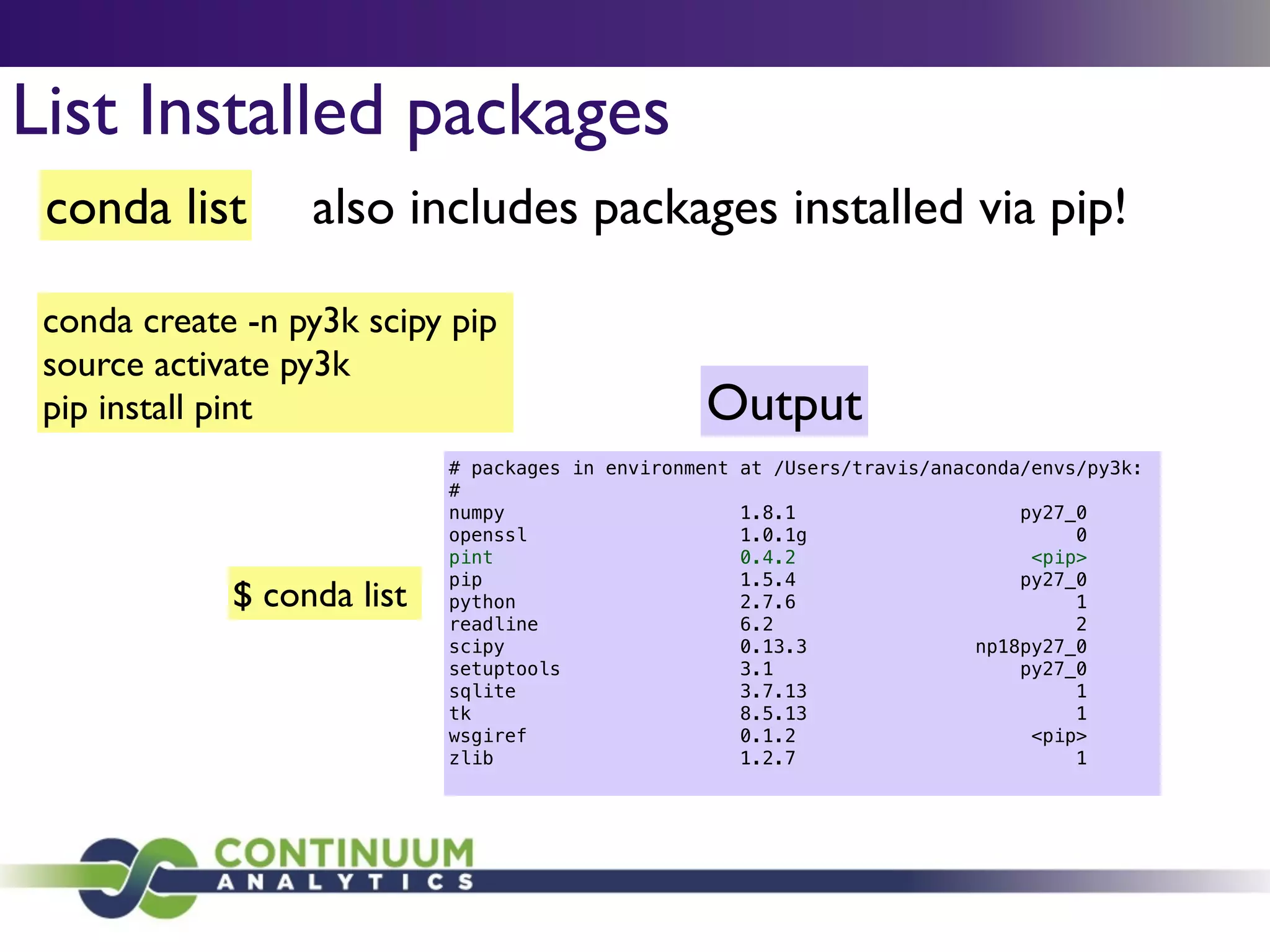

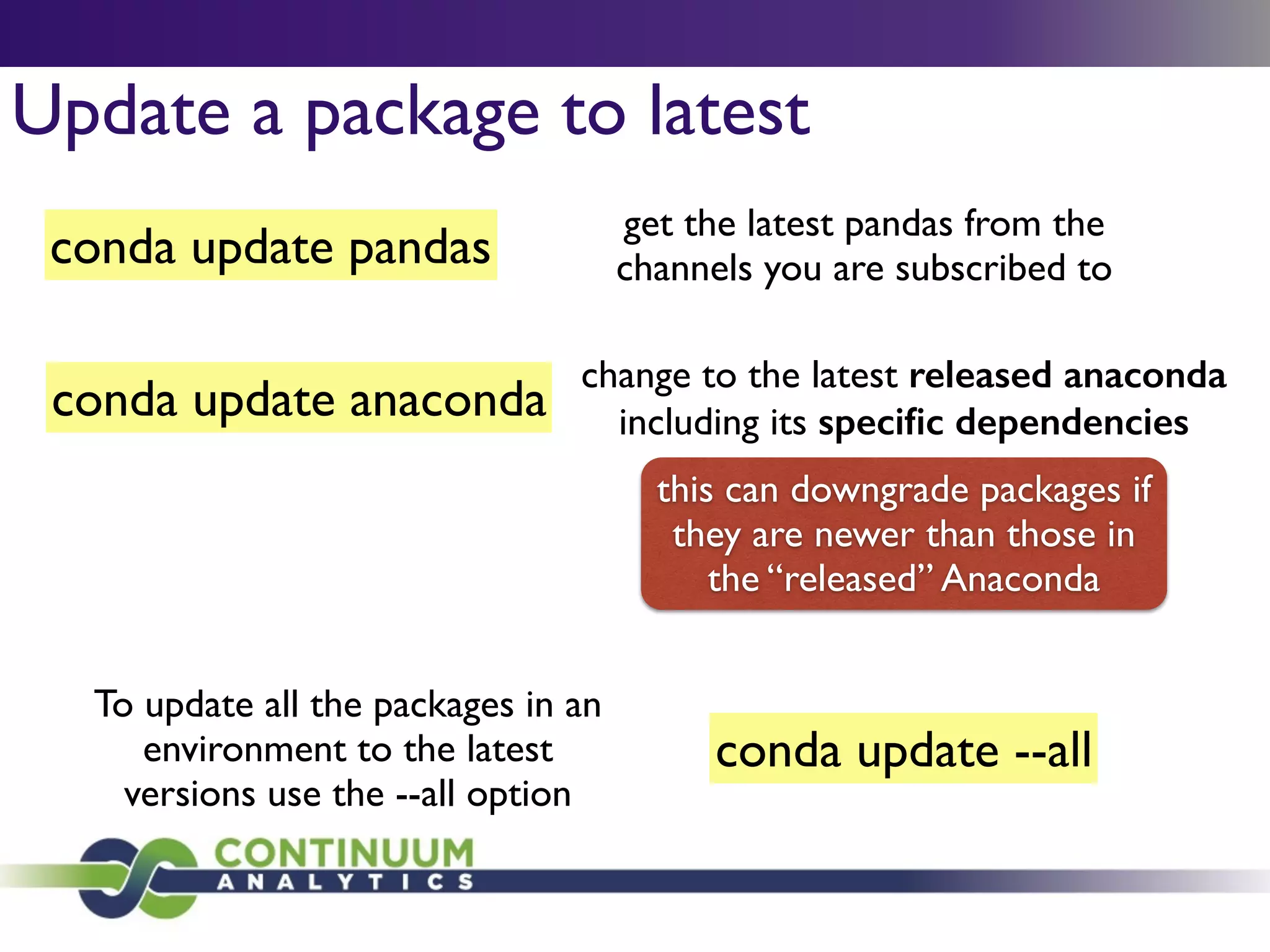

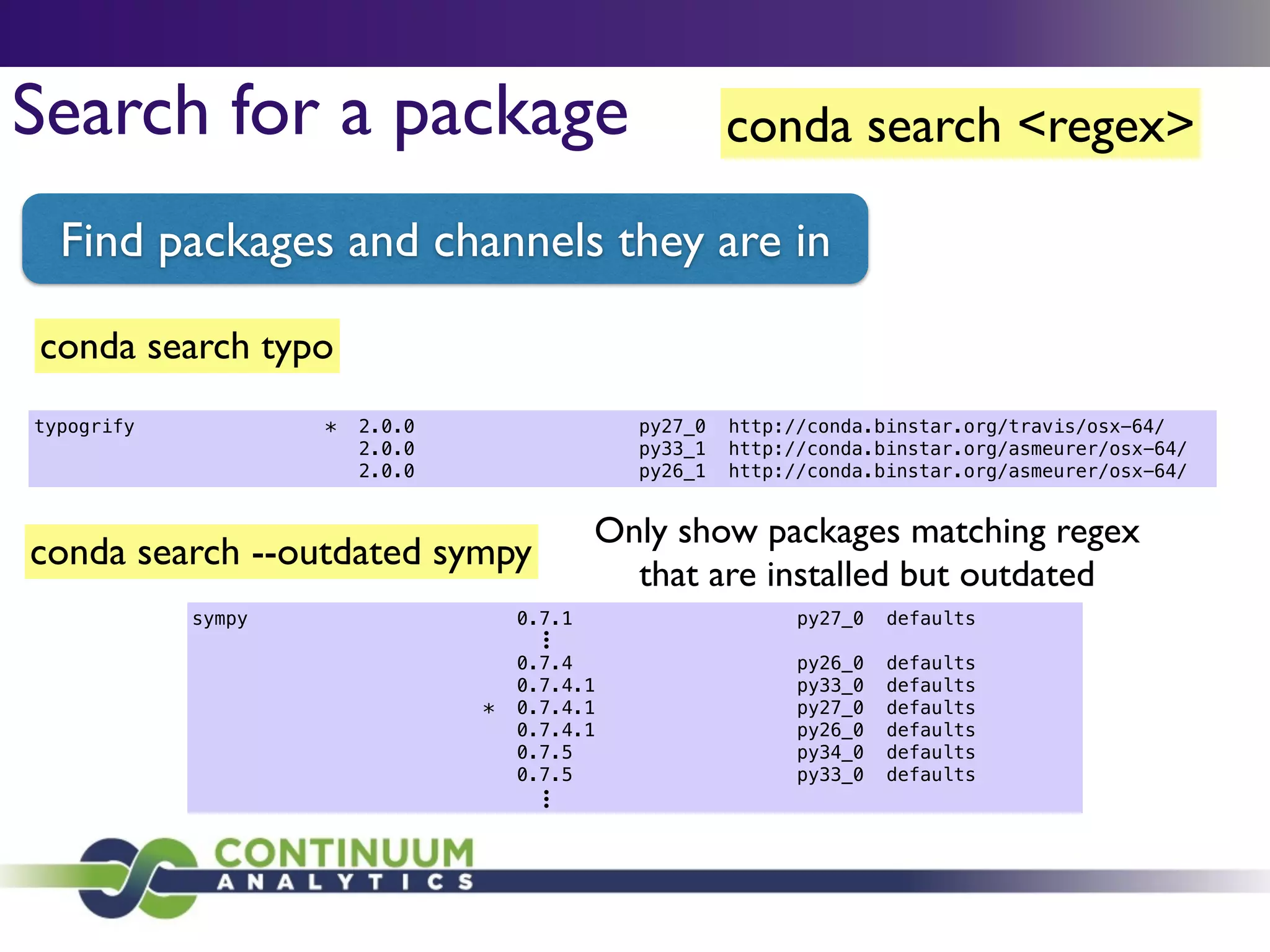

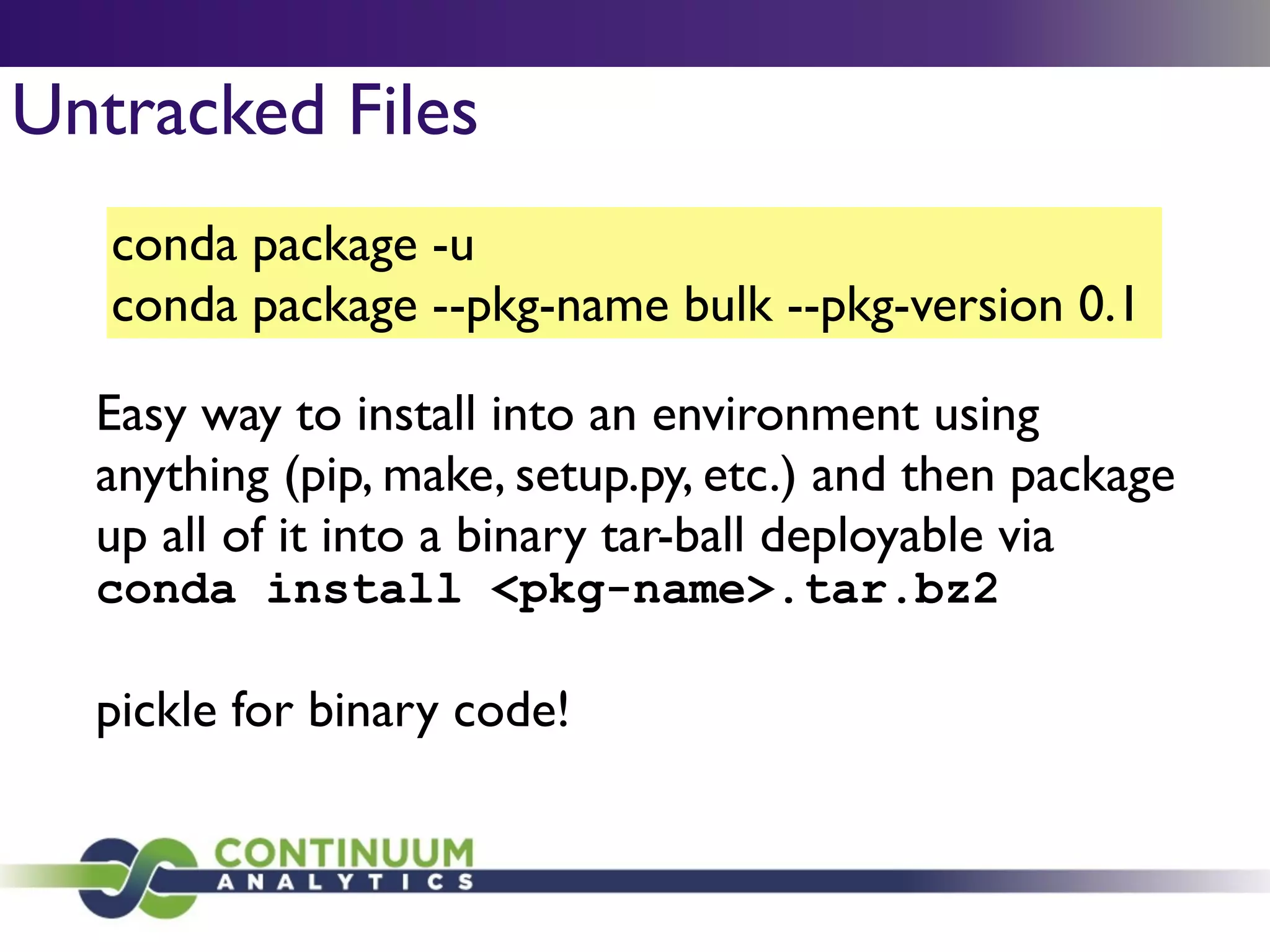

The document discusses the effective use of open source software with conda, highlighting the challenges of managing dependencies in production environments across various programming languages. It illustrates these challenges through case studies of popular libraries like SciPy and NumPy, emphasizing the need for a robust packaging and deployment solution. Conda is presented as a modern cross-platform package manager that addresses these challenges by facilitating environment management and dependency resolution, complemented by features from Binstar for distributed package hosting.

Conda configuration: a sample `.condarc` file

```yaml
# This is a sample .condarc file

# Channel locations. These override conda defaults, i.e., conda will
# search *only* the channels listed here, in the order given. Use "defaults" to
# automatically include all default channels.
channels:
  - defaults
  - http://some.custom/channel

# Proxy settings
# http://[username]:[password]@[server]:[port]
proxy_servers:
  http: http://user:pass@corp.com:8080
  https: https://user:pass@corp.com:8080

envs_dirs:
  - /opt/anaconda/envs
  - /home/joe/my-envs

pkgs_dirs:
  - /home/joe/user-pkg-cache
  - /opt/system/pkgs

changeps1: False

# binstar.org upload (not defined here means ask)
binstar_upload: True
```

Scripting interface:

```
conda config --add KEY VALUE
conda config --remove-key KEY
conda config --get KEY
conda config --set KEY BOOL
conda config --remove KEY VALUE
```

![Conda configuration](https://image.slidesharecdn.com/condawebinar-140410033441-phpapp01/75/Effectively-using-Open-Source-with-conda-33-2048.jpg)
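To make the five scripting commands concrete, here is a toy Python model that applies them to a plain dict standing in for a parsed `.condarc`. It is an illustrative sketch, not conda's implementation: every function name (`config_add`, `search_order`, and so on) is hypothetical. It assumes that `--add` puts a value at the front of a list key and skips duplicates, and that a `defaults` entry in `channels` expands in place to the default channels, whose names are placeholders here.

```python
# Toy model of the `conda config` scripting interface (illustrative sketch only).
# NOT conda's implementation: a plain dict stands in for a parsed .condarc, and
# all function names below are hypothetical.
from typing import Any, Dict, List

Config = Dict[str, Any]


def config_add(rc: Config, key: str, value: str) -> None:
    """Like `conda config --add KEY VALUE`: prepend VALUE to list KEY."""
    items: List[str] = rc.setdefault(key, [])
    if value not in items:  # assumed: list keys hold no duplicates
        items.insert(0, value)


def config_remove(rc: Config, key: str, value: str) -> None:
    """Like `conda config --remove KEY VALUE`: drop VALUE from list KEY."""
    items: List[str] = rc.get(key, [])
    if value not in items:
        raise KeyError(f"{value!r} is not in {key!r}")
    items.remove(value)


def config_remove_key(rc: Config, key: str) -> None:
    """Like `conda config --remove-key KEY`: delete KEY and its value."""
    if key not in rc:
        raise KeyError(key)
    del rc[key]


def config_get(rc: Config, key: str) -> Any:
    """Like `conda config --get KEY`: return the value, or None if unset."""
    return rc.get(key)


def config_set(rc: Config, key: str, value: bool) -> None:
    """Like `conda config --set KEY BOOL`: store a boolean setting."""
    rc[key] = value


def search_order(rc: Config, default_channels: List[str]) -> List[str]:
    """Channels in search order; the entry 'defaults' expands in place."""
    order: List[str] = []
    for channel in rc.get("channels", ["defaults"]):
        expanded = default_channels if channel == "defaults" else [channel]
        for name in expanded:
            if name not in order:
                order.append(name)
    return order


# Usage, with placeholder names standing in for the real default channels.
rc: Config = {"channels": ["defaults"]}
config_add(rc, "channels", "http://some.custom/channel")
config_set(rc, "changeps1", False)
print(config_get(rc, "channels"))
# ['http://some.custom/channel', 'defaults']
print(search_order(rc, ["<default-1>", "<default-2>"]))
# ['http://some.custom/channel', '<default-1>', '<default-2>']
```

Under these prepend semantics, a channel added with `--add` is searched before the ones already listed, whereas the sample `.condarc` lists `defaults` first and the custom channel second.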