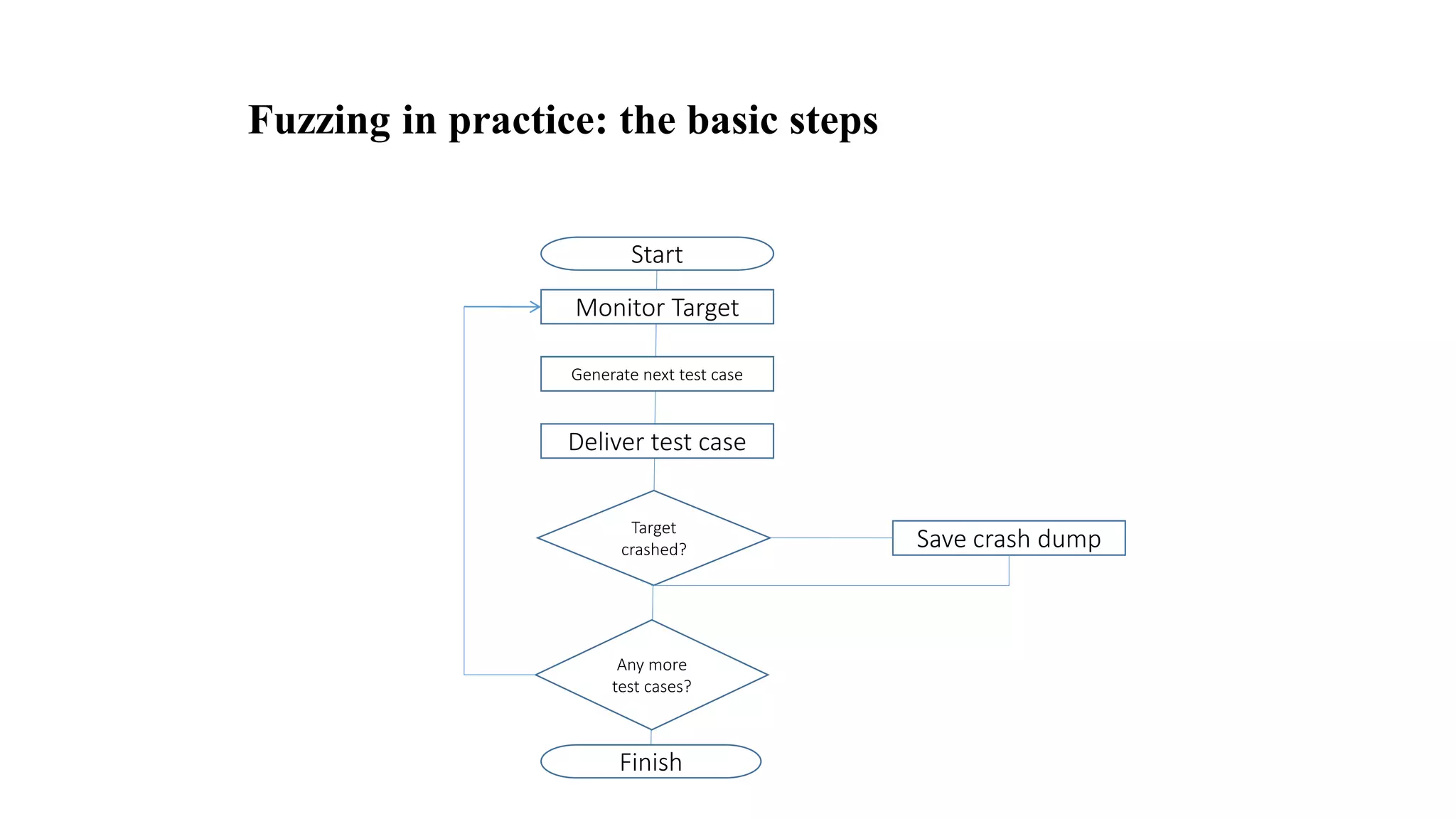

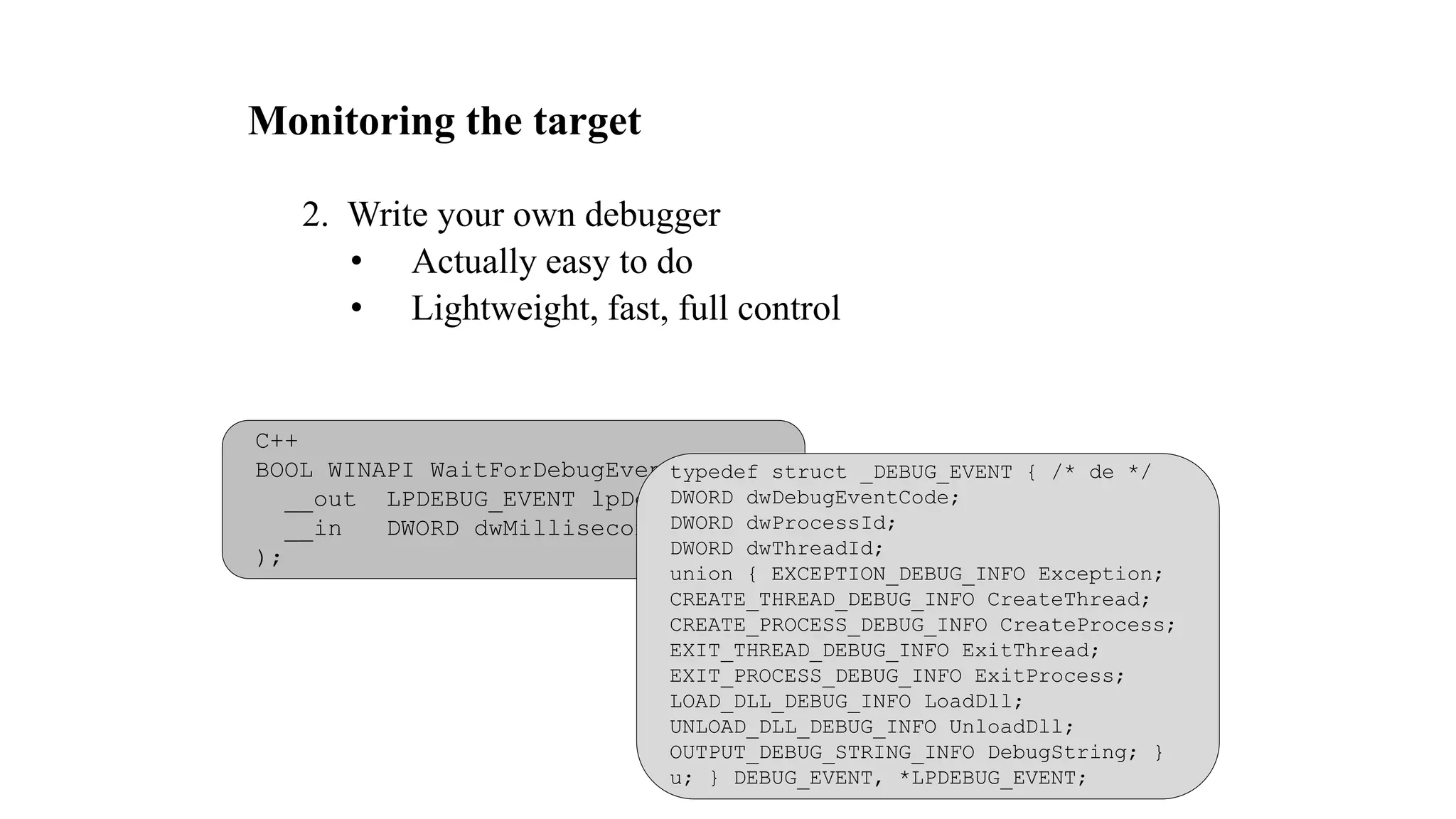

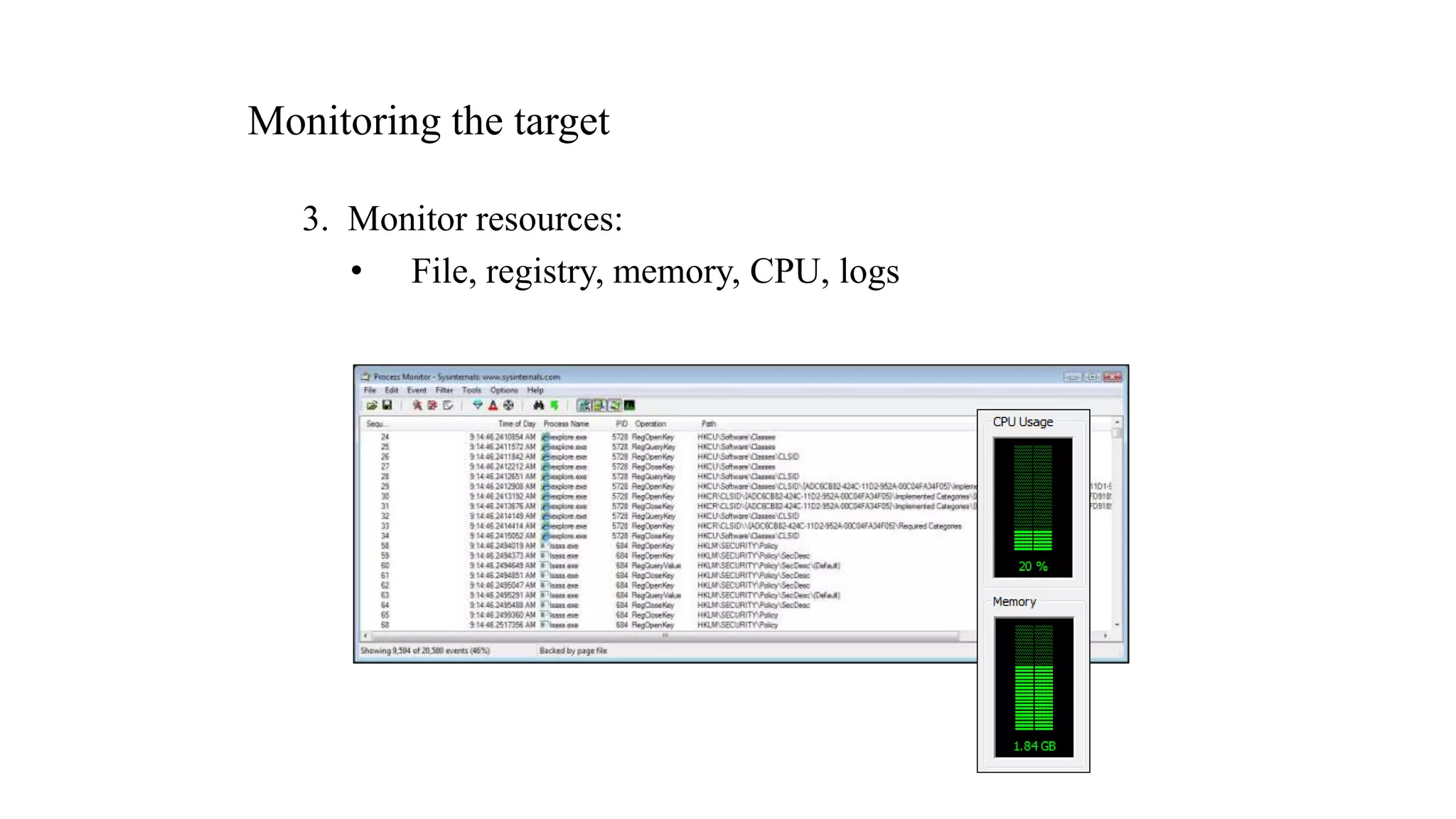

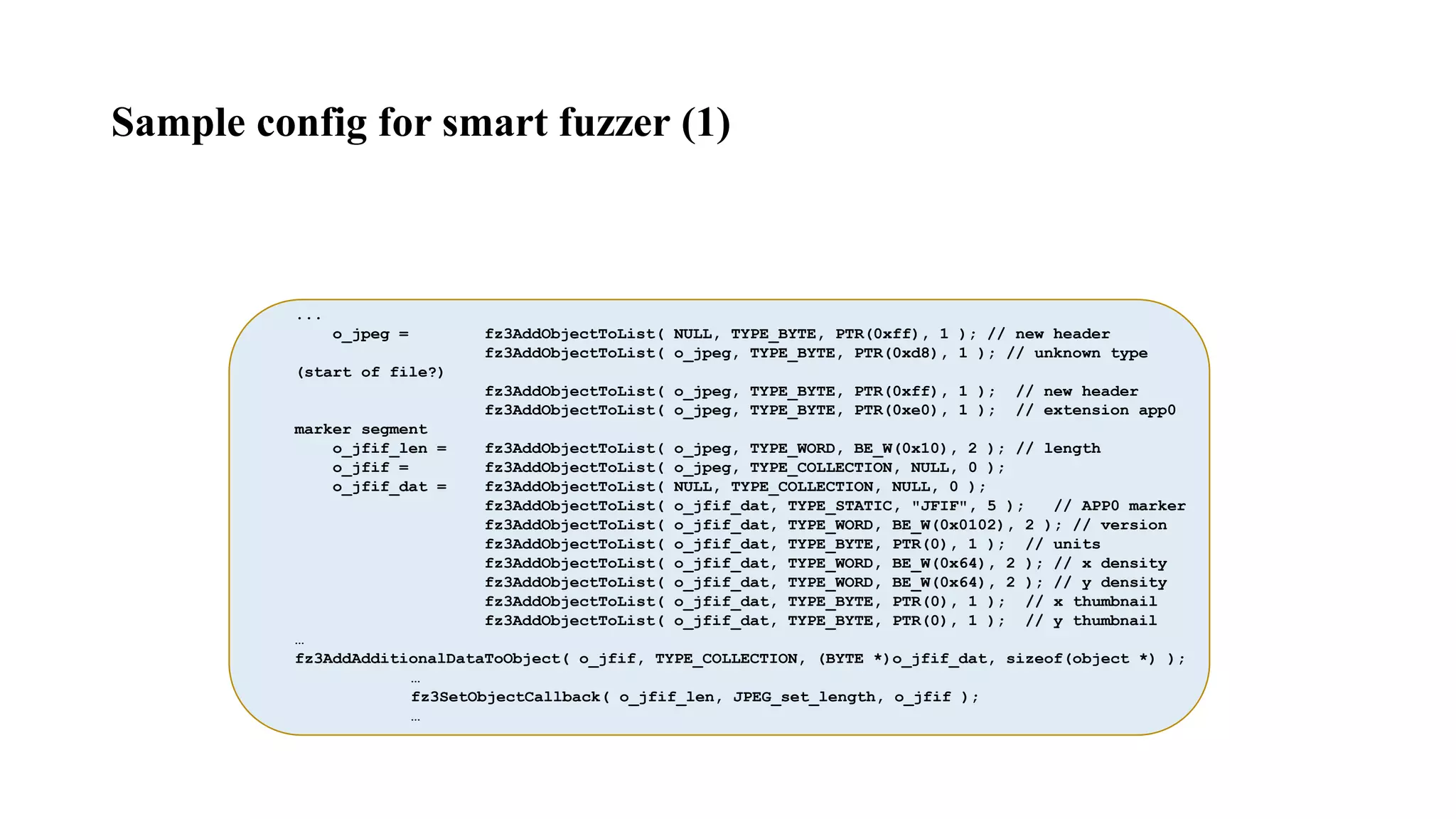

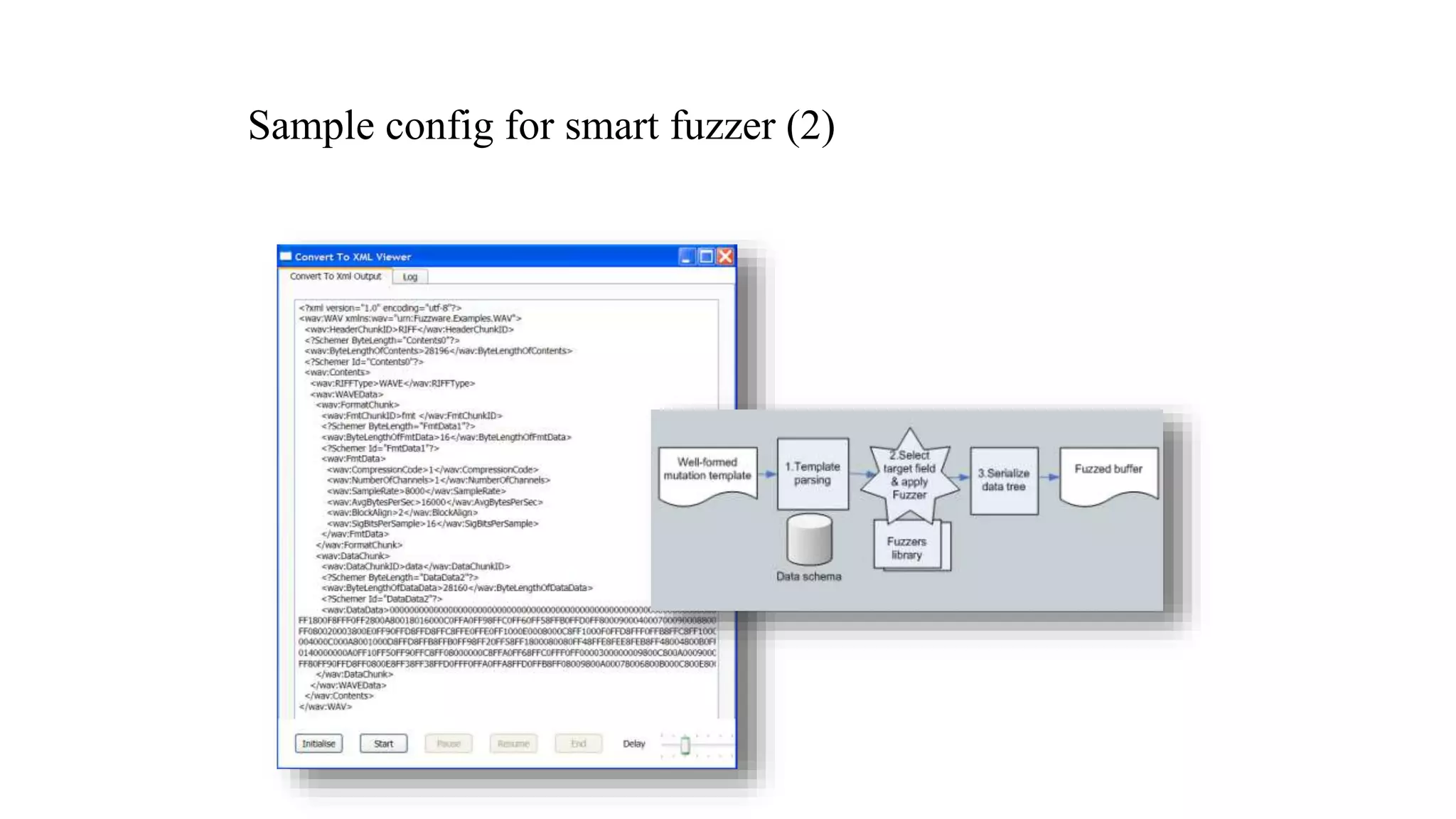

The document discusses fuzzing techniques for finding software vulnerabilities. It defines fuzzing as automatically feeding malformed data to a program to trigger flaws, and describes the three stages of a basic fuzzing run: generating fuzzed test cases, delivering them to the target, and monitoring the target for crashes. It also contrasts dumb and smart fuzzing approaches and covers how to determine whether the issues found are exploitable.
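The deliver-and-monitor step can be sketched in a few lines of C. This is a minimal sketch, assuming a POSIX system and an in-process target; `toy_parse`, `target_fn`, and `run_in_child` are hypothetical names introduced for illustration, not part of the original slides. Each test case runs in a forked child so that a crash kills only the child, and the parent reads the terminating signal from `waitpid`.

```c
#include <signal.h>
#include <stddef.h>
#include <stdlib.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* A target consumes one test case; a "crash" means dying on a signal. */
typedef void (*target_fn)(const unsigned char *data, size_t len);

/* Hypothetical toy target: aborts when the input starts with "FUZZ". */
static void toy_parse(const unsigned char *data, size_t len) {
    if (len >= 4 && data[0] == 'F' && data[1] == 'U' &&
        data[2] == 'Z' && data[3] == 'Z') {
        abort(); /* stands in for a real memory-safety bug */
    }
}

/* Deliver one test case in a child process and monitor the outcome.
 * Returns the terminating signal number, 0 if the child exited
 * normally, or -1 if fork or waitpid failed. */
static int run_in_child(target_fn target, const unsigned char *data,
                        size_t len) {
    pid_t pid = fork();
    if (pid < 0) return -1;
    if (pid == 0) {          /* child: run the target, then exit cleanly */
        target(data, len);
        _exit(0);
    }
    int status = 0;          /* parent: wait and inspect how the child ended */
    if (waitpid(pid, &status, 0) < 0) return -1;
    return WIFSIGNALED(status) ? WTERMSIG(status) : 0;
}
```

Calling `run_in_child(toy_parse, input, len)` for each generated input and logging every nonzero result gives a basic crash monitor. A real harness would usually also add a timeout and save the crashing input to disk.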

![What are buffer overflows?](https://image.slidesharecdn.com/3-190819093150/75/Exploitation-techniques-and-fuzzing-16-2048.jpg)

• Suppose a web server contains a function:

    void func(char *str) {
        char buf[128];
        strcpy(buf, str);
        do_something(buf);
    }

• When the function is invoked the stack looks like:

    str | ret-addr | sfp | buf | <- top of stack

• What if *str is 136 bytes long? After strcpy:

    str | *str (fills buf and runs on over sfp and ret-addr) | <- top of stack

  (136 bytes is the 128 bytes of buf plus 8 more, which covers sfp and ret-addr if each is 4 bytes, as on 32-bit x86.)
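The overflow in the slide above comes from `strcpy` writing `strlen(str) + 1` bytes regardless of the size of `buf`. A minimal sketch of a length-checked variant follows; `copy_checked` and `func_checked` are hypothetical names used only for illustration, and the call to `do_something` is left as a comment because the slide does not define it.

```c
#include <stddef.h>
#include <string.h>

/* Copy src into dst only if it fits, including the terminating NUL.
 * Returns 0 on success, -1 if the copy would overflow dst. */
static int copy_checked(char *dst, size_t dst_size, const char *src) {
    size_t need = strlen(src) + 1; /* bytes strcpy would have written */
    if (need > dst_size) return -1;
    memcpy(dst, src, need);
    return 0;
}

/* Bounded variant of func(): rejects oversized input instead of
 * letting it run past buf into sfp and ret-addr. */
static int func_checked(const char *str) {
    char buf[128];
    if (copy_checked(buf, sizeof buf, str) != 0) return -1;
    /* do_something(buf) would go here */
    return 0;
}
```

With this check, the 136-byte input from the slide is rejected with `-1`, while any string of up to 127 characters plus the NUL terminator is copied as before.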

![Pseudo-code for dumb fuzzer](https://image.slidesharecdn.com/3-190819093150/75/Exploitation-techniques-and-fuzzing-32-2048.jpg)

    for each {byte|word|dword|qword} aligned location in file
        for each bad_value in bad_valueset
        {
            file[location] := bad_value
            deliver_test_case()
        }
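The pseudo-code above translates fairly directly to C. The sketch below covers only the byte-aligned case and makes two assumptions that are not in the slide: delivery is abstracted as a callback (`deliver_fn`, with `deliver_count` as a hypothetical stub that only counts test cases), and the original byte is restored after each location so that every test case differs from the seed file in exactly one place.

```c
#include <stddef.h>

/* Callback that delivers one test case to the target. */
typedef void (*deliver_fn)(const unsigned char *data, size_t len, void *ctx);

/* Hypothetical delivery stub: only counts the test cases it receives. */
static void deliver_count(const unsigned char *data, size_t len, void *ctx) {
    (void)data;
    (void)len;
    ++*(size_t *)ctx;
}

/* Byte-aligned dumb fuzzer: for every location, try every bad value.
 * Returns the number of test cases delivered (len * n_bad). */
static size_t fuzz_bytes(unsigned char *file, size_t len,
                         const unsigned char *bad_values, size_t n_bad,
                         deliver_fn deliver, void *ctx) {
    size_t delivered = 0;
    for (size_t loc = 0; loc < len; ++loc) {
        unsigned char saved = file[loc];
        for (size_t i = 0; i < n_bad; ++i) {
            file[loc] = bad_values[i];   /* file[location] := bad_value */
            deliver(file, len, ctx);     /* deliver_test_case() */
            ++delivered;
        }
        file[loc] = saved; /* assumption: keep one mutation per test case */
    }
    return delivered;
}
```

Typical bad values for the byte case are boundary bytes such as `0x00`, `0x7f`, `0x80`, and `0xff`. The word, dword, and qword cases follow the same pattern with a wider stride and wider values.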

![Two approaches cont.](https://image.slidesharecdn.com/3-190819093150/75/Exploitation-techniques-and-fuzzing-35-2048.jpg)

• Which approach is better?
• Depends on:
  • Time: how long to develop and run fuzzer
  • [Security] Code quality of target
  • Amount of validation performed by target
    • Can patch out CRC check to allow dumb fuzzing
  • Complexity of relations between entities in data format
• Don’t rule out either!
• My personal approach: get a dumb fuzzer working first
  • Run it while you work on a smart fuzzer
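The CRC point above is one place where the two approaches differ in practice: a dumb fuzzer's mutations fail the target's checksum validation unless the check is patched out, whereas a format-aware fuzzer can recompute the checksum after mutating. The sketch below illustrates the second option under a loudly hypothetical layout (payload followed by a 4-byte little-endian CRC-32 trailer); `crc_ok` and `fix_crc` are illustrative names, and the CRC itself is the standard reflected CRC-32 with polynomial `0xEDB88320`.

```c
#include <stddef.h>
#include <stdint.h>

/* Bitwise CRC-32 (reflected, polynomial 0xEDB88320). */
static uint32_t crc32_ieee(const unsigned char *data, size_t len) {
    uint32_t crc = 0xFFFFFFFFu;
    for (size_t i = 0; i < len; ++i) {
        crc ^= data[i];
        for (int bit = 0; bit < 8; ++bit) {
            crc = (crc & 1u) ? (crc >> 1) ^ 0xEDB88320u : crc >> 1;
        }
    }
    return crc ^ 0xFFFFFFFFu;
}

/* Hypothetical format: payload, then CRC-32 of the payload stored
 * little-endian in the last 4 bytes. Returns 1 if the trailer matches. */
static int crc_ok(const unsigned char *buf, size_t len) {
    if (len < 4) return 0;
    uint32_t stored = (uint32_t)buf[len - 4] |
                      ((uint32_t)buf[len - 3] << 8) |
                      ((uint32_t)buf[len - 2] << 16) |
                      ((uint32_t)buf[len - 1] << 24);
    return stored == crc32_ieee(buf, len - 4);
}

/* Recompute the trailer after a mutation so the target's validation
 * still accepts the test case. */
static void fix_crc(unsigned char *buf, size_t len) {
    if (len < 4) return;
    uint32_t crc = crc32_ieee(buf, len - 4);
    for (int i = 0; i < 4; ++i) {
        buf[len - 4 + i] = (unsigned char)(crc >> (8 * i));
    }
}
```

Calling `fix_crc` just before delivery lets an otherwise dumb mutation loop reach the parsing code behind the check, which is a small first step from a dumb fuzzer toward a smart one.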