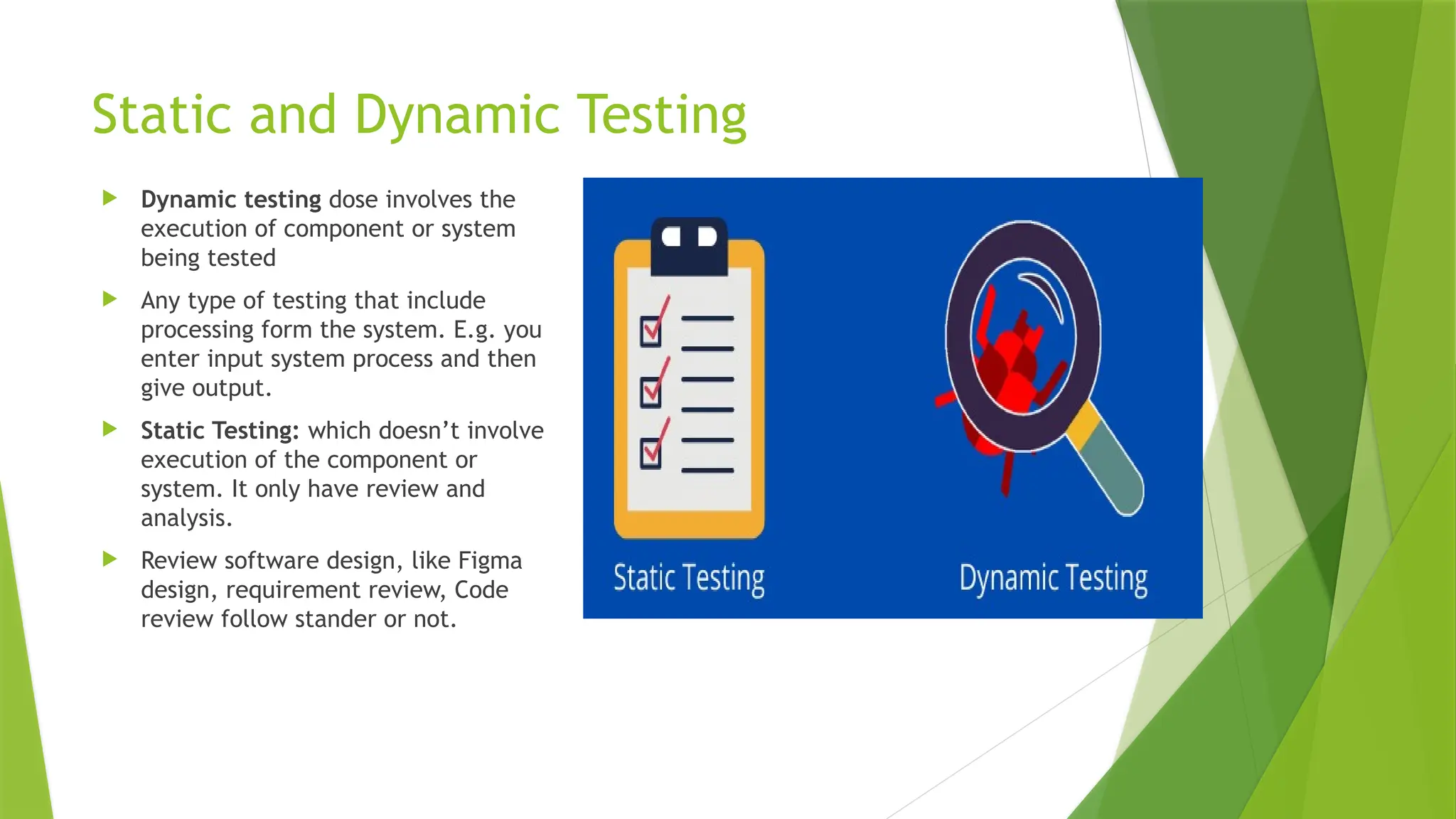

This presentation covers Chapter 1, "Fundamentals of Testing," of the ISTQB Foundation Level syllabus. It gives a structured overview of why software testing matters, the key testing principles, the role of testing in quality assurance, and how testing fits into the software development lifecycle (SDLC).

The slides include:

Importance and objectives of testing

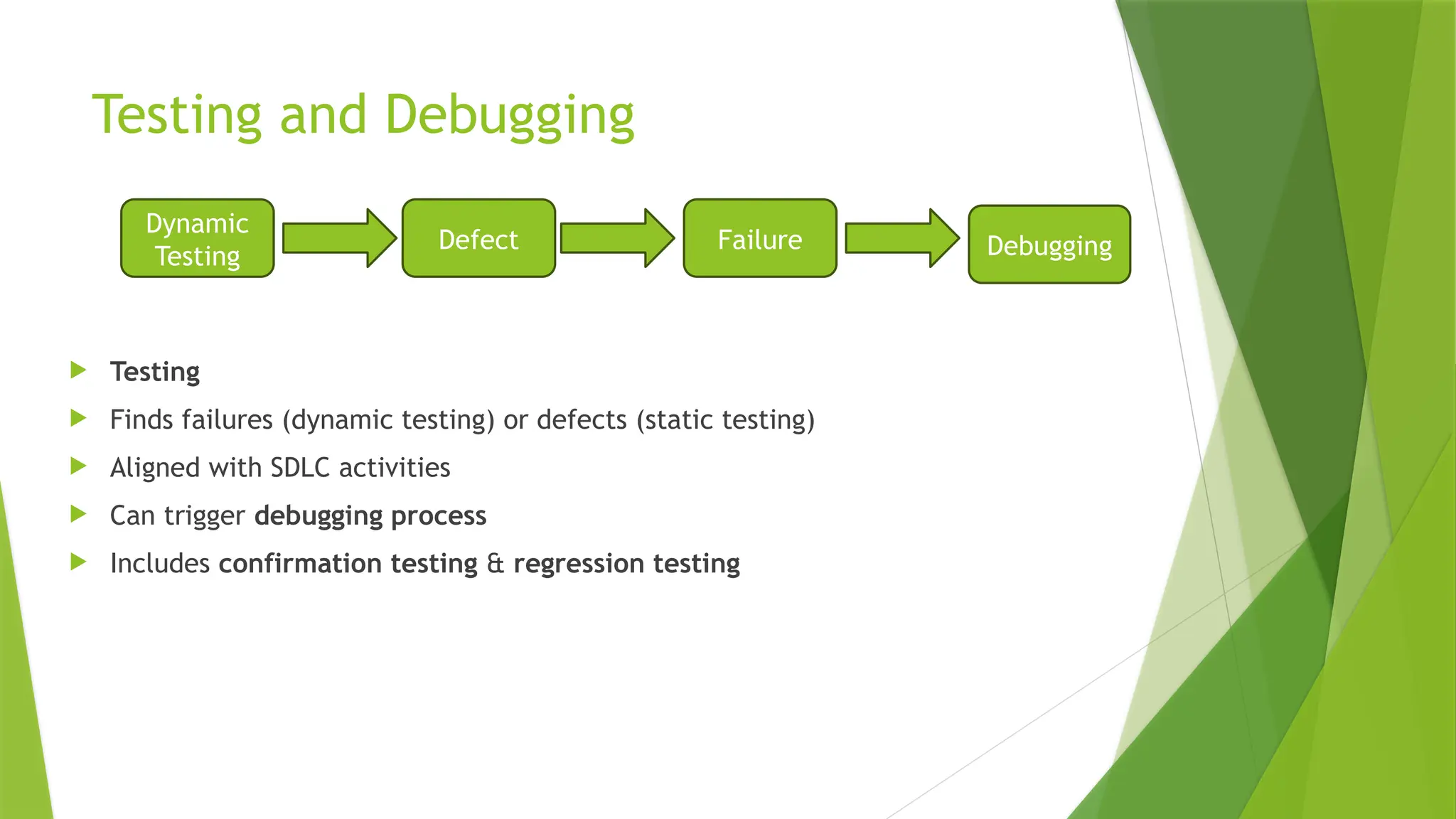

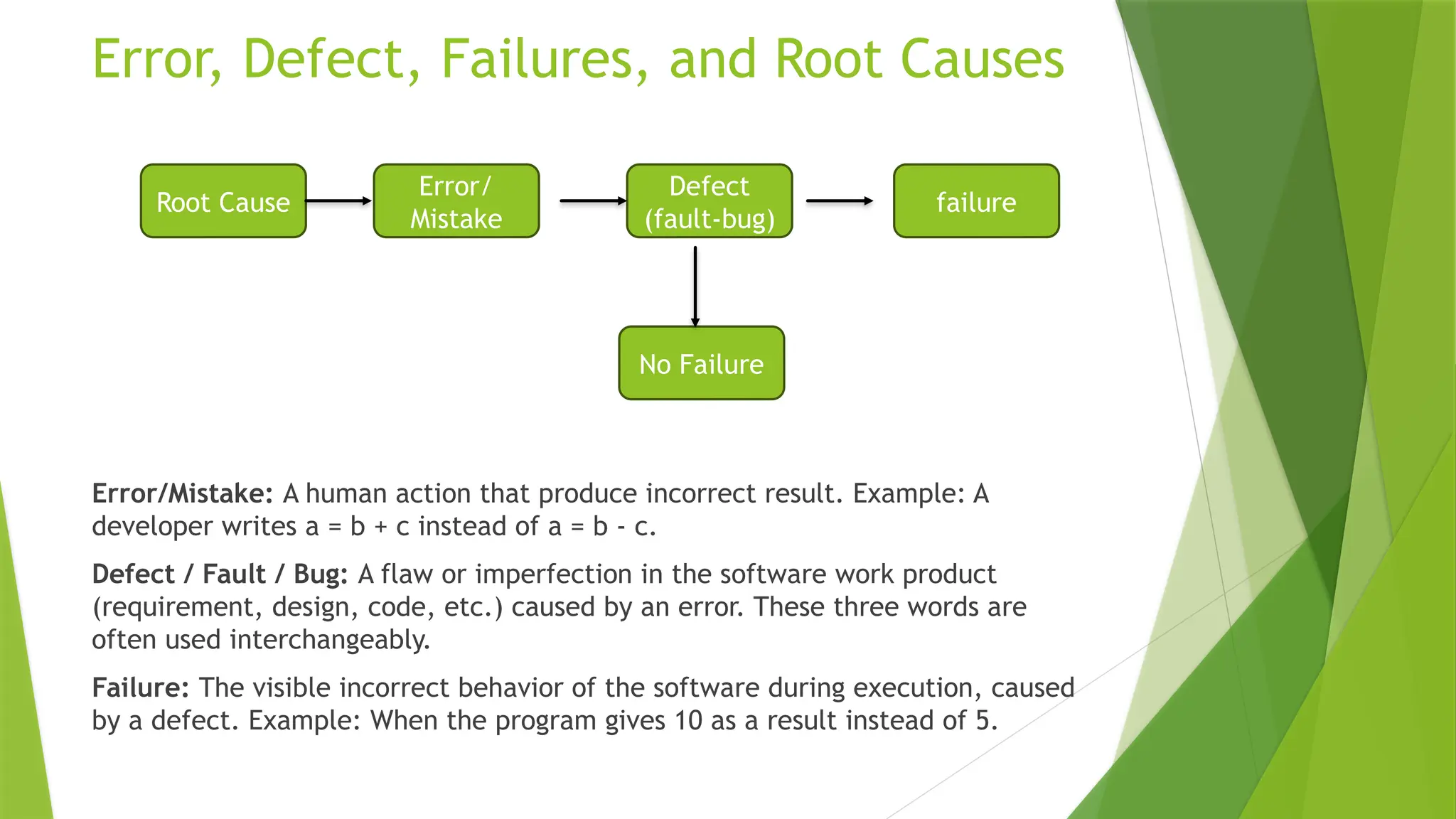

Error, defect, and failure concepts

Seven testing principles

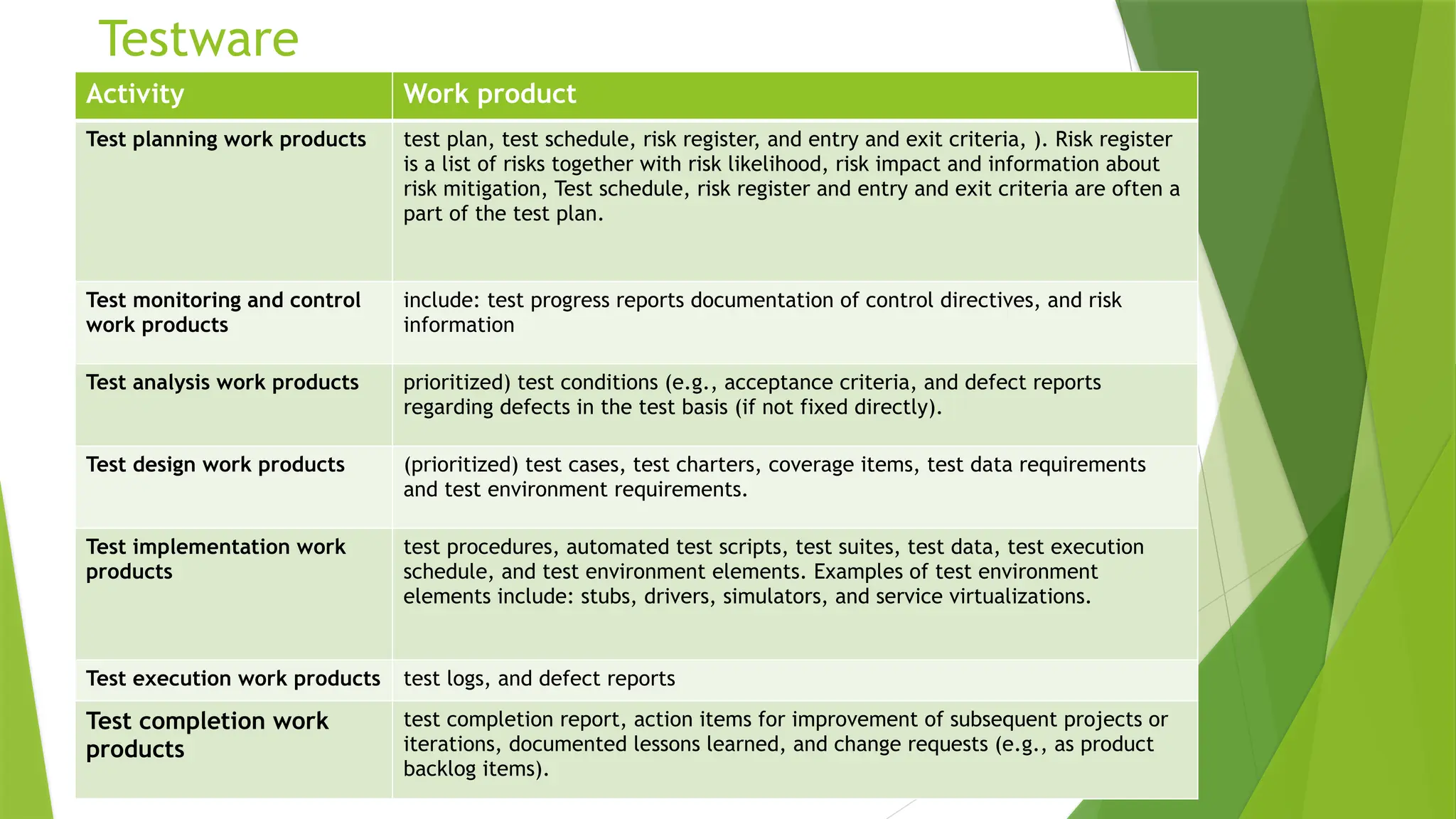

Testing activities in the software lifecycle

The psychology of testing

The slides are designed to help students, QA engineers, and other professionals preparing for the ISTQB Foundation Level certification exam understand these core concepts clearly and concisely.