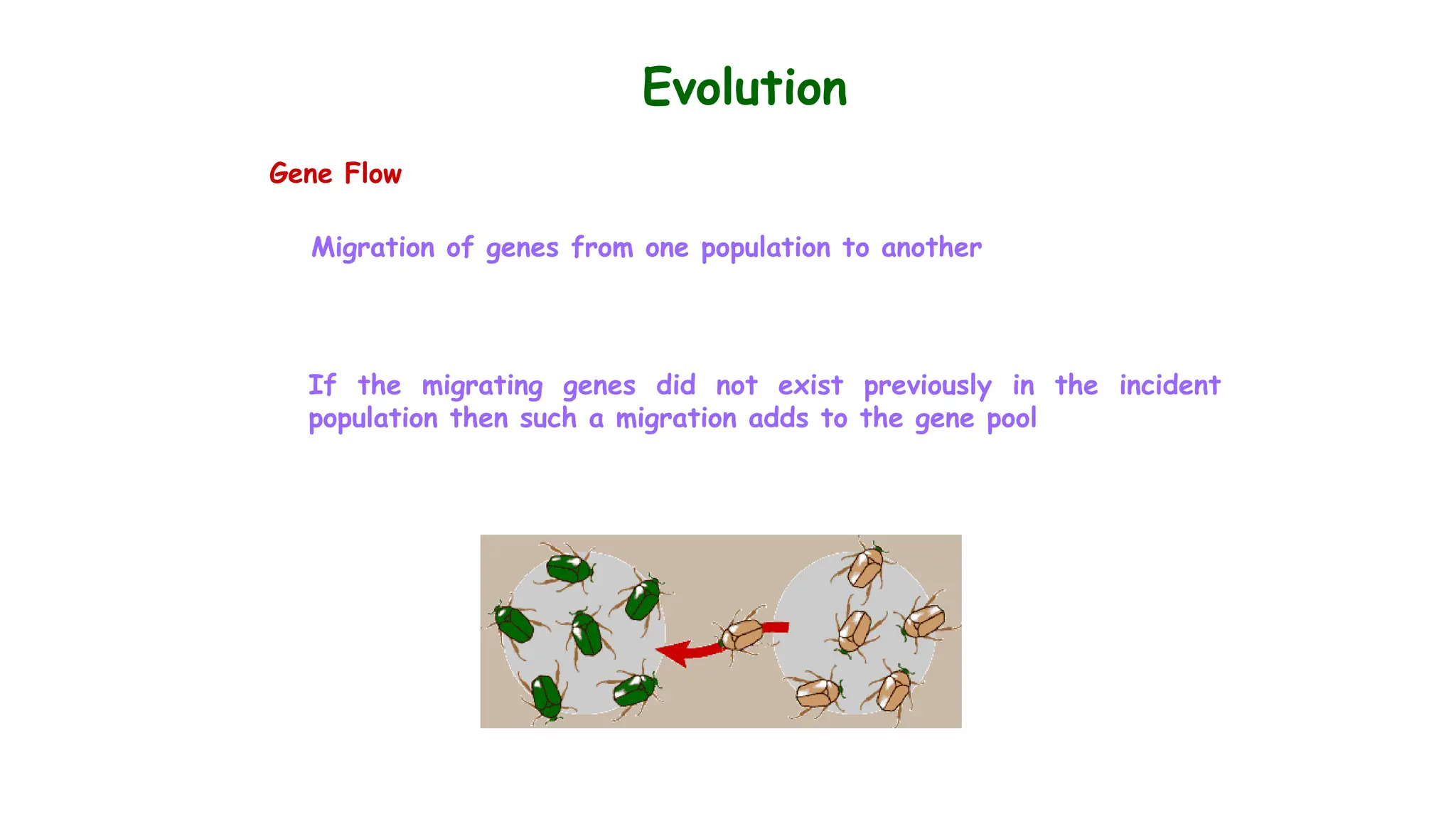

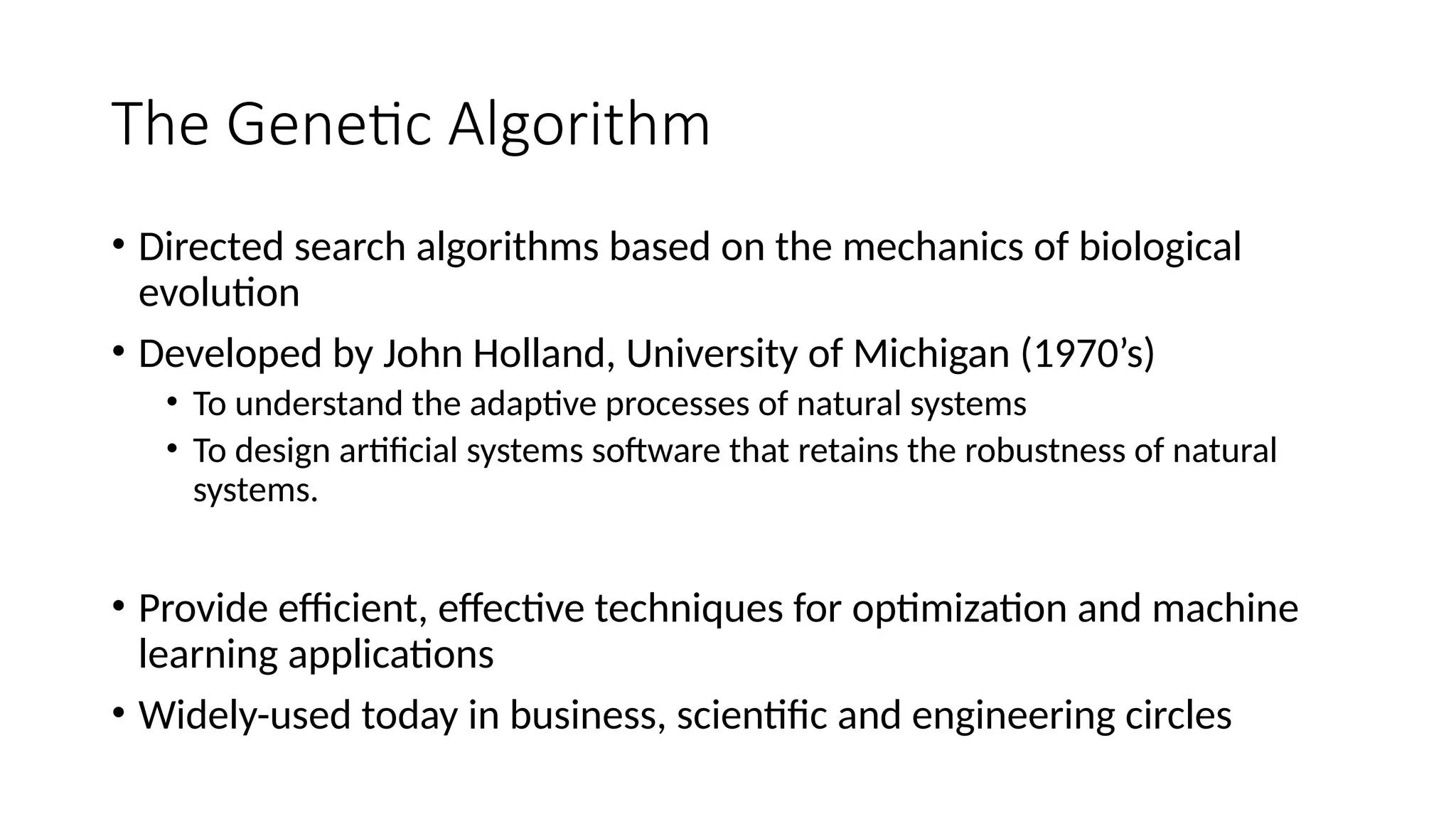

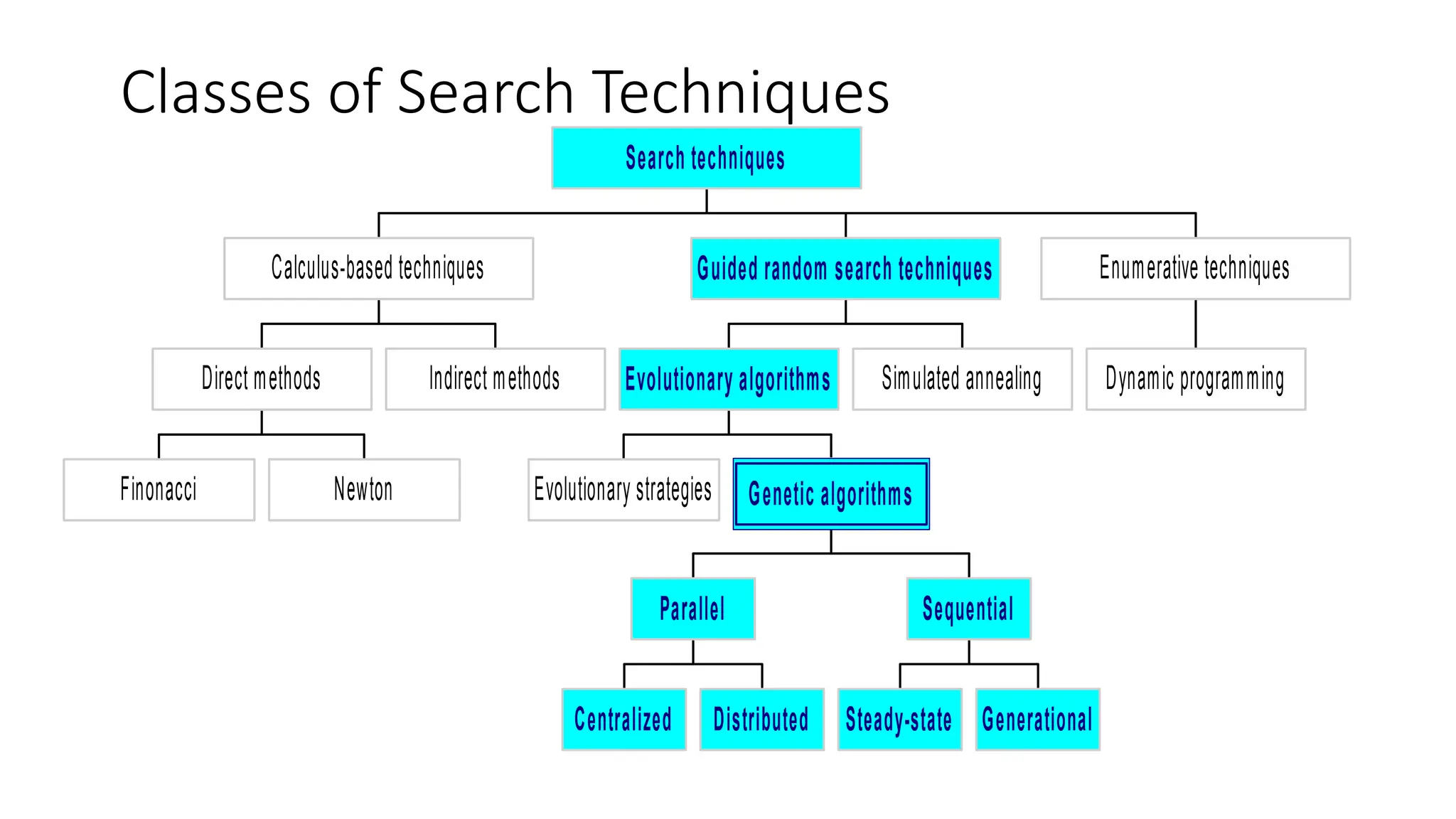

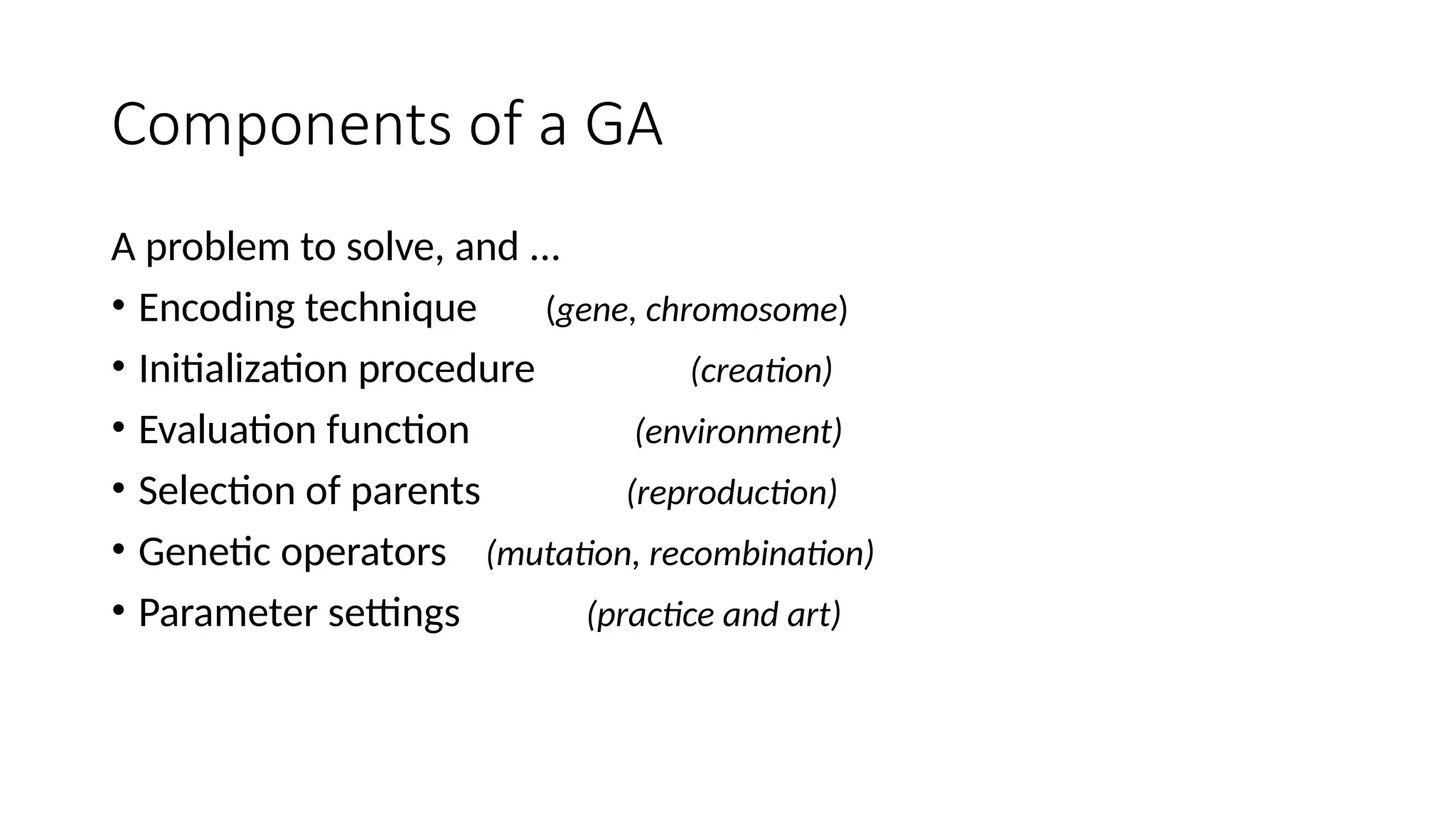

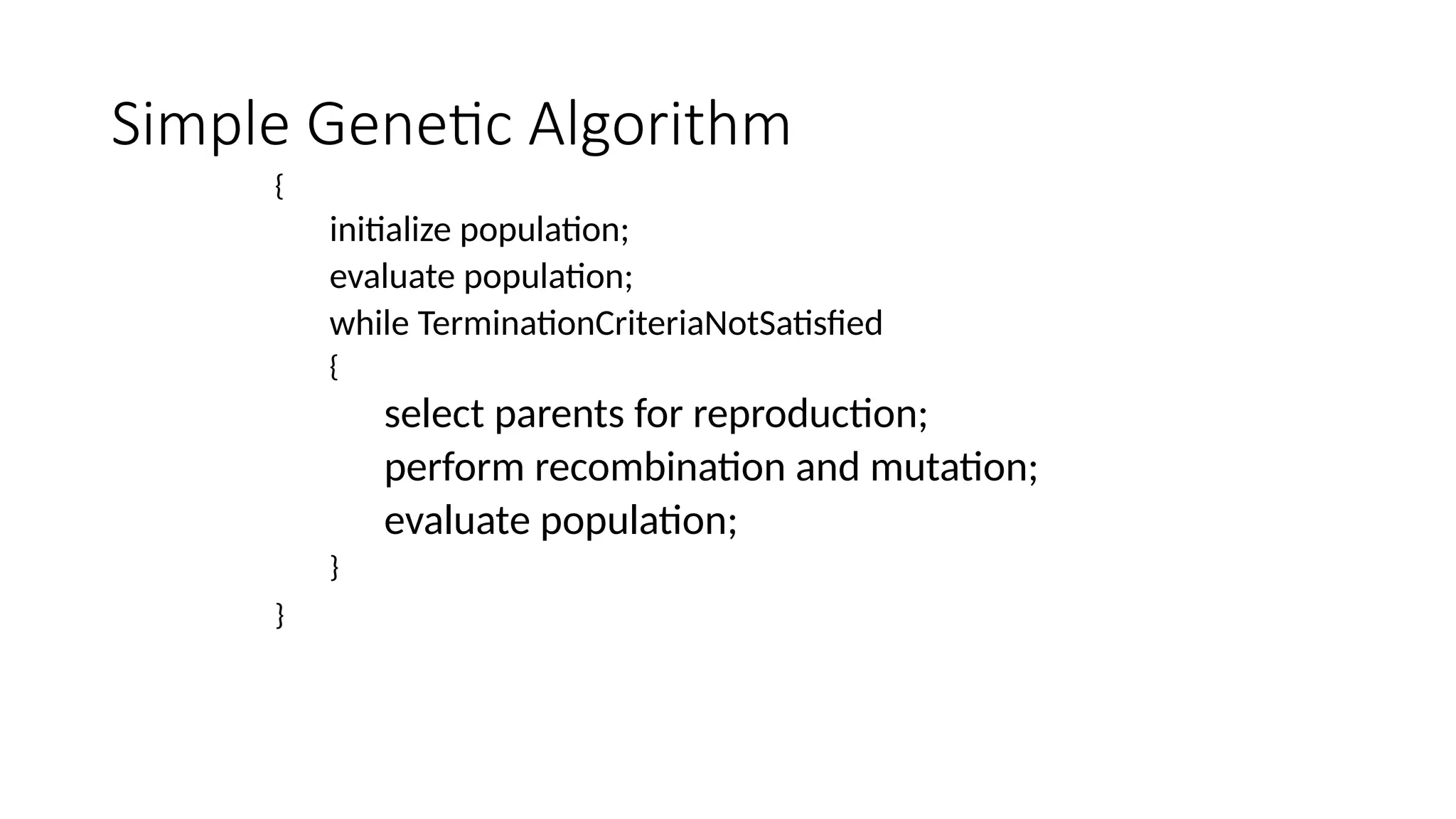

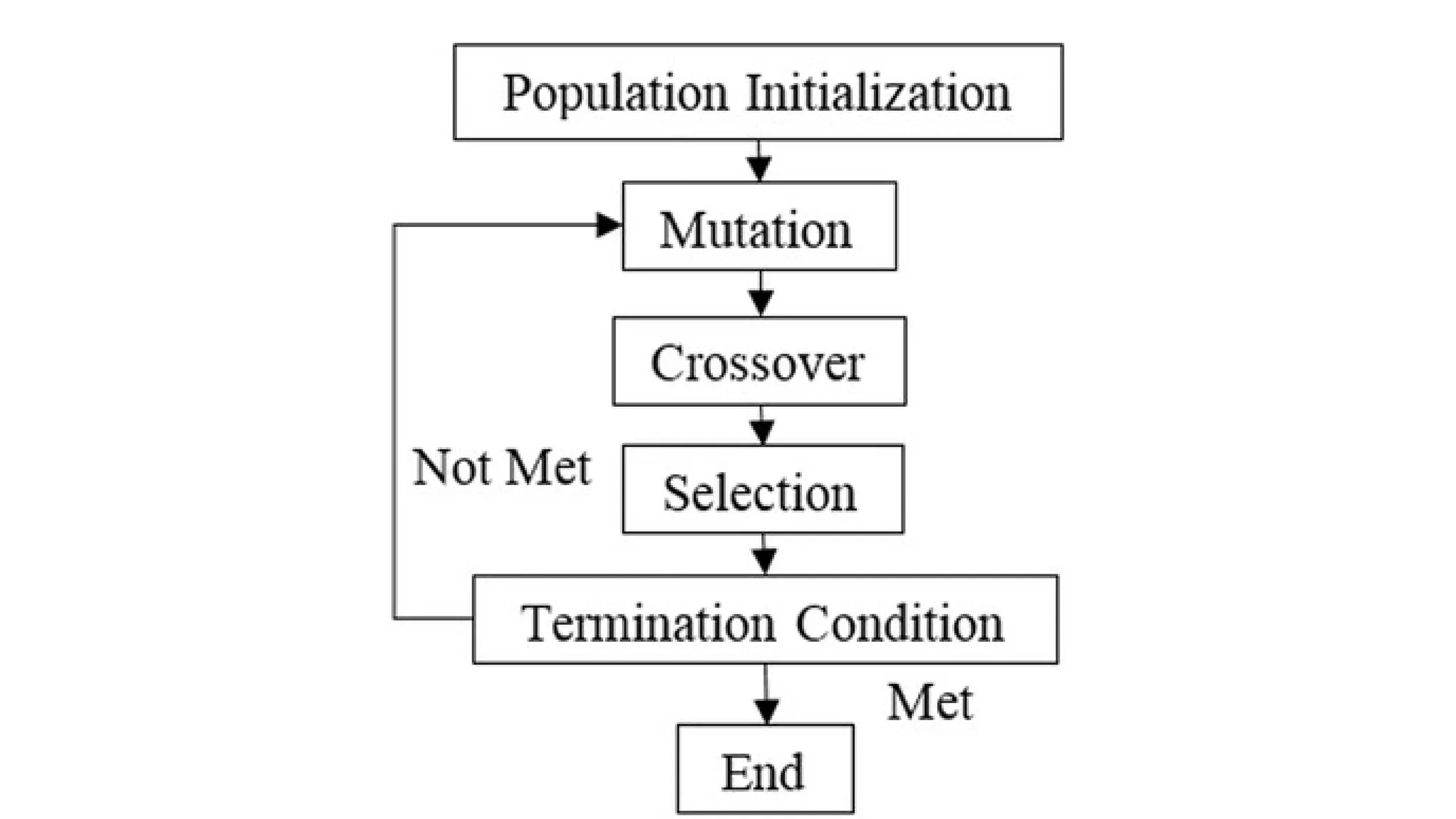

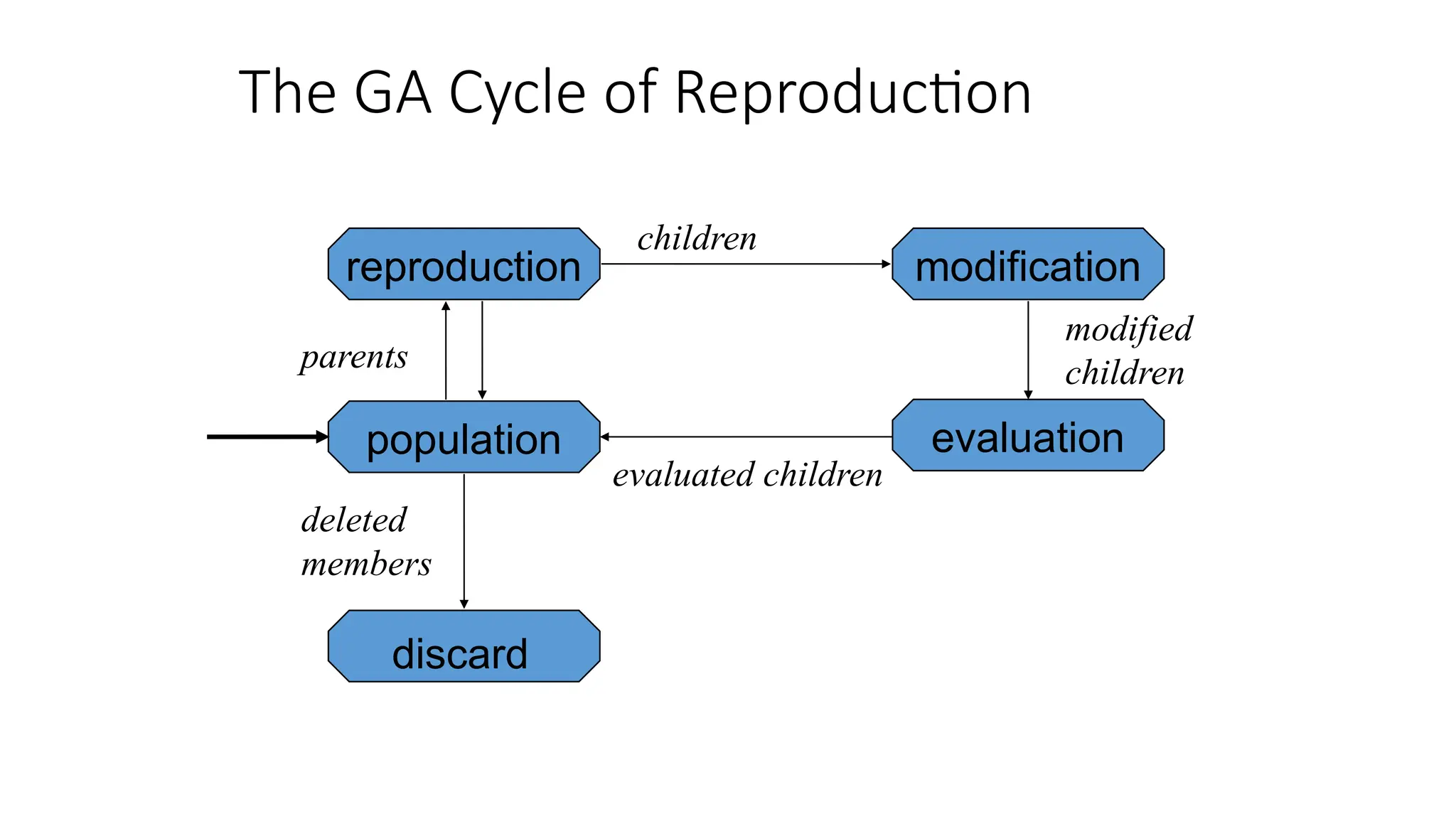

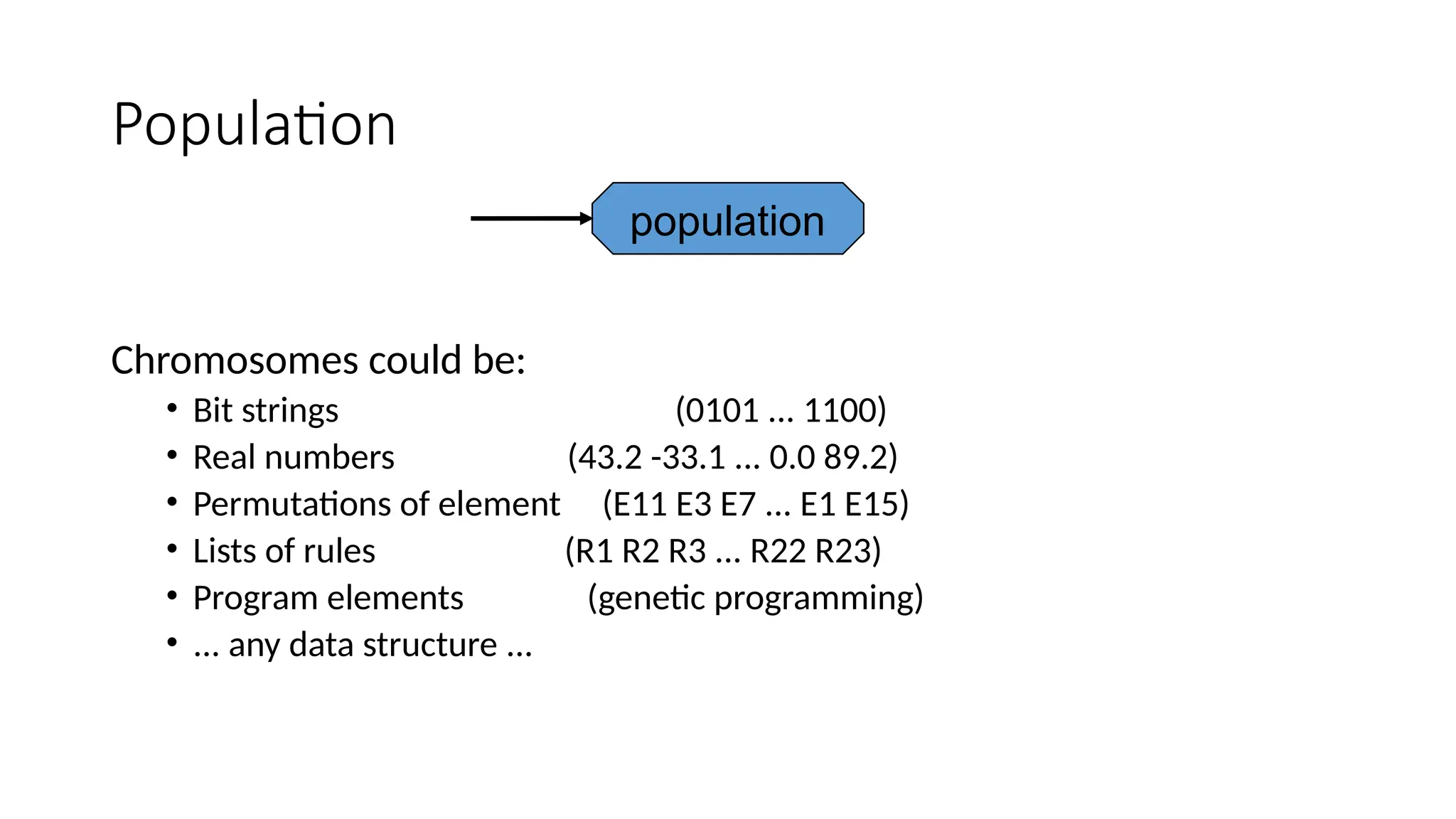

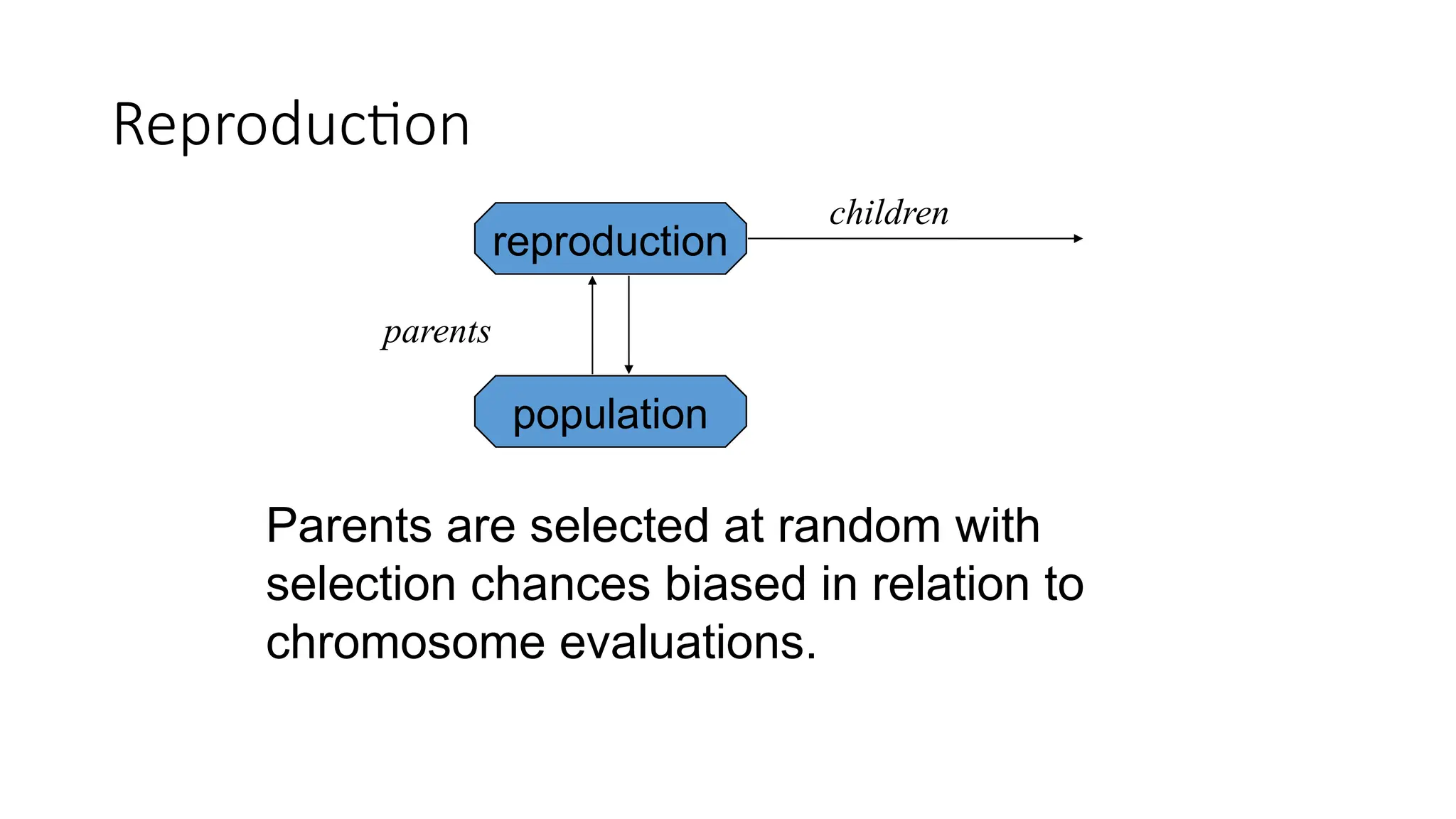

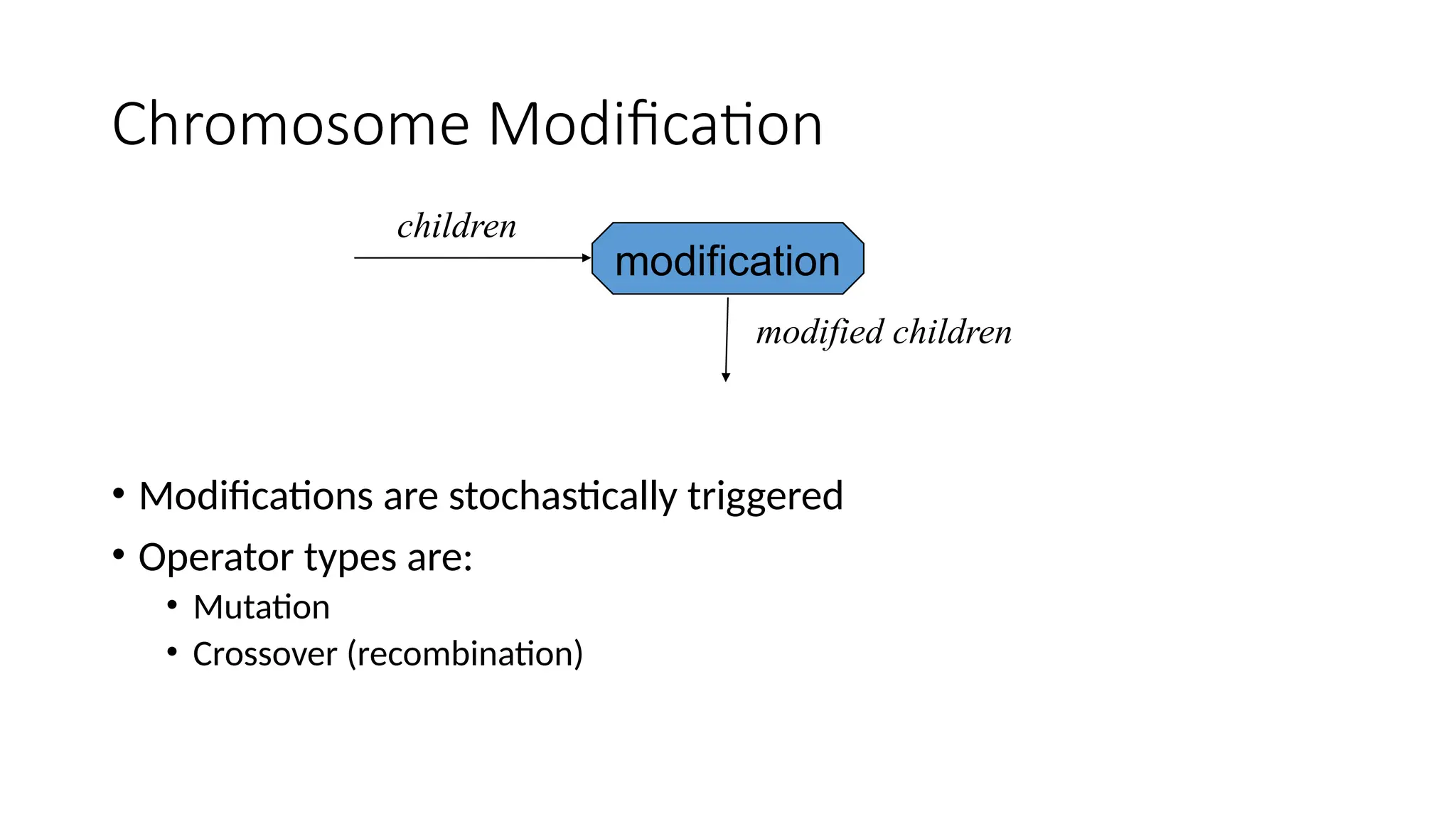

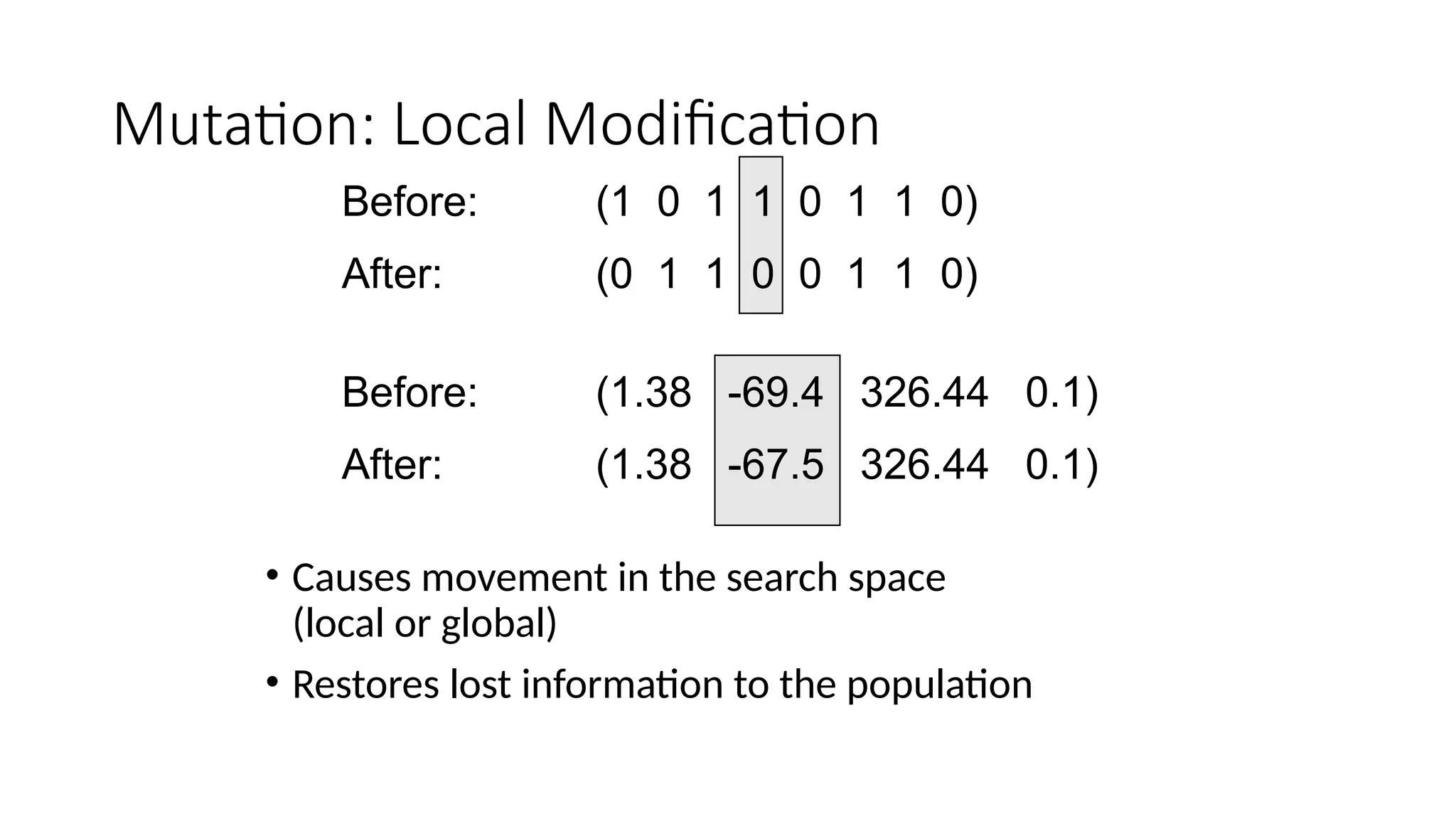

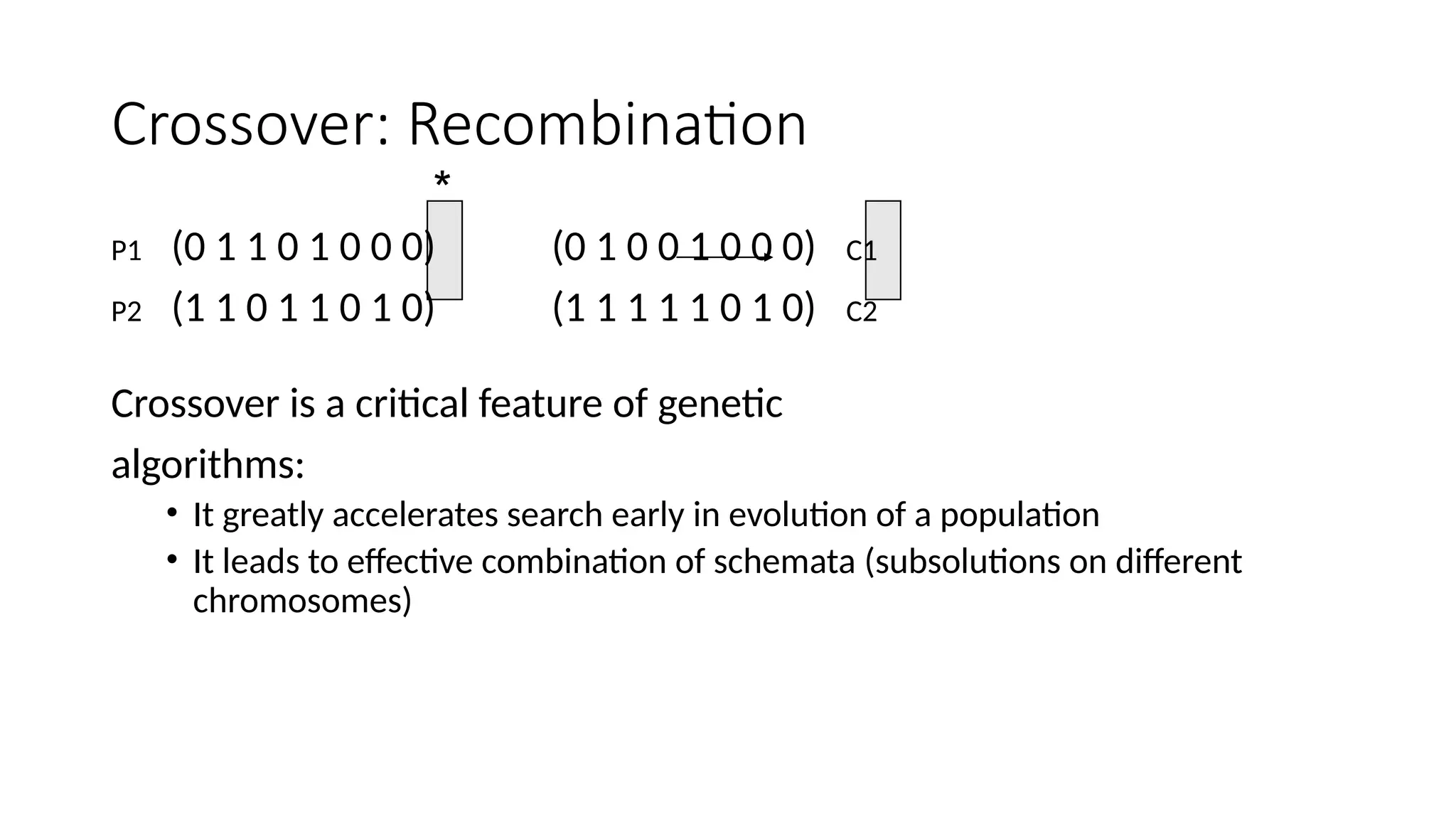

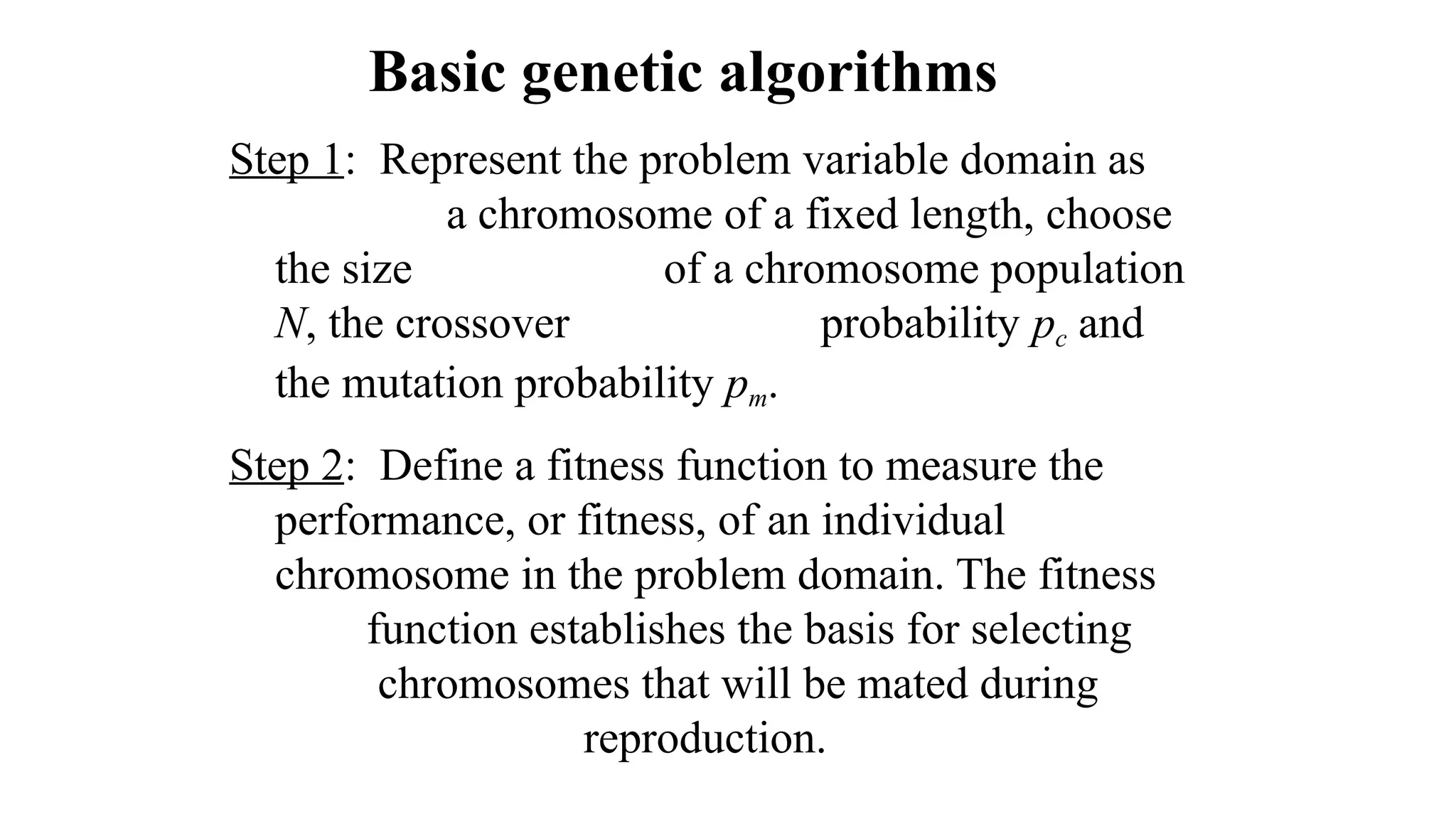

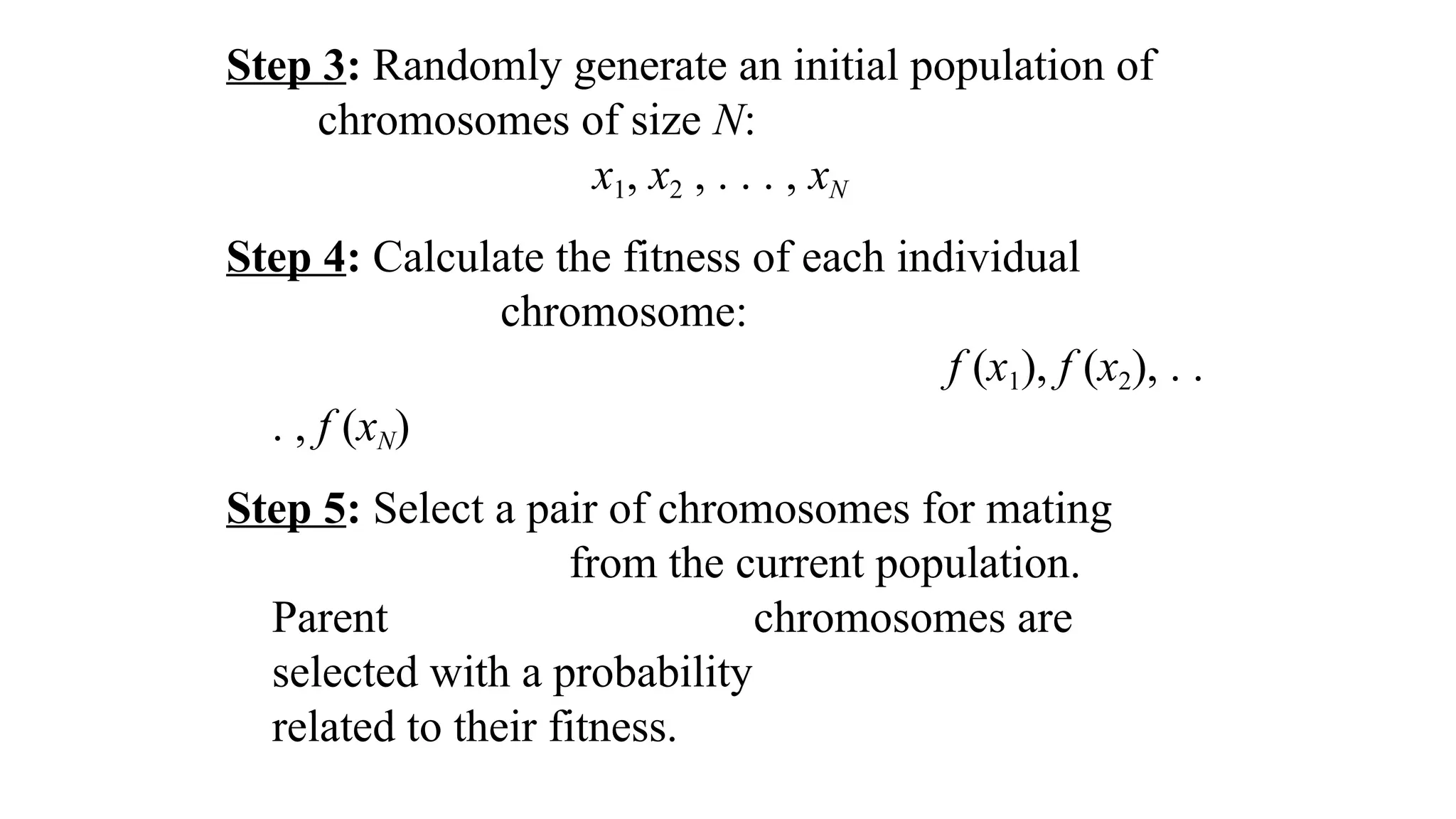

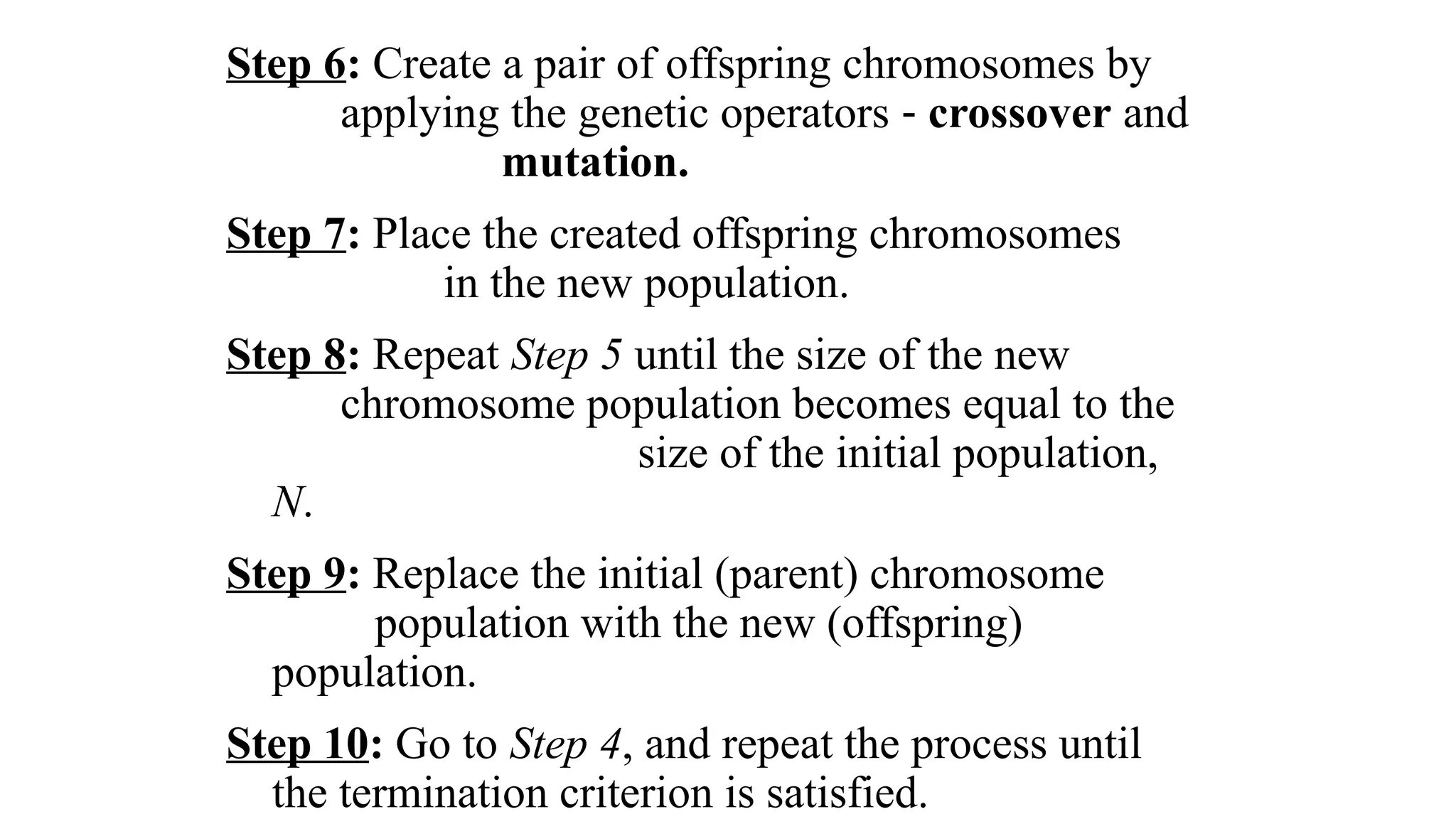

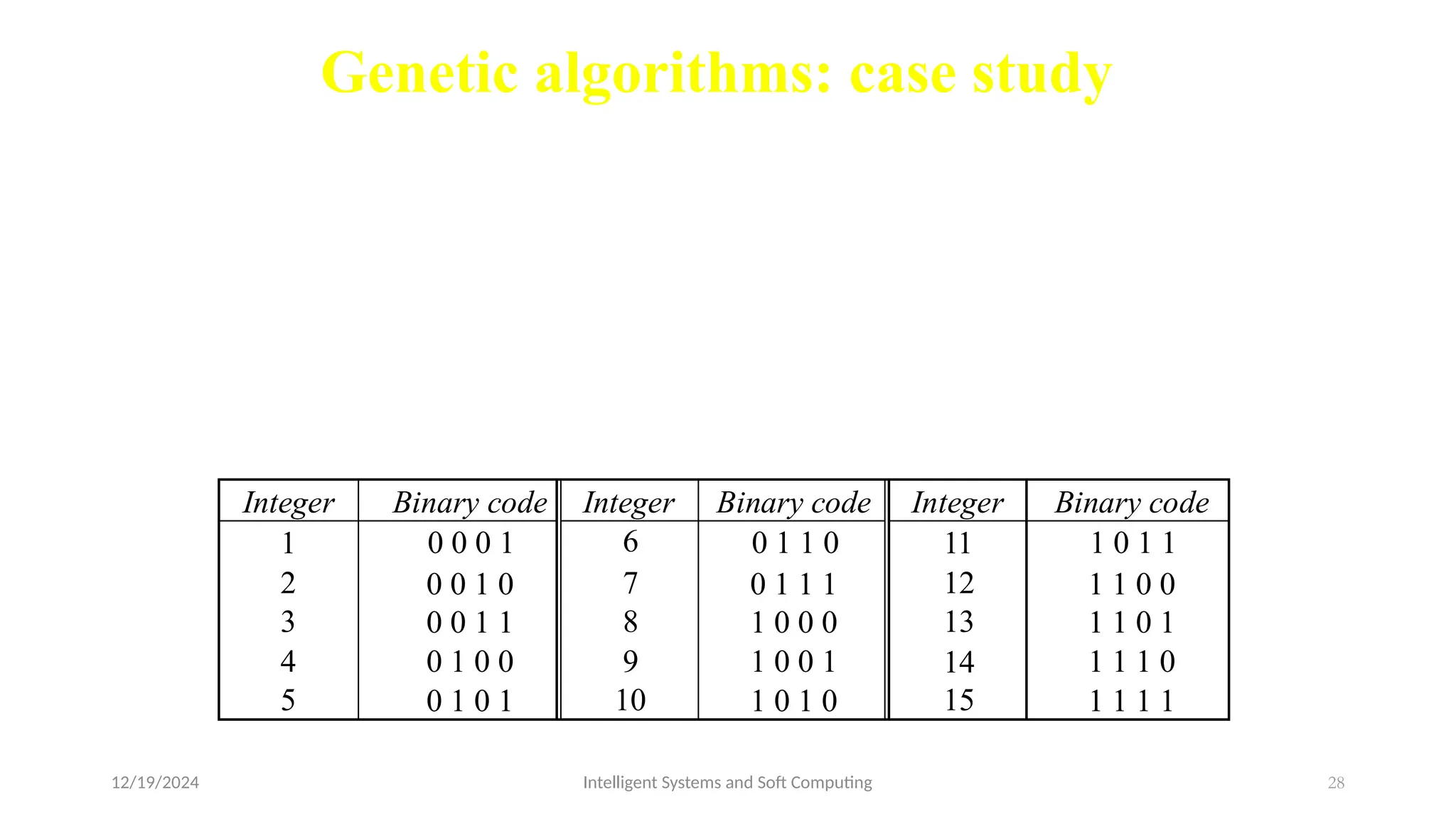

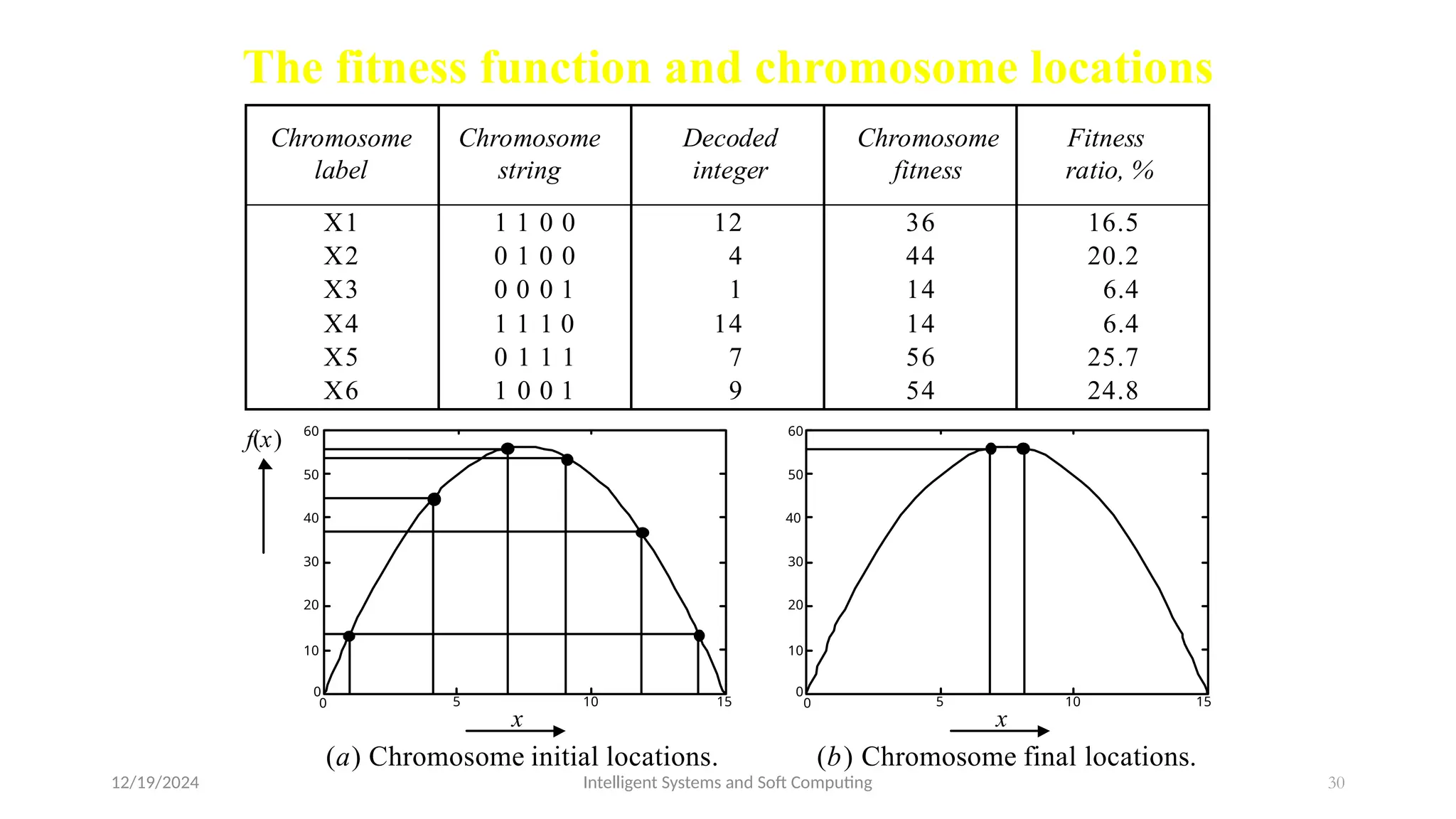

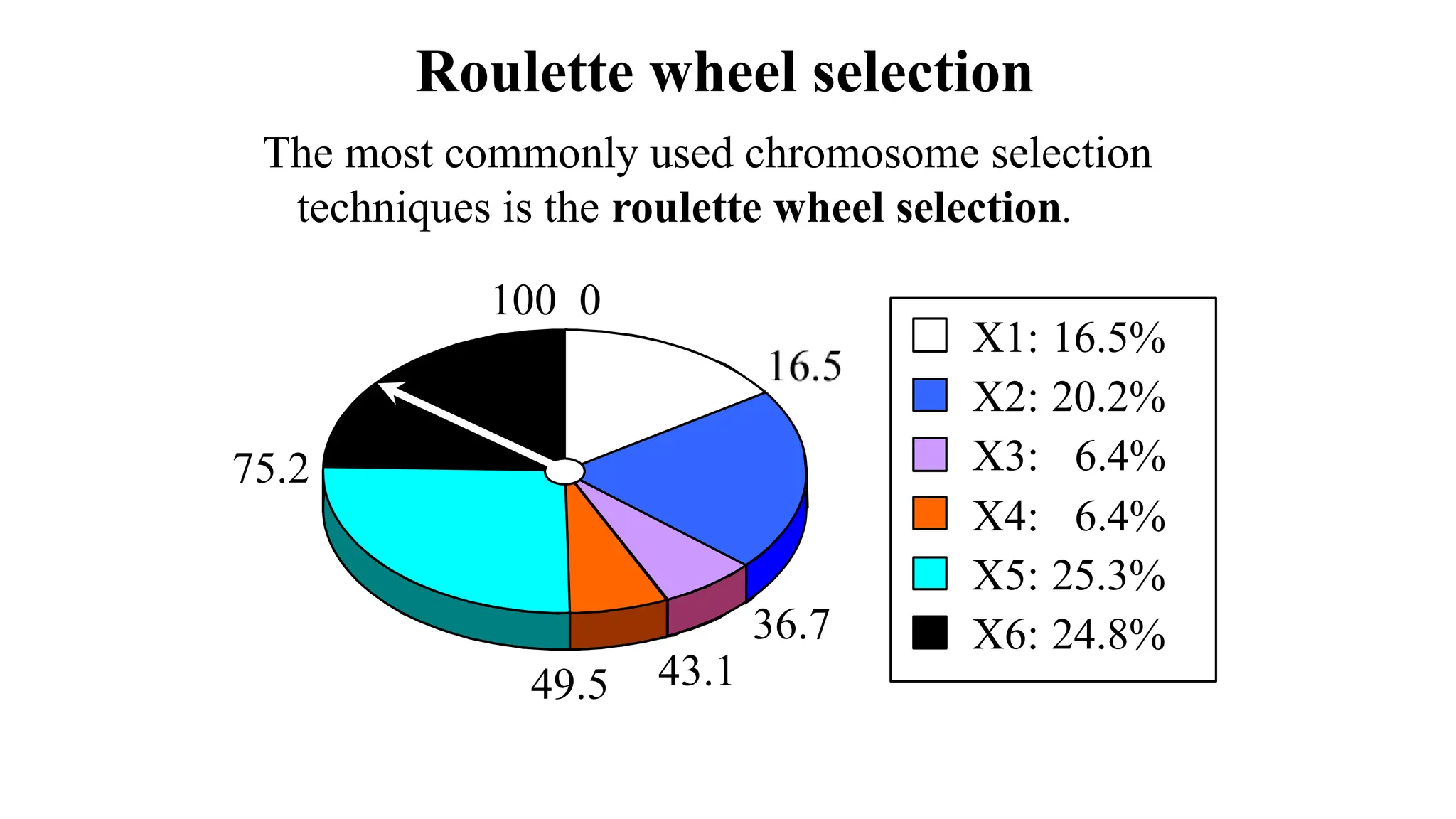

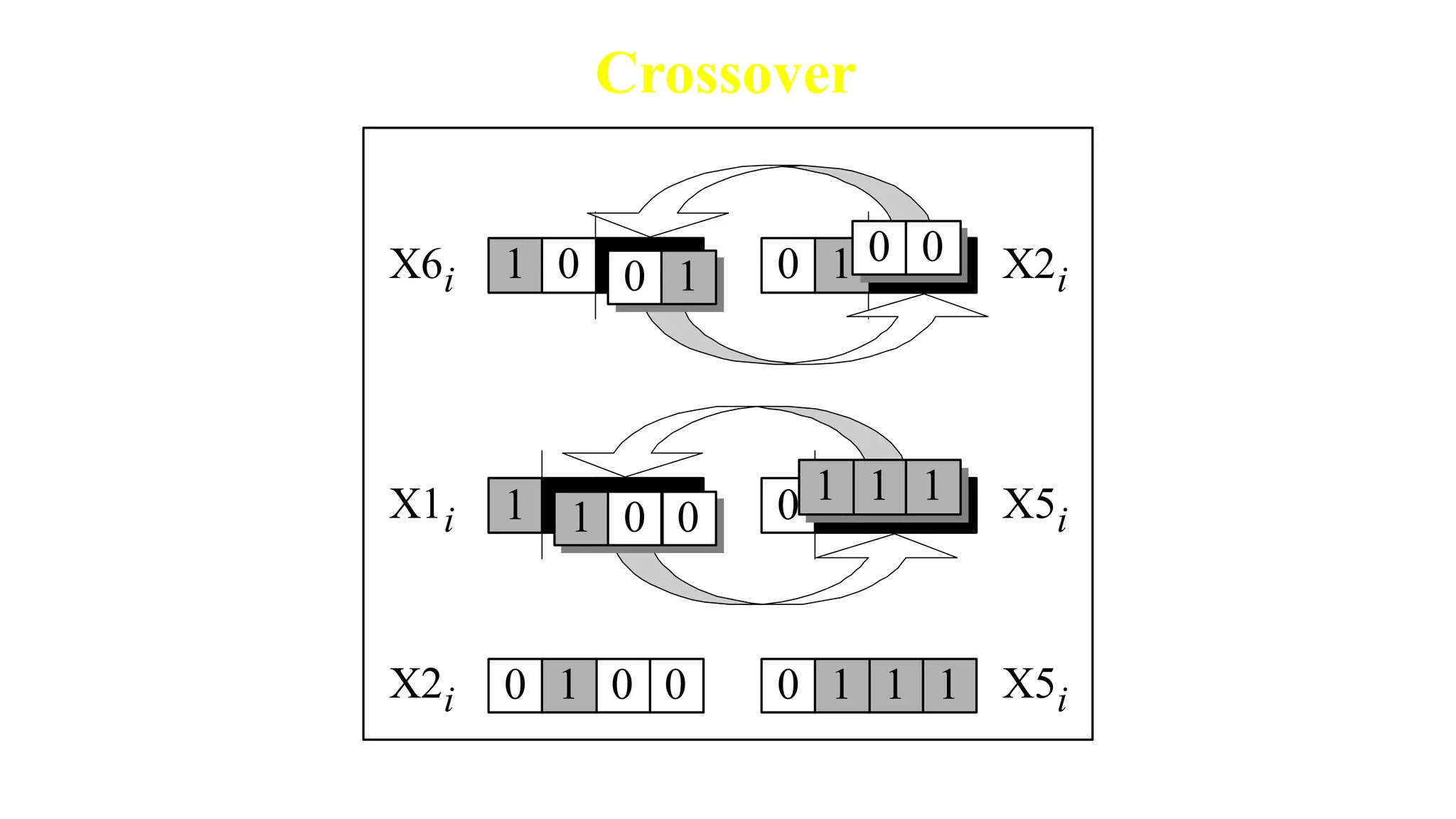

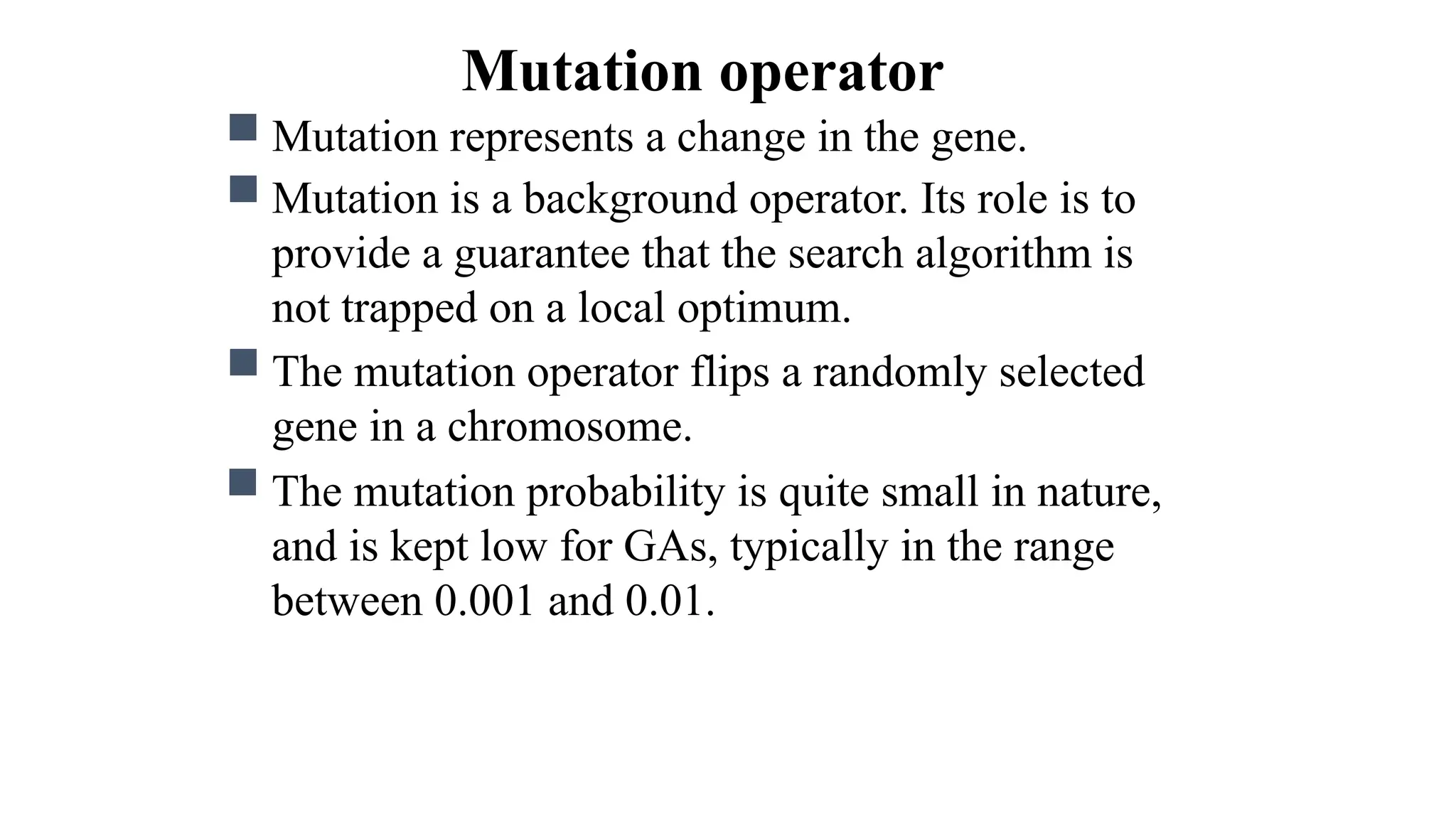

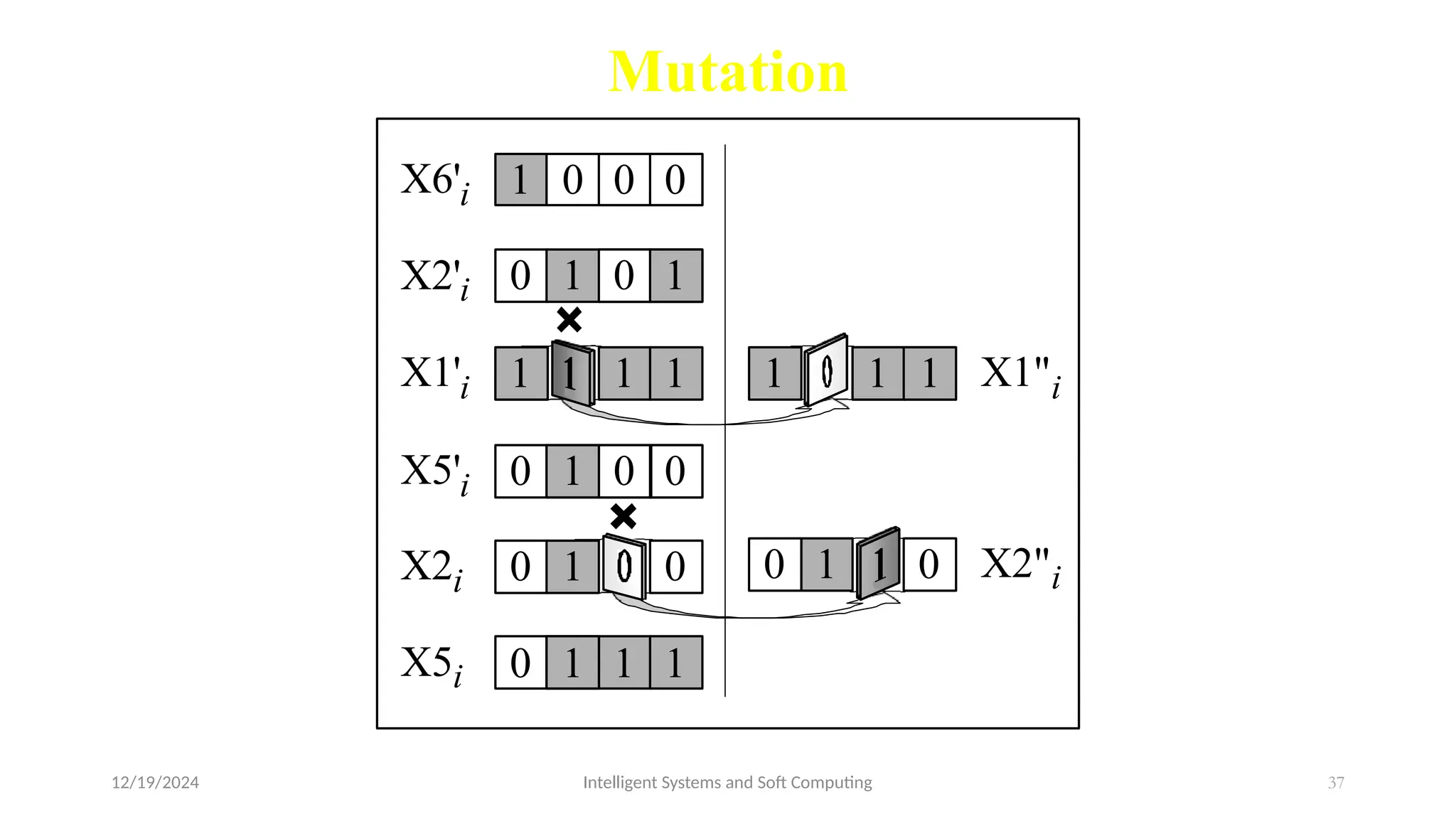

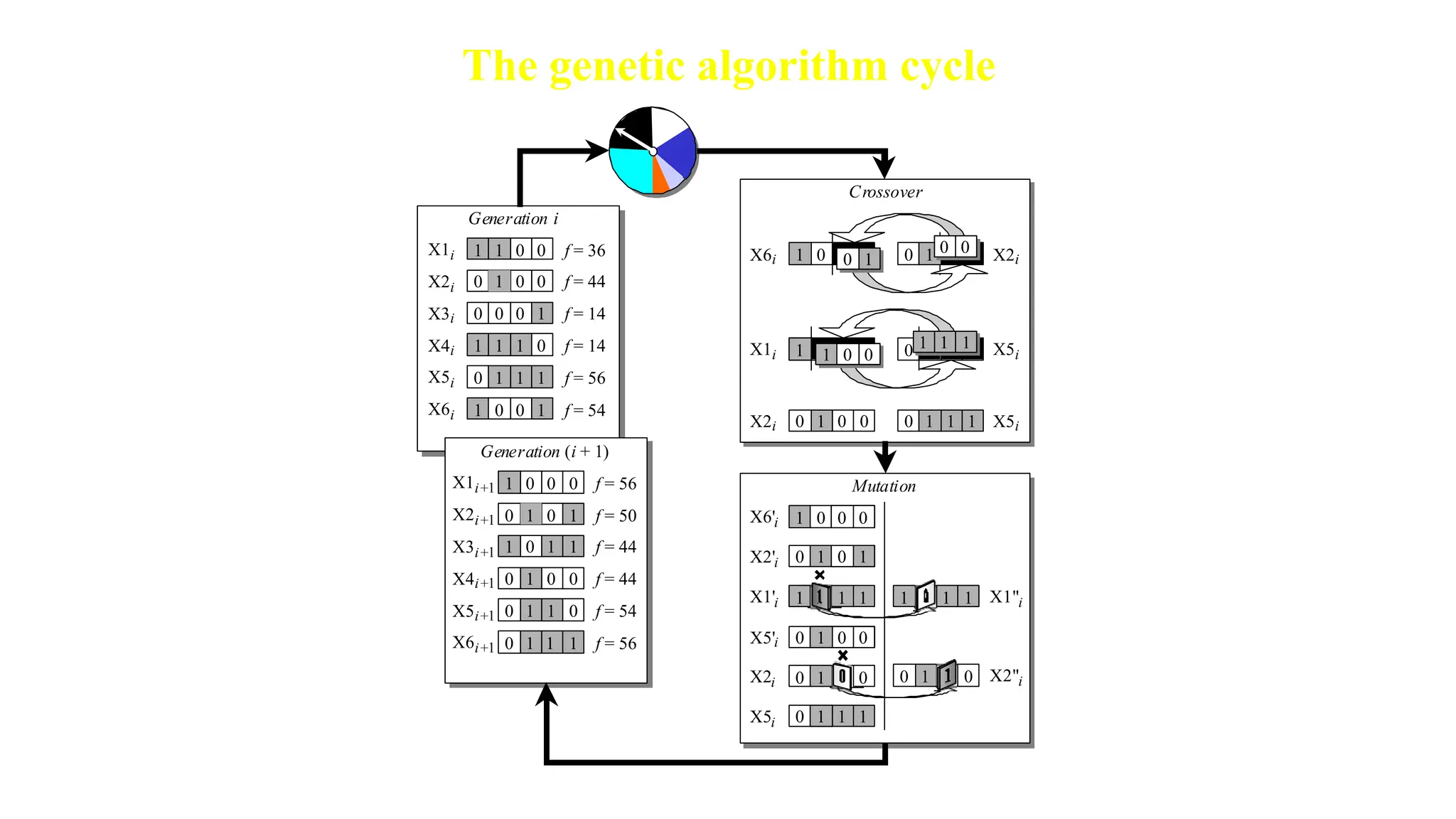

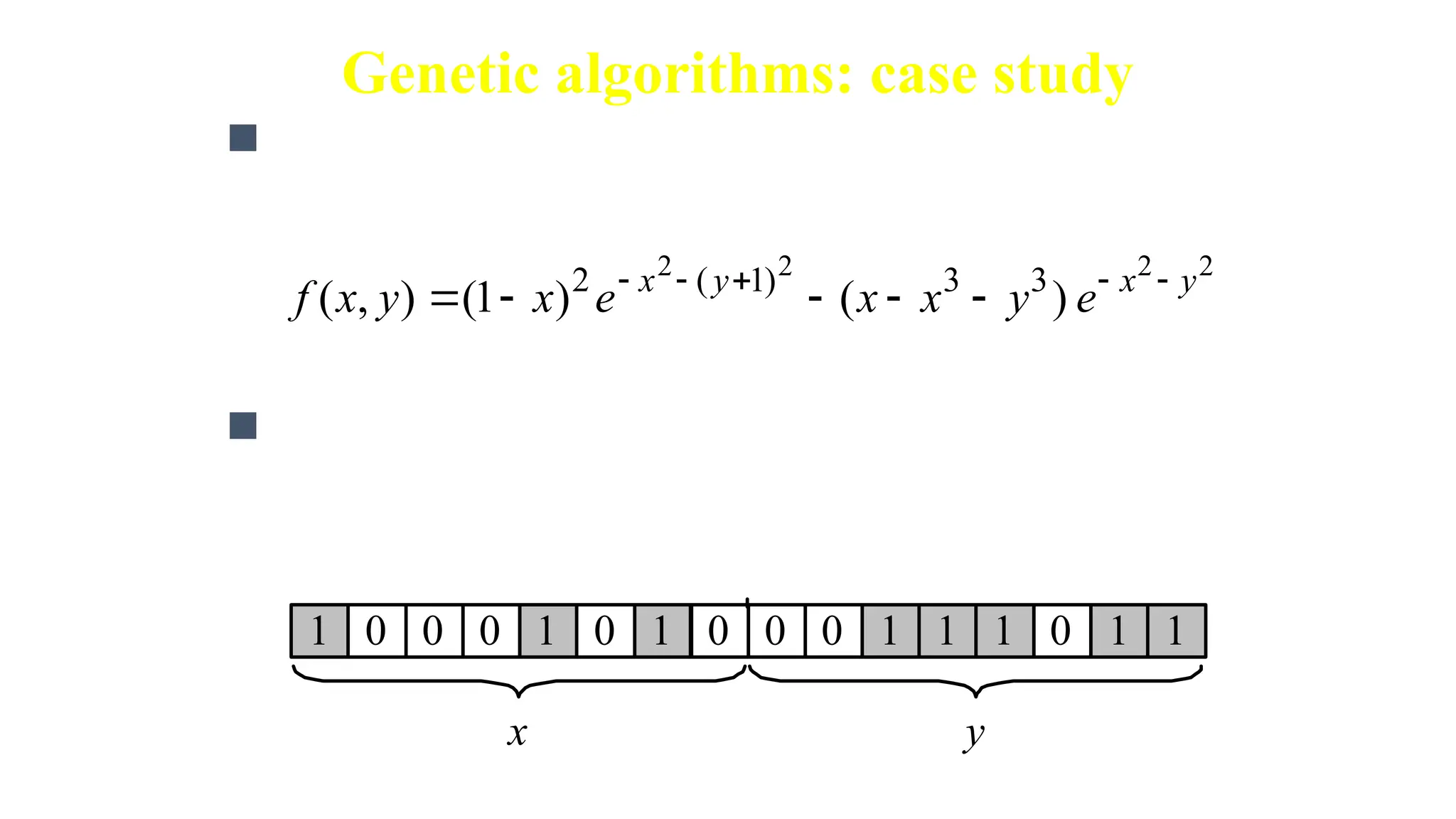

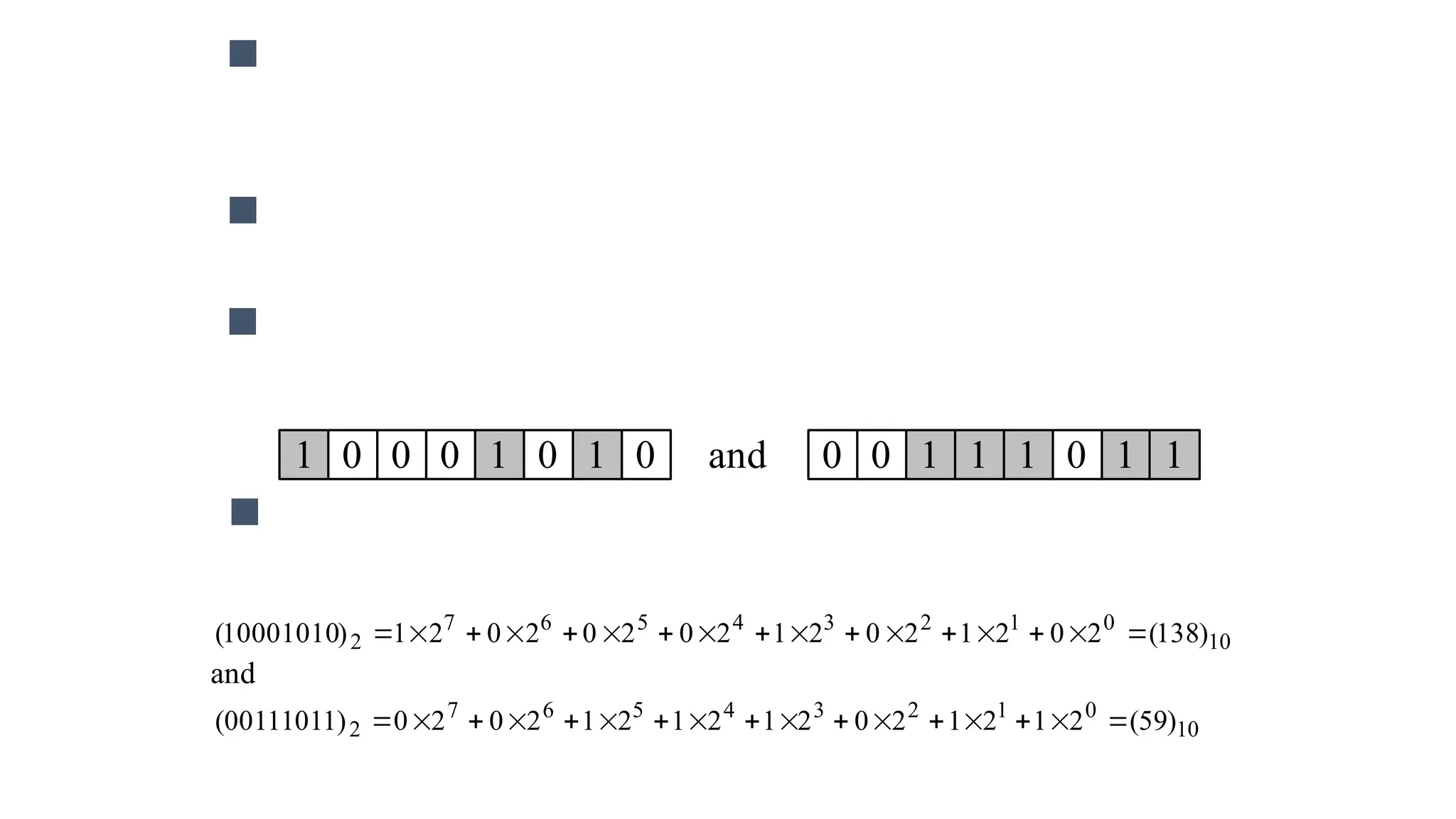

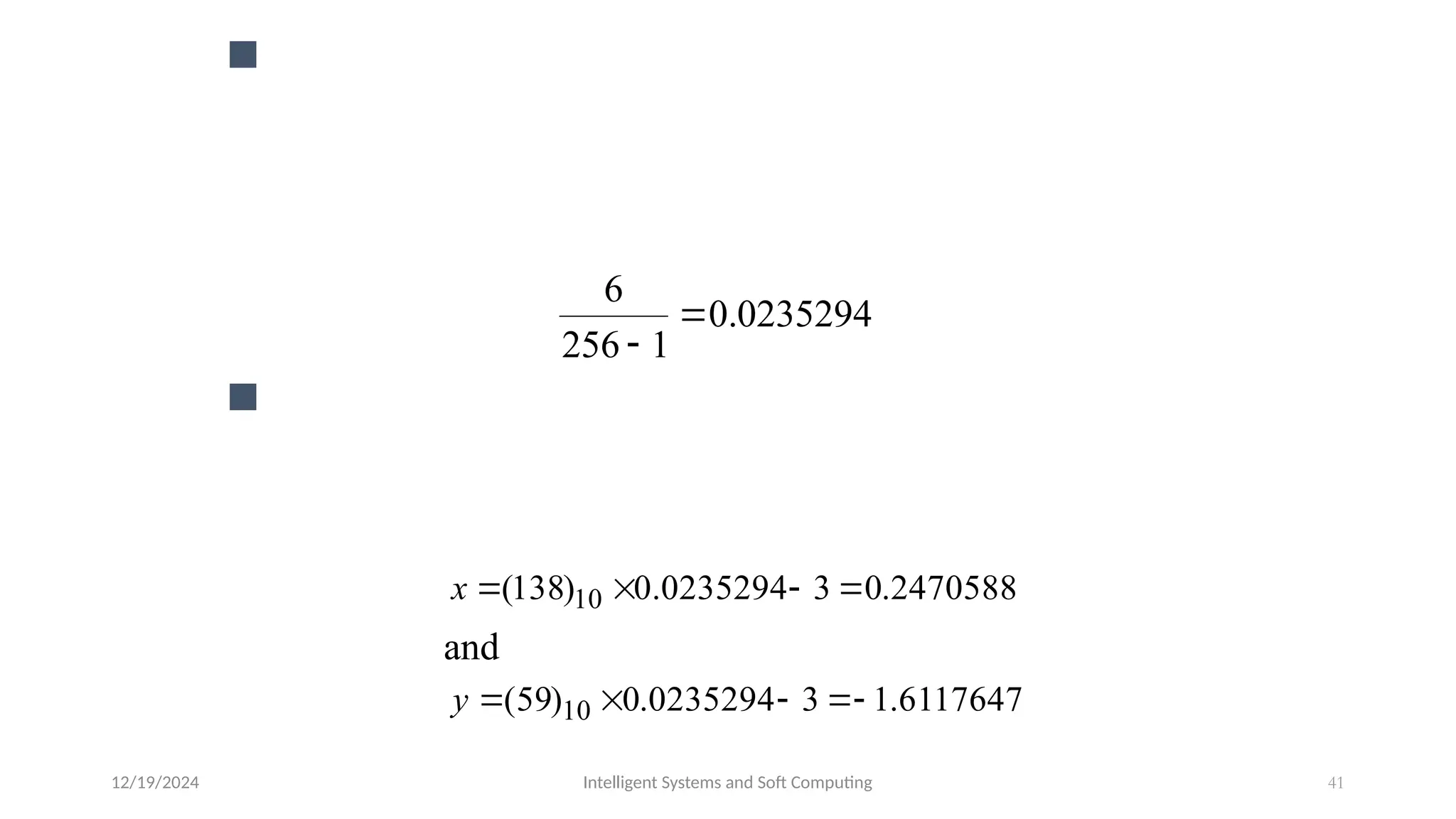

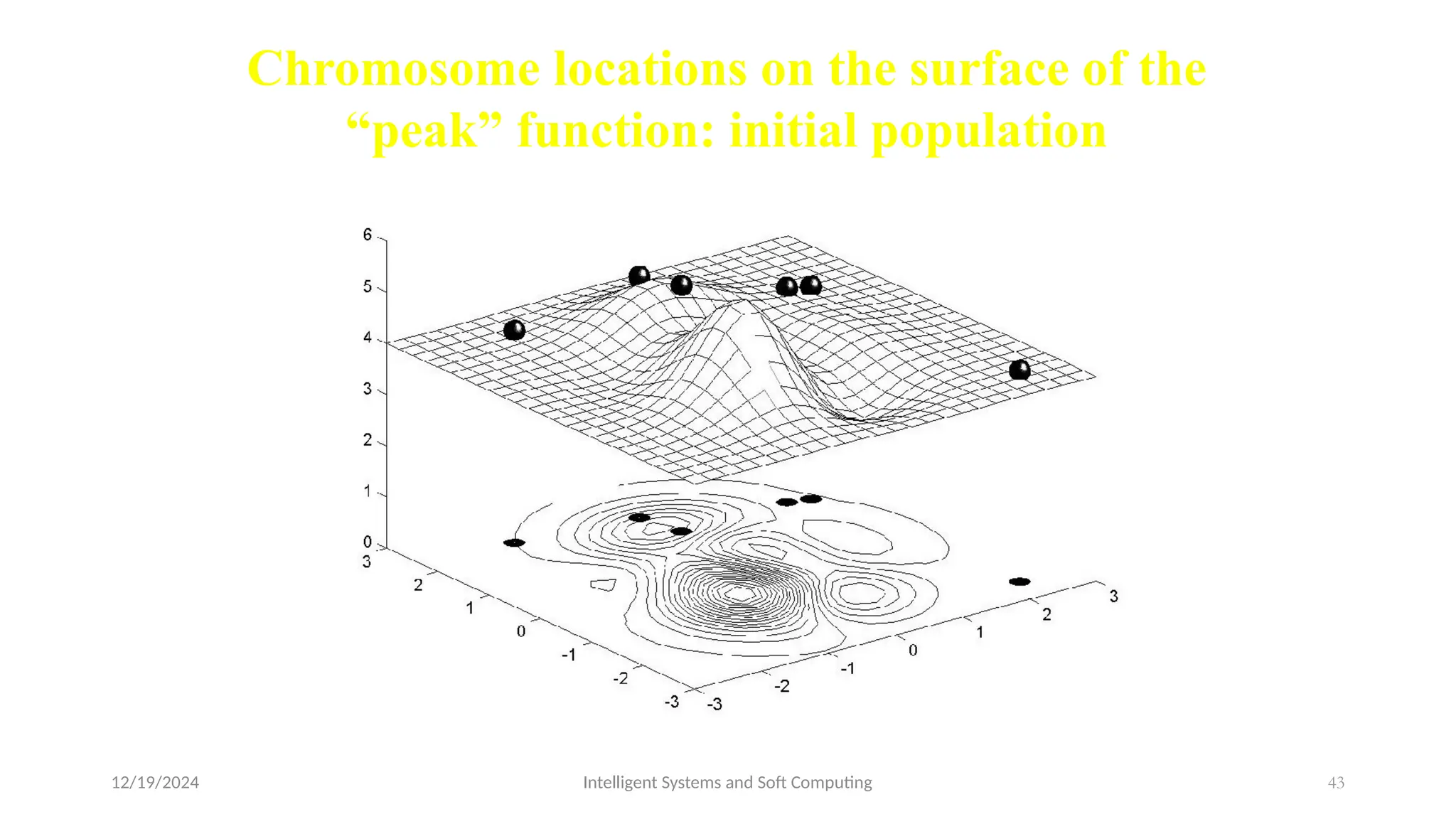

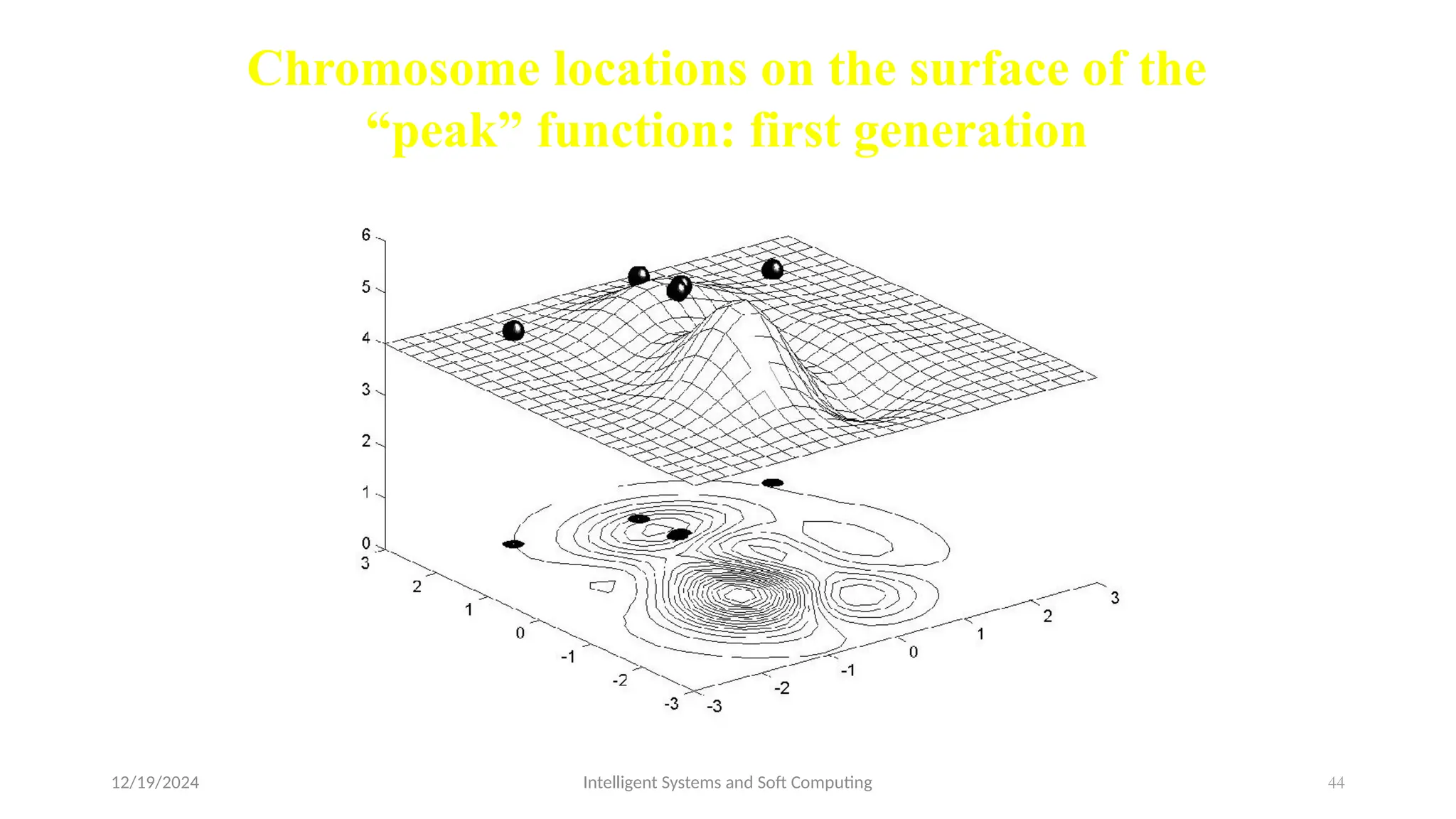

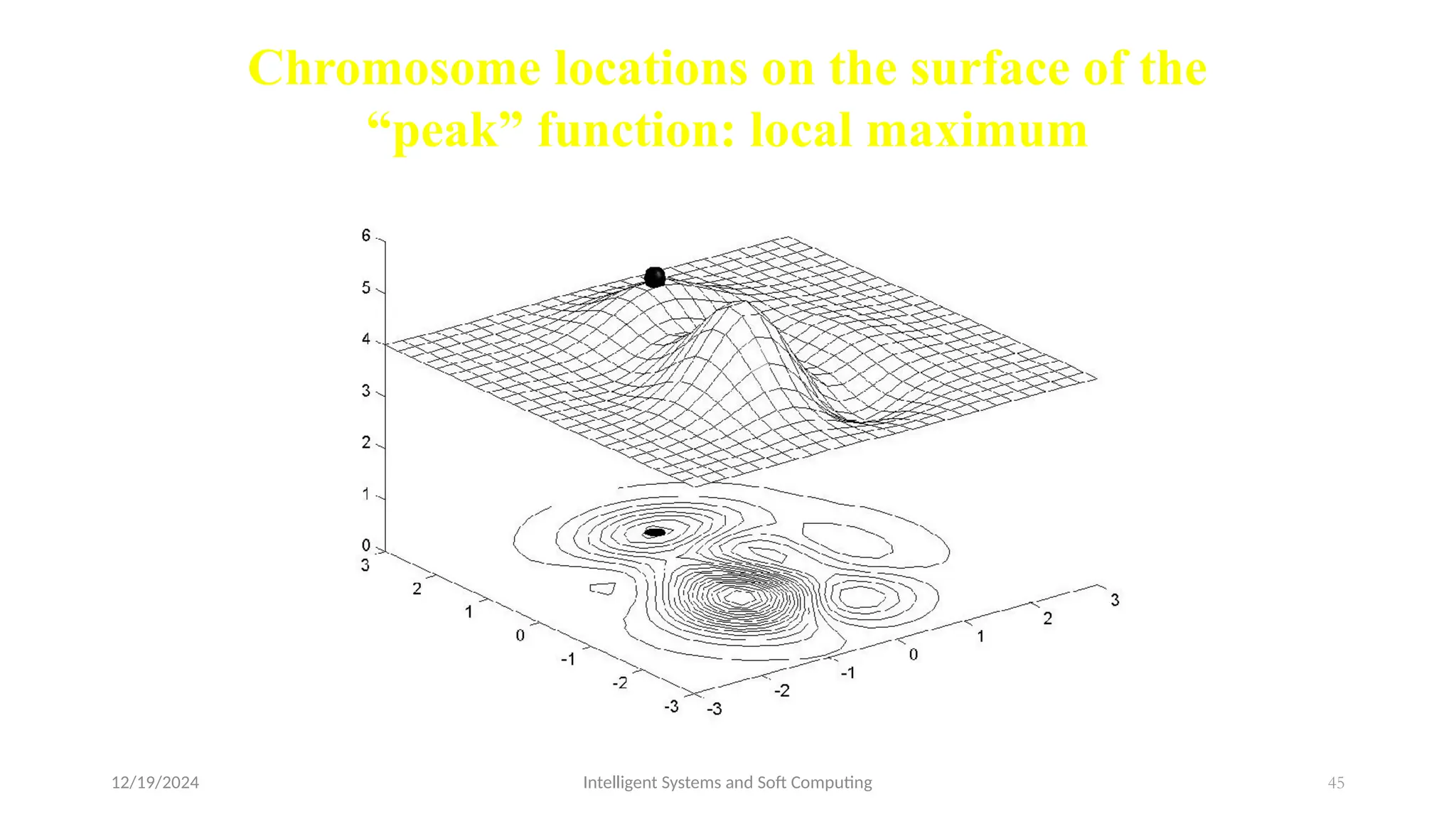

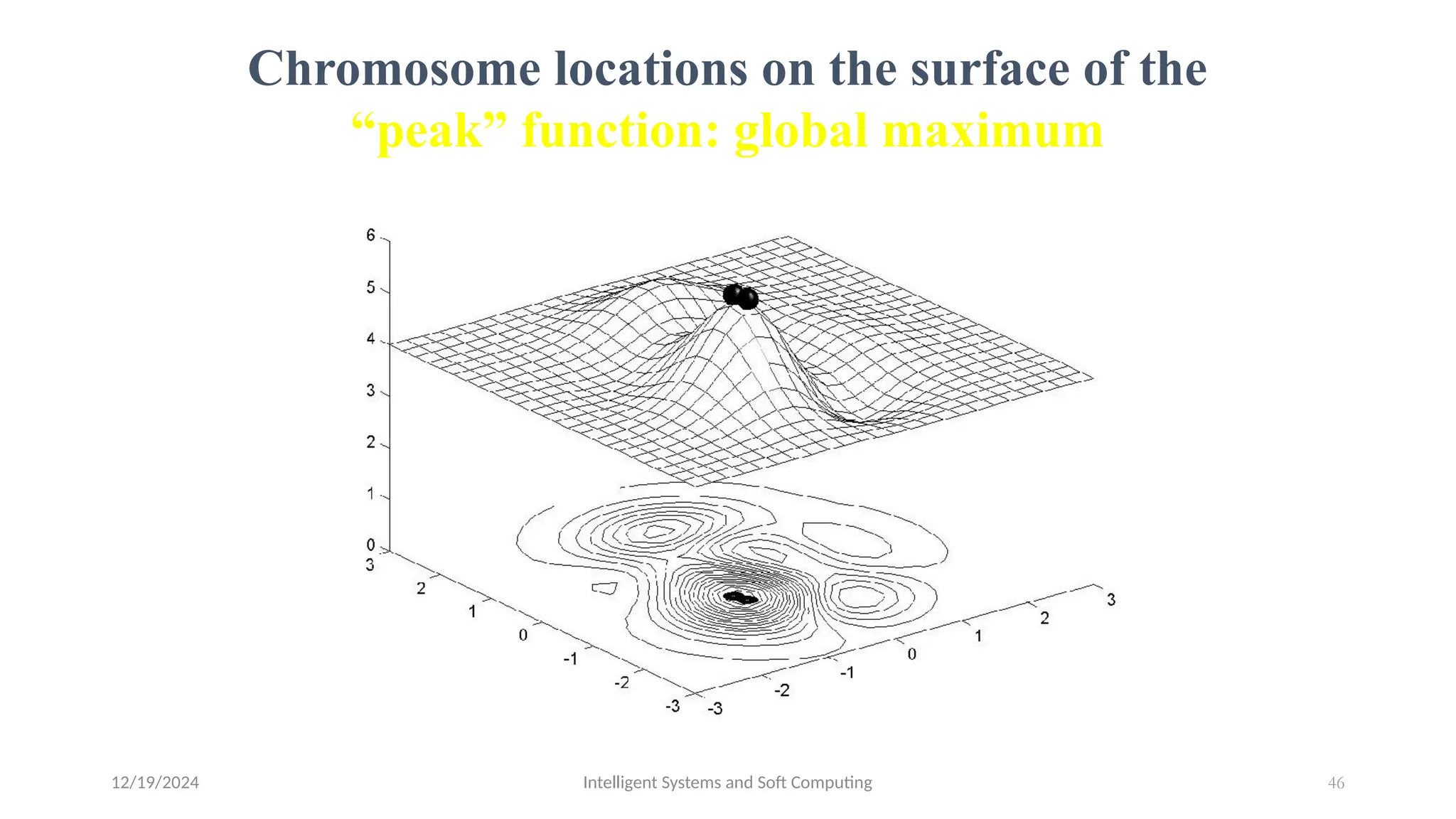

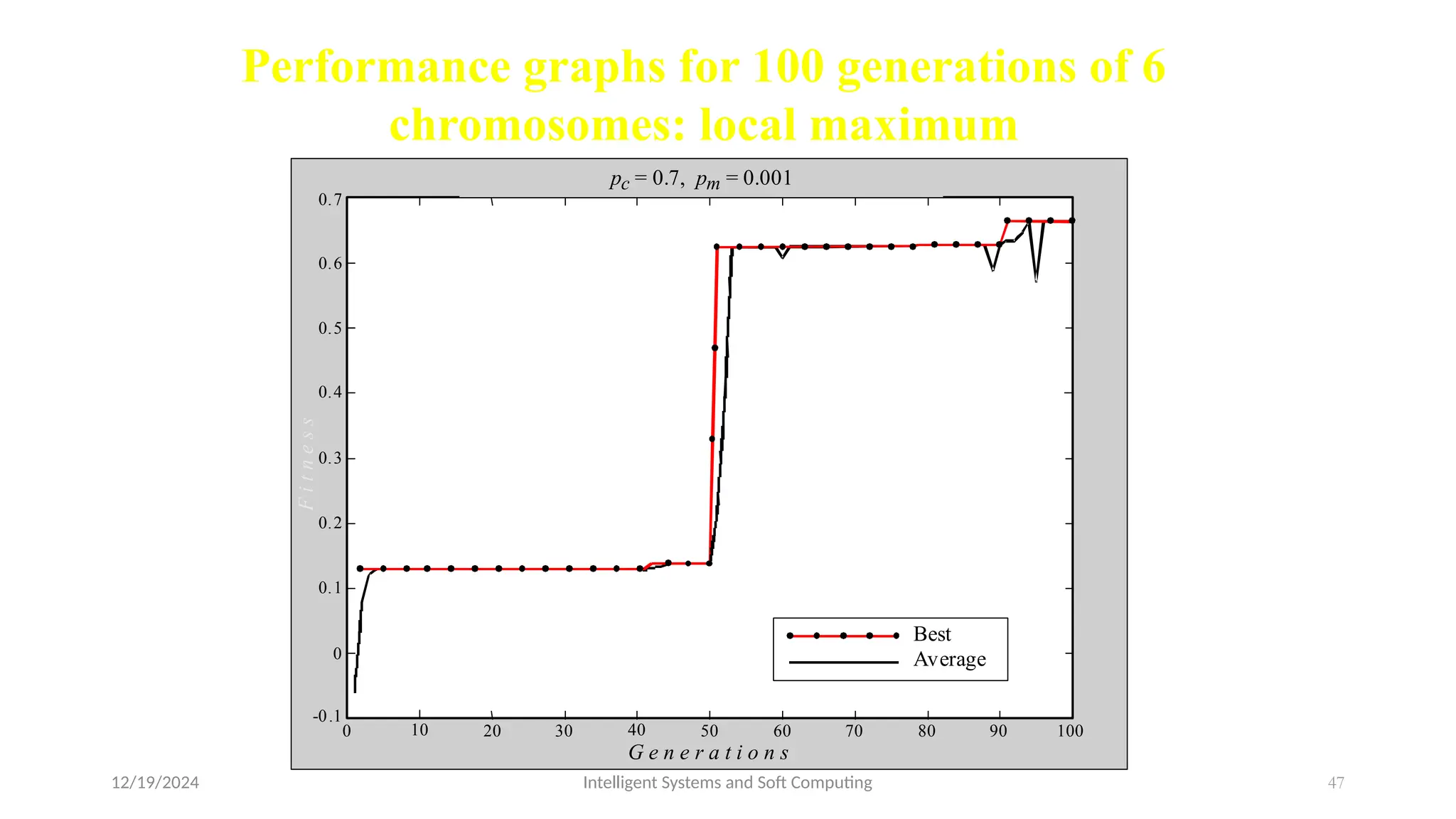

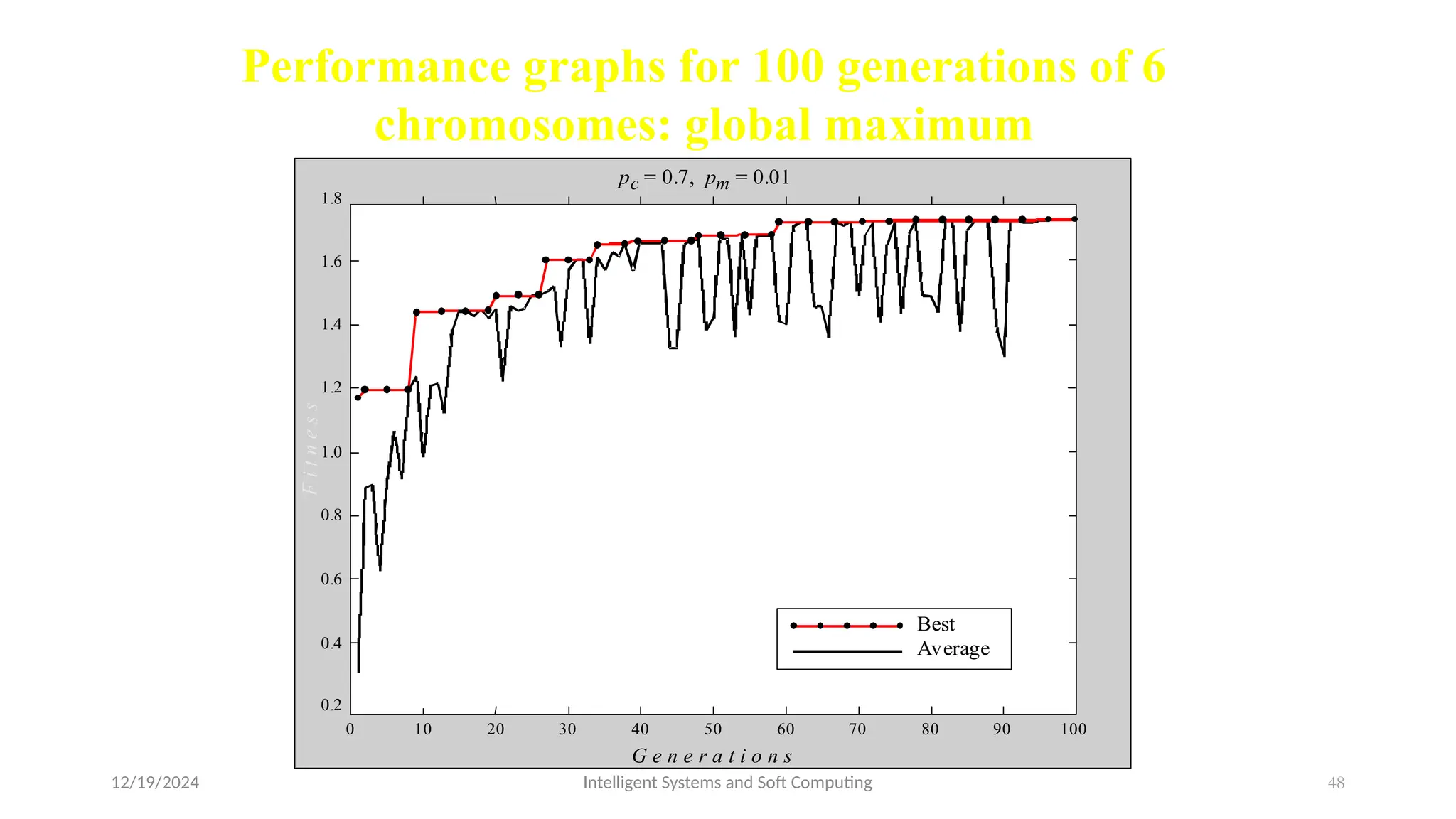

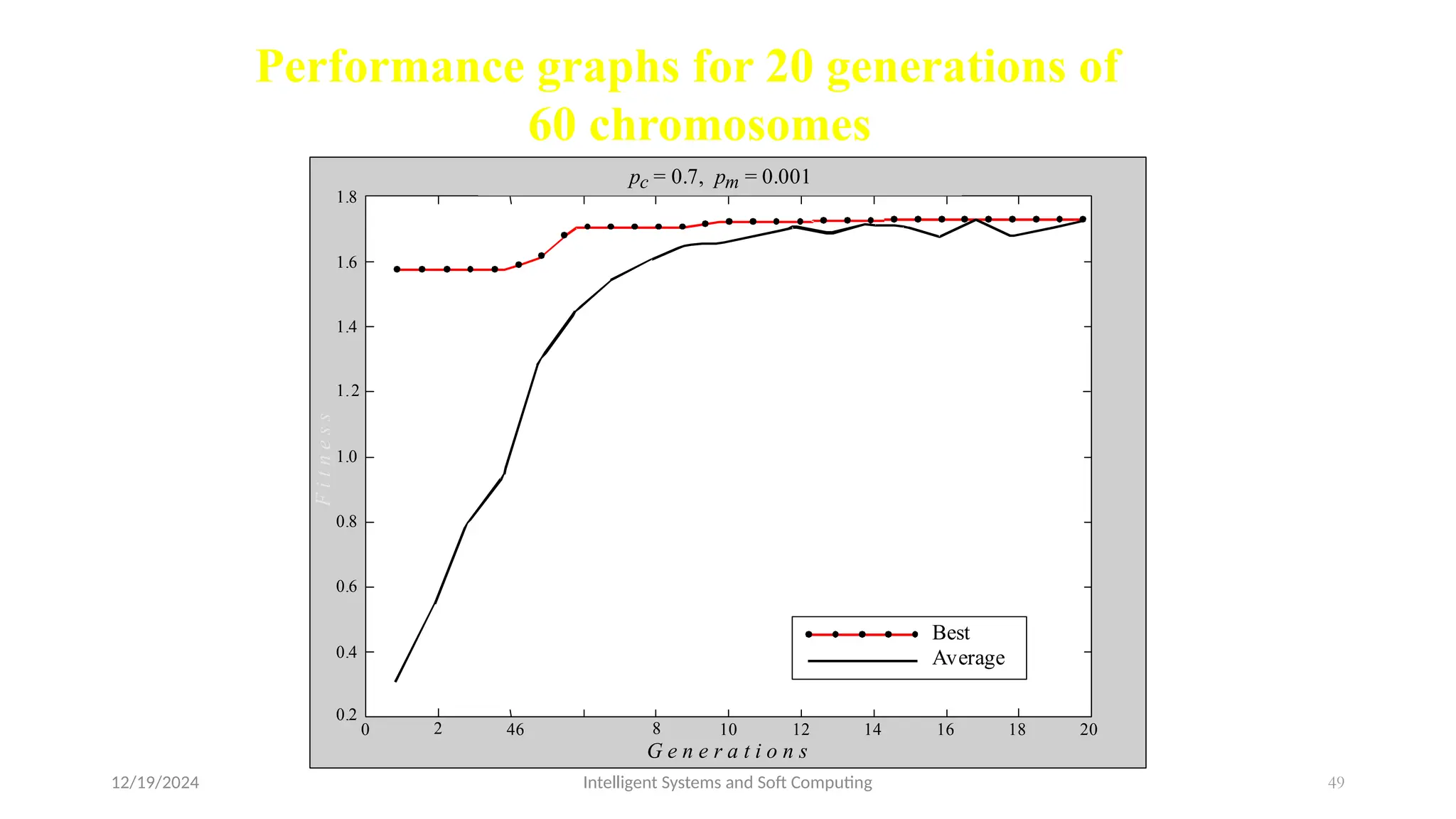

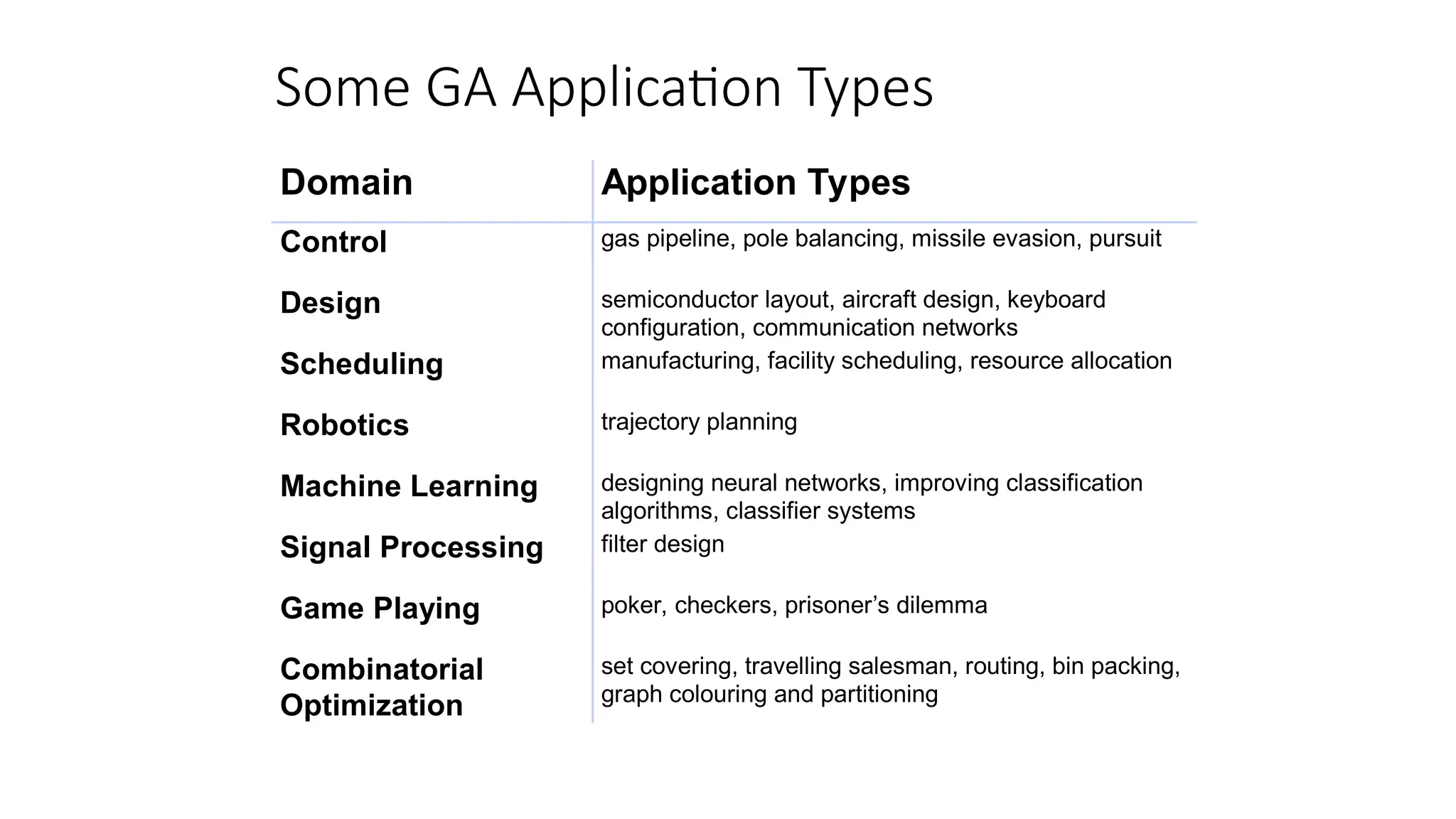

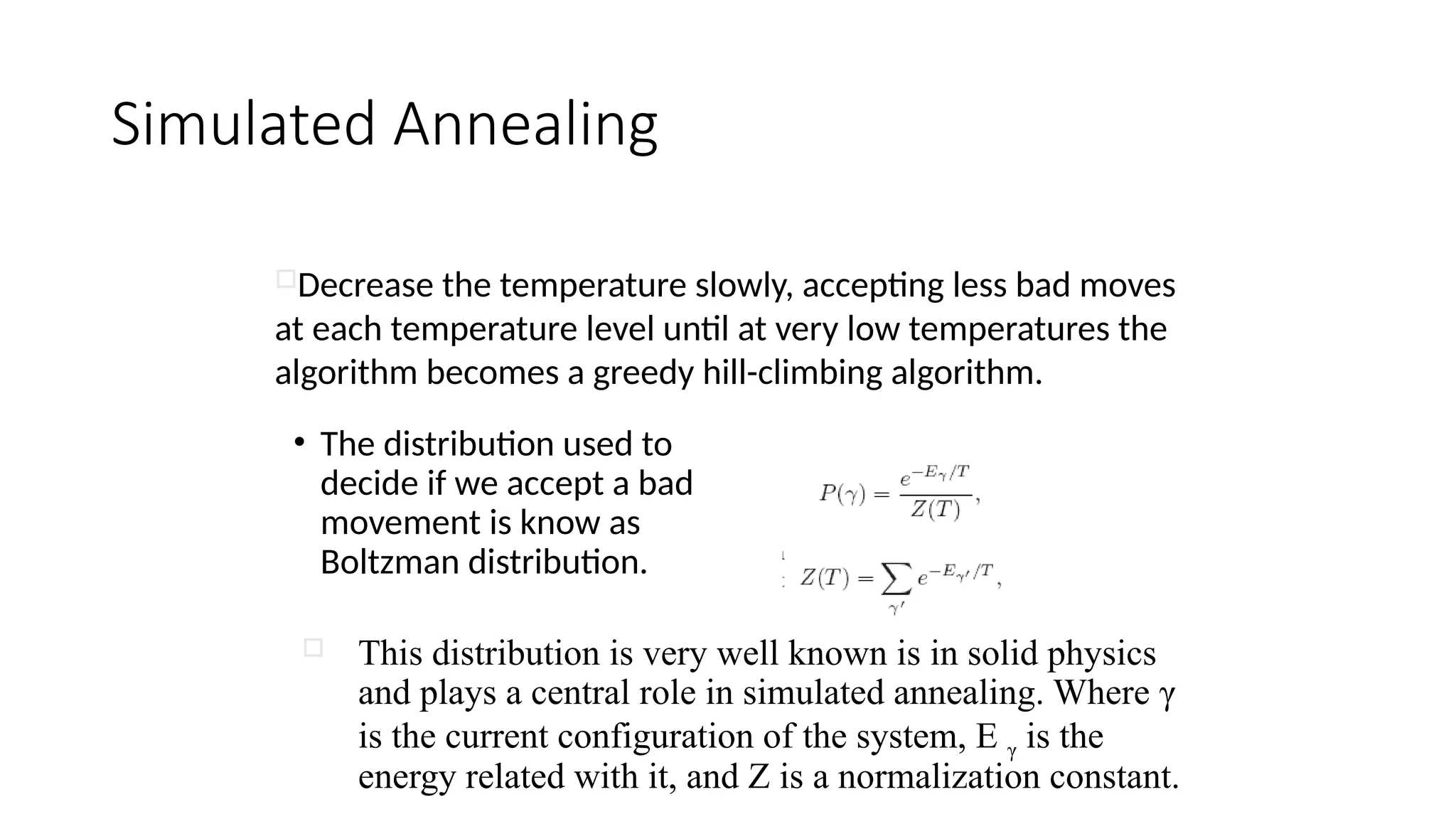

This document covers optimization techniques, including genetic algorithms, particle swarm optimization, and simulated annealing, with an emphasis on their applications in fields such as engineering and science. It describes the genetic algorithm process, from encoding candidate solutions to reproduction and mutation, and highlights the evolutionary principles that underlie these methods. Examples and case studies illustrate how genetic algorithms work and how effectively they find optimal solutions.
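The genetic algorithm cycle summarized above (encode candidate solutions, then repeatedly select, reproduce, and mutate them) can be sketched in a few lines of Python. This is a minimal illustration, not the method from the slides. It assumes a 5-bit binary encoding, the toy objective f(x) = x², tournament selection, single-point crossover, bit-flip mutation, and elitism. All function names and parameter values here are hypothetical choices for illustration.

```python
import random


def decode(bits):
    """Decode a list of 0/1 genes (most significant bit first) into an integer."""
    value = 0
    for b in bits:
        value = value * 2 + b
    return value


def fitness(bits):
    # Hypothetical objective for illustration: maximize f(x) = x^2.
    x = decode(bits)
    return x * x


def tournament(pop, rng, k=2):
    # Selection: sample k individuals at random and keep the fittest.
    return max(rng.sample(pop, k), key=fitness)


def crossover(a, b, rng):
    # Reproduction: single-point crossover swaps the tails after a random cut.
    point = rng.randint(1, len(a) - 1)
    return a[:point] + b[point:], b[:point] + a[point:]


def mutate(bits, rate, rng):
    # Mutation: flip each gene independently with probability `rate`.
    return [1 - g if rng.random() < rate else g for g in bits]


def genetic_algorithm(n_bits=5, pop_size=20, generations=30,
                      crossover_rate=0.9, mutation_rate=0.02, seed=0):
    rng = random.Random(seed)
    # Encoding: each individual is a fixed-length bit string.
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best = max(pop, key=fitness)
    history = [fitness(best)]
    for _ in range(generations):
        new_pop = [best[:]]  # elitism: carry the best individual forward unchanged
        while len(new_pop) < pop_size:
            p1, p2 = tournament(pop, rng), tournament(pop, rng)
            if rng.random() < crossover_rate:
                c1, c2 = crossover(p1, p2, rng)
            else:
                c1, c2 = p1[:], p2[:]
            new_pop.append(mutate(c1, mutation_rate, rng))
            if len(new_pop) < pop_size:
                new_pop.append(mutate(c2, mutation_rate, rng))
        pop = new_pop
        best = max(pop, key=fitness)
        history.append(fitness(best))  # best fitness per generation
    return best, history


if __name__ == "__main__":
    best, history = genetic_algorithm()
    print("best x =", decode(best), "fitness =", history[-1])
```

Because the best individual is copied into each new generation unchanged, the best fitness recorded in `history` can never decrease. Without elitism, crossover and mutation could destroy the best solution found so far.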