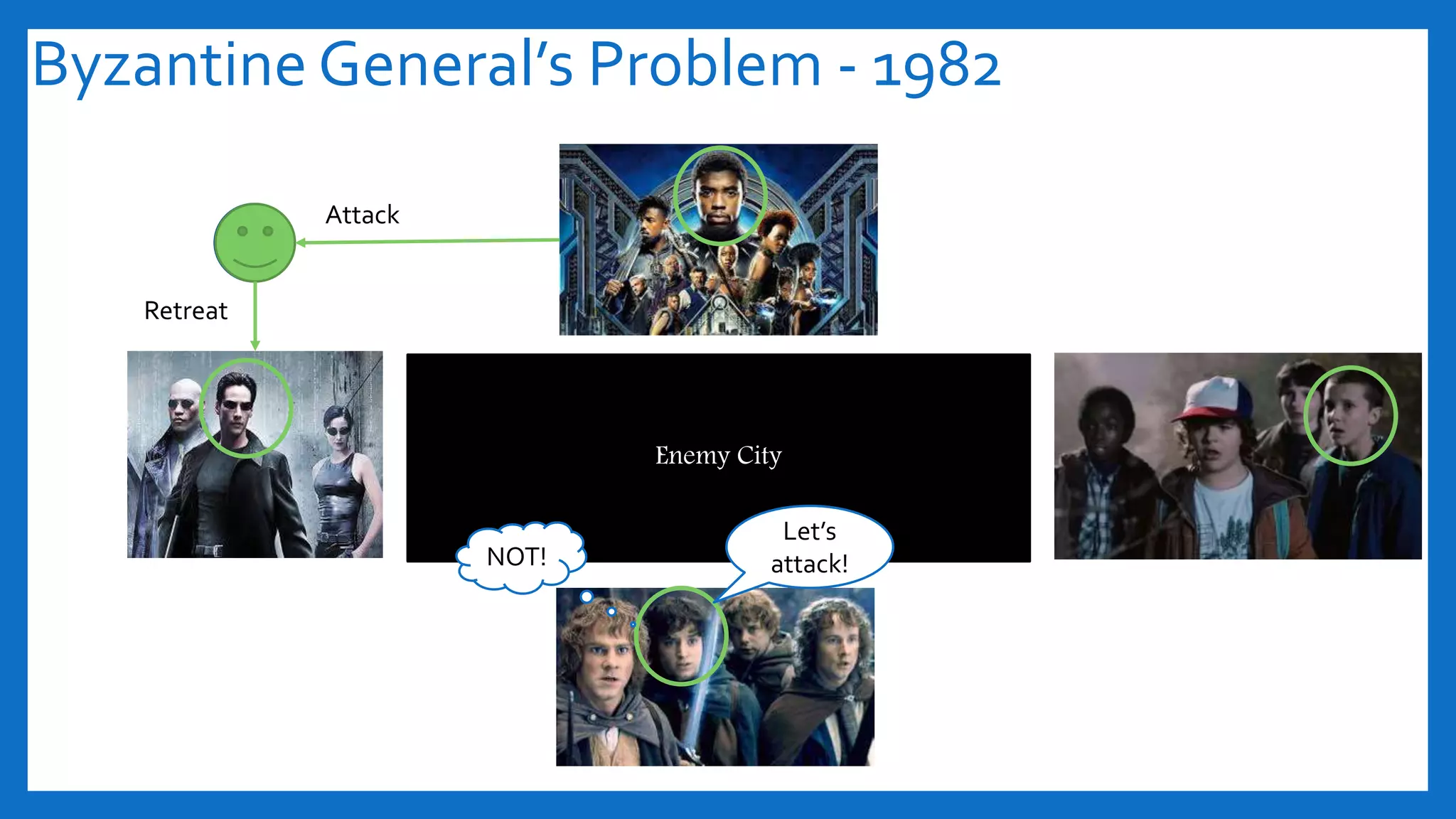

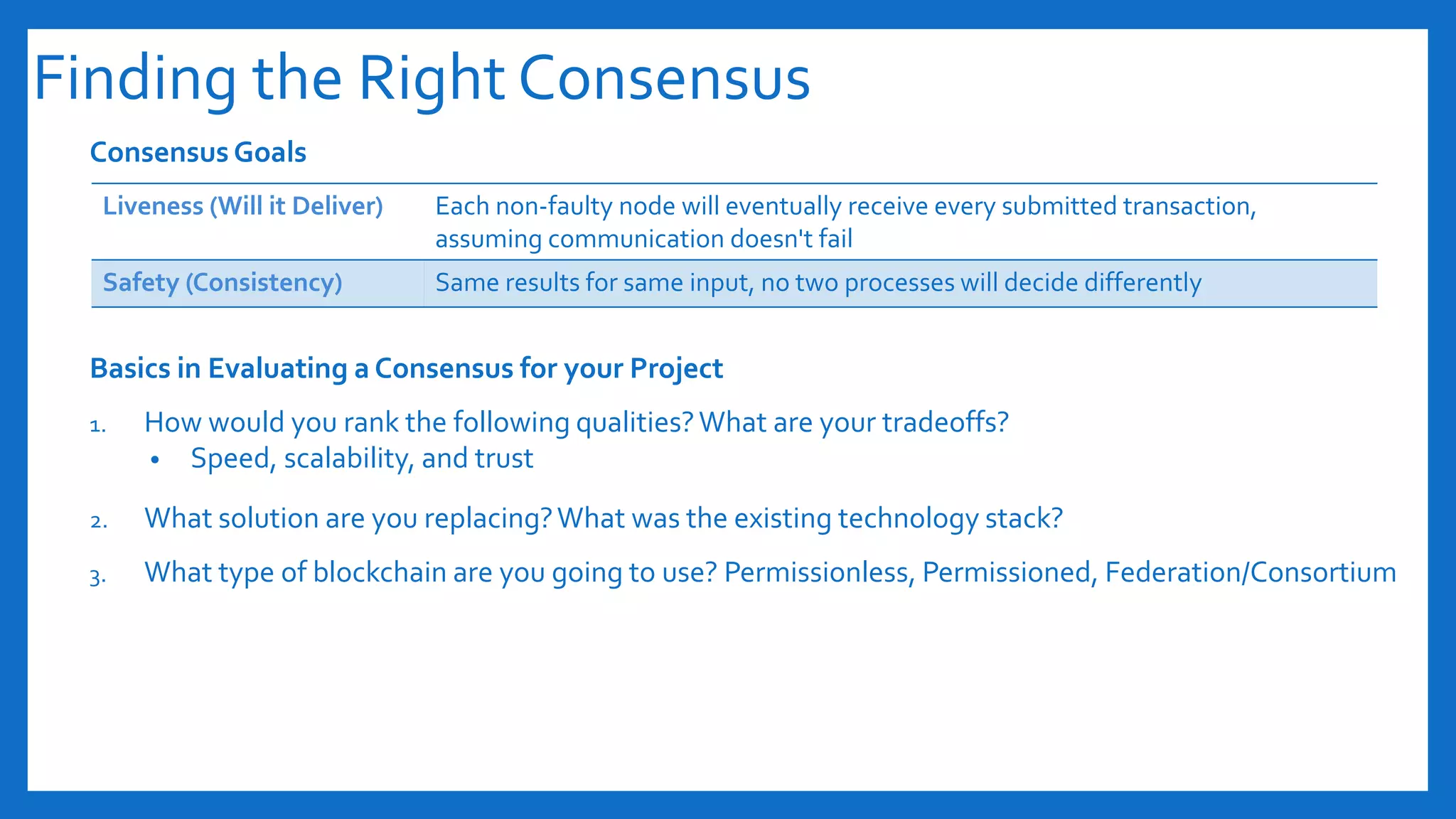

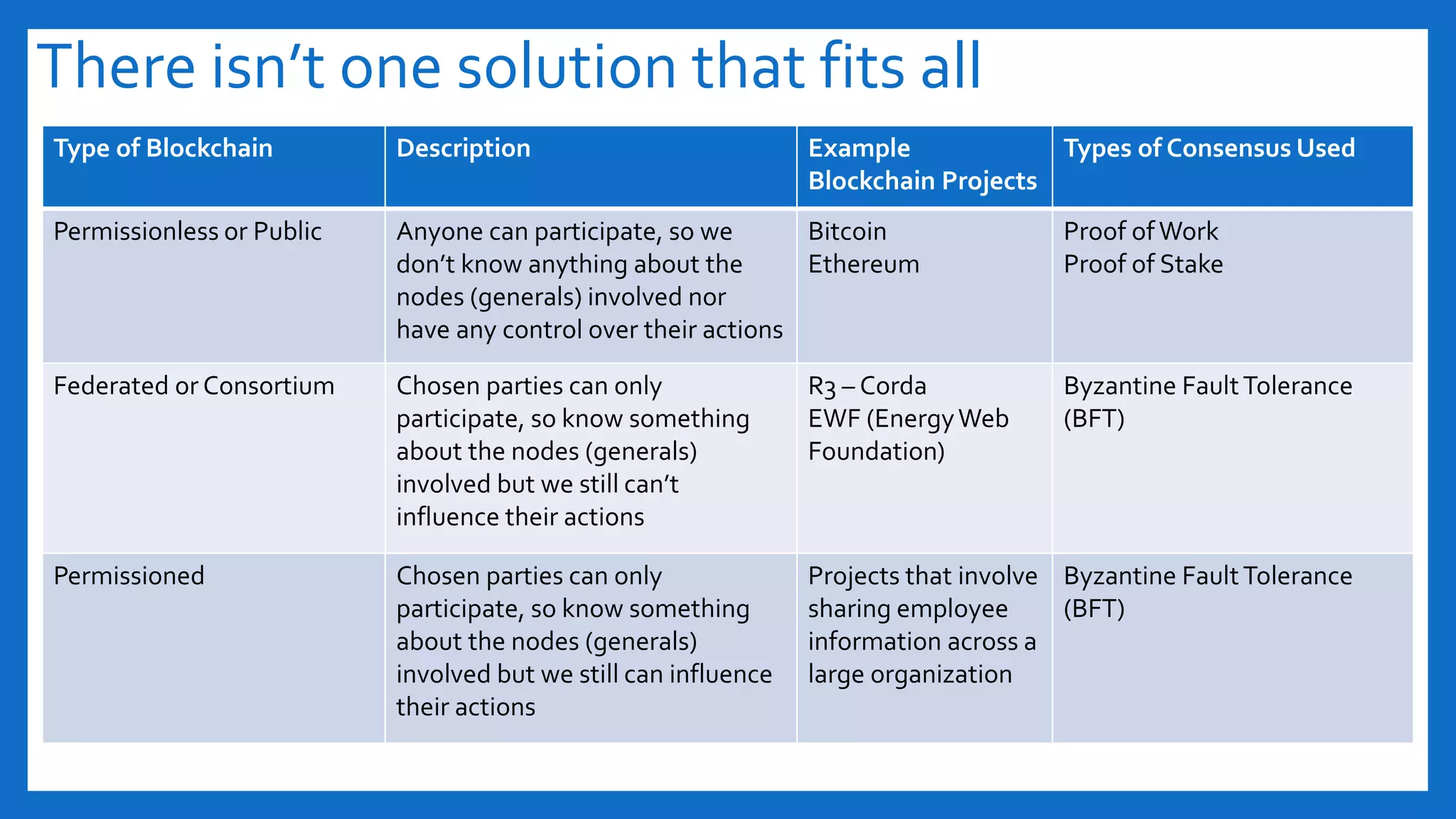

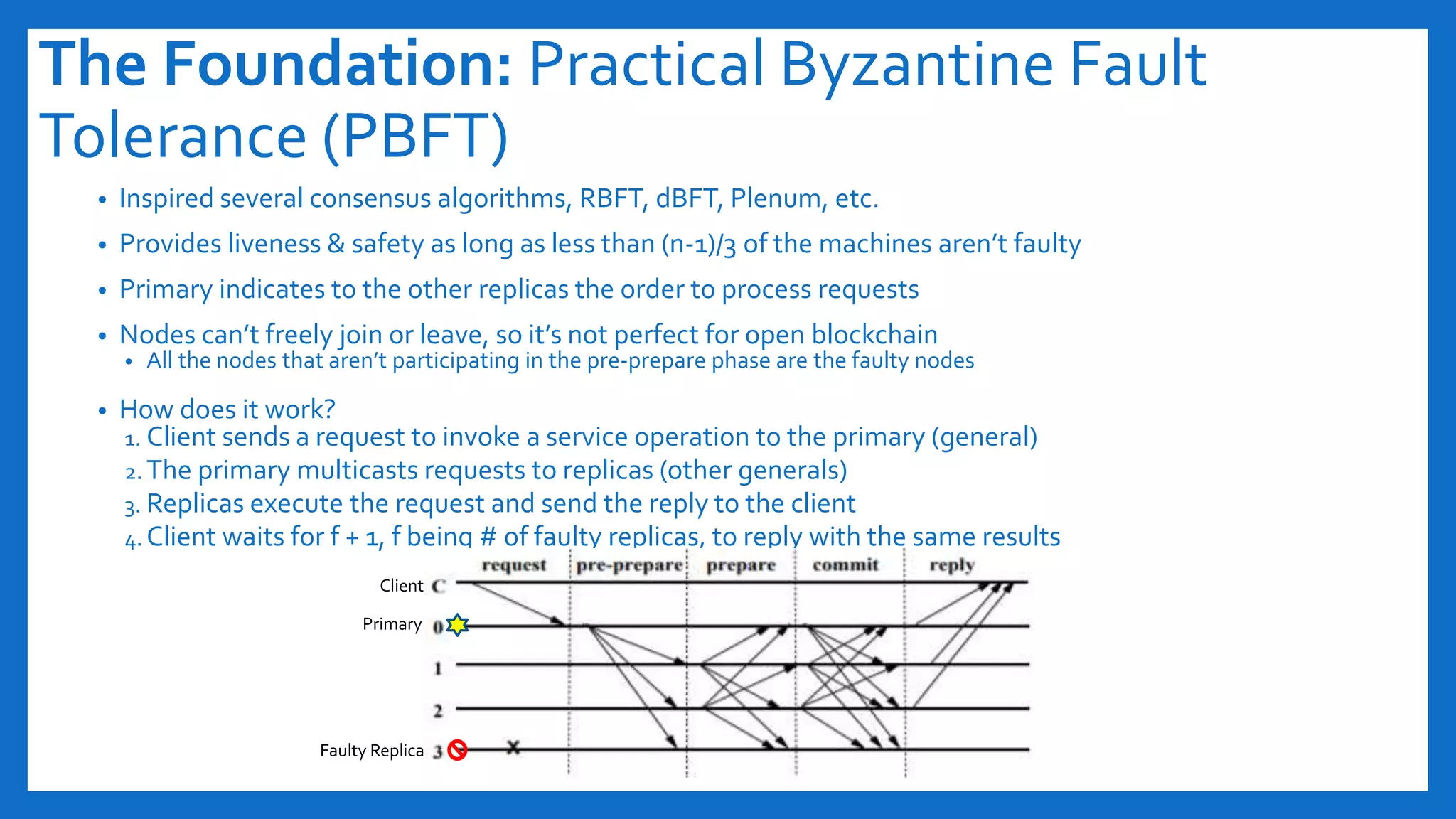

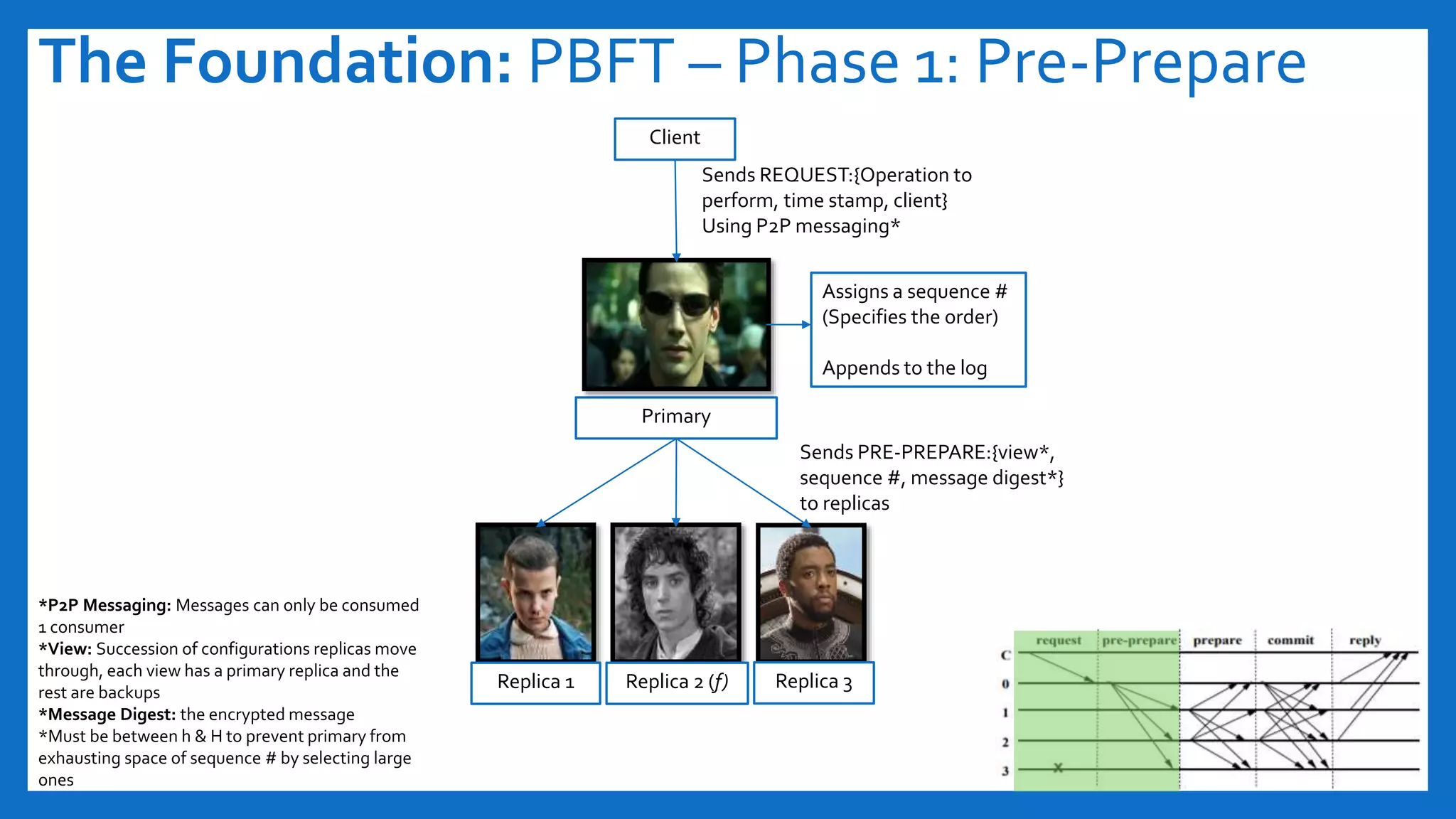

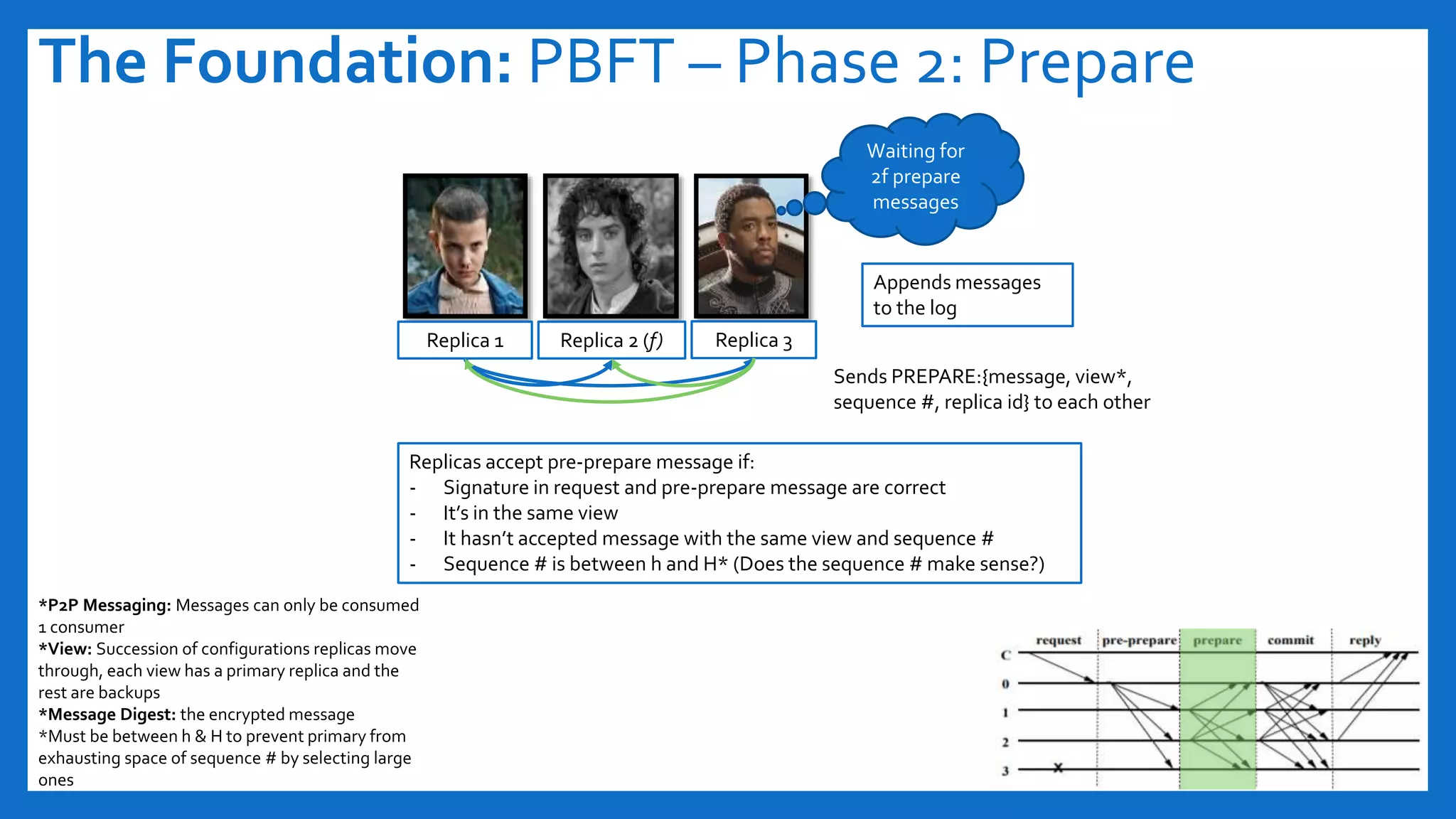

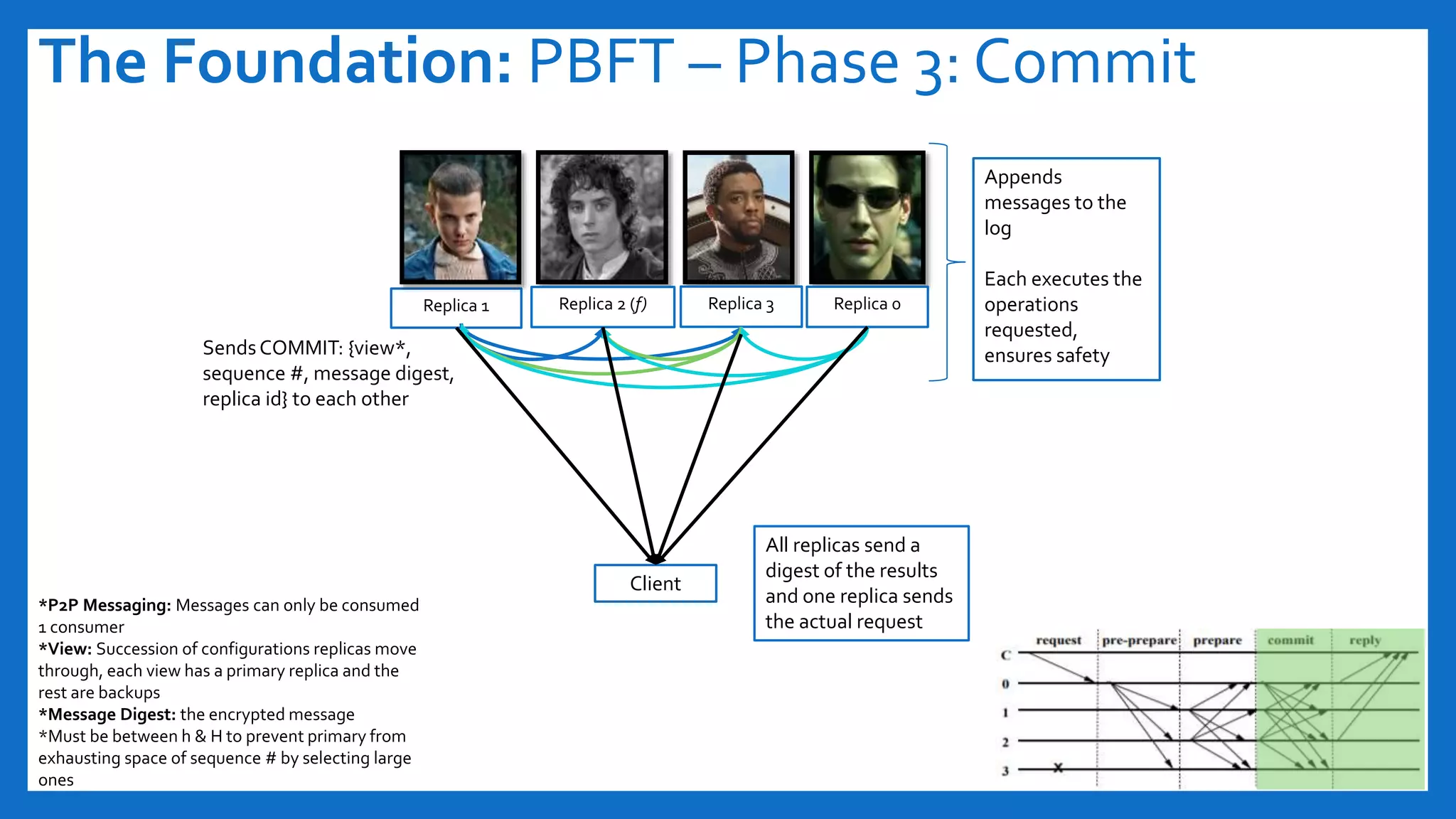

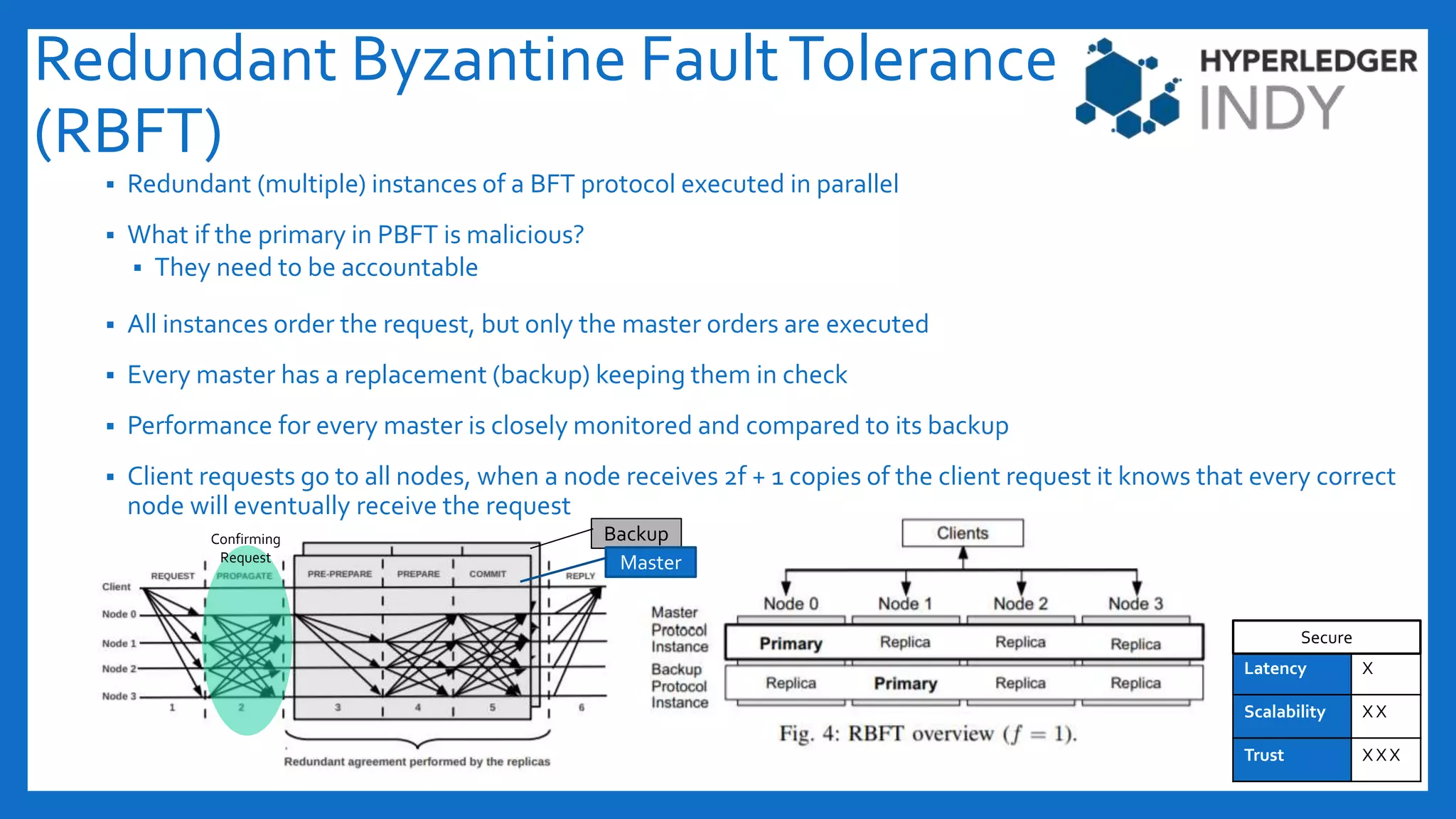

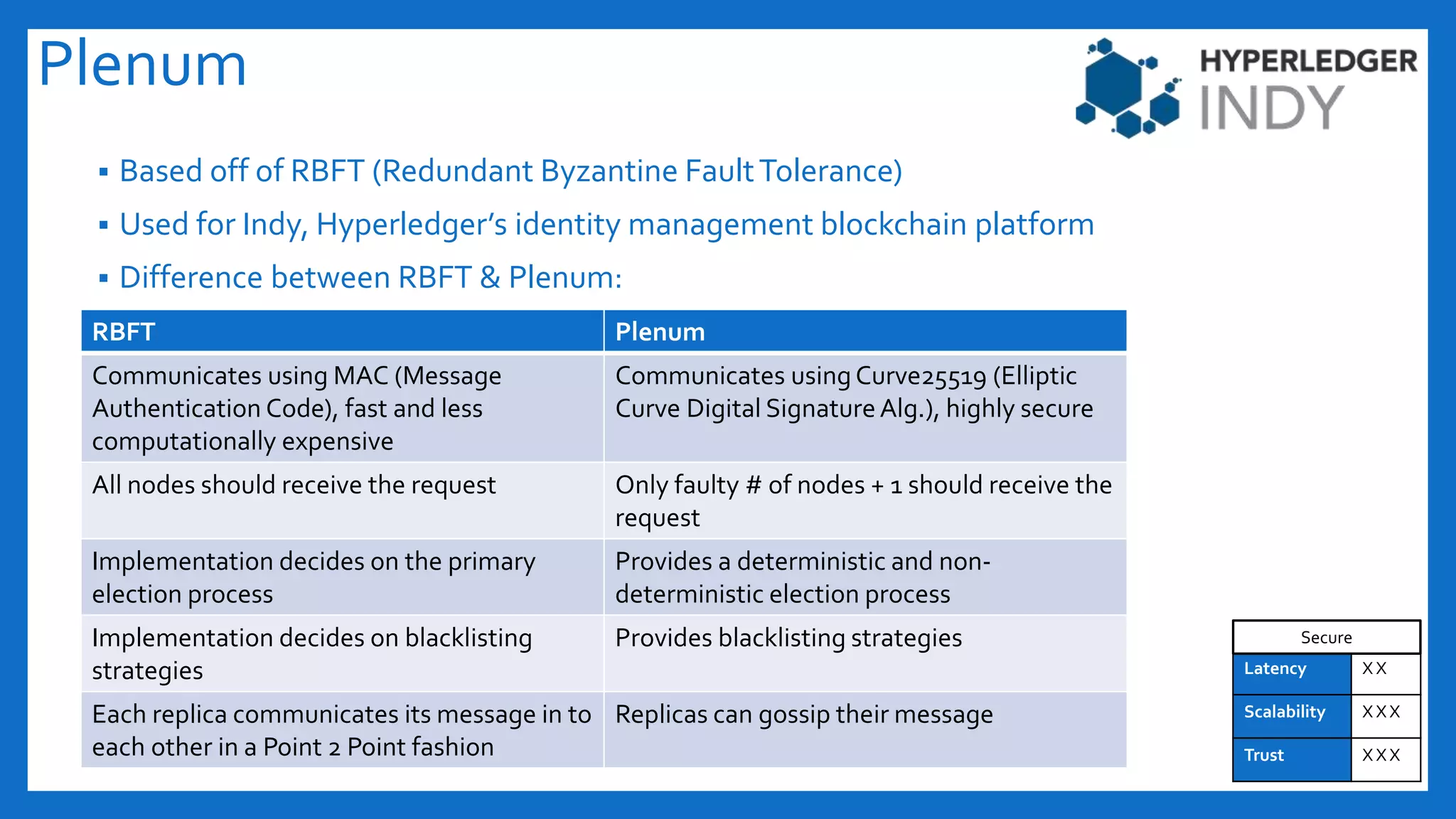

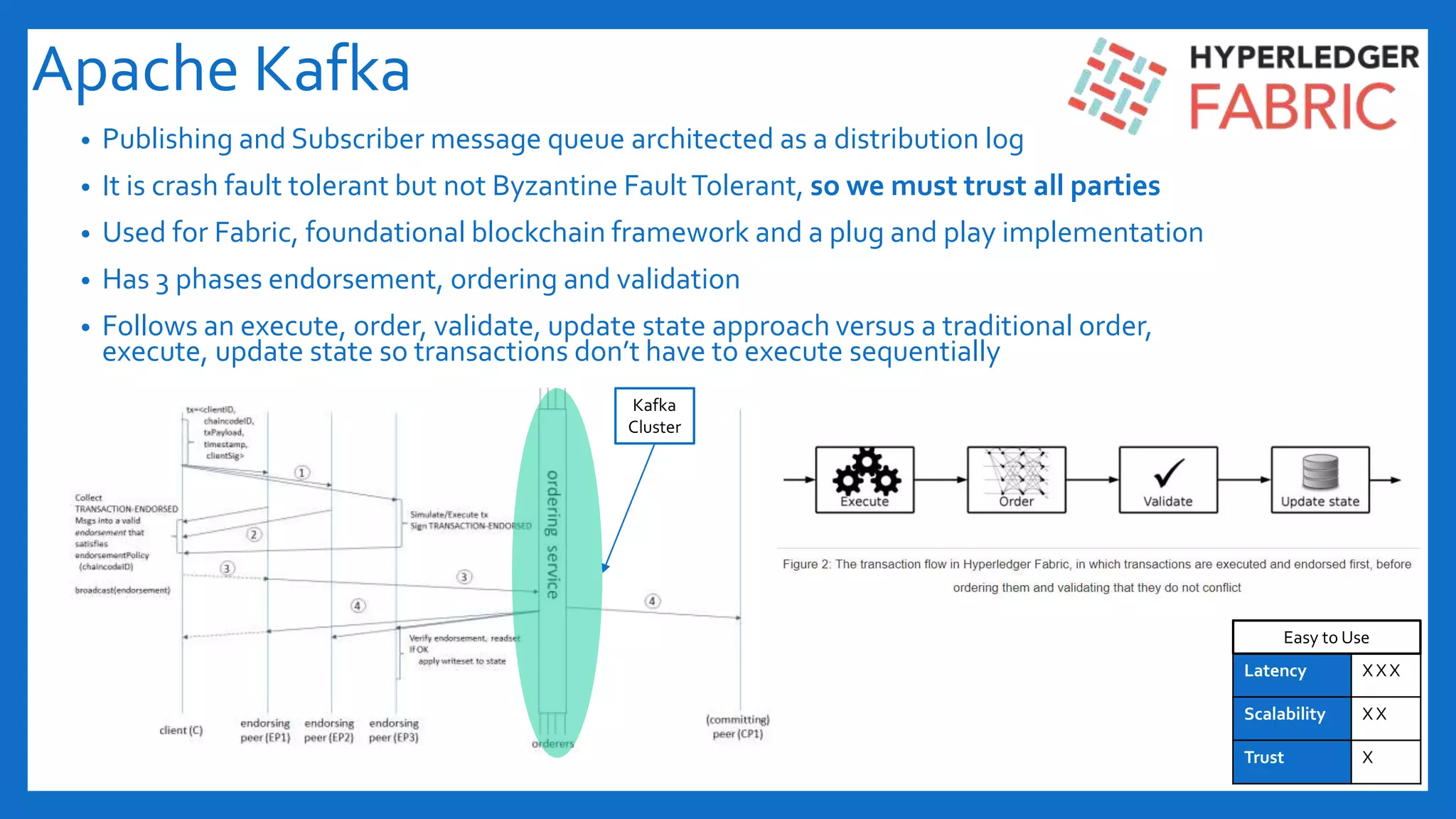

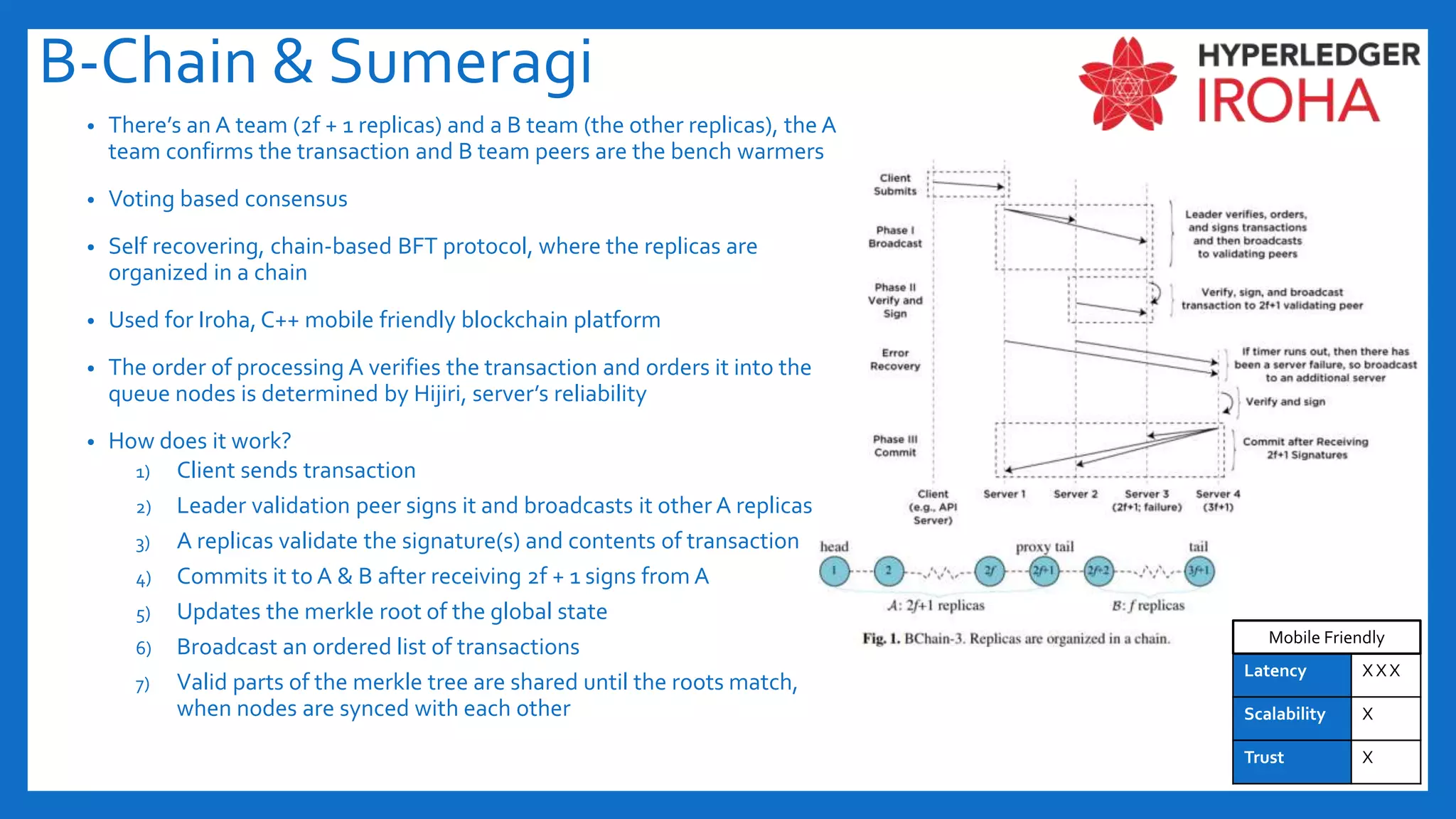

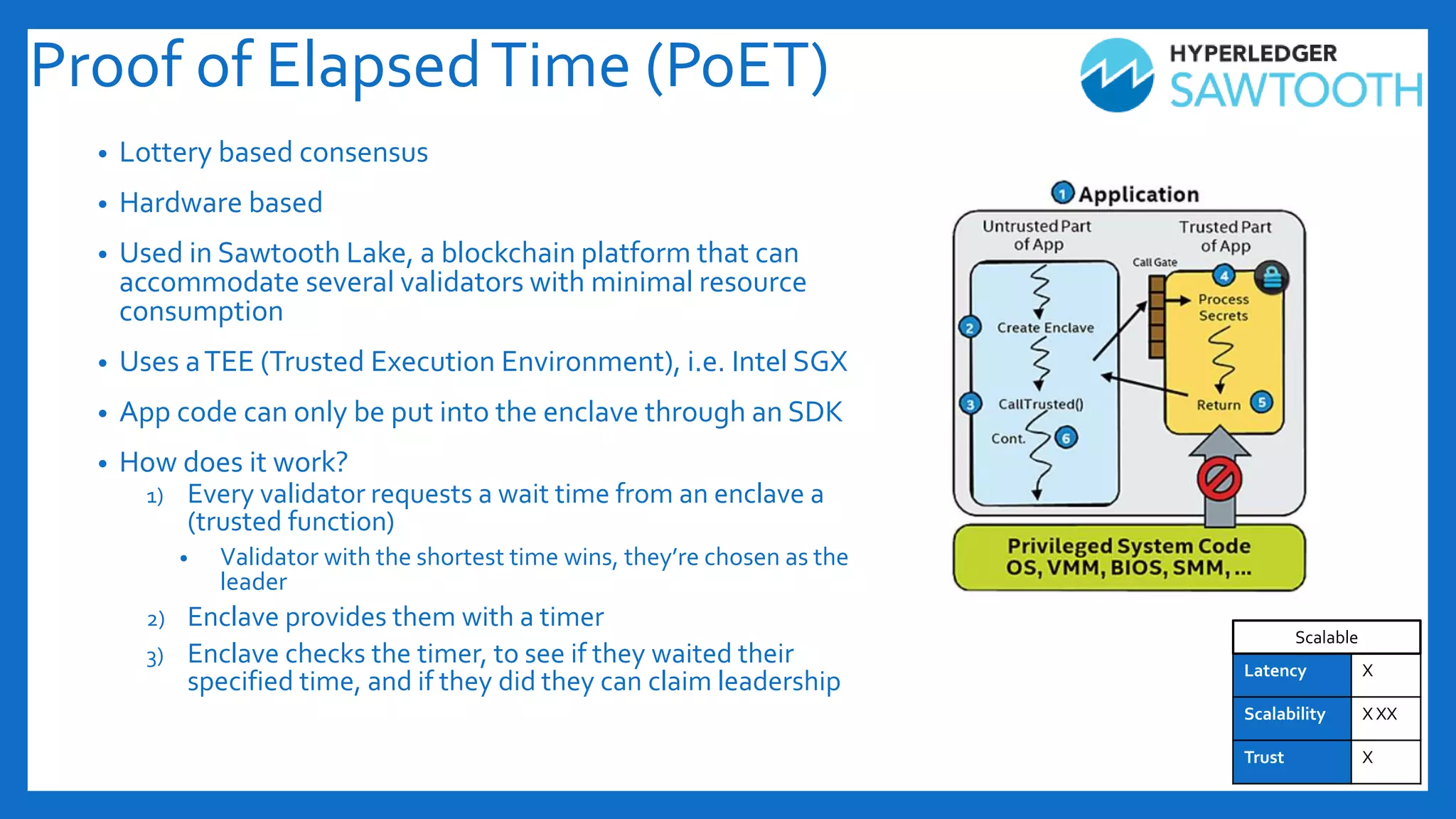

The document discusses Byzantine fault tolerance (BFT) consensus algorithms, which blockchains use so that nodes that cannot trust one another, some of which may be faulty or malicious, can still agree on a shared state. It describes several consensus mechanisms, including Proof of Work and Proof of Stake, as well as BFT variants such as PBFT (Practical Byzantine Fault Tolerance), RBFT (Redundant Byzantine Fault Tolerance), and Plenum, and outlines the characteristics and typical applications of each. It stresses choosing a consensus algorithm according to a project's needs, such as speed, scalability, and the level of trust among participants, and covers practical considerations for implementing these solutions.
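To make the fault-tolerance idea concrete, the sketch below computes the standard bound used by PBFT-style protocols: a network of `n` replicas can tolerate at most `f` Byzantine replicas when `n >= 3f + 1`. It also computes a vote quorum of `floor((n + f) / 2) + 1`, which reduces to the familiar `2f + 1` when `n = 3f + 1`. This is a minimal illustration of the general arithmetic, not taken from the document's slides. The function names `max_faulty` and `quorum_size` are hypothetical, and the sketch does not model any specific implementation of PBFT, RBFT, or Plenum.

```python
def max_faulty(n: int) -> int:
    """Largest number of Byzantine replicas f tolerated, i.e. the max f with n >= 3f + 1."""
    if n < 1:
        raise ValueError("need at least one replica")
    return (n - 1) // 3


def quorum_size(n: int) -> int:
    """Votes needed so any two quorums overlap in at least f + 1 replicas
    (so at least one honest replica is shared), while the n - f honest
    replicas can still form a quorum on their own.
    Equals ceil((n + f + 1) / 2); this is 2f + 1 when n == 3f + 1."""
    f = max_faulty(n)
    return (n + f) // 2 + 1


if __name__ == "__main__":
    for n in (4, 7, 10):
        # Prints replica count, tolerated faults, and quorum size.
        print(n, max_faulty(n), quorum_size(n))
```

For 4, 7, and 10 replicas this prints `4 1 3`, `7 2 5`, and `10 3 7`: each additional tolerated fault costs three more replicas, which is one reason classical BFT protocols suit smaller, permissioned networks better than open ones.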