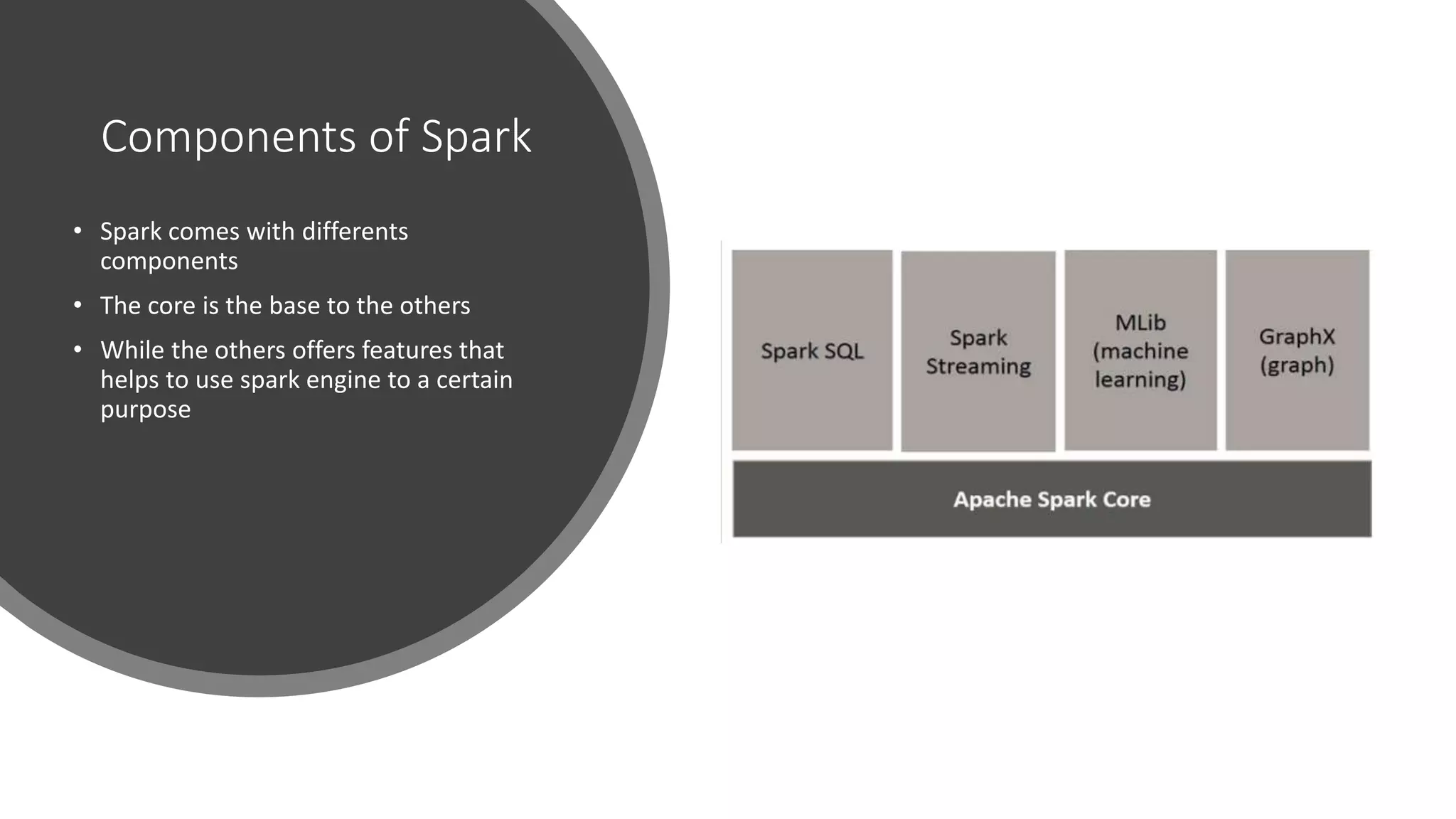

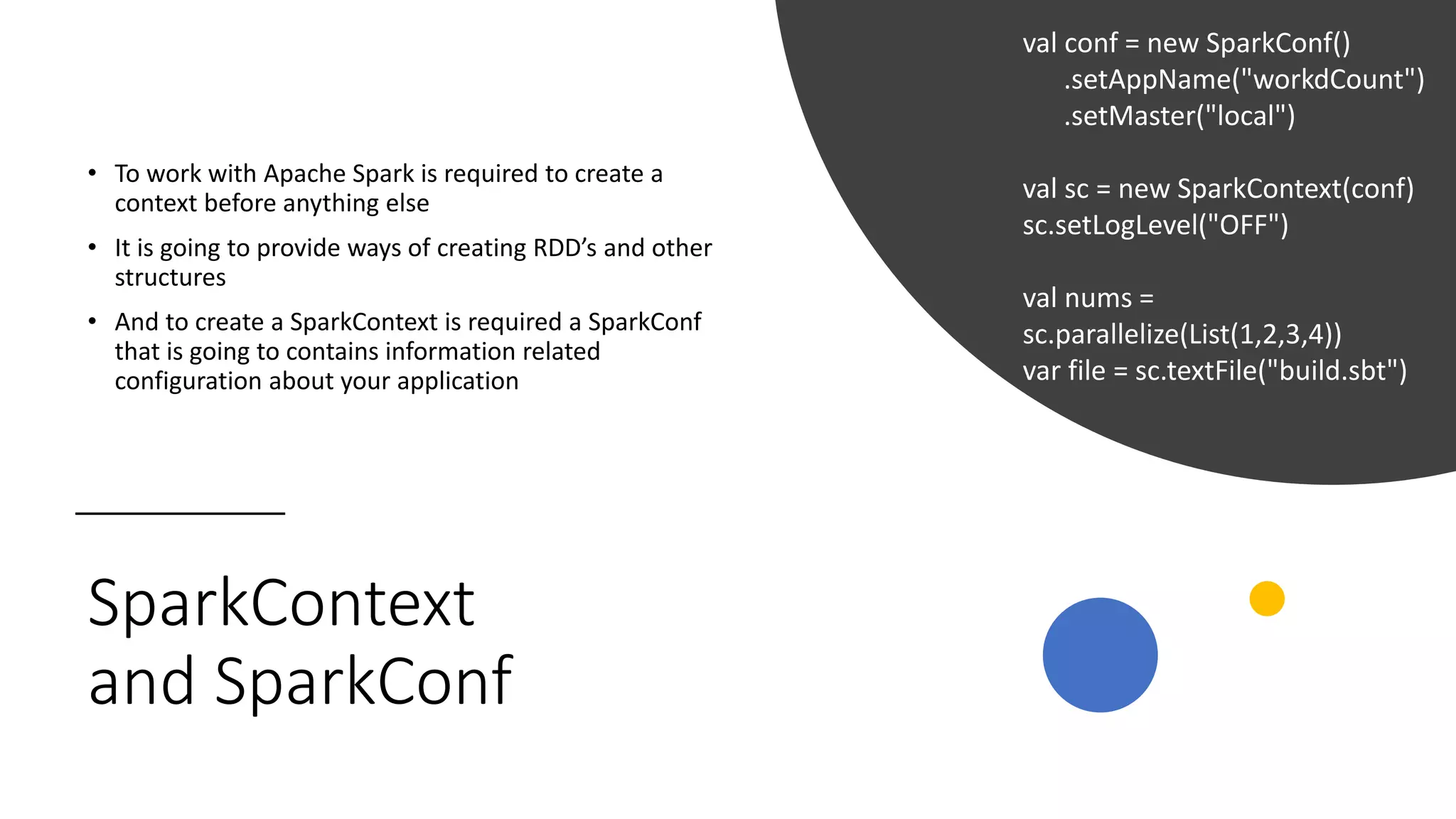

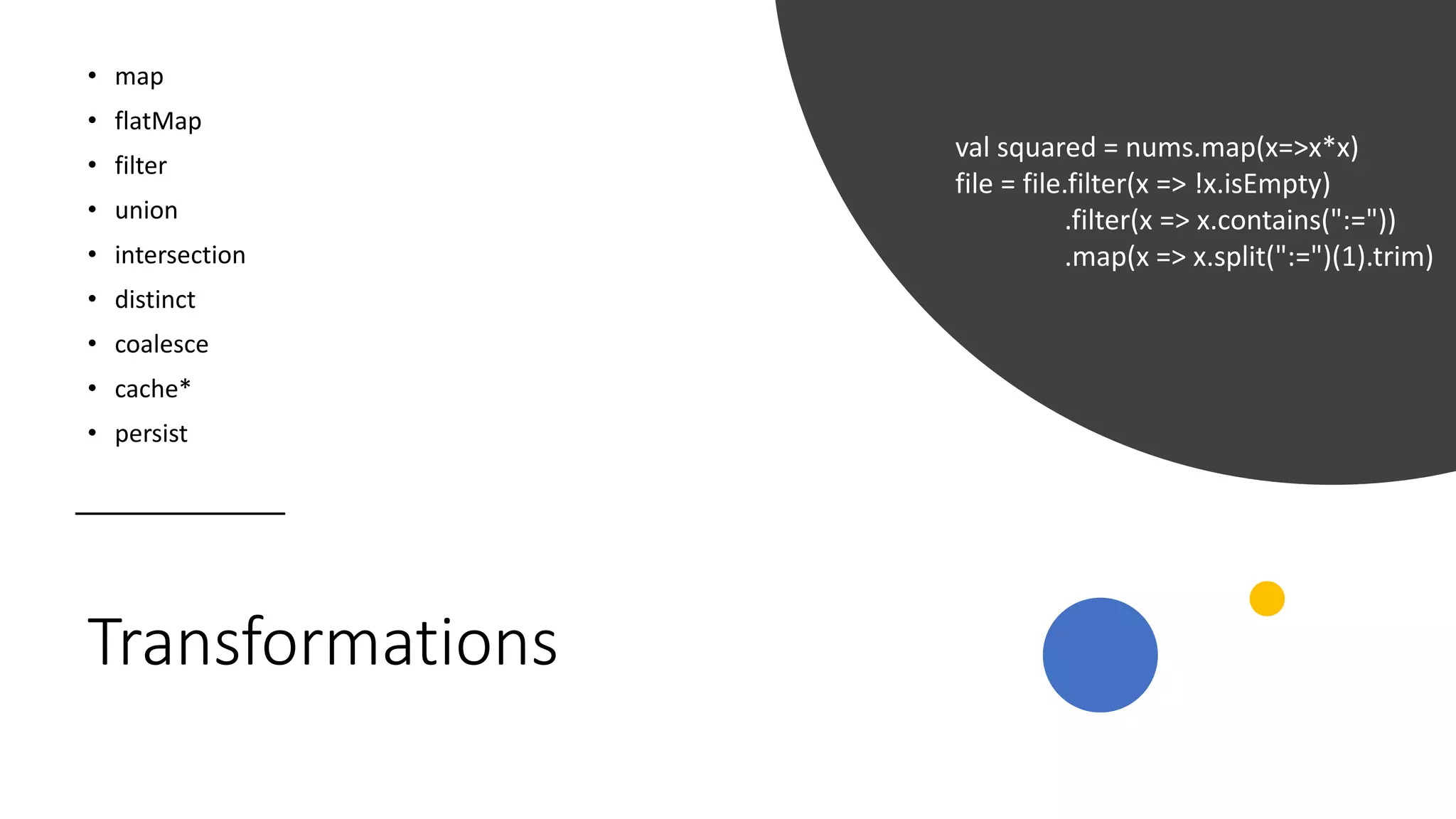

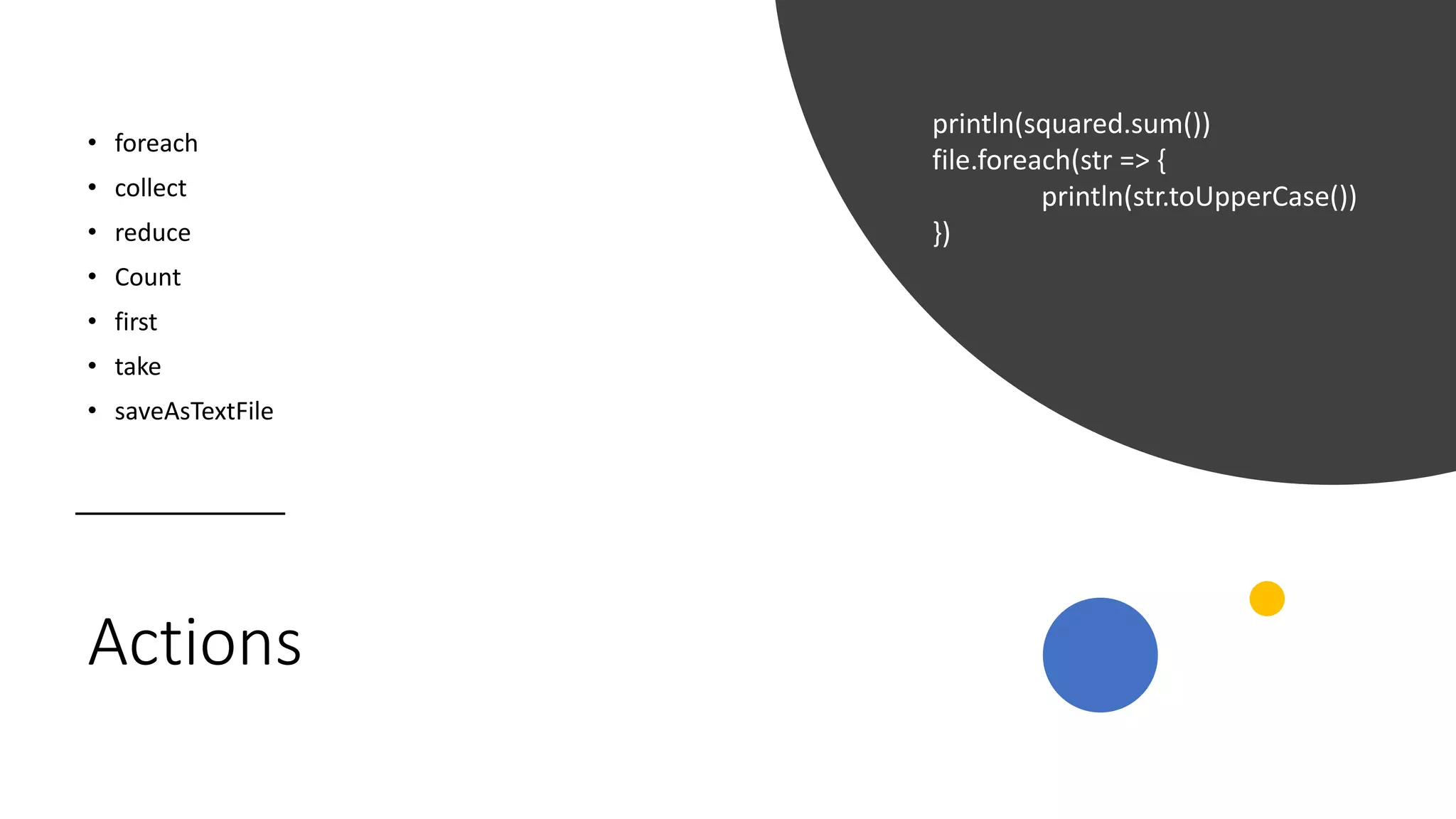

Apache Spark is an open-source distributed processing engine designed for iterative and interactive processing of big data. It provides a framework with a functional API for building distributed applications that run across a cluster. Spark is made up of several components: the core provides the base functionality, and the other components build on it for specific purposes such as SQL, streaming, and machine learning. Spark's API follows the functional programming paradigm, working with immutable data and functions without side effects. Like Hadoop, Spark builds on the map-reduce model, but its transformations are lazy: they only describe a computation, and nothing runs until an action triggers execution. Spark also improves on Hadoop's performance by caching data in memory.
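The difference between lazy transformations and actions can be easier to see in a small sketch. The example below is a toy, single-machine model written in plain Python, not Spark's API: the `LazyDataset` class and the `square` helper are hypothetical names introduced only for illustration. It assumes a dataset is an immutable description of a computation, that `map` and `filter` return new datasets without running anything, and that `collect` and `reduce` are the actions that trigger execution.

```python
from functools import reduce as fold


class LazyDataset:
    """Toy stand-in for a distributed dataset (hypothetical, not Spark's API)."""

    def __init__(self, compute):
        self._compute = compute    # zero-argument callable returning an iterator
        self._use_cache = False
        self._cached = None

    @classmethod
    def from_list(cls, items):
        data = tuple(items)        # snapshot the input so it cannot change later
        return cls(lambda: iter(data))

    def _iterate(self):
        # Serve from memory if this dataset was already materialized.
        if self._cached is not None:
            return iter(self._cached)
        if self._use_cache:
            self._cached = tuple(self._compute())
            return iter(self._cached)
        return self._compute()

    # Transformations: build a new dataset, execute nothing.
    def map(self, fn):
        return LazyDataset(lambda: (fn(x) for x in self._iterate()))

    def filter(self, pred):
        return LazyDataset(lambda: (x for x in self._iterate() if pred(x)))

    def cache(self):
        # Only marks the dataset; data is stored the first time an action runs.
        self._use_cache = True
        return self

    # Actions: run the recorded computation and return a plain Python value.
    def collect(self):
        return list(self._iterate())

    def reduce(self, fn):
        return fold(fn, self._iterate())


calls = []  # bookkeeping for the demo only, to observe when work happens


def square(x):
    calls.append(x)
    return x * x


numbers = LazyDataset.from_list([1, 2, 3, 4])
squares = numbers.map(square).cache()          # transformation: nothing runs yet
evens = squares.filter(lambda x: x % 2 == 0)   # still nothing runs

calls_before_action = list(calls)              # empty: no action has been called

total = squares.reduce(lambda a, b: a + b)     # action: computes and caches squares
even_squares = evens.collect()                 # action: reuses the cached squares
```

After both actions, `square` has been called once per input element rather than twice, because the second action reads the cached result. In this toy model everything runs in one process; a real cluster would split the data into partitions and run the same steps in parallel on each.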