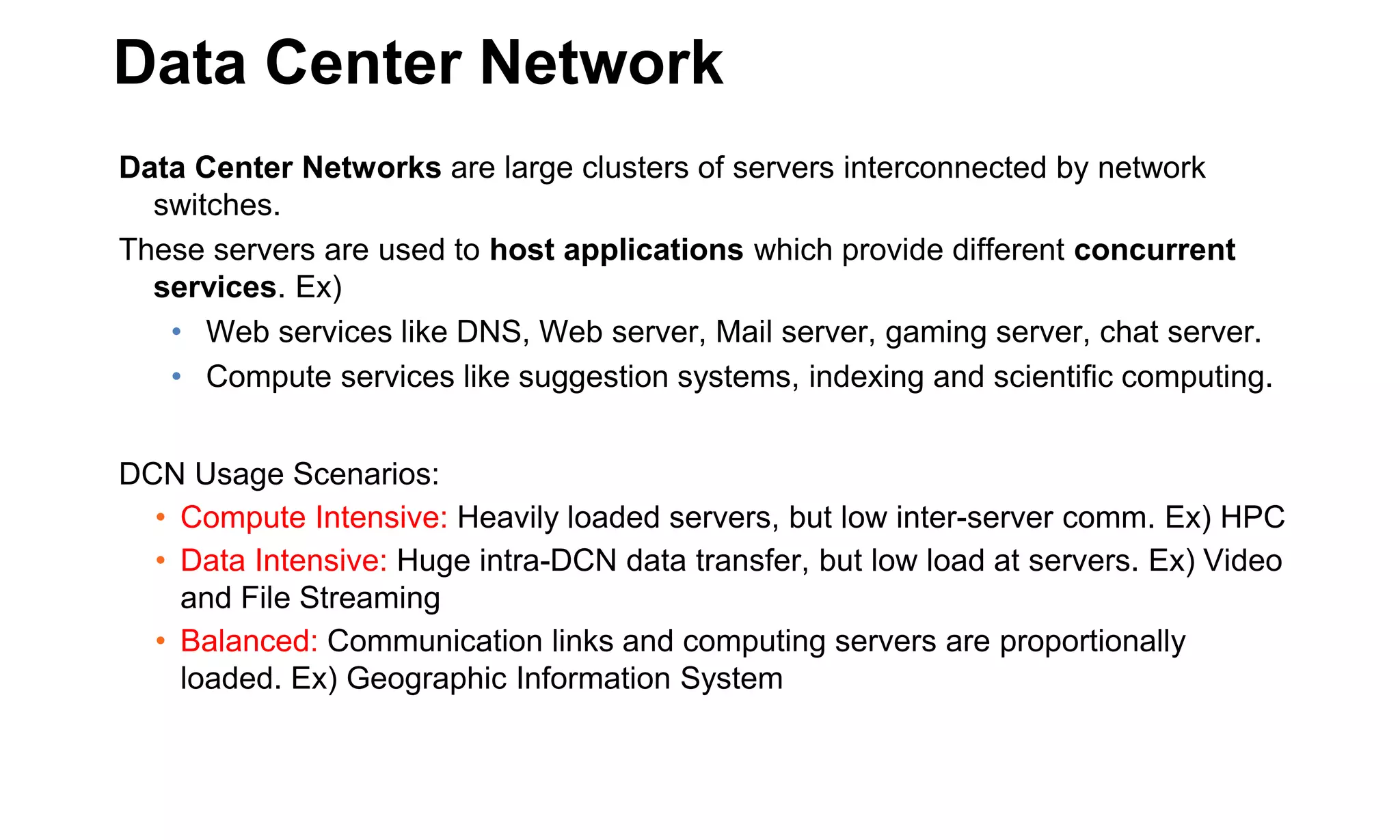

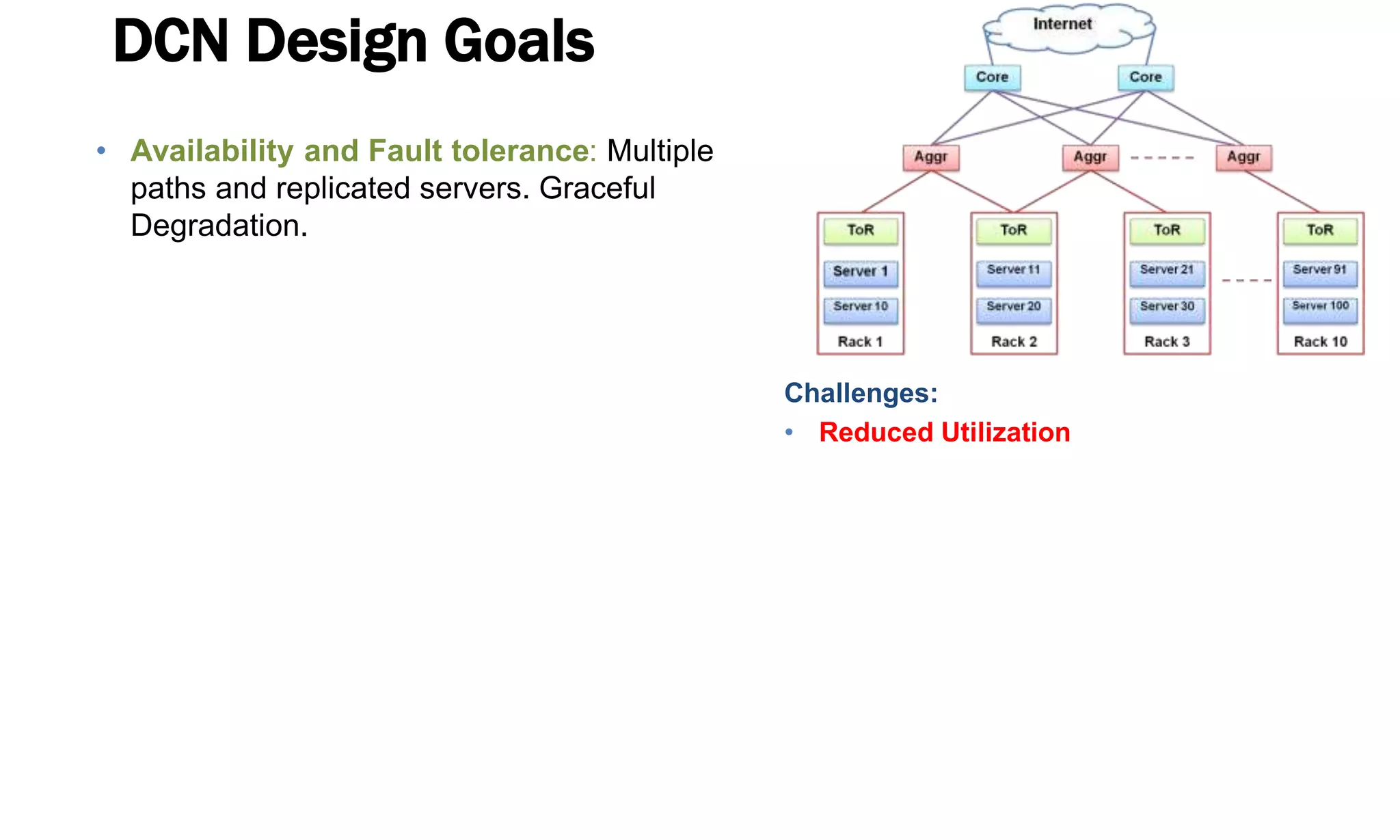

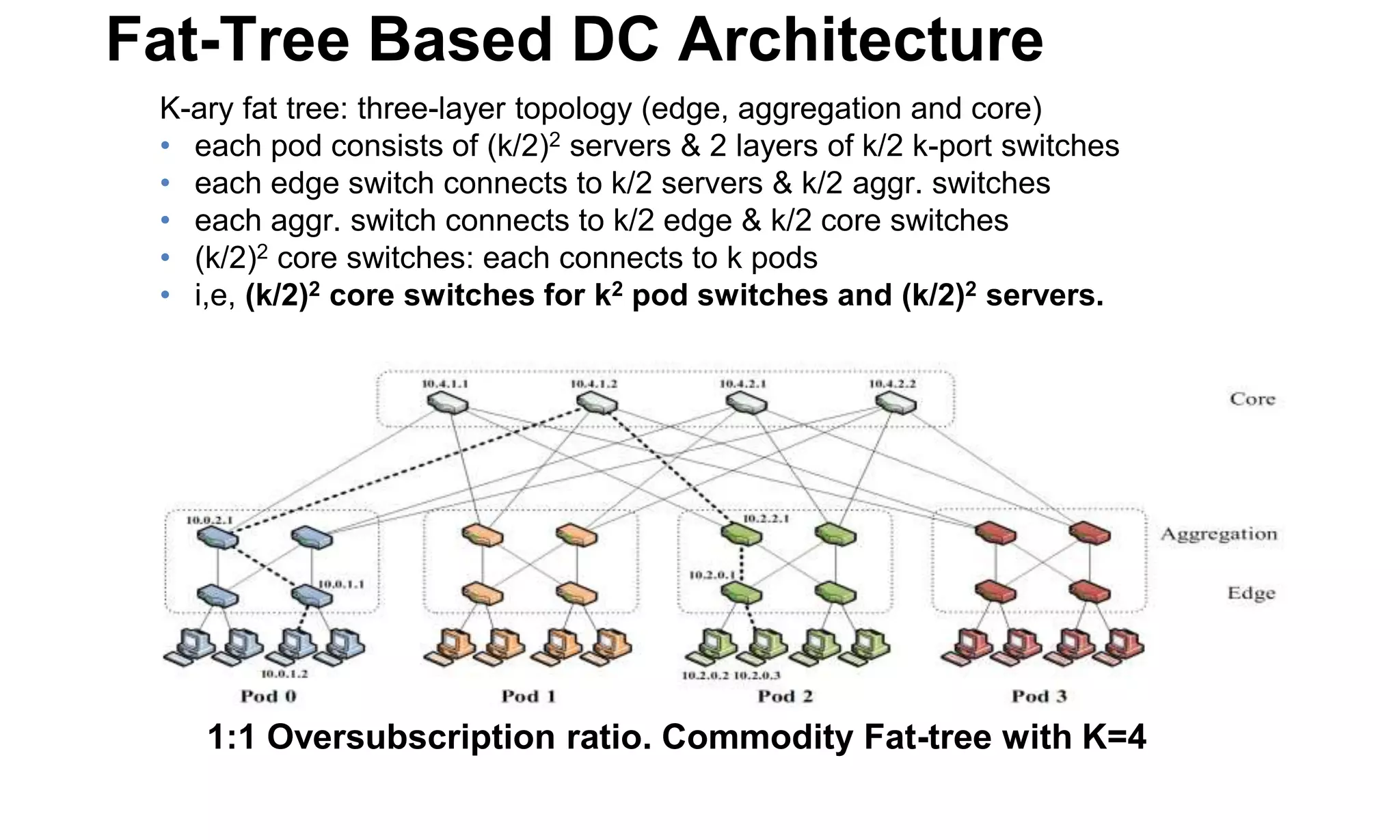

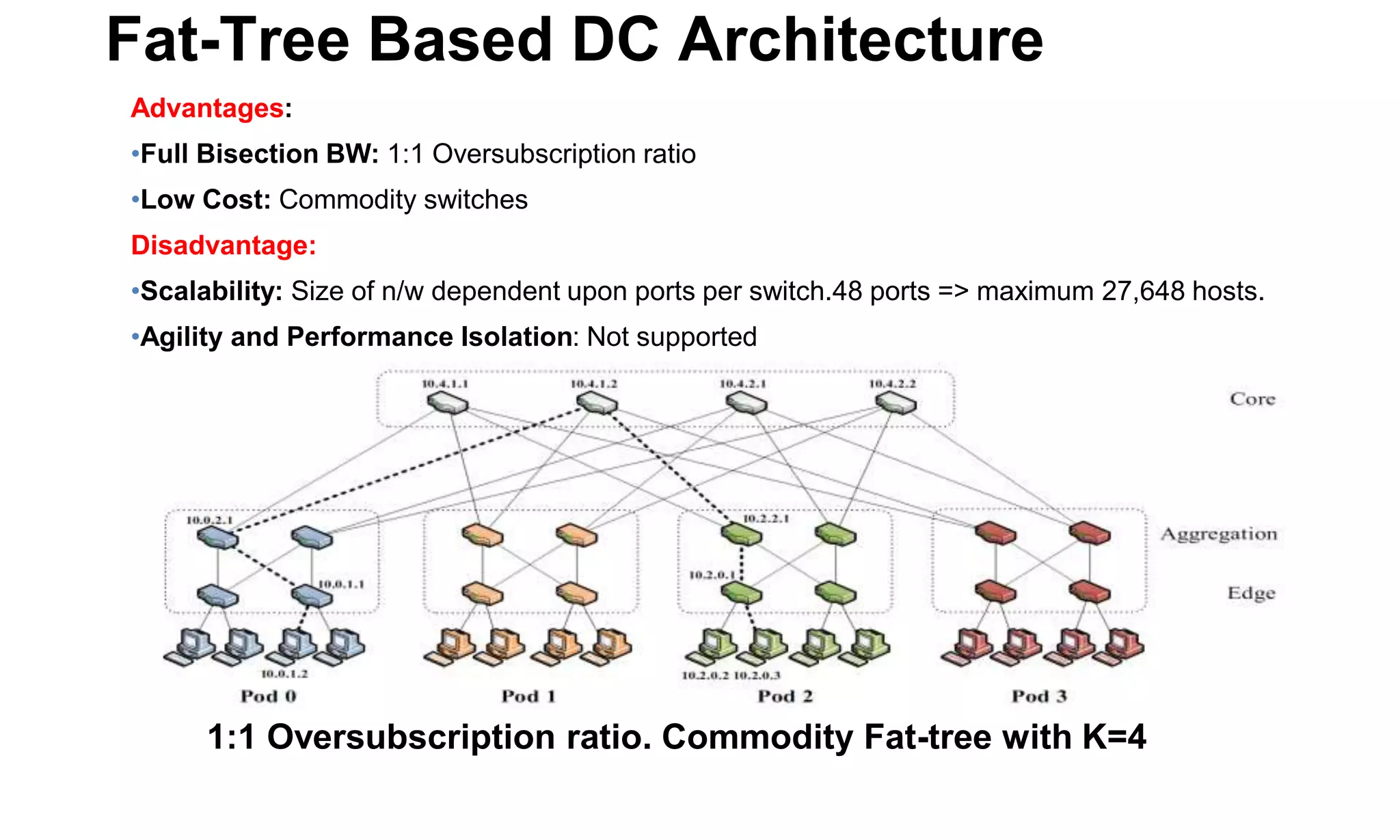

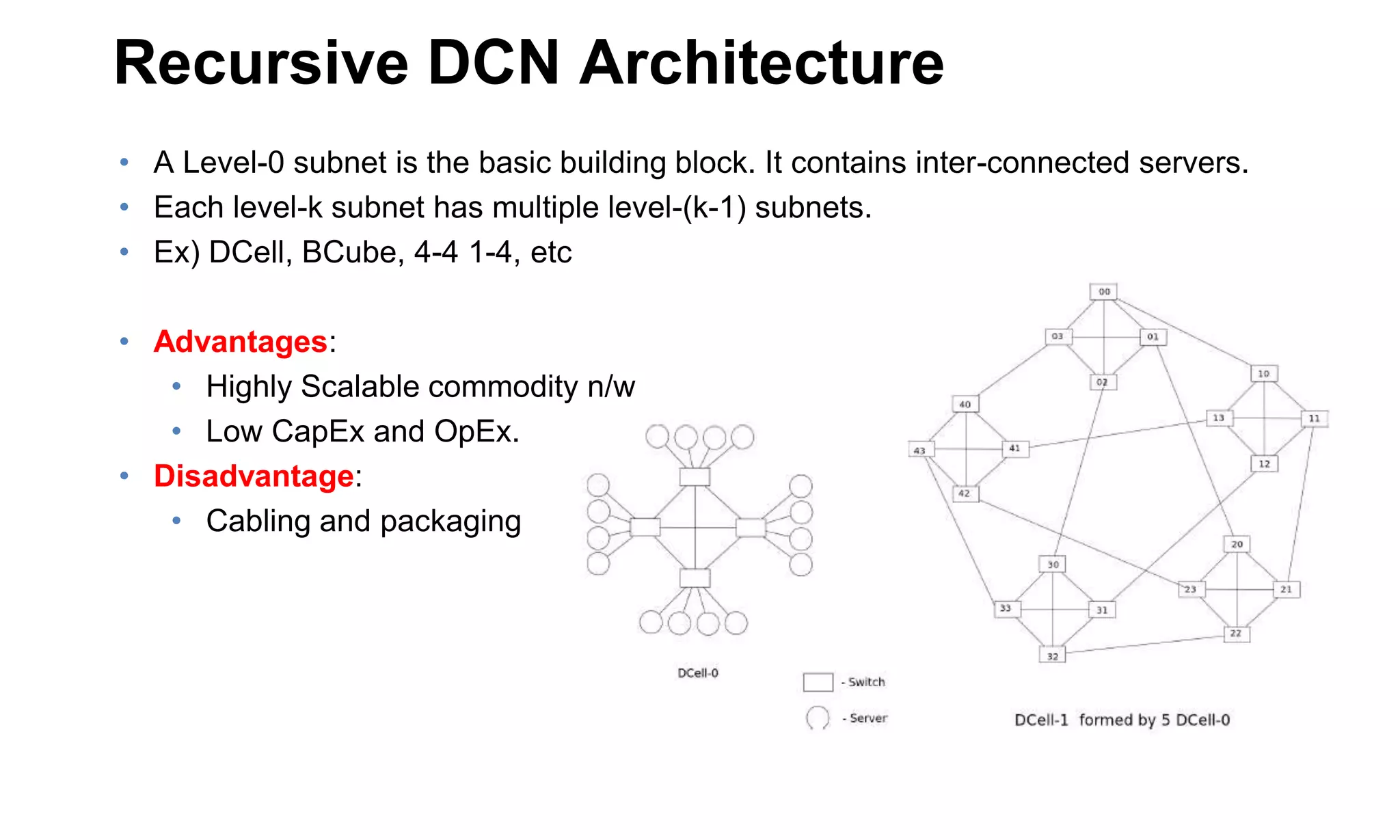

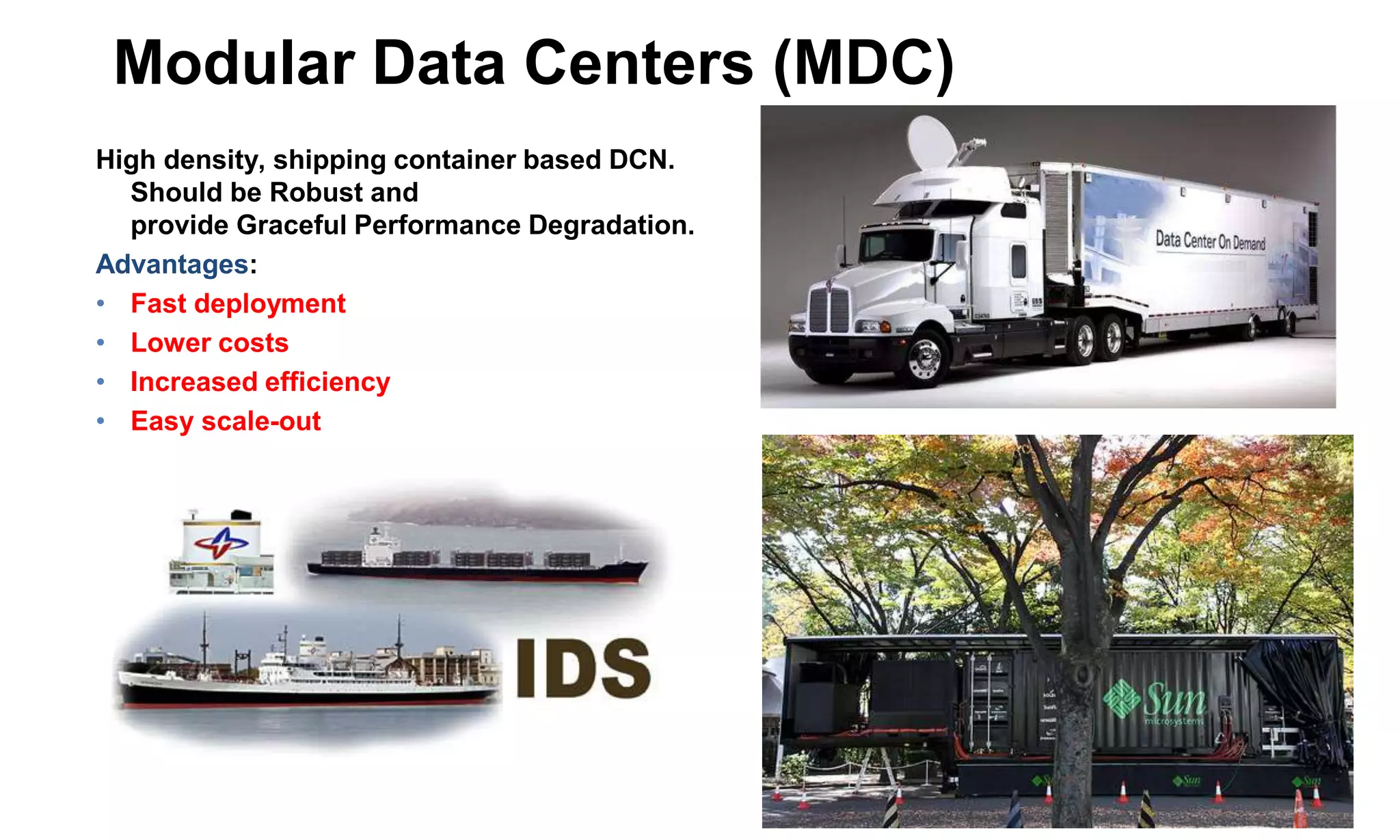

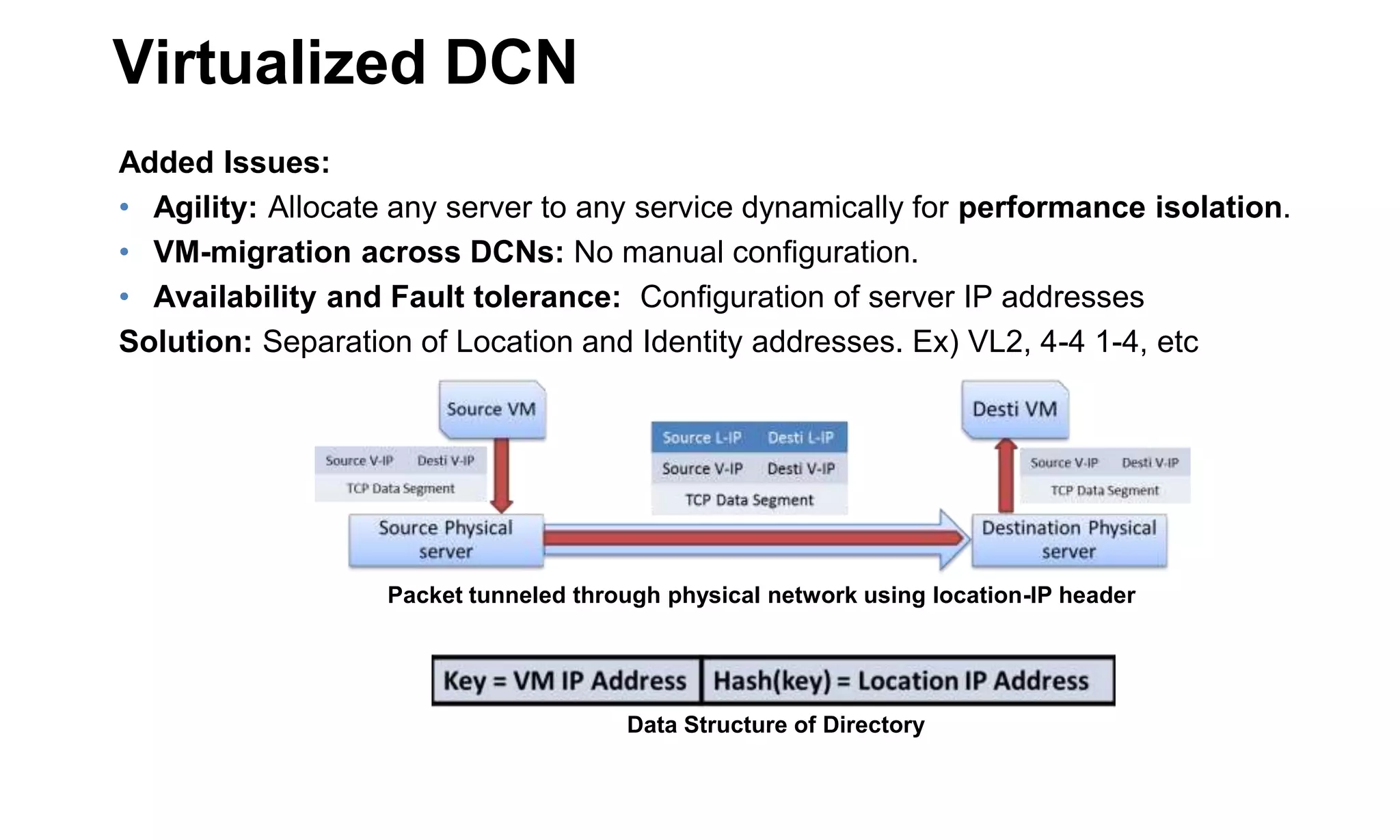

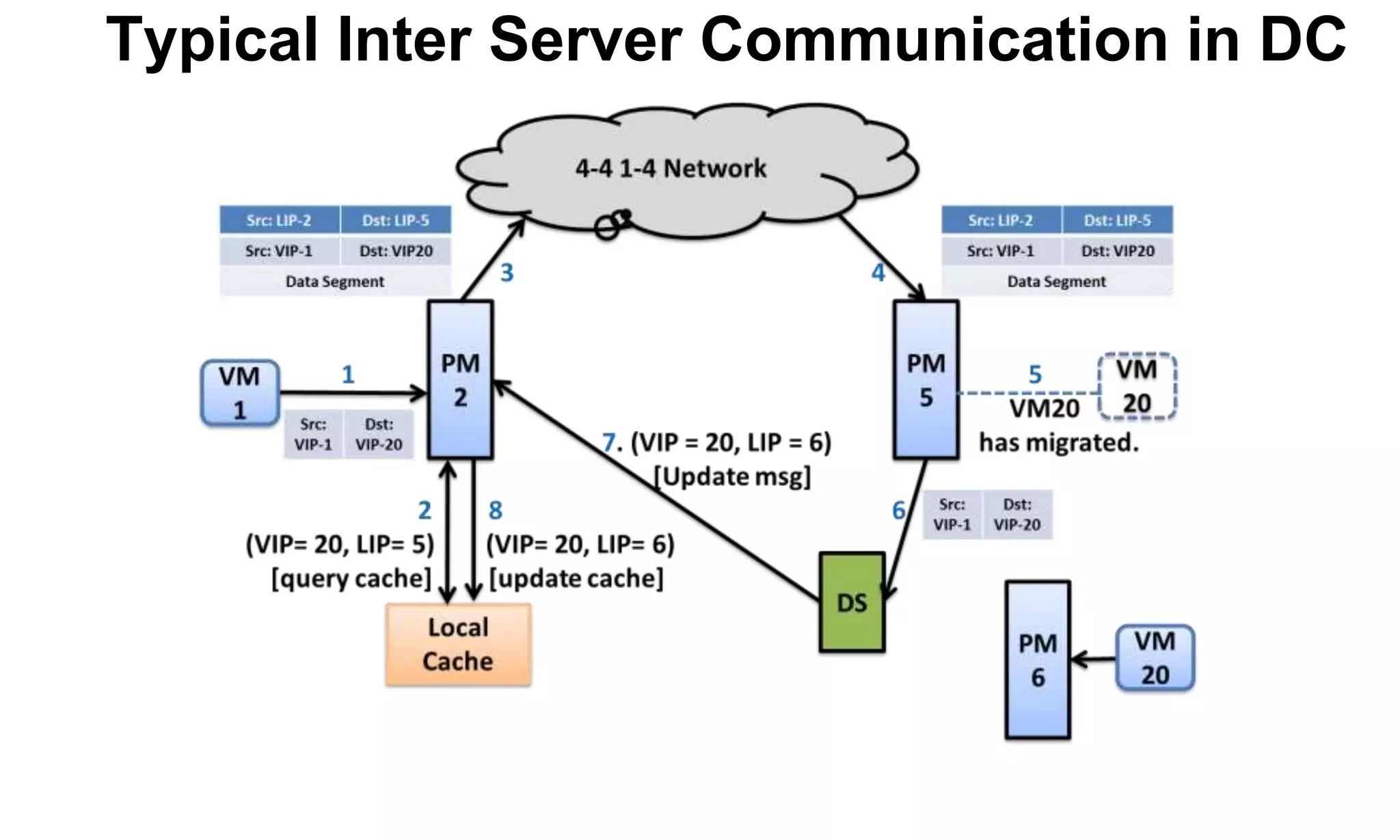

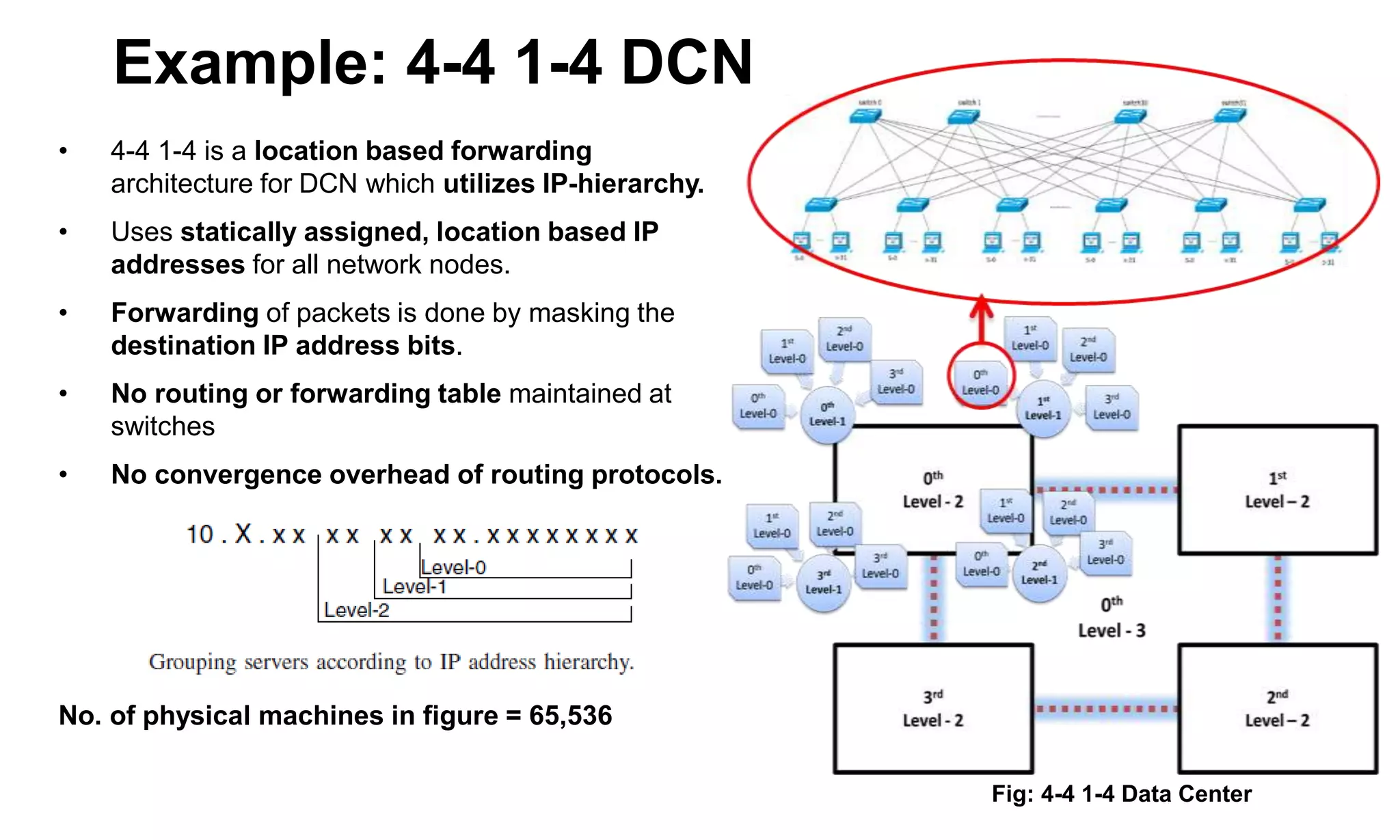

The document outlines data center network (DCN) architectures, including fat-tree and recursive designs, and the design goals they target: availability, scalability, and cost-effectiveness. It covers usage scenarios such as compute-intensive and data-intensive applications and highlights challenges such as reduced utilization and limited scalability. It also introduces modular data centers and virtualized DCNs, emphasizing their benefits for deployment efficiency and performance management.
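Fat-tree and recursive designs are usually compared by how many servers they can reach for a given switch port count. The sketch below applies two well-known sizing results. A k-ary fat tree (Al-Fares et al.) built from identical k-port switches has k pods, each with k/2 edge and k/2 aggregation switches, plus (k/2)^2 core switches, and supports k^3/4 hosts. BCube_k (Guo et al.), used here only as one illustrative recursive design, has n^(k+1) servers and k+1 levels of n^k switches each. The helper names `fat_tree_size` and `bcube_size` are hypothetical and do not come from the slides.

```python
def fat_tree_size(k: int) -> dict[str, int]:
    """Element counts for a k-ary fat tree built from identical k-port switches."""
    if k < 2 or k % 2:
        raise ValueError("k must be an even integer >= 2")
    half = k // 2
    edge = k * half            # k pods, k/2 edge switches per pod
    aggregation = k * half     # k pods, k/2 aggregation switches per pod
    core = half * half         # (k/2)^2 core switches
    hosts = k * half * half    # k*k/2 edge switches, each serving k/2 hosts -> k^3/4
    return {
        "pods": k,
        "edge": edge,
        "aggregation": aggregation,
        "core": core,
        "switches": edge + aggregation + core,  # 5k^2/4 in total
        "hosts": hosts,
    }


def bcube_size(n: int, k: int) -> dict[str, int]:
    """Element counts for BCube_k built from n-port switches (recursive construction)."""
    if n < 2 or k < 0:
        raise ValueError("need n >= 2 and k >= 0")
    switches_per_level = n ** k
    return {
        "levels": k + 1,
        "servers": n ** (k + 1),
        "switches": (k + 1) * switches_per_level,
        "ports_per_server": k + 1,  # each server connects to one switch per level
    }


if __name__ == "__main__":
    print(fat_tree_size(48)["hosts"])    # 27648
    print(bcube_size(8, 3)["servers"])   # 4096
```

For example, 48-port switches give a fat tree with 27,648 hosts, while BCube_3 with 8-port switches reaches 4,096 servers using four NIC ports per server. The trade-off is that BCube is server-centric (servers take part in forwarding), whereas the fat tree keeps forwarding in commodity switches.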

![Conventional DCN Architecture: the Internet connects to a core layer of 10 GigE switches, which links to an aggregation layer of 10 GigE switches, which in turn links to an edge layer of commodity top-of-rack (ToR) Ethernet switches, one per rack; Racks 1 to 10 each hold 10 servers (Servers 1 to 100)](https://image.slidesharecdn.com/dcnintroduction-141010054657-conversion-gate01/75/Introduction-to-Data-Center-Network-Architecture-4-2048.jpg)
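A recurring issue with the conventional tree in the figure is oversubscription: each tier has less uplink capacity than the host-facing capacity below it, so traffic that leaves the rack can see only a fraction of the server NIC rate. The minimal sketch below assumes the ratio at a tier is downstream capacity divided by upstream capacity, and that per-tier ratios multiply for traffic crossing the core (a common first-order estimate). The function names, port counts, and link speeds are hypothetical and are not read from the slide.

```python
from collections.abc import Iterable
from fractions import Fraction
from math import prod


def oversubscription(down_links: int, down_gbps: int,
                     up_links: int, up_gbps: int) -> Fraction:
    """Ratio of downstream (host-facing) to upstream capacity at one switch tier."""
    if up_links <= 0 or up_gbps <= 0:
        raise ValueError("upstream capacity must be positive")
    return Fraction(down_links * down_gbps, up_links * up_gbps)


def path_oversubscription(tiers: Iterable[Fraction]) -> Fraction:
    """First-order worst case for traffic crossing every tier: ratios multiply."""
    return prod(tiers, start=Fraction(1))


# Hypothetical values: a ToR with 40 servers at 1 Gbps and 2 x 10 GigE uplinks,
# and an aggregation switch with 8 x 10 GigE down and 4 x 10 GigE up.
tor = oversubscription(40, 1, 2, 10)    # 40 / 20 = 2
aggr = oversubscription(8, 10, 4, 10)   # 80 / 40 = 2
print(tor, aggr, path_oversubscription([tor, aggr]))  # prints: 2 2 4
```

With these numbers, a 4:1 end-to-end ratio means servers sending across the core can count on roughly a quarter of their 1 Gbps NIC rate when every server transmits at once. Fat-tree designs aim to bring this ratio to 1:1 using only commodity switches.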

![References
• A. Kumar, S. V. Rao, and D. Goswami, "4-4, 1-4: Architecture for Data Center Network Based on IP Address Hierarchy for Efficient Routing," in Parallel and Distributed Computing (ISPDC), 2012 11th International Symposium on, 2012, pp. 235-242.
• M. Al-Fares, A. Loukissas, and A. Vahdat, "A scalable, commodity data center network architecture," in Proceedings of the ACM SIGCOMM 2008 Conference on Data Communication, ser. SIGCOMM '08. New York, NY, USA: ACM, 2008, pp. 63-74. [Online]. Available: http://doi.acm.org/10.1145/1402958.1402967
• C. Guo, G. Lu, D. Li, H. Wu, X. Zhang, Y. Shi, C. Tian, Y. Zhang, and S. Lu, "BCube: A high performance, server-centric network architecture for modular data centers."
• T. Benson, A. Anand, A. Akella, and M. Zhang, "Understanding data center traffic characteristics," SIGCOMM Comput. Commun. Rev., vol. 40, no. 1, pp. 92-99, Jan. 2010. [Online]. Available: http://doi.acm.org/10.1145/1672308.1672325
• A. Greenberg, J. Hamilton, D. A. Maltz, and P. Patel, "The cost of a cloud: research problems in data center networks," SIGCOMM Comput. Commun. Rev., vol. 39, no. 1, pp. 68-73, 2009.](https://image.slidesharecdn.com/dcnintroduction-141010054657-conversion-gate01/75/Introduction-to-Data-Center-Network-Architecture-16-2048.jpg)