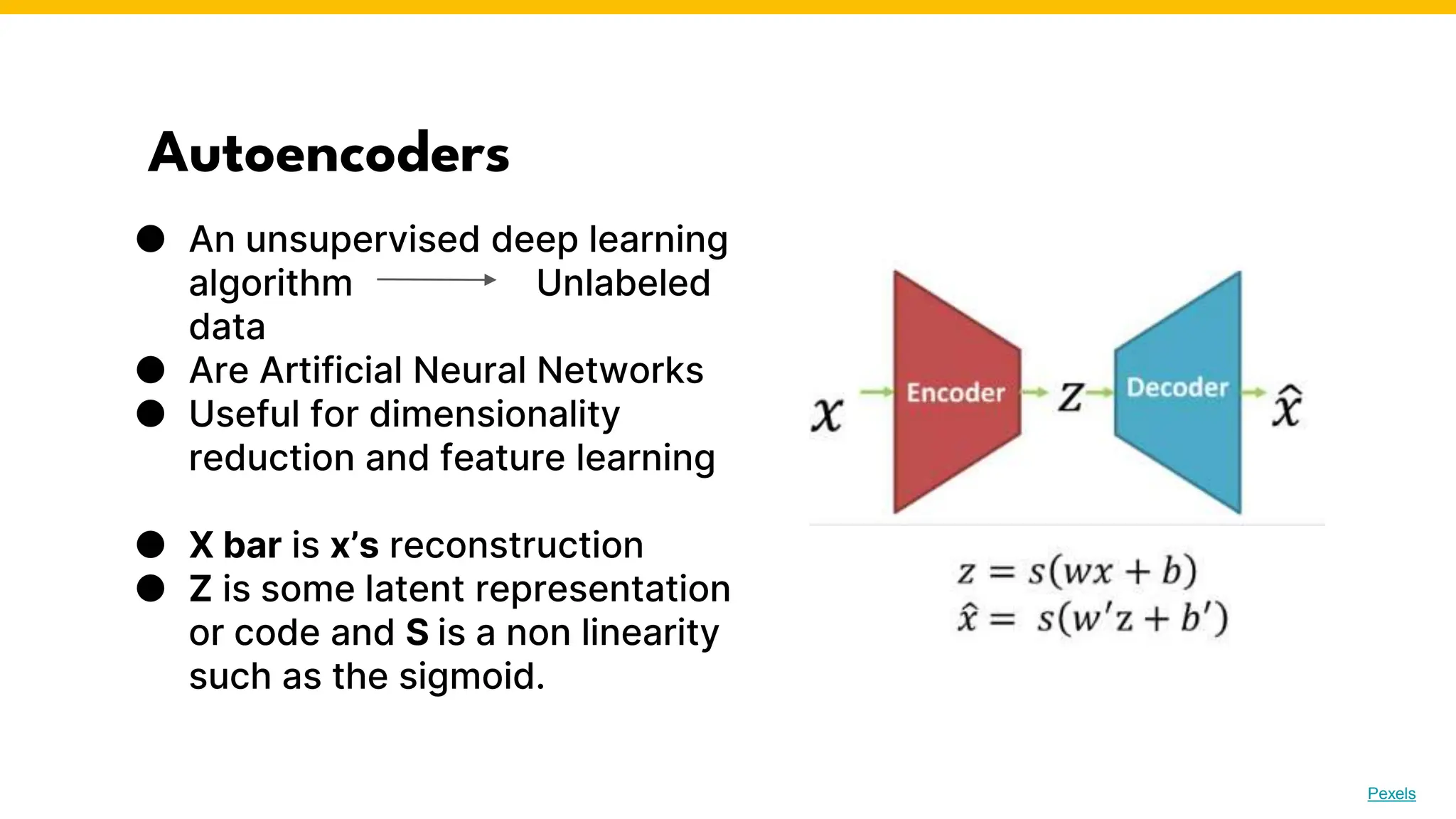

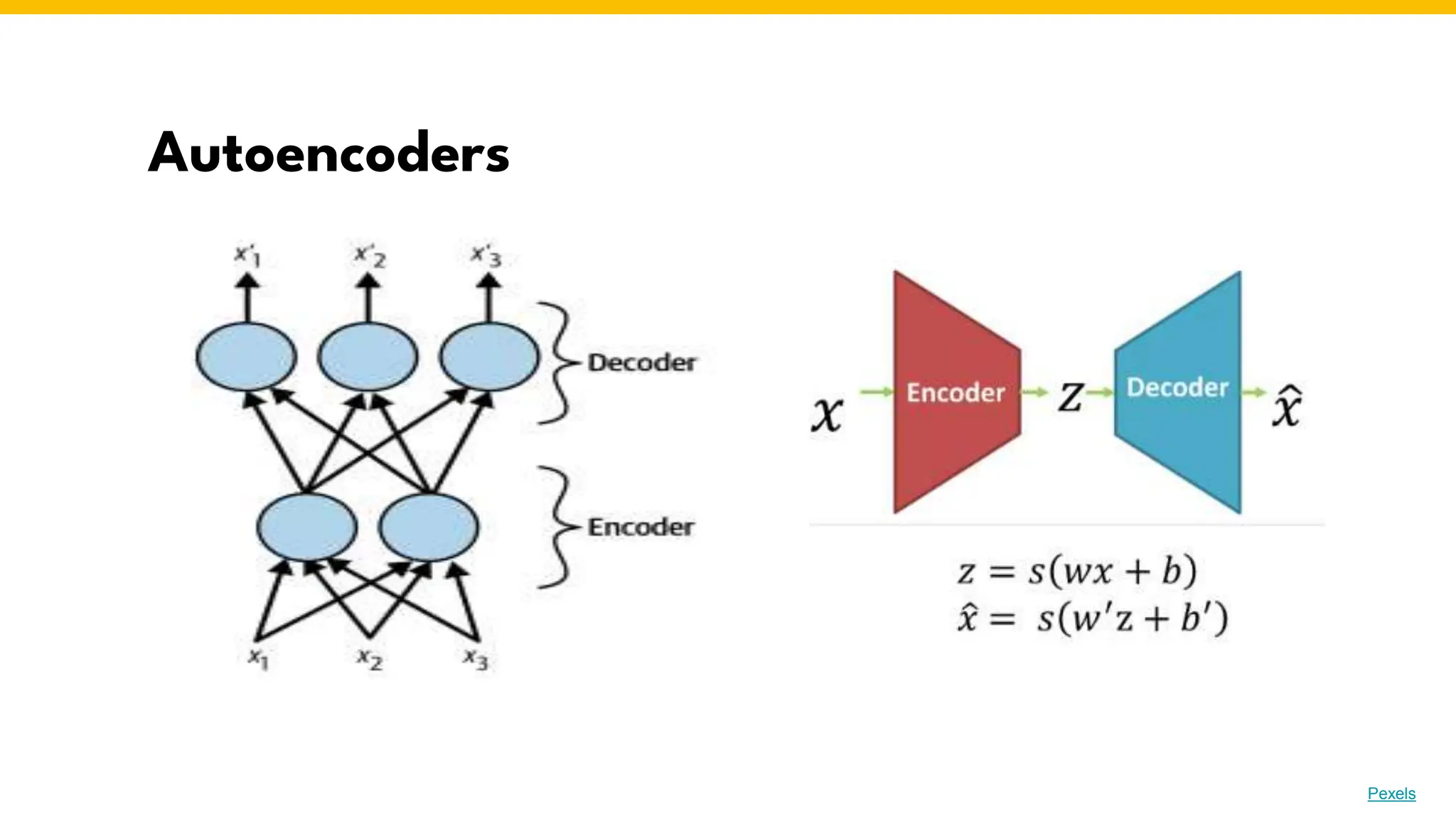

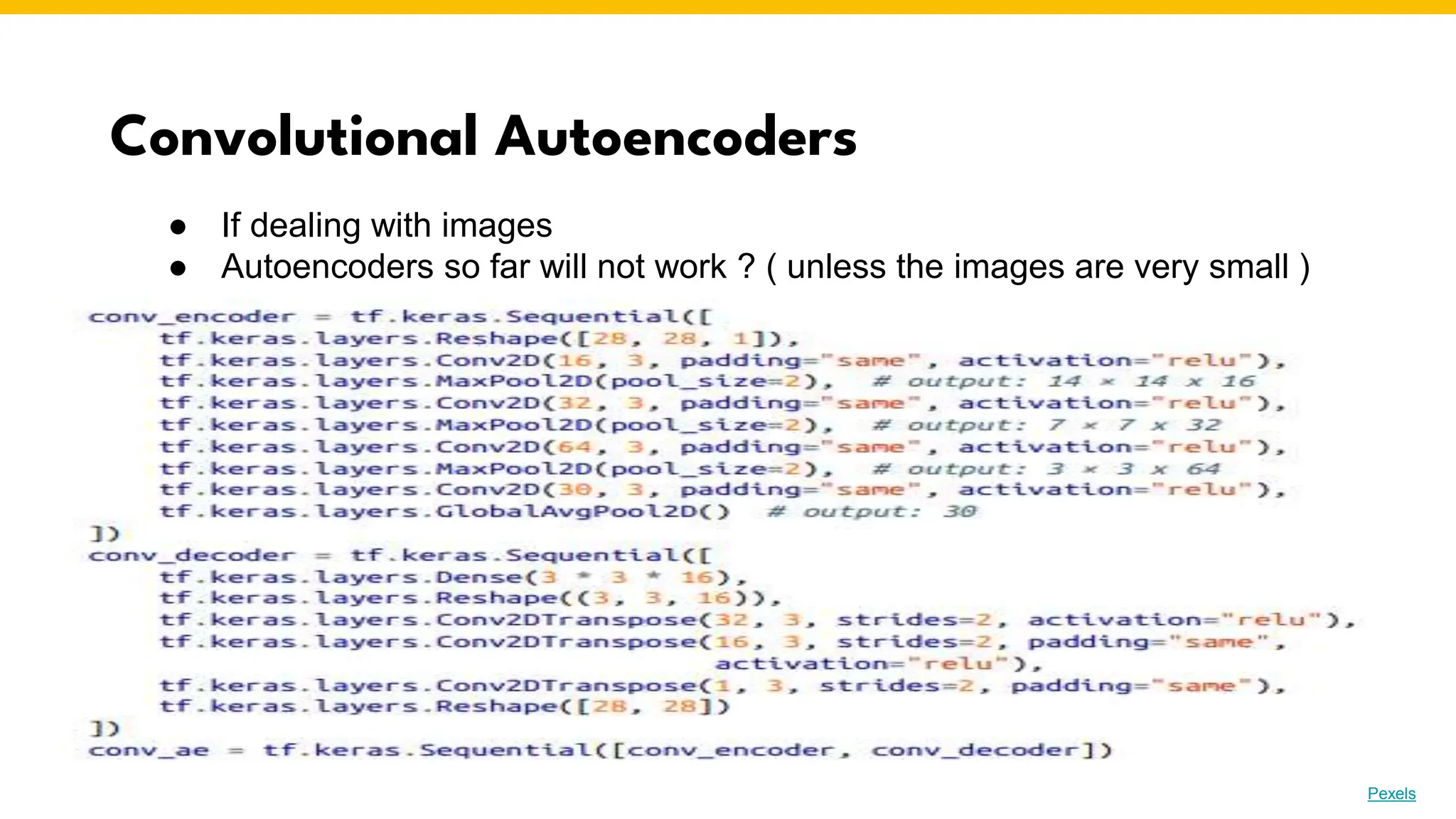

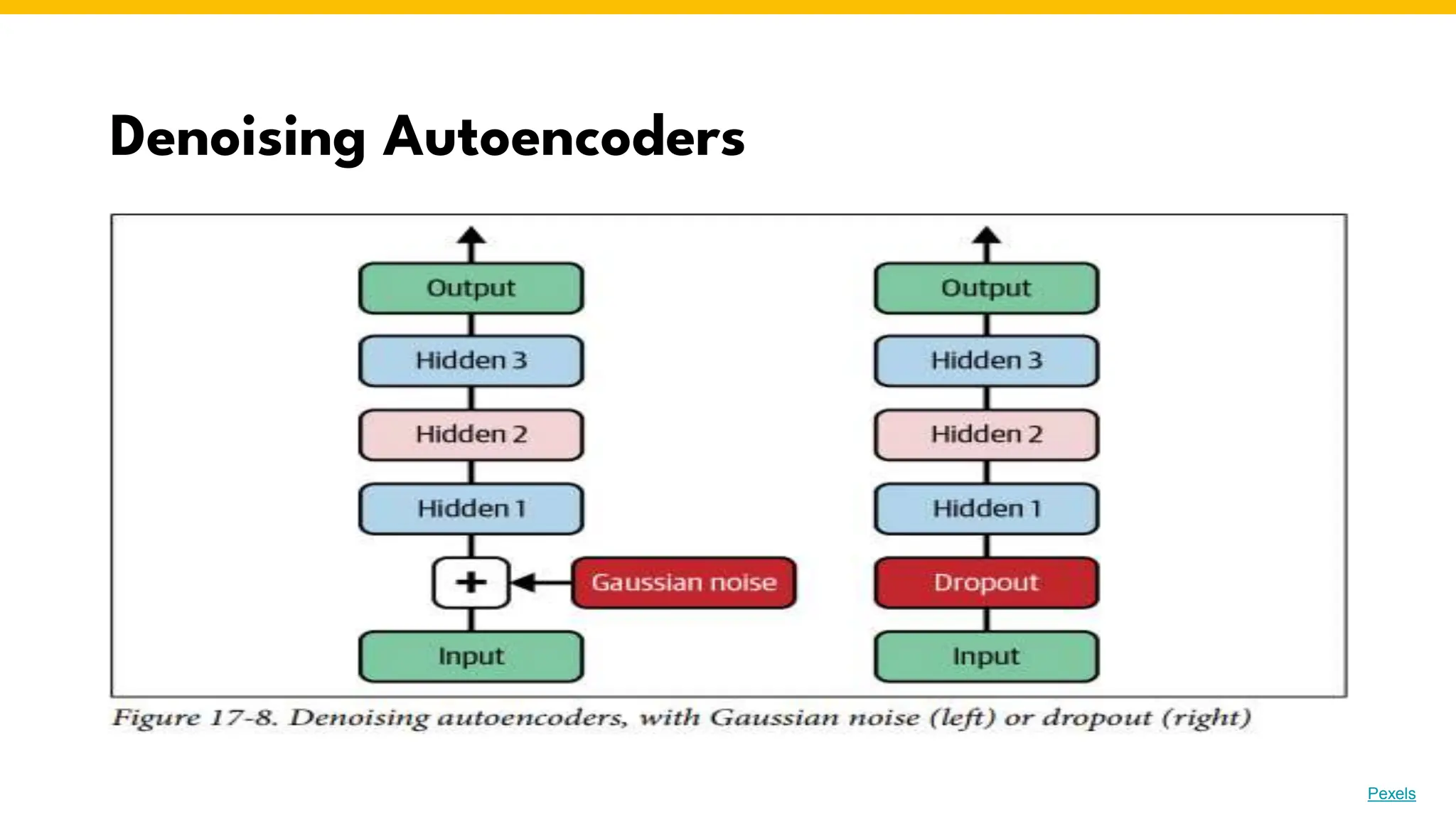

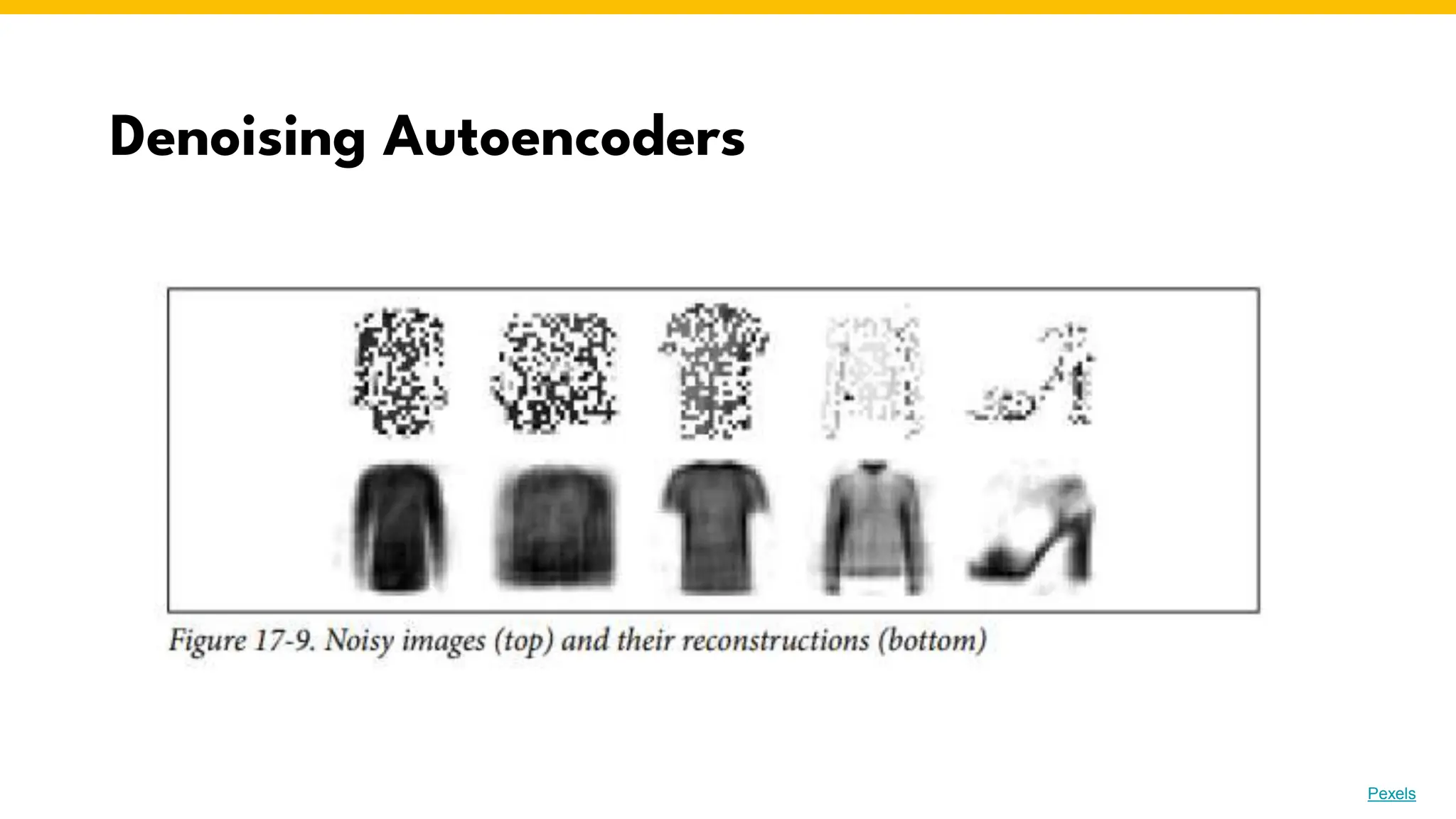

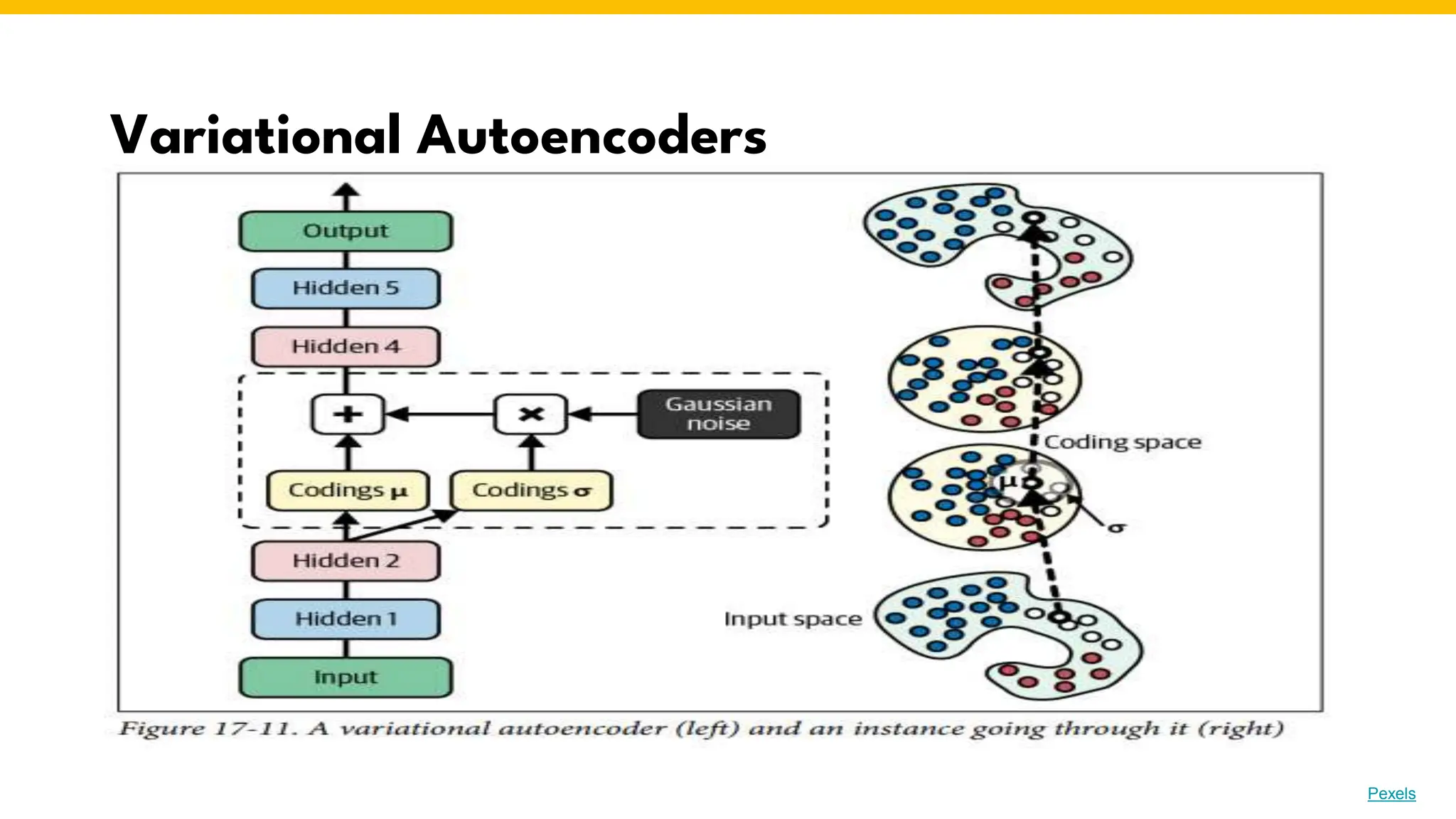

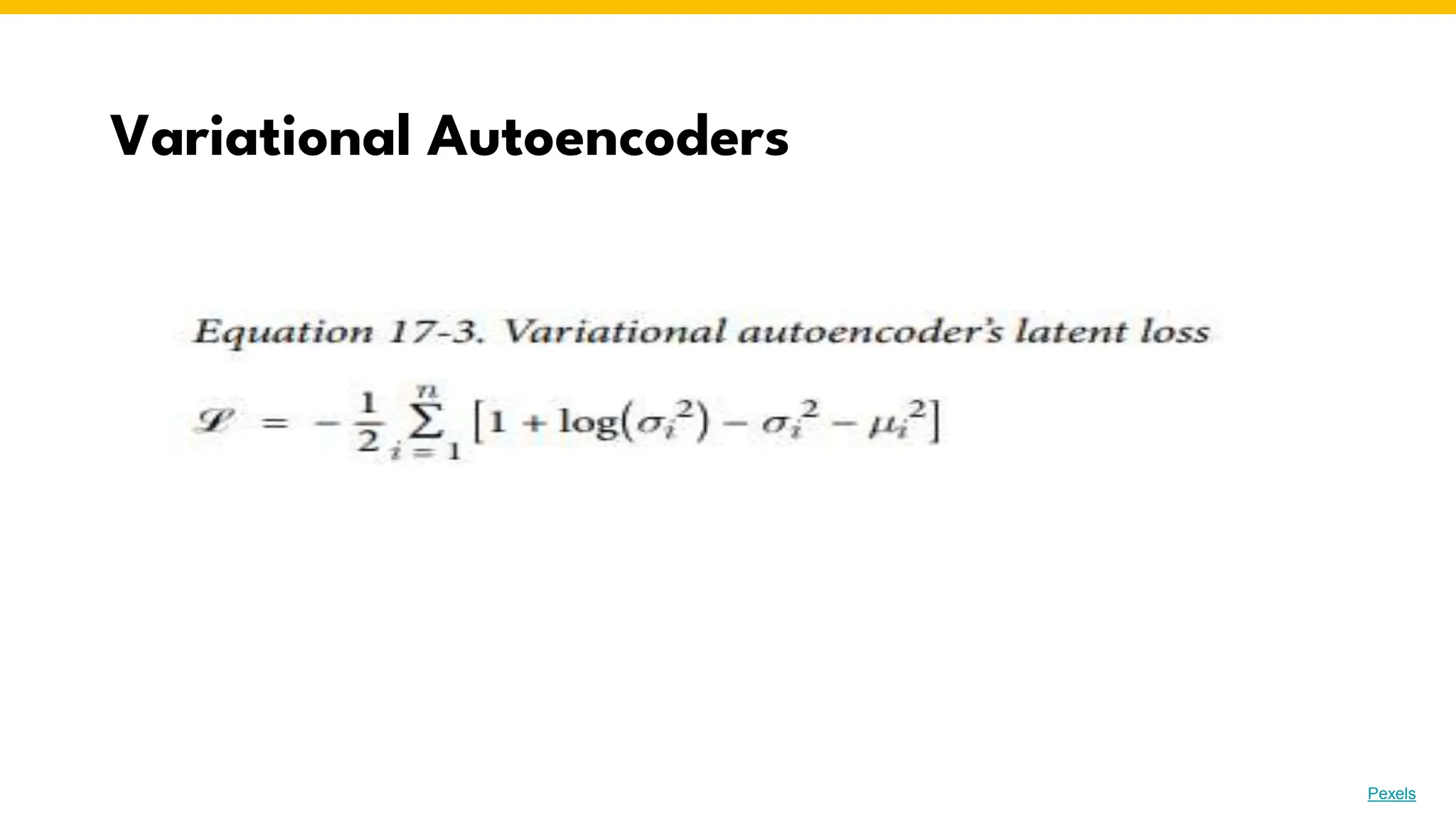

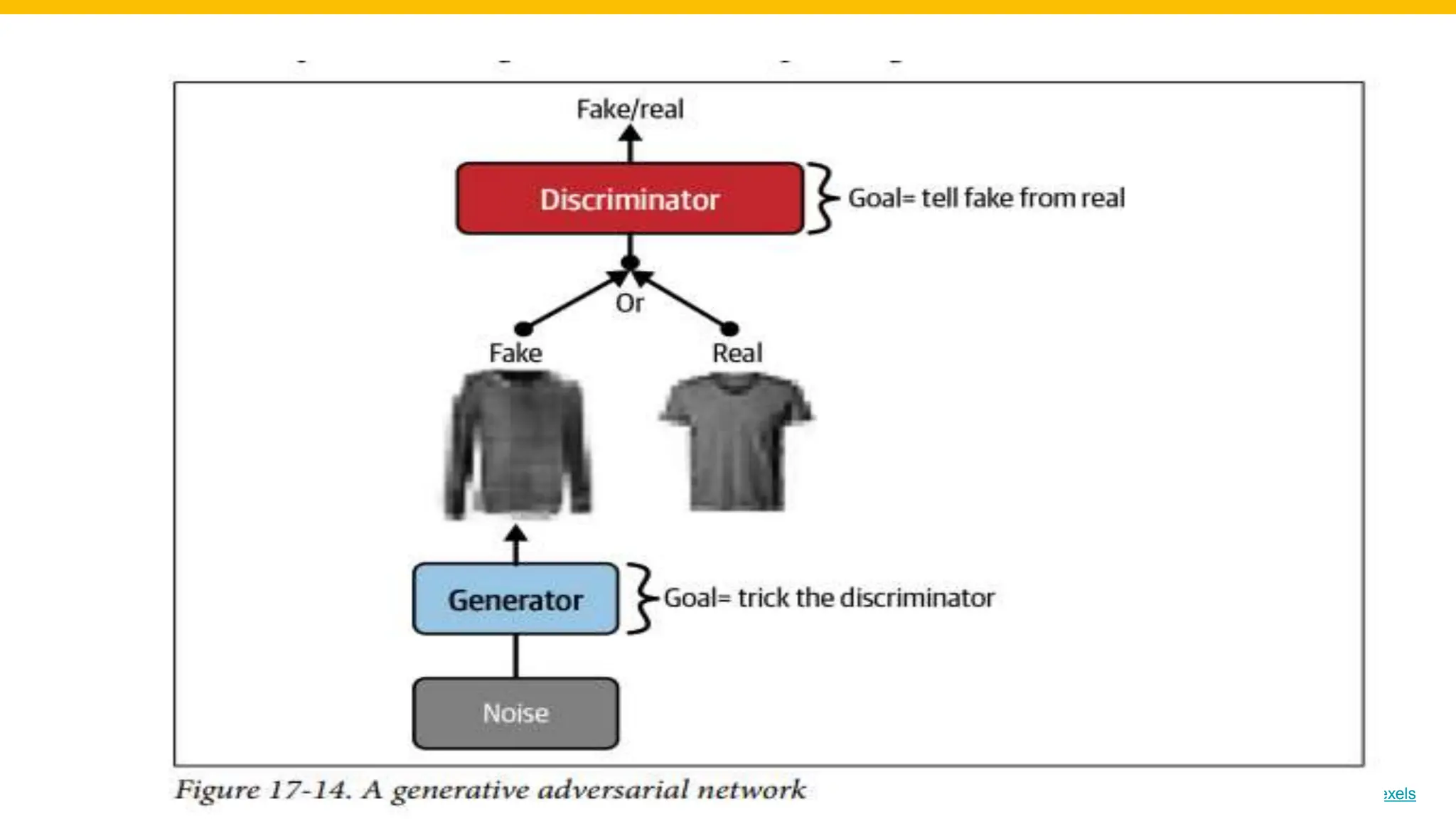

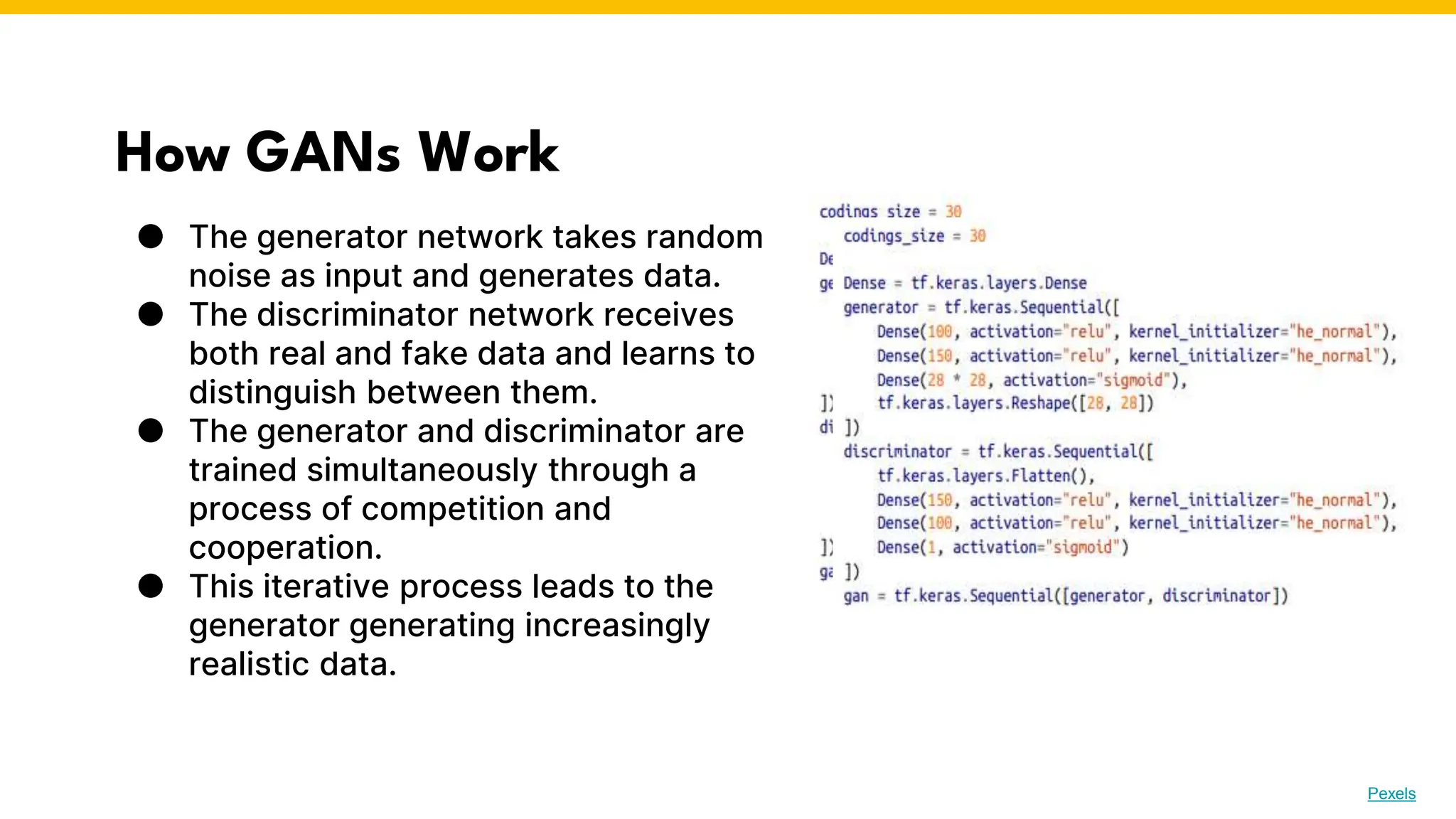

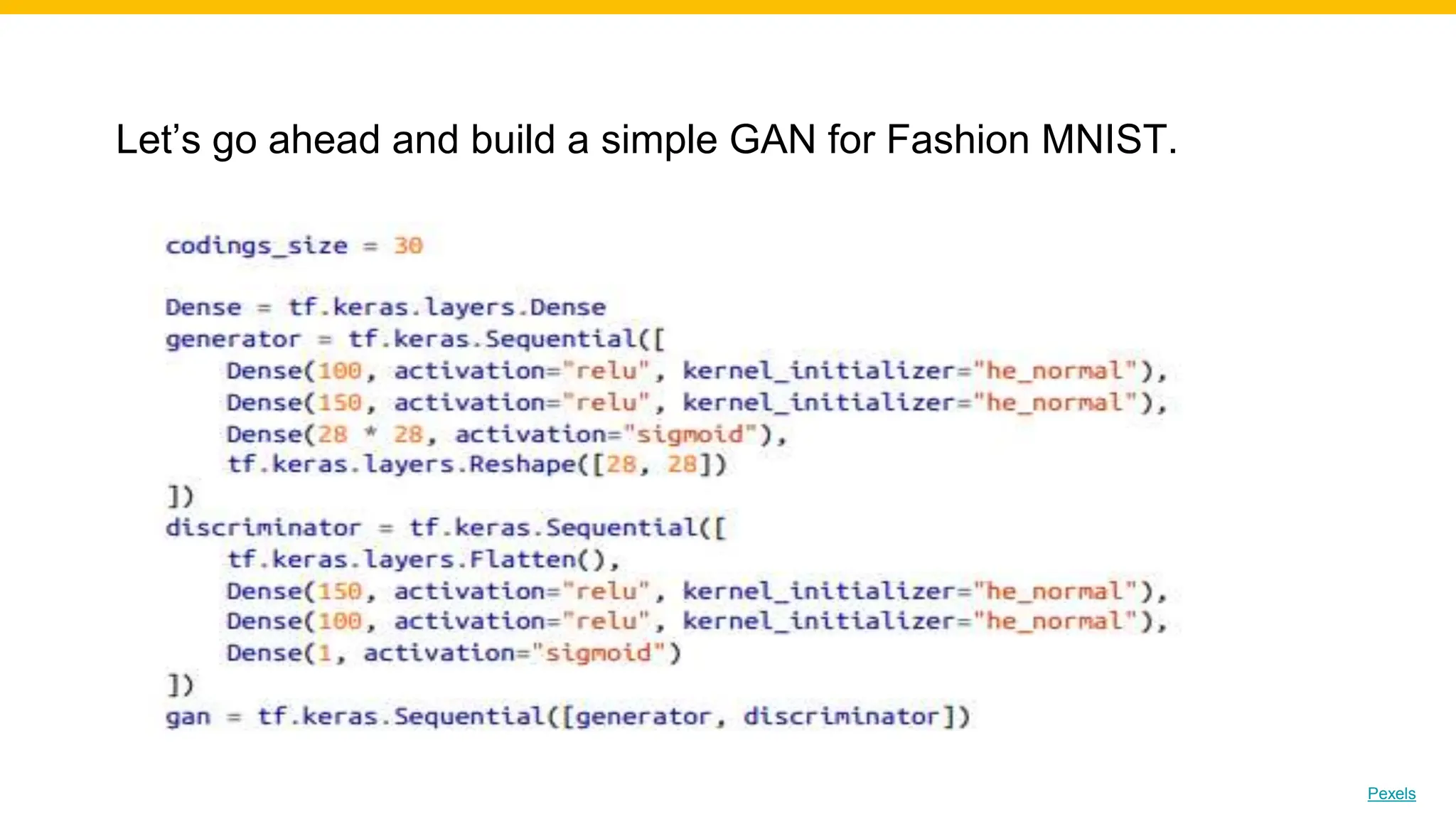

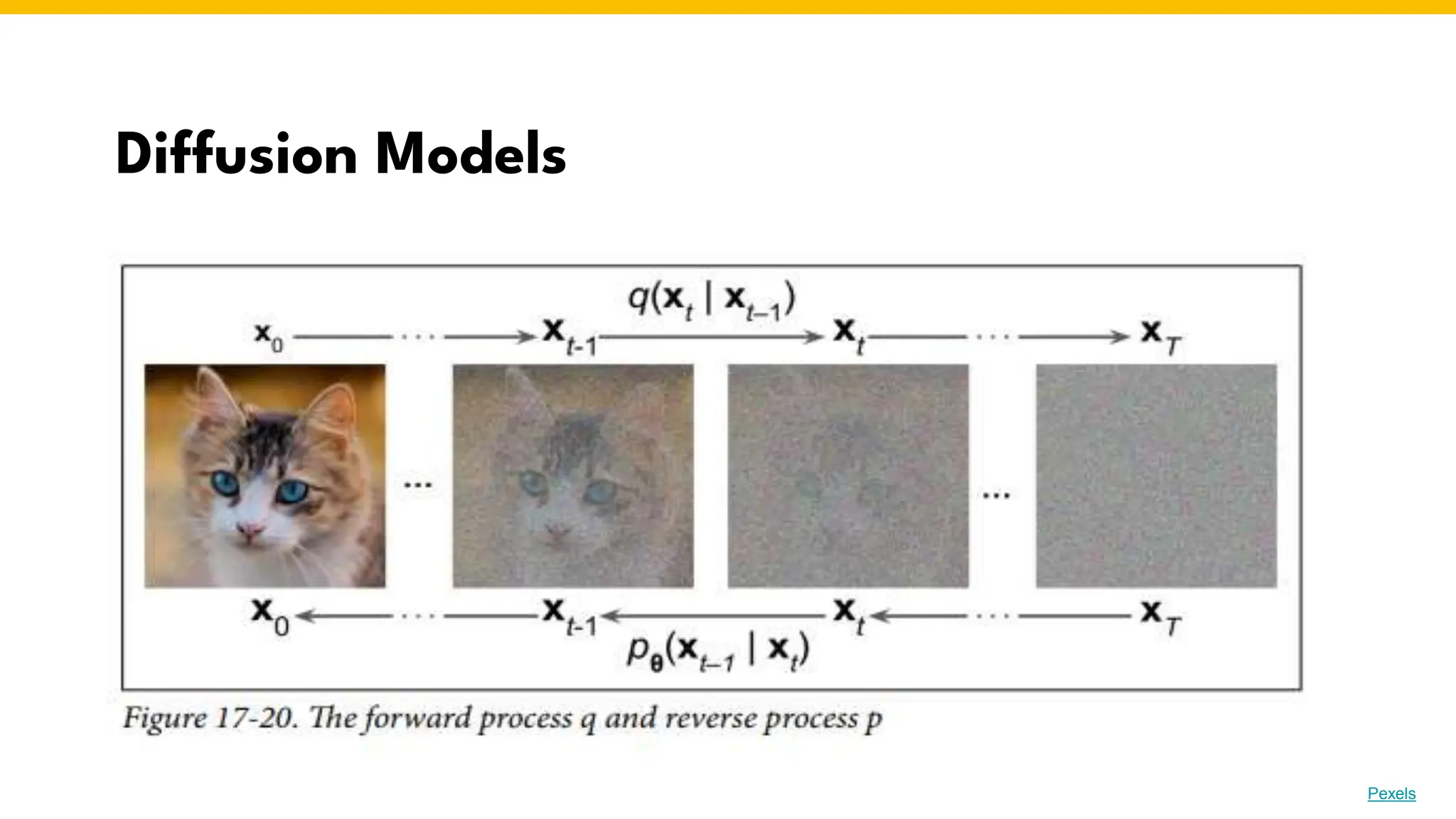

Generative models are machine learning models that learn the patterns (the underlying distribution) of existing data and use them to generate new, similar data. Popular examples include Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs). The document discusses several types of generative models, including autoencoders, stacked autoencoders, denoising autoencoders, variational autoencoders, and GANs. It covers applications such as data augmentation, natural language processing, and medical imaging, among others. It also discusses the challenges of training generative models and the ethical considerations around their use.
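To make the core idea concrete (learn the pattern in existing data, then sample new data from it), here is a deliberately minimal sketch. It is not a GAN or VAE and is not taken from the slides. It assumes one-dimensional numeric data and uses a single Gaussian as the "model": fit its mean and standard deviation by maximum likelihood, then draw new points. The function names `fit_gaussian` and `sample`, and the toy `training_data`, are hypothetical and used only for illustration.

```python
import random
import statistics

def fit_gaussian(data):
    """Learn the 'pattern': maximum-likelihood mean and standard deviation."""
    mu = statistics.fmean(data)
    # Population standard deviation (divide by n) is the maximum-likelihood estimate.
    sigma = statistics.pstdev(data, mu)
    return mu, sigma

def sample(mu, sigma, n, seed=None):
    """Generate n new data points from the fitted Gaussian."""
    rng = random.Random(seed)  # seeded generator for reproducible samples
    return [rng.gauss(mu, sigma) for _ in range(n)]

# Hypothetical toy dataset standing in for "existing data".
training_data = [4.8, 5.1, 5.0, 4.9, 5.3, 4.7, 5.2]
mu, sigma = fit_gaussian(training_data)
new_points = sample(mu, sigma, 5, seed=0)
print(mu, sigma, new_points)
```

GANs and VAEs follow the same learn-then-sample pattern. They replace the single Gaussian with neural networks that can represent far more complex distributions, such as images or text.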