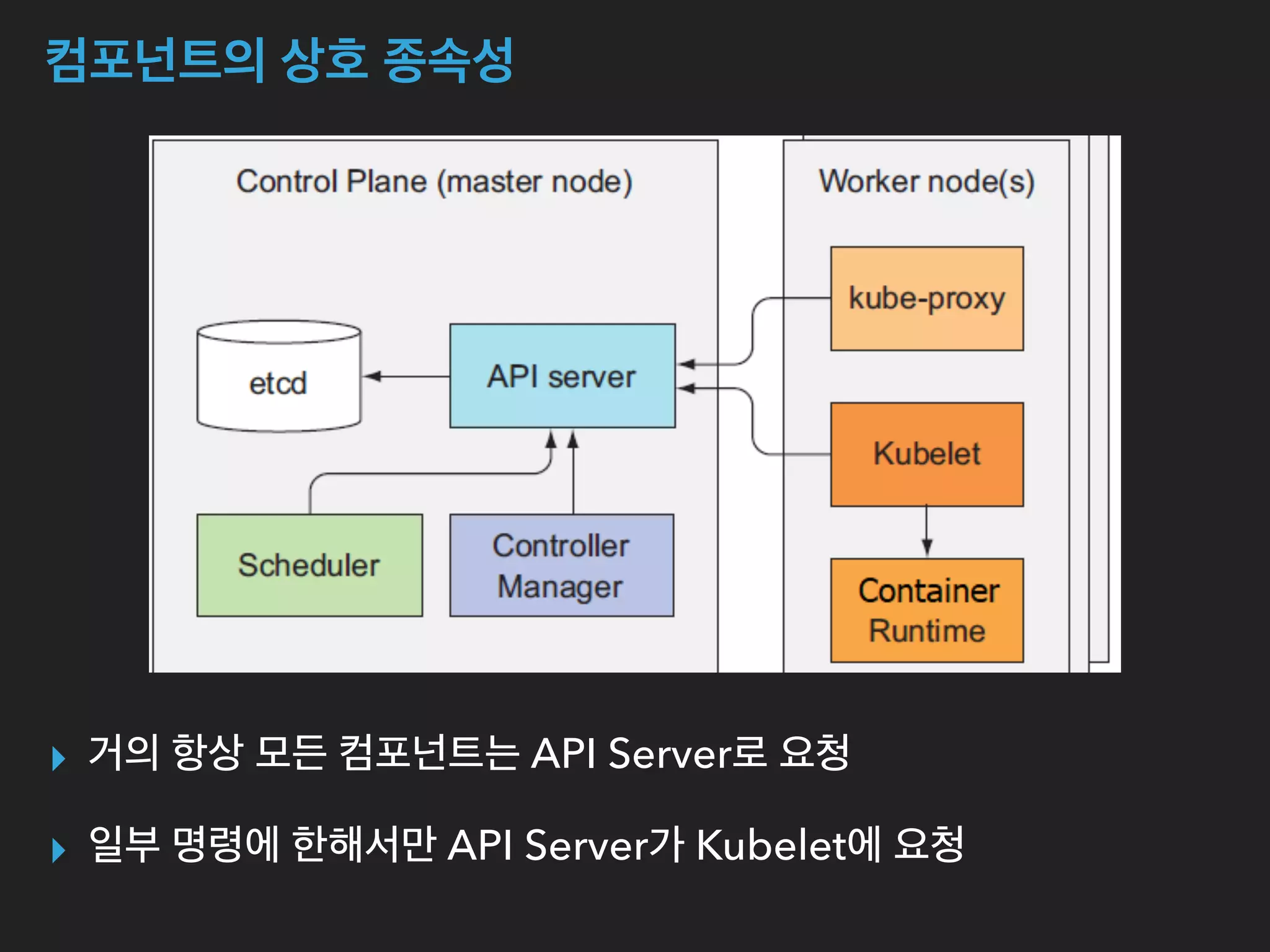

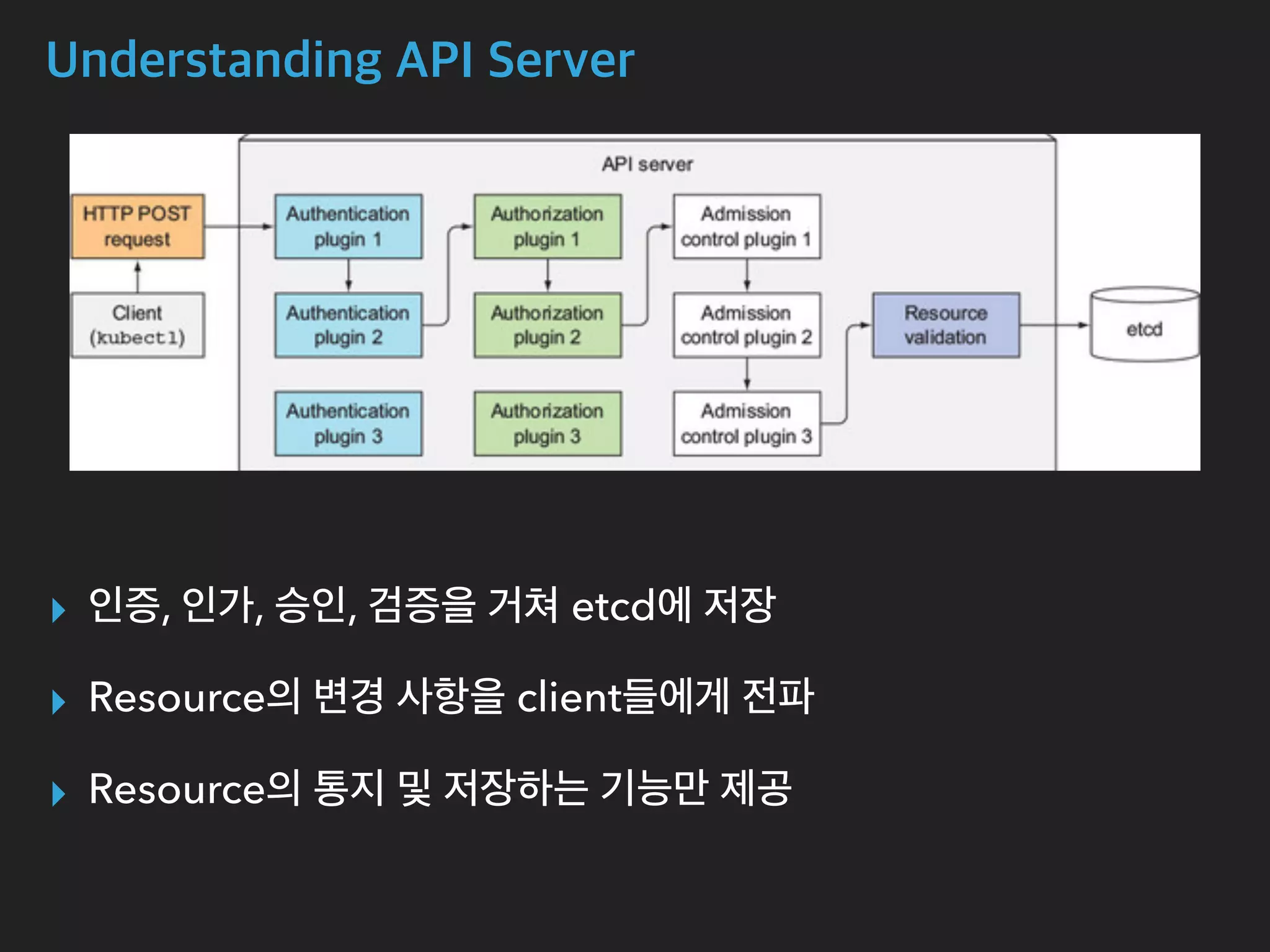

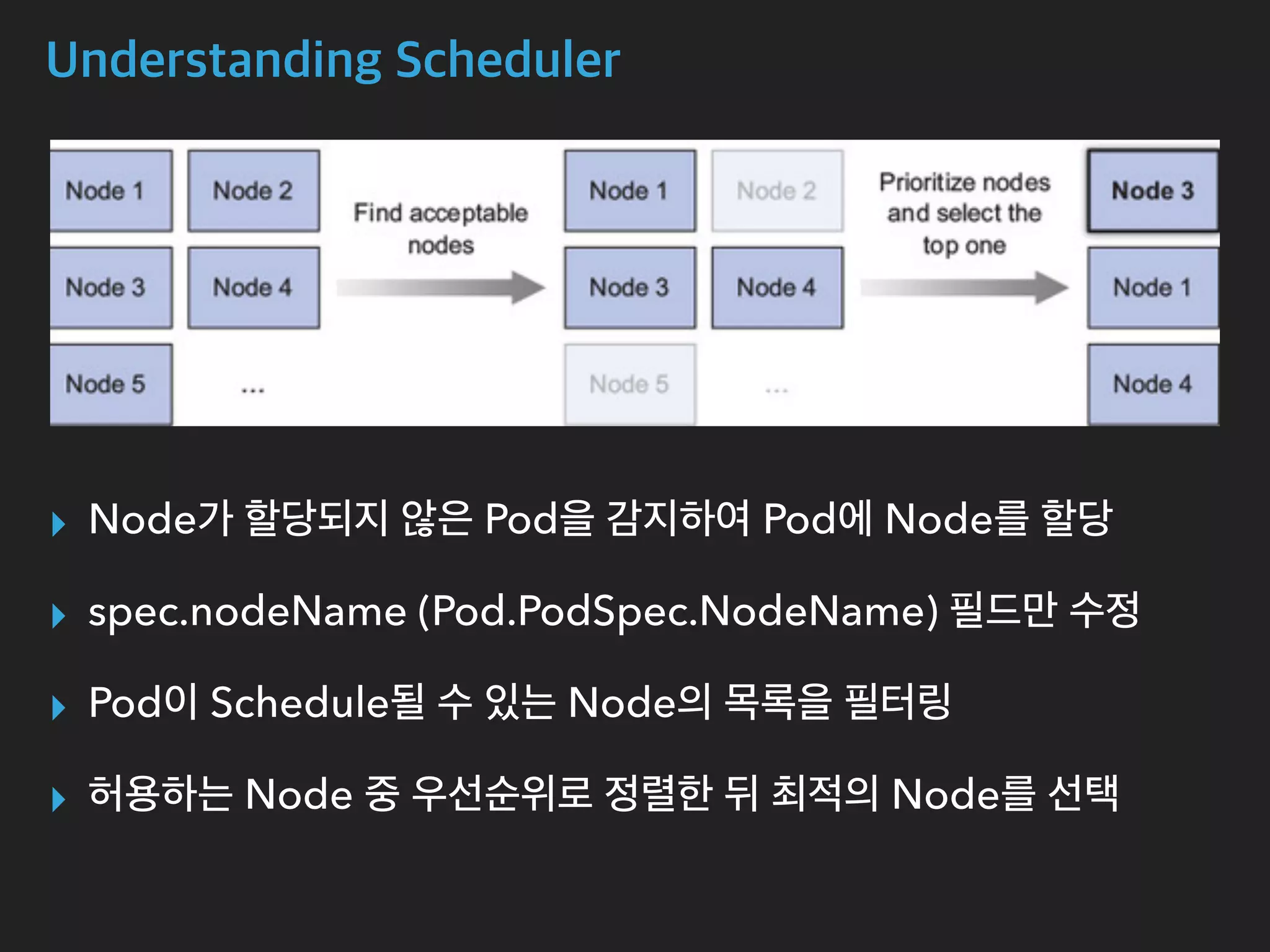

The document provides an in-depth analysis of Kubernetes' internal components (master, node, and add-on components) and their roles in networking, pod scheduling, and service abstraction. It explains how the API server, kubelet, and kube-proxy work and how pod and service networking let containers communicate with one another. It also traces packet flows through Services and outlines operational procedures for service networking and resource management.

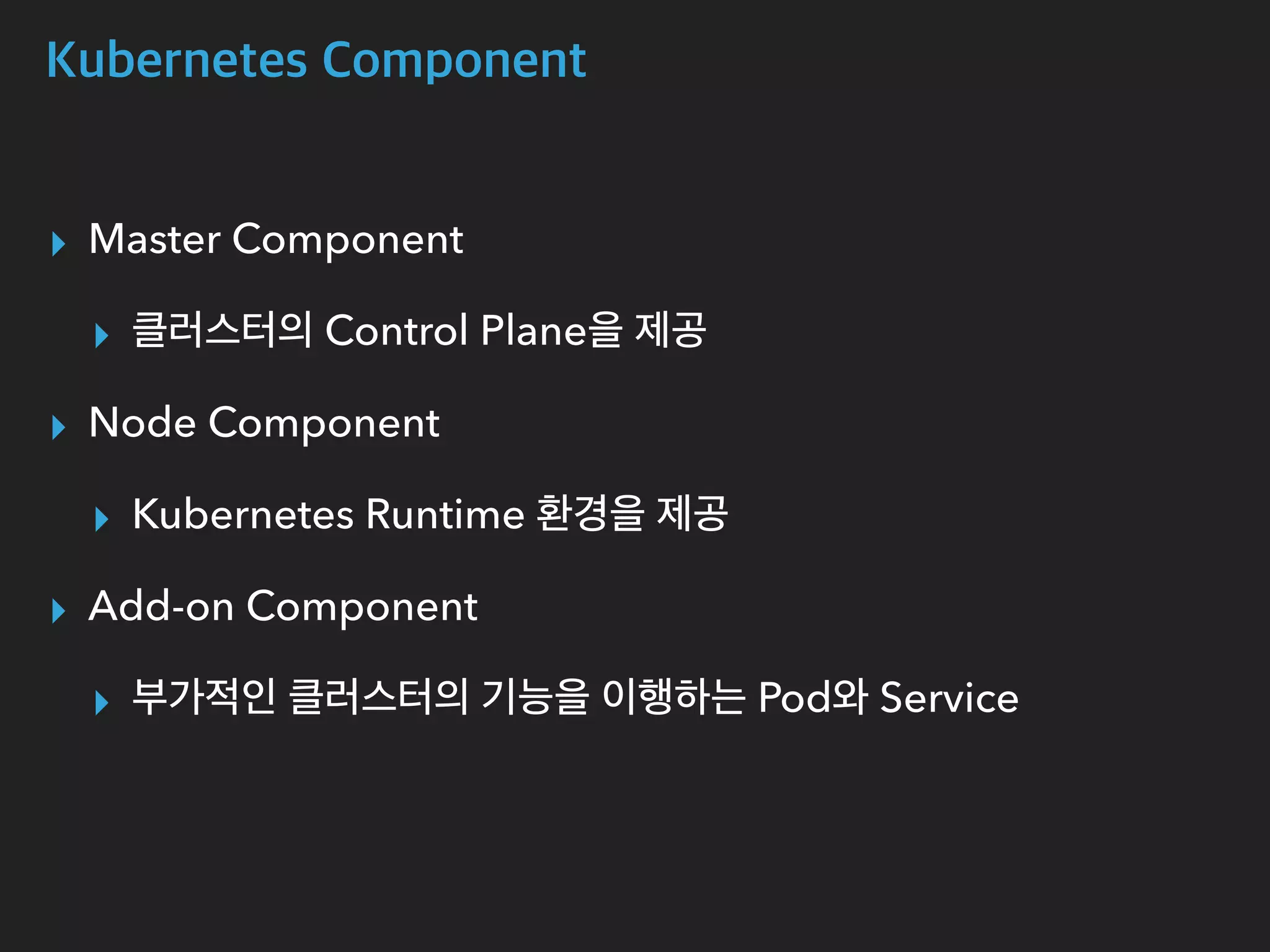

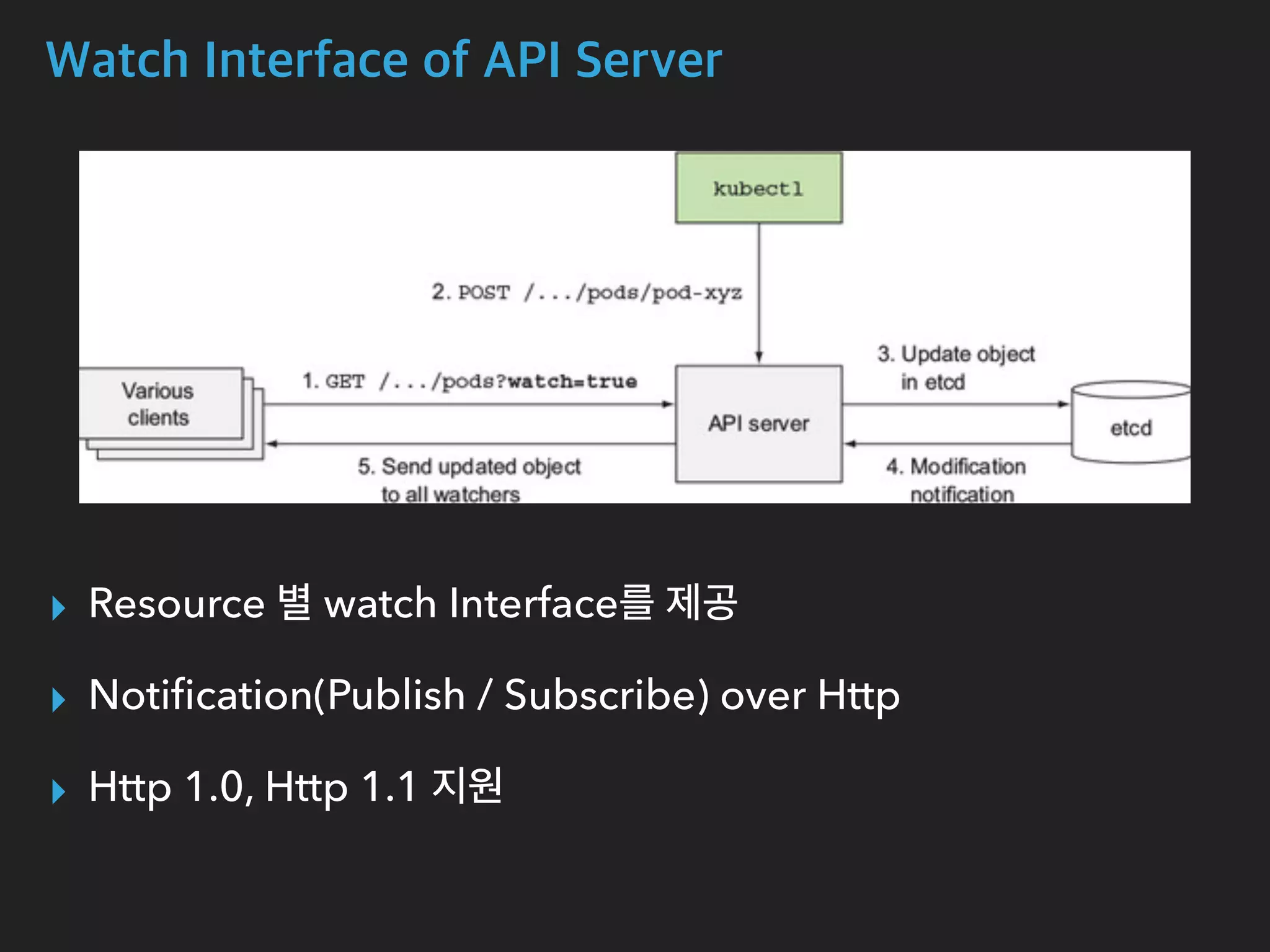

![Watch Interface of API Server
$ curl --http1.0 http://localhost:8080/api/v1/pods?watch=true
$ tcpdump -nlA -i lo port 8080
05:42:32.087199 IP 127.0.0.1.47318 > 127.0.0.1.8080: Flags [P.], seq 1:101, ack 1, win 342, options [nop,nop,TS val 926521512 ecr 926521512], length 100: HTTP: GET /api/v1/pods?watch=true HTTP/1.0
GET /api/v1/pods?watch=true HTTP/1.0
Host: localhost:8080
User-Agent: curl/7.58.0
Accept: */*
05:42:32.087785 IP 127.0.0.1.8080 > 127.0.0.1.47318: Flags [P.], seq 1:89, ack 101, win 342, options [nop,nop,TS val 926521513 ecr 926521512], length 88: HTTP: HTTP/1.0 200 OK
HTTP/1.0 200 OK
Content-Type: application/json
Date: Fri, 22 Mar 2019 05:42:32 GMT
05:42:32.090370 IP 127.0.0.1.8080 > 127.0.0.1.47318: Flags [P.], seq 56470:60566, ack 101, win 342, options [nop,nop,TS val 926521516 ecr 926521515], length 4096: HTTP
{"type":"ADDED","object":{ ... }}
...](https://image.slidesharecdn.com/kubernetesinternals-190605134546/75/Kubernetes-internals-Kubernetes-11-2048.jpg)
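
In the HTTP/1.0 capture, the response headers contain neither `Content-Length` nor `Transfer-Encoding`, because HTTP/1.0 has no chunked encoding. The API server keeps writing watch events to the open connection, and the body ends only when the connection closes. The client therefore has to find event boundaries in the byte stream itself, and those boundaries need not line up with TCP segments such as the 4096-byte one above. The Python sketch below shows one way to do this. It assumes each event is a JSON object of the form `{"type": ..., "object": ...}`, as in the capture. It uses `json.JSONDecoder.raw_decode`, so it does not rely on any particular separator between events. `split_watch_events` is a hypothetical helper, not part of any Kubernetes client library.

```python
import codecs
import json
from typing import Iterable, Iterator


def split_watch_events(chunks: Iterable[bytes]) -> Iterator[dict]:
    """Yield watch events from a response body delivered in arbitrary pieces.

    Hypothetical helper. Assumes the body is a sequence of JSON objects such as
    {"type": "ADDED", "object": {...}}, possibly separated by whitespace.
    """
    utf8 = codecs.getincrementaldecoder("utf-8")()  # copes with split characters
    parser = json.JSONDecoder()
    buffer = ""
    for chunk in chunks:
        buffer += utf8.decode(chunk)
        while True:
            buffer = buffer.lstrip()
            if not buffer:
                break
            try:
                event, end = parser.raw_decode(buffer)
            except json.JSONDecodeError:
                break  # event is still incomplete; wait for more bytes
            yield event
            buffer = buffer[end:]
    buffer += utf8.decode(b"", final=True)
    if buffer.strip():
        # With HTTP/1.0 the stream ends when the connection closes; leftover
        # text means the connection closed in the middle of an event.
        raise ValueError("stream ended in the middle of an event")
```

For example, `split_watch_events(iter(lambda: sock.recv(4096), b""))` would consume a socket until the server closes it. Here `sock` is assumed to be a connected socket whose HTTP response headers have already been read.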

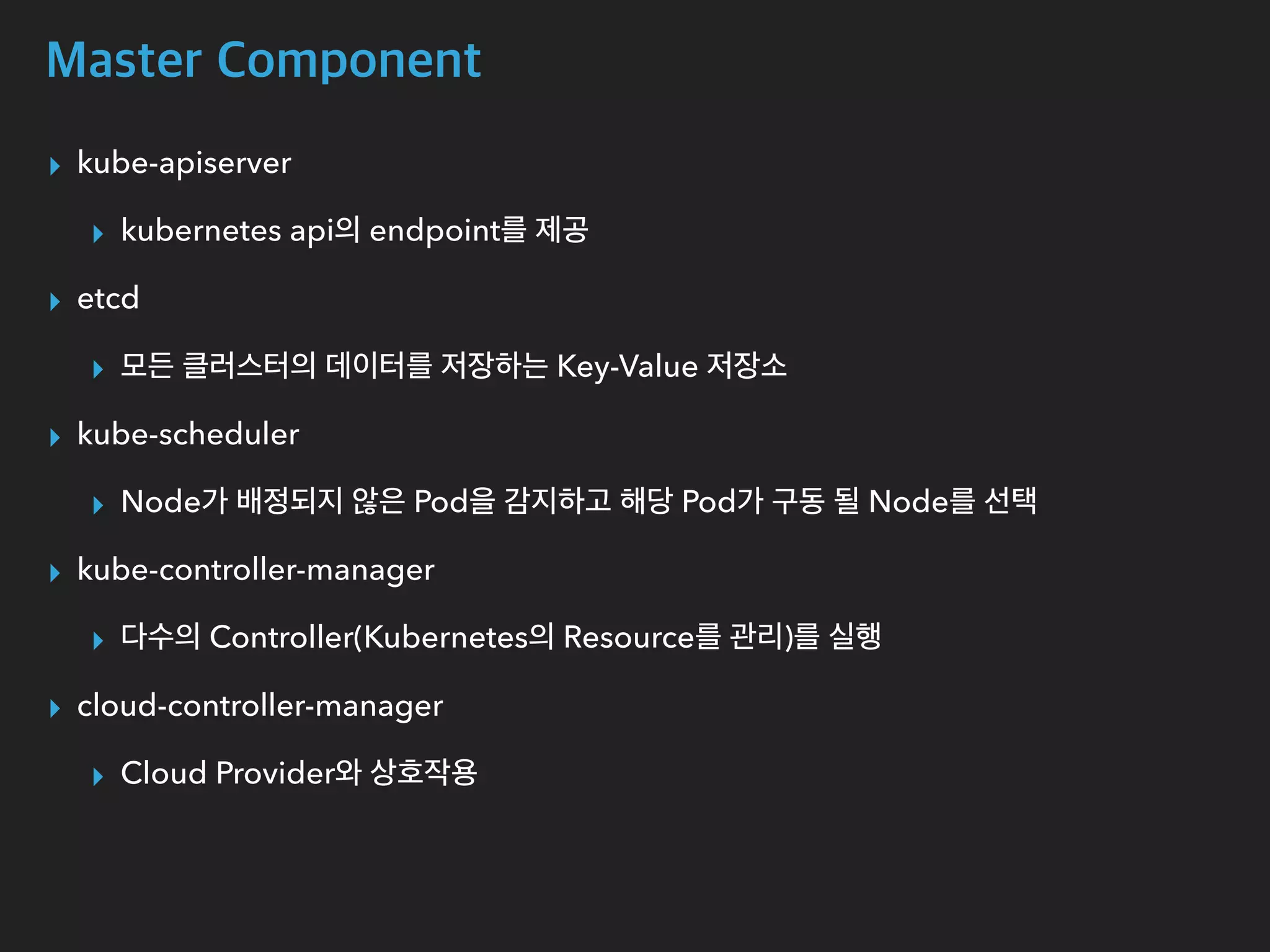

![Watch Interface of API Server
$ curl http://localhost:8080/api/v1/pods?watch=true
$ tcpdump -nlA -i lo port 8080
05:33:24.628863 IP 127.0.0.1.44242 > 127.0.0.1.8080: Flags [P.], seq 1:101, ack 1, win 342, options [nop,nop,TS val 925974024 ecr 925974024], length 100: HTTP: GET /api/v1/pods?watch=true HTTP/1.1
GET /api/v1/pods?watch=true HTTP/1.1
Host: localhost:8080
User-Agent: curl/7.58.0
Accept: */*
05:33:24.629526 IP 127.0.0.1.8080 > 127.0.0.1.44242: Flags [P.], seq 1:117, ack 101, win 342, options [nop,nop,TS val 925974025 ecr 925974024], length 116: HTTP: HTTP/1.1 200 OK
HTTP/1.1 200 OK
Content-Type: application/json
Date: Fri, 22 Mar 2019 05:33:24 GMT
Transfer-Encoding: chunked
9cf
{"type":"ADDED","object":{ ... }}
aab
{"type":"MODIFIED","object":{ ... }}
....](https://image.slidesharecdn.com/kubernetesinternals-190605134546/75/Kubernetes-internals-Kubernetes-12-2048.jpg)
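
With HTTP/1.1 (curl's default), the API server answers with `Transfer-Encoding: chunked`. This lets it stream a body of unknown length while still marking where the body ends. Each chunk starts with its size in hexadecimal on its own line, and a zero-size chunk terminates the body. The `9cf` and `aab` lines in the capture are such sizes (2511 and 2731 bytes), and each is followed by one watch event. The Python sketch below decodes a chunked body. It assumes the complete body is already in memory and that no trailer fields follow the final zero-size chunk. `decode_chunked` is a hypothetical name used only for illustration.

```python
from typing import List


def decode_chunked(body: bytes) -> List[bytes]:
    """Split an HTTP/1.1 chunked body into its chunk payloads.

    Hypothetical helper. Assumes the complete body is available and that no
    trailer fields follow the terminating zero-size chunk.
    """
    chunks = []
    pos = 0
    while True:
        line_end = body.index(b"\r\n", pos)  # ValueError if the body is truncated
        # The size is hexadecimal; anything after ';' is a chunk extension.
        size = int(body[pos:line_end].split(b";")[0], 16)
        pos = line_end + 2
        if size == 0:
            return chunks
        chunks.append(body[pos:pos + size])
        pos += size
        if body[pos:pos + 2] != b"\r\n":
            raise ValueError("chunk data not followed by CRLF")
        pos += 2
```

Each element of the returned list is one chunk payload. In the capture above, each payload holds one JSON watch event.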

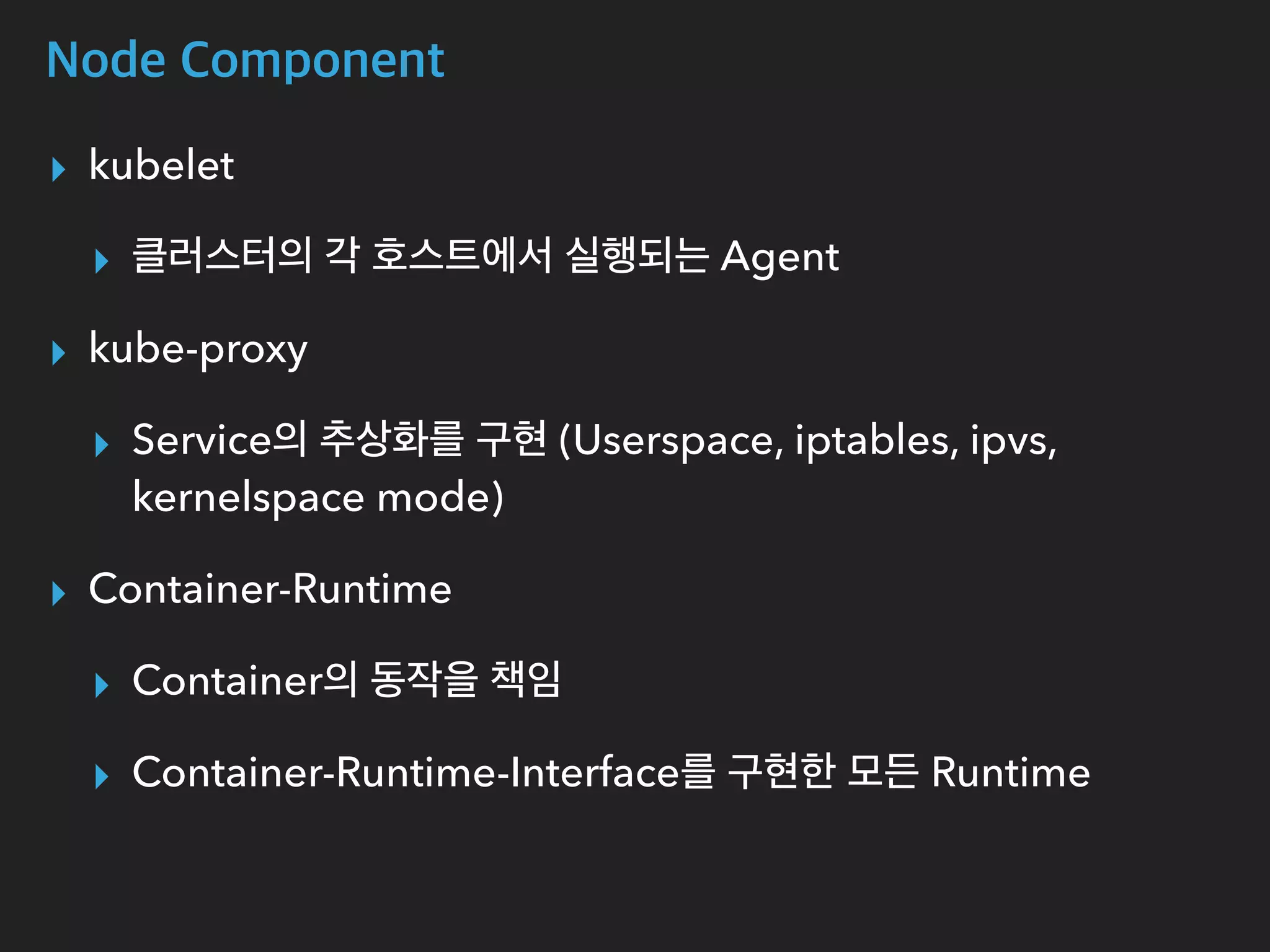

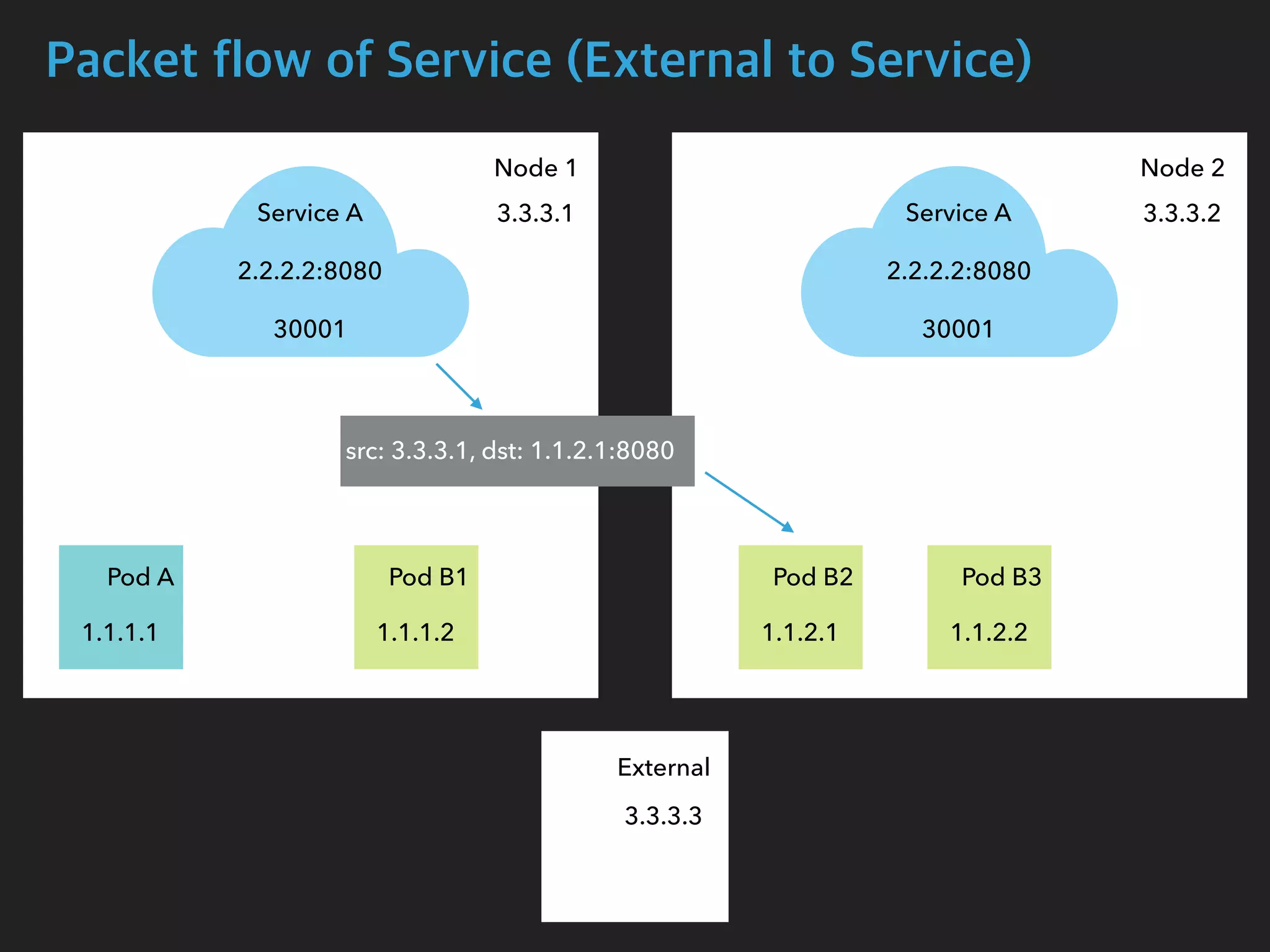

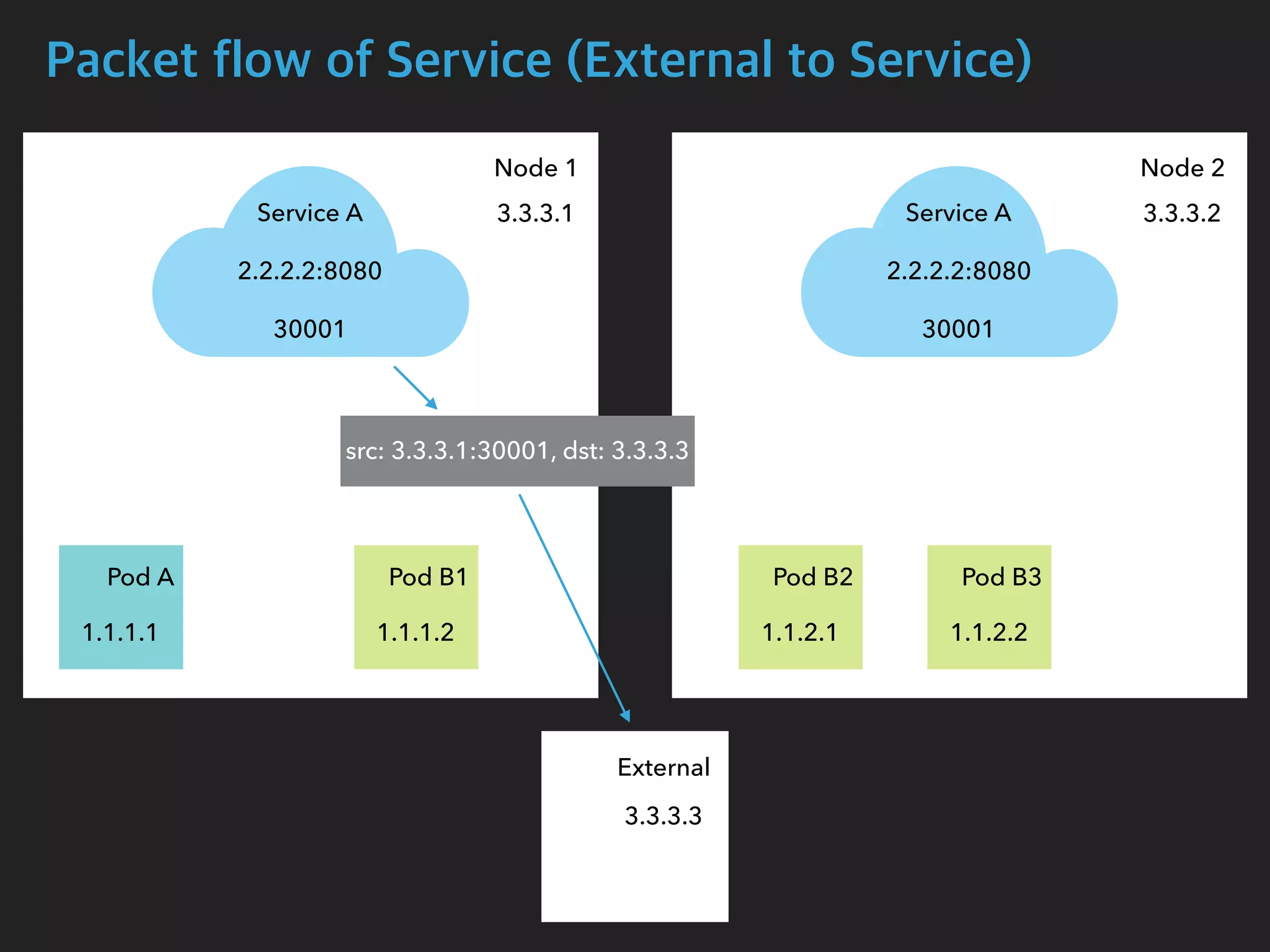

![Packet flow of Service (External to Service)
$ tcpdump -i enp0s8 port 30001 -n
05:17:50.632656 IP 3.3.3.3.55824 > 3.3.3.1.30001: Flags [SEW], seq 920096640, win 65535, options [mss 1460,nop,wscale 6,nop,nop,TS val 449946274 ecr 0,sackOK,eol], length 0
05:17:50.632886 IP 3.3.3.1.30001 > 3.3.3.3.55824: Flags [S.E], seq 2034560536, ack 920096641, win 28960, options [mss 1460,sackOK,TS val 167059923 ecr 449946274,nop,wscale 7], length 0
$ tcpdump -i cali27c81818b22 -n
05:17:50.632712 IP 3.3.3.1.55824 > 1.1.2.1.8080: Flags [SEW], seq 920096640, win 65535, options [mss 1460,nop,wscale 6,nop,nop,TS val 449946274 ecr 0,sackOK,eol], length 0
05:17:50.632874 IP 1.1.2.1.8080 > 3.3.3.1.55824: Flags [S.E], seq 2034560536, ack 920096641, win 28960, options [mss 1460,sackOK,TS val 167059923 ecr 449946274,nop,wscale 7], length 0
Node1 Interface
Pod B2 Interface](https://image.slidesharecdn.com/kubernetesinternals-190605134546/75/Kubernetes-internals-Kubernetes-36-2048.jpg)
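
The two captures show the same SYN (sequence number 920096640) with different address tuples. On Node1's `enp0s8` it travels from the client `3.3.3.3:55824` to `3.3.3.1:30001`. On Pod B2's interface it travels from `3.3.3.1:55824` to `1.1.2.1:8080`. The destination was rewritten to the Pod (DNAT), and the source was rewritten to Node1's address (SNAT, masquerade). The SYN-ACK is then translated back in reverse. This matches the documented default behaviour for NodePort Services (`externalTrafficPolicy: Cluster`), in which the Pod does not see the client's address.

The Python sketch below models this with a simplified conntrack-like table. It assumes a single connection and that the source port is left unchanged, as it is in this trace. `NatTable` and its methods are hypothetical and do not correspond to the kernel's or kube-proxy's code.

```python
from typing import Dict, Optional, Tuple

# (src_ip, src_port, dst_ip, dst_port)
Flow = Tuple[str, int, str, int]


def reverse(flow: Flow) -> Flow:
    """The tuple carried by a reply to `flow`."""
    src, sport, dst, dport = flow
    return (dst, dport, src, sport)


class NatTable:
    """Hypothetical, simplified conntrack: translate the first packet of a
    connection and remember how to undo the translation for its replies."""

    def __init__(self) -> None:
        self._replies: Dict[Flow, Flow] = {}

    def forward(self, flow: Flow, backend: Tuple[str, int],
                masquerade_ip: Optional[str] = None) -> Flow:
        src, sport, _, _ = flow
        if masquerade_ip is not None:
            src = masquerade_ip  # SNAT; source port kept, as in the trace
        translated = (src, sport, backend[0], backend[1])  # DNAT to the Pod
        self._replies[reverse(translated)] = reverse(flow)
        return translated

    def reply(self, flow: Flow) -> Flow:
        """Undo DNAT and SNAT on a reply; unknown flows pass through."""
        return self._replies.get(flow, flow)
```

Calling `forward` without `masquerade_ip` gives the DNAT-only behaviour seen in the Pod-to-Service trace.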

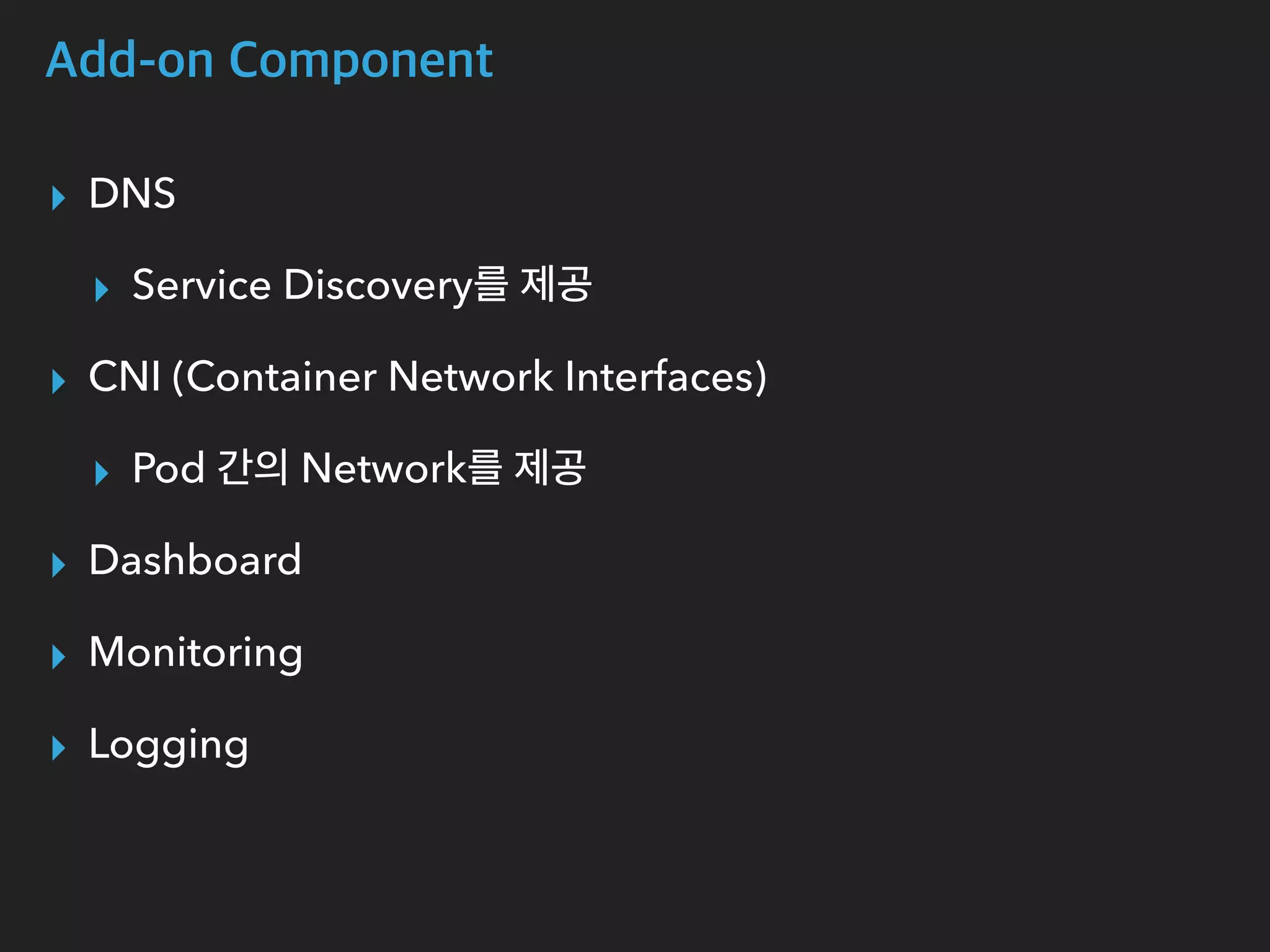

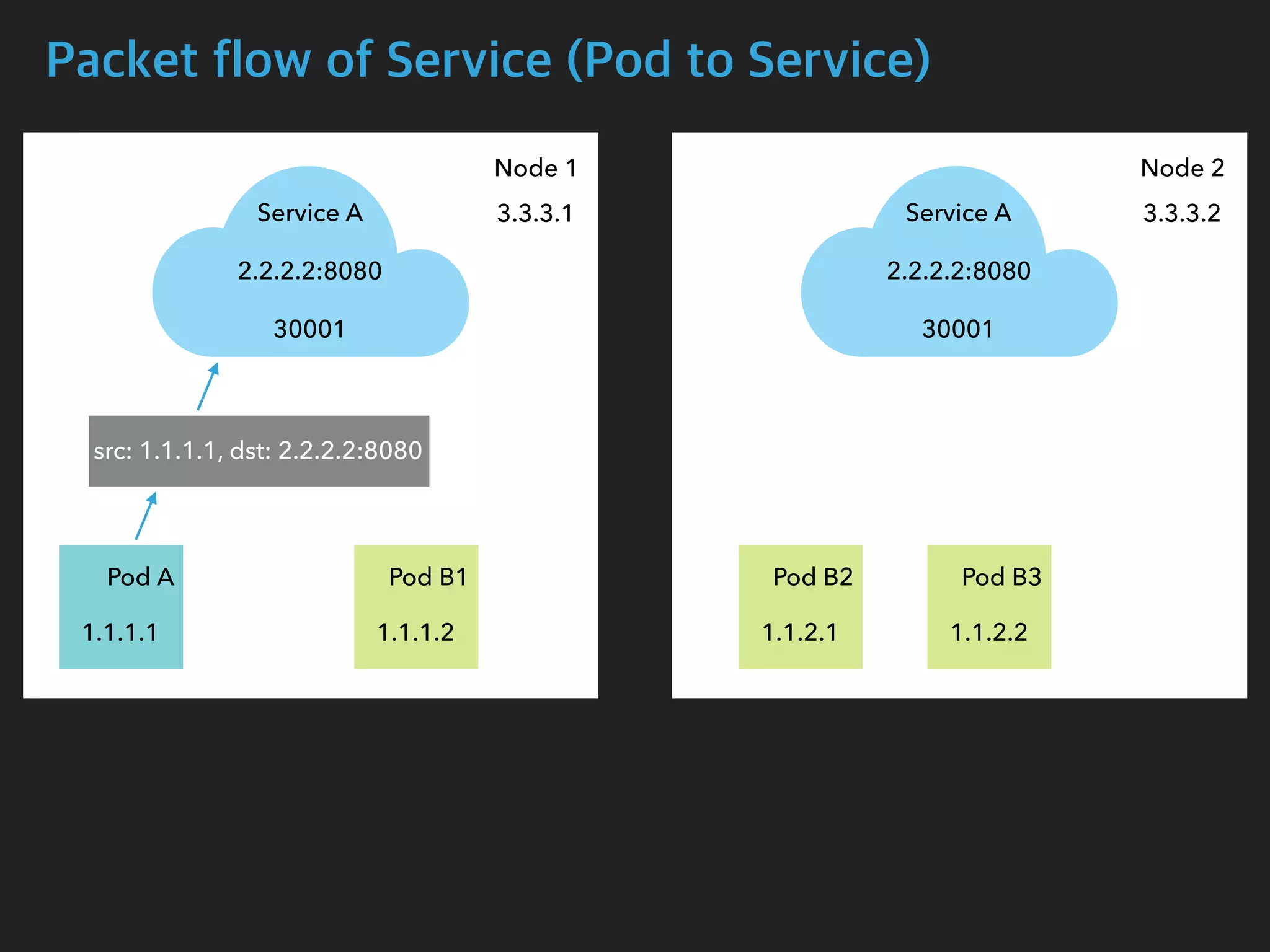

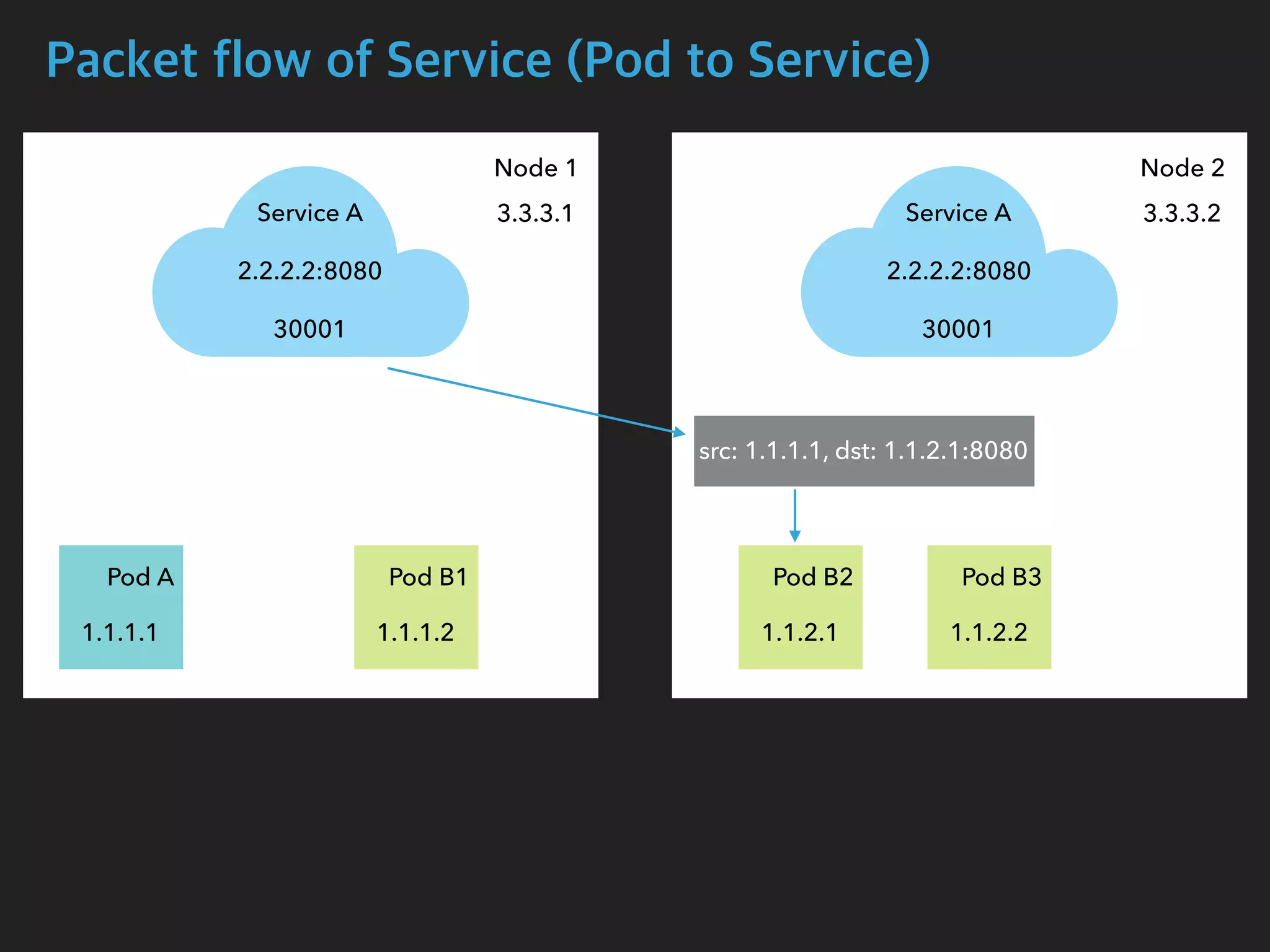

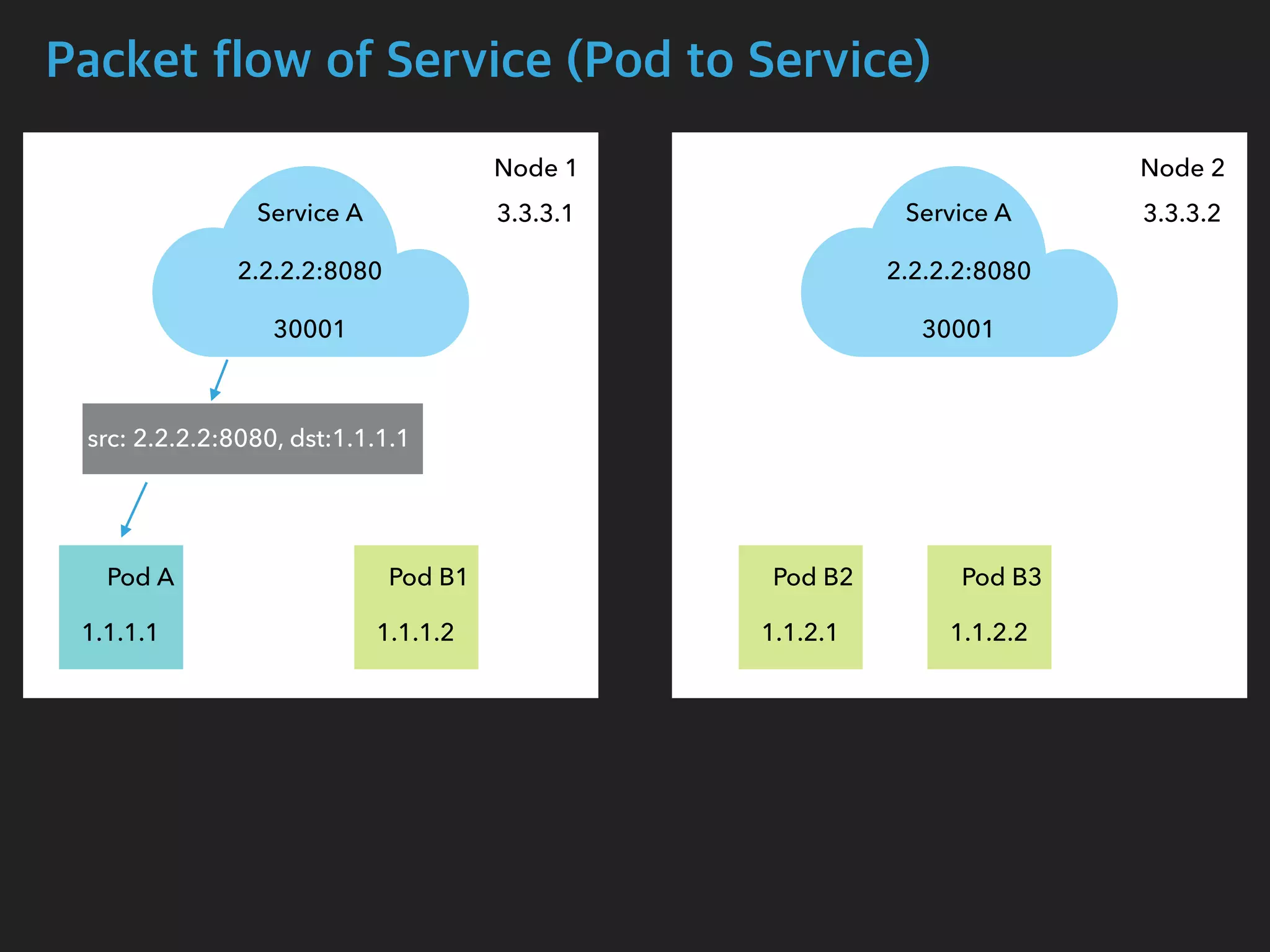

![Packet flow of Service (Pod to Service)
$ tcpdump -i calib43f921251f -n
05:14:05.077057 IP 1.1.1.1.54122 > 2.2.2.2.8080: Flags [S], seq 1210630612, win 29200, options [mss 1460,sackOK,TS val 2710881183 ecr 0,nop,wscale 7], length 0
05:14:05.077767 IP 2.2.2.2.8080 > 1.1.1.1.54122: Flags [S.], seq 4123667957, ack 1210630613, win 28960, options [mss 1460,sackOK,TS val 411294588 ecr 2710881183,nop,wscale 7], length 0
$ tcpdump -i cali27c81818b22 -n
05:14:05.099668 IP 1.1.1.1.54122 > 1.1.2.1.8080: Flags [S], seq 1210630612, win 29200, options [mss 1460,sackOK,TS val 2710881183 ecr 0,nop,wscale 7], length 0
05:14:05.099826 IP 1.1.2.1.8080 > 1.1.1.1.54122: Flags [S.], seq 4123667957, ack 1210630613, win 28960, options [mss 1460,sackOK,TS val 411294588 ecr 2710881183,nop,wscale 7], length 0
Pod B2 Interface
Pod A Interface](https://image.slidesharecdn.com/kubernetesinternals-190605134546/75/Kubernetes-internals-Kubernetes-41-2048.jpg)
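
The SYN keeps sequence number 1210630612 on both interfaces, so the two captures show the same packet. Only its destination changed: the Service address `2.2.2.2:8080` became Pod B2's address `1.1.2.1:8080`, while the source `1.1.1.1:54122` was left alone. On the way back, the SYN-ACK that Pod B2 sends from `1.1.2.1:8080` reaches Pod A as if it came from `2.2.2.2:8080`. Pod-to-Service traffic in this trace is therefore DNATed but not SNATed, and Pod B2 sees Pod A's real address.

The Python helper below is a hypothetical sketch that pulls the address tuples out of tcpdump lines like these and names the rewrites. It assumes IPv4 and tcpdump's `-n` output format, where the port is appended to the address after a dot.

```python
import re
from typing import List, Tuple

# Matches the address part of a tcpdump line such as
# "05:14:05.077057 IP 1.1.1.1.54122 > 2.2.2.2.8080: Flags [S], ..."
ADDR = re.compile(r" IP (\d+(?:\.\d+){3})\.(\d+) > (\d+(?:\.\d+){3})\.(\d+):")


def endpoints(line: str) -> Tuple[str, int, str, int]:
    """Return (src_ip, src_port, dst_ip, dst_port) from one tcpdump line."""
    m = ADDR.search(line)
    if m is None:
        raise ValueError(f"not a tcpdump IPv4 line: {line!r}")
    src, sport, dst, dport = m.groups()
    return src, int(sport), dst, int(dport)


def translations(before: str, after: str) -> List[str]:
    """Name the rewrites between the same packet captured on two interfaces."""
    b, a = endpoints(before), endpoints(after)
    found = []
    if b[:2] != a[:2]:
        found.append(f"SNAT {b[0]}:{b[1]} -> {a[0]}:{a[1]}")
    if b[2:] != a[2:]:
        found.append(f"DNAT {b[2]}:{b[3]} -> {a[2]}:{a[3]}")
    return found
```

On the Pod-to-Service SYN this reports a single DNAT. On the External-to-Service SYN it reports both an SNAT and a DNAT.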

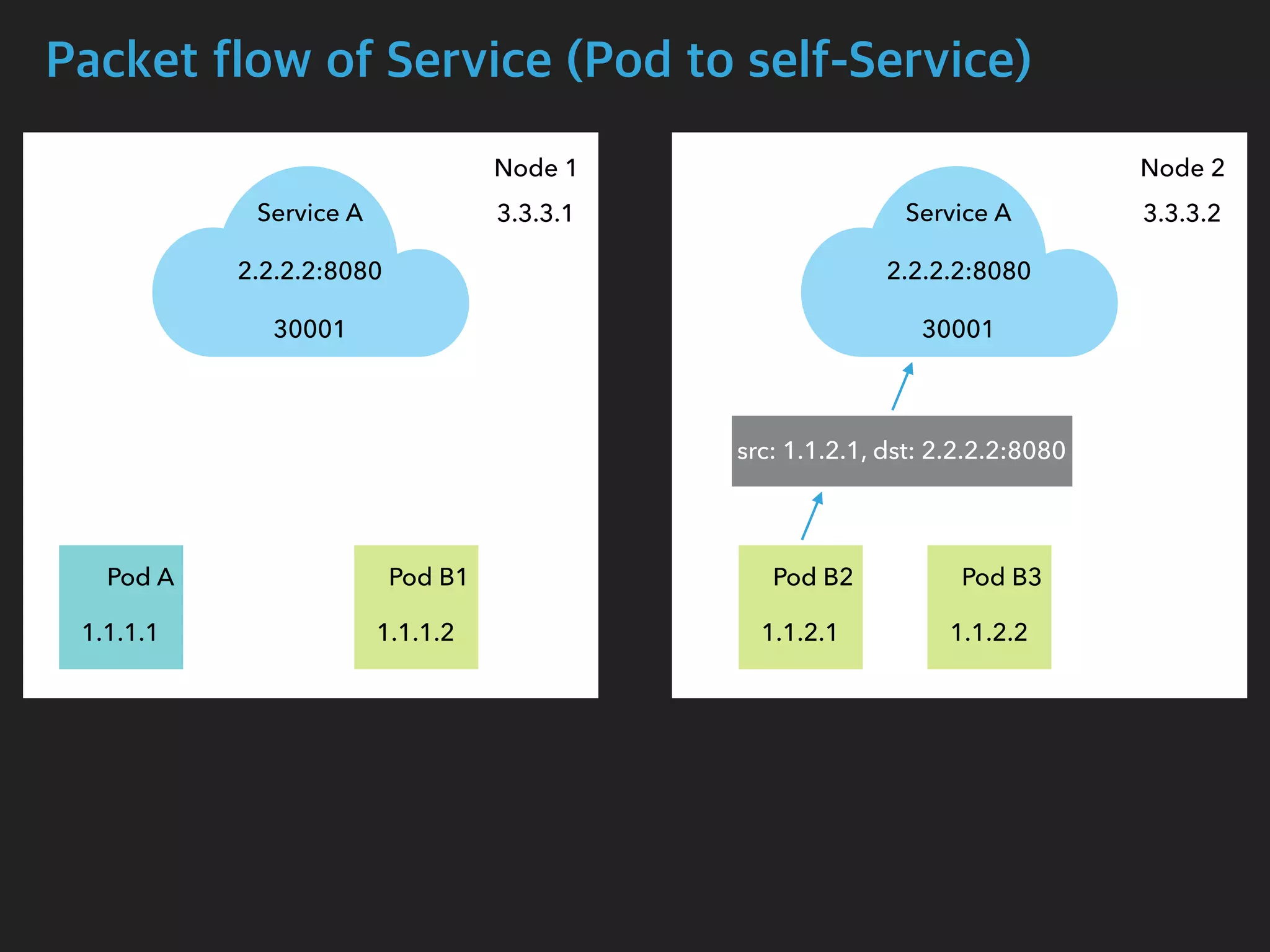

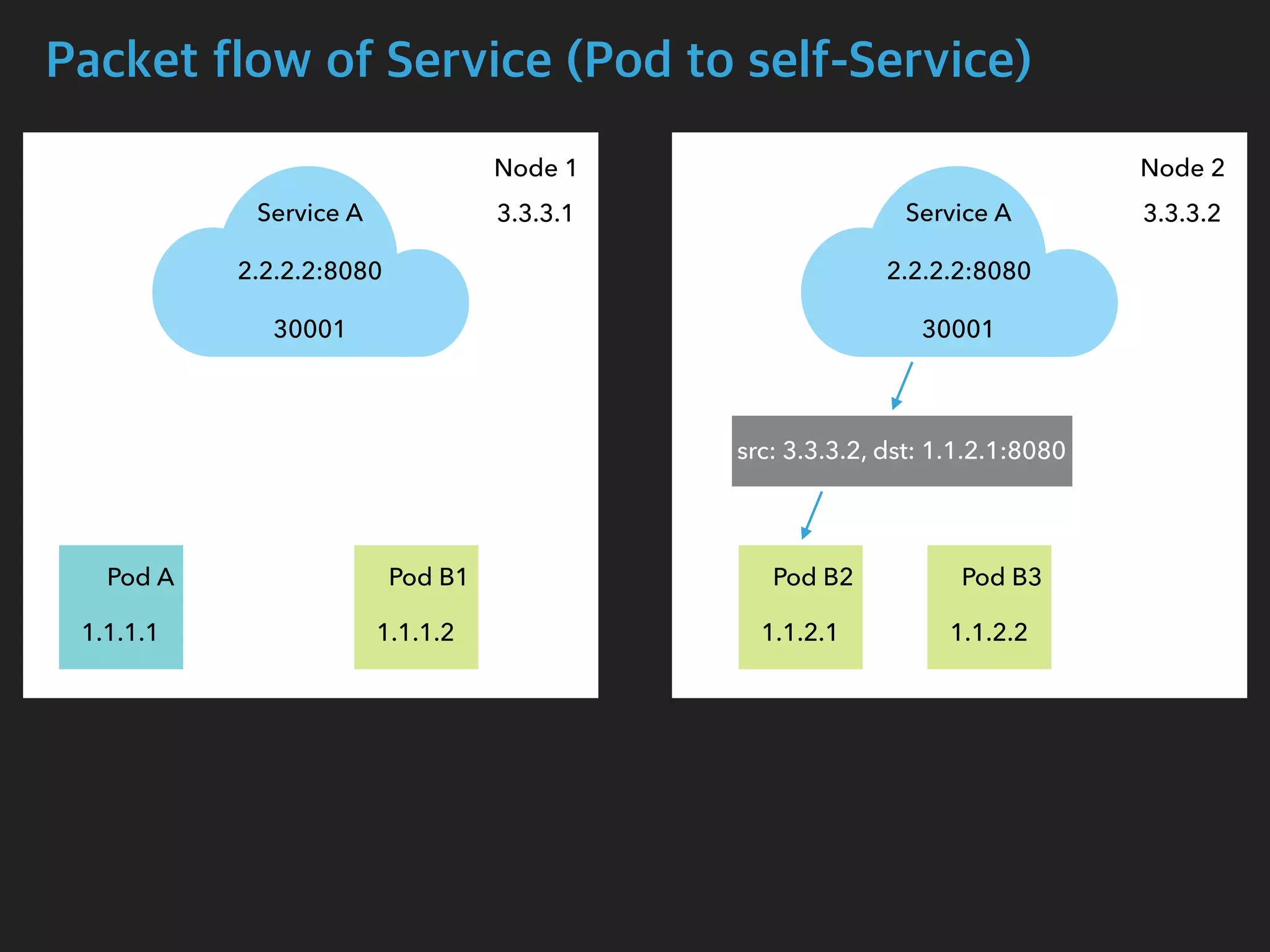

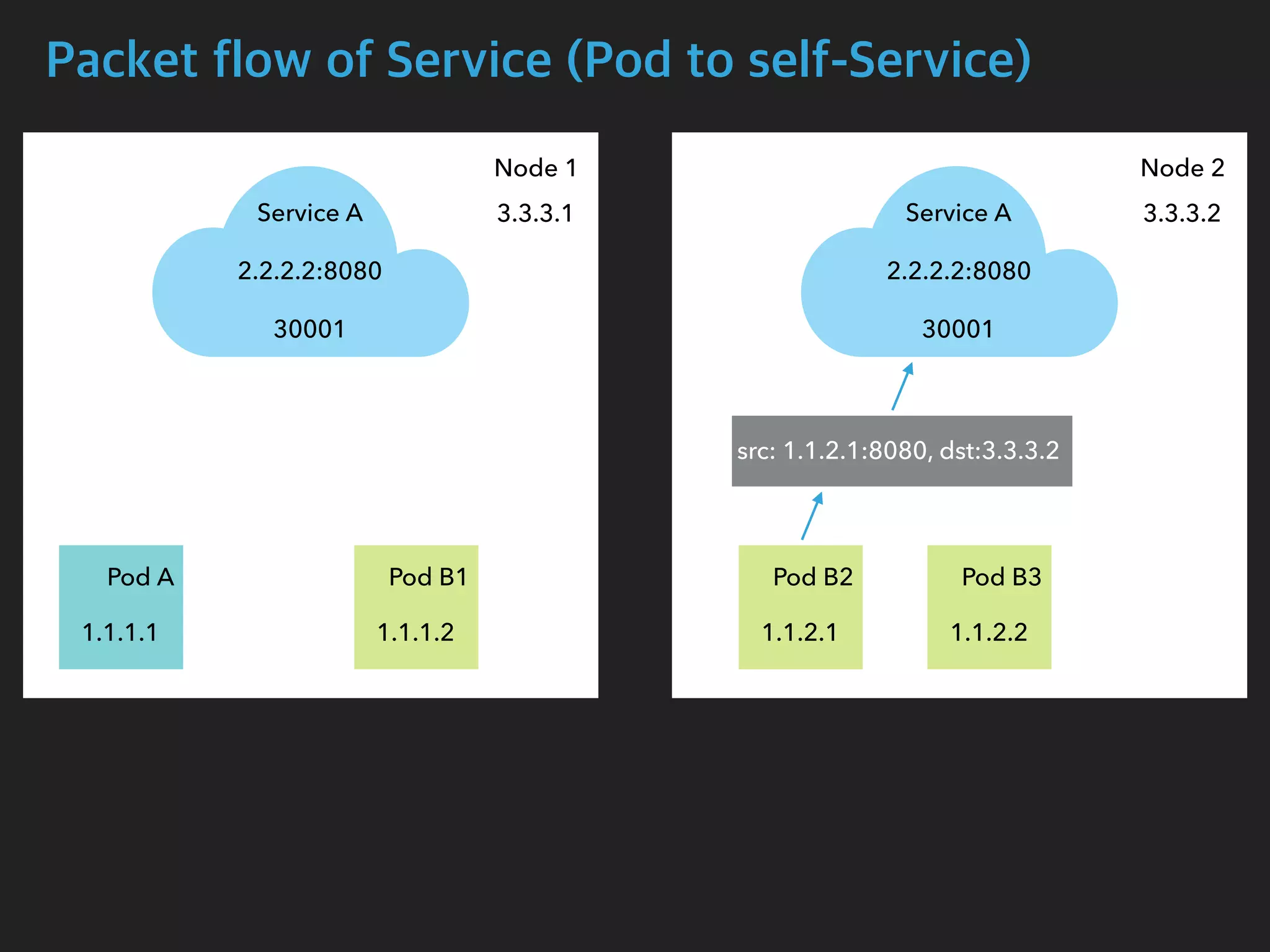

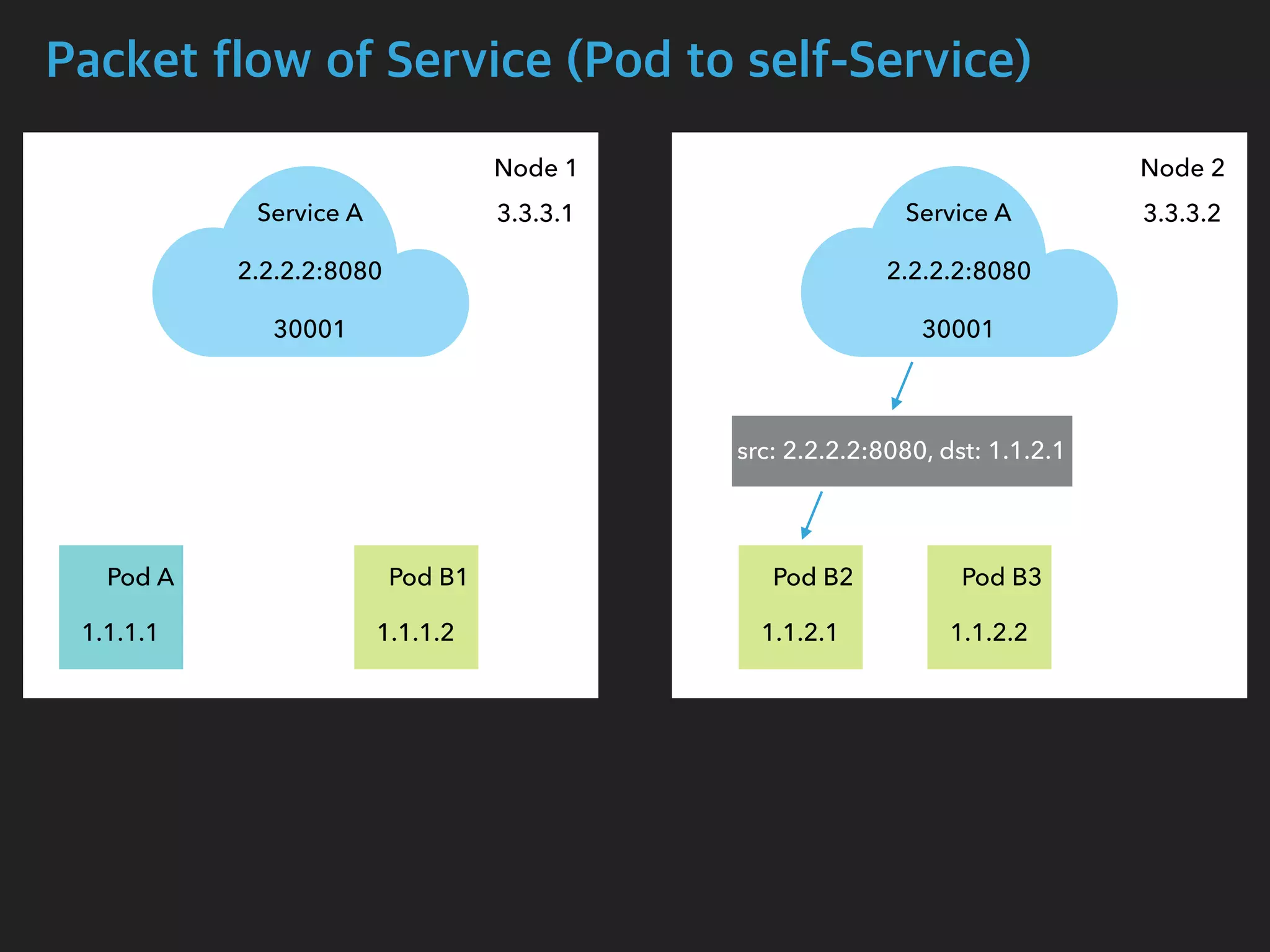

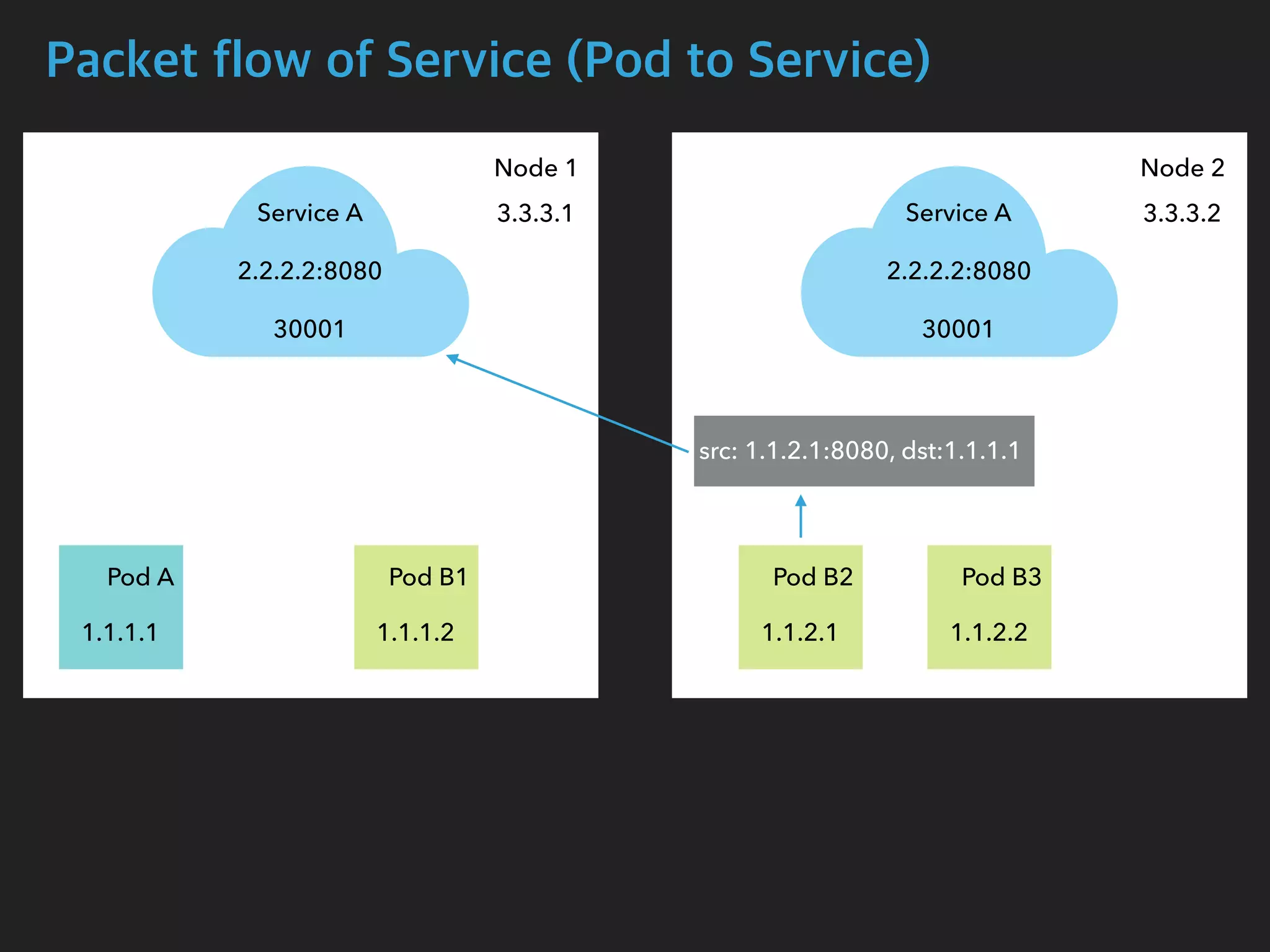

![Packet flow of Service (Pod to self-Service)
$ tcpdump -i calib43f921251f -n
05:15:59.556723 IP 1.1.2.1.54308 > 2.2.2.2.8080: Flags [S], seq 4048875942, win 29200, options [mss 1460,sackOK,TS val 2710995663 ecr 0,nop,wscale 7], length 0
05:15:59.556770 IP 3.3.3.2.54308 > 1.1.2.1.8080: Flags [S], seq 4048875942, win 29200, options [mss 1460,sackOK,TS val 2710995663 ecr 0,nop,wscale 7], length 0
05:15:59.556779 IP 1.1.2.1.8080 > 3.3.3.2.54308: Flags [S.], seq 2680204874, ack 4048875943, win 28960, options [mss 1460,sackOK,TS val 1749589035 ecr 2710995663,nop,wscale 7], length 0
05:15:59.556785 IP 2.2.2.2.8080 > 1.1.2.1.54308: Flags [S.], seq 2680204874, ack 4048875943, win 28960, options [mss 1460,sackOK,TS val 1749589035 ecr 2710995663,nop,wscale 7], length 0
Pod B2 Interface](https://image.slidesharecdn.com/kubernetesinternals-190605134546/75/Kubernetes-internals-Kubernetes-46-2048.jpg)
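
In the self-Service case, Pod B2 (`1.1.2.1`) connects to the Service `2.2.2.2:8080` and is load-balanced back to itself. The capture shows the packet being both DNATed to `1.1.2.1:8080` and SNATed to `3.3.3.2`, which appears to be the address of the node hosting Pod B2. The SNAT is what makes this hairpin connection work. Without it, the Pod would receive a SYN from its own address and reply straight to `1.1.2.1:54308` inside its own network namespace. That reply would never pass back through the node where the DNAT is undone. The client socket expects an answer from `2.2.2.2:8080`, so it would not match the reply to its connection.

The Python model below compares both cases. It is a simplified, hypothetical sketch: the Service always picks the calling Pod, and conntrack's reverse translation is reduced to restoring the original tuple.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    src: str
    sport: int
    dst: str
    dport: int

    def reply(self) -> "Flow":
        """The tuple carried by a reply to this flow."""
        return Flow(self.dst, self.dport, self.src, self.sport)


POD = ("1.1.2.1", 8080)  # Pod B2, the backend chosen for the Service (from the capture)
NODE_IP = "3.3.3.2"      # SNAT address seen in the capture


def synack_at_client(syn: Flow, masquerade: bool) -> Flow:
    """Return the SYN-ACK as the client socket in the Pod receives it."""
    forwarded = syn._replace(dst=POD[0], dport=POD[1])  # DNAT to the Pod itself
    if masquerade:
        forwarded = forwarded._replace(src=NODE_IP)     # SNAT to the node address
    synack = forwarded.reply()                          # server answers the sender
    if synack.dst == POD[0]:
        # Addressed to the Pod's own IP: delivered inside the Pod, NAT never undone.
        return synack
    # Routed back through the node, where conntrack restores the original tuple.
    return syn.reply()
```

With `masquerade=True`, the function returns `2.2.2.2:8080 > 1.1.2.1:54308`, the last packet in the capture. With `masquerade=False`, the SYN-ACK comes from `1.1.2.1:8080`, which is not the peer the client connected to.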