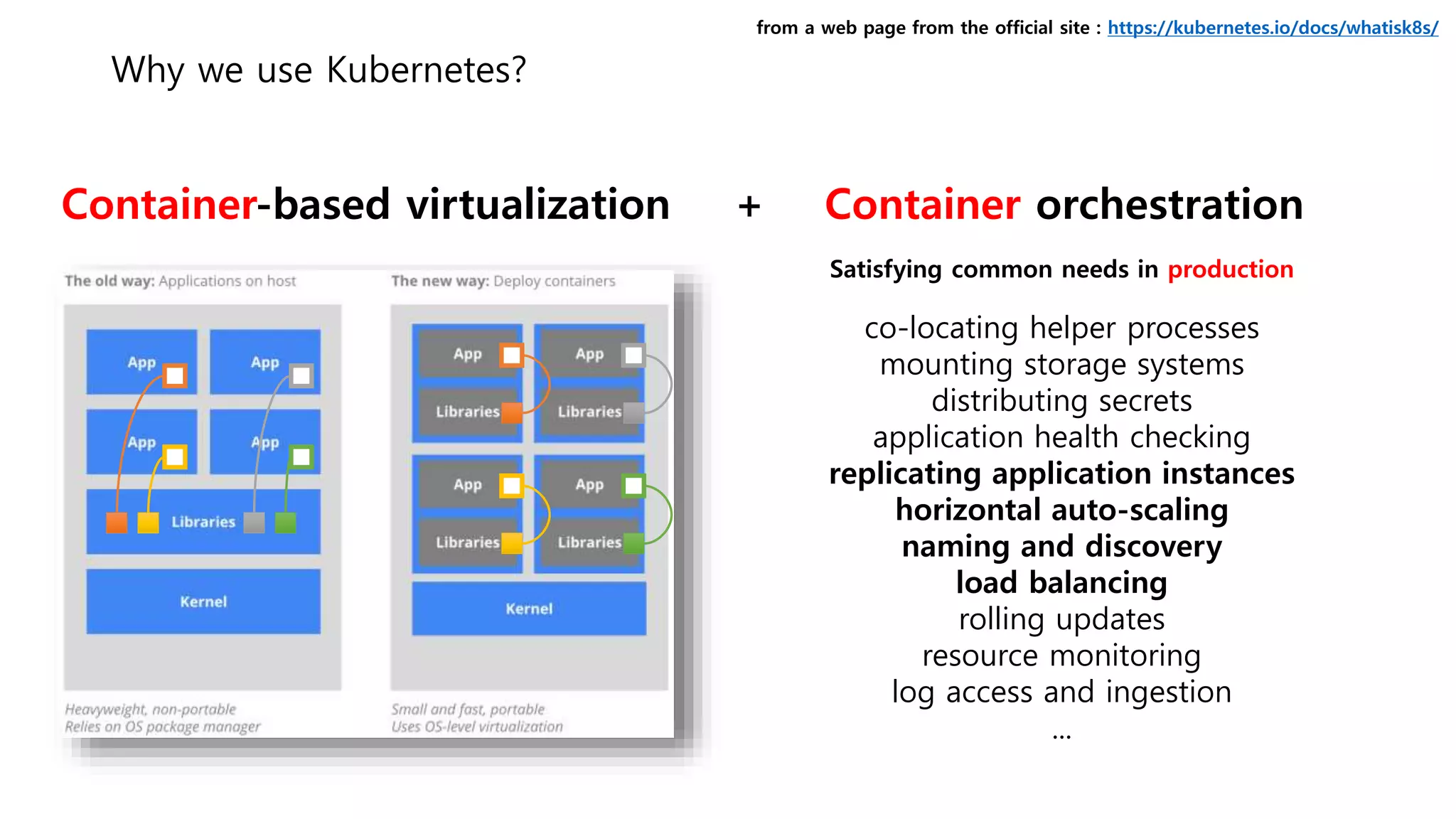

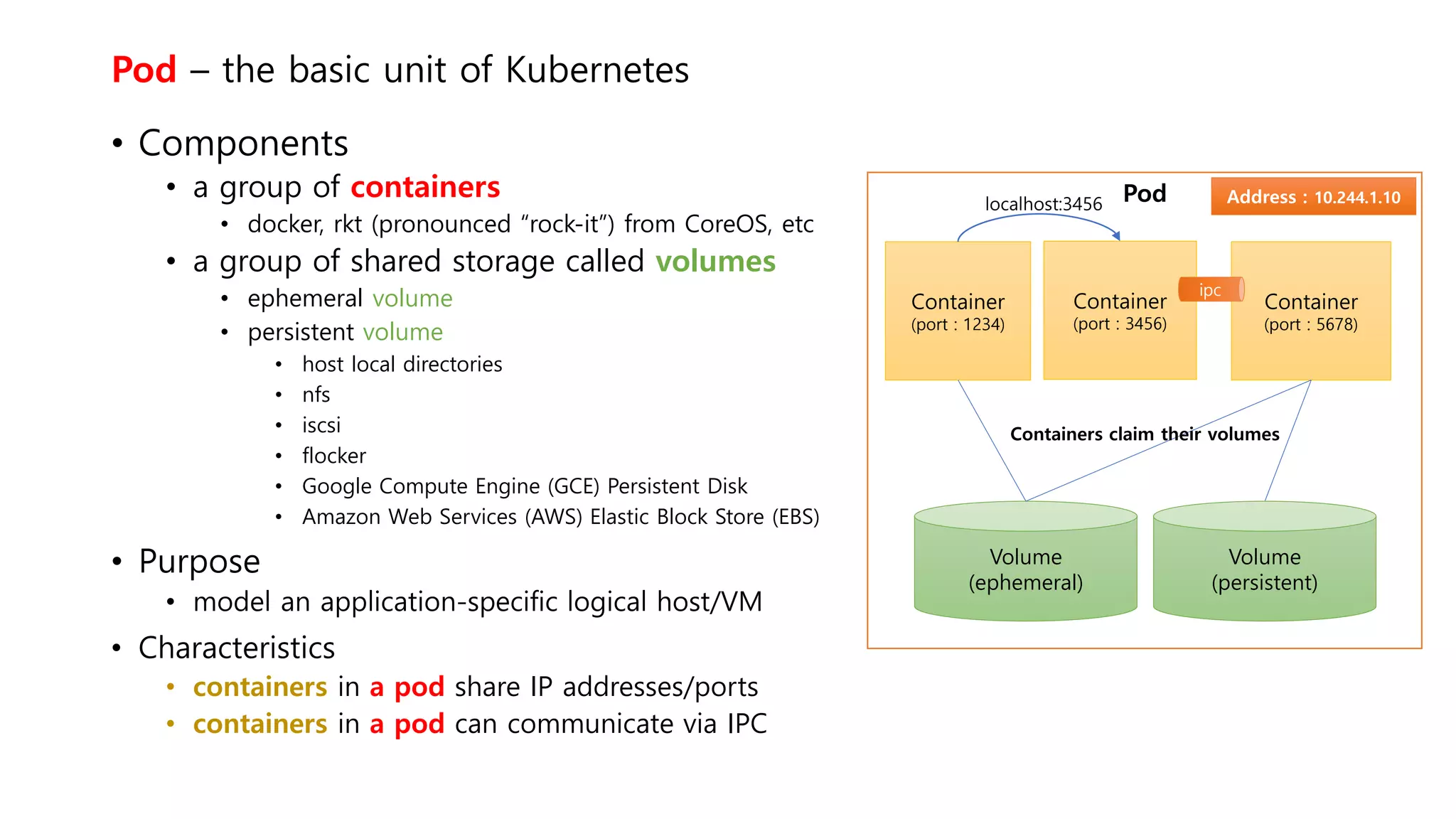

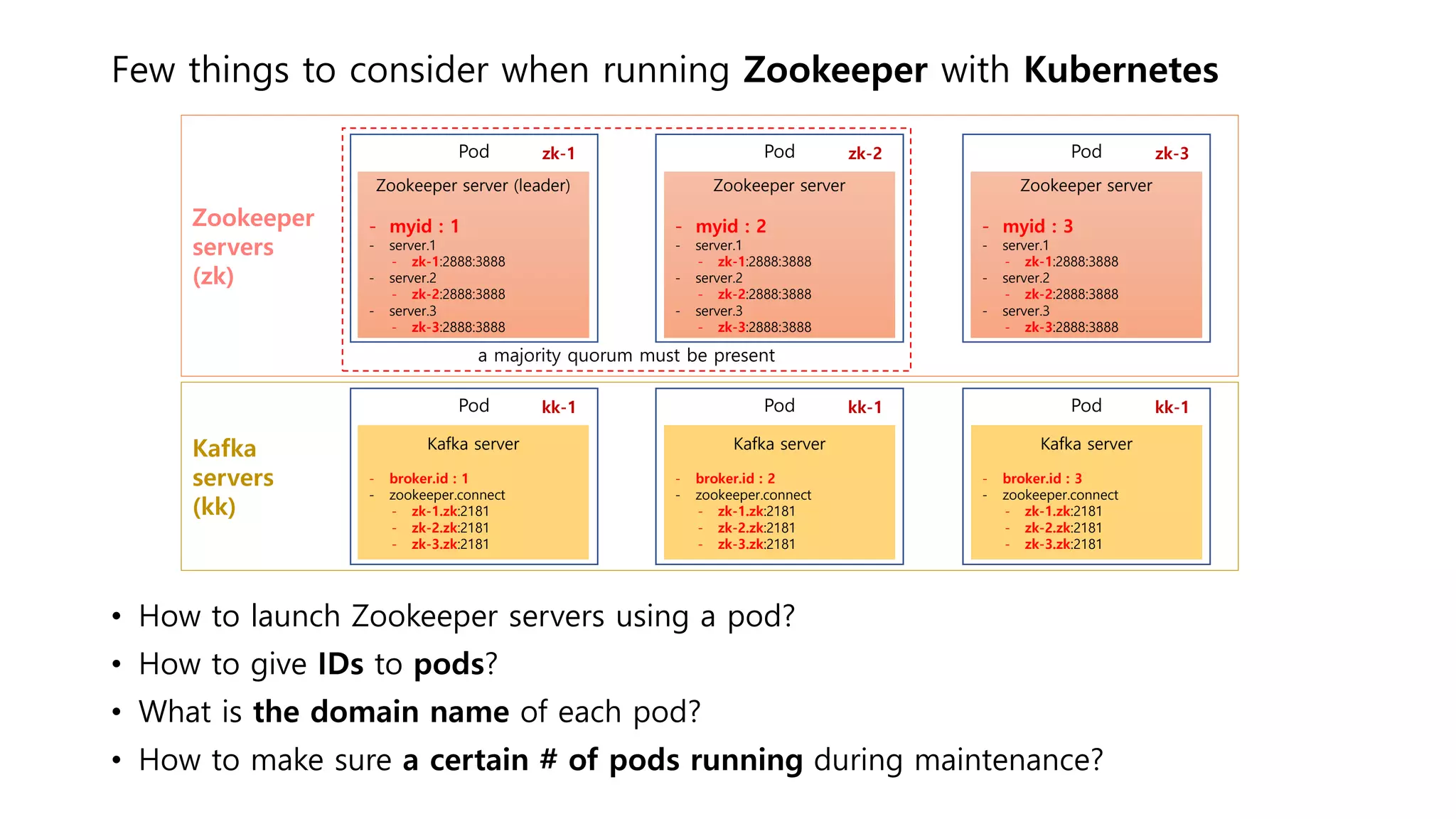

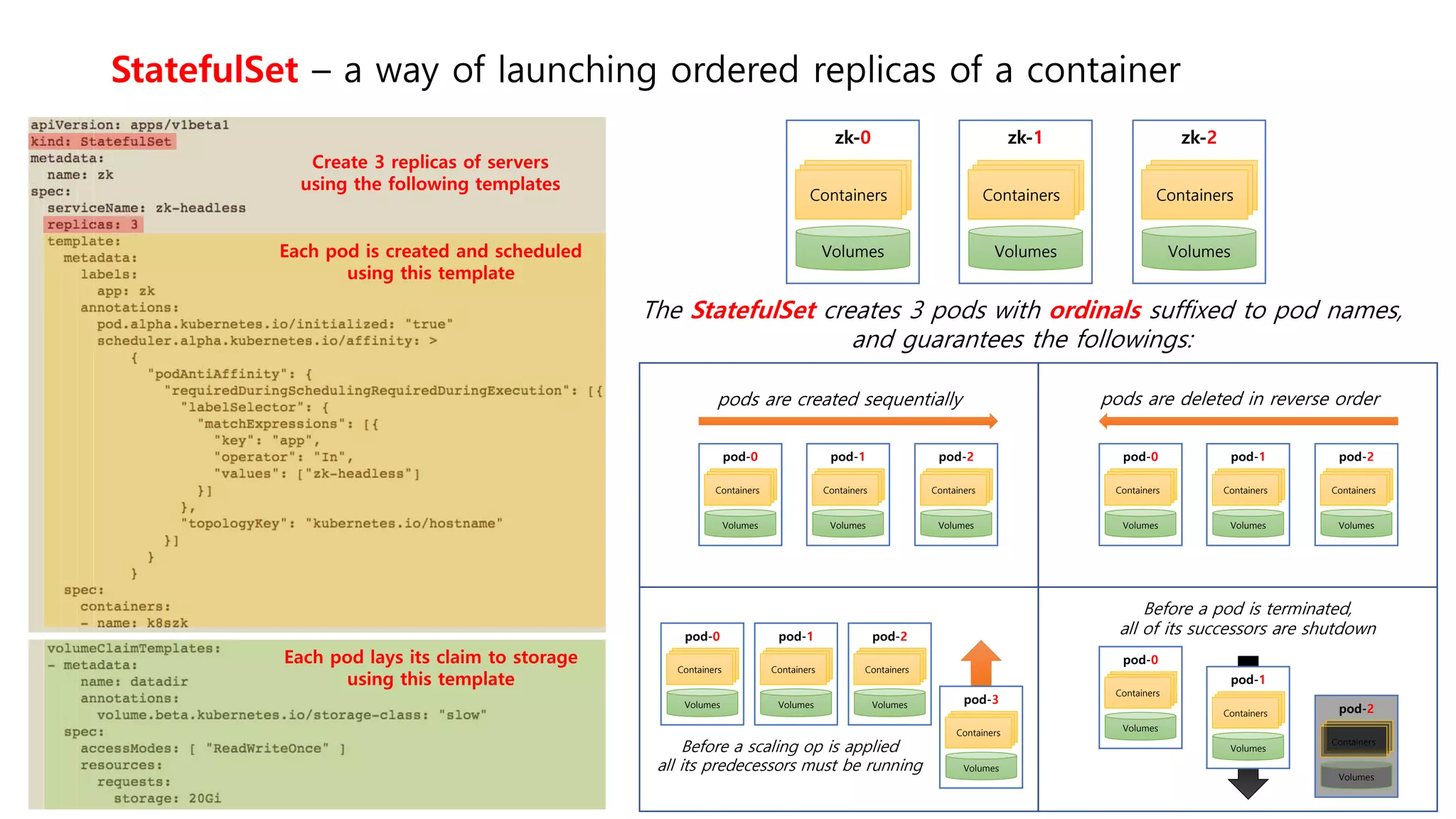

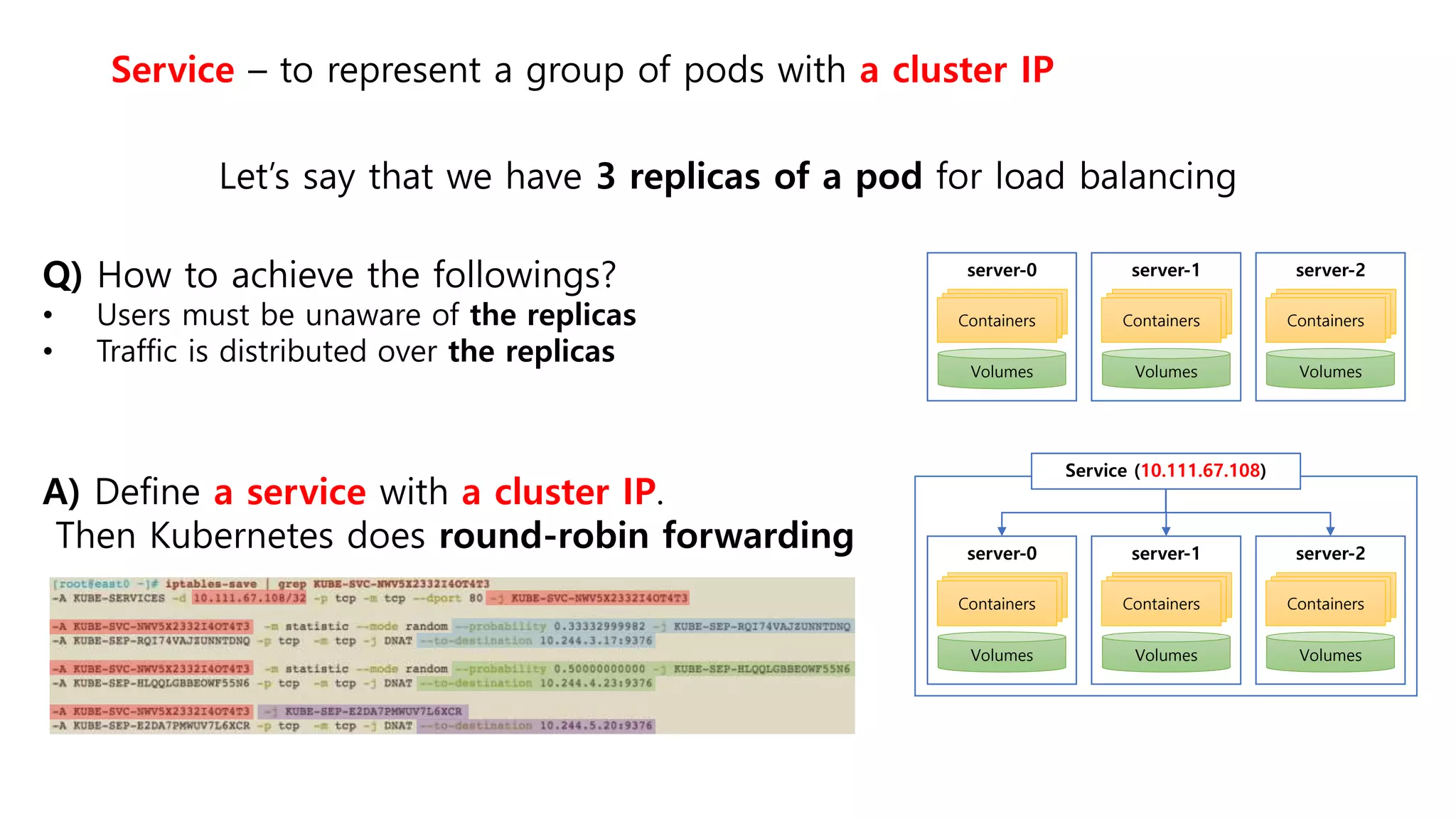

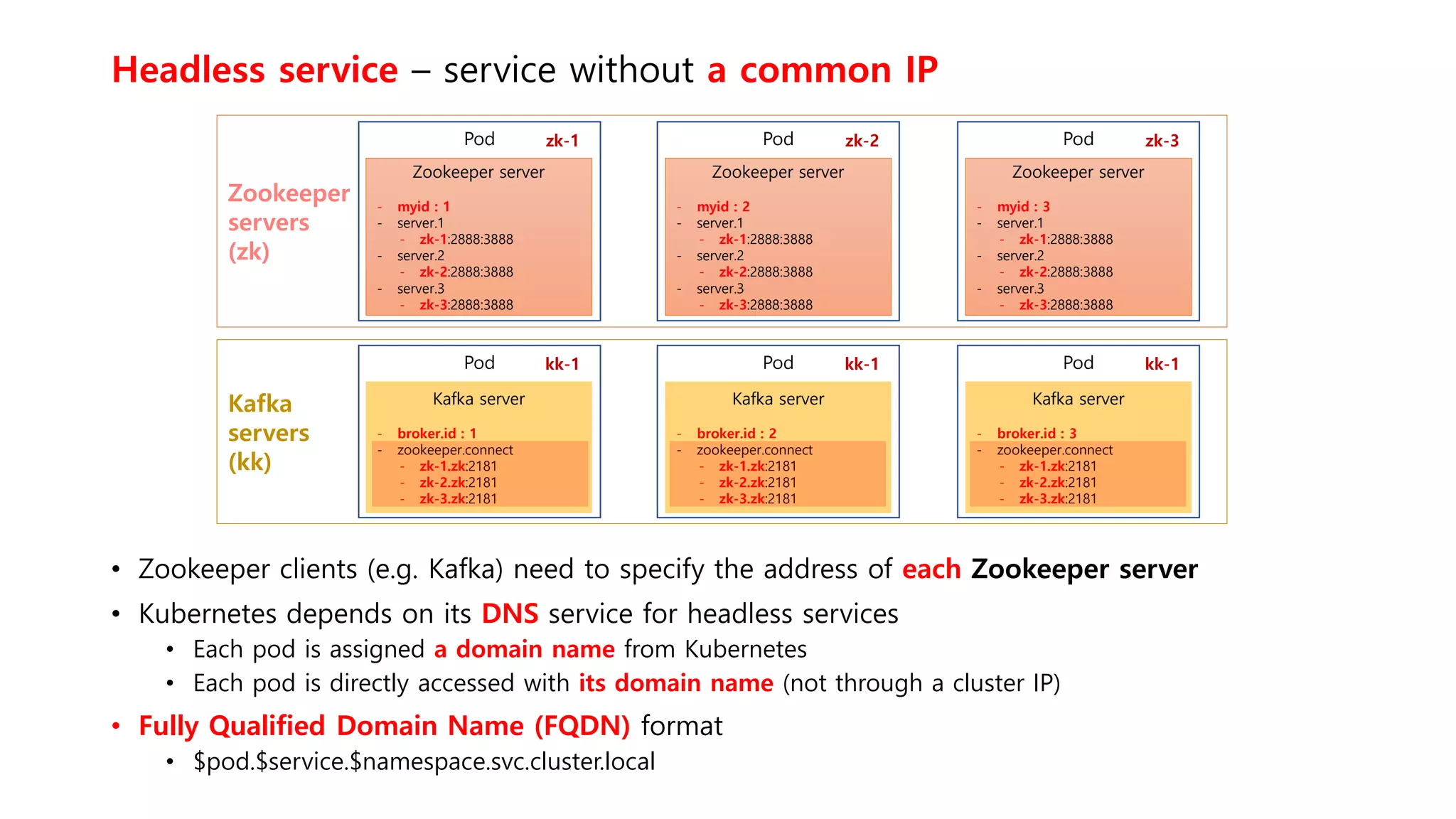

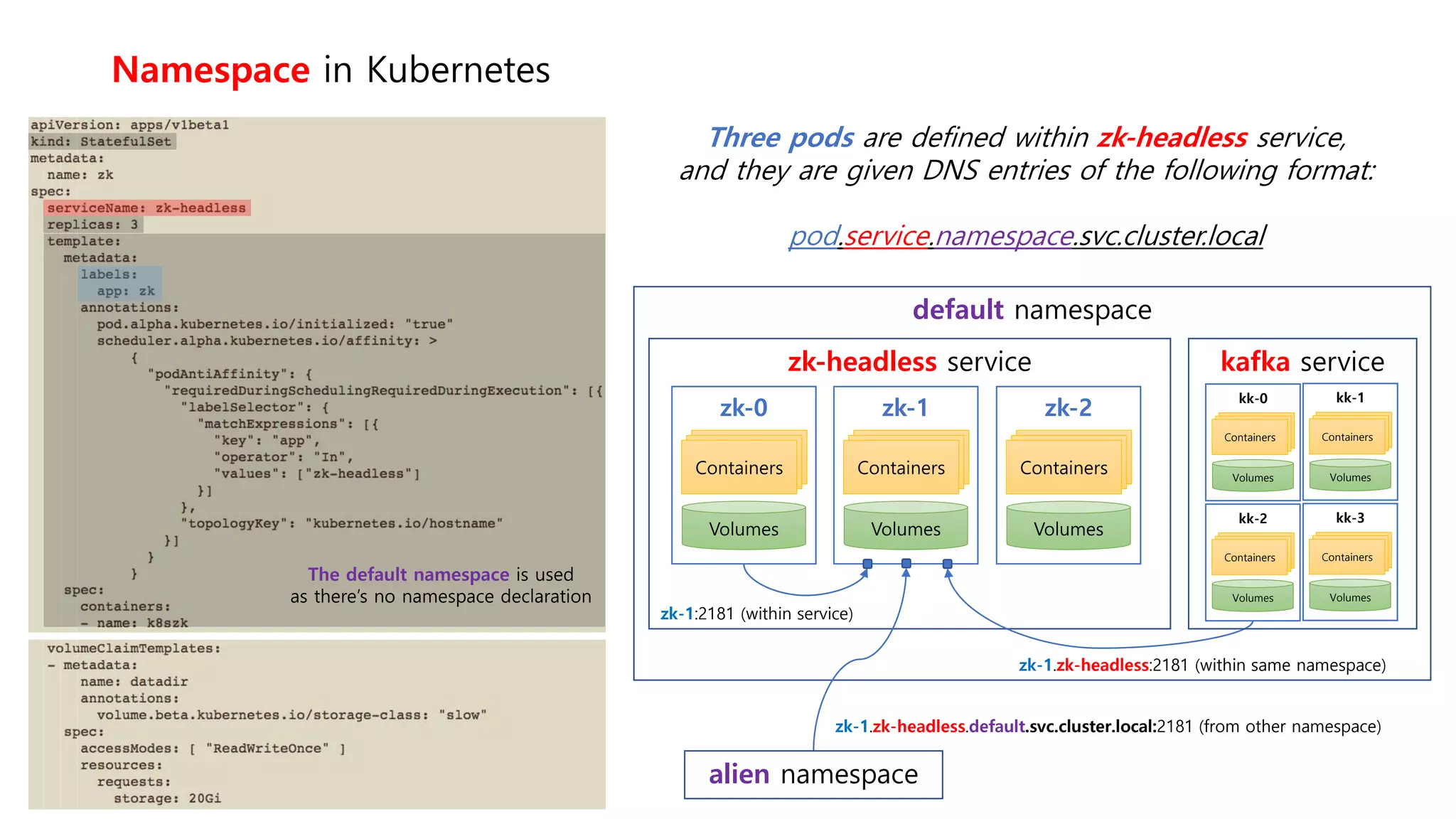

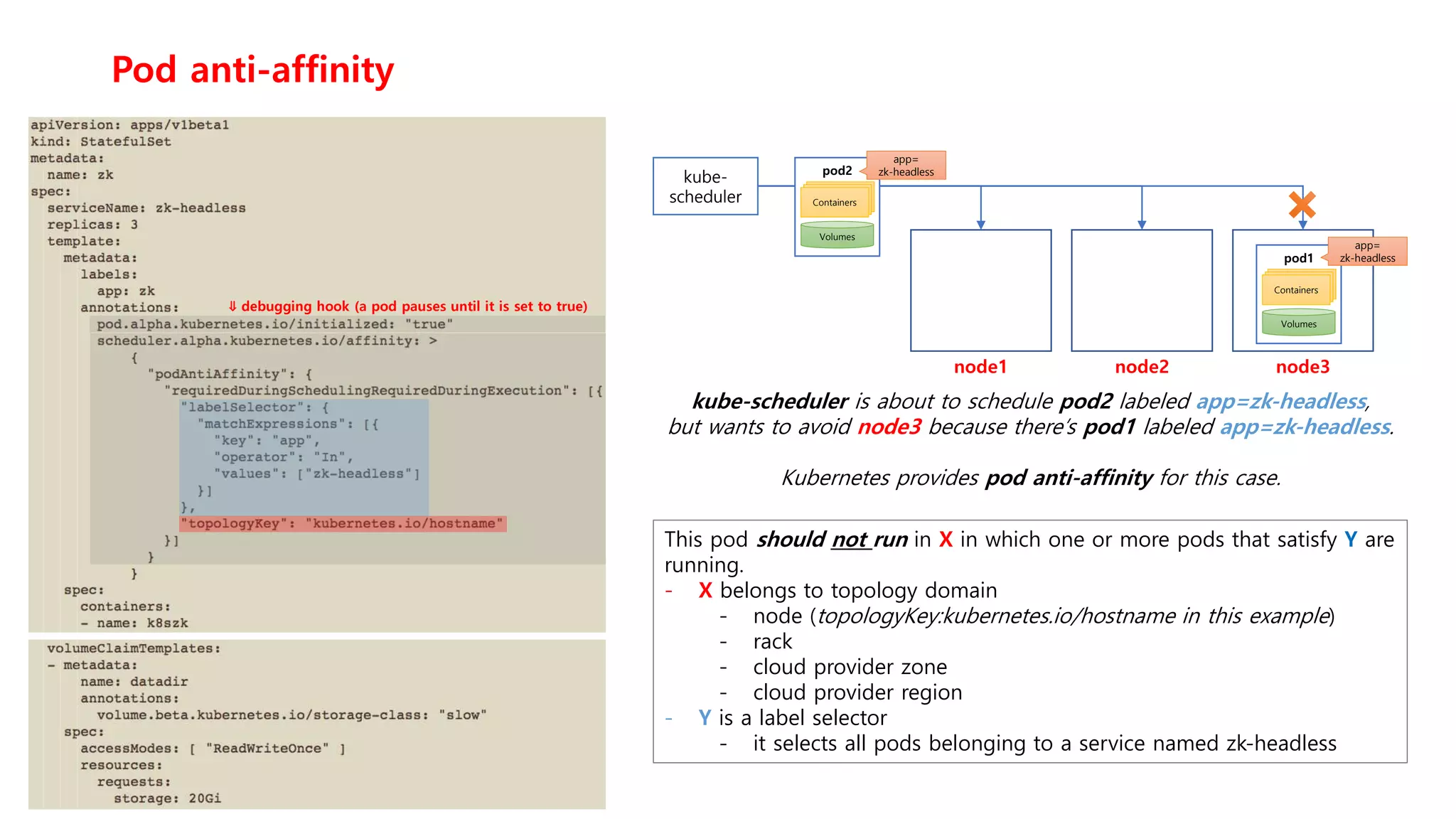

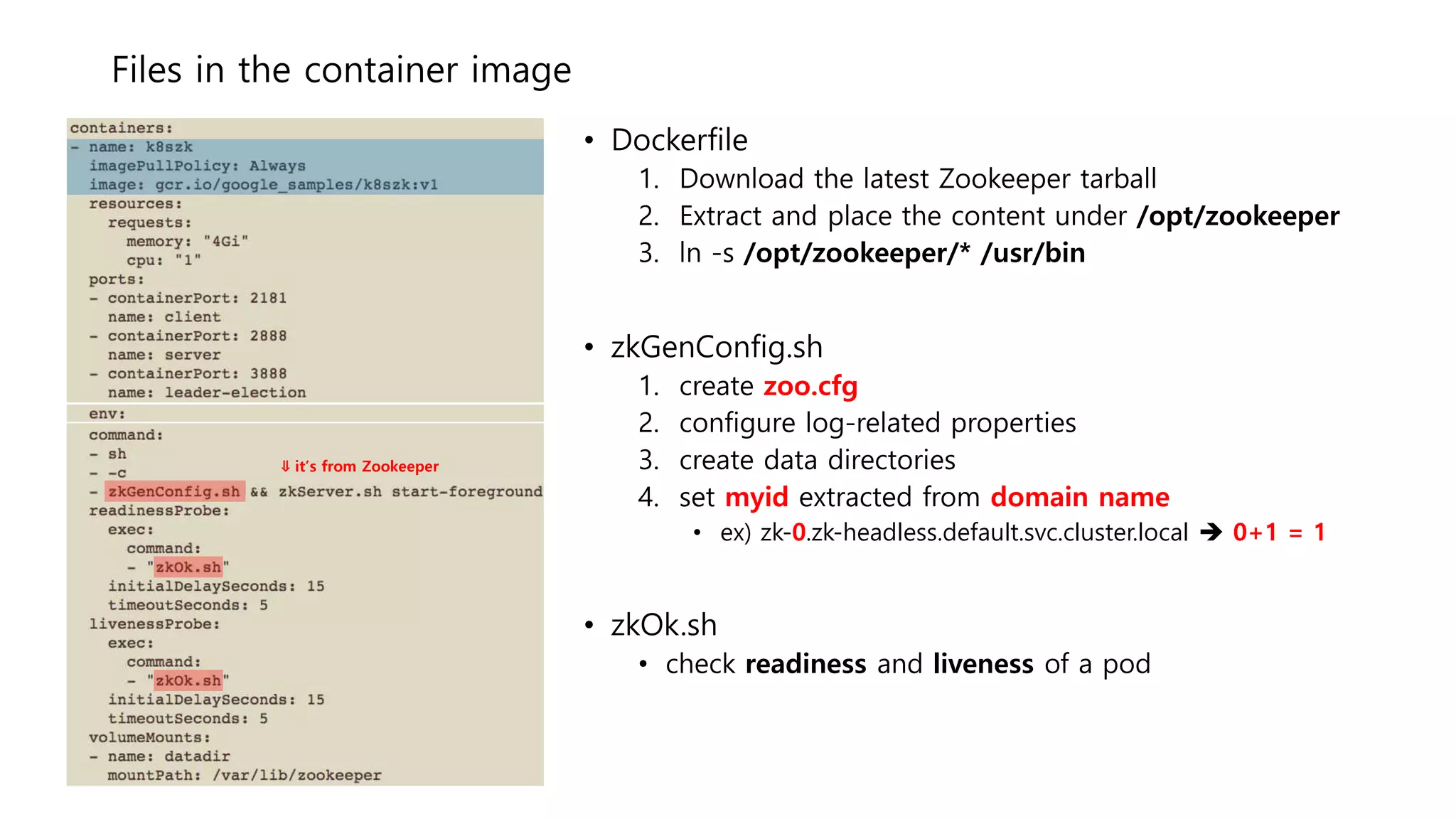

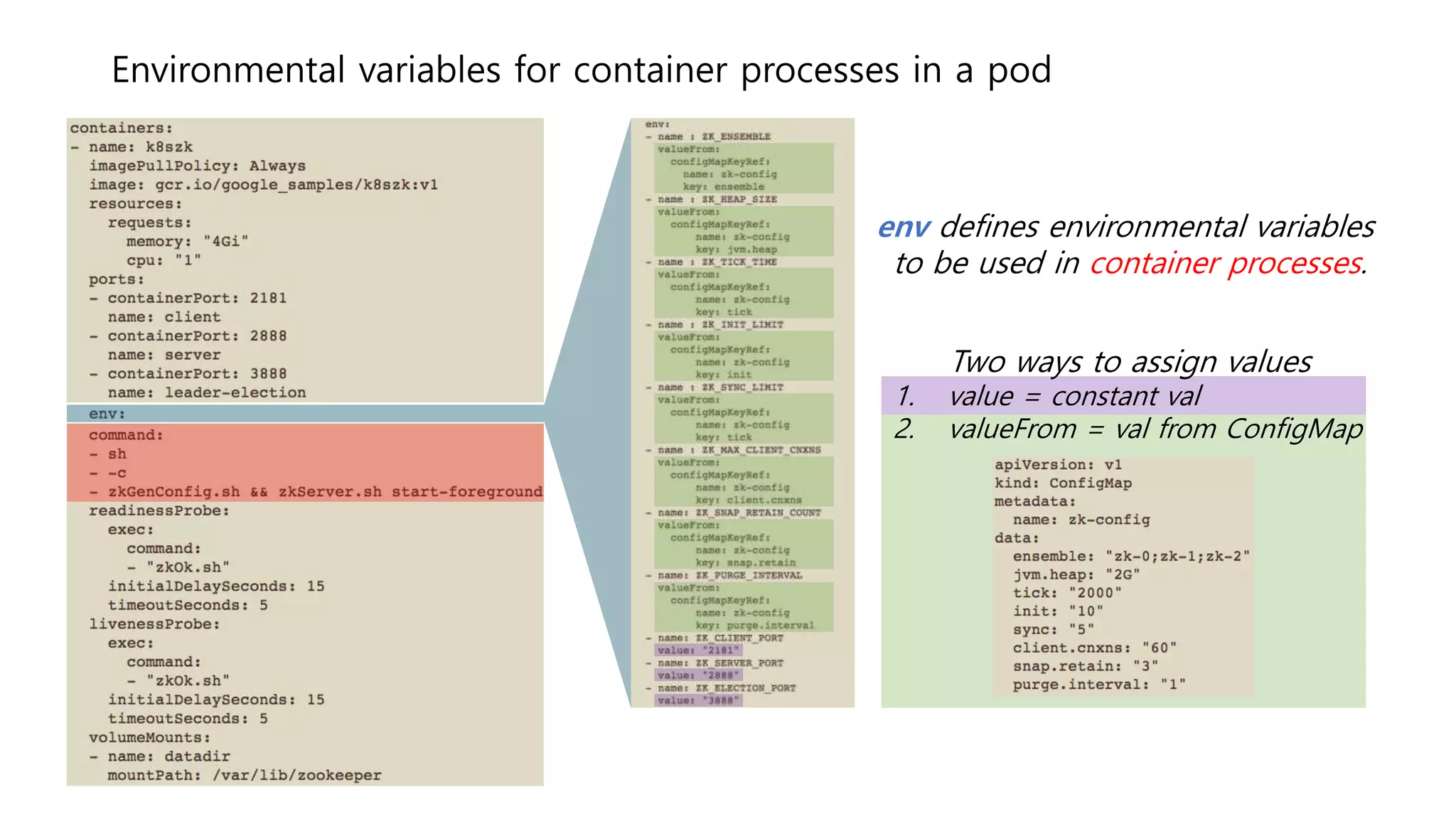

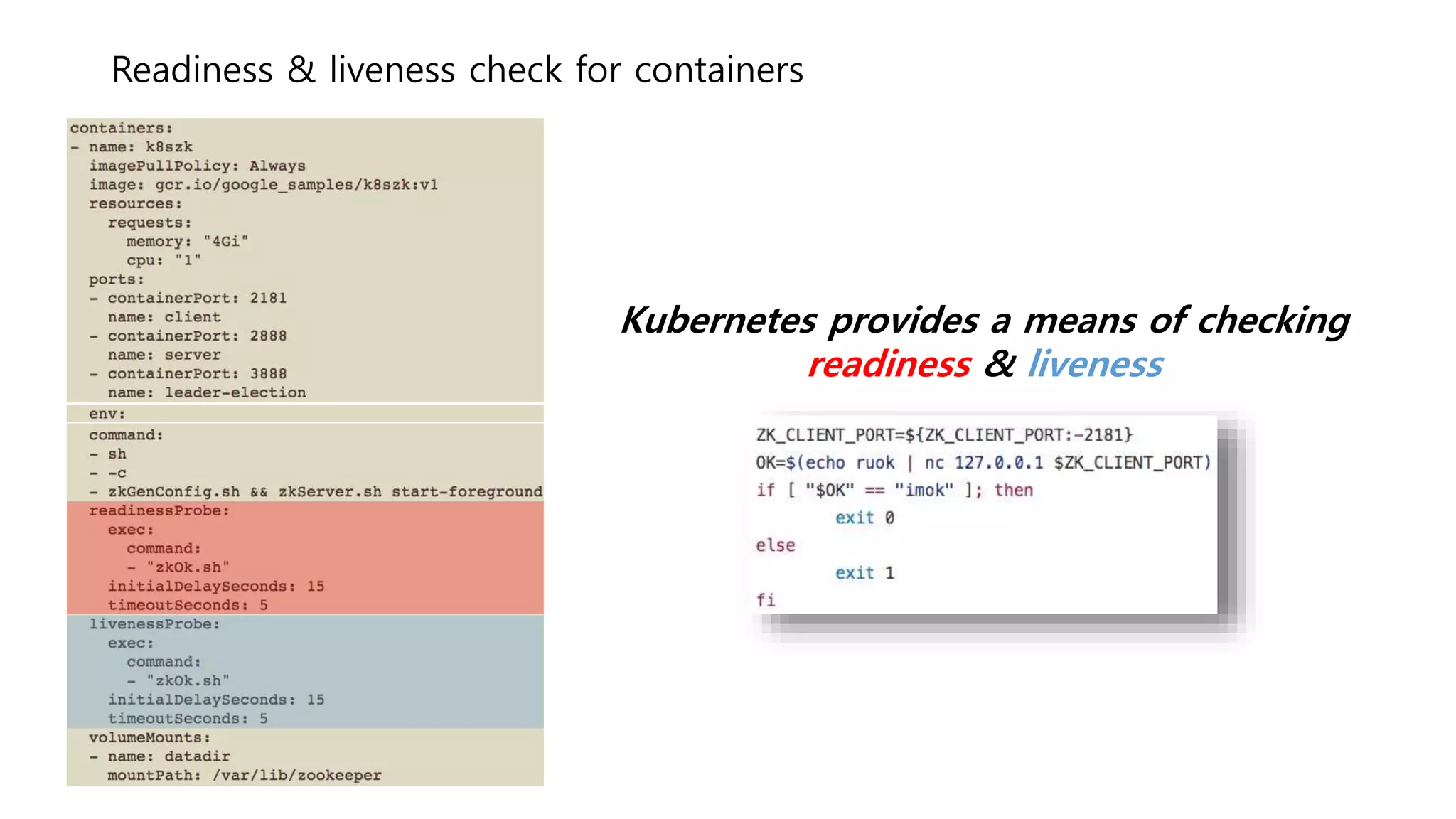

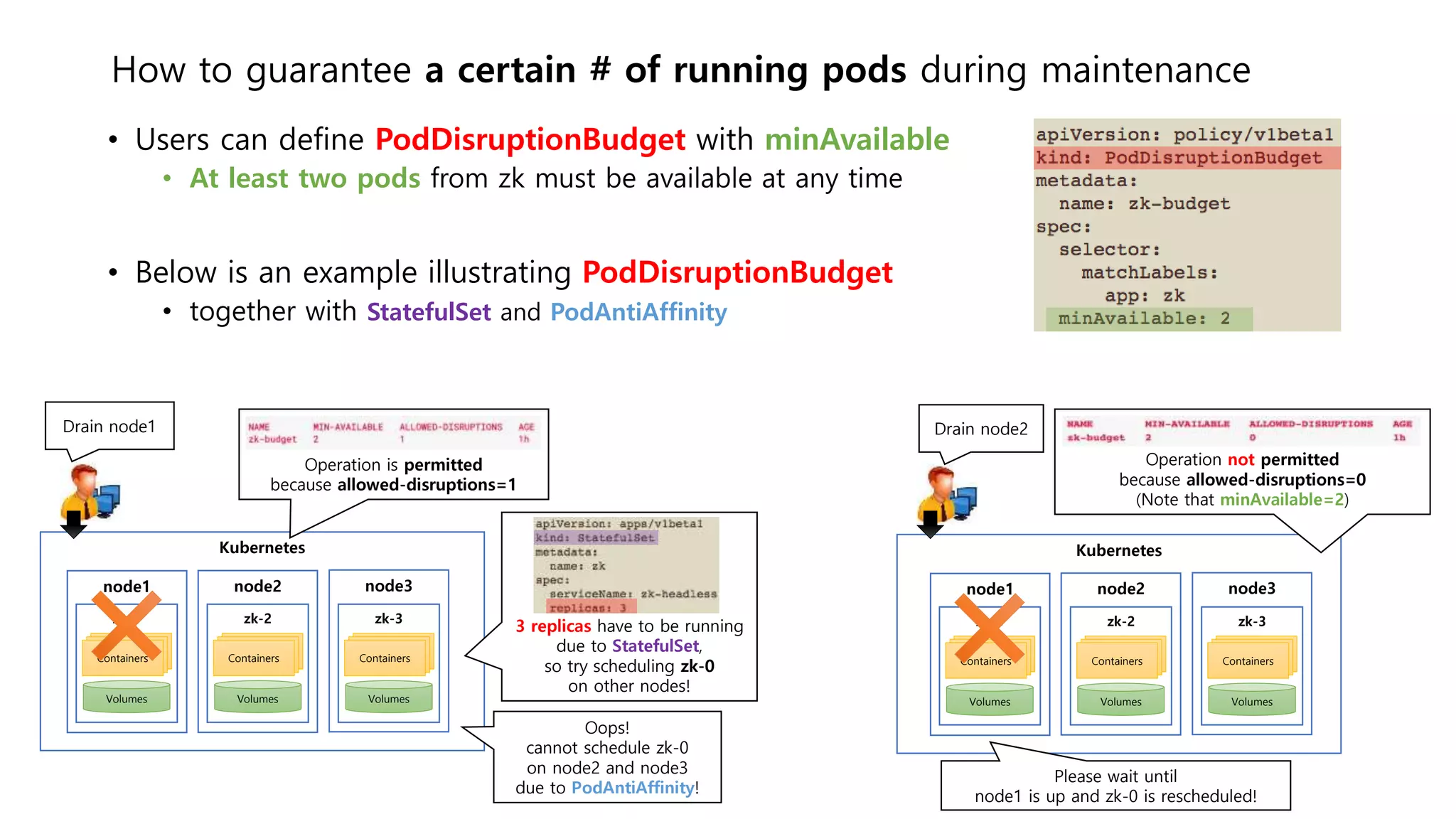

This document introduces Kubernetes and explains its use for container-based virtualization and orchestration. It describes key components such as Pods, StatefulSets, and Services, and shows how to configure and manage ZooKeeper and Kafka on Kubernetes. It also covers pod anti-affinity, scaling, and maintenance strategies for keeping applications highly available.
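
To make the pod anti-affinity idea concrete, here is a minimal sketch that builds a StatefulSet manifest for a ZooKeeper ensemble as a plain Python dictionary and prints it as JSON, which `kubectl apply -f` also accepts. The names `zk`, `zk-headless`, and the container image are placeholders chosen for illustration, not values taken from the slides; the anti-affinity rule asks the scheduler to place each replica on a different node.

```python
import json


def zookeeper_statefulset(replicas=3):
    """Build a StatefulSet manifest whose pods must land on distinct nodes."""
    labels = {"app": "zk"}  # hypothetical label, used by selector and anti-affinity
    anti_affinity = {
        "podAntiAffinity": {
            # Hard rule: do not schedule onto a node that already runs
            # a pod carrying the same app label.
            "requiredDuringSchedulingIgnoredDuringExecution": [
                {
                    "labelSelector": {
                        "matchExpressions": [
                            {"key": "app", "operator": "In", "values": ["zk"]}
                        ]
                    },
                    "topologyKey": "kubernetes.io/hostname",
                }
            ]
        }
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": "zk"},
        "spec": {
            "serviceName": "zk-headless",  # assumed headless Service name
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "affinity": anti_affinity,
                    "containers": [
                        # Placeholder image; substitute the one you actually use.
                        {"name": "zookeeper", "image": "example/zookeeper:latest"}
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(zookeeper_statefulset(), indent=2))
```

With a required rule like this, scaling beyond the number of schedulable nodes leaves the extra pods pending, so the replica count and the node count need to be planned together.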