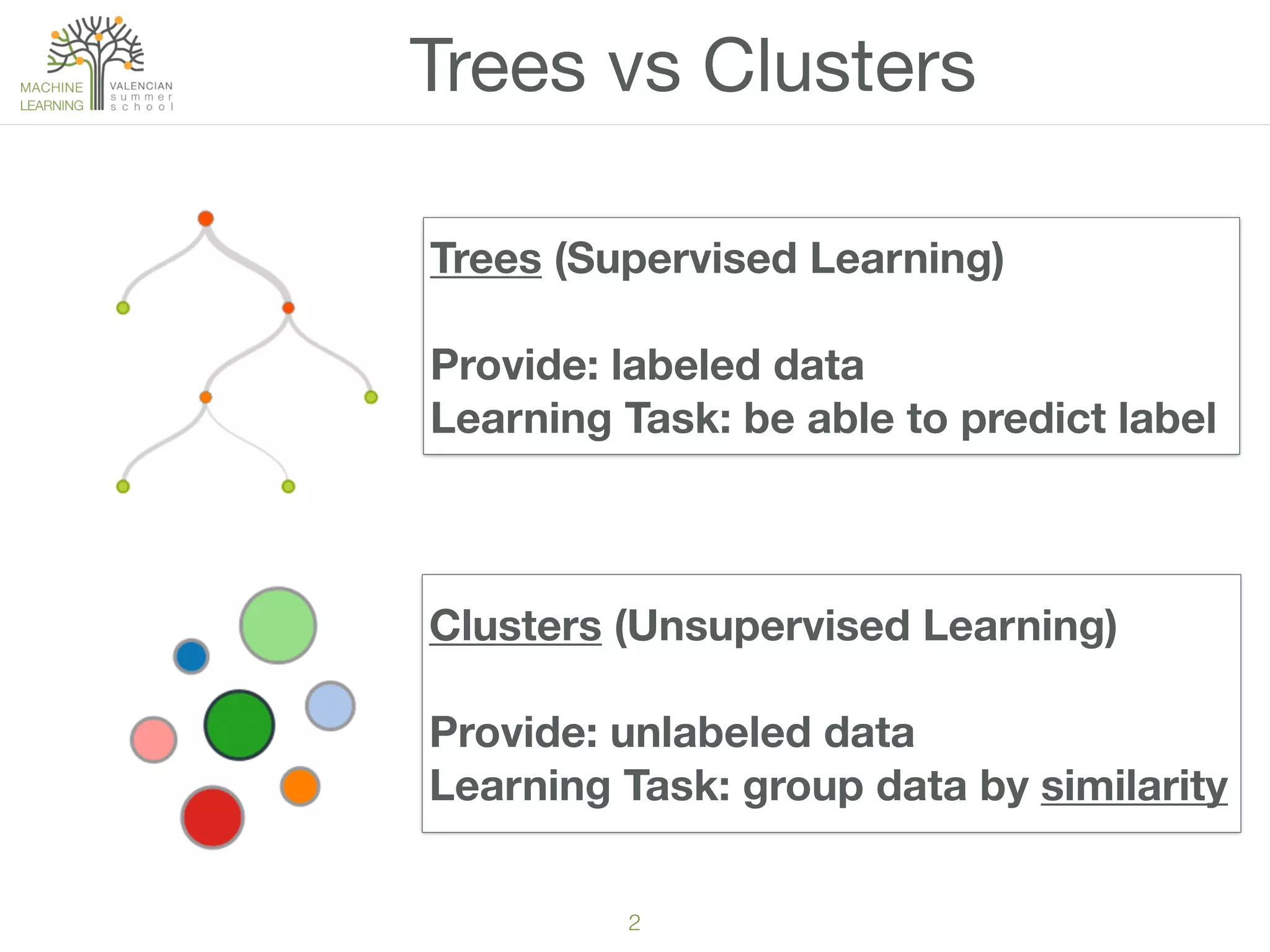

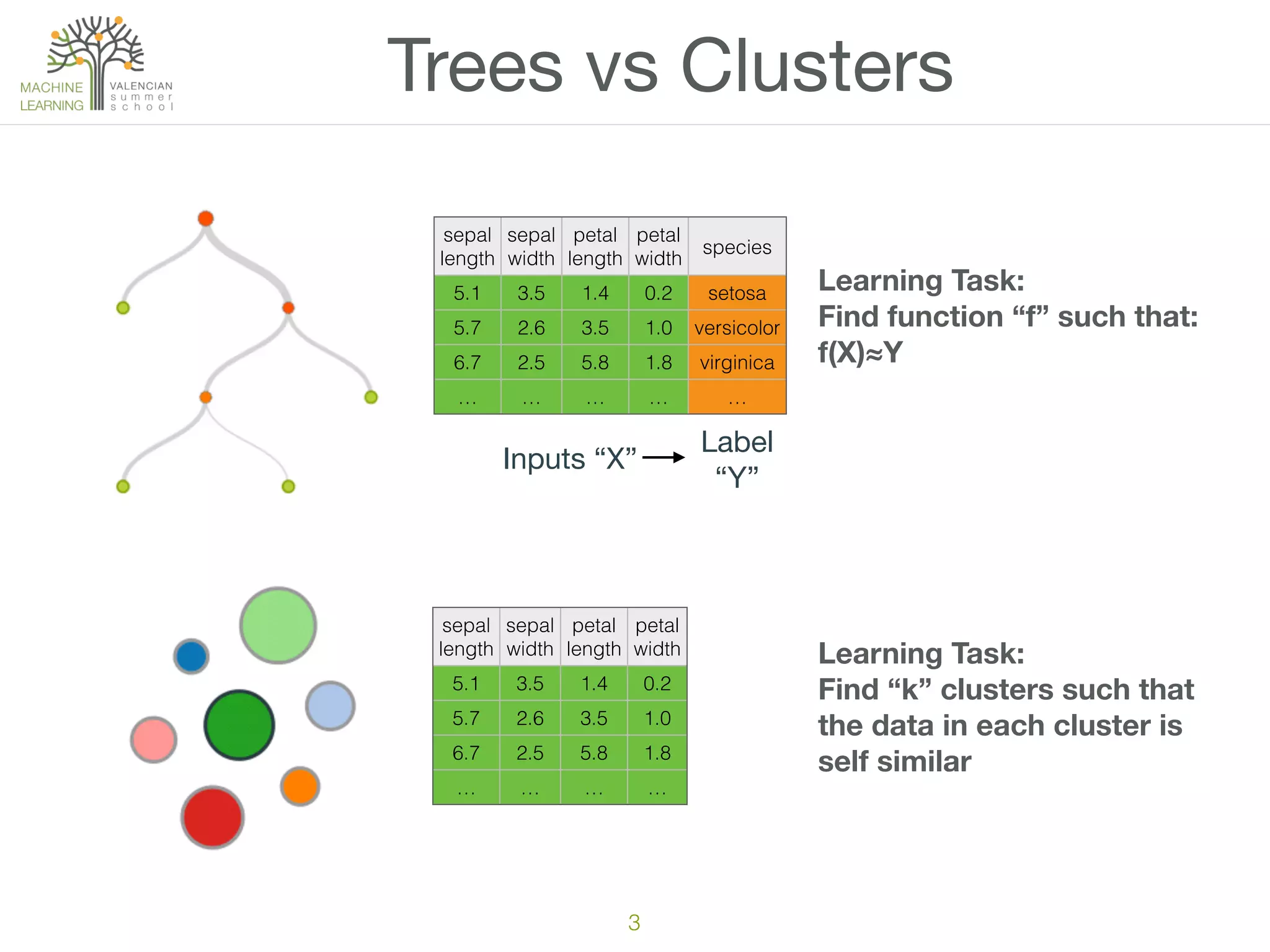

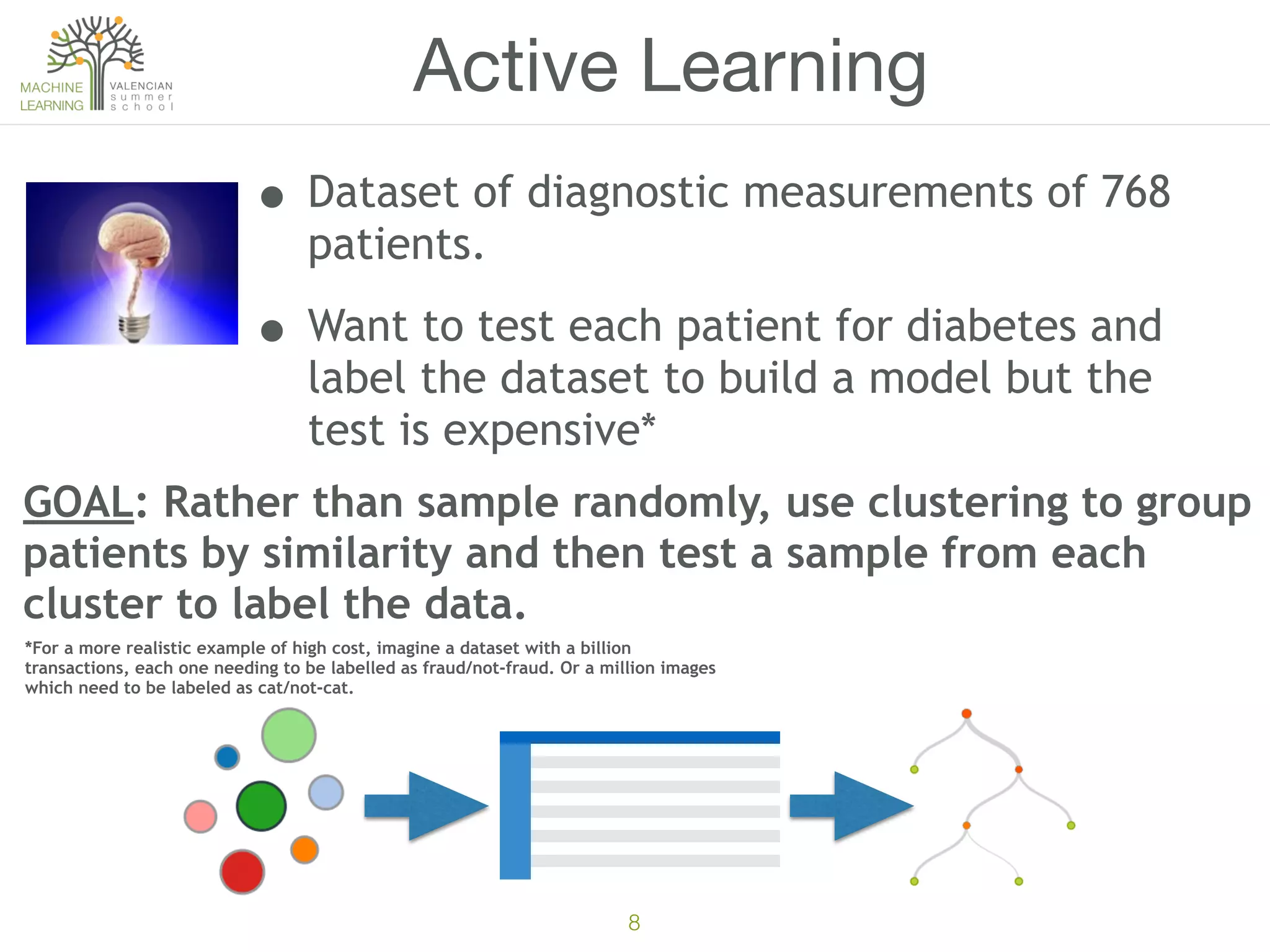

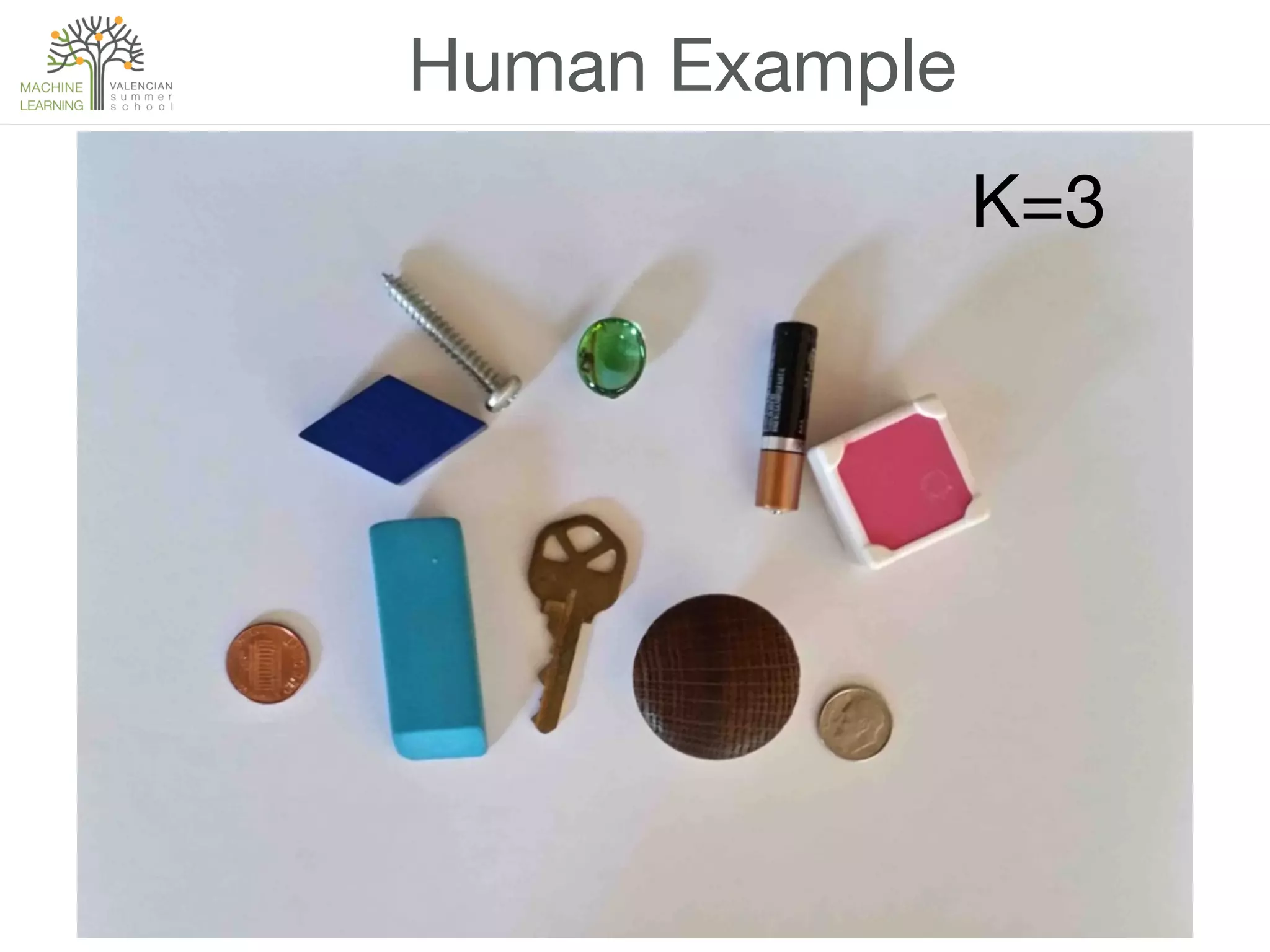

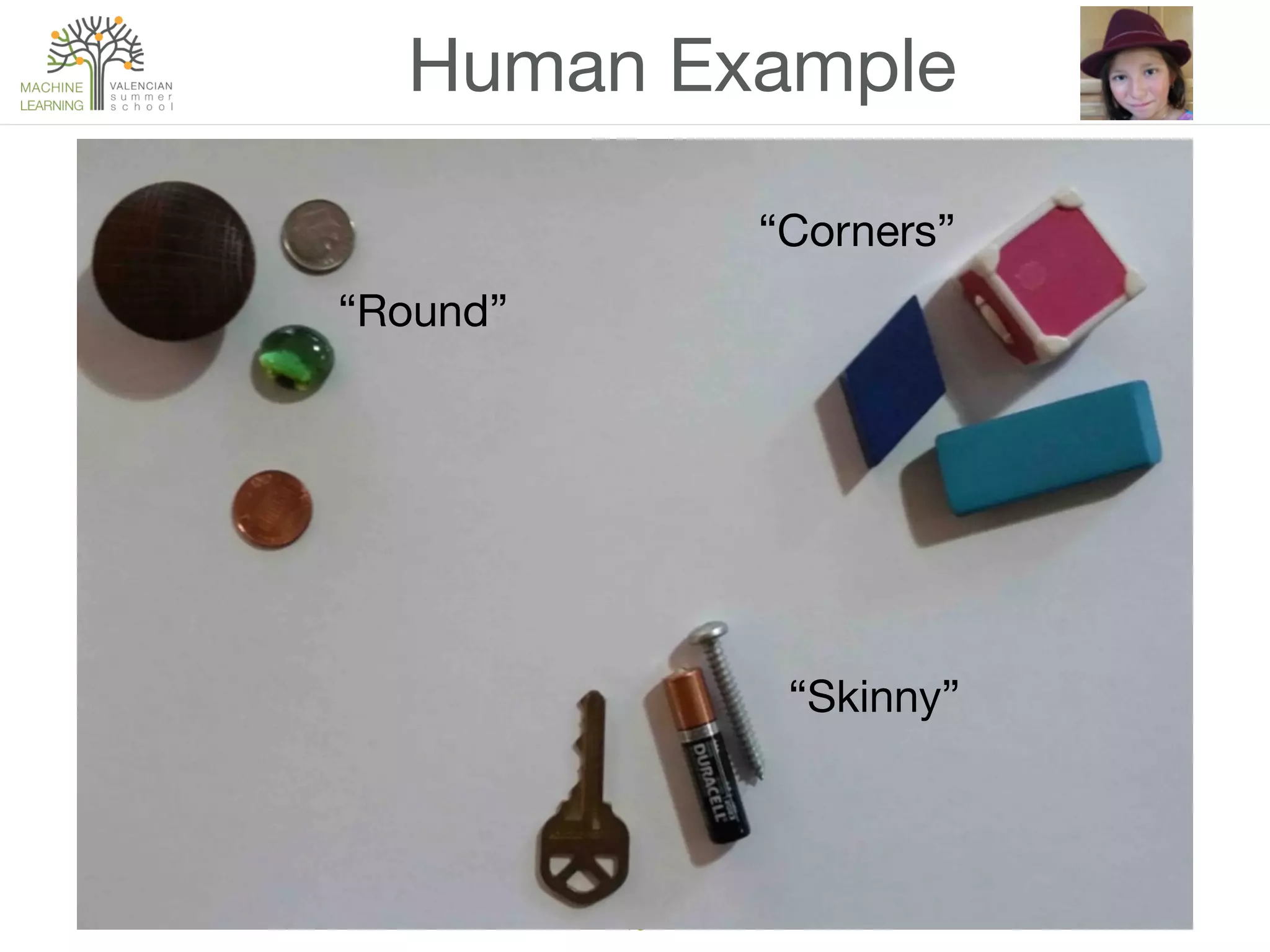

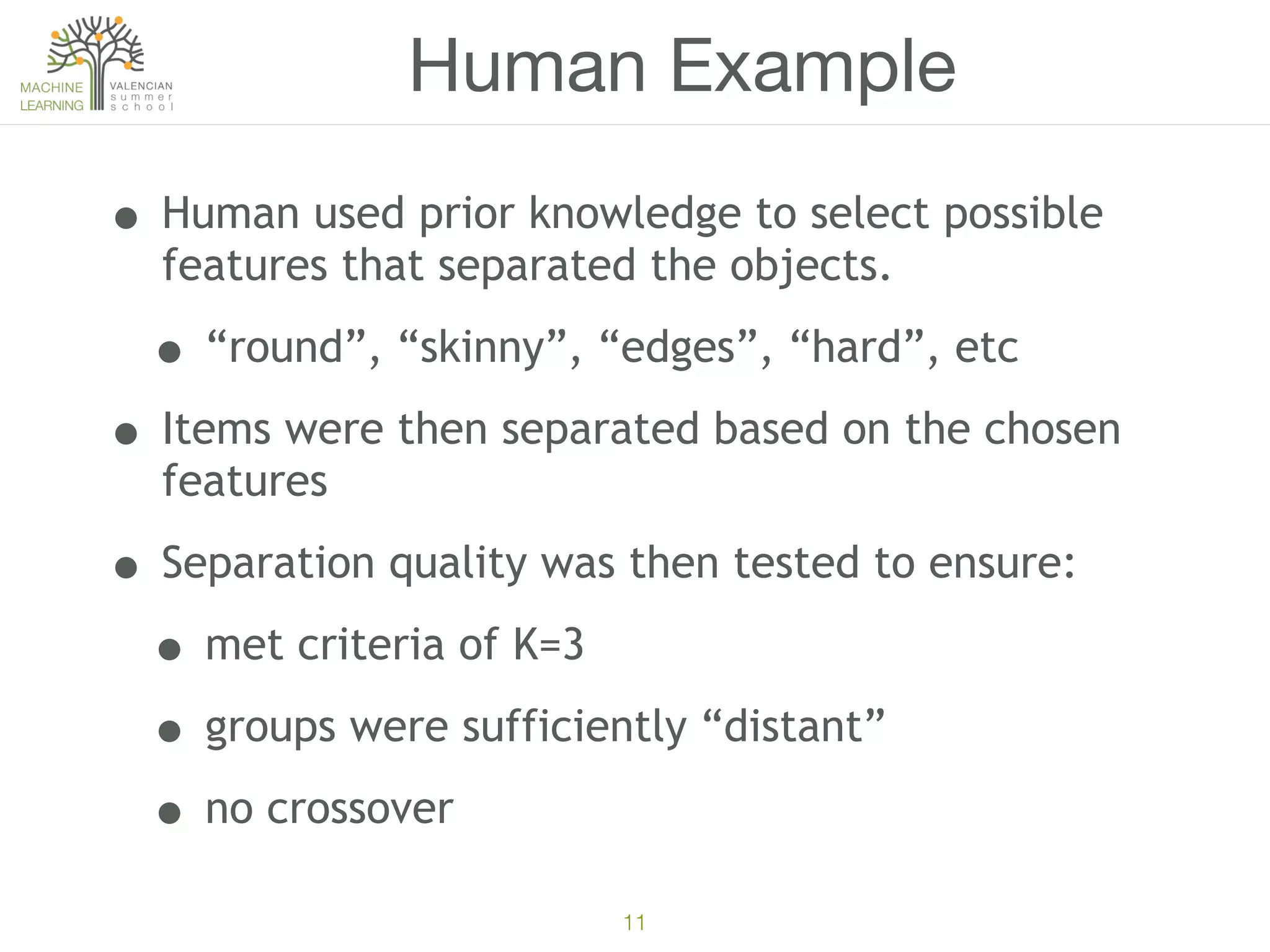

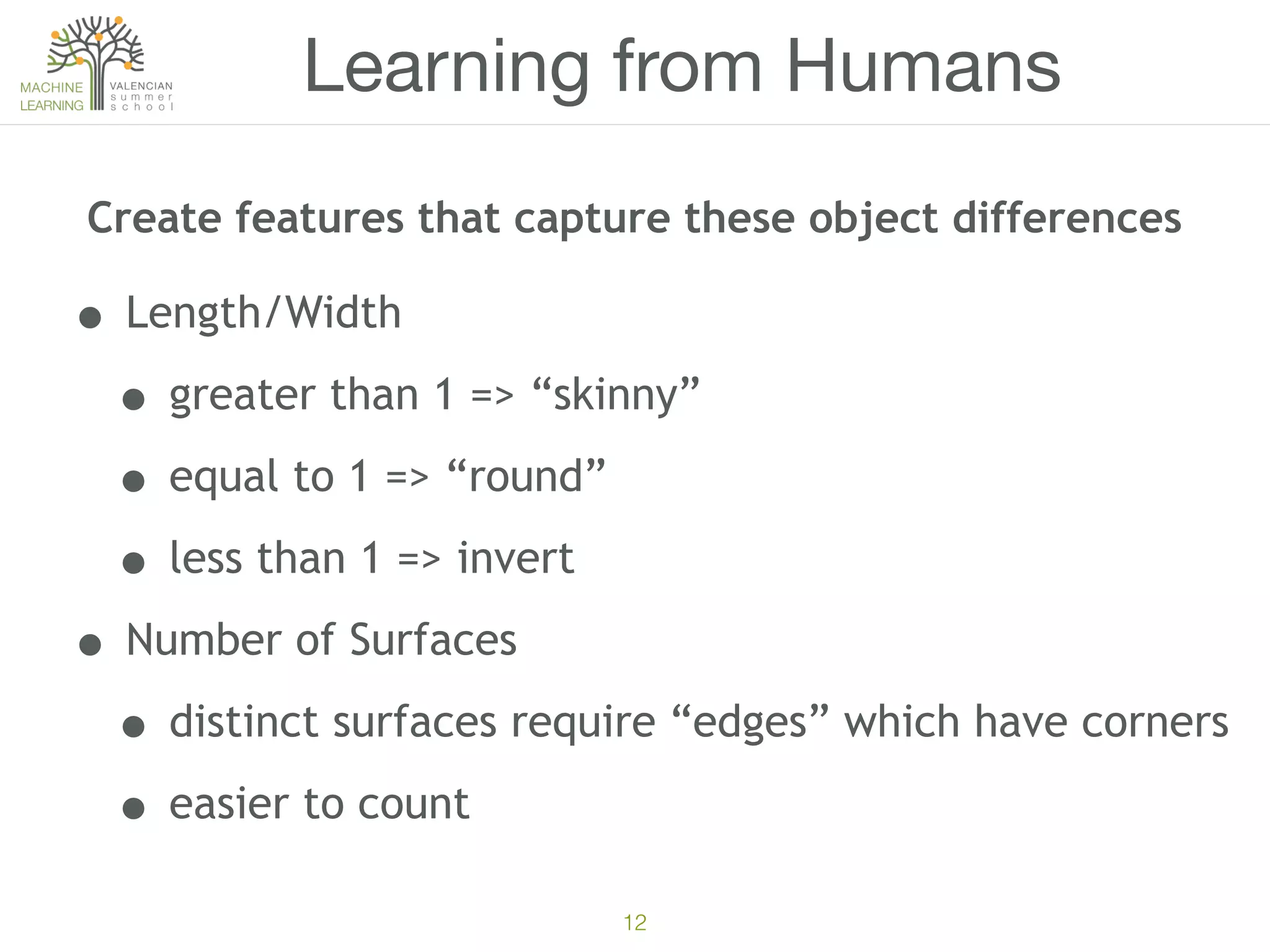

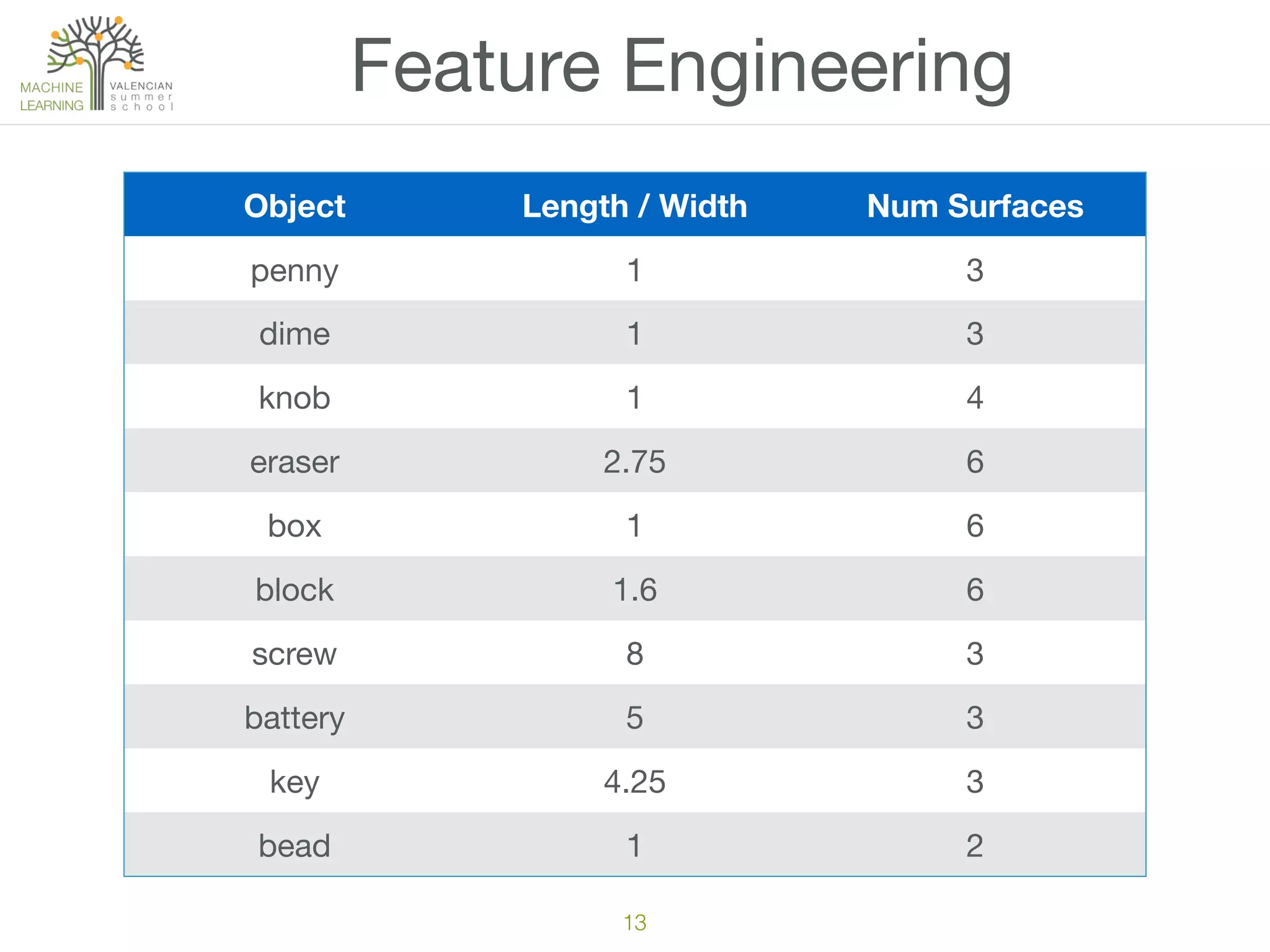

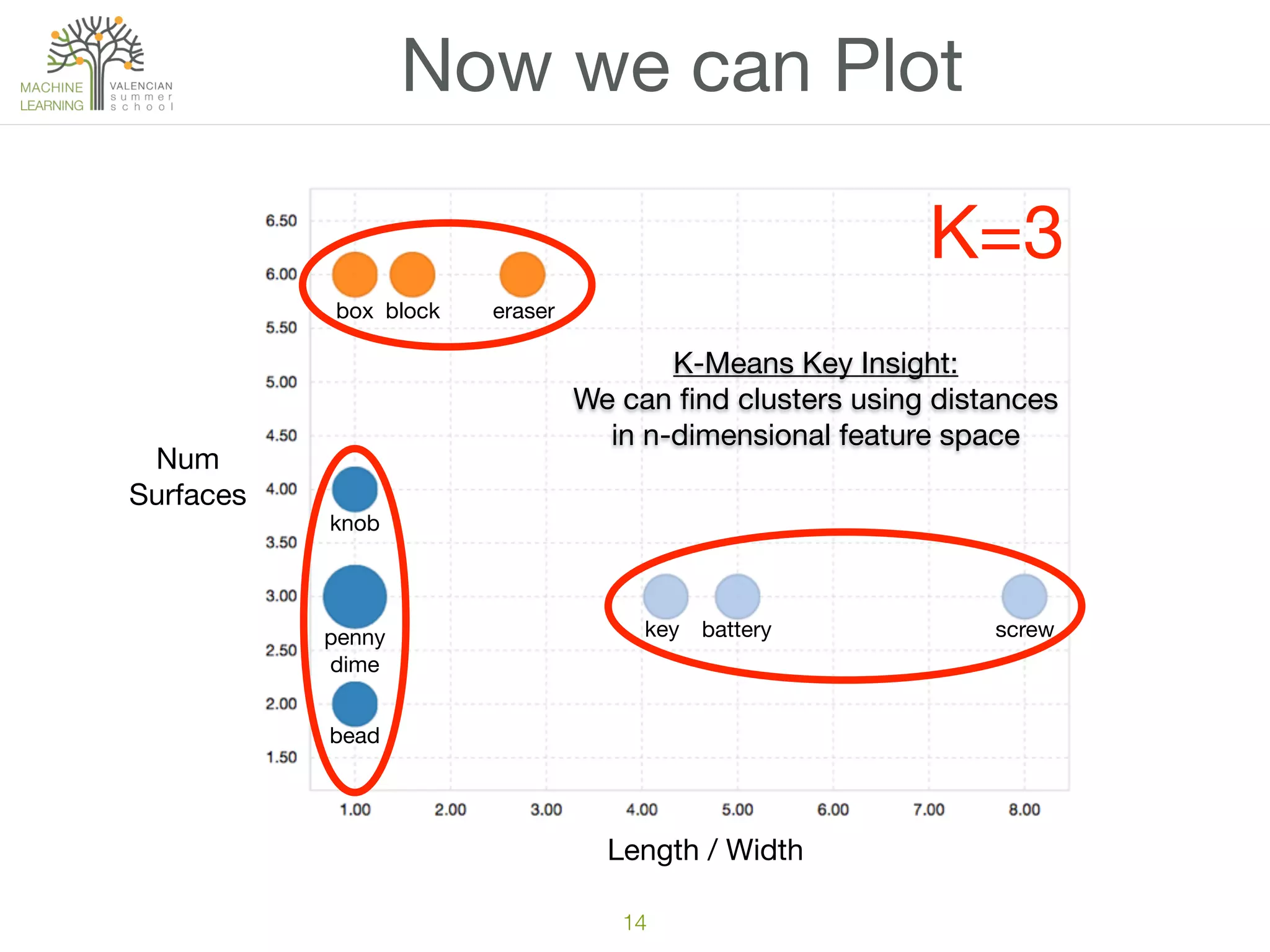

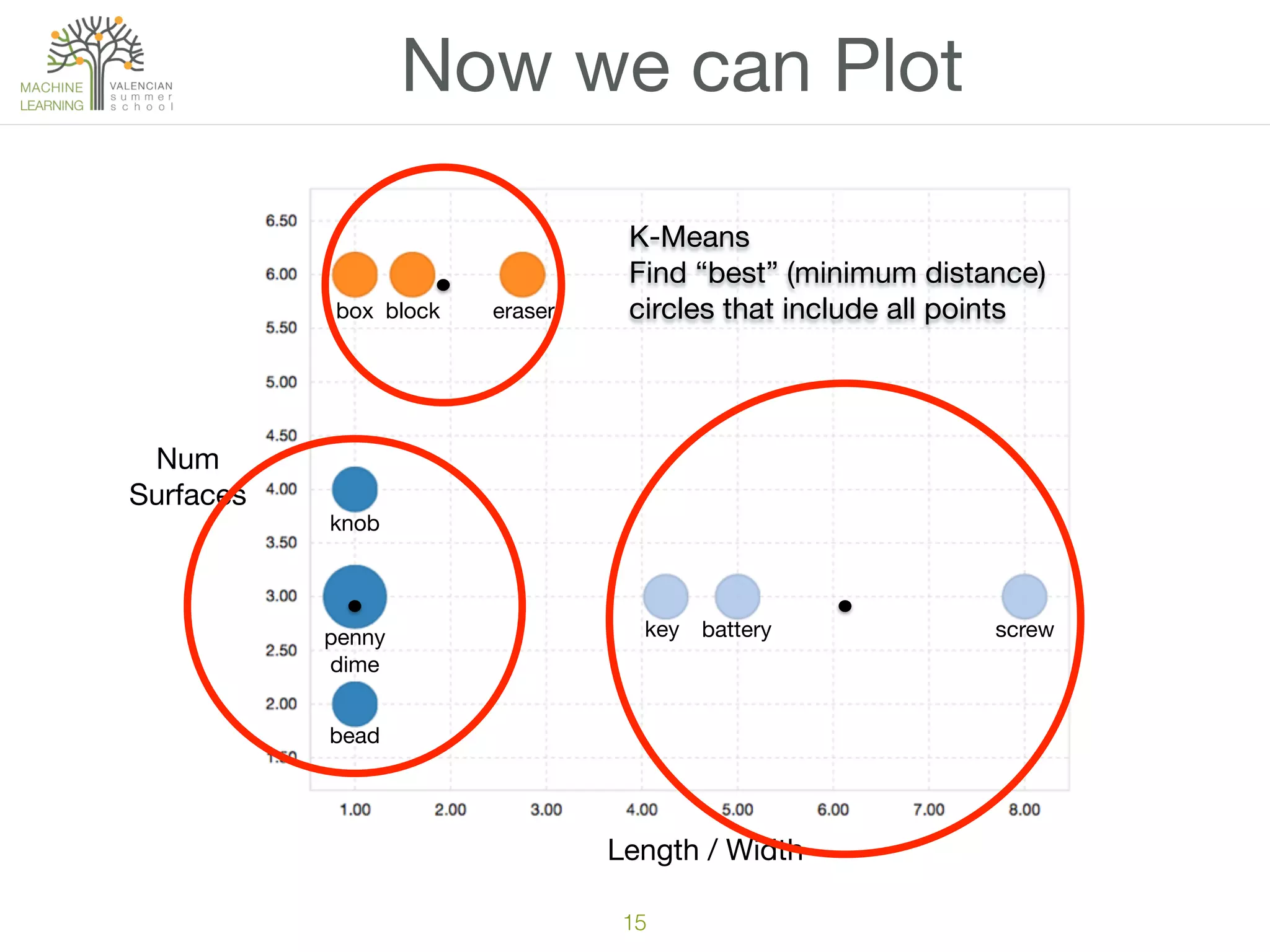

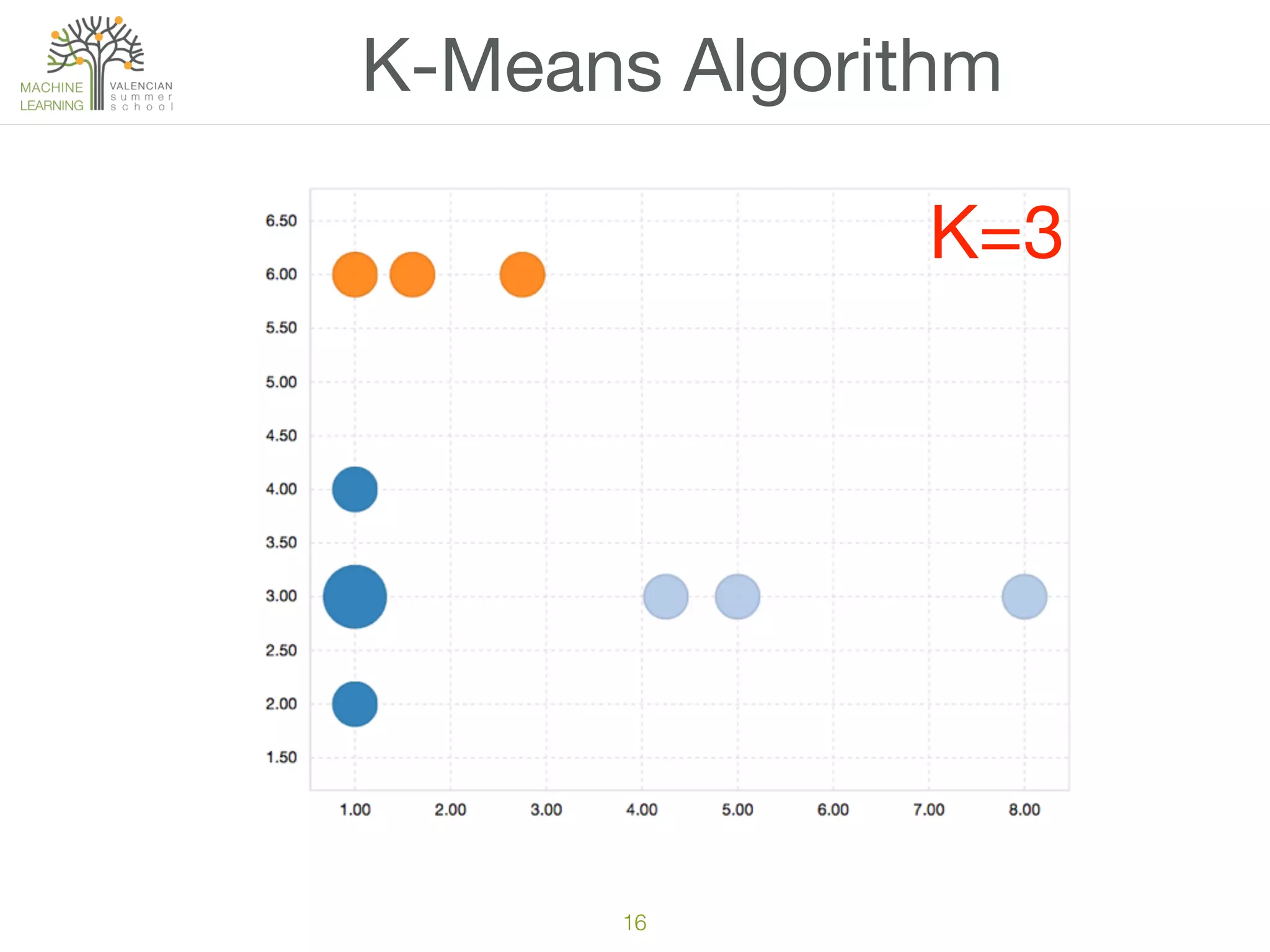

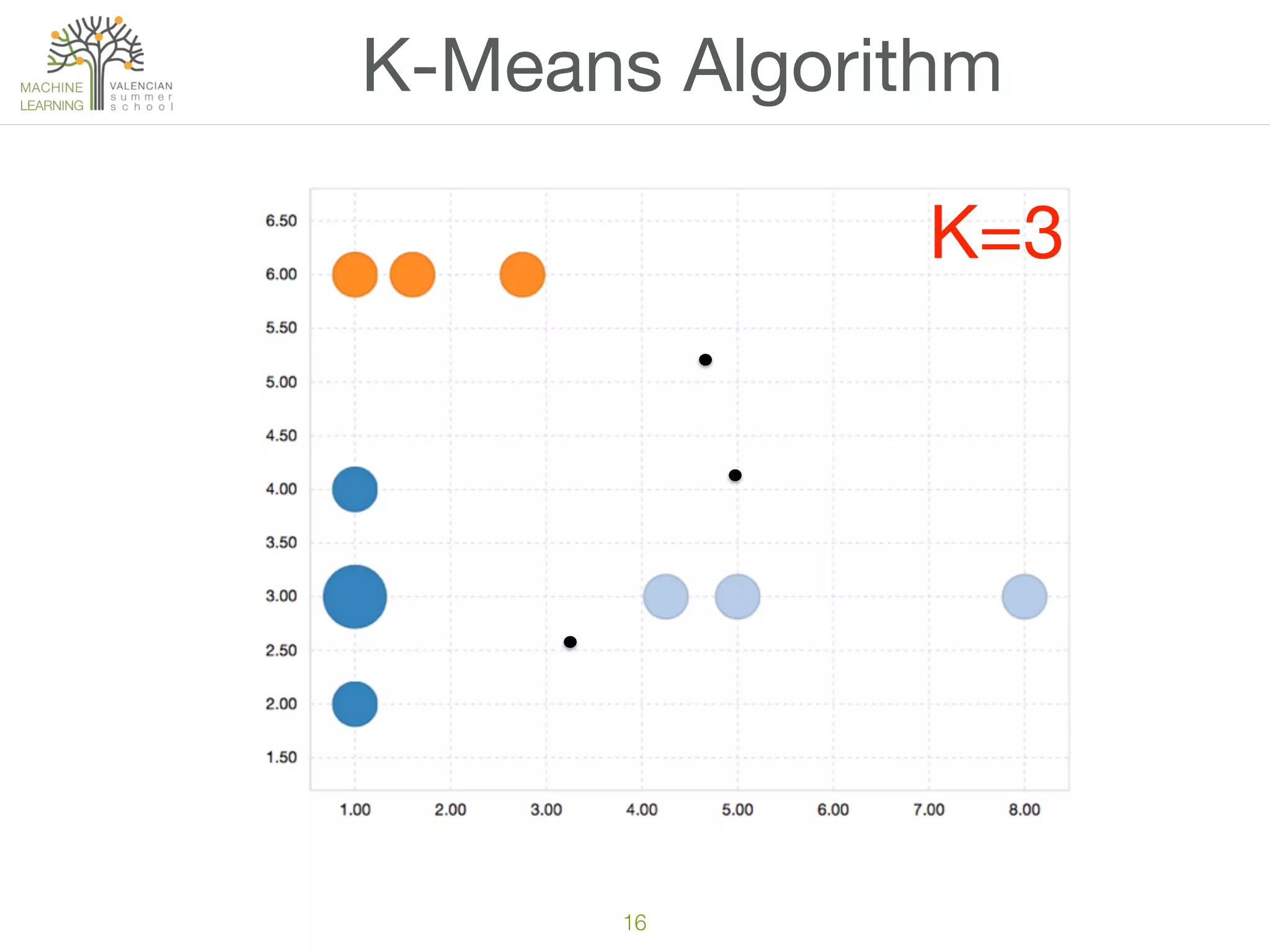

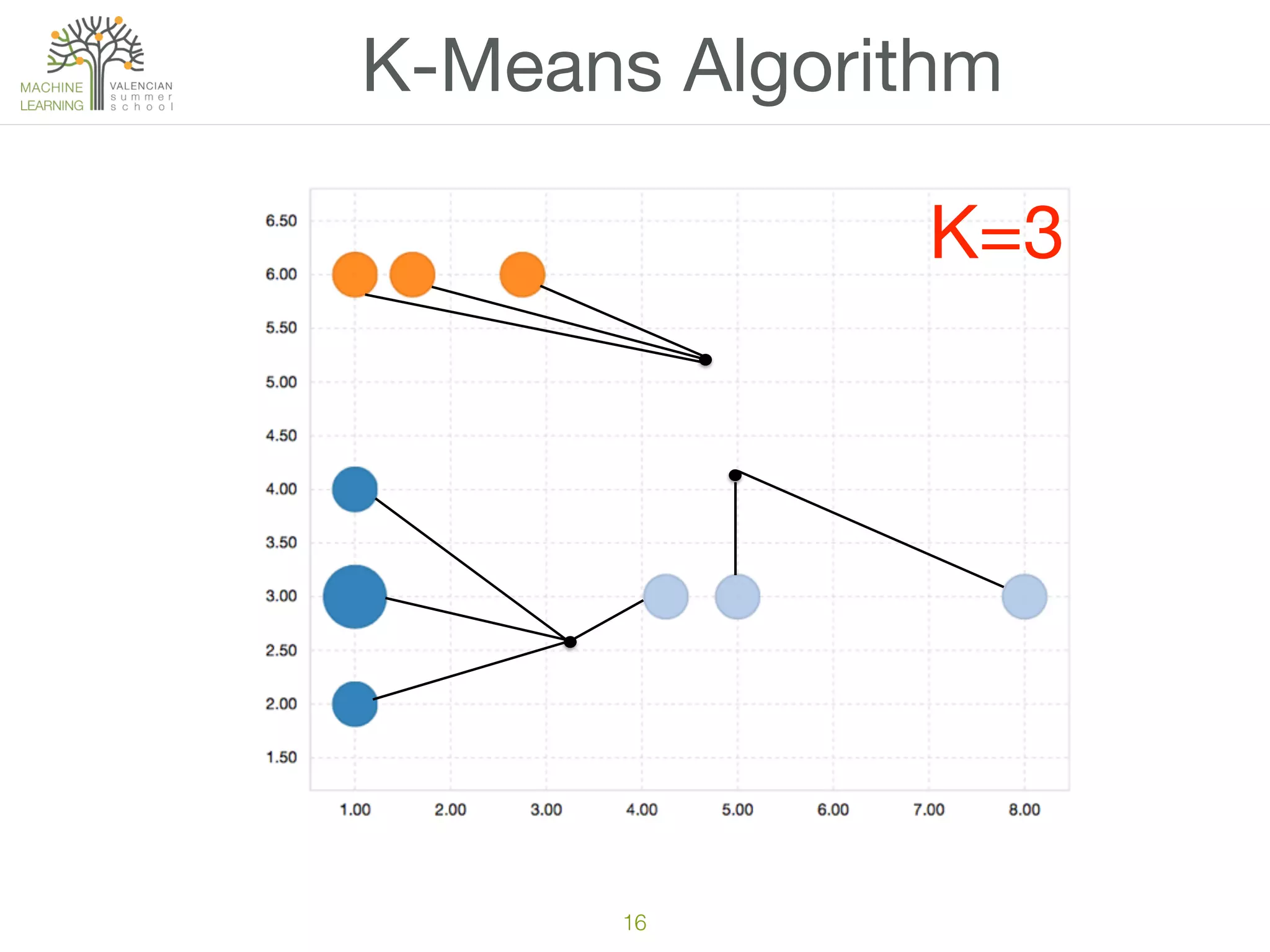

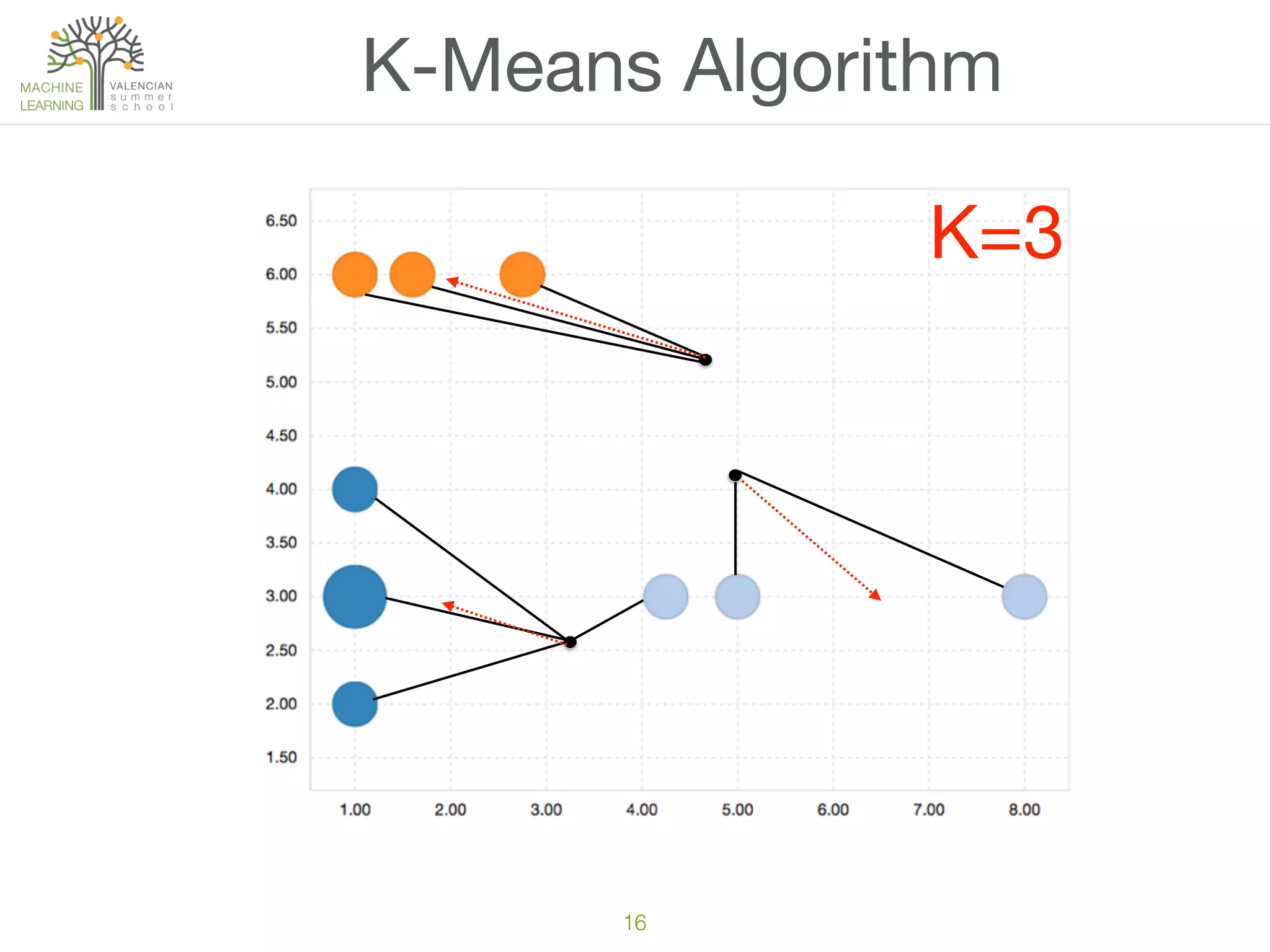

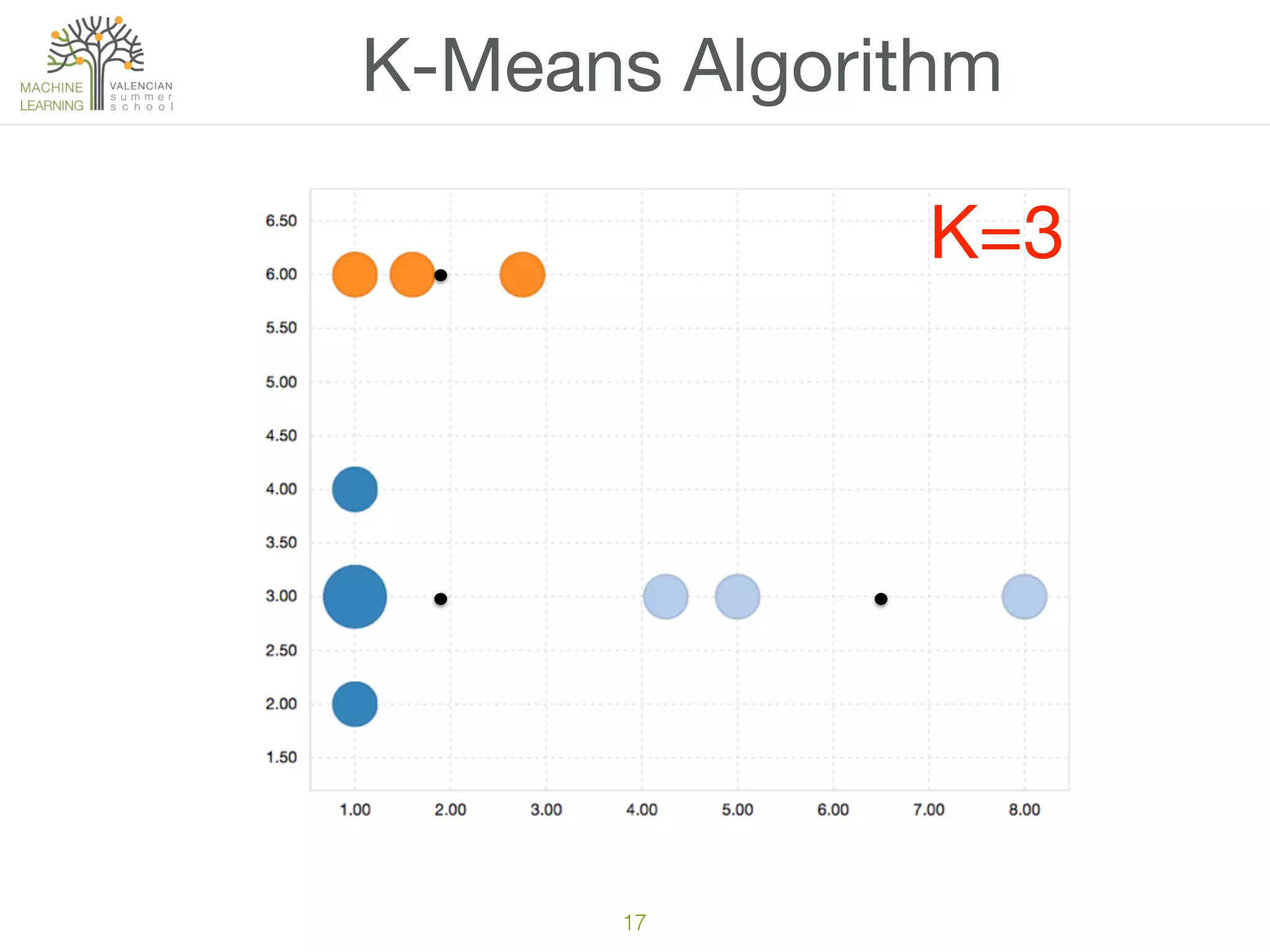

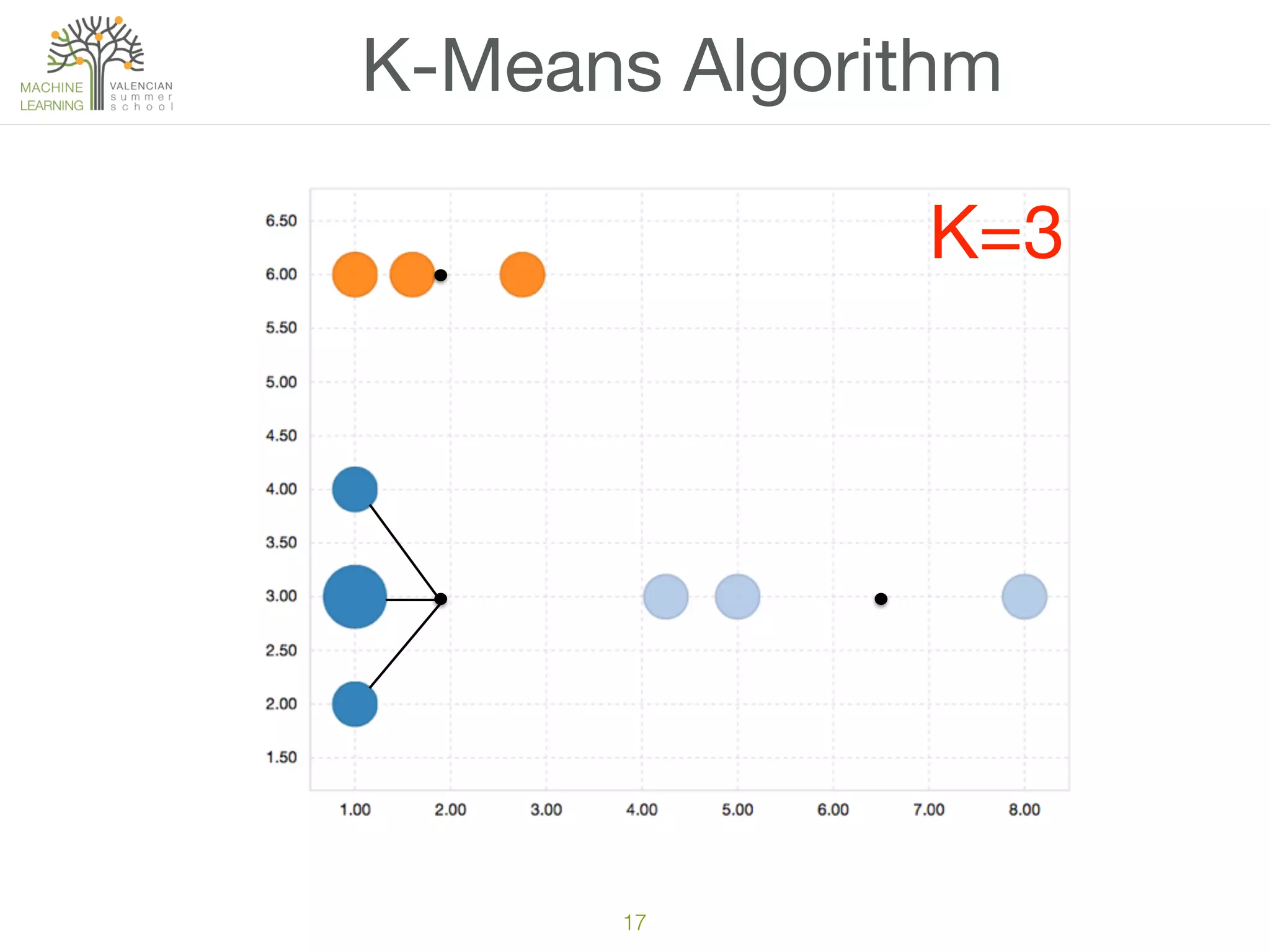

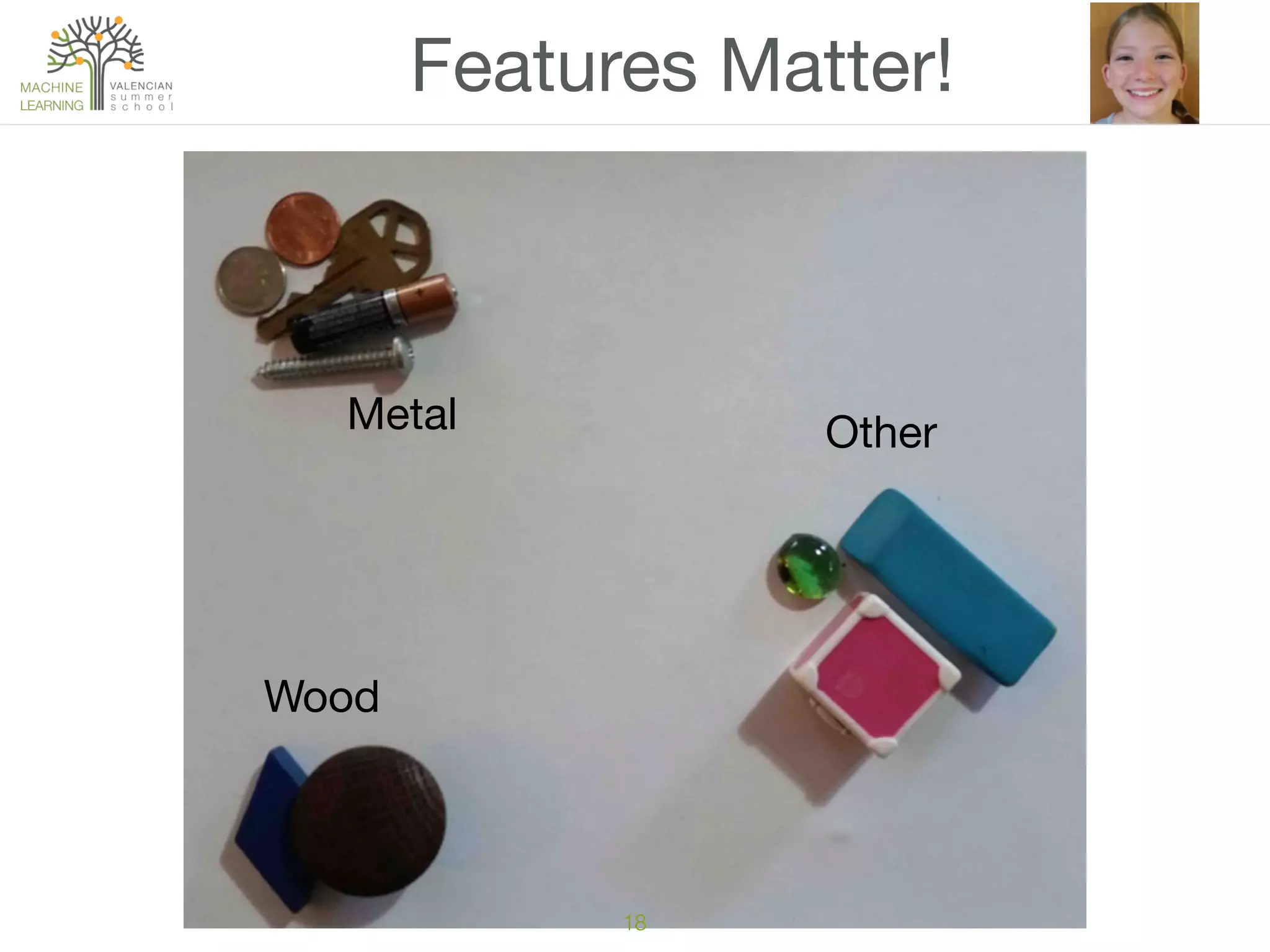

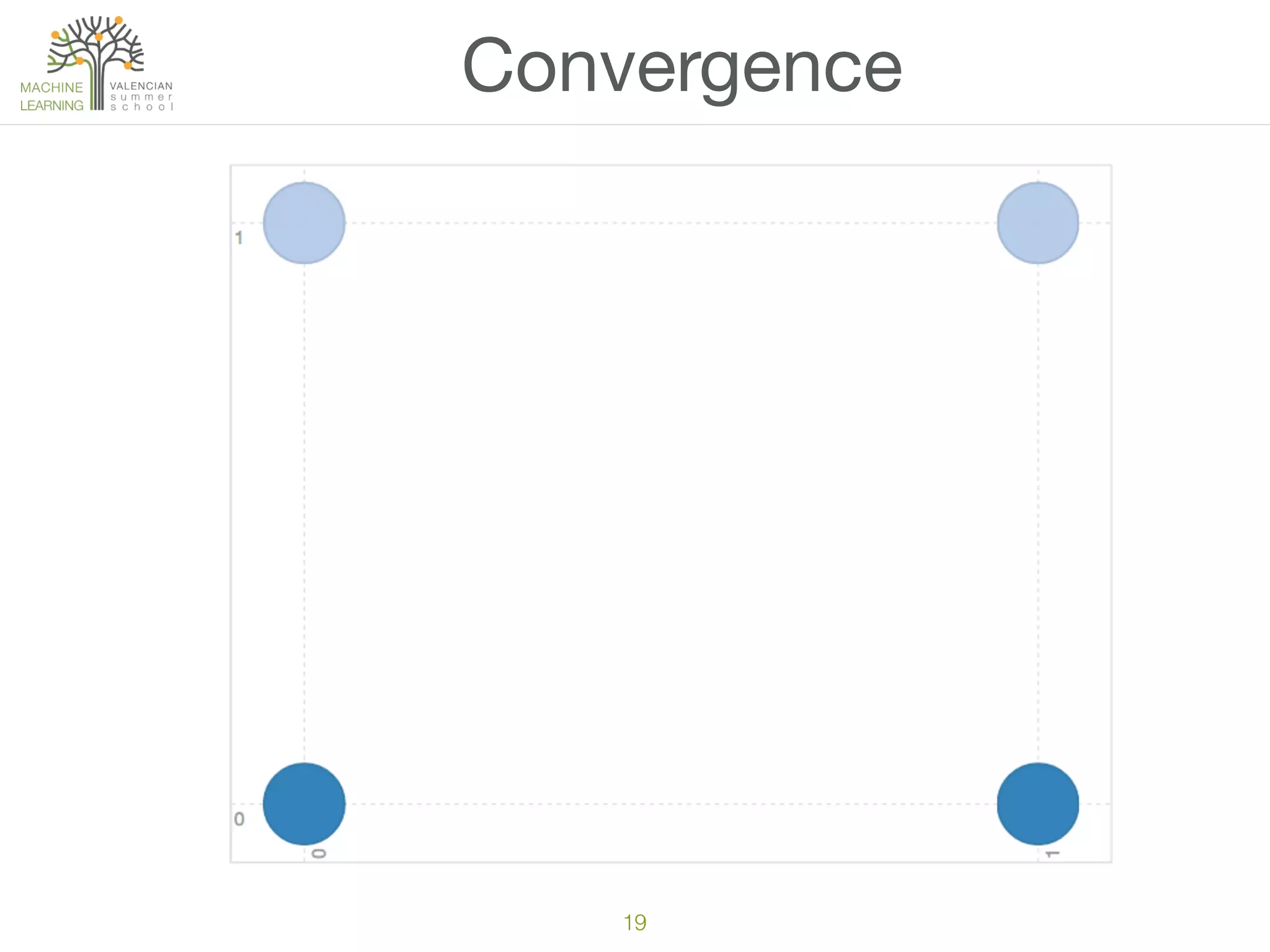

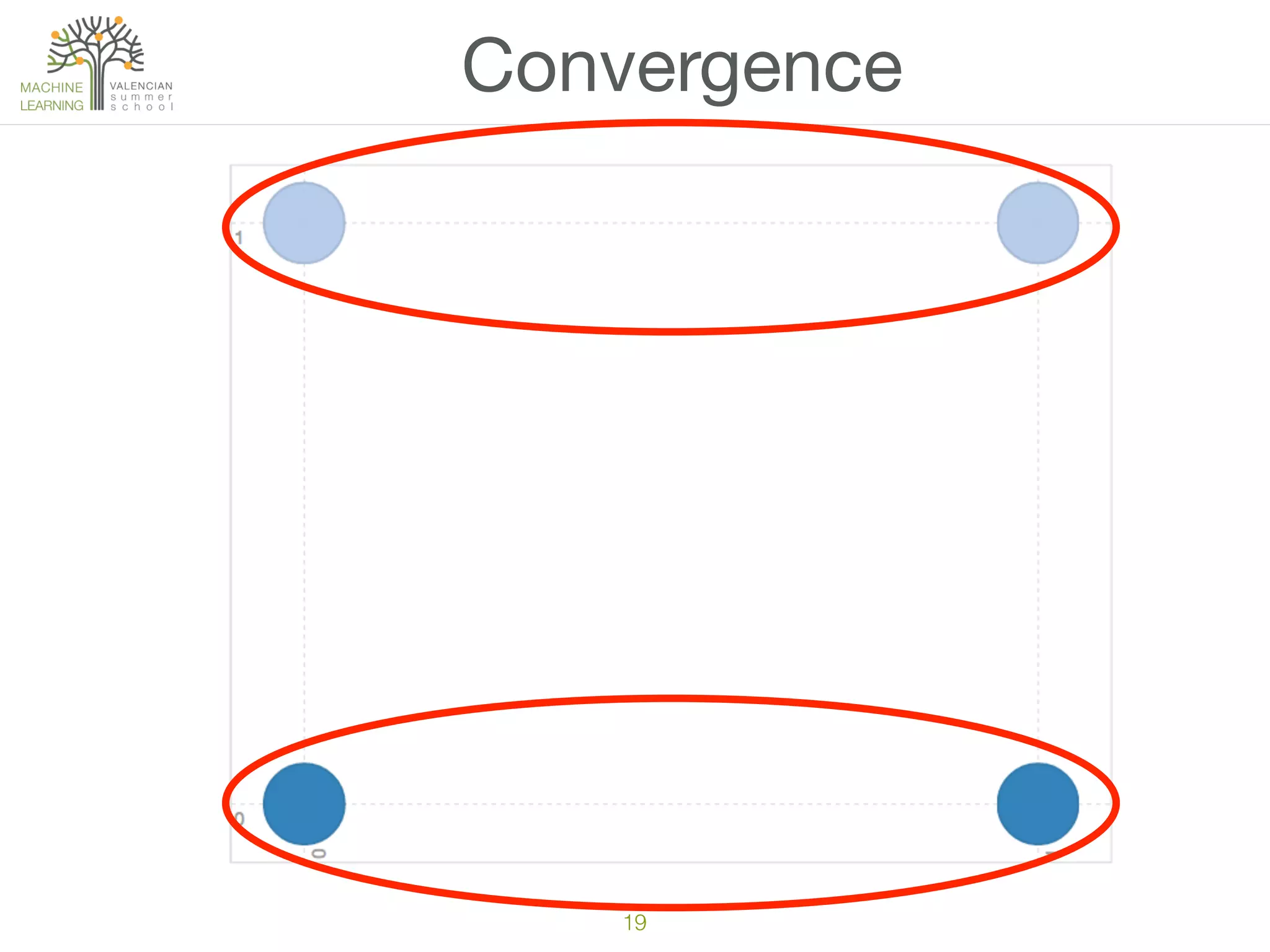

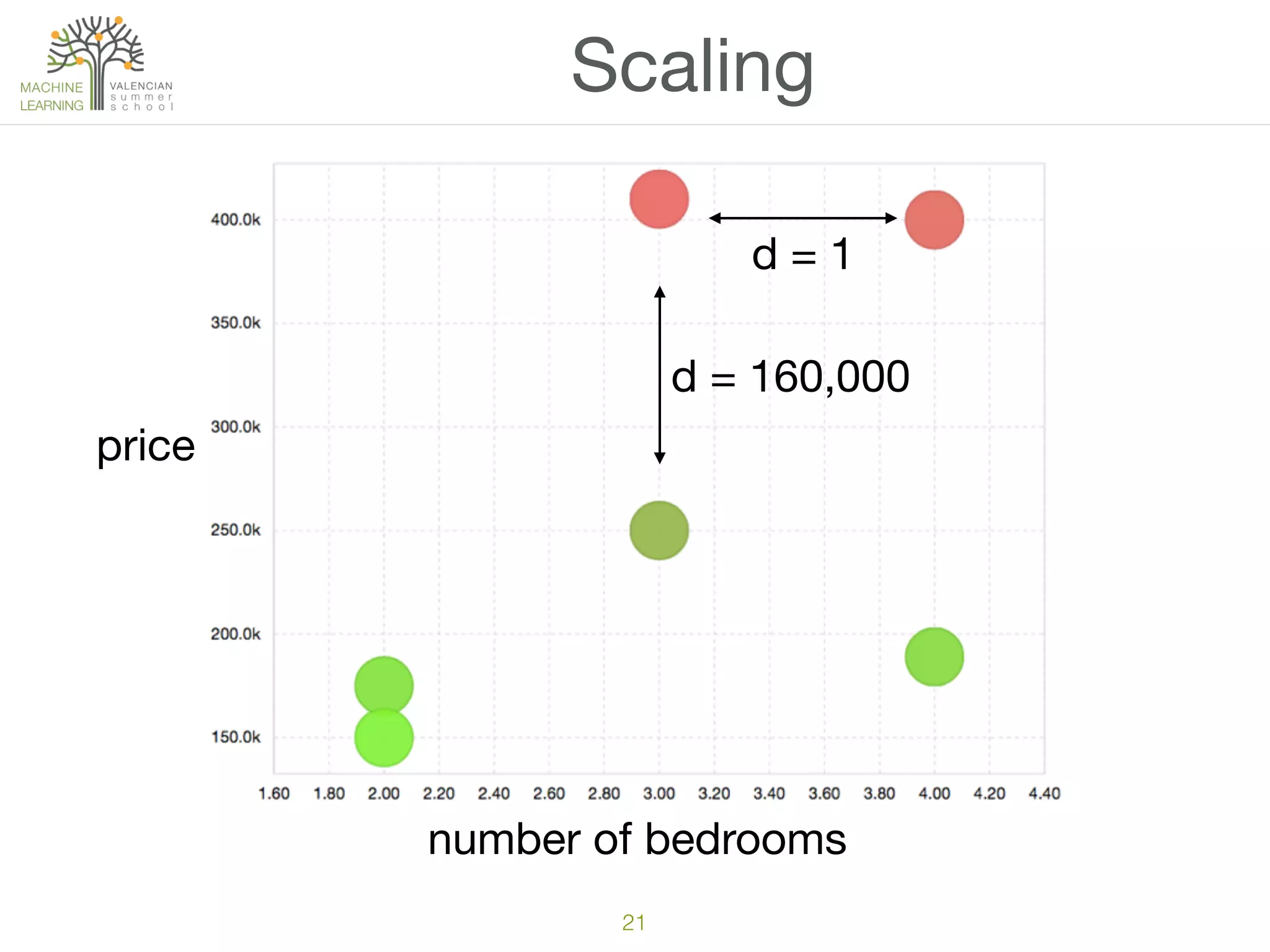

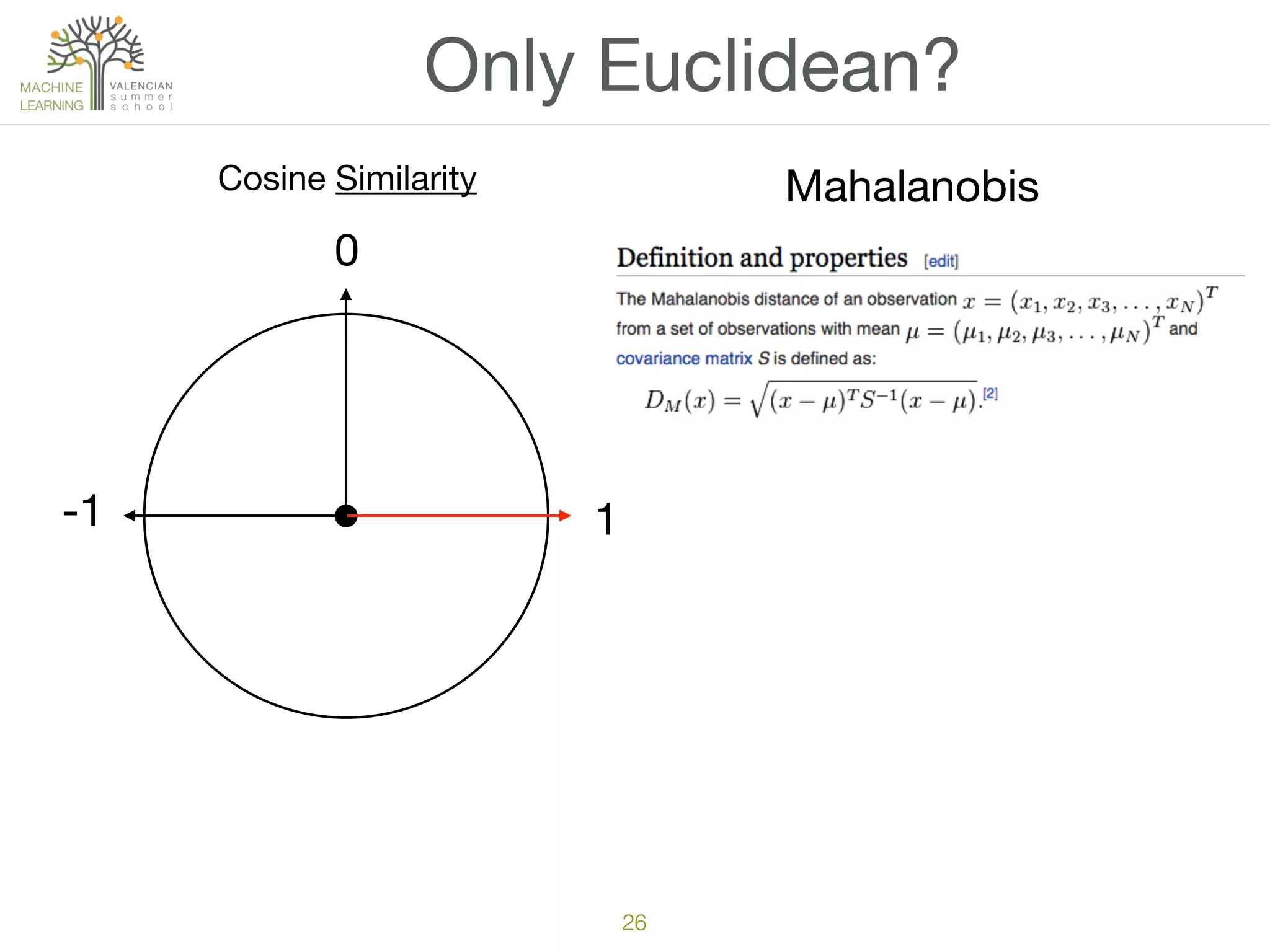

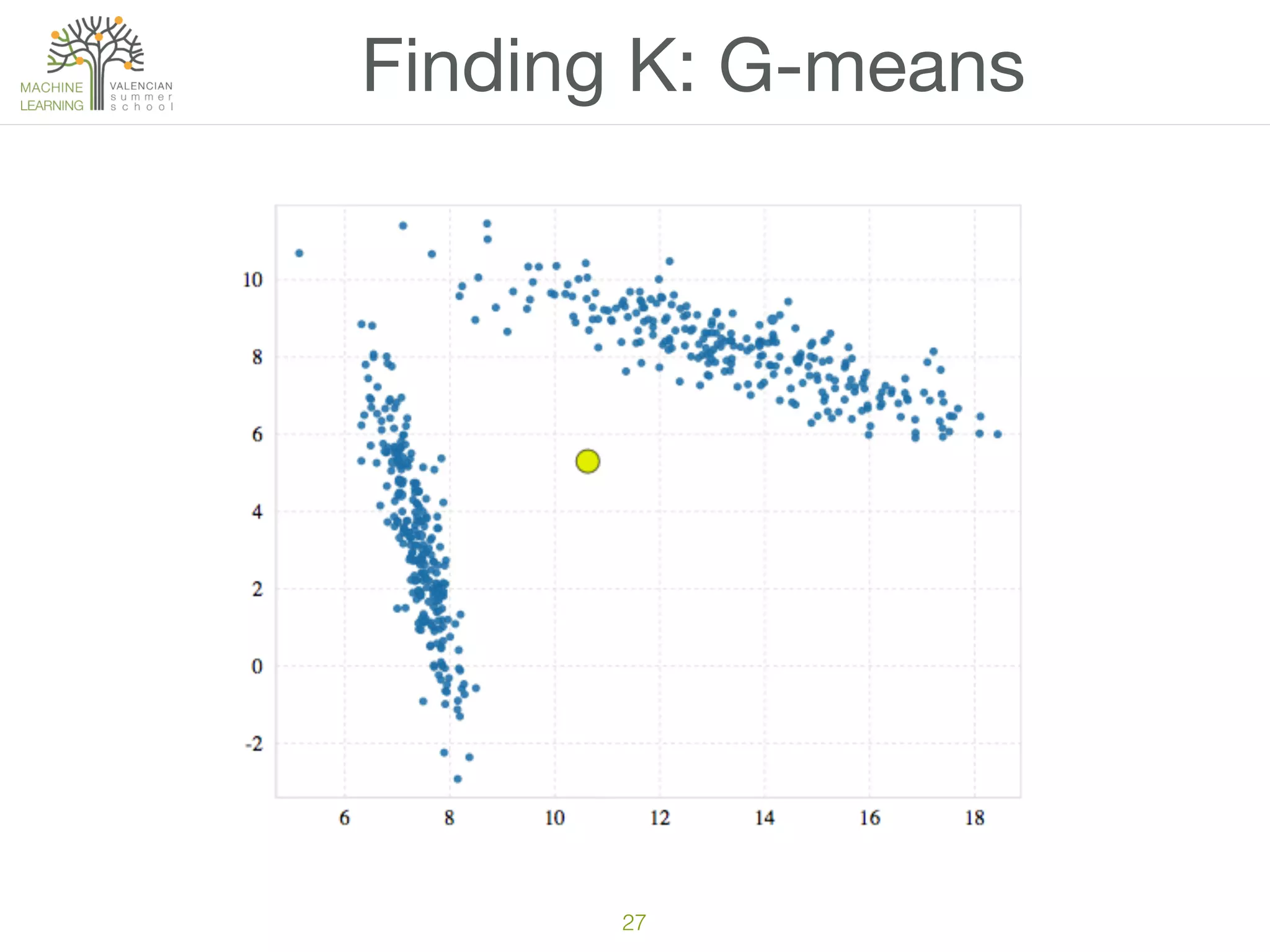

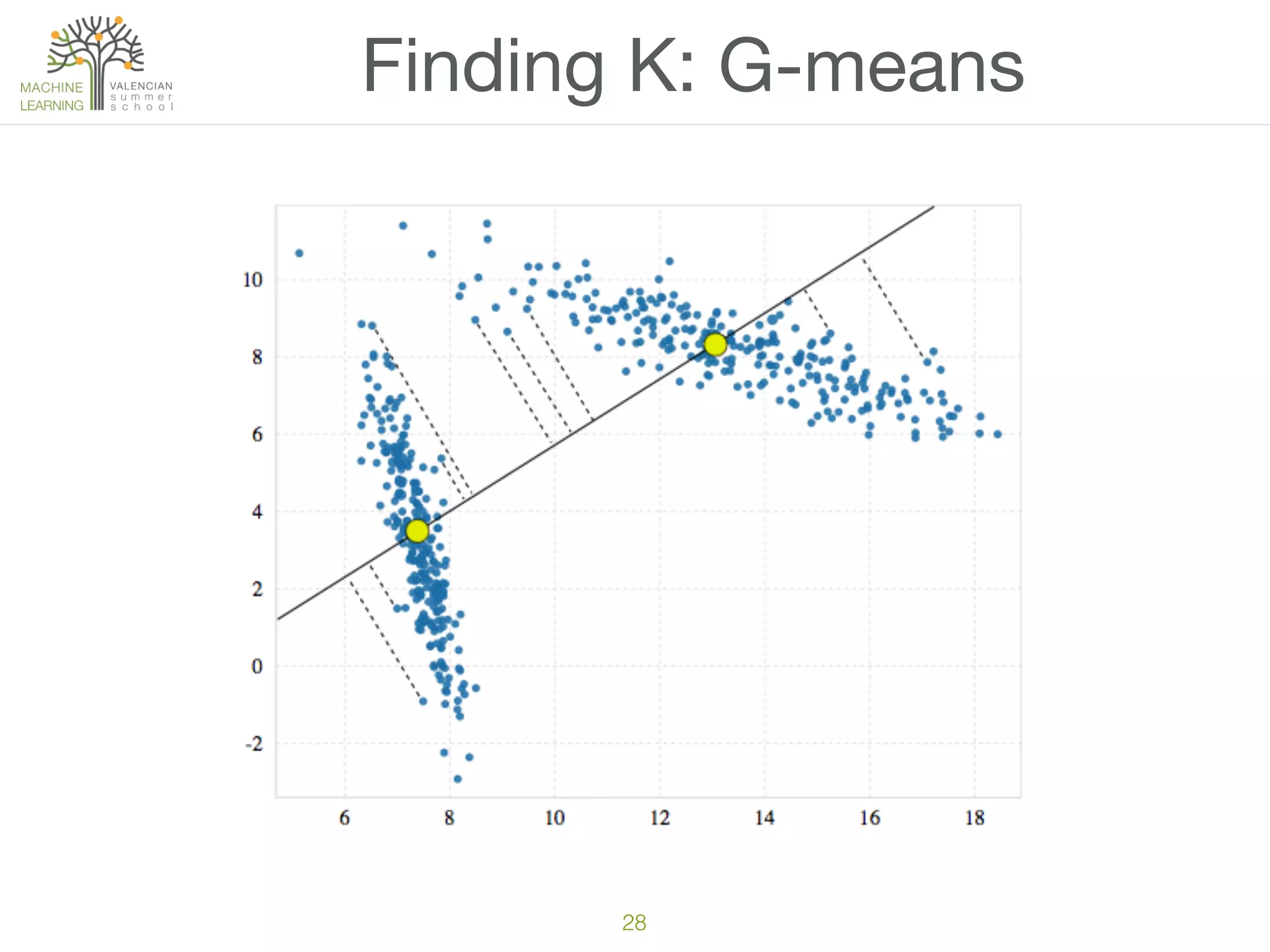

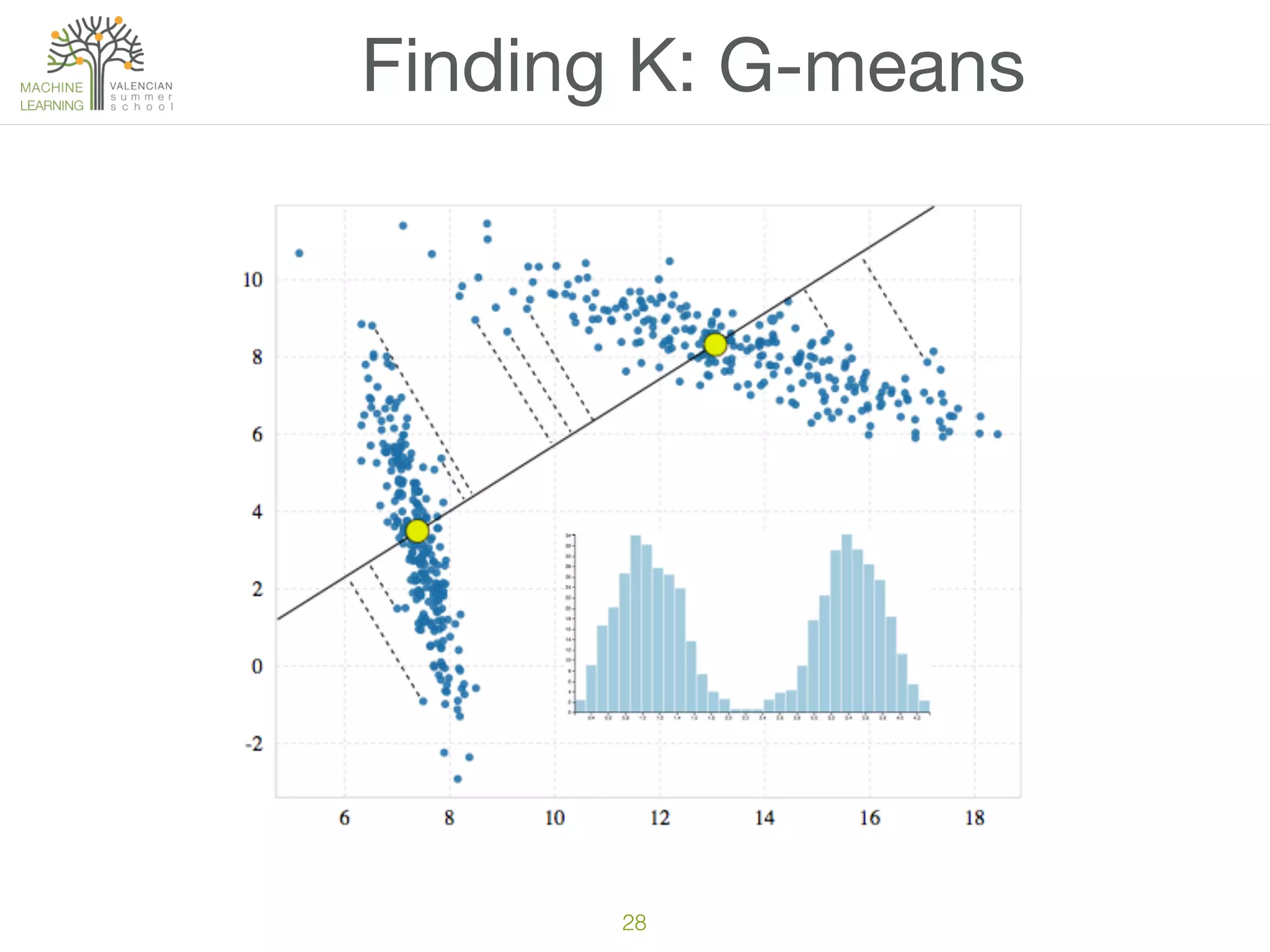

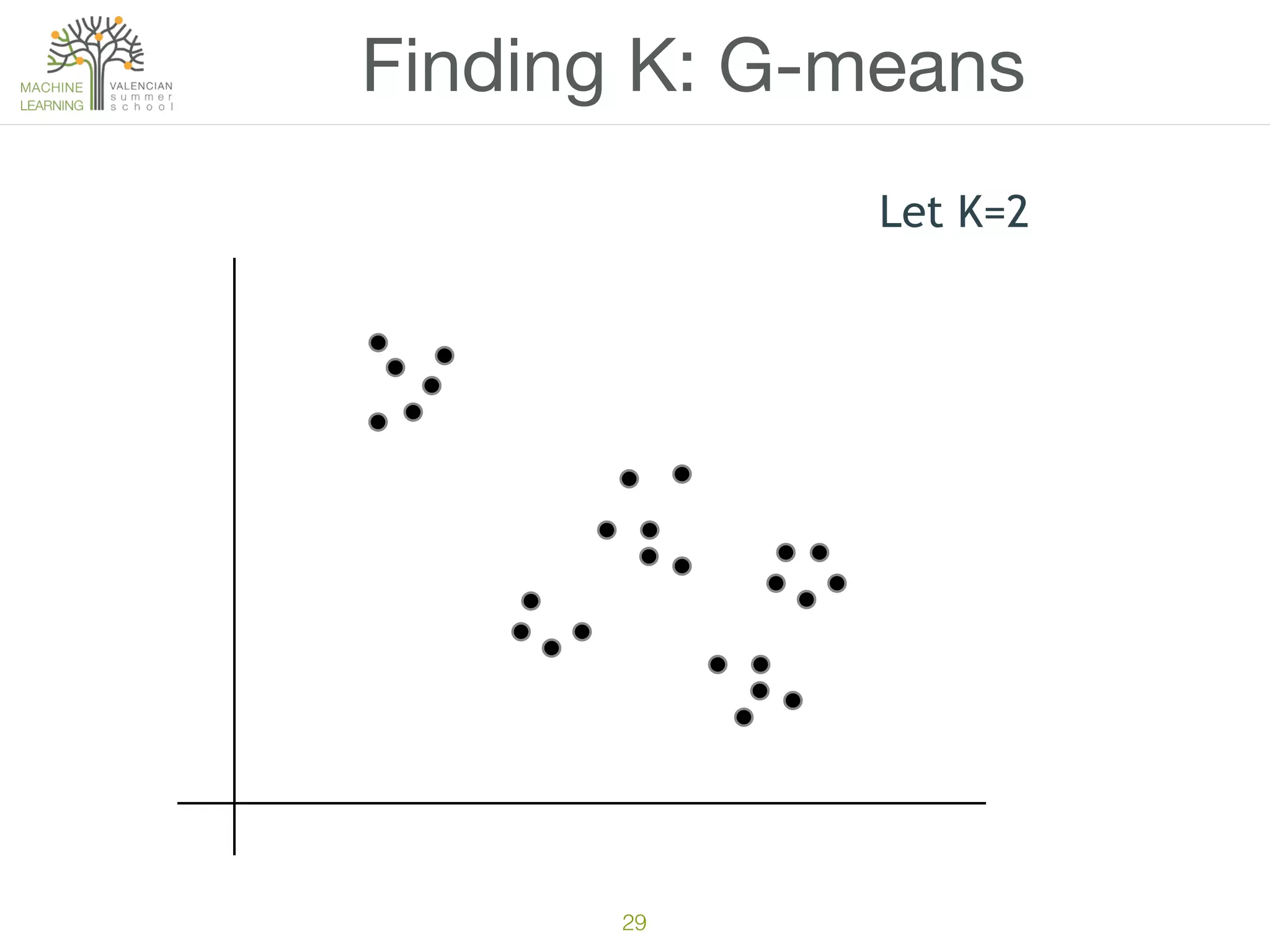

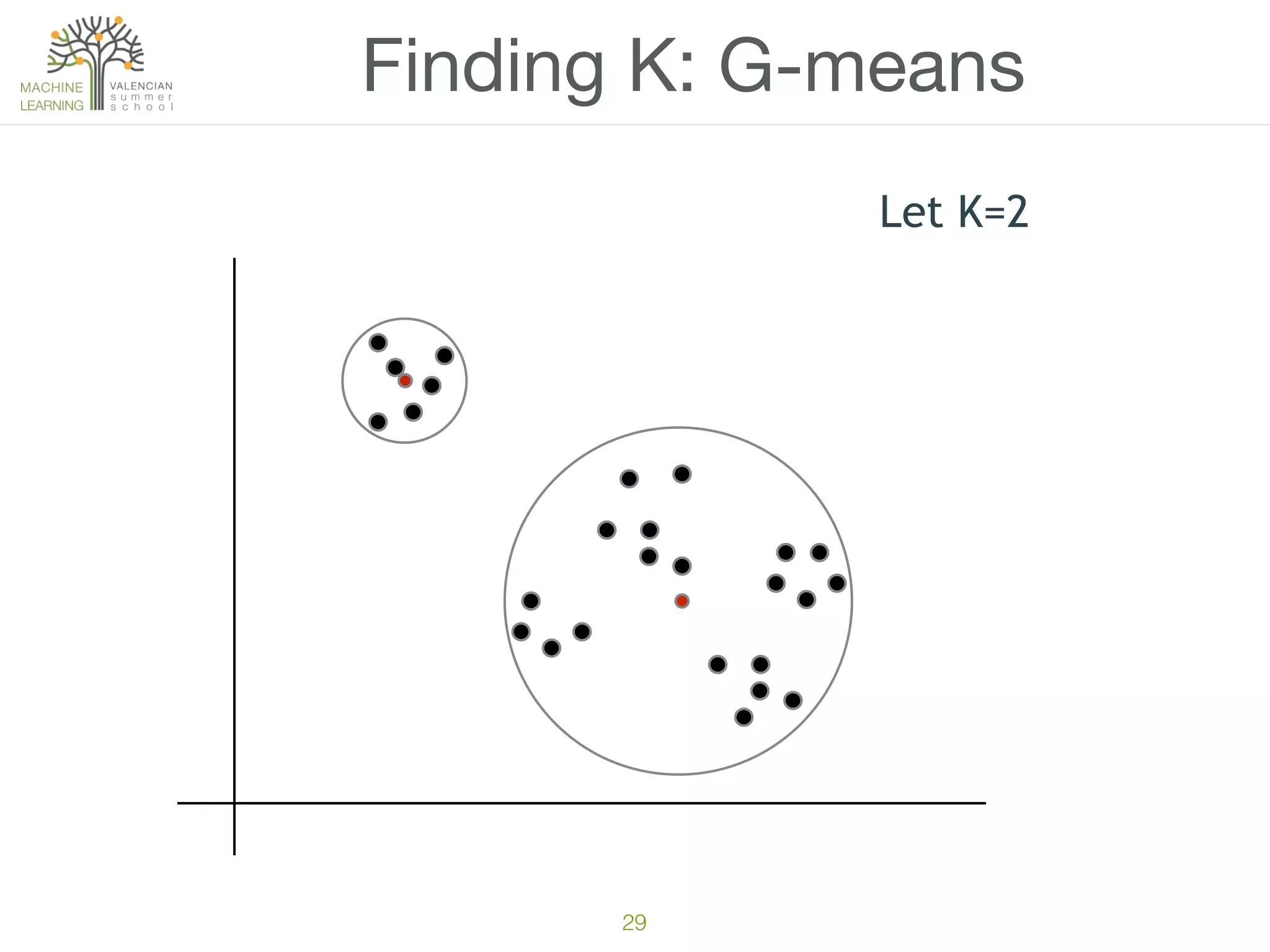

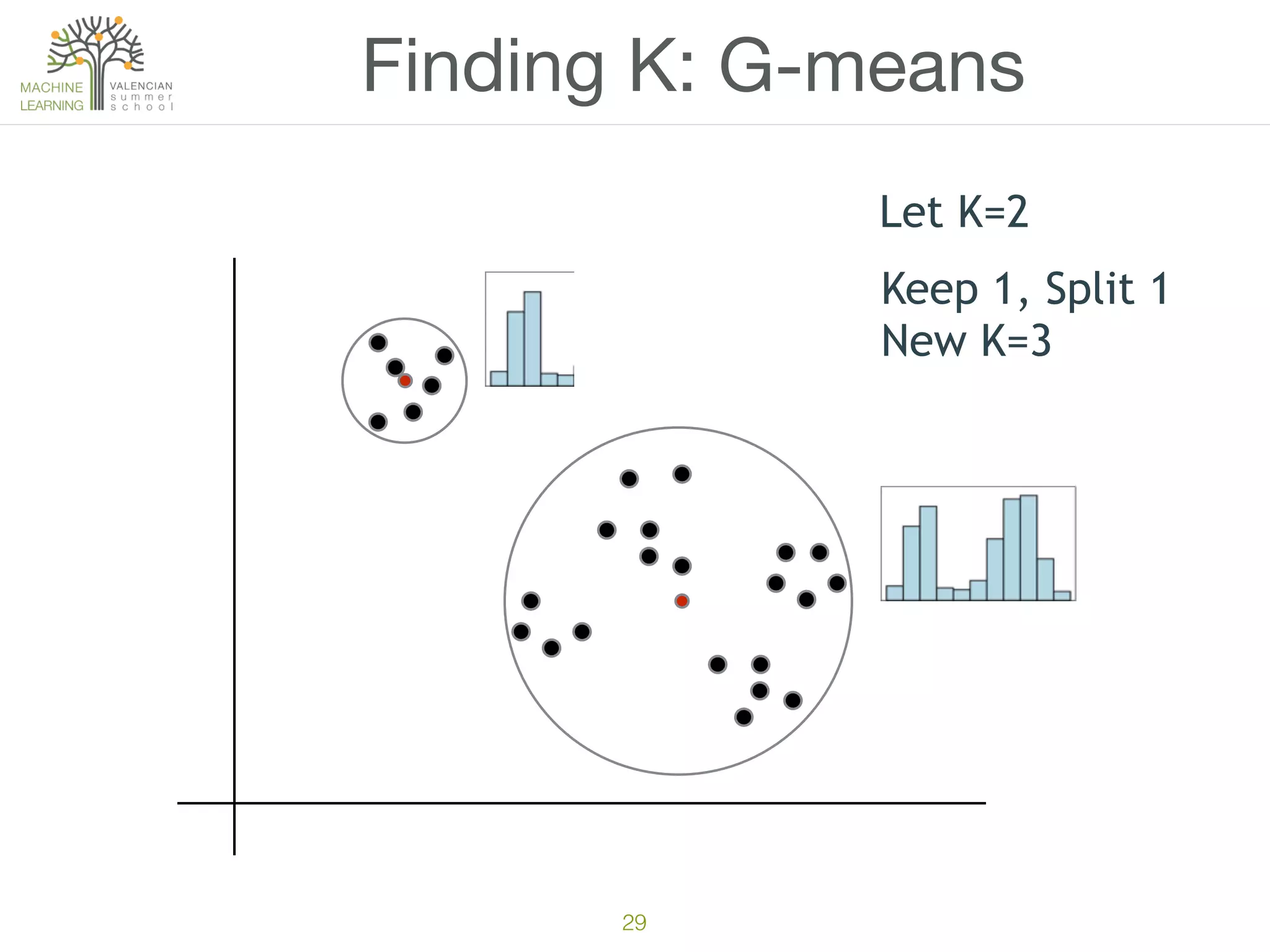

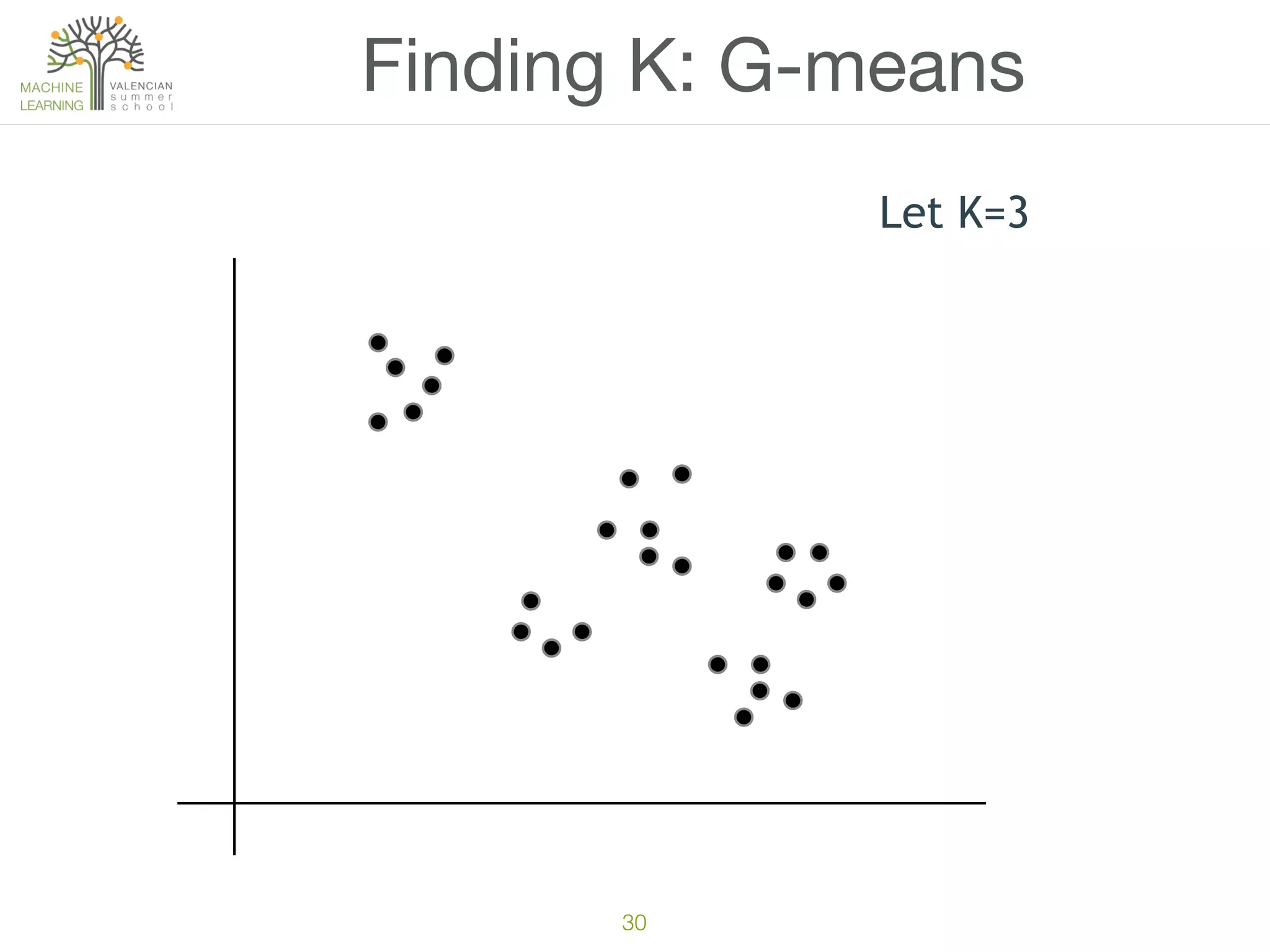

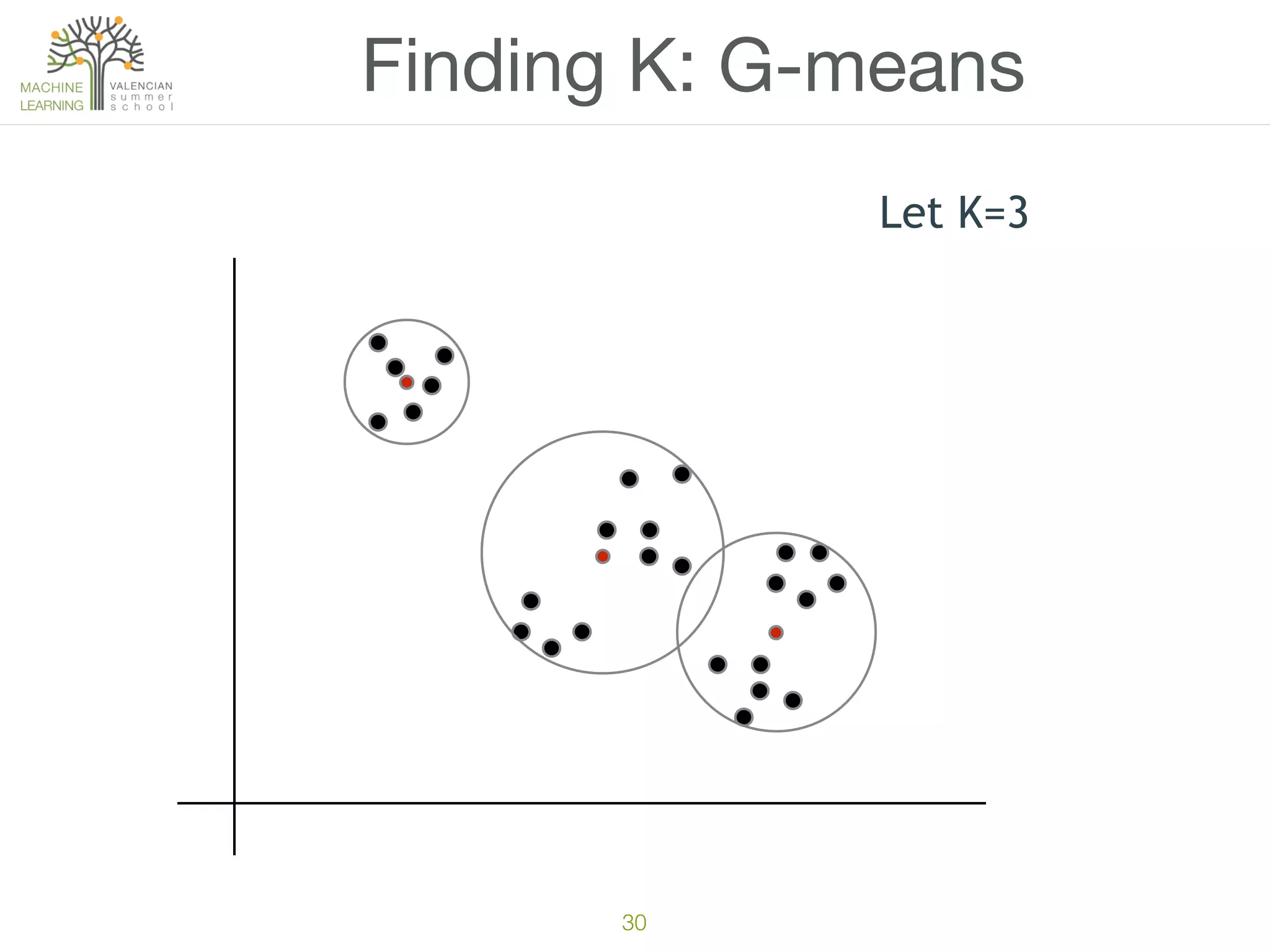

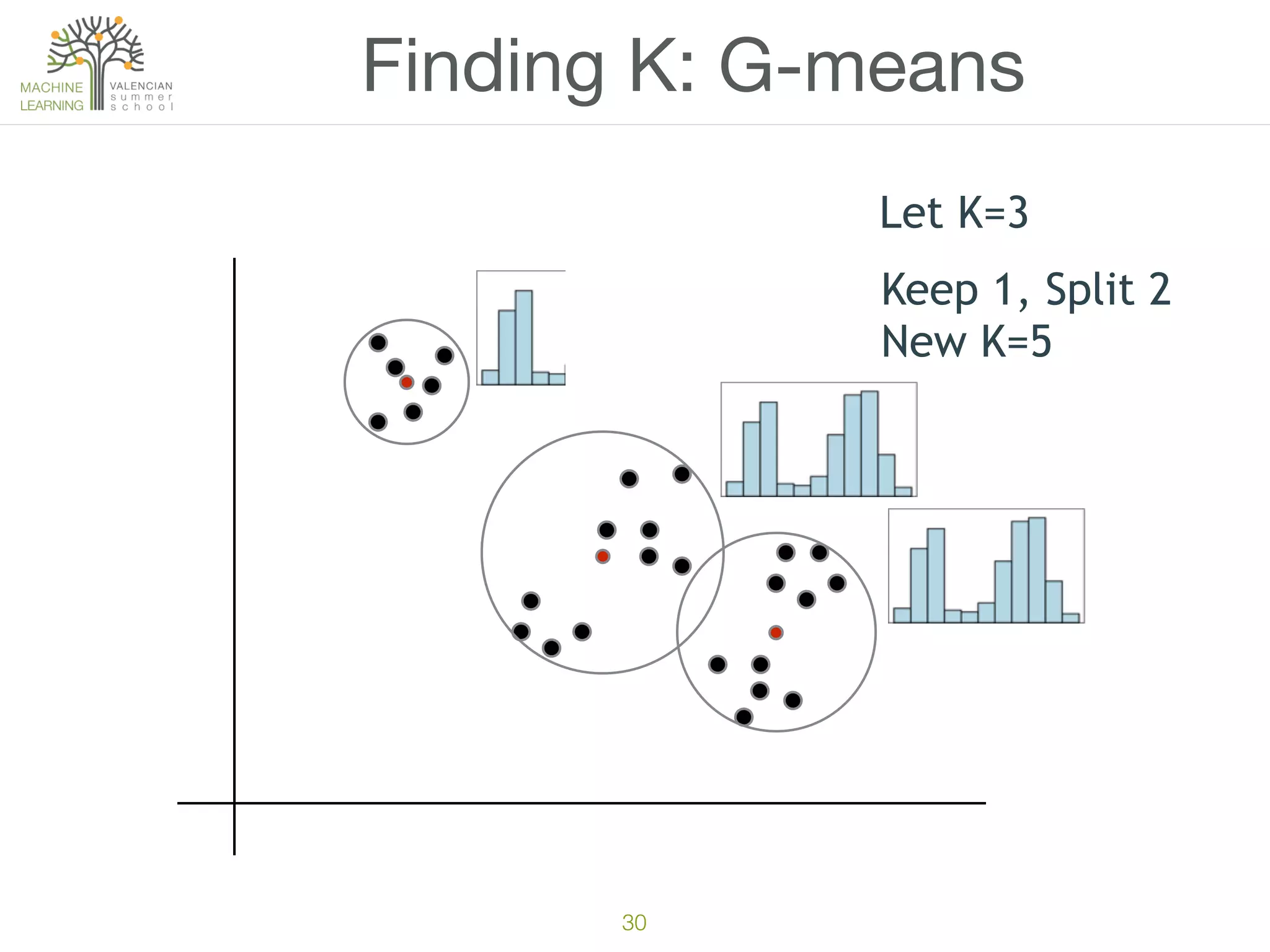

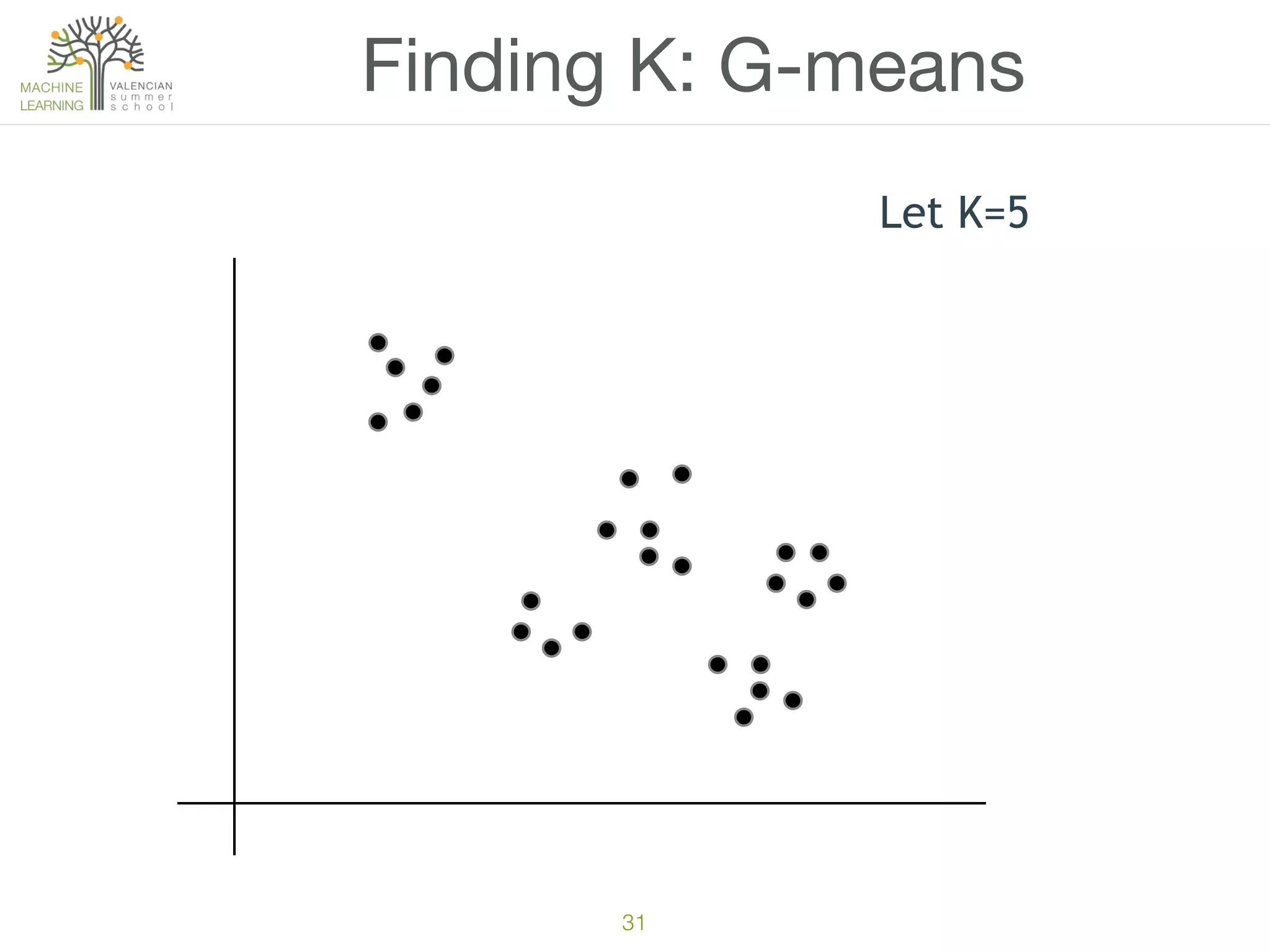

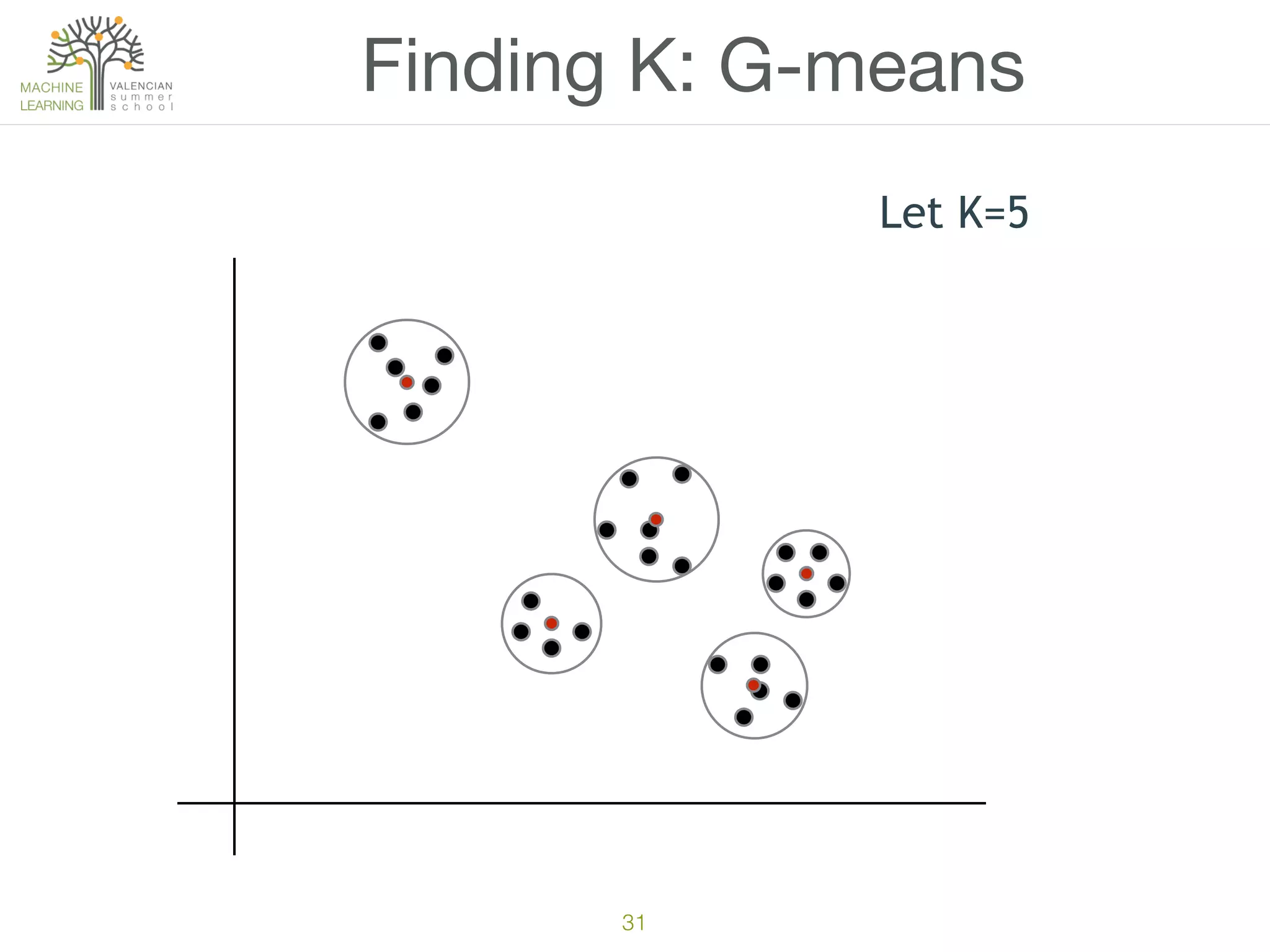

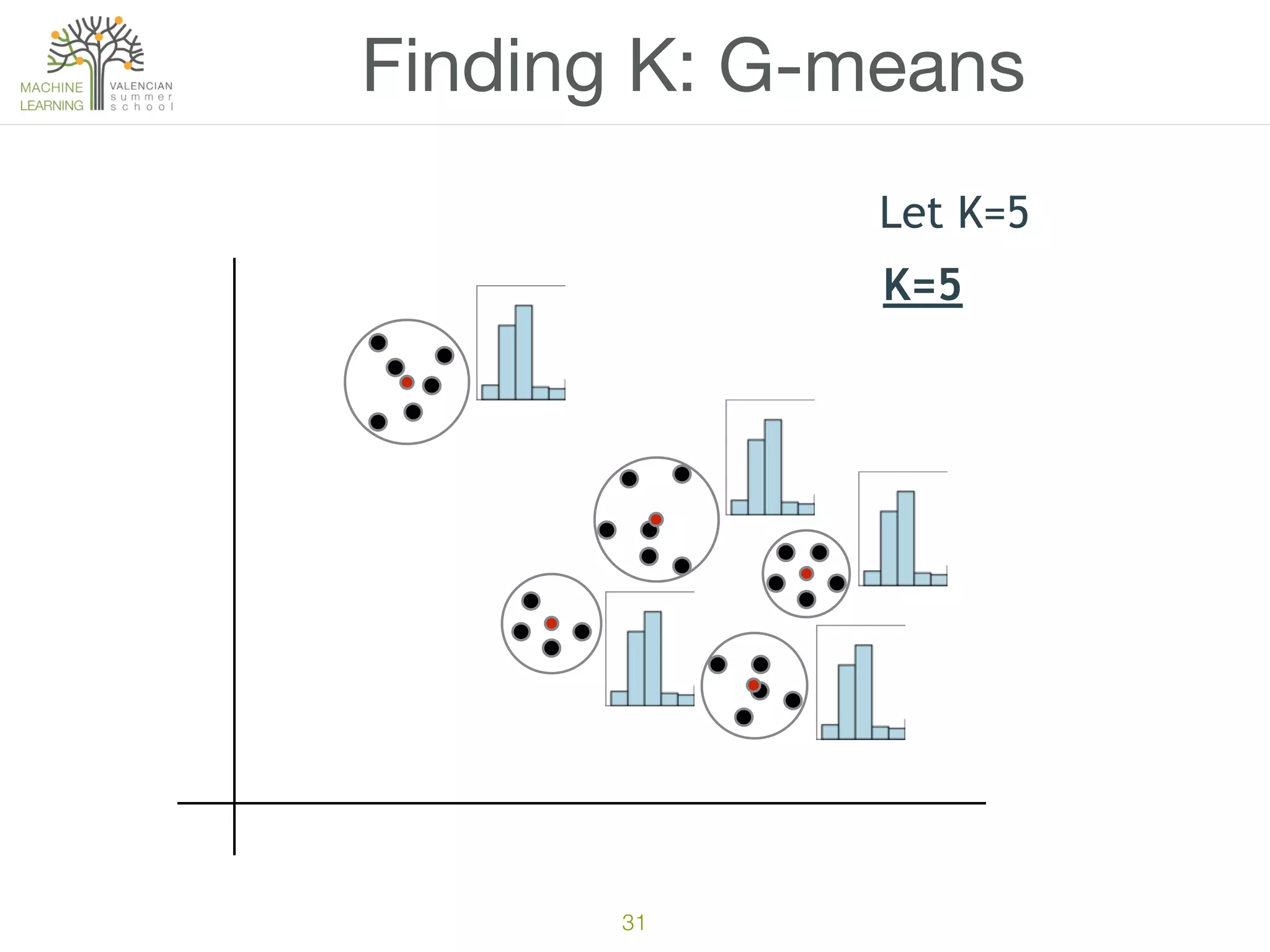

The document covers cluster analysis in machine learning. It contrasts supervised and unsupervised learning and shows applications such as customer segmentation and item discovery. It then introduces the k-means algorithm, feature engineering, and methods for handling missing values and categorical data when clustering. Finally, it describes techniques for choosing the number of clusters k, such as g-means.
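The summary names k-means without spelling out how it works. Below is a minimal sketch, assuming the standard Lloyd iteration with squared Euclidean distance and random initialisation from the data points. The slides may rely on a library implementation instead, and the names `k_means`, `max_iter` and `seed` are illustrative only. Each iteration alternates two steps: assign every point to its nearest centroid, then move each centroid to the mean of the points assigned to it. The loop stops once the assignments and centroids no longer change. Because the sketch works on plain numeric coordinates, missing values would need to be imputed and categorical features encoded before calling it.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(points: List[Point]) -> Point:
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def k_means(points: List[Point], k: int, max_iter: int = 100, seed: int = 0):
    """Lloyd-style k-means (illustrative sketch). Returns (centroids, labels)."""
    if not 1 <= k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    rng = random.Random(seed)
    # Initialise centroids with k distinct data points chosen at random.
    centroids = [tuple(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda j: squared_distance(p, centroids[j]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, new_labels) if lab == j]
            # Keep the previous centroid if a cluster ends up empty.
            new_centroids.append(mean(members) if members else centroids[j])
        # Stop when neither the assignments nor the centroids changed.
        if new_labels == labels and new_centroids == centroids:
            break
        labels, centroids = new_labels, new_centroids
    return centroids, labels


data = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
centroids, labels = k_means(data, k=2)
print(labels)
```

On this toy data the two well-separated groups end up in different clusters. The numeric label assigned to each group depends on the random initialisation, so only the grouping itself is meaningful. Methods such as g-means address the separate question of how to pick k in the first place.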