The document discusses memory allocation in Linux. It focuses on how malloc() is backed by the brk() system call in the kernel, and on when glibc uses mmap() instead of brk(), depending on the allocation size. It highlights the distinction between kmalloc (physically contiguous memory) and vmalloc (virtually contiguous, physically non-contiguous memory) and recaps how kmalloc interacts with the slab allocator. It also traces what the kernel does at program launch: how load_elf_binary() sets up the address space and configures the heap (program break).

![Agenda

• Memory Allocation in Linux

• malloc -> brk() implementation in Linux Kernel

o Will *NOT* focus on the glibc malloc implementation; for that, read: malloc internals

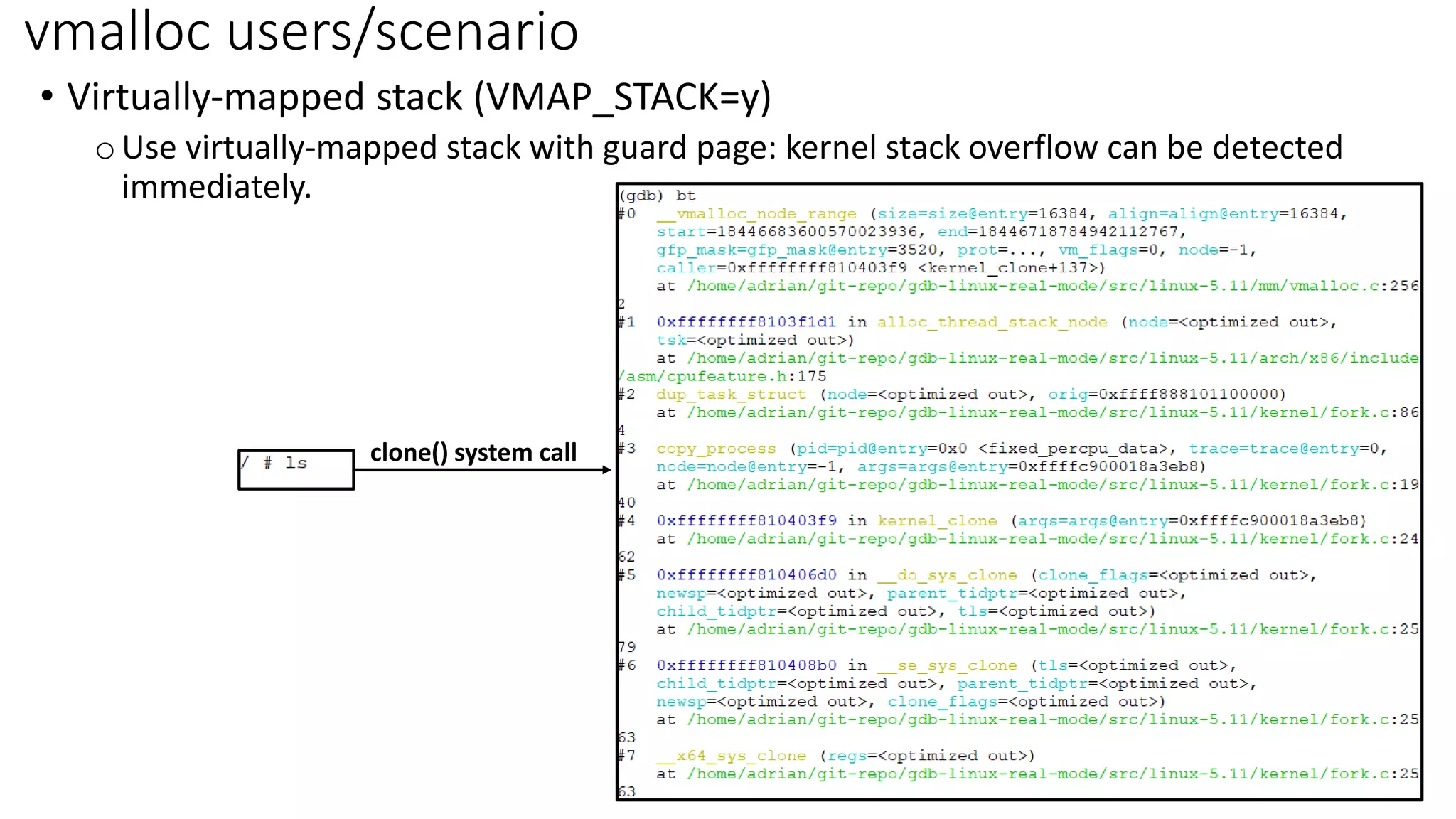

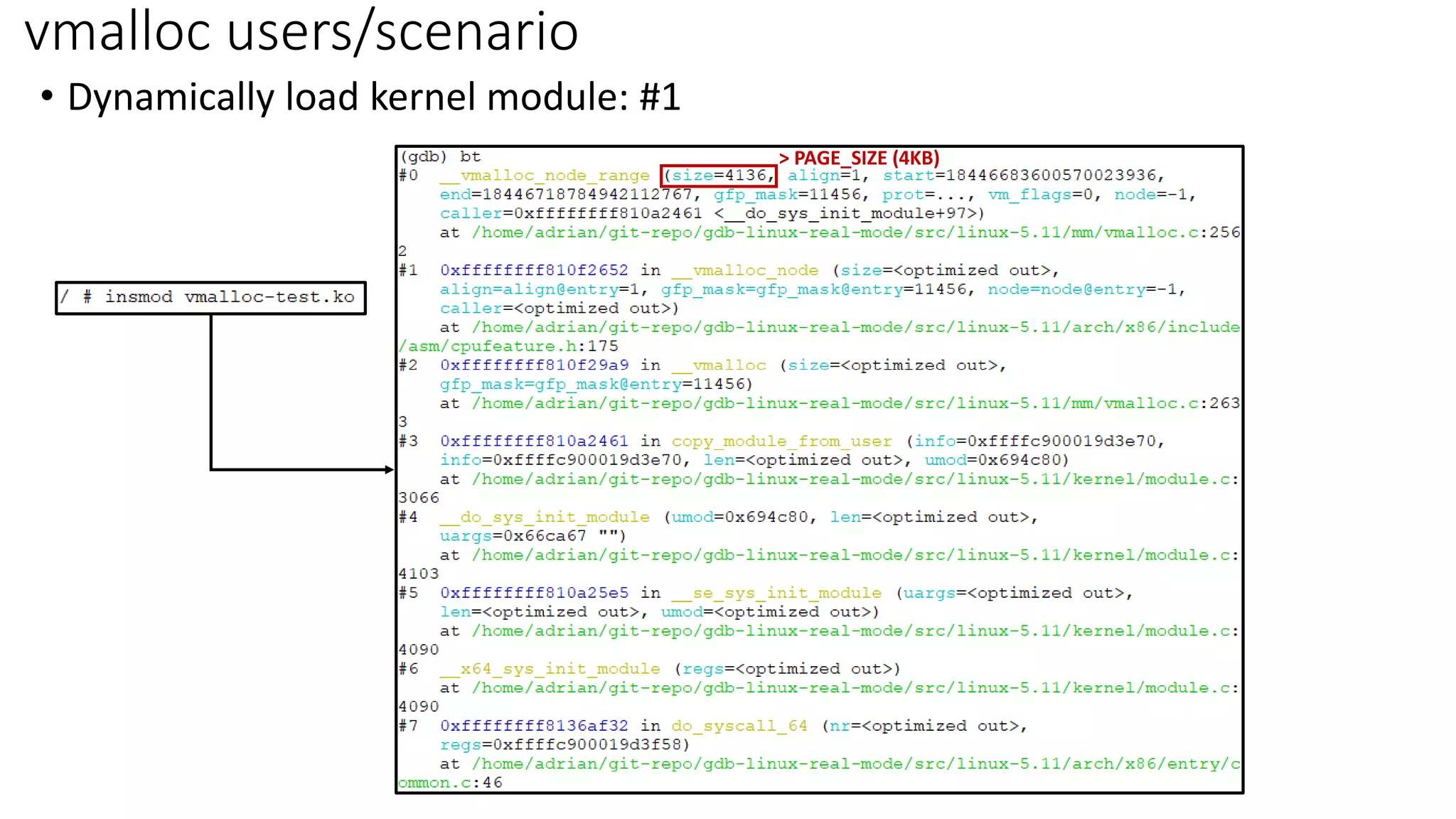

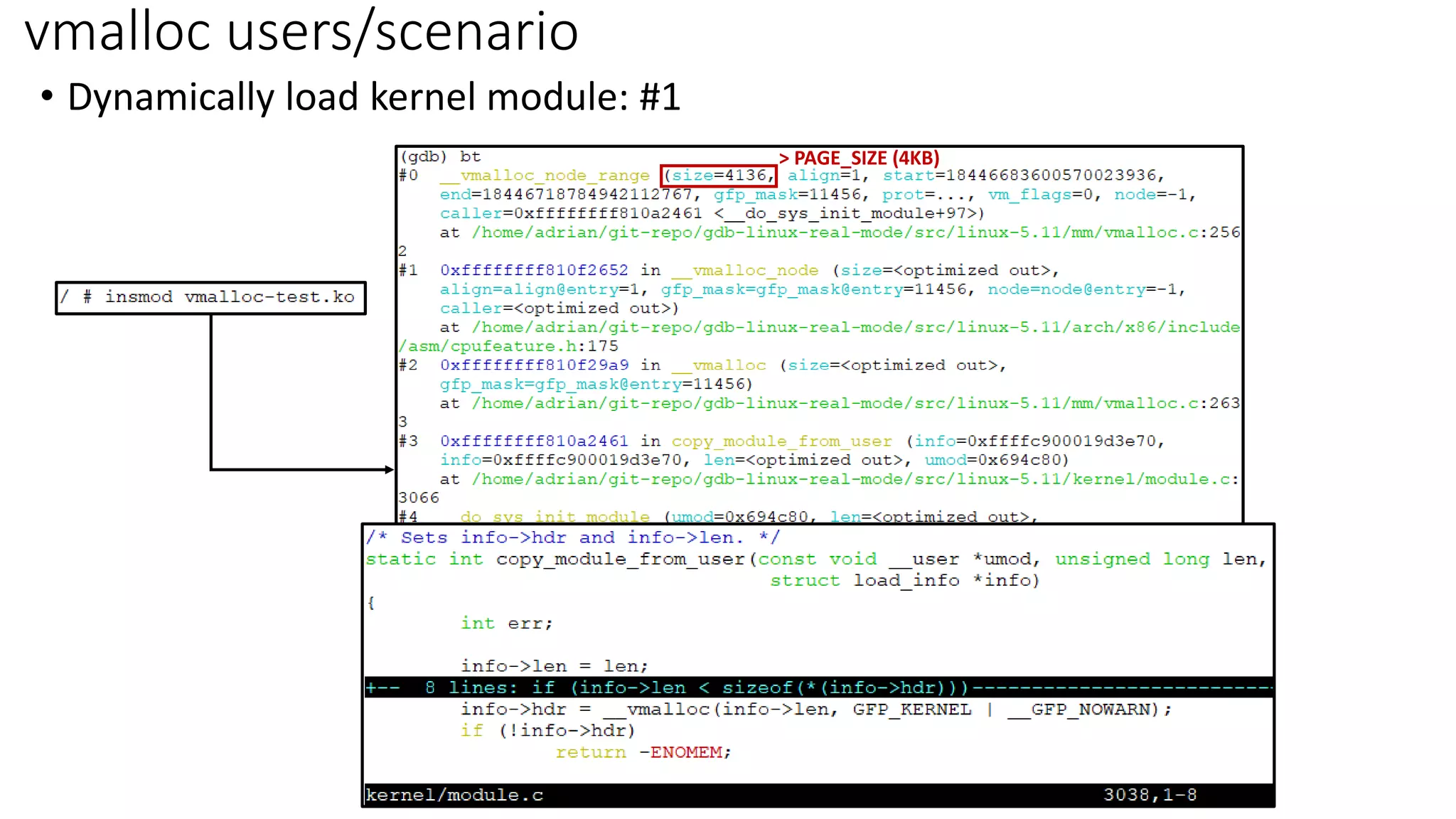

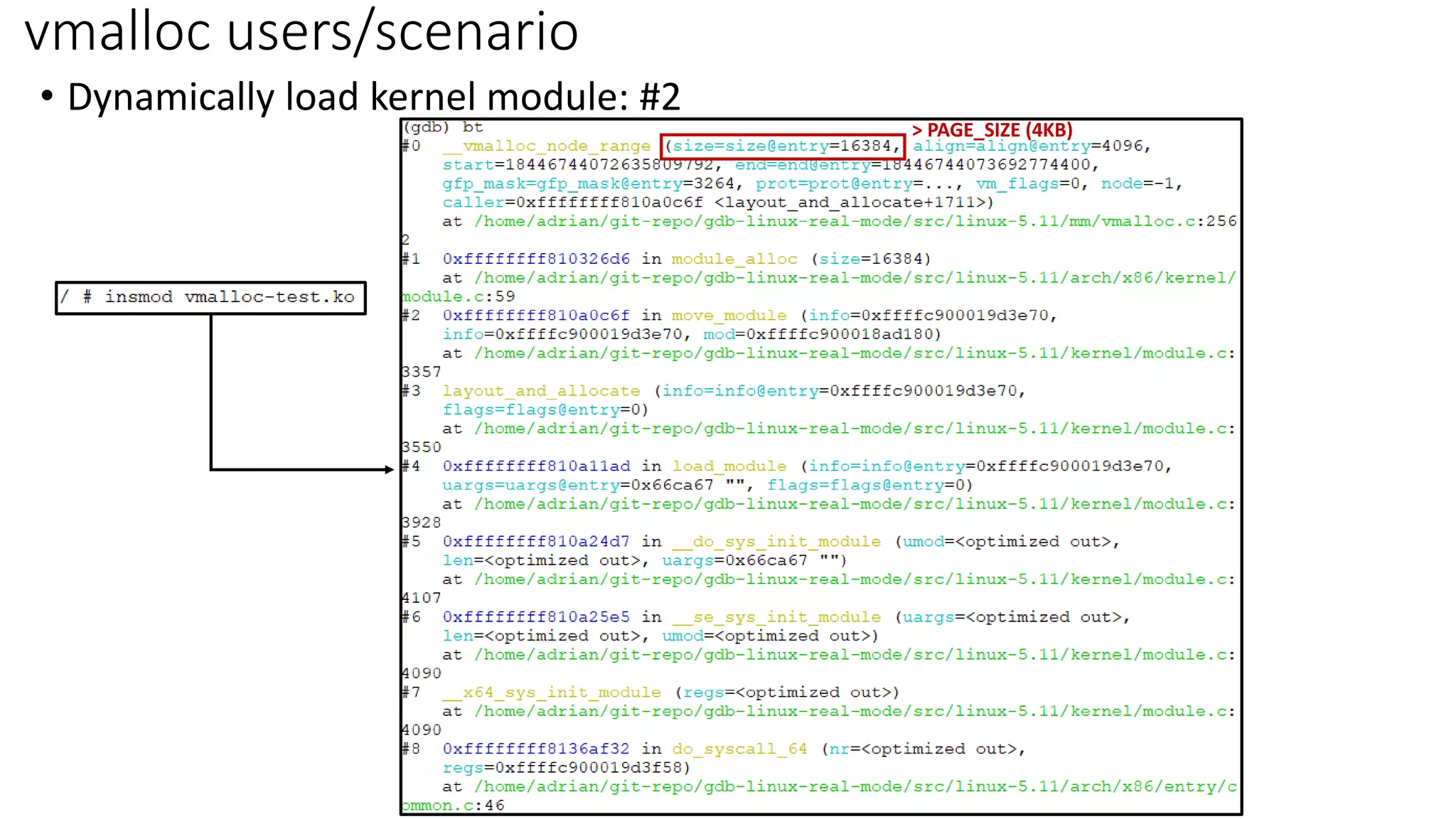

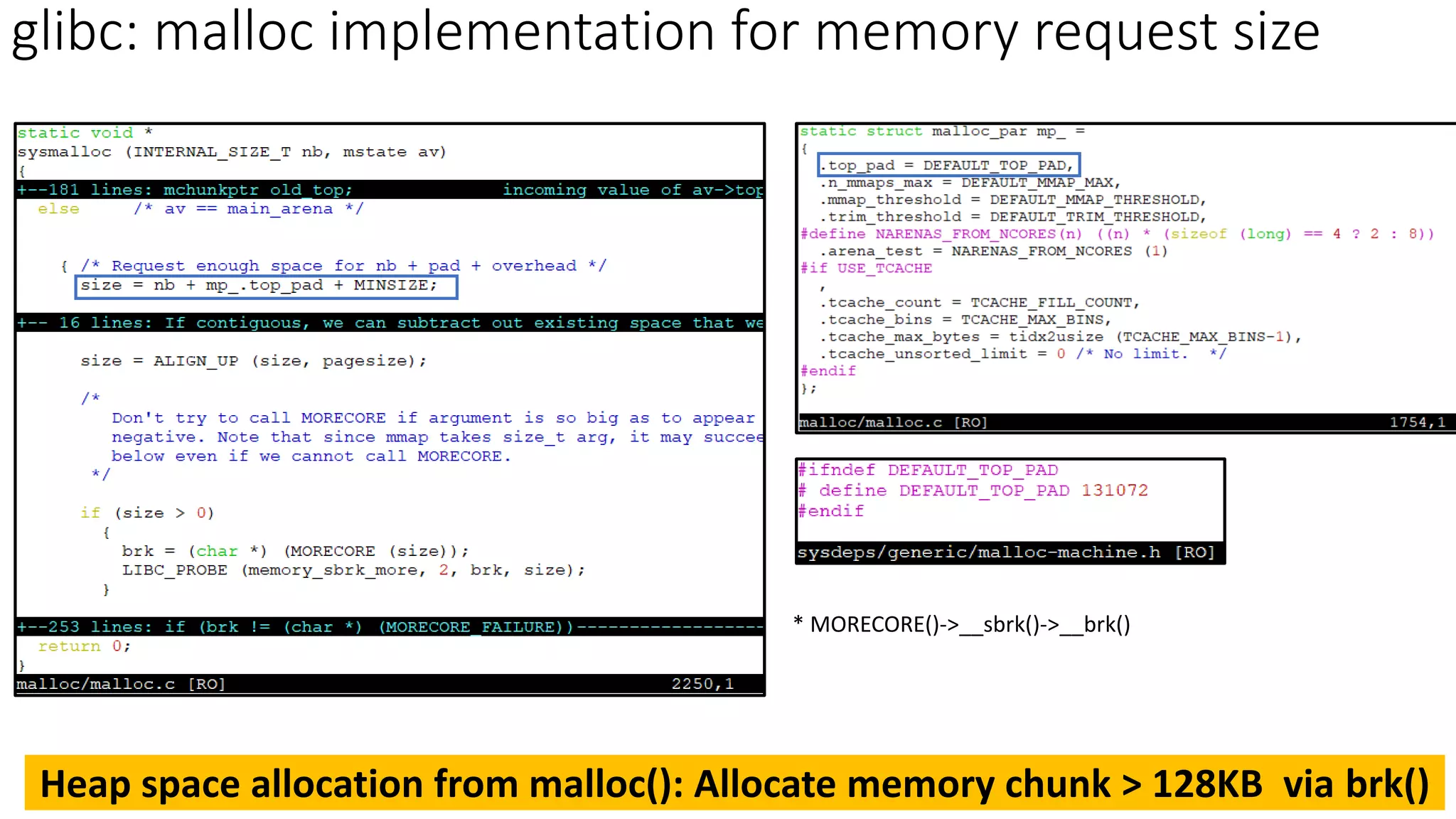

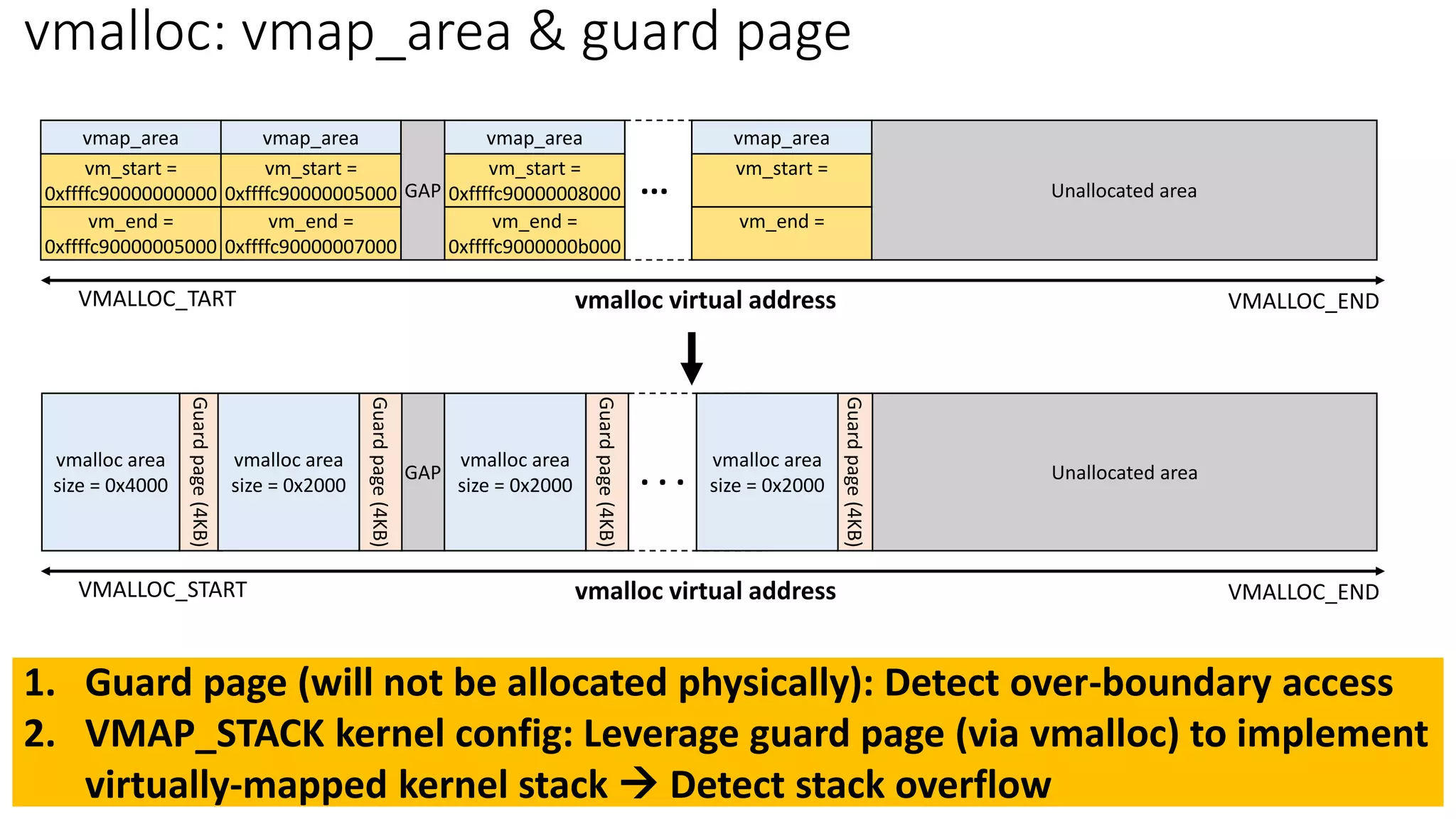

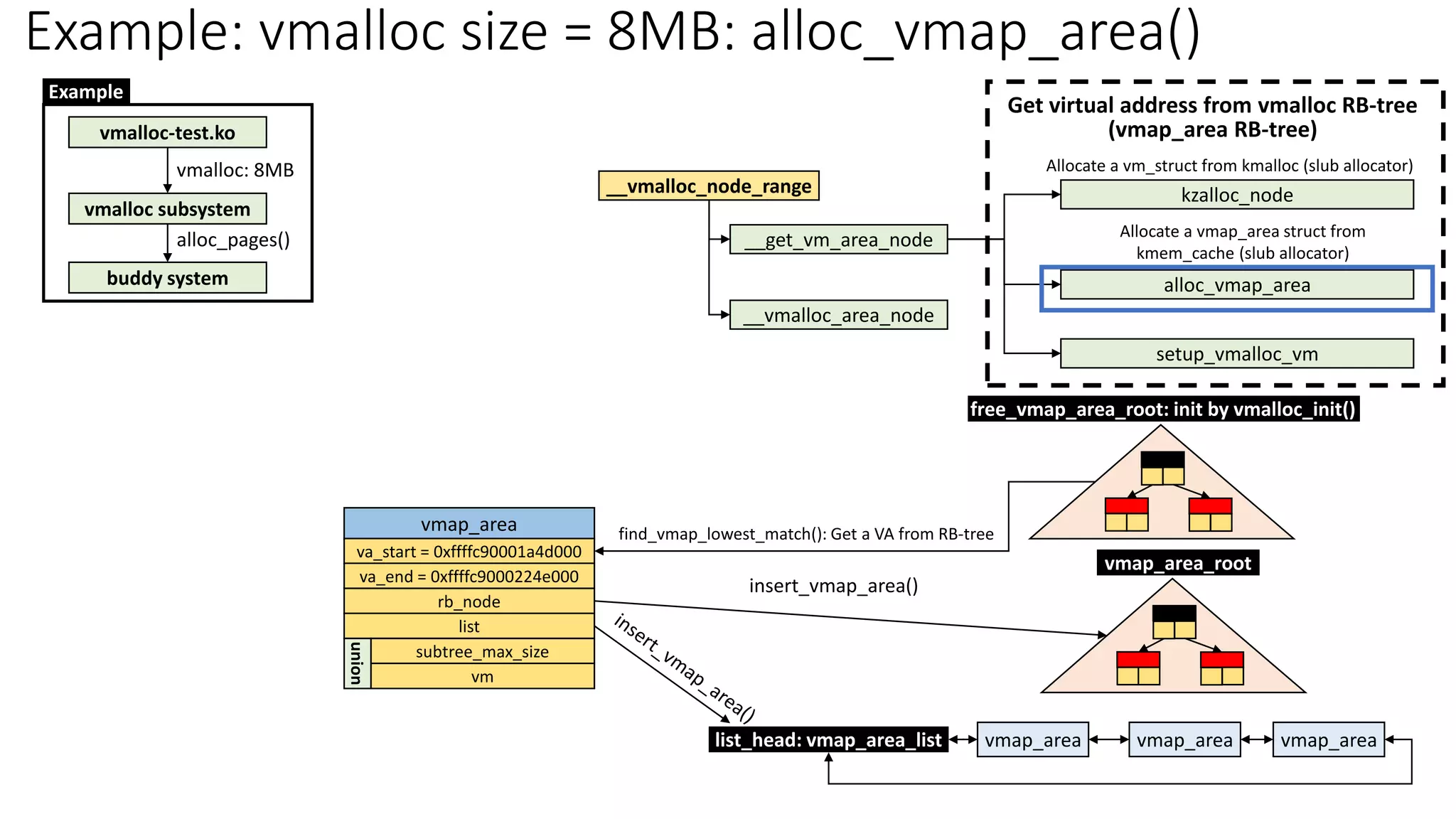

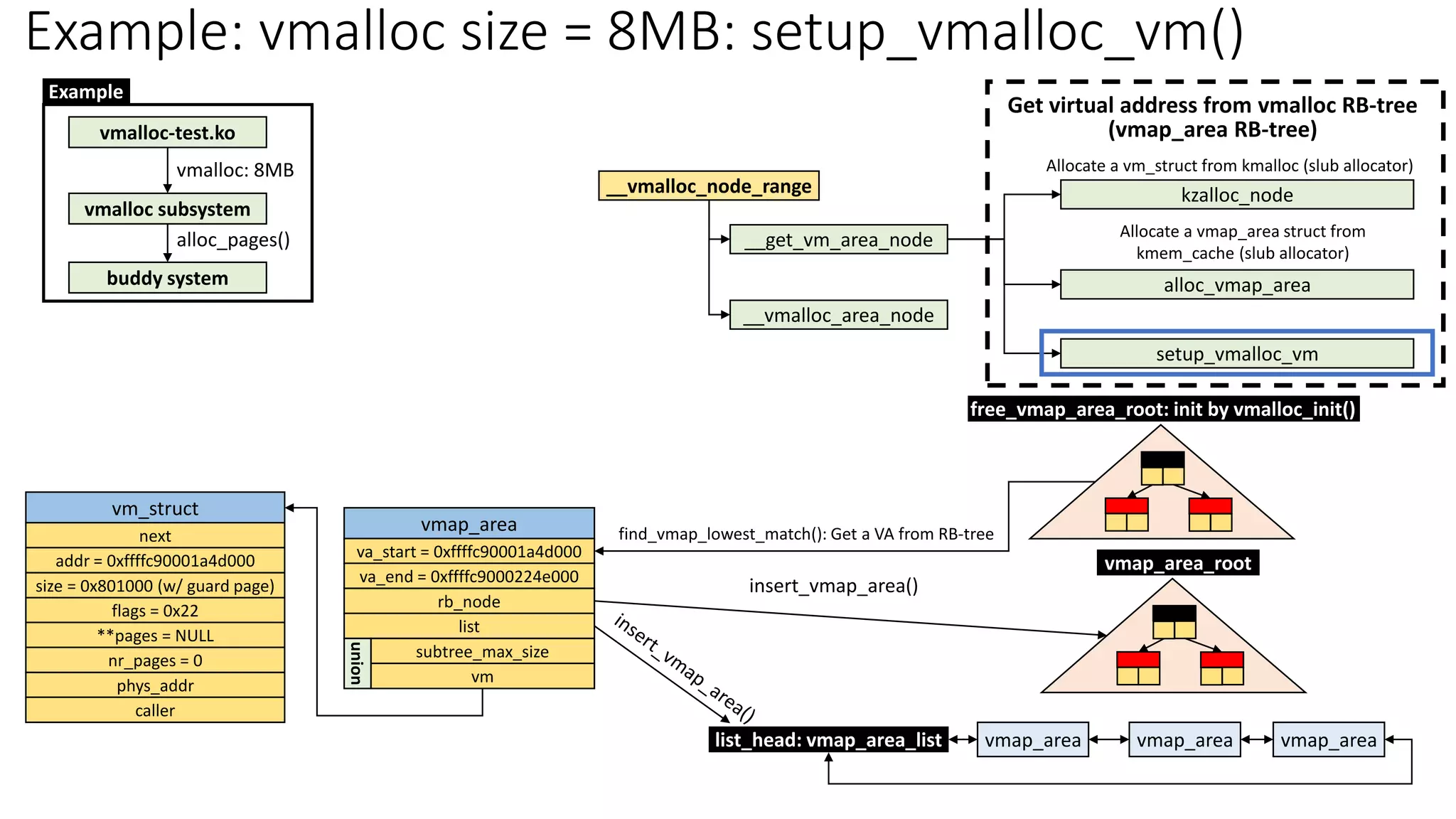

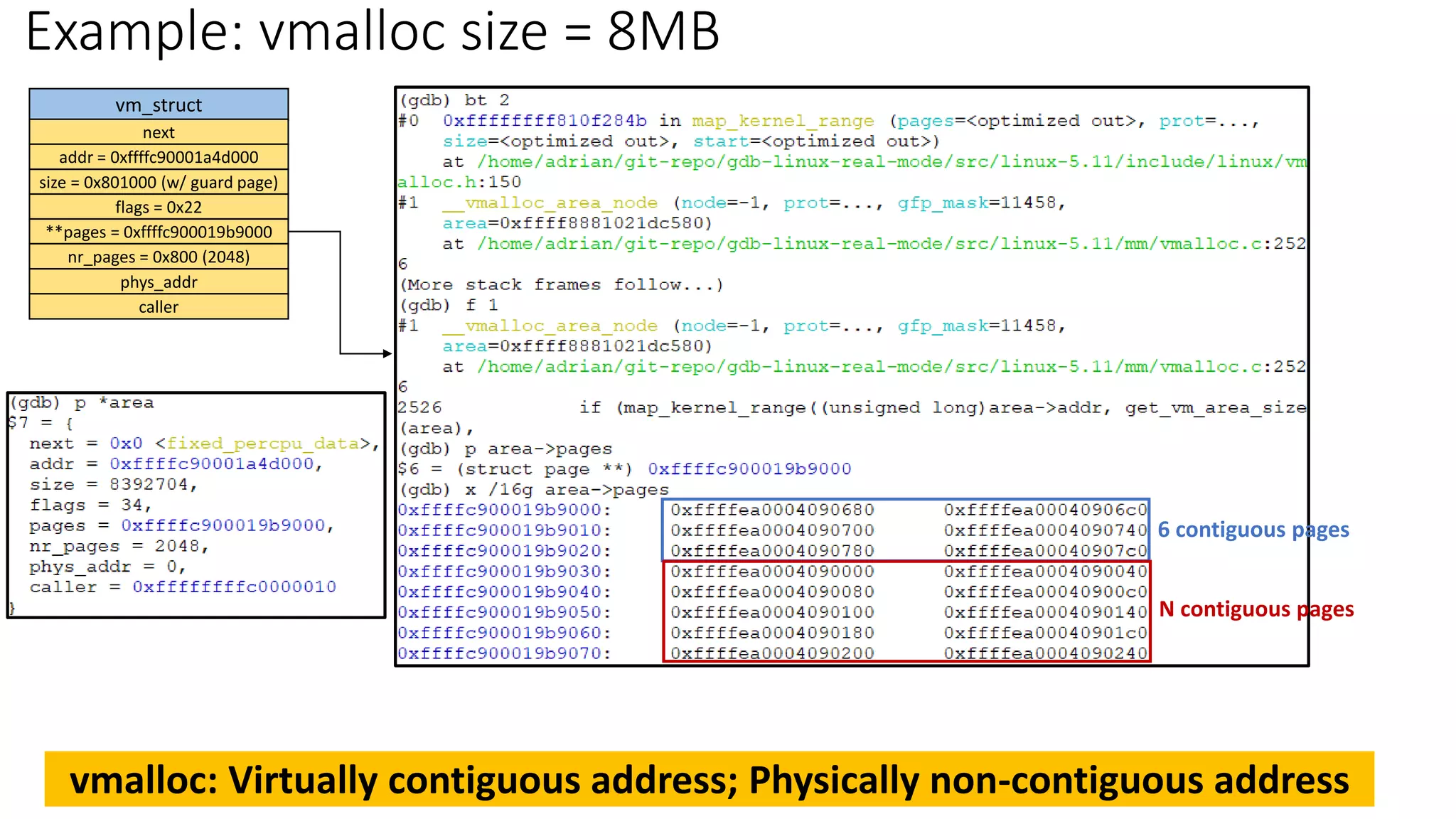

• vmalloc: Non-contiguous memory allocation

• [Note] kmalloc is covered in slide #88 of "Slab Allocator in Linux Kernel"](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-2-2048.jpg)

![Memory Allocation in Linux

Diagram: allocation layers (top to bottom)

User Space: glibc malloc/free, on top of the brk/mmap system calls

Kernel Space: vmalloc; Slab Allocator (kmalloc/kfree); Buddy System (alloc_page(s), __get_free_page(s))

Hardware

• Balance between brk() and mmap()

• Use brk() if request size < DEFAULT_MMAP_THRESHOLD_MIN (128 KB)

o The heap can be trimmed only if memory is freed at the top end.

o sbrk() is implemented as a library function that uses the brk() system call.

o When the heap is used up, glibc grows it by a memory chunk larger than 128KB via brk().

▪ This saves the overhead of frequent brk() system calls.

• Use mmap() if request size >= DEFAULT_MMAP_THRESHOLD_MIN (128 KB)

o The allocated memory blocks can be independently released back to the system.

o Deallocated space is not placed on the free list for reuse by later allocations.

o Memory may be wasted because mmap allocations must be page-aligned, and the kernel must perform the expensive task of zeroing out the allocated memory.

o Note: glibc uses a dynamic mmap threshold

o Detail: `man mallopt`

[glibc] malloc

• kmalloc: Physically contiguous memory allocation

• vmalloc: Non-contiguous memory allocation

o Scenario: memory allocation size > PAGE_SIZE (4KB)

o Allocate virtually contiguous memory

▪ Physical memory might NOT be contiguous

kmalloc & vmalloc](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-3-2048.jpg)
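The brk()/mmap() split described above can be modeled in a few lines. This is a simplified sketch and not glibc's code. It assumes the 64-bit defaults from `man mallopt`: the threshold starts at DEFAULT_MMAP_THRESHOLD_MIN (128 KB) and is capped at DEFAULT_MMAP_THRESHOLD_MAX (4 MB * sizeof(long)). It compares the normalized chunk size rather than the raw request and ignores the M_MMAP_MAX limit. The struct and function names are hypothetical. The sketch also illustrates the dynamic mmap threshold: freeing an mmap()ed chunk raises the threshold to that chunk's size.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* 64-bit glibc defaults (see `man mallopt`); assumed, not read from libc. */
#define DEFAULT_MMAP_THRESHOLD_MIN (128UL * 1024)
#define DEFAULT_MMAP_THRESHOLD_MAX (4UL * 1024 * 1024 * sizeof(long))
#define DEFAULT_TRIM_THRESHOLD     (128UL * 1024)

/* Hypothetical copy of the tunables glibc keeps in its malloc state. */
struct mmap_policy {
    size_t mmap_threshold;
    size_t trim_threshold;
    bool no_dyn_threshold;   /* true once mallopt() sets a threshold explicitly */
};

enum alloc_path { PATH_BRK, PATH_MMAP };

void policy_init(struct mmap_policy *p)
{
    p->mmap_threshold = DEFAULT_MMAP_THRESHOLD_MIN;
    p->trim_threshold = DEFAULT_TRIM_THRESHOLD;
    p->no_dyn_threshold = false;
}

/* Chunks at or above the threshold get their own mmap(); smaller ones are
 * carved out of the brk() heap. */
enum alloc_path choose_path(const struct mmap_policy *p, size_t chunk_size)
{
    assert(chunk_size > 0);
    return chunk_size >= p->mmap_threshold ? PATH_MMAP : PATH_BRK;
}

/* Dynamic threshold: freeing an mmap()ed chunk larger than the current
 * threshold (but not above the maximum) raises the threshold to its size,
 * so later, smaller requests are served from the heap instead. */
void on_free_mmapped(struct mmap_policy *p, size_t chunk_size)
{
    if (!p->no_dyn_threshold &&
        chunk_size > p->mmap_threshold &&
        chunk_size <= DEFAULT_MMAP_THRESHOLD_MAX) {
        p->mmap_threshold = chunk_size;
        p->trim_threshold = 2 * chunk_size;
    }
}
```

With the defaults, a 150 KB chunk goes to mmap(). After `on_free_mmapped(&p, 200 * 1024)`, the same 150 KB chunk is taken from the brk() heap.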

![kmalloc & slab (Recap)

struct kmem_cache *kmalloc_caches[NR_KMALLOC_TYPES][KMALLOC_SHIFT_HIGH + 1]

Each entry points to a struct kmem_cache (fields include __percpu *cpu_slab and *node[MAX_NUMNODES]).

• struct kmem_cache *kmalloc_caches[KMALLOC_NORMAL][]: neither __GFP_RECLAIMABLE nor __GFP_DMA

• struct kmem_cache *kmalloc_caches[KMALLOC_RECLAIM][]: __GFP_RECLAIMABLE

• struct kmem_cache *kmalloc_caches[KMALLOC_DMA][]: __GFP_DMA

Index layout of every row: [0] NULL, [1] kmalloc-96, [2] kmalloc-192, [3] kmalloc-8, [4] kmalloc-16, …, [13] kmalloc-8192

Check create_kmalloc_caches() & kmalloc_info. Reference (slideshare): Slab Allocator in Linux Kernel](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-4-2048.jpg)
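To make the two-dimensional table above concrete, here is a user-space sketch of how a kmalloc() request could be mapped to a row (cache type) and a column (size index). It is modeled on the kernel's kmalloc_type() and __kmalloc_index() but is not kernel code. It assumes SLUB with 4 KB pages (KMALLOC_MIN_SIZE = 8, largest cache kmalloc-8192). The DEMO_ flag values are hypothetical placeholders for __GFP_DMA and __GFP_RECLAIMABLE.

```c
#include <assert.h>
#include <stddef.h>

/* Assumptions: SLUB with 4 KB pages, so the smallest cache is kmalloc-8
 * (index 3) and the largest is kmalloc-8192 (index 13). */
#define DEMO_KMALLOC_SHIFT_LOW  3
#define DEMO_KMALLOC_SHIFT_HIGH 13

/* Hypothetical flag bits standing in for __GFP_DMA and __GFP_RECLAIMABLE;
 * the real values are defined in include/linux/gfp.h. */
#define DEMO_GFP_DMA         0x01u
#define DEMO_GFP_RECLAIMABLE 0x10u

enum demo_kmalloc_type { DEMO_KMALLOC_NORMAL, DEMO_KMALLOC_RECLAIM, DEMO_KMALLOC_DMA };

/* Row of kmalloc_caches[][]: DMA takes priority over RECLAIM; NORMAL otherwise. */
enum demo_kmalloc_type demo_kmalloc_type_of(unsigned int gfp)
{
    if ((gfp & (DEMO_GFP_DMA | DEMO_GFP_RECLAIMABLE)) == 0)
        return DEMO_KMALLOC_NORMAL;
    return (gfp & DEMO_GFP_DMA) ? DEMO_KMALLOC_DMA : DEMO_KMALLOC_RECLAIM;
}

/* Column of kmalloc_caches[][]; returns -1 when the size is too large
 * for any kmalloc cache. */
int demo_kmalloc_index(size_t size)
{
    if (size == 0)
        return 0;                                   /* slot 0 stays NULL */
    if (size > 64 && size <= 96)
        return 1;                                   /* kmalloc-96 */
    if (size > 128 && size <= 192)
        return 2;                                   /* kmalloc-192 */
    for (int shift = DEMO_KMALLOC_SHIFT_LOW; shift <= DEMO_KMALLOC_SHIFT_HIGH; shift++)
        if (size <= ((size_t)1 << shift))
            return shift;                           /* kmalloc-(1 << shift) */
    return -1;
}

/* Object size served by the cache at a given index. */
size_t demo_kmalloc_size(int index)
{
    assert(index >= 1 && index <= DEMO_KMALLOC_SHIFT_HIGH);
    if (index == 1)
        return 96;
    if (index == 2)
        return 192;
    return (size_t)1 << index;
}
```

For example, a 100-byte request lands in kmalloc-128 (index 7), and a 150-byte request lands in kmalloc-192 (index 2). Requests above 8192 bytes fall outside the table. In the kernel, those go to the page allocator instead of a kmalloc cache.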

![malloc() -> brk() implementation in Linux Kernel

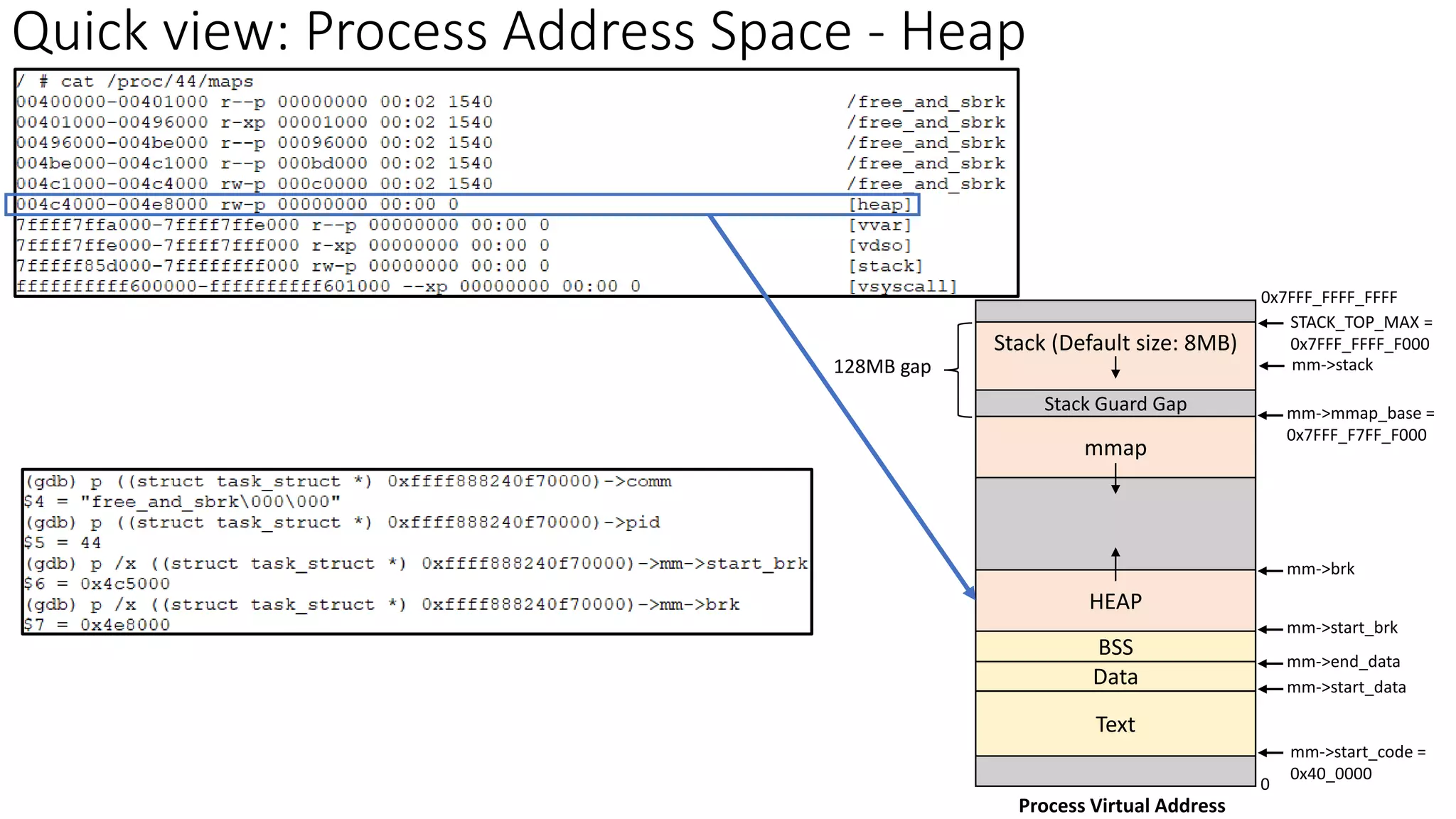

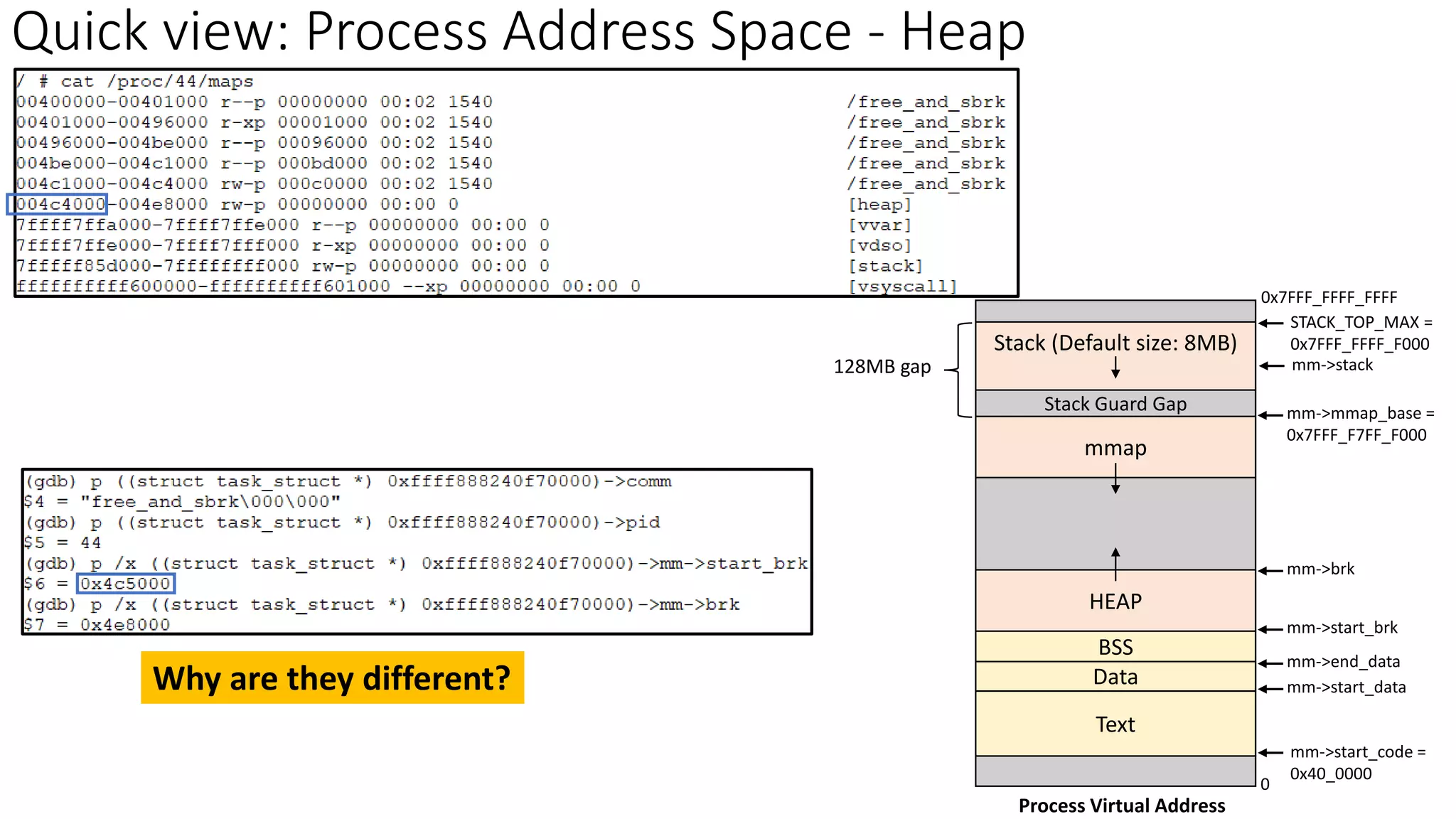

• Quick view: Process Address Space – Heap

• sys_brk – Call path

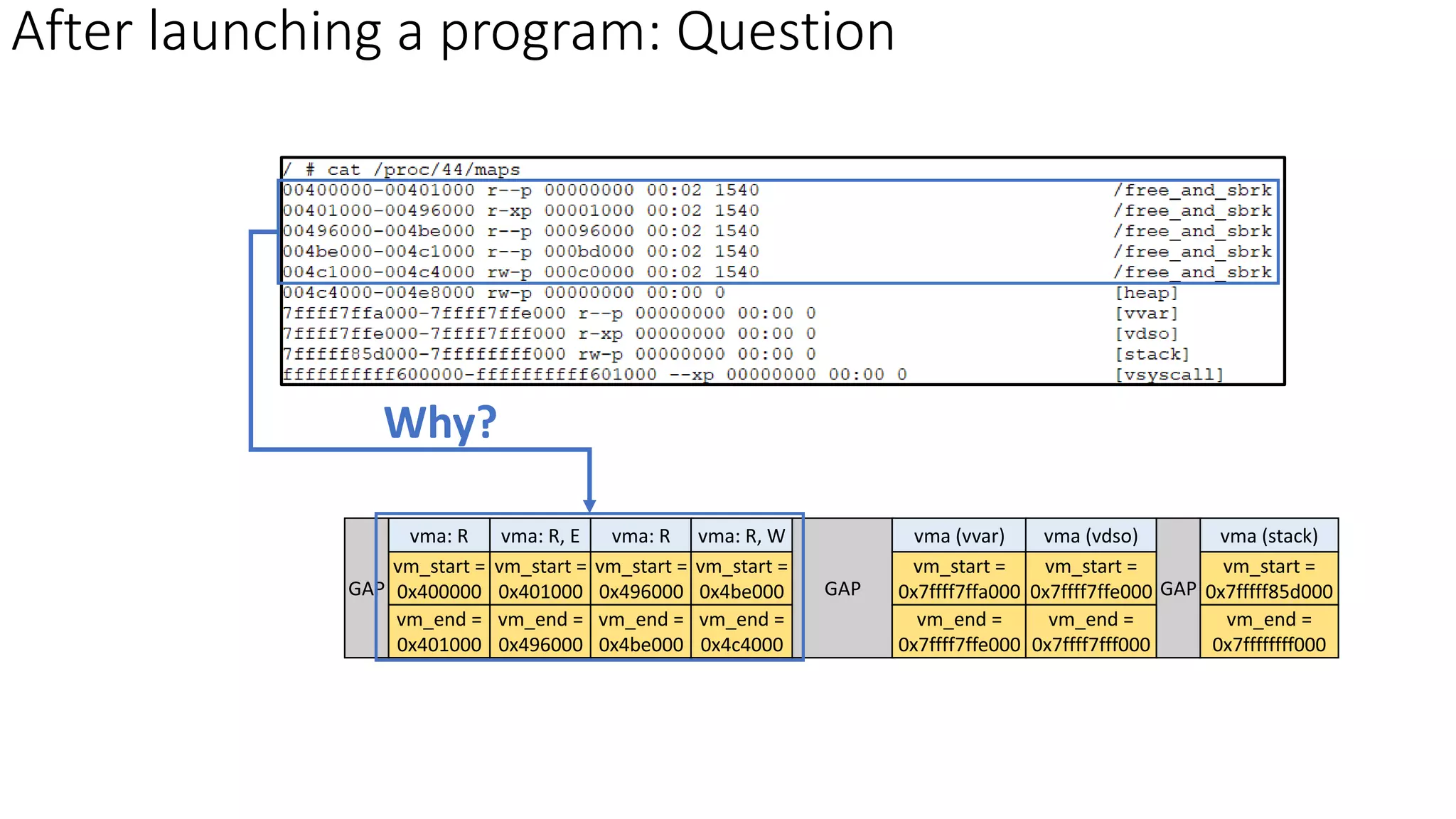

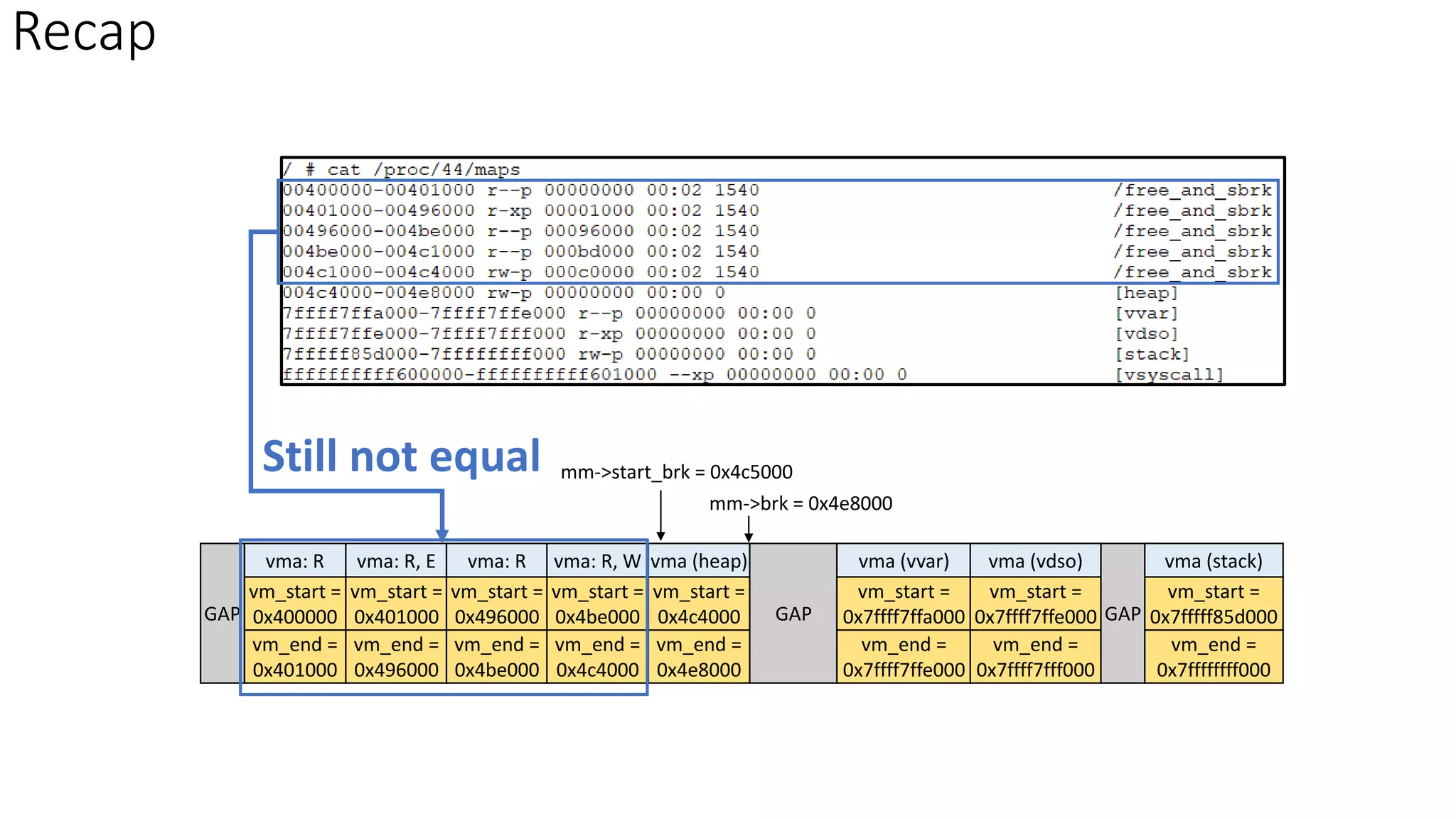

• [From scratch] Launch a program: load_elf_binary() in Linux kernel

o VMA change observation

o Heap (brk or program break) configuration

• [Program Launch] strace observation: heap – brk()

• strace observation: allocate space via malloc()

o If the heap space is used up, how much memory does malloc() request when it calls brk()?

• glibc: malloc implementation for memory request size](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-5-2048.jpg)

![sys_brk – Call path

sys_brk(brk)

• return mm->brk if brk < mm->start_brk

• newbrk = PAGE_ALIGN(brk), oldbrk = PAGE_ALIGN(mm->brk)

• shrink brk if brk <= mm->brk: __do_munmap

• otherwise expand via do_brk_flags

o can expand the existing anonymous mapping: vma_merge

o cannot expand the existing anonymous mapping: vm_area_alloc

• mm->brk = brk

• mm_populate if (mm->def_flags & VM_LOCKED) != 0

o mm_populate -> __mm_populate -> populate_vma_page_range -> __get_user_pages

o __get_user_pages -> follow_page_mask: find if the page is populated

o the page is NOT populated yet: faultin_page -> handle_mm_fault

• return brk (the new program break)

[By default] Heap (or brk) space is demand-paged](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-8-2048.jpg)
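The decision logic of the sys_brk call path above can be replayed in user space. The sketch below is a simplified model, not kernel code. struct demo_mm and the DEMO_/BRK_ names are hypothetical, and 4 KB pages are assumed. It leaves out the RLIMIT_DATA check, the collision check against the next VMA, and all locking.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define DEMO_PAGE_SIZE     0x1000UL
#define DEMO_PAGE_ALIGN(x) (((x) + DEMO_PAGE_SIZE - 1) & ~(DEMO_PAGE_SIZE - 1))

/* Hypothetical stand-in for the mm_struct fields sys_brk() looks at. */
struct demo_mm {
    uintptr_t start_brk;
    uintptr_t brk;
    bool vm_locked;   /* models (mm->def_flags & VM_LOCKED) != 0 */
};

enum demo_brk_action { BRK_REJECT, BRK_SAME_PAGE, BRK_SHRINK, BRK_EXPAND };

struct demo_brk_result {
    enum demo_brk_action action;
    uintptr_t start, len;   /* range unmapped (shrink) or mapped (expand) */
    bool populate;          /* true: mm_populate() prefaults the new pages */
    uintptr_t ret;          /* value returned to user space */
};

struct demo_brk_result demo_sys_brk(struct demo_mm *mm, uintptr_t brk)
{
    struct demo_brk_result r = { BRK_REJECT, 0, 0, false, mm->brk };

    if (brk < mm->start_brk)            /* also how brk(NULL) queries the break */
        return r;

    uintptr_t newbrk = DEMO_PAGE_ALIGN(brk);
    uintptr_t oldbrk = DEMO_PAGE_ALIGN(mm->brk);

    if (newbrk == oldbrk) {
        r.action = BRK_SAME_PAGE;       /* no VMA change; only mm->brk moves */
    } else if (brk <= mm->brk) {
        r.action = BRK_SHRINK;          /* __do_munmap(newbrk, oldbrk - newbrk) */
        r.start = newbrk;
        r.len = oldbrk - newbrk;
    } else {
        r.action = BRK_EXPAND;          /* do_brk_flags(oldbrk, newbrk - oldbrk) */
        r.start = oldbrk;
        r.len = newbrk - oldbrk;
        r.populate = mm->vm_locked;     /* otherwise pages arrive on page faults */
    }
    mm->brk = brk;                      /* stored unaligned */
    r.ret = brk;
    return r;
}
```

Start from start_brk = brk = 0x4c5000. Calling brk(0x4c61c0) maps [0x4c5000, 0x4c7000), while mm->brk keeps the unaligned 0x4c61c0. A following brk(0x4e8000) adds 0x21000 bytes starting at 0x4c7000. These values line up with the strace slides that follow.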

![[From scratch] Launch a program: load_elf_binary() in Linux kernel

`# ./free_and_sbrk 1 1` -> load_elf_binary()

vma: R | vm_start = 0x400000 | vm_end = 0x401000

vma: R, E | vm_start = 0x401000 | vm_end = 0x496000

vma: R | vm_start = 0x496000 | vm_end = 0x4be000

vma: R, W | vm_start = 0x4be000 | vm_end = 0x4c4000

GAP

vma (vvar) | vm_start = 0x7ffff7ffa000 | vm_end = 0x7ffff7ffe000

vma (vdso) | vm_start = 0x7ffff7ffe000 | vm_end = 0x7ffff7fff000

vma (stack) | vm_start = 0x7fffff85d000 | vm_end = 0x7ffffffff000](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-9-2048.jpg)

![[From scratch] Launch a program: load_elf_binary() – Heap Configuration

`# ./free_and_sbrk 1 1`

vma: R | vm_start = 0x400000 | vm_end = 0x401000

vma: R, E | vm_start = 0x401000 | vm_end = 0x496000

vma: R | vm_start = 0x496000 | vm_end = 0x4be000

vma: R, W | vm_start = 0x4be000 | vm_end = 0x4c4000

GAP

vma (vvar) | vm_start = 0x7ffff7ffa000 | vm_end = 0x7ffff7ffe000

vma (vdso) | vm_start = 0x7ffff7ffe000 | vm_end = 0x7ffff7fff000

vma (stack) | vm_start = 0x7fffff85d000 | vm_end = 0x7ffffffff000

load_elf_binary -> set_brk -> vm_brk_flags -> do_brk_flags

• can expand the existing anonymous mapping: vma_merge

• cannot expand the existing anonymous mapping: vm_area_alloc

mm->{start_brk, brk} = end](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-11-2048.jpg)

![[From scratch] Launch a program: load_elf_binary() – Heap Configuration

`# ./free_and_sbrk 1 1`

vma: R | vm_start = 0x400000 | vm_end = 0x401000

vma: R, E | vm_start = 0x401000 | vm_end = 0x496000

vma: R | vm_start = 0x496000 | vm_end = 0x4be000

vma: R, W | vm_start = 0x4be000 | vm_end = 0x4c4000

vma (heap) | vm_start = 0x4c4000 | vm_end = 0x4c5000

GAP

vma (vvar) | vm_start = 0x7ffff7ffa000 | vm_end = 0x7ffff7ffe000

vma (vdso) | vm_start = 0x7ffff7ffe000 | vm_end = 0x7ffff7fff000

vma (stack) | vm_start = 0x7fffff85d000 | vm_end = 0x7ffffffff000

load_elf_binary -> set_brk -> vm_brk_flags -> do_brk_flags

• can expand the existing anonymous mapping: vma_merge

• cannot expand the existing anonymous mapping: vm_area_alloc

mm->{start_brk, brk} = end](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-12-2048.jpg)

![[From scratch] Launch a program: load_elf_binary() – Heap Configuration

vma: R | vm_start = 0x400000 | vm_end = 0x401000

vma: R, E | vm_start = 0x401000 | vm_end = 0x496000

vma: R | vm_start = 0x496000 | vm_end = 0x4be000

vma: R, W | vm_start = 0x4be000 | vm_end = 0x4c4000

vma (heap) | vm_start = 0x4c4000 | vm_end = 0x4c5000

GAP

vma (vvar) | vm_start = 0x7ffff7ffa000 | vm_end = 0x7ffff7ffe000

vma (vdso) | vm_start = 0x7ffff7ffe000 | vm_end = 0x7ffff7fff000

vma (stack) | vm_start = 0x7fffff85d000 | vm_end = 0x7ffffffff000

load_elf_binary -> set_brk -> vm_brk_flags -> do_brk_flags

• can expand the existing anonymous mapping: vma_merge

• cannot expand the existing anonymous mapping: vm_area_alloc

mm->{start_brk, brk} = end

mm->brk = mm->start_brk = 0x4c5000](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-13-2048.jpg)

![[From scratch] Launch a program: load_elf_binary() – Heap Configuration

vma: R | vm_start = 0x400000 | vm_end = 0x401000

vma: R, E | vm_start = 0x401000 | vm_end = 0x496000

vma: R | vm_start = 0x496000 | vm_end = 0x4be000

vma: R, W | vm_start = 0x4be000 | vm_end = 0x4c4000

vma (heap) | vm_start = 0x4c4000 | vm_end = 0x4c5000

GAP

vma (vvar) | vm_start = 0x7ffff7ffa000 | vm_end = 0x7ffff7ffe000

vma (vdso) | vm_start = 0x7ffff7ffe000 | vm_end = 0x7ffff7fff000

vma (stack) | vm_start = 0x7fffff85d000 | vm_end = 0x7ffffffff000

load_elf_binary -> set_brk -> vm_brk_flags -> do_brk_flags

• can expand the existing anonymous mapping: vma_merge

• cannot expand the existing anonymous mapping: vm_area_alloc

mm->{start_brk, brk} = end

mm->brk = mm->start_brk = 0x4c5000

Why?](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-14-2048.jpg)

![[From scratch] Launch a program: load_elf_binary() – Heap Configuration

vma: R | vm_start = 0x400000 | vm_end = 0x401000

vma: R, E | vm_start = 0x401000 | vm_end = 0x496000

vma: R | vm_start = 0x496000 | vm_end = 0x4be000

vma: R, W | vm_start = 0x4be000 | vm_end = 0x4c4000

vma (heap) | vm_start = 0x4c4000 | vm_end = 0x4c5000

GAP

vma (vvar) | vm_start = 0x7ffff7ffa000 | vm_end = 0x7ffff7ffe000

vma (vdso) | vm_start = 0x7ffff7ffe000 | vm_end = 0x7ffff7fff000

vma (stack) | vm_start = 0x7fffff85d000 | vm_end = 0x7ffffffff000

load_elf_binary -> set_brk -> vm_brk_flags -> do_brk_flags

• can expand the existing anonymous mapping: vma_merge

• cannot expand the existing anonymous mapping: vm_area_alloc

mm->{start_brk, brk} = end

mm->brk = mm->start_brk = 0x4c5000

elf_bss, elf_brk](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-15-2048.jpg)

![[From scratch] Launch a program: load_elf_binary() – Heap Configuration

vma: R | vm_start = 0x400000 | vm_end = 0x401000

vma: R, E | vm_start = 0x401000 | vm_end = 0x496000

vma: R | vm_start = 0x496000 | vm_end = 0x4be000

vma: R, W | vm_start = 0x4be000 | vm_end = 0x4c4000

vma (heap) | vm_start = 0x4c4000 | vm_end = 0x4c5000

GAP

vma (vvar) | vm_start = 0x7ffff7ffa000 | vm_end = 0x7ffff7ffe000

vma (vdso) | vm_start = 0x7ffff7ffe000 | vm_end = 0x7ffff7fff000

vma (stack) | vm_start = 0x7fffff85d000 | vm_end = 0x7ffffffff000

load_elf_binary -> set_brk -> vm_brk_flags -> do_brk_flags

• can expand the existing anonymous mapping: vma_merge

• cannot expand the existing anonymous mapping: vm_area_alloc

mm->{start_brk, brk} = end

mm->brk = mm->start_brk = 0x4c5000

elf_bss, elf_brk

range(elf_bss, elf_brk): bss space](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-16-2048.jpg)
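The heap configuration step above can be sketched as a small function modeled on set_brk() in fs/binfmt_elf.c. This is an illustrative model, not the kernel function. struct demo_mm, struct demo_range and demo_set_brk() are hypothetical, 4 KB pages are assumed, and the brk randomization that load_elf_binary() applies when randomize_va_space is 2 is left out. The test inputs elf_bss = 0x4c3e30 and elf_brk = 0x4c4ab8 are hypothetical values. They were chosen to land in the same pages as the slides' heap vma [0x4c4000, 0x4c5000) and start_brk = 0x4c5000.

```c
#include <assert.h>
#include <stdint.h>

#define DEMO_PAGE_SIZE     0x1000UL
#define DEMO_PAGE_ALIGN(x) (((x) + DEMO_PAGE_SIZE - 1) & ~(DEMO_PAGE_SIZE - 1))

/* Hypothetical stand-ins for mm_struct and for the range that set_brk()
 * passes to vm_brk_flags(). len == 0 means nothing is mapped. */
struct demo_mm { uintptr_t start_brk, brk; };
struct demo_range { uintptr_t start, len; };

/* Page-align the bss bounds, map an anonymous region for the part of the
 * bss that lies in pages past the file-backed data, and start the program
 * break at the aligned end. */
struct demo_range demo_set_brk(struct demo_mm *mm, uintptr_t elf_bss, uintptr_t elf_brk)
{
    struct demo_range r = { 0, 0 };
    uintptr_t start = DEMO_PAGE_ALIGN(elf_bss);
    uintptr_t end = DEMO_PAGE_ALIGN(elf_brk);

    assert(elf_bss <= elf_brk);
    if (end > start) {
        r.start = start;          /* vm_brk_flags(start, end - start, ...) */
        r.len = end - start;
    }
    mm->start_brk = mm->brk = end;
    return r;
}
```

This answers the "Why?" on the slides: the anonymous vma begins at 0x4c4000 because it holds the tail of the bss. The heap proper (mm->start_brk) only begins at the next page boundary after elf_brk.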

![[Program Launch] strace observation: heap – brk()

vma: R | vm_start = 0x400000 | vm_end = 0x401000

vma: R, E | vm_start = 0x401000 | vm_end = 0x496000

vma: R | vm_start = 0x496000 | vm_end = 0x4be000

vma: R, W | vm_start = 0x4be000 | vm_end = 0x4c4000

vma (heap) | vm_start = 0x4c4000 | vm_end = 0x4c7000

GAP

vma (vvar) | vm_start = 0x7ffff7ffa000 | vm_end = 0x7ffff7ffe000

vma (vdso) | vm_start = 0x7ffff7ffe000 | vm_end = 0x7ffff7fff000

vma (stack) | vm_start = 0x7fffff85d000 | vm_end = 0x7ffffffff000

mm->brk = 0x4c61c0

mm->start_brk = 0x4c5000

Demand paging: a physical page is allocated when a page fault occurs

sys_brk:

• return mm->brk if brk < mm->start_brk

• newbrk = PAGE_ALIGN(brk), oldbrk = PAGE_ALIGN(mm->brk)

• shrink brk if brk <= mm->brk: __do_munmap

• otherwise do_brk_flags: vma_merge if it can expand the existing anonymous mapping, vm_area_alloc if it cannot

• mm->brk = brk

• mm_populate if (mm->def_flags & VM_LOCKED) != 0](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-17-2048.jpg)

![[Program Launch] strace observation: heap – brk()

vma: R | vm_start = 0x400000 | vm_end = 0x401000

vma: R, E | vm_start = 0x401000 | vm_end = 0x496000

vma: R | vm_start = 0x496000 | vm_end = 0x4be000

vma: R, W | vm_start = 0x4be000 | vm_end = 0x4c4000

vma (heap) | vm_start = 0x4c4000 | vm_end = 0x4c7000

GAP

vma (vvar) | vm_start = 0x7ffff7ffa000 | vm_end = 0x7ffff7ffe000

vma (vdso) | vm_start = 0x7ffff7ffe000 | vm_end = 0x7ffff7fff000

vma (stack) | vm_start = 0x7fffff85d000 | vm_end = 0x7ffffffff000

mm->brk = 0x4c61c0

mm->start_brk = 0x4c5000

Demand paging: a physical page is allocated when a page fault occurs](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-18-2048.jpg)

![[Program Launch] strace observation: heap – brk()

vma: R | vm_start = 0x400000 | vm_end = 0x401000

vma: R, E | vm_start = 0x401000 | vm_end = 0x496000

vma: R | vm_start = 0x496000 | vm_end = 0x4be000

vma: R, W | vm_start = 0x4be000 | vm_end = 0x4c4000

vma (heap) | vm_start = 0x4c4000 | vm_end = 0x4e8000

GAP

vma (vvar) | vm_start = 0x7ffff7ffa000 | vm_end = 0x7ffff7ffe000

vma (vdso) | vm_start = 0x7ffff7ffe000 | vm_end = 0x7ffff7fff000

vma (stack) | vm_start = 0x7fffff85d000 | vm_end = 0x7ffffffff000

mm->brk = 0x4e8000

mm->start_brk = 0x4c5000

Demand paging: a physical page is allocated when a page fault occurs

sys_brk:

• return mm->brk if brk < mm->start_brk

• newbrk = PAGE_ALIGN(brk), oldbrk = PAGE_ALIGN(mm->brk)

• shrink brk if brk <= mm->brk: __do_munmap

• otherwise do_brk_flags: vma_merge if it can expand the existing anonymous mapping, vm_area_alloc if it cannot

• mm->brk = brk

• mm_populate if (mm->def_flags & VM_LOCKED) != 0](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-19-2048.jpg)

![[Program Launch] strace observation: mprotect()

vma: R | vm_start = 0x400000 | vm_end = 0x401000

vma: R, E | vm_start = 0x401000 | vm_end = 0x496000

vma: R | vm_start = 0x496000 | vm_end = 0x4be000

vma: R, W | vm_start = 0x4be000 | vm_end = 0x4c4000

vma (heap) | vm_start = 0x4c4000 | vm_end = 0x4e8000

GAP

vma (vvar) | vm_start = 0x7ffff7ffa000 | vm_end = 0x7ffff7ffe000

vma (vdso) | vm_start = 0x7ffff7ffe000 | vm_end = 0x7ffff7fff000

vma (stack) | vm_start = 0x7fffff85d000 | vm_end = 0x7ffffffff000

mm->brk = 0x4e8000

mm->start_brk = 0x4c5000

mprotect -> split_vma, vma_merge

Split this vma: vma: R, W | vm_start = 0x4be000 | vm_end = 0x4c4000](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-21-2048.jpg)

![[Program Launch] strace observation: mprotect()

vma: R | vm_start = 0x400000 | vm_end = 0x401000

vma: R, E | vm_start = 0x401000 | vm_end = 0x496000

vma: R | vm_start = 0x496000 | vm_end = 0x4be000

vma: R, W | vm_start = 0x4be000 | vm_end = 0x4c4000

vma (heap) | vm_start = 0x4c4000 | vm_end = 0x4e8000

GAP

vma (vvar) | vm_start = 0x7ffff7ffa000 | vm_end = 0x7ffff7ffe000

vma (vdso) | vm_start = 0x7ffff7ffe000 | vm_end = 0x7ffff7fff000

vma (stack) | vm_start = 0x7fffff85d000 | vm_end = 0x7ffffffff000

mm->brk = 0x4e8000

mm->start_brk = 0x4c5000

R/W permission](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-22-2048.jpg)

![[Program Launch] strace observation: mprotect()

vma: R | vm_start = 0x400000 | vm_end = 0x401000

vma: R, E | vm_start = 0x401000 | vm_end = 0x496000

vma: R | vm_start = 0x496000 | vm_end = 0x4be000

vma: R | vm_start = 0x4be000 | vm_end = 0x4c1000

vma: R, W | vm_start = 0x4c1000 | vm_end = 0x4c4000

vma (heap) | vm_start = 0x4c4000 | vm_end = 0x4e8000

GAP

vma (vvar) | vm_start = 0x7ffff7ffa000 | vm_end = 0x7ffff7ffe000

vma (vdso) | vm_start = 0x7ffff7ffe000 | vm_end = 0x7ffff7fff000

vma (stack) | vm_start = 0x7fffff85d000 | vm_end = 0x7ffffffff000

mm->brk = 0x4e8000

mm->start_brk = 0x4c5000

mprotect -> split_vma, vma_merge

vma split](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-23-2048.jpg)

![[Program Launch] strace observation: mprotect()

vma: R | vm_start = 0x400000 | vm_end = 0x401000

vma: R, E | vm_start = 0x401000 | vm_end = 0x496000

vma: R | vm_start = 0x496000 | vm_end = 0x4be000

vma: R | vm_start = 0x4be000 | vm_end = 0x4c1000

vma: R, W | vm_start = 0x4c1000 | vm_end = 0x4c4000 (match)

vma (heap) | vm_start = 0x4c4000 | vm_end = 0x4e8000

GAP

vma (vvar) | vm_start = 0x7ffff7ffa000 | vm_end = 0x7ffff7ffe000

vma (vdso) | vm_start = 0x7ffff7ffe000 | vm_end = 0x7ffff7fff000

vma (stack) | vm_start = 0x7fffff85d000 | vm_end = 0x7ffffffff000

mm->brk = 0x4e8000

mm->start_brk = 0x4c5000

[Program Launch] strace observation: mprotect()](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-24-2048.jpg)
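The vma split shown in the mprotect() slides can be illustrated with a flattened model of split_vma(). Applying a new protection to a sub-range cuts the original vma into up to three pieces. This is a sketch under simplifying assumptions, not kernel code. struct demo_vma, the DEMO_PROT_ bits and demo_mprotect_split() are hypothetical, and the follow-up vma_merge() with neighbouring vmas is not modeled.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical permission bits and a flattened vma: [start, end). */
#define DEMO_PROT_R 1u
#define DEMO_PROT_W 2u
#define DEMO_PROT_X 4u

struct demo_vma { uintptr_t start, end; unsigned prot; };

/* Apply new_prot to [start, end) inside *vma. Writes the resulting pieces
 * (at most three) to out[] in address order and returns how many there are. */
int demo_mprotect_split(const struct demo_vma *vma, uintptr_t start, uintptr_t end,
                        unsigned new_prot, struct demo_vma out[3])
{
    int n = 0;

    assert(vma->start <= start && start < end && end <= vma->end);
    if (vma->start < start)                    /* head keeps the old protection */
        out[n++] = (struct demo_vma){ vma->start, start, vma->prot };
    out[n++] = (struct demo_vma){ start, end, new_prot };
    if (end < vma->end)                        /* tail keeps the old protection */
        out[n++] = (struct demo_vma){ end, vma->end, vma->prot };
    return n;
}
```

Making [0x4be000, 0x4c1000) read-only inside the R, W vma [0x4be000, 0x4c4000) produces two pieces. The first is an R vma [0x4be000, 0x4c1000) and the second is an R, W vma [0x4c1000, 0x4c4000), which matches the layout after the split.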

![strace observation: allocate space via malloc() #1

[Init stage] 0x4e8000 - 0x4c7000 = 0x21000 (132KB: 33 pages)


[glibc] malloc](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-25-2048.jpg)

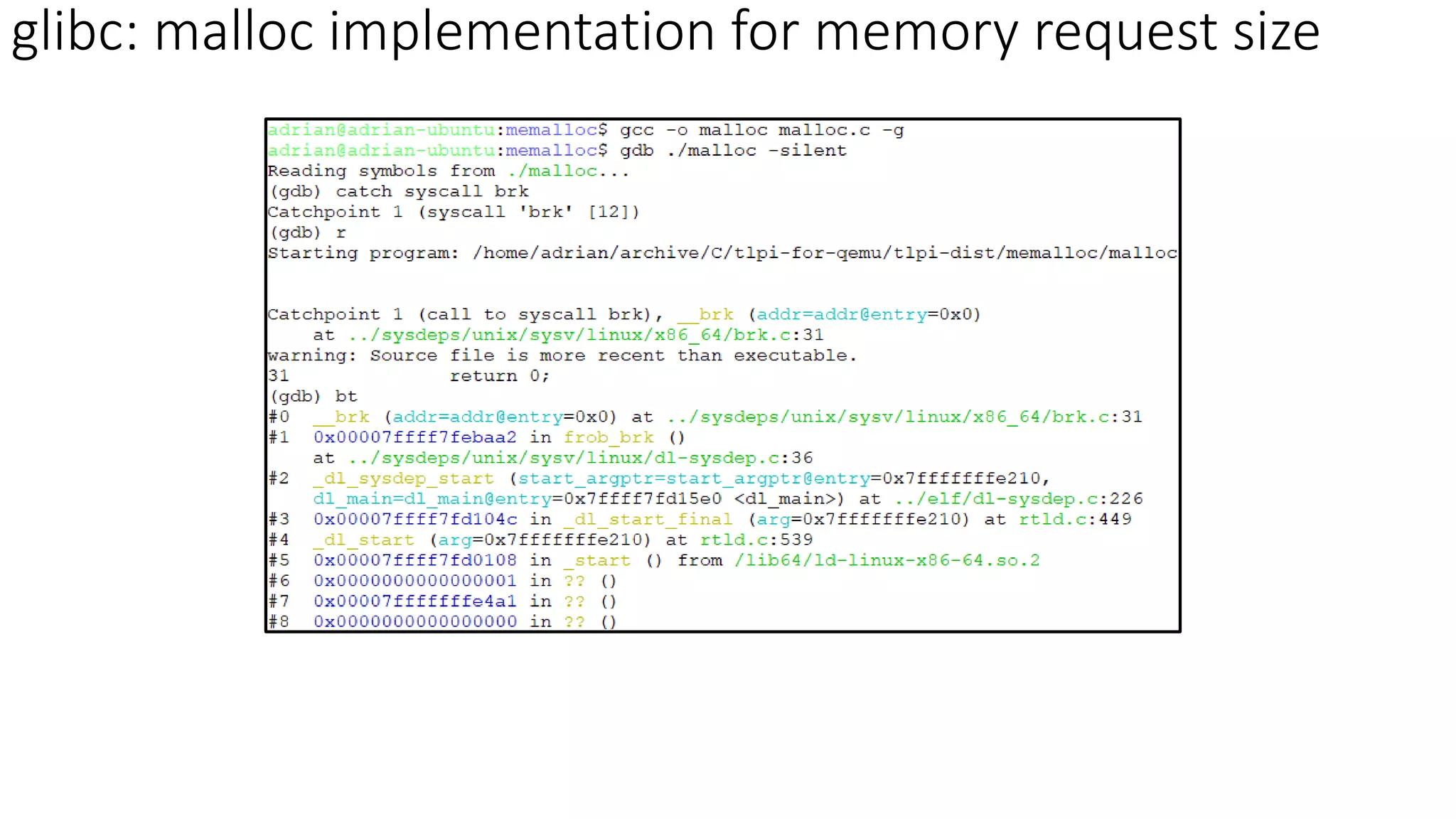

![strace observation: allocate space via malloc() #2

[Init stage] 0x21000 (132KB: 33 pages)


[glibc] malloc

Current program break is used up: allocate another 132KB

malloc.c

Heap space allocation from malloc(): allocate a memory chunk > 128KB via brk()](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-26-2048.jpg)

![Memory Allocation in Linux – brk() detail

Diagram: allocation layers (top to bottom)

User Space: calls brk or mmap

Kernel Space: vmalloc; Slab Allocator (kmalloc/kfree); Buddy System (alloc_page(s), __get_free_page(s))

Hardware


[glibc] malloc: check sysmalloc() for implementation

User application -> malloc (glibc: malloc implementation)

• Is the allocated heap space enough?

o Y: return an available address from the allocated heap space

o N: if size < 128KB, allocate a "memory chunk > 128KB" by calling brk()

• Kernel: VMA configuration & program break adjustment

• Page fault handler](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-27-2048.jpg)
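The flow above can be illustrated with a toy model: serve a request from the top of the heap if the space is large enough; otherwise grow the program break by the request plus a 128 KB pad. This is not glibc's allocator. It tracks only the top chunk, assumes the 64-bit MINSIZE of 32 bytes and the default M_TOP_PAD of 128 KB, and follows only the contiguous-heap case of sysmalloc(), where the leftover top space is subtracted before rounding up to whole pages. struct demo_heap and demo_malloc() are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

#define DEMO_PAGE    4096UL
#define DEMO_TOP_PAD (128UL * 1024)   /* default M_TOP_PAD (assumed) */
#define DEMO_MINSIZE 32UL             /* 64-bit minimum chunk size (assumed) */

/* Toy main arena: only the top chunk and the program break are tracked. */
struct demo_heap {
    size_t top_size;      /* bytes left in the top chunk */
    size_t heap_size;     /* total bytes obtained through brk() */
    unsigned brk_calls;   /* number of simulated brk() system calls */
};

/* Serve a chunk of nb bytes. When the top chunk is too small, grow the heap
 * once by (nb + top pad + MINSIZE - leftover top), rounded up to pages. */
void demo_malloc(struct demo_heap *h, size_t nb)
{
    if (h->top_size < nb + DEMO_MINSIZE) {
        size_t grow = nb + DEMO_TOP_PAD + DEMO_MINSIZE - h->top_size;
        grow = (grow + DEMO_PAGE - 1) & ~(DEMO_PAGE - 1);
        h->top_size += grow;
        h->heap_size += grow;
        h->brk_calls++;
    }
    assert(h->top_size >= nb + DEMO_MINSIZE);
    h->top_size -= nb;    /* split the request off the top chunk */
}
```

Starting from an empty heap, 100 requests for 1008-byte chunks cost one simulated brk() of 0x21000 bytes (33 pages). That is the same 132 KB step as in the strace slides. The padding is what keeps the number of brk() system calls low.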

![glibc: malloc implementation for memory request size

malloc.c (code excerpt with callouts 1-6)

Heap is expanded by 0x21000 (33 pages): 0x555555559000 -> 0x55555557a000

Detail Reference

• [glibc] malloc internals

o Concepts: chunks, arenas, heaps, and thread local cache (tcache)](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-30-2048.jpg)
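The chunk concept referenced above can be illustrated with glibc's size normalization. The sketch restates the request2size() and csize2tidx() macros from glibc's malloc.c as functions. It assumes 64-bit parameters (SIZE_SZ = 8, MALLOC_ALIGNMENT = 16, MINSIZE = 32, 64 tcache bins), and the demo_ names are hypothetical. It shows why a 1000-byte request occupies a 1008-byte chunk, and which tcache bin such a chunk would use once freed.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed 64-bit glibc parameters. */
#define DEMO_SIZE_SZ           8UL    /* size of the chunk size field */
#define DEMO_MALLOC_ALIGNMENT  16UL
#define DEMO_MALLOC_ALIGN_MASK (DEMO_MALLOC_ALIGNMENT - 1)
#define DEMO_MINSIZE           32UL
#define DEMO_TCACHE_MAX_BINS   64

/* request2size(): add room for the size field, round up to the alignment,
 * and never go below the minimum chunk size. */
size_t demo_request2size(size_t req)
{
    if (req + DEMO_SIZE_SZ + DEMO_MALLOC_ALIGN_MASK < DEMO_MINSIZE)
        return DEMO_MINSIZE;
    return (req + DEMO_SIZE_SZ + DEMO_MALLOC_ALIGN_MASK) & ~DEMO_MALLOC_ALIGN_MASK;
}

/* csize2tidx(): tcache bin for a chunk size, or -1 if it is too large
 * to be cached in the tcache. */
int demo_tcache_index(size_t chunk_size)
{
    assert(chunk_size >= DEMO_MINSIZE && chunk_size % DEMO_MALLOC_ALIGNMENT == 0);
    size_t idx = (chunk_size - DEMO_MINSIZE + DEMO_MALLOC_ALIGNMENT - 1) / DEMO_MALLOC_ALIGNMENT;
    return idx < DEMO_TCACHE_MAX_BINS ? (int)idx : -1;
}
```

Under these assumptions, malloc(24) and malloc(1) both use a 32-byte chunk (tcache bin 0). The largest tcache-eligible chunk is 1040 bytes (bin 63).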

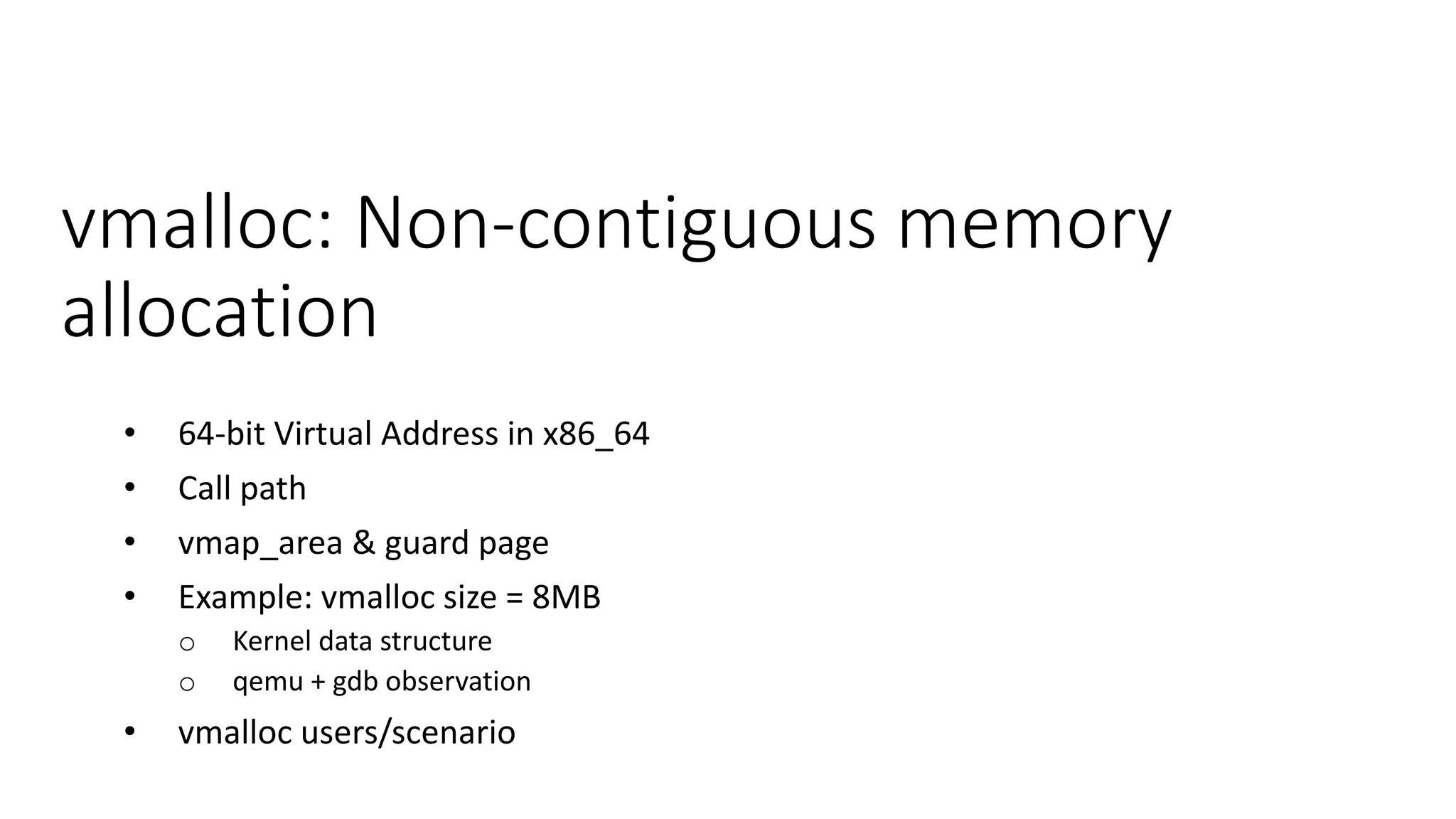

![64-bit Virtual Address in x86_64

User Space (128TB): 0 - 0x0000_7FFF_FFFF_FFFF

Empty Space (non-canonical addresses)

Kernel Space (128TB): 0xFFFF_8000_0000_0000 - 0xFFFF_FFFF_FFFF_FFFF

Kernel Virtual Address, default configuration (low to high):

• Guard hole (8TB), starting at 0xFFFF_8000_0000_0000

• LDT remap for PTI (0.5TB)

• Page frame direct mapping (64TB): page_offset_base = 0xFFFF_8880_0000_0000

• Unused hole (0.5TB)

• vmalloc/ioremap (32TB): vmalloc_base = VMALLOC_START = 0xFFFF_C900_0000_0000, VMALLOC_END = 0xFFFF_E8FF_FFFF_FFFF

• Unused hole (1TB)

• Virtual memory map (1TB, stores page frame descriptors): vmemmap_base = 0xFFFF_EA00_0000_0000

• …

• Kernel text mapping from physical address 0 (512MB, or 1GB with KASLR): __START_KERNEL_map = 0xFFFF_FFFF_8000_0000; kernel code [.text, .data…] starts at __START_KERNEL = 0xFFFF_FFFF_8100_0000

• Modules (1.5GB, or 1GB with KASLR): MODULES_VADDR

• Fix-mapped address space (expanded to 4MB: 05ab1d8a4b36): FIXADDR_START up to FIXADDR_TOP = 0xFFFF_FFFF_FF7F_F000

• Unused hole (2MB)

* page_offset_base, vmalloc_base and vmemmap_base can be dynamically configured by KASLR (Kernel Address Space Layout Randomization, "arch/x86/mm/kaslr.c")

Reference: Documentation/x86/x86_64/mm.rst](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-32-2048.jpg)
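The layout above can be turned into a small address classifier. The sketch assumes 4-level paging and the default (non-KASLR) bases listed on the slide. With KASLR, page_offset_base, vmalloc_base and vmemmap_base move, so the constants would no longer hold. The DEMO_ names and demo_classify() are hypothetical, and only the regions with explicit bases on the slide are distinguished.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Default (non-KASLR) bases from the slide, 4-level paging assumed. */
#define DEMO_TB               (1ULL << 40)
#define DEMO_USER_END         0x00007fffffffffffULL
#define DEMO_PAGE_OFFSET_BASE 0xffff888000000000ULL   /* direct map, 64 TB */
#define DEMO_VMALLOC_BASE     0xffffc90000000000ULL   /* vmalloc/ioremap, 32 TB */
#define DEMO_VMEMMAP_BASE     0xffffea0000000000ULL   /* page descriptors, 1 TB */
#define DEMO_START_KERNEL_MAP 0xffffffff80000000ULL   /* kernel text mapping */
#define DEMO_KERNEL_TEXT_SIZE (512ULL << 20)          /* 1 GB with KASLR */

enum demo_region { REGION_USER, REGION_NONCANONICAL, REGION_DIRECT_MAP,
                   REGION_VMALLOC, REGION_VMEMMAP, REGION_KERNEL_TEXT, REGION_OTHER };

/* With 48-bit addresses, bits 63..47 must all be equal (sign extension). */
bool demo_is_canonical(uint64_t va)
{
    uint64_t top = va >> 47;
    return top == 0 || top == 0x1ffff;
}

enum demo_region demo_classify(uint64_t va)
{
    if (!demo_is_canonical(va))
        return REGION_NONCANONICAL;
    if (va <= DEMO_USER_END)
        return REGION_USER;
    if (va >= DEMO_PAGE_OFFSET_BASE && va < DEMO_PAGE_OFFSET_BASE + 64 * DEMO_TB)
        return REGION_DIRECT_MAP;
    if (va >= DEMO_VMALLOC_BASE && va < DEMO_VMALLOC_BASE + 32 * DEMO_TB)
        return REGION_VMALLOC;
    if (va >= DEMO_VMEMMAP_BASE && va < DEMO_VMEMMAP_BASE + DEMO_TB)
        return REGION_VMEMMAP;
    if (va >= DEMO_START_KERNEL_MAP && va < DEMO_START_KERNEL_MAP + DEMO_KERNEL_TEXT_SIZE)
        return REGION_KERNEL_TEXT;
    return REGION_OTHER;   /* holes, cpu_entry_area, modules, fixmap, ... */
}
```

As a consistency check, vmalloc_base + 32TB - 1 equals the slide's VMALLOC_END (0xFFFF_E8FF_FFFF_FFFF). The vmalloc address used in the 8MB example (0xffffc90001a4d000) classifies as vmalloc.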

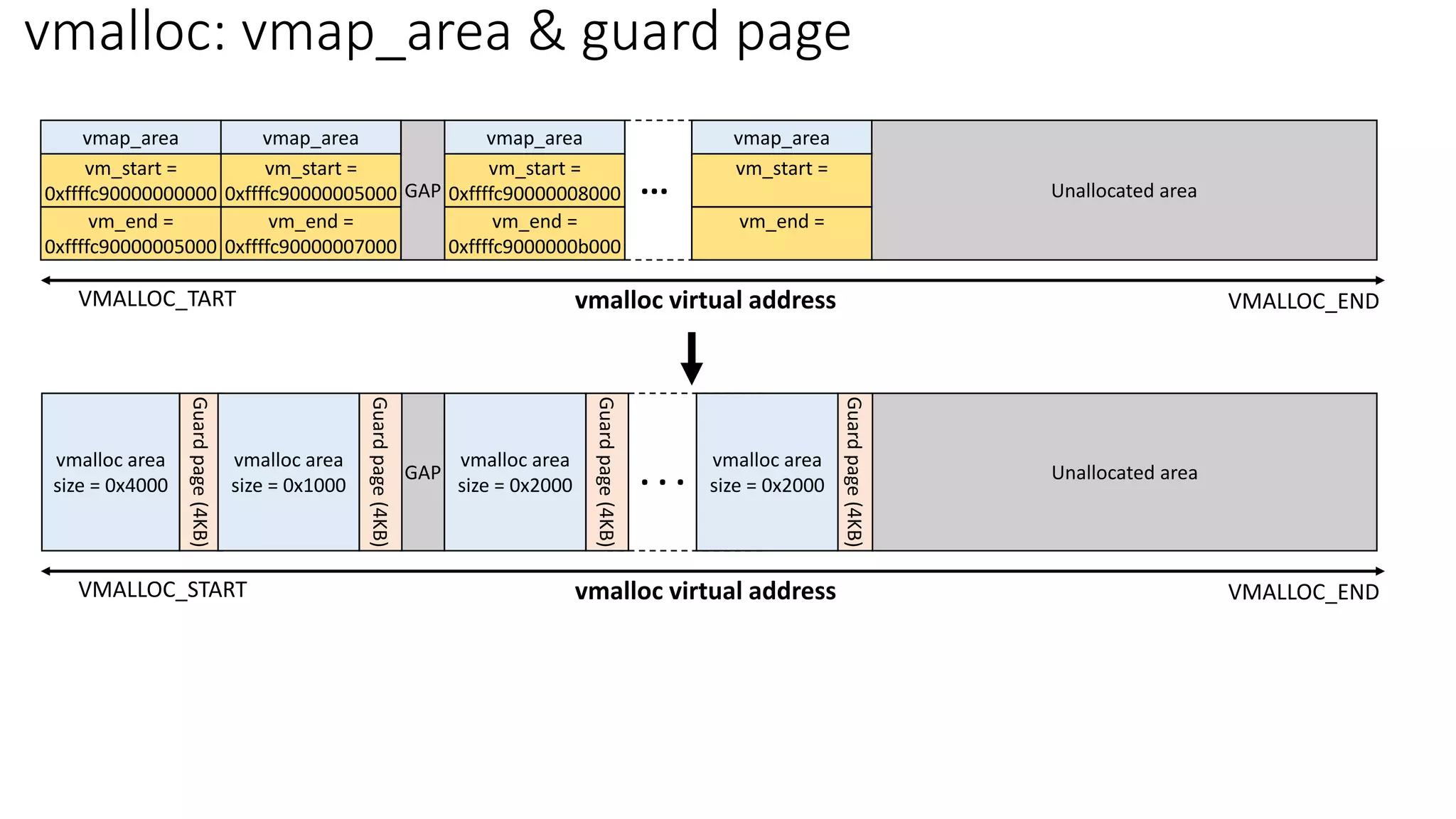

![vmalloc – call path

vmalloc -> __vmalloc_node -> __vmalloc_node_range (range: VMALLOC_START - VMALLOC_END)

• __get_vm_area_node

o kzalloc_node: allocate a vm_struct from kmalloc (slub allocator)

o alloc_vmap_area: 1. allocate a vmap_area struct from kmem_cache (slub allocator); 2. get a virtual address from the vmalloc RB-tree (vmap_area RB-tree)

o setup_vmalloc_vm

• __vmalloc_area_node

o Memory allocation for storing pointers of page descriptors: area->pages[]

o for (i = 0; i < area->nr_pages; i++) { page = alloc_page(gfp_mask); area->pages[i] = page; }

o map_kernel_range: page table population

Page table is populated immediately upon the request: No page fault](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-33-2048.jpg)
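The core idea of __vmalloc_area_node() can be illustrated with a user-space analogy. It allocates pages one at a time, records them in order in a pages[] array, and then lets a translation step make them look contiguous. This is not kernel code. malloc() stands in for alloc_page(), so the "pages" need not be adjacent. The demo_vaddr() helper does in software what the page table populated by map_kernel_range does in hardware. All demo_ names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

#define DEMO_PAGE_SIZE 4096UL

/* Toy stand-in for a vm_struct: an ordered array of pointers to separately
 * allocated "pages" (the real one stores struct page pointers). */
struct demo_vm_area {
    unsigned char **pages;
    size_t nr_pages;
};

void demo_vfree(struct demo_vm_area *area)
{
    for (size_t i = 0; area->pages != NULL && i < area->nr_pages; i++)
        free(area->pages[i]);      /* free(NULL) is a no-op */
    free(area->pages);
    area->pages = NULL;
    area->nr_pages = 0;
}

/* First the pages[] array, then one page at a time. The pages are not
 * guaranteed to be adjacent. Returns 0 on success, -1 on failure. */
int demo_vmalloc(struct demo_vm_area *area, size_t size)
{
    area->nr_pages = (size + DEMO_PAGE_SIZE - 1) / DEMO_PAGE_SIZE;
    area->pages = calloc(area->nr_pages, sizeof(*area->pages));
    if (area->pages == NULL) {
        area->nr_pages = 0;
        return -1;
    }
    for (size_t i = 0; i < area->nr_pages; i++) {
        area->pages[i] = malloc(DEMO_PAGE_SIZE);
        if (area->pages[i] == NULL) {
            demo_vfree(area);
            return -1;
        }
    }
    return 0;
}

/* Software version of the page-table lookup for a vmalloc address: the
 * page number selects pages[], the remainder is the in-page offset. */
unsigned char *demo_vaddr(const struct demo_vm_area *area, size_t offset)
{
    assert(offset < area->nr_pages * DEMO_PAGE_SIZE);
    return area->pages[offset / DEMO_PAGE_SIZE] + offset % DEMO_PAGE_SIZE;
}
```

Every byte offset in [0, nr_pages * 4096) resolves to some page, even though the pages themselves may be scattered. Real vmalloc differs in one important way: because the kernel page table does this translation, callers get a plain pointer and ordinary array indexing.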

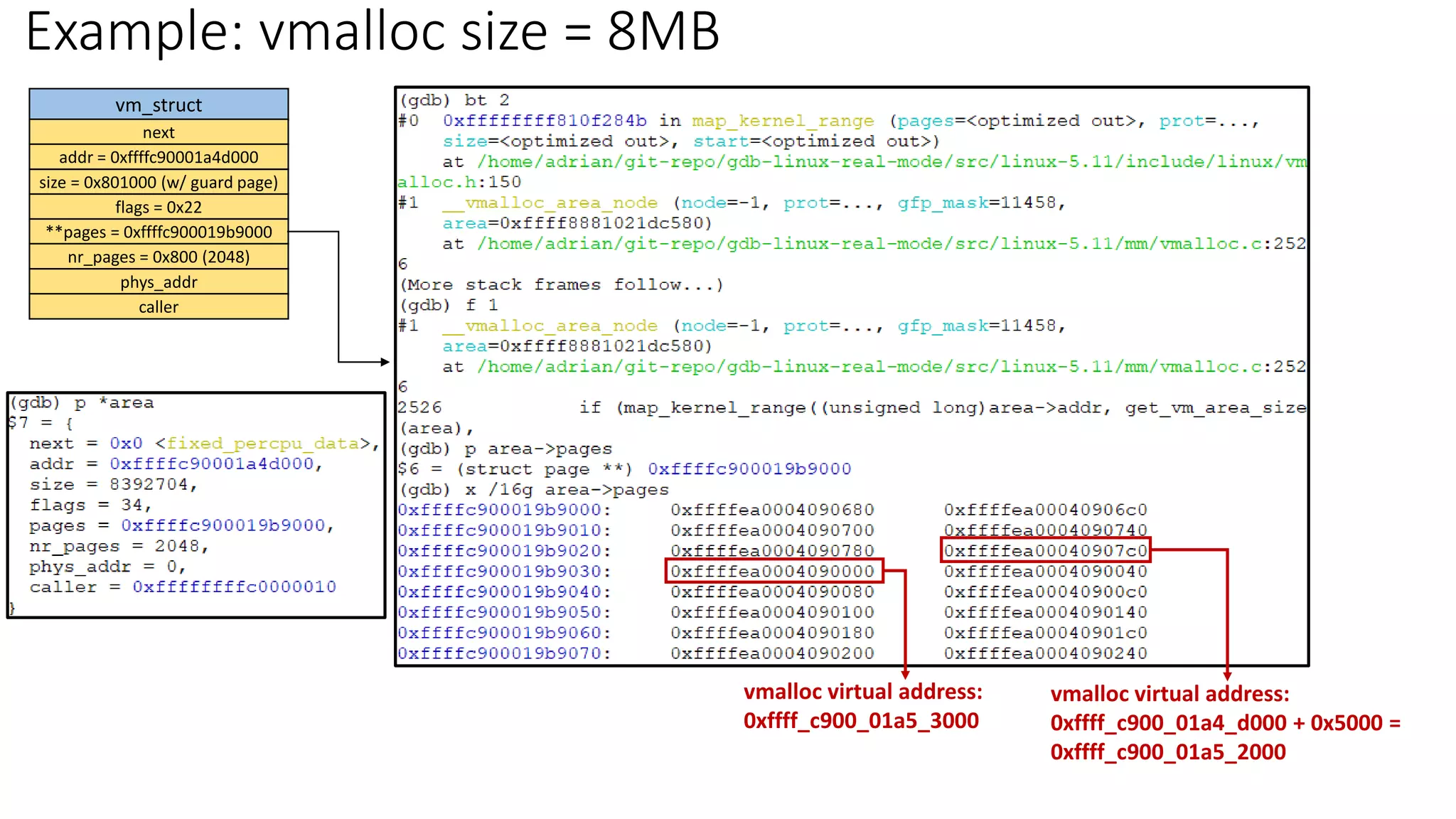

![Example: vmalloc size = 8MB: __vmalloc_area_node()

vmalloc-test.ko -> vmalloc: 8MB -> vmalloc subsystem -> buddy system: alloc_pages()

__vmalloc_node_range -> __get_vm_area_node -> __vmalloc_area_node

find_vmap_lowest_match(): Get a VA from RB-tree

free_vmap_area_root: init by vmalloc_init()

vmap_area_root; list_head: vmap_area_list -> vmap_area -> vmap_area -> vmap_area

vmap_area: va_start = 0xffffc90001a4d000 | va_end = 0xffffc9000224e000 | rb_node | list | union { subtree_max_size, vm }

vm_struct: next | addr = 0xffffc90001a4d000 | size = 0x801000 (w/ guard page) | flags = 0x22 | **pages = 0xffffc900019b9000 | nr_pages = 0x800 (2048) | phys_addr | caller

• Memory allocation for storing pointers of page descriptors: area->pages[]

• for (i = 0; i < area->nr_pages; i++) { page = alloc_page(gfp_mask); area->pages[i] = page; }

• map_kernel_range: page table population

area->pages[] -> Page Descriptor, Page Descriptor, ...

Memory allocation for page descriptor pointers

• size: 8MB/4KB * 8 = 16384 bytes

• Allocated from vmalloc (> 4KB) or kmalloc (<= 4KB)](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-38-2048.jpg)
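The arithmetic behind the 8MB example can be checked with a small helper. It assumes 4 KB pages, 8-byte struct page pointers (x86_64) and one guard page per area, as the size = 0x801000 field indicates. The kmalloc/vmalloc switch follows the rule stated on the slide. The struct and demo_layout_for() are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define DEMO_PAGE_SIZE 4096UL
#define DEMO_PTR_SIZE  8UL     /* sizeof(struct page *) on x86_64 (assumed) */

struct demo_vmalloc_layout {
    size_t nr_pages;          /* vm_struct.nr_pages */
    size_t area_size;         /* vm_struct.size, including one guard page */
    size_t array_size;        /* bytes for the pages[] pointer array */
    bool array_from_vmalloc;  /* > PAGE_SIZE: vmalloc, otherwise kmalloc */
};

struct demo_vmalloc_layout demo_layout_for(size_t size)
{
    struct demo_vmalloc_layout l;

    assert(size > 0);
    l.nr_pages = (size + DEMO_PAGE_SIZE - 1) / DEMO_PAGE_SIZE;
    l.area_size = (l.nr_pages + 1) * DEMO_PAGE_SIZE;
    l.array_size = l.nr_pages * DEMO_PTR_SIZE;
    l.array_from_vmalloc = l.array_size > DEMO_PAGE_SIZE;
    return l;
}
```

For 8MB, this gives nr_pages = 0x800, an area size of 0x801000 and a 16384-byte pages[] array. That array is larger than one page, so it is itself vmalloc()ed, which is why **pages (0xffffc900019b9000) lies in the vmalloc range. A 64 KB request needs only a 128-byte array, which fits in kmalloc.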

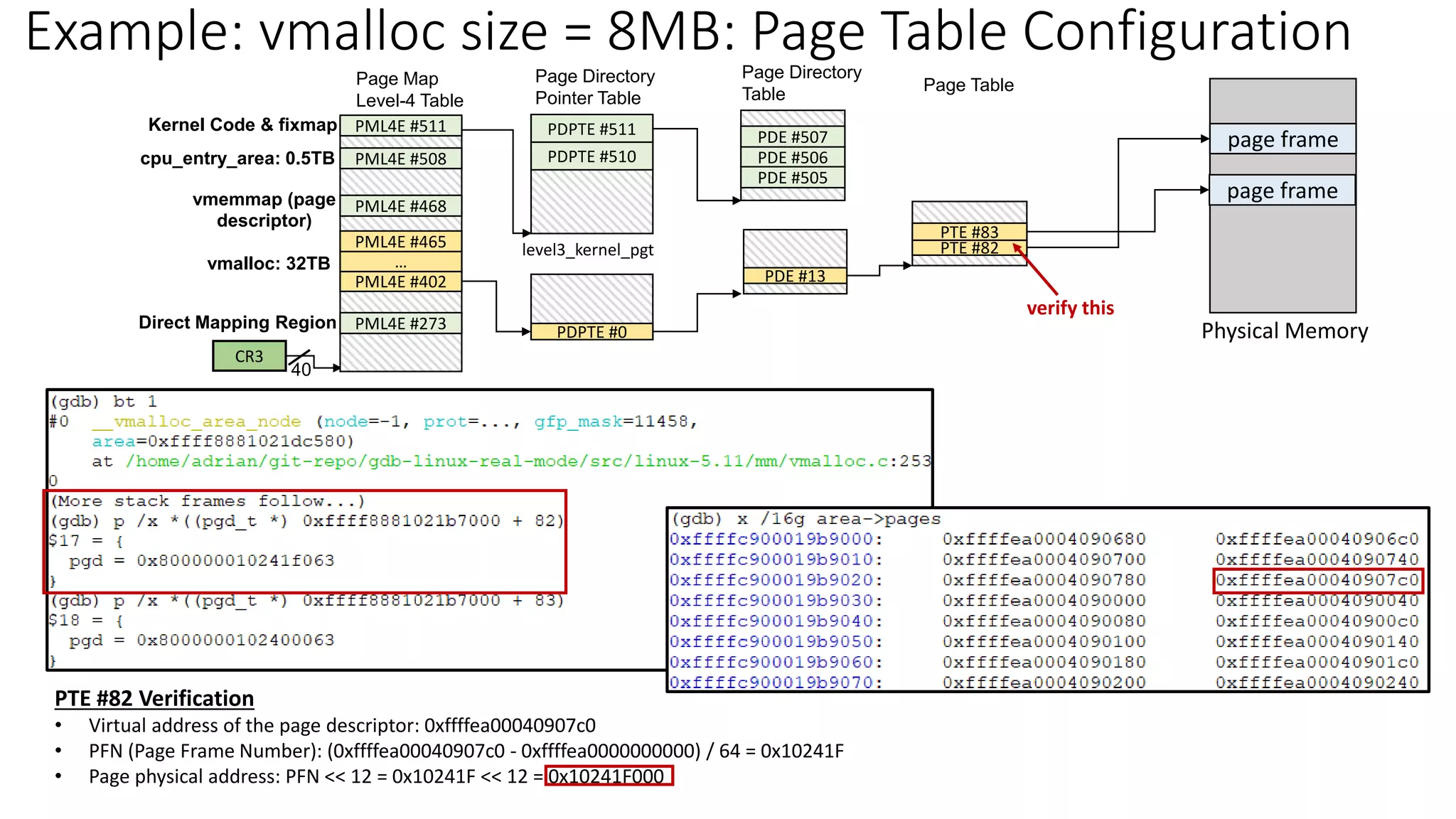

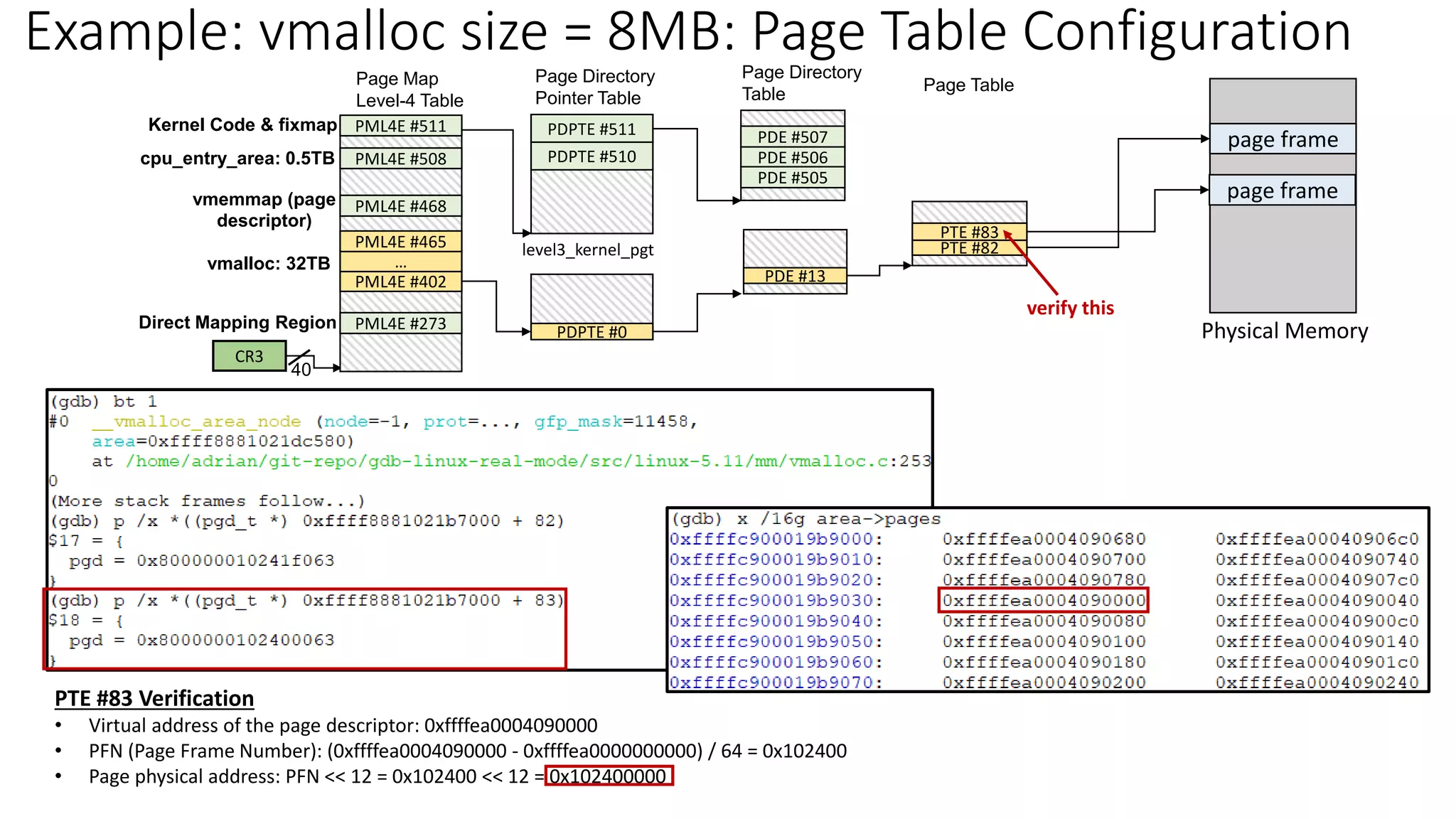

![Example: vmalloc size = 8MB: Page Table Configuration

Linear address bits: 63..48 Sign-extend | 47..39 Page Map Level-4 Offset | 38..30 Page Directory Pointer Offset | 29..21 Page Directory Offset | 20..12 Page Table Offset | 11..0 Physical Page Offset

CR3 -> init_top_pgt = swapper_pg_dir (Page Map Level-4 Table)

• PML4E #273: Direct Mapping Region

• PML4E #402 … PML4E #465: vmalloc: 32TB

• PML4E #468: vmemmap (page descriptor)

• PML4E #508: cpu_entry_area: 0.5TB

• PML4E #511: Kernel Code & fixmap -> level3_kernel_pgt (PDPTE #510; PDPTE #511 -> PDE #505, #506, #507)

PML4E #402 -> Page Directory Pointer Table: PDPTE #0 -> Page Directory Table: PDE #13 -> Page Table: PTE #82 = 0, PTE #83 = 0 (no page frame in Physical Memory yet)

[Linear Address] 0xffff_c900_01a5_2000, 0xffff_c900_01a5_3000](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-41-2048.jpg)
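The table indices on these slides follow directly from the bit fields of the linear address. The helper below is a sketch assuming 4-level paging (48-bit canonical addresses, 9 index bits per level, 4 KB pages); demo_split_va() is a hypothetical name.

```c
#include <assert.h>
#include <stdint.h>

/* Index fields of a 48-bit (4-level) x86_64 linear address. */
struct demo_pt_indices {
    unsigned pml4, pdpt, pd, pt;   /* 9 bits each */
    unsigned offset;               /* 12 bits */
};

struct demo_pt_indices demo_split_va(uint64_t va)
{
    struct demo_pt_indices ix;

    /* bits 63..48 must be copies of bit 47 (canonical address) */
    assert((va >> 47) == 0 || (va >> 47) == 0x1ffff);
    ix.pml4   = (unsigned)((va >> 39) & 0x1ff);   /* bits 47..39 */
    ix.pdpt   = (unsigned)((va >> 30) & 0x1ff);   /* bits 38..30 */
    ix.pd     = (unsigned)((va >> 21) & 0x1ff);   /* bits 29..21 */
    ix.pt     = (unsigned)((va >> 12) & 0x1ff);   /* bits 20..12 */
    ix.offset = (unsigned)(va & 0xfff);           /* bits 11..0  */
    return ix;
}
```

For 0xffff_c900_01a5_2000, this yields PML4E #402, PDPTE #0, PDE #13 and PTE #82; the next page (0xffff_c900_01a5_3000) uses PTE #83. The same split gives PML4E #273 for page_offset_base and PML4E #468 for vmemmap_base.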

![Example: vmalloc size = 8MB: Page Table Configuration

Linear address bits: 63..48 Sign-extend | 47..39 Page Map Level-4 Offset | 38..30 Page Directory Pointer Offset | 29..21 Page Directory Offset | 20..12 Page Table Offset | 11..0 Physical Page Offset

CR3 -> init_top_pgt = swapper_pg_dir (Page Map Level-4 Table)

• PML4E #273: Direct Mapping Region

• PML4E #402 … PML4E #465: vmalloc: 32TB

• PML4E #468: vmemmap (page descriptor)

• PML4E #508: cpu_entry_area: 0.5TB

• PML4E #511: Kernel Code & fixmap -> level3_kernel_pgt (PDPTE #510; PDPTE #511 -> PDE #505, #506, #507)

PML4E #402 -> Page Directory Pointer Table: PDPTE #0 -> Page Directory Table: PDE #13 -> Page Table: PTE #82 -> page frame, PTE #83 -> page frame (Physical Memory)

[Linear Address] 0xffff_c900_01a5_2000, 0xffff_c900_01a5_3000](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-42-2048.jpg)

![Example: vmalloc size = 8MB: Page Table Configuration

Linear address bits: 63..48 Sign-extend | 47..39 Page Map Level-4 Offset | 38..30 Page Directory Pointer Offset | 29..21 Page Directory Offset | 20..12 Page Table Offset | 11..0 Physical Page Offset

CR3 -> init_top_pgt = swapper_pg_dir (Page Map Level-4 Table)

• PML4E #273: Direct Mapping Region

• PML4E #402 … PML4E #465: vmalloc: 32TB

• PML4E #468: vmemmap (page descriptor)

• PML4E #508: cpu_entry_area: 0.5TB

• PML4E #511: Kernel Code & fixmap -> level3_kernel_pgt (PDPTE #510; PDPTE #511 -> PDE #505, #506, #507)

PML4E #402 -> Page Directory Pointer Table: PDPTE #0 -> Page Directory Table: PDE #13 -> Page Table: PTE #82 -> page frame, PTE #83 -> page frame (Physical Memory)

[Linear Address] 0xffff_c900_01a5_2000, 0xffff_c900_01a5_3000

The pages are at physically non-contiguous addresses](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-43-2048.jpg)

![Example: vmalloc size = 8MB: Page Table Configuration

Linear address bits: 63..48 Sign-extend | 47..39 Page Map Level-4 Offset | 38..30 Page Directory Pointer Offset | 29..21 Page Directory Offset | 20..12 Page Table Offset | 11..0 Physical Page Offset

CR3 -> init_top_pgt = swapper_pg_dir (Page Map Level-4 Table)

• PML4E #273: Direct Mapping Region

• PML4E #402 … PML4E #465: vmalloc: 32TB

• PML4E #468: vmemmap (page descriptor)

• PML4E #508: cpu_entry_area: 0.5TB

• PML4E #511: Kernel Code & fixmap -> level3_kernel_pgt (PDPTE #510; PDPTE #511 -> PDE #505, #506, #507)

PML4E #402 -> Page Directory Pointer Table: PDPTE #0 -> Page Directory Table: PDE #13 -> Page Table: PTE #82 -> page frame, PTE #83 -> page frame (Physical Memory)

[Linear Address] 0xffff_c900_01a5_2000, 0xffff_c900_01a5_3000

verify this](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-44-2048.jpg)

![vmalloc users/scenario

• Array size > PAGE_SIZE (4KB)

o arr[0], arr[1] … arr[n] → need contiguous memory for array indexing

o Example: for an 8MB vmalloc() request, the page descriptor pointer array is itself allocated from vmalloc

▪ The page descriptor list (vm_struct->pages) requires contiguous memory for array indexing

vm_struct: next | addr = 0xffffc90001a4d000 | size = 0x801000 (w/ guard page) | flags = 0x22 | **pages = 0xffffc900019b9000 | nr_pages = 0x800 (2048) | phys_addr | caller

pages[] -> Page Descriptor, Page Descriptor, ...

Memory allocation for page descriptor pointers

• Memory size needed: 8MB/4KB * 8 = 16384 bytes

• Allocated from vmalloc (> 4KB)](https://image.slidesharecdn.com/mallocvmallocinlinux-221205070506-74fe014b/75/malloc-vmalloc-in-Linux-47-2048.jpg)