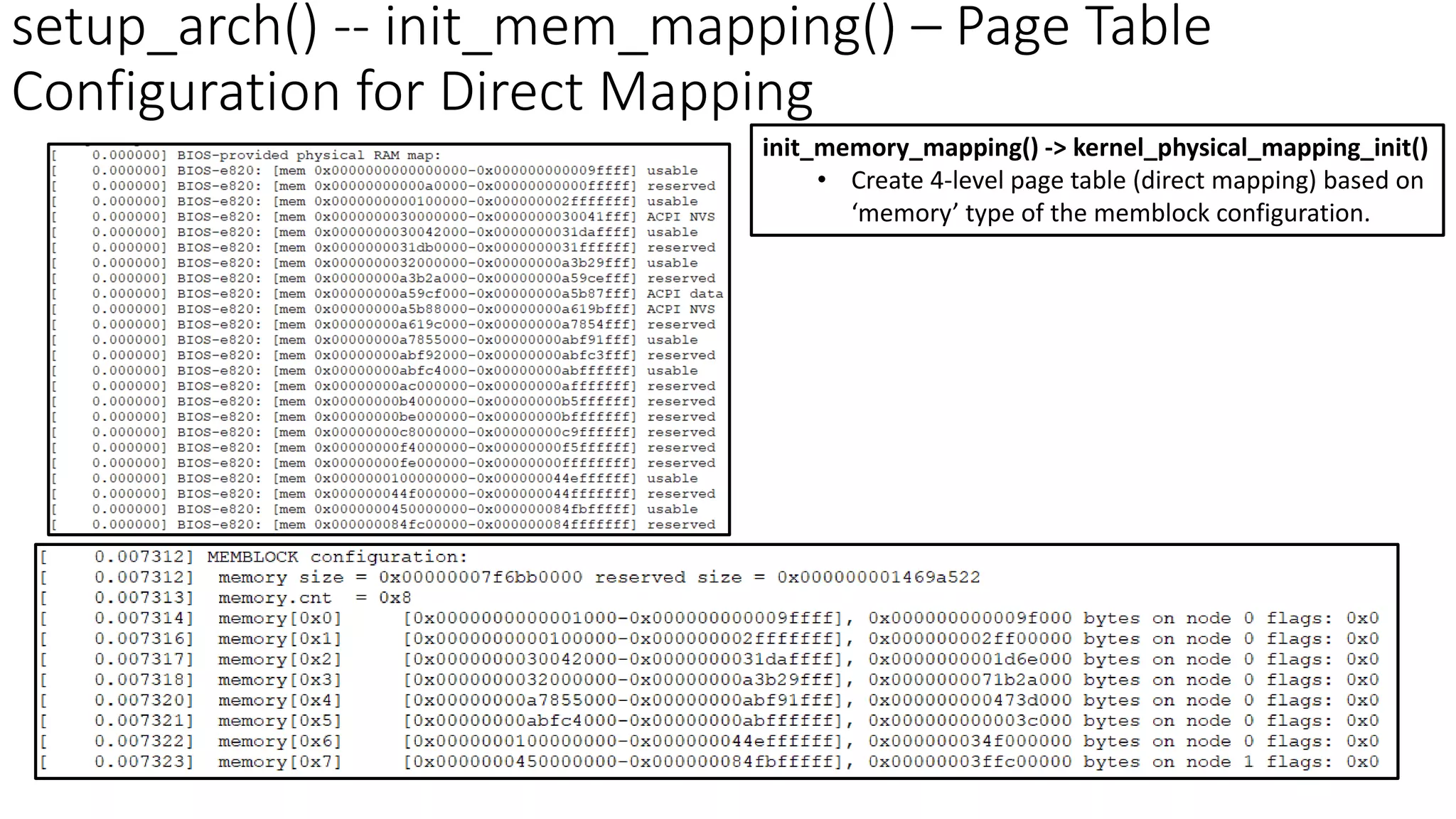

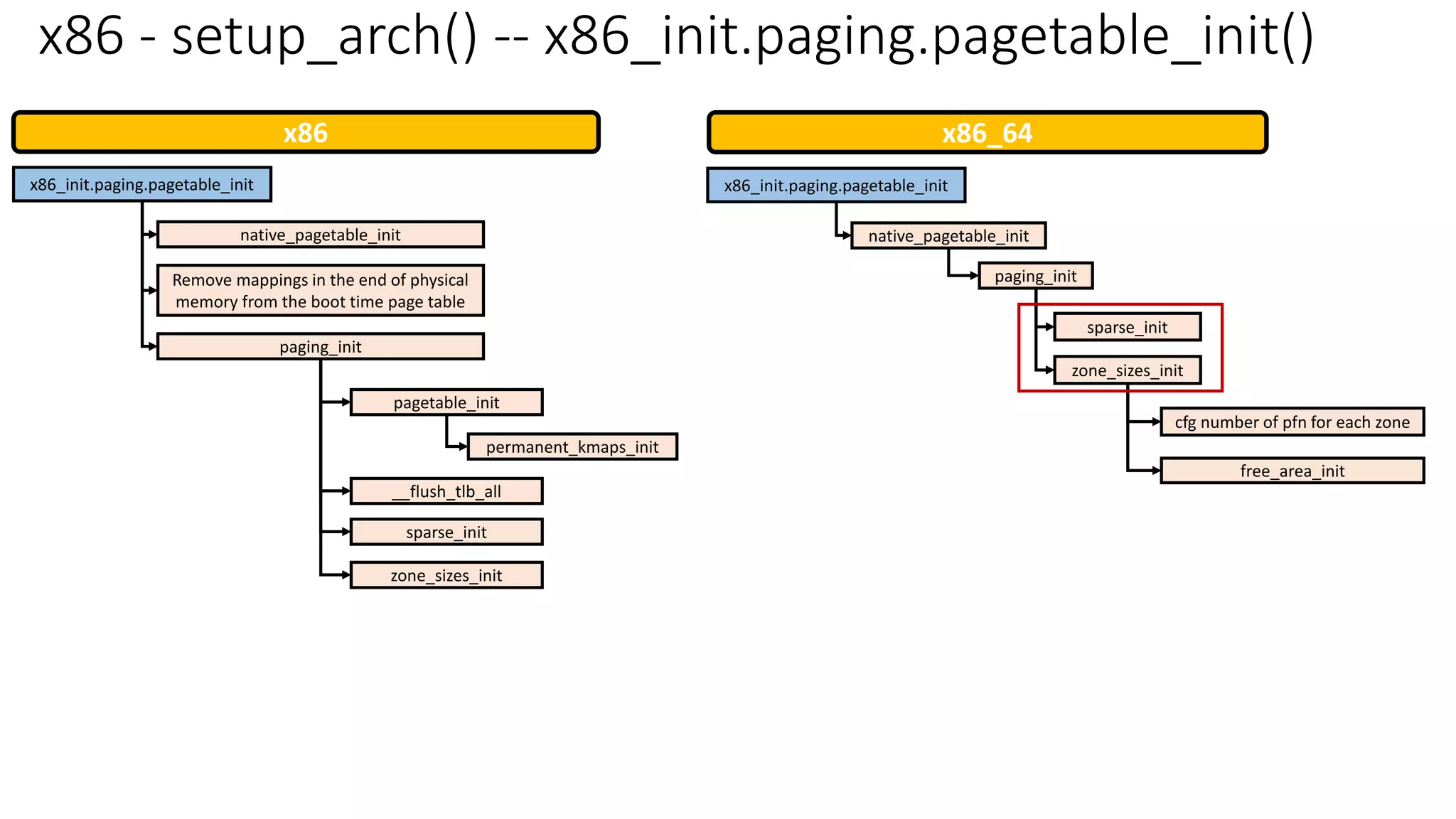

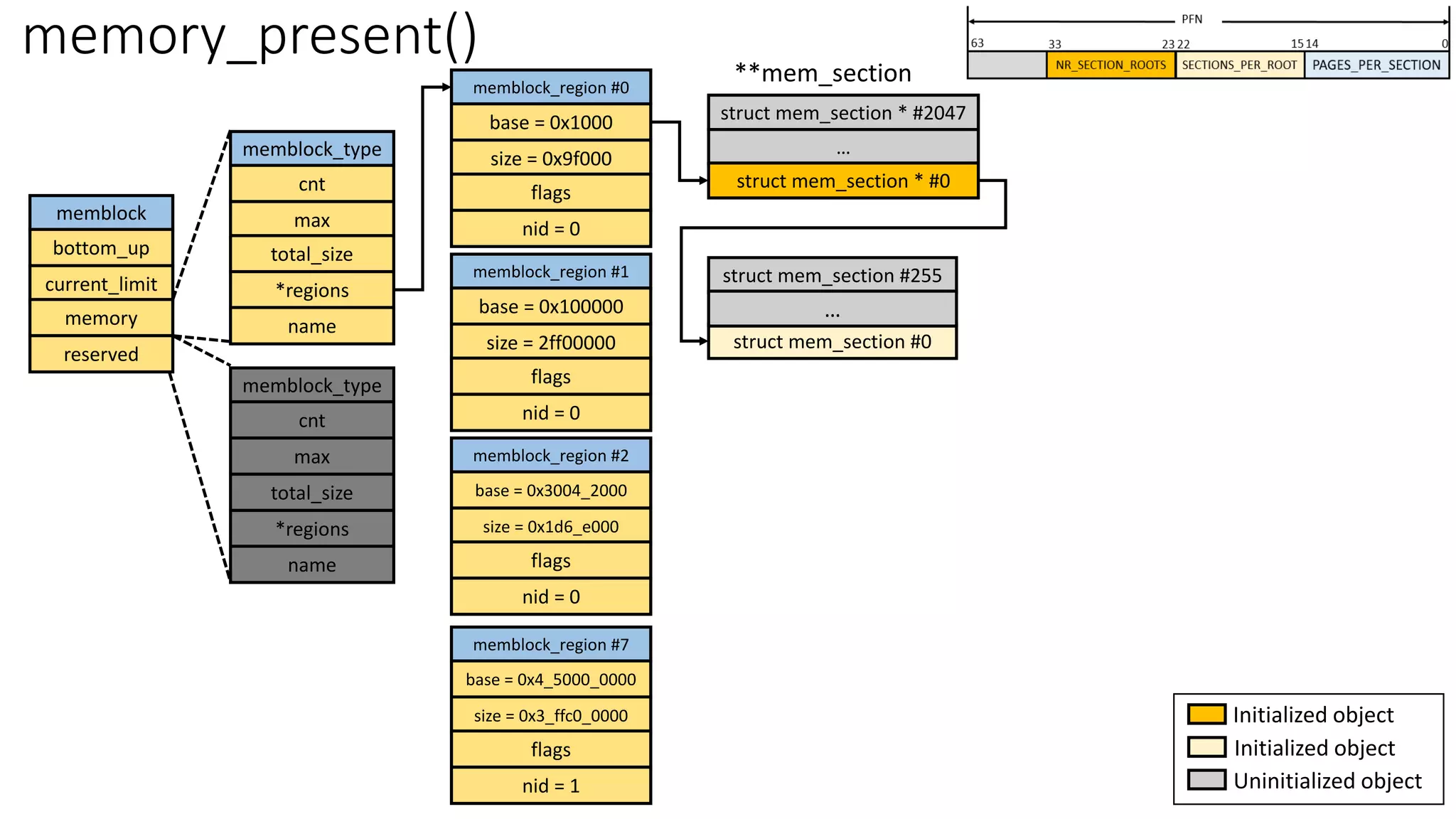

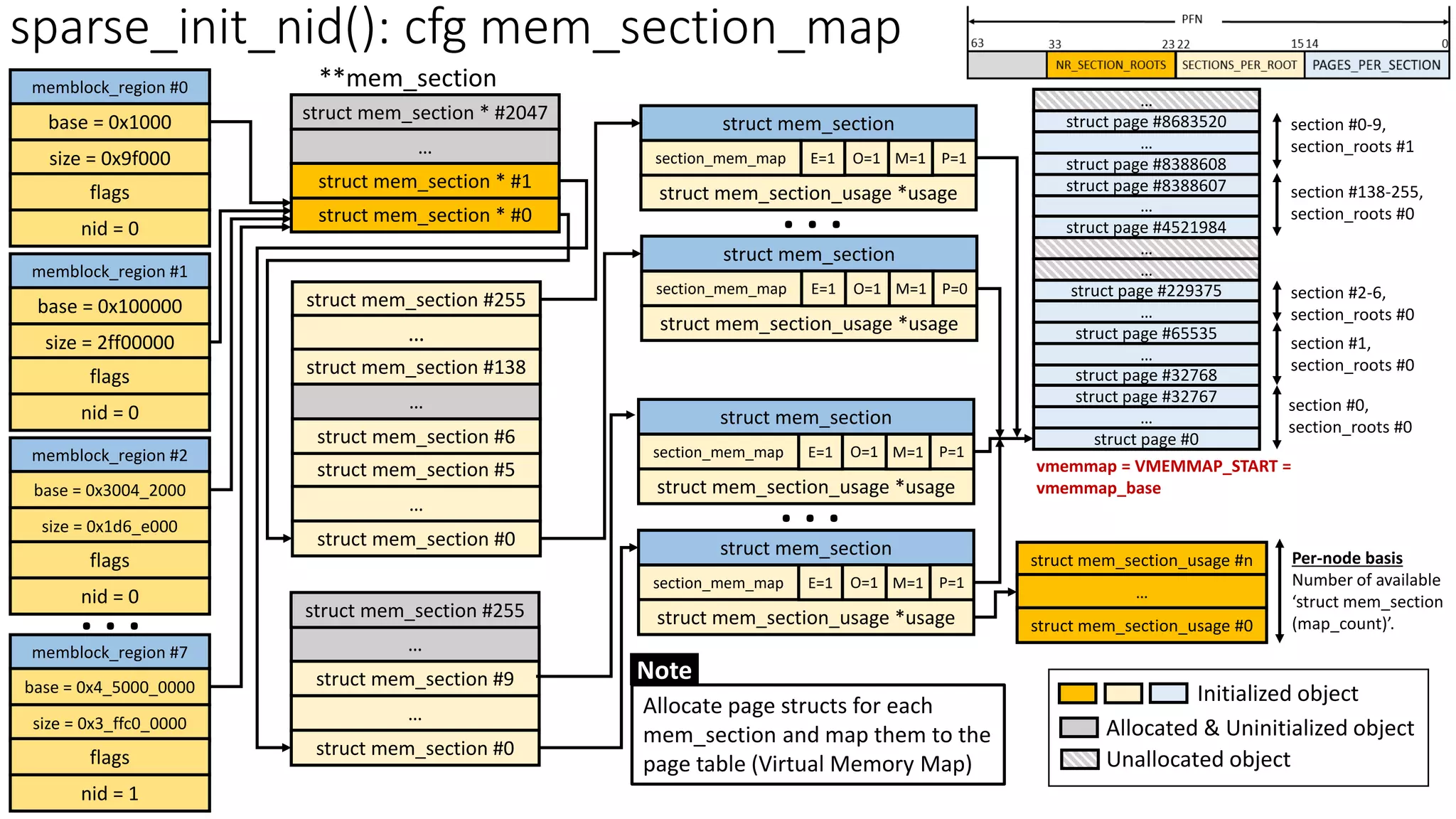

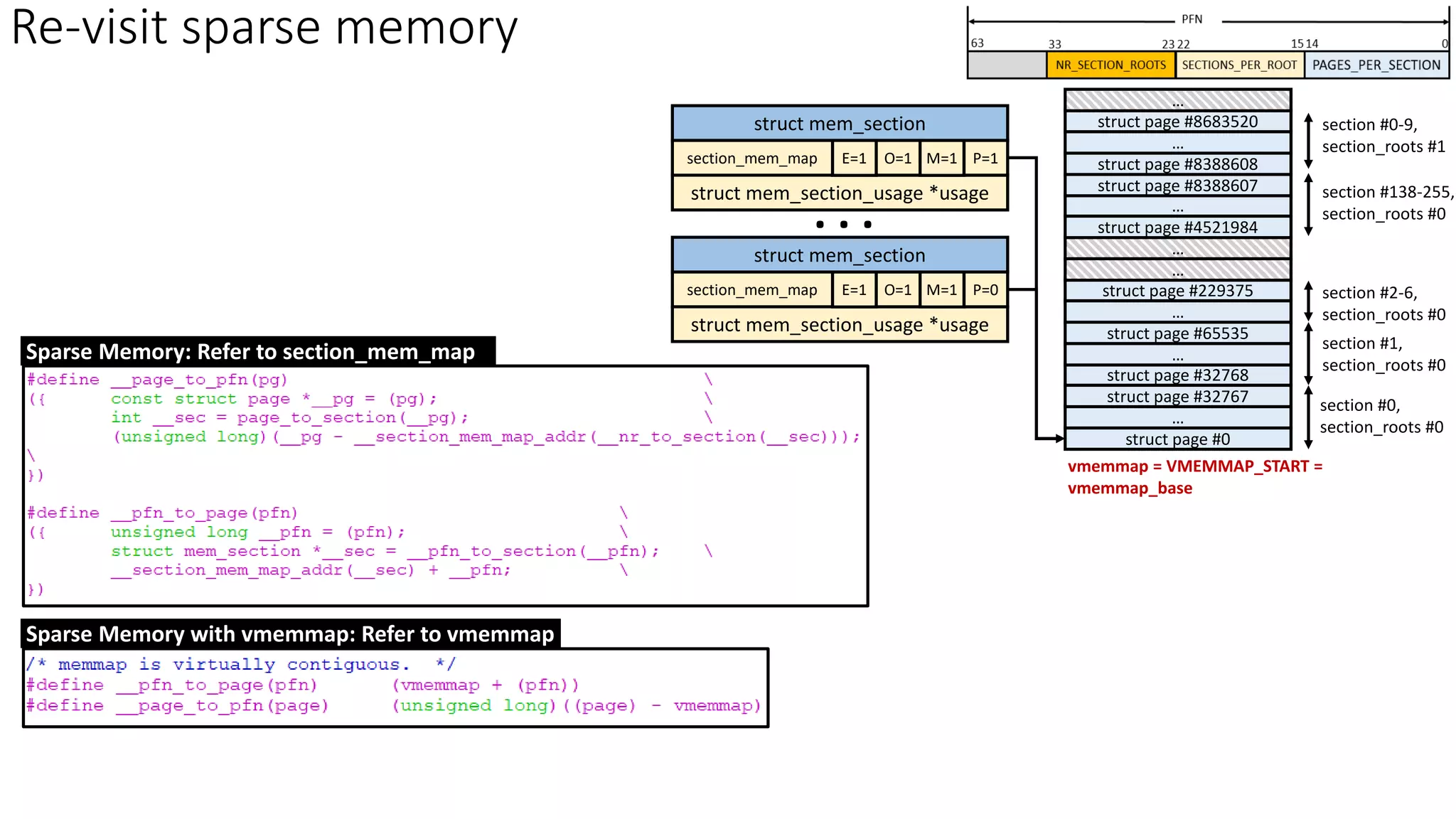

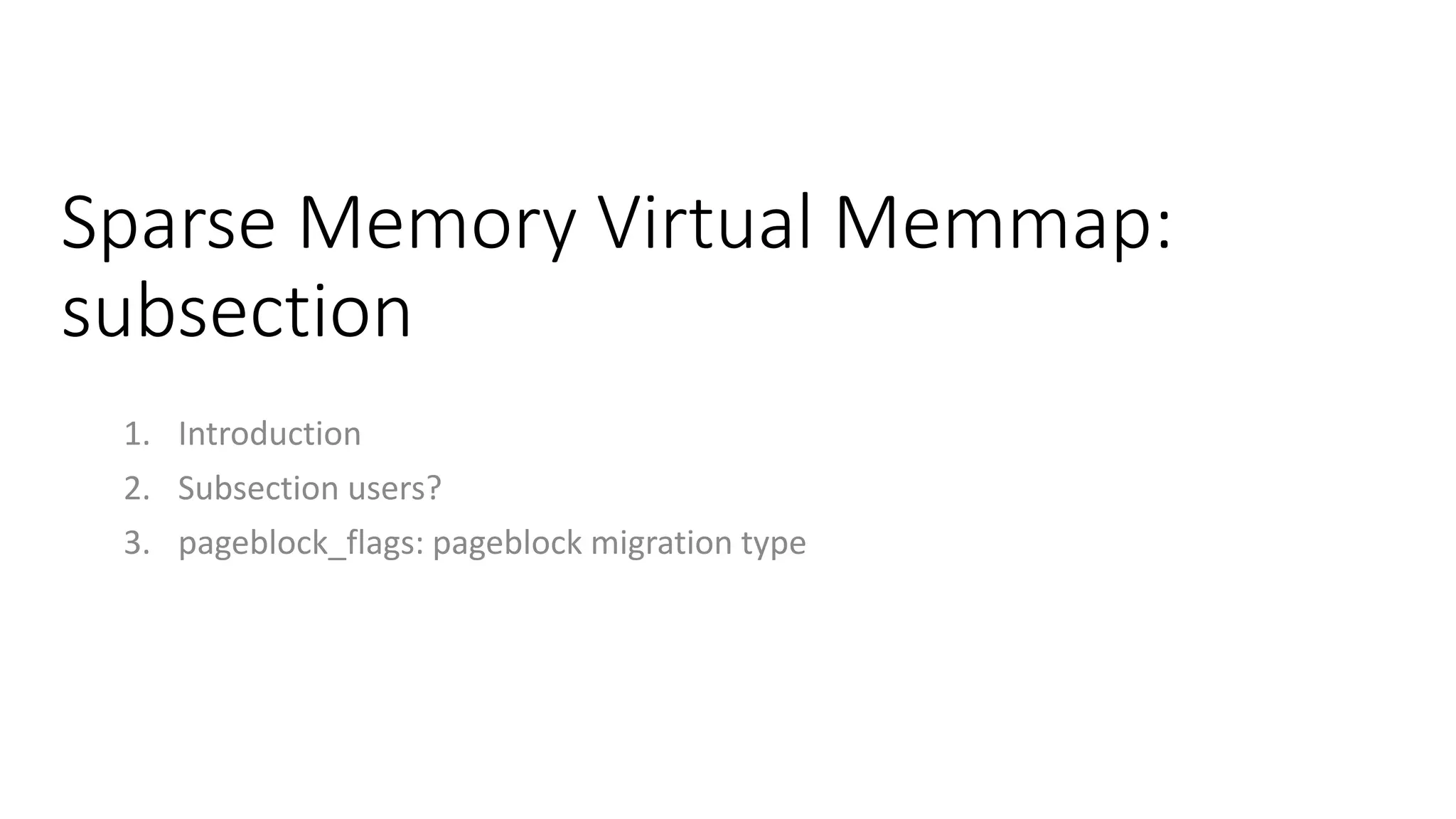

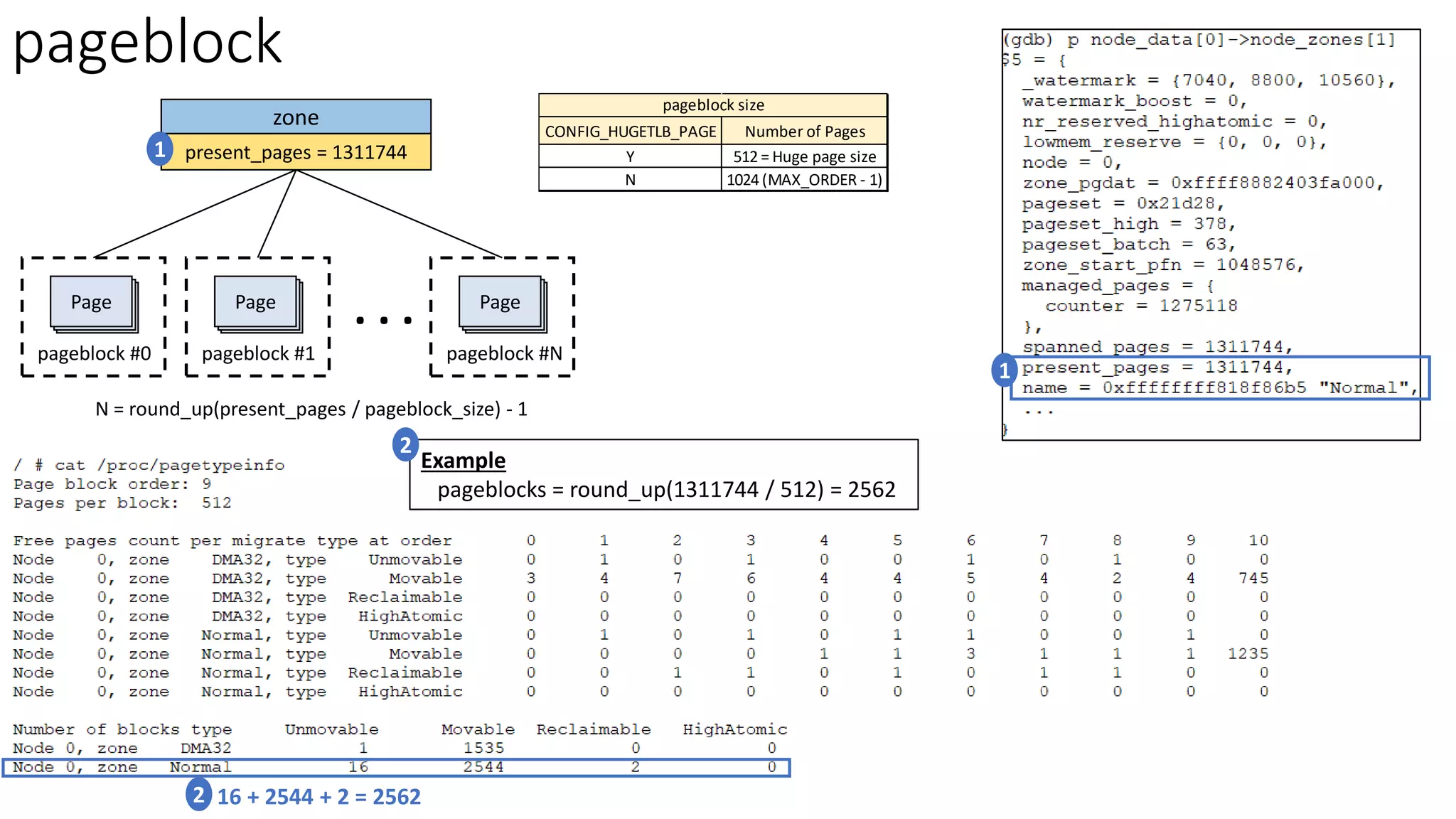

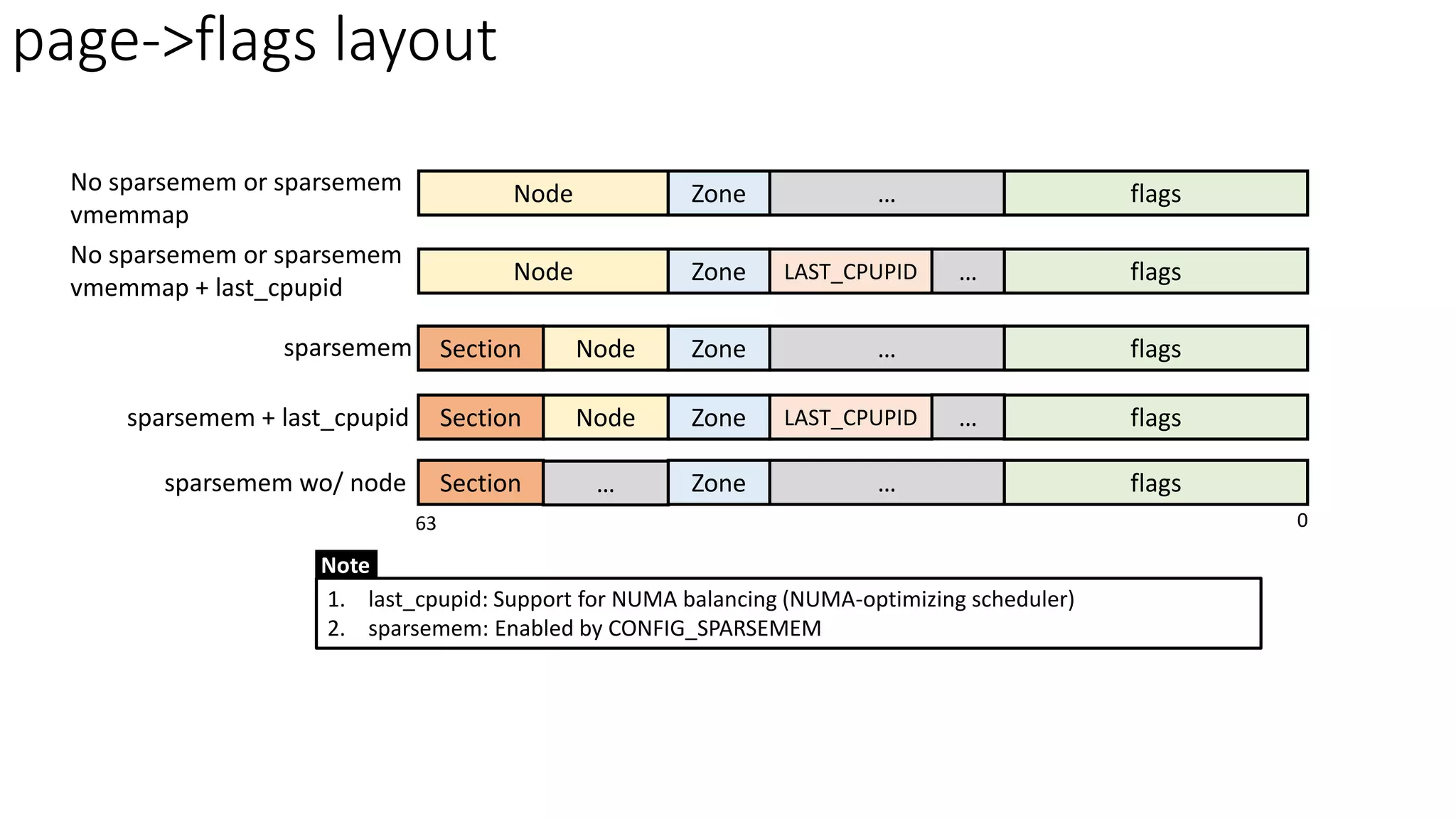

The document discusses four physical memory models in Linux: the flat memory model, the discontinuous memory model, the sparse memory model, and the sparse memory model with a virtual memmap (vmemmap). It describes how each model tracks physical memory (page frames) and maps page frames to page descriptors (struct page). The sparse memory model, with vmemmap as the default configuration, is the one currently used: it divides memory into sections, allocates page structures per section, and supports memory hotplug. It is initialized by walking the memory ranges recorded in memblock and allocating and initializing the mem_section data structures.

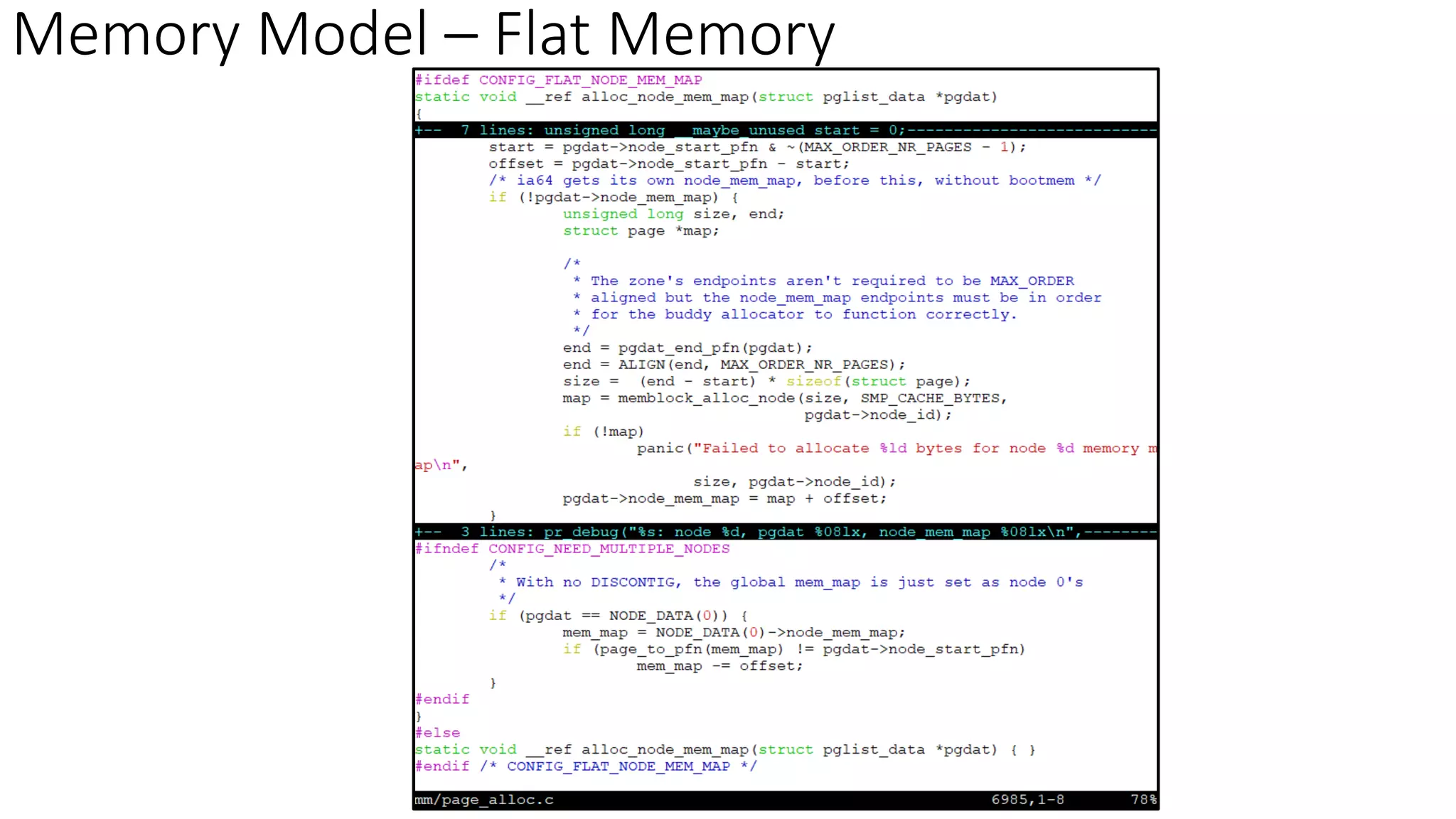

![Memory Model – Flat Memory

struct page #n

....

struct page #1

struct page #0

Dynamic page structure

(Kernel Virtual Address Space)

struct page *mem_map

page frame #n

....

page frame #1

page frame #0

Physical Memory

Note

Page structure array

(Kernel Virtual Address Space)

1. [mem_map] Dynamic page structure: pre-allocate all page structures based on the number of page frames

✓ Allocate and initialize the page structures from the node’s memory info (struct pglist_data)

▪ See: pglist_data.node_start_pfn & pglist_data.node_spanned_pages

2. Scenario: contiguous page frames (no memory holes) in UMA

3. Drawbacks

✓ Wastes node_mem_map space if there are memory holes

✓ Does not support memory hotplug

4. Check kernel function alloc_node_mem_map() in mm/page_alloc.c](https://image.slidesharecdn.com/physicalmemorymodels-220620070231-42aa9f6d/75/Physical-Memory-Models-pdf-4-2048.jpg)
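The flat model's lookup can be illustrated with a minimal userspace sketch. It assumes a toy machine with 8 contiguous page frames; `struct page` here is a one-field stand-in, and `mem_map_storage`, `flat_pfn_to_page`, and `flat_page_to_pfn` are hypothetical names, not kernel symbols.

```c
#include <assert.h>
#include <stddef.h>

/* Stand-in for the kernel's struct page; the real one is much larger. */
struct page { unsigned long flags; };

/* Assumption: a toy machine with 8 contiguous page frames starting at PFN 0. */
#define NR_FRAMES       8UL
#define ARCH_PFN_OFFSET 0UL

/* One pre-allocated descriptor per page frame, as in the flat model. */
static struct page mem_map_storage[NR_FRAMES];
static struct page *mem_map = mem_map_storage;

/* With a single global array, PFN -> struct page is plain pointer arithmetic. */
static struct page *flat_pfn_to_page(unsigned long pfn)
{
    /* Unsigned wrap-around also rejects pfn < ARCH_PFN_OFFSET. */
    assert(pfn - ARCH_PFN_OFFSET < NR_FRAMES);
    return mem_map + (pfn - ARCH_PFN_OFFSET);
}

/* The reverse direction is the index of the descriptor in the array. */
static unsigned long flat_page_to_pfn(const struct page *page)
{
    return (unsigned long)(page - mem_map) + ARCH_PFN_OFFSET;
}
```

Because every PFN in the spanned range needs a slot in the array, a hole in physical memory still consumes descriptors, which is the drawback listed on the slide.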

![Memory Model – Discontinuous Memory

struct pglist_data *

page frame #000

....

page frame #1000

Physical Memory

1. [node_mem_map] Dynamic page structure: pre-allocate all page structures based on the number of page frames

✓ Allocate and initialize the page structures from the node’s memory info (struct pglist_data)

▪ See: pglist_data.node_start_pfn & pglist_data.node_spanned_pages

2. Scenario: each node has contiguous page frames (no memory holes) in NUMA

3. Drawbacks

✓ Wastes node_mem_map space if a node has memory holes

✓ Does not support memory hotplug

NUMA Node Structure

(Kernel Virtual Address Space) struct pglist_data *

struct pglist_data *

…

struct pglist_data *node_data[]

page frame #999

....

page frame #0

struct page #n

....

struct page #0

struct page #n

....

struct page #0

node_mem_map

node_mem_map Node #1

Node #0

Note](https://image.slidesharecdn.com/physicalmemorymodels-220620070231-42aa9f6d/75/Physical-Memory-Models-pdf-6-2048.jpg)
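A rough sketch of the per-node lookup, assuming the two nodes drawn on the slide (page frames #0 to #999 on node #0, starting at #1000 on node #1). The linear search over `node_data[]` and the name `discontig_pfn_to_page` are illustrative assumptions; the kernel resolves the node with architecture-specific helpers.

```c
#include <assert.h>
#include <stddef.h>

struct page { unsigned long flags; };   /* stand-in for the kernel type */

/* Only the fields the slide refers to. */
struct pglist_data {
    struct page *node_mem_map;          /* per-node page structure array */
    unsigned long node_start_pfn;       /* first PFN of the node */
    unsigned long node_spanned_pages;   /* PFNs spanned, holes included */
};

#define MAX_NODES 2

static struct page node0_map[1000], node1_map[1000];
static struct pglist_data node0 = { node0_map, 0, 1000 };
static struct pglist_data node1 = { node1_map, 1000, 1000 };
static struct pglist_data *node_data[MAX_NODES] = { &node0, &node1 };

/* Find the node whose span covers pfn, then index its node_mem_map. */
static struct page *discontig_pfn_to_page(unsigned long pfn)
{
    for (int nid = 0; nid < MAX_NODES; nid++) {
        struct pglist_data *pgdat = node_data[nid];

        assert(pgdat != NULL);
        if (pfn - pgdat->node_start_pfn < pgdat->node_spanned_pages)
            return pgdat->node_mem_map + (pfn - pgdat->node_start_pfn);
    }
    return NULL;    /* no node covers this PFN */
}
```

Each node still sizes its array by `node_spanned_pages`, so a hole inside one node wastes descriptors just as in the flat model.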

![Memory Model – Sparse Memory

struct mem_section

page frame

....

page frame

Physical Memory

**mem_section

struct mem_section

struct mem_section

…

struct mem_section *

page frame

....

page frame

....

struct page #0

struct page #n

....

struct page #0

Node #1

(hotplug)

Node #0

…

struct mem_section *

1. [section_mem_map] Dynamic page structure: pre-allocate page structures based on the number of available page frames

✓ See: memblock structure

2. Supports physical memory hotplug

3. Minimum unit: PAGES_PER_SECTION = 32768

✓ Each memory section covers 32768 * 4KB (page size) = 128MB of memory

4. [NUMA]: “struct mem_section” reduces the impact of memory holes

Note

struct page #m+n-1](https://image.slidesharecdn.com/physicalmemorymodels-220620070231-42aa9f6d/75/Physical-Memory-Models-pdf-7-2048.jpg)
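The section arithmetic on this slide can be checked with a short sketch. It assumes 4KB pages (PAGE_SHIFT = 12) and SECTION_SIZE_BITS = 27, the x86_64 values used elsewhere in the deck; the helper names are chosen for illustration.

```c
#include <assert.h>

#define PAGE_SHIFT        12    /* 4KB pages */
#define SECTION_SIZE_BITS 27    /* 128MB sections */
#define PFN_SECTION_SHIFT (SECTION_SIZE_BITS - PAGE_SHIFT)
#define PAGES_PER_SECTION (1UL << PFN_SECTION_SHIFT)

/* Compile-time check of the figure quoted on the slide. */
_Static_assert(PAGES_PER_SECTION == 32768, "PAGES_PER_SECTION should be 32768");

/* Section number that contains a given page frame. */
static unsigned long pfn_to_section_nr(unsigned long pfn)
{
    return pfn >> PFN_SECTION_SHIFT;
}

/* First page frame of a given section. */
static unsigned long section_nr_to_pfn(unsigned long nr)
{
    return nr << PFN_SECTION_SHIFT;
}

/* Bytes of physical memory covered by one section. */
static unsigned long section_size_bytes(void)
{
    return PAGES_PER_SECTION << PAGE_SHIFT;
}
```

Only sections that contain memory need page structures, which is how the model limits the cost of holes and makes room for hot-added sections.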

![struct mem_section

page frame

....

page frame

Physical Memory

struct mem_section

struct mem_section

…

struct mem_section *

page frame

....

page frame

struct page #m+n-1

....

struct page #m

struct page #n

....

struct page #0

Node #1

Node #0

…

struct mem_section *

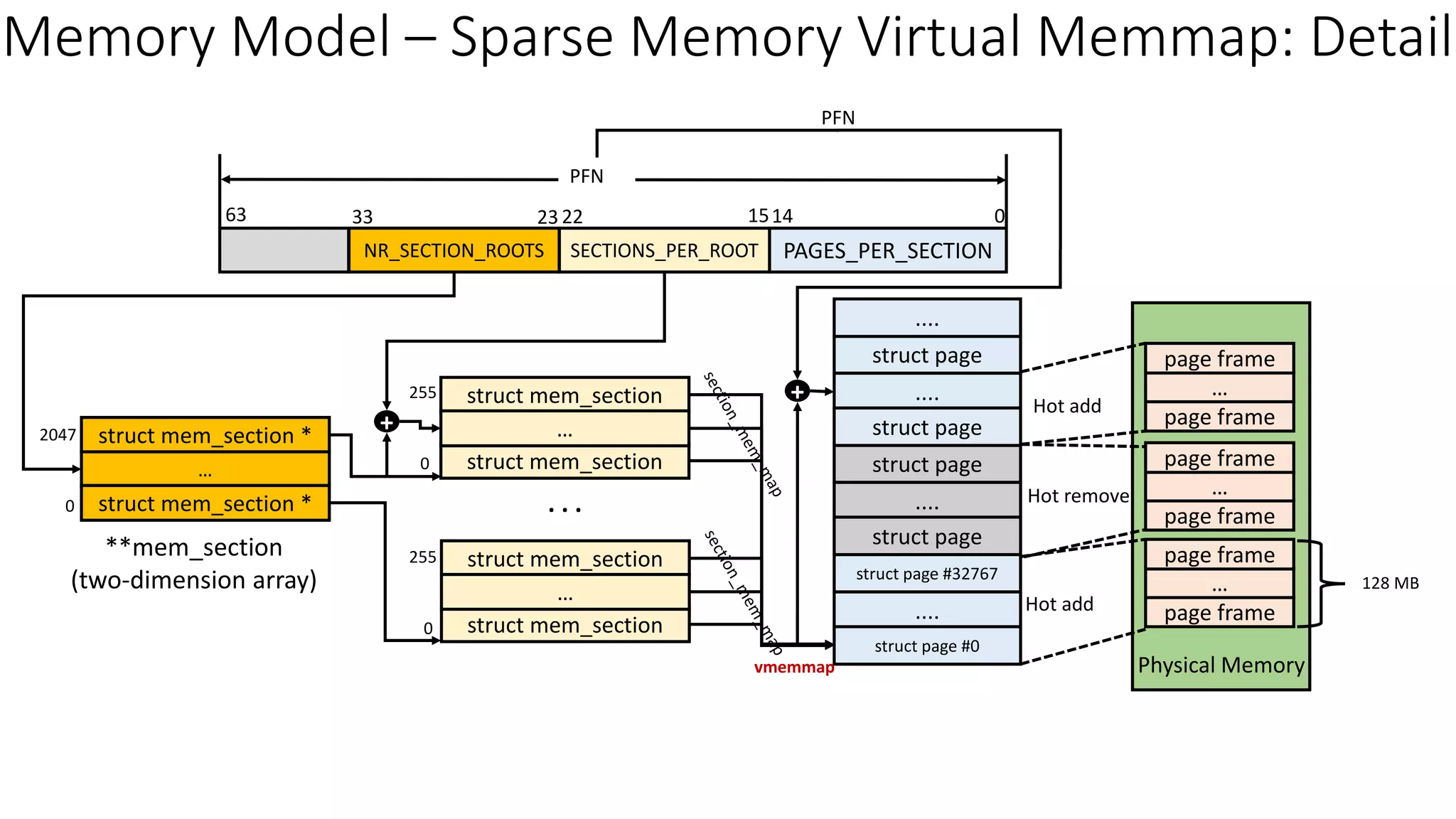

Memory Model – Sparse Memory Virtual Memmap

vmemmap

Memory Section

(two-dimension array)

Note

1. [section_mem_map] Dynamic page structure: pre-allocate page structures based on the number of available page frames

✓ See: memblock structure

2. Supports physical memory hotplug

3. Minimum unit: PAGES_PER_SECTION = 32768

✓ Each memory section covers 32768 * 4KB (page size) = 128MB of memory

4. [NUMA]: “struct mem_section” reduces the impact of memory holes

5. Employs a virtual memory map (vmemmap / vmemmap_base): a quick way to convert between a page struct and its pfn

6. Default configuration in Linux kernel](https://image.slidesharecdn.com/physicalmemorymodels-220620070231-42aa9f6d/75/Physical-Memory-Models-pdf-8-2048.jpg)
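Why vmemmap makes the conversion quick can be sketched with integer address arithmetic. The sketch assumes the default vmemmap_base of 0xFFFF_EA00_0000_0000 quoted in the deck's address-space slide and a 64-byte `struct page` (a common size, but an assumption here); it manipulates addresses as numbers and never dereferences them.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: sizeof(struct page) is 64 bytes. */
struct toy_page { unsigned char pad[64]; };

/* Default vmemmap_base from the deck (KASLR may move it). */
#define VMEMMAP_BASE 0xFFFFEA0000000000ULL

/* Virtual address of the descriptor for pfn: one multiply and one add. */
static uint64_t vmemmap_pfn_to_page_addr(uint64_t pfn)
{
    return VMEMMAP_BASE + pfn * sizeof(struct toy_page);
}

/* Reverse direction: one subtract and one divide. */
static uint64_t vmemmap_page_addr_to_pfn(uint64_t addr)
{
    assert(addr >= VMEMMAP_BASE);
    return (addr - VMEMMAP_BASE) / sizeof(struct toy_page);
}
```

Compared with plain sparsemem, no mem_section lookup is needed on this path; the page structures appear as one virtually contiguous array even when physical memory has holes.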

![How to know available memory pages in a system?

BIOS e820 -> memblock -> Zone Page Frame Allocator

✓ e820 -> memblock: e820__memblock_setup()

✓ memblock -> Zone Page Frame Allocator: __free_pages_core()

[Call Path] memblock frees available memory space to zone page frame allocator

Zone page allocator detail will be discussed in another session:

physical memory management](https://image.slidesharecdn.com/physicalmemorymodels-220620070231-42aa9f6d/75/Physical-Memory-Models-pdf-12-2048.jpg)

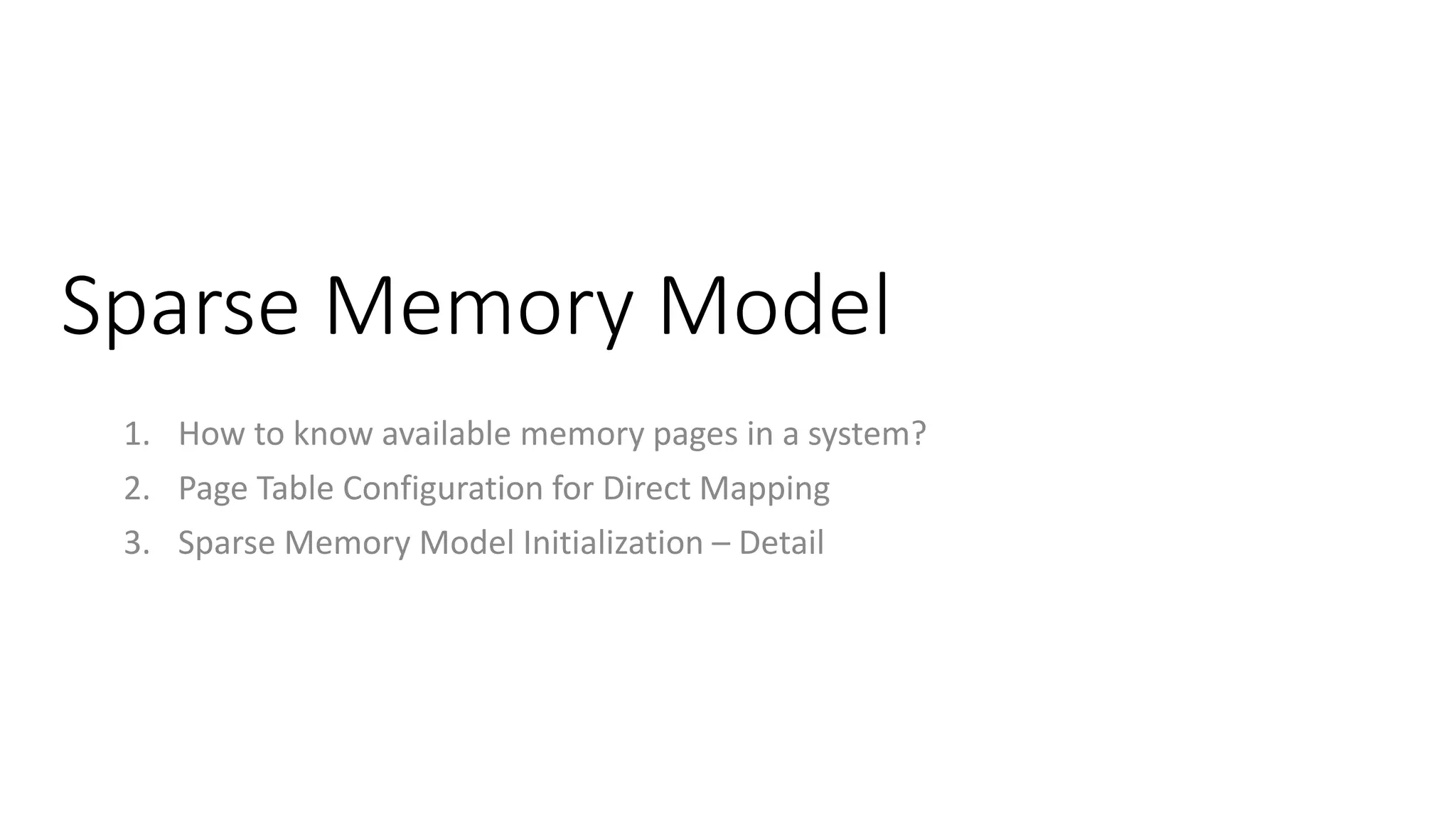

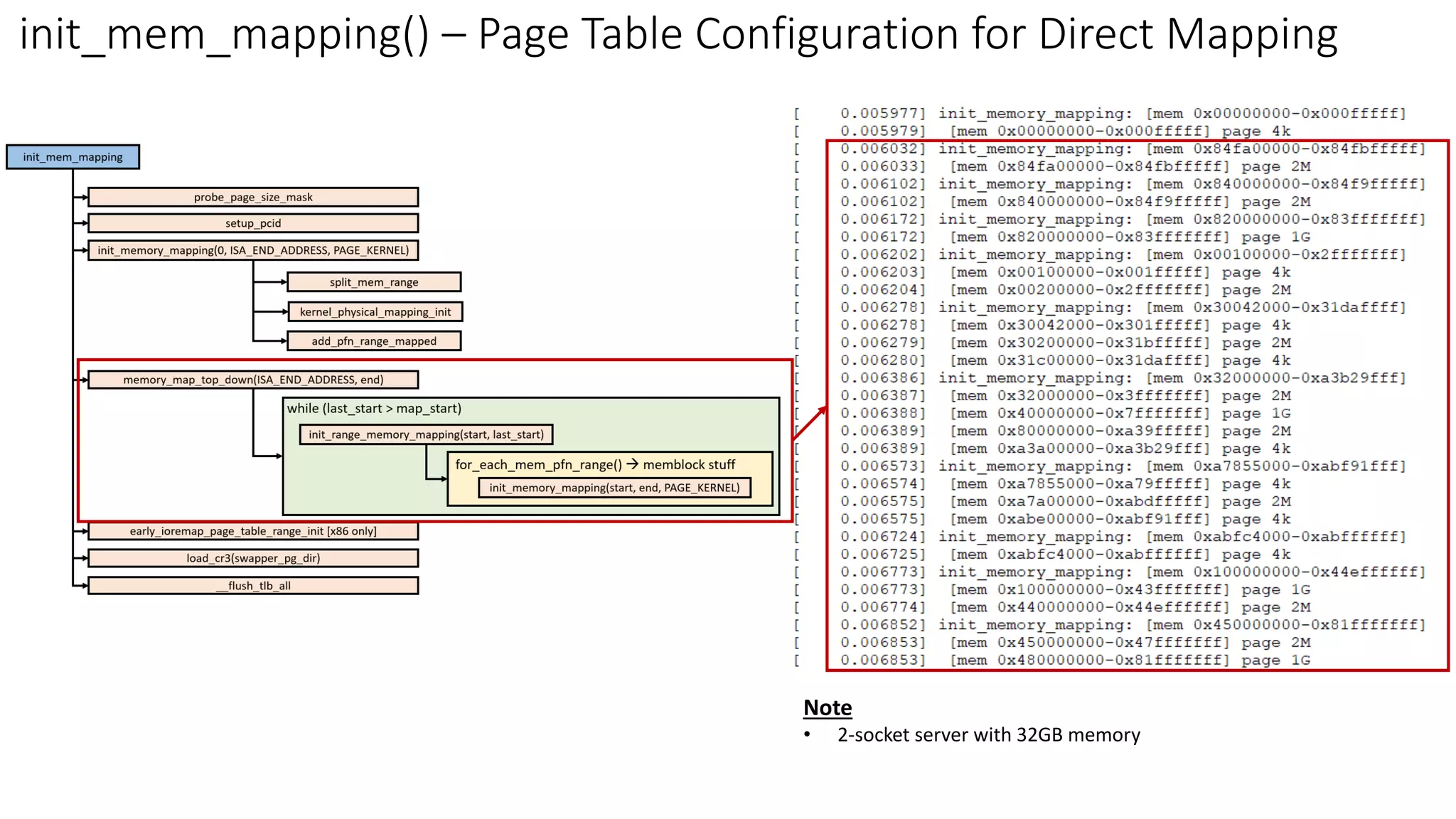

![x86 - setup_arch() -- init_mem_mapping() – Page Table

Configuration for Direct Mapping

init_mem_mapping

probe_page_size_mask

setup_pcid

memory_map_top_down(ISA_END_ADDRESS, end)

init_memory_mapping(0, ISA_END_ADDRESS, PAGE_KERNEL)

init_range_memory_mapping(start, last_start)

split_mem_range

kernel_physical_mapping_init

add_pfn_range_mapped

early_ioremap_page_table_range_init [x86 only]

load_cr3(swapper_pg_dir)

__flush_tlb_all

init_memory_mapping() -> kernel_physical_mapping_init()

• Create the 4-level page table (direct mapping) based on the ‘memory’ type regions of the memblock configuration.

split_mem_range()

• Split the input memory range (start address and end address) into groups by page size

✓ Try larger page sizes first

▪ 1G huge page -> 2M huge page -> 4K page

while (last_start > map_start)

init_memory_mapping(start, end, PAGE_KERNEL)

for_each_mem_pfn_range() → memblock stuff](https://image.slidesharecdn.com/physicalmemorymodels-220620070231-42aa9f6d/75/Physical-Memory-Models-pdf-14-2048.jpg)
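The "larger page size first" idea can be sketched as a greedy split. This is not the kernel's split_mem_range(), which works in explicit alignment passes; it is a simplified stand-in that produces the same kind of grouping. `struct map_range` and `split_range` are names invented for this sketch.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SZ_4K (1ULL << 12)
#define SZ_2M (1ULL << 21)
#define SZ_1G (1ULL << 30)

struct map_range { uint64_t start, end, page_size; };

/*
 * Split [start, end) into runs that share a page size. At each position,
 * pick the largest size that is aligned there and still fits before end.
 * Returns the number of runs written to out[].
 */
static size_t split_range(uint64_t start, uint64_t end,
                          struct map_range *out, size_t max)
{
    static const uint64_t sizes[] = { SZ_1G, SZ_2M, SZ_4K };
    size_t n = 0;

    assert(start % SZ_4K == 0 && end % SZ_4K == 0 && start <= end);
    while (start < end) {
        uint64_t sz = SZ_4K;

        for (size_t i = 0; i < 3; i++) {
            if (start % sizes[i] == 0 && end - start >= sizes[i]) {
                sz = sizes[i];
                break;
            }
        }
        if (n > 0 && out[n - 1].page_size == sz && out[n - 1].end == start) {
            out[n - 1].end += sz;           /* extend the current run */
        } else {
            assert(n < max);
            out[n++] = (struct map_range){ start, start + sz, sz };
        }
        start += sz;
    }
    return n;
}
```

For a range that starts 4KB below a 2MB boundary and ends 4KB past 2GB + 2MB, this yields five runs: a 4K head, a 2M run up to 1GB, one 1G page, a 2M run, and a 4K tail.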

![Page Table Configuration for Direct Mapping

Kernel Space

0x0000_7FFF_FFFF_FFFF

0xFFFF_8000_0000_0000

128TB

Page frame direct

mapping (64TB)

ZONE_DMA

ZONE_DMA32

ZONE_NORMAL

page_offset_base

0

16MB

64-bit Virtual Address

Kernel Virtual Address

Physical Memory

0

0xFFFF_FFFF_FFFF_FFFF

Guard hole (8TB)

LDT remap for PTI (0.5TB)

Unused hole (0.5TB)

vmalloc/ioremap (32TB)

vmalloc_base

Unused hole (1TB)

Virtual memory map – 1TB

(store page frame descriptor)

…

vmemmap_base

64TB

*page

…

*page

…

*page

…

Page Frame

Descriptor

vmemmap_base

page_offset_base = 0xFFFF_8880_0000_0000

vmalloc_base = 0xFFFF_C900_0000_0000

vmemmap_base = 0xFFFF_EA00_0000_0000

* Can be dynamically configured by KASLR (Kernel Address Space Layout Randomization - "arch/x86/mm/kaslr.c")

Default Configuration

Kernel text mapping from

physical address 0

Kernel code [.text, .data…]

Modules

__START_KERNEL_map = 0xFFFF_FFFF_8000_0000

__START_KERNEL = 0xFFFF_FFFF_8100_0000

MODULES_VADDR

0xFFFF_8000_0000_0000

Empty Space

User Space

128TB

1GB or 512MB

1GB or 1.5GB Fix-mapped address space

(Expanded to 4MB: 05ab1d8a4b36) FIXADDR_START

Unused hole (2MB) 0xFFFF_FFFF_FFE0_0000

0xFFFF_FFFF_FFFF_FFFF

FIXADDR_TOP = 0xFFFF_FFFF_FF7F_F000

Reference: Documentation/x86/x86_64/mm.rst

Note: See page #5 of the slide deck “Decompressed vmlinux: linux kernel initialization from page table configuration perspective”](https://image.slidesharecdn.com/physicalmemorymodels-220620070231-42aa9f6d/75/Physical-Memory-Models-pdf-15-2048.jpg)
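The direct mapping in this layout is a fixed offset between physical and kernel virtual addresses. The sketch below assumes the default (non-KASLR) page_offset_base and vmalloc_base from the slide and the 64TB direct-mapping size; the function names are illustrative, not the kernel's __va()/__pa() macros.

```c
#include <assert.h>
#include <stdint.h>

/* Default values from the slide; KASLR may randomize them. */
#define PAGE_OFFSET_BASE 0xFFFF888000000000ULL
#define VMALLOC_BASE     0xFFFFC90000000000ULL
#define DIRECT_MAP_SIZE  (64ULL << 40)          /* 64TB */

/* Physical address -> kernel virtual address in the direct mapping. */
static uint64_t phys_to_virt_direct(uint64_t pa)
{
    assert(pa < DIRECT_MAP_SIZE);
    return PAGE_OFFSET_BASE + pa;
}

/* Kernel virtual address in the direct mapping -> physical address. */
static uint64_t virt_to_phys_direct(uint64_t va)
{
    assert(va >= PAGE_OFFSET_BASE && va - PAGE_OFFSET_BASE < DIRECT_MAP_SIZE);
    return va - PAGE_OFFSET_BASE;
}
```

With these defaults the direct mapping ends at 0xFFFF_C880_0000_0000, which leaves the 0.5TB unused hole shown on the slide before vmalloc_base.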

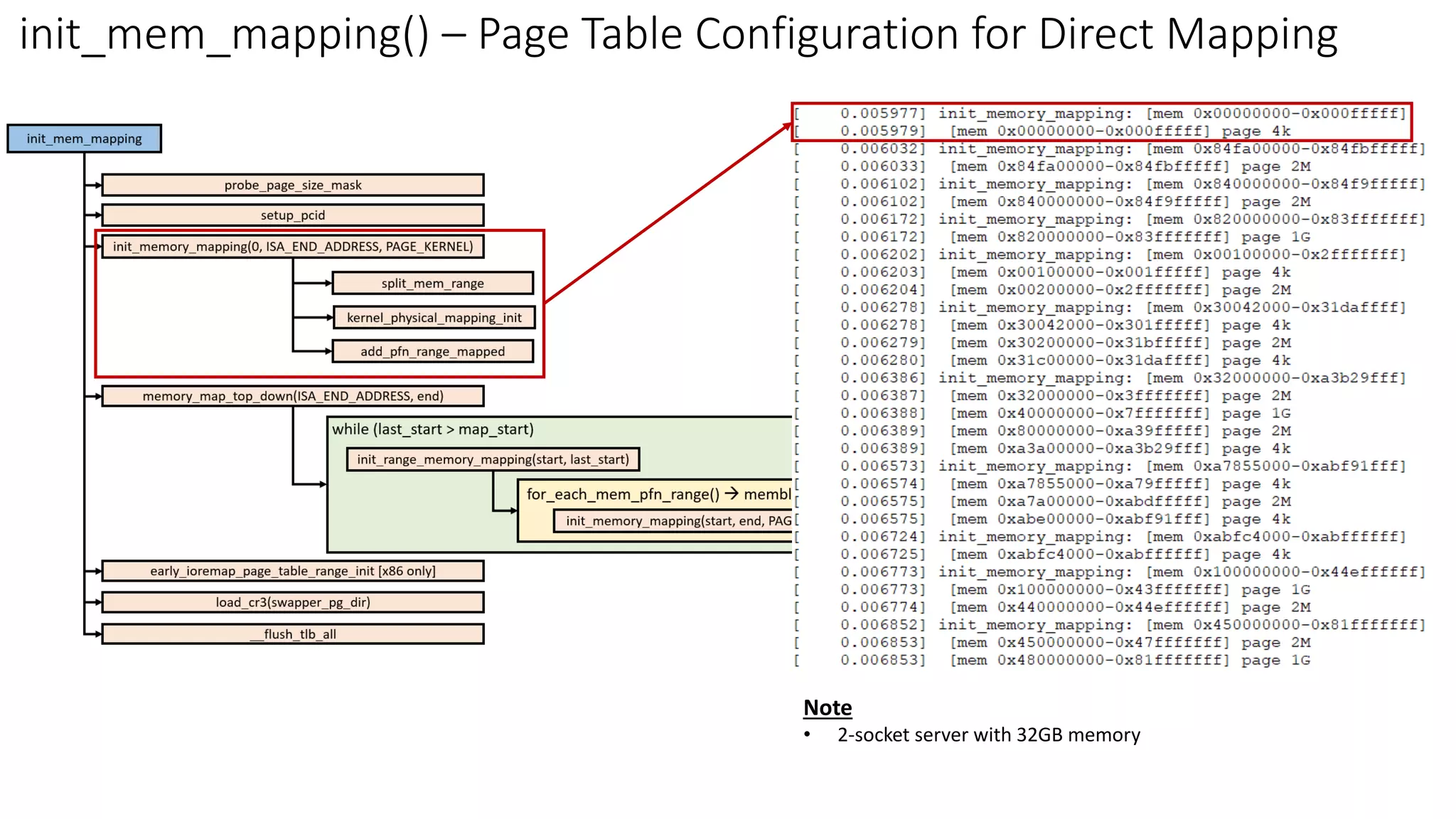

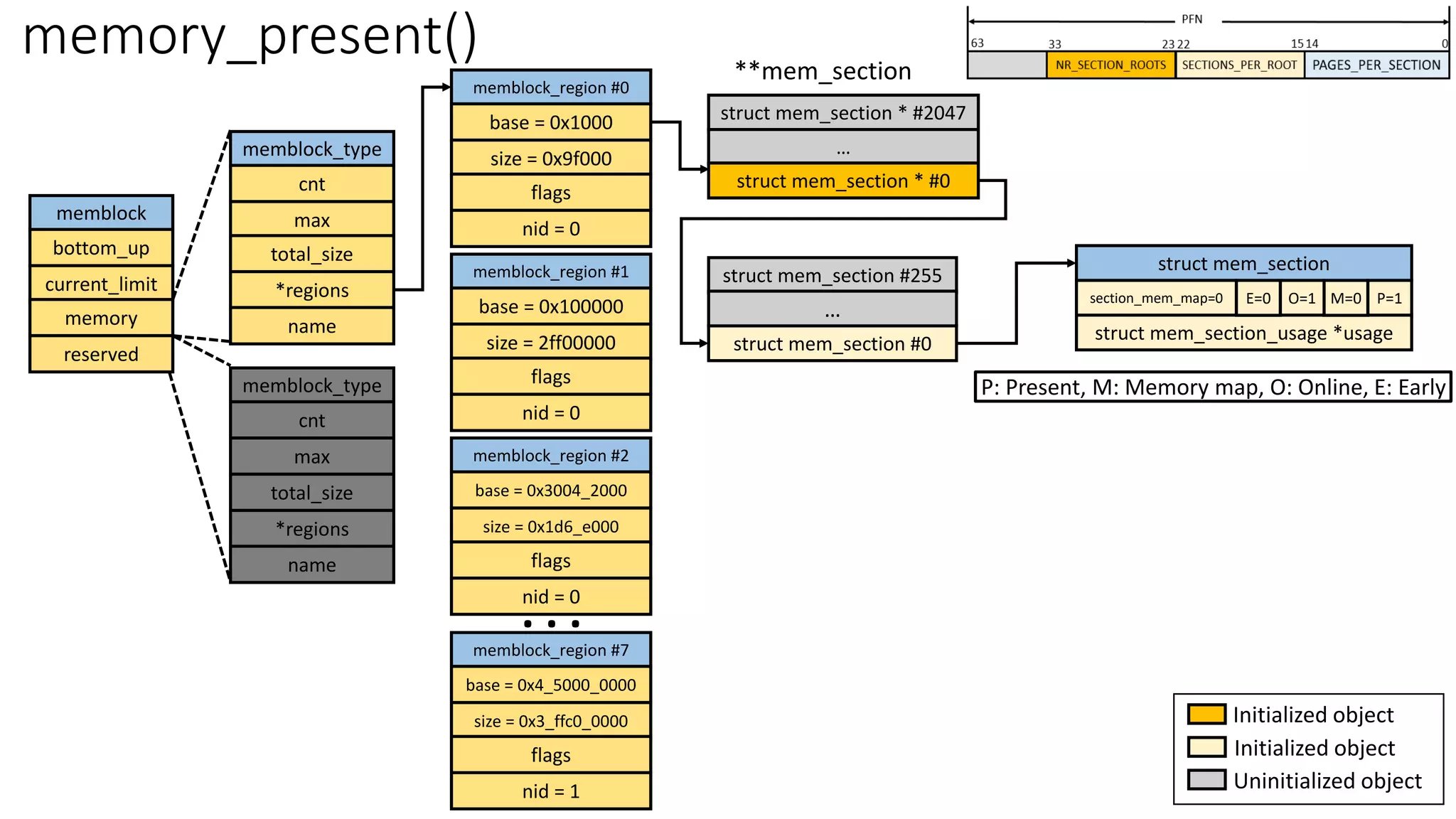

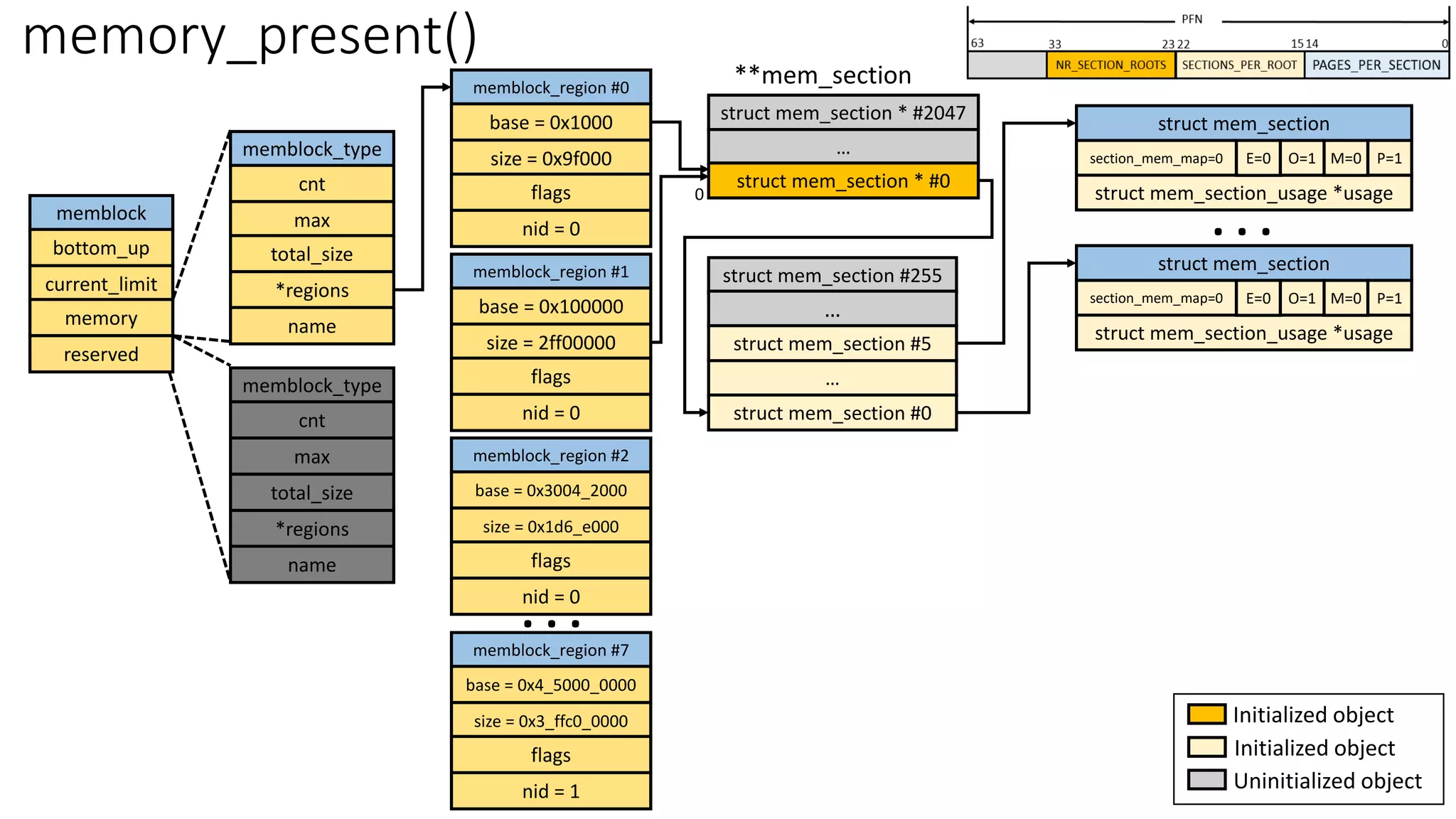

![Sparse Memory Model Initialization: sparse_init()

sparse_init -> memblocks_present -> for_each_mem_pfn_range(..) -> memory_present(nid, start, end)

• for_each_mem_pfn_range(): Walk through available memory range from memblock subsystem

• memory_present(): Allocate pointer array of section root if necessary, then for (pfn = start; pfn < end; pfn += PAGES_PER_SECTION)

✓ sparse_index_init

✓ set_section_nid

✓ section_mark_present: Mark the present bit for each allocated mem_section (cfg ms->section_mem_map flag bits)

✓ cfg ‘ms->section_mem_map’ via sparse_encode_early_nid()

➢ [During boot] Temporary: Store nid in ms->section_mem_map

sparse_init (after memblocks_present)

• pnum_begin = first_present_section_nr();

• nid_begin = sparse_early_nid(__nr_to_section(pnum_begin));

➢ [During boot] Temporary: get nid in ms->section_mem_map

• for_each_present_section_nr(pnum_begin + 1, pnum_end) -> sparse_init_nid

• sparse_init_nid [Cover last cpu node]

✓ 1. Allocate a mem_section_usage struct

✓ 2. cfg ms->section_mem_map with the valid page descriptor](https://image.slidesharecdn.com/physicalmemorymodels-220620070231-42aa9f6d/75/Physical-Memory-Models-pdf-20-2048.jpg)
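The per-section loop of memory_present() can be sketched in userspace as follows. The flat `toy_sections[]` table, its size, and the `toy_` names are assumptions for illustration; the kernel uses a two-level mem_section array and encodes the nid inside section_mem_map rather than in a separate field.

```c
#include <assert.h>
#include <stdbool.h>

#define PFN_SECTION_SHIFT 15
#define PAGES_PER_SECTION (1UL << PFN_SECTION_SHIFT)
#define TOY_NR_SECTIONS   64UL      /* hypothetical table size */

/* Simplified section record: present flag plus the owning node id. */
struct toy_section { bool present; int nid; };
static struct toy_section toy_sections[TOY_NR_SECTIONS];

/* Mark every section touched by the PFN range [start, end) as present. */
static void toy_memory_present(int nid, unsigned long start, unsigned long end)
{
    /* Round down so the loop steps over whole sections. */
    start &= ~(PAGES_PER_SECTION - 1);
    for (unsigned long pfn = start; pfn < end; pfn += PAGES_PER_SECTION) {
        unsigned long nr = pfn >> PFN_SECTION_SHIFT;

        assert(nr < TOY_NR_SECTIONS);
        if (!toy_sections[nr].present) {
            toy_sections[nr].nid = nid;     /* remembered for later init */
            toy_sections[nr].present = true;
        }
    }
}

/* Count the sections that were marked present. */
static unsigned long toy_count_present(void)
{
    unsigned long count = 0;

    for (unsigned long nr = 0; nr < TOY_NR_SECTIONS; nr++)
        count += toy_sections[nr].present ? 1 : 0;
    return count;
}
```

Calling it once per memblock range marks a section as soon as any part of the range touches it, so a range of only 100 pages still activates its whole 128MB section.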


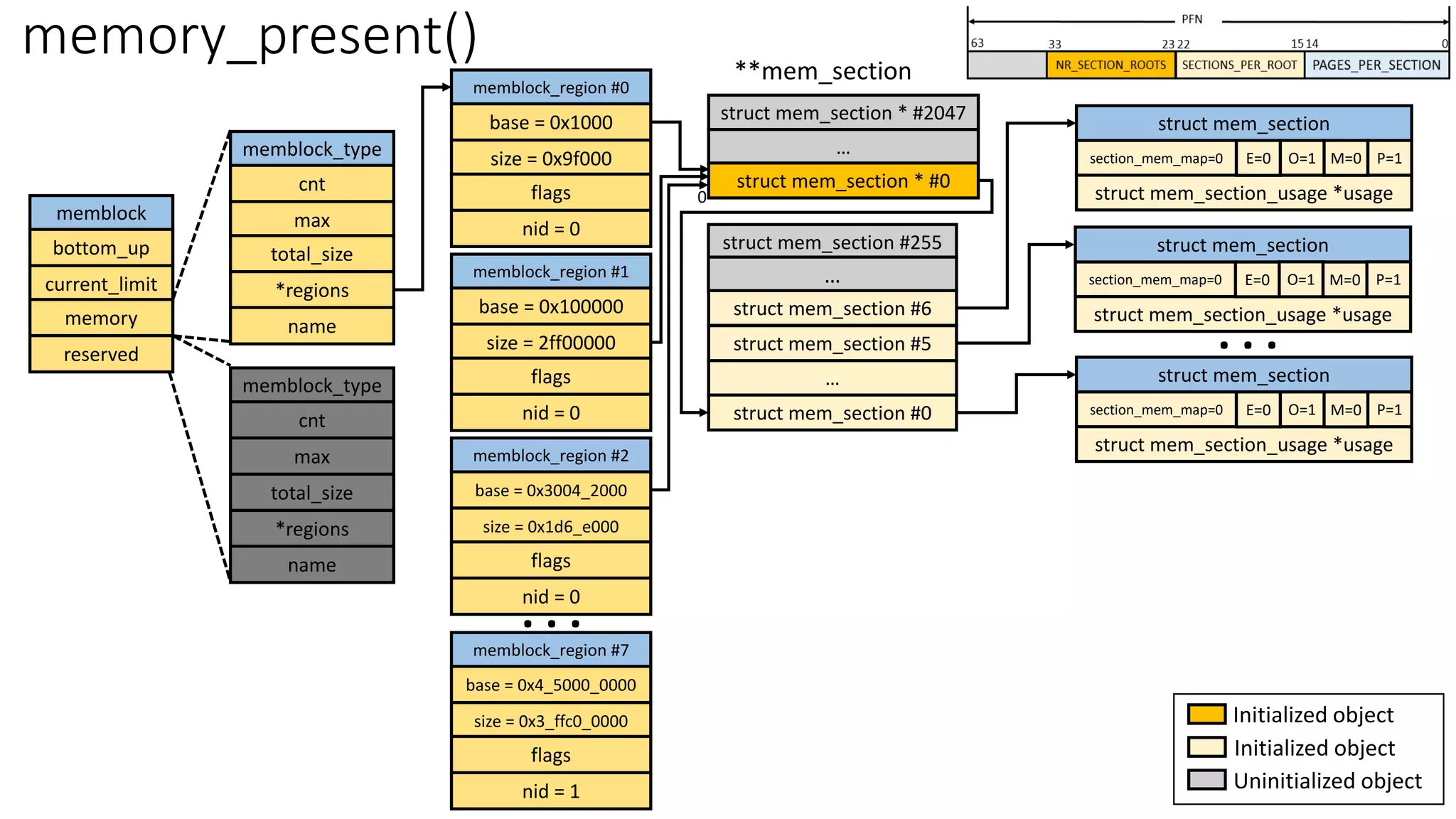

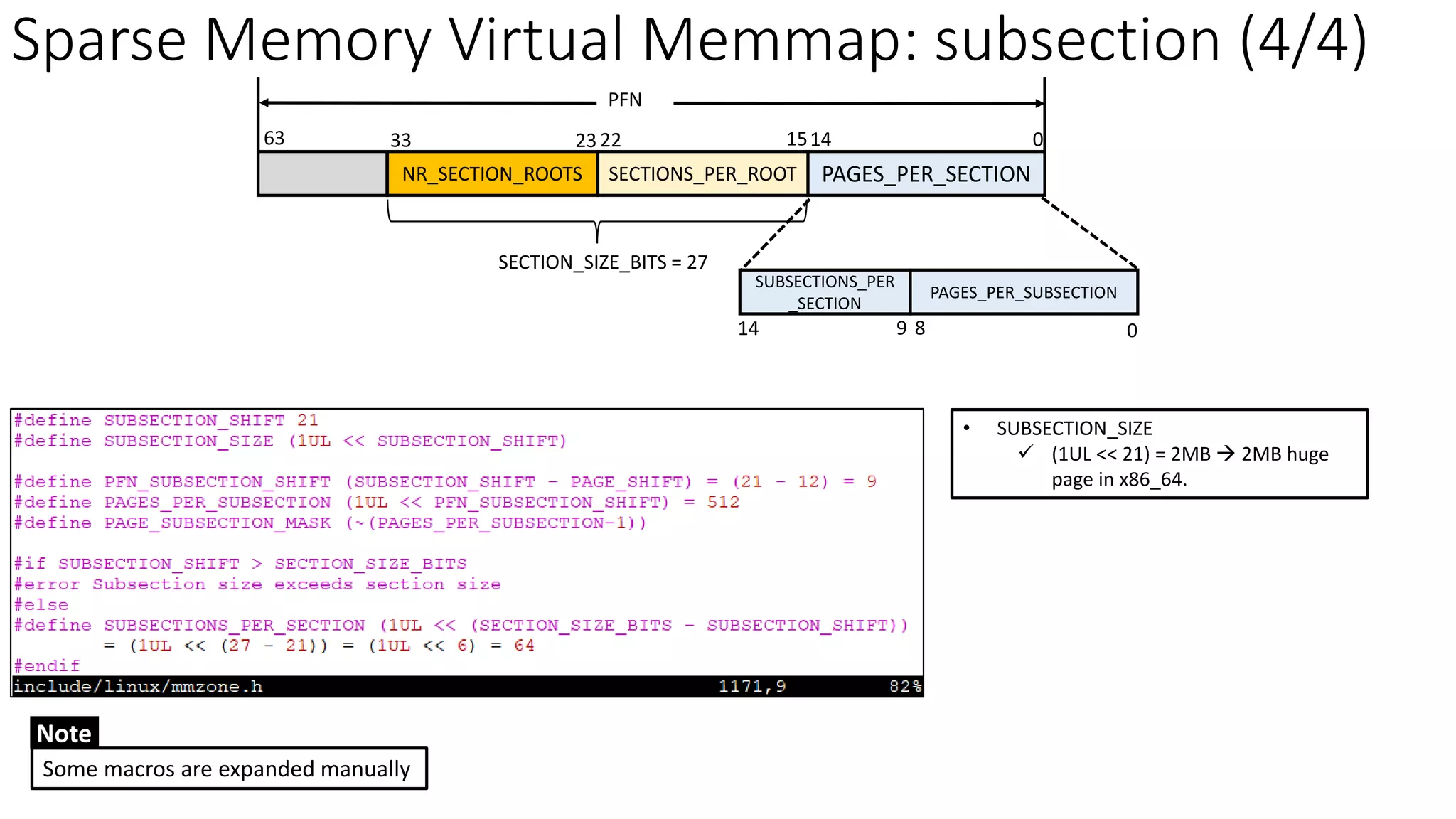

![Sparse Memory Virtual Memmap: subsection (1/4)

SECTIONS_PER_ROOT PAGES_PER_SECTION

0

14

15

22

NR_SECTION_ROOTS

23

33

PFN

63

SECTION_SIZE_BITS = 27

PAGES_PER_SUBSECTION

SUBSECTIONS_PER

_SECTION

14 9 0

8

struct mem_section

section_mem_map

struct mem_section_usage *usage

O=1

E=1 P=1

M=1

subsection #63

…

subsection #0

struct mem_section_usage

subsection_map[1] (bitmap)

pageblock_flags[0]

struct page #0

…

struct page #511

…

…

struct page #32767

struct page #32256

subsection

subsection

section

…

…

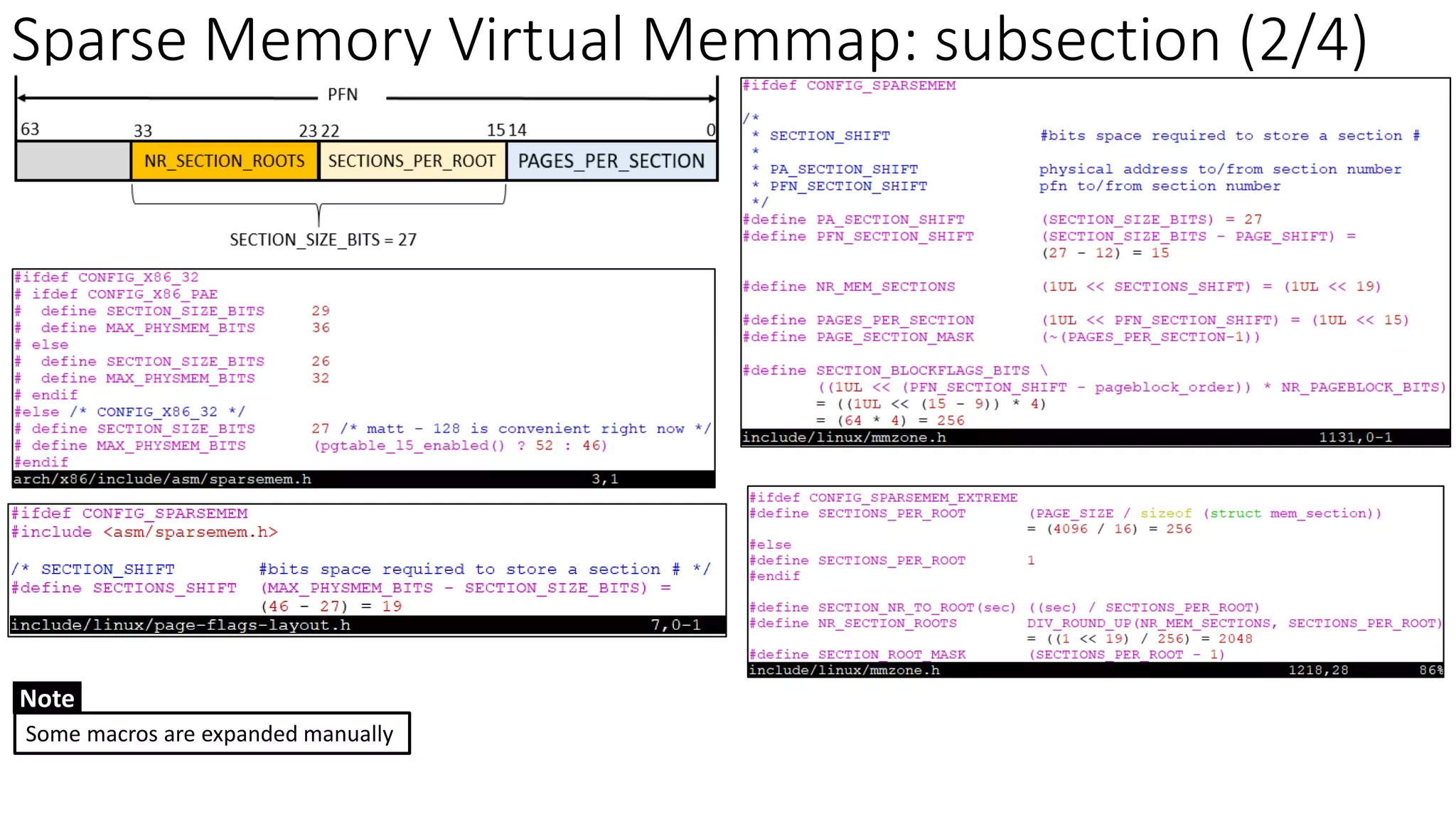

• subsection_map: bitmap to indicate if the corresponding subsection is valid

• pageblock_flags: pages of a subsection have the same flag (migration type)

sparsemem vmemmap *only*](https://image.slidesharecdn.com/physicalmemorymodels-220620070231-42aa9f6d/75/Physical-Memory-Models-pdf-31-2048.jpg)

![Sparse Memory Virtual Memmap: subsection (3/4)

SECTIONS_PER_ROOT PAGES_PER_SECTION

0

14

15

22

NR_SECTION_ROOTS

23

33

PFN

63

SECTION_SIZE_BITS = 27

PAGES_PER_SUBSECTION

SUBSECTIONS_PER

_SECTION

14 9 0

8

struct mem_section

section_mem_map

struct mem_section_usage *usage

O=1

E=1 P=1

M=1

subsection #63

…

subsection #0

struct mem_section_usage

subsection_map[1] (bitmap)

pageblock_flags[0]

struct page #0

…

struct page #511

…

…

struct page #32767

struct page #32256

subsection

subsection

section

…

…

• PAGES_PER_SUBSECTION = 512 pages

✓ 512 pages * 4KB = 2MB → 2MB huge page

in x86_64](https://image.slidesharecdn.com/physicalmemorymodels-220620070231-42aa9f6d/75/Physical-Memory-Models-pdf-33-2048.jpg)
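The bit layout drawn on these subsection slides can be turned into a small decoder. It assumes the values shown there: bits 0 to 14 index the page within a section, bits 15 to 22 the section within a root (SECTIONS_PER_ROOT = 256), and within a section bits 0 to 8 index the page within a subsection. `struct pfn_fields` and `decode_pfn` are hypothetical names.

```c
#include <assert.h>

#define PFN_SECTION_SHIFT    15     /* bits 0-14: page within a section */
#define PFN_SUBSECTION_SHIFT 9      /* bits 0-8: page within a subsection */
#define SECTIONS_PER_ROOT    256UL  /* bits 15-22 of the PFN */
#define PAGES_PER_SECTION    (1UL << PFN_SECTION_SHIFT)
#define PAGES_PER_SUBSECTION (1UL << PFN_SUBSECTION_SHIFT)

struct pfn_fields {
    unsigned long root;         /* index into the section root array */
    unsigned long section;      /* section index within that root */
    unsigned long subsection;   /* 0..63 within the section */
    unsigned long page;         /* 0..511 within the subsection */
};

/* Break a PFN into the fields shown in the slide's bit diagram. */
static struct pfn_fields decode_pfn(unsigned long pfn)
{
    unsigned long section_nr = pfn >> PFN_SECTION_SHIFT;
    unsigned long in_section = pfn & (PAGES_PER_SECTION - 1);
    struct pfn_fields f = {
        .root = section_nr / SECTIONS_PER_ROOT,
        .section = section_nr % SECTIONS_PER_ROOT,
        .subsection = in_section >> PFN_SUBSECTION_SHIFT,
        .page = in_section & (PAGES_PER_SUBSECTION - 1),
    };

    assert(f.subsection < 64 && f.page < 512);
    return f;
}
```

A subsection of 512 pages at 4KB each spans 2MB, which is why it lines up with the 2MB huge page size on x86_64.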

![subsection: subsection_map users?

struct mem_section

section_mem_map

struct mem_section_usage *usage

O=1

E=1 P=1

M=1

subsection #63

…

subsection #0

struct mem_section_usage

subsection_map[1] (bitmap)

pageblock_flags[0]

struct page #0

…

struct page #511

…

…

struct page #32767

struct page #32256

subsection

subsection

section

…

…

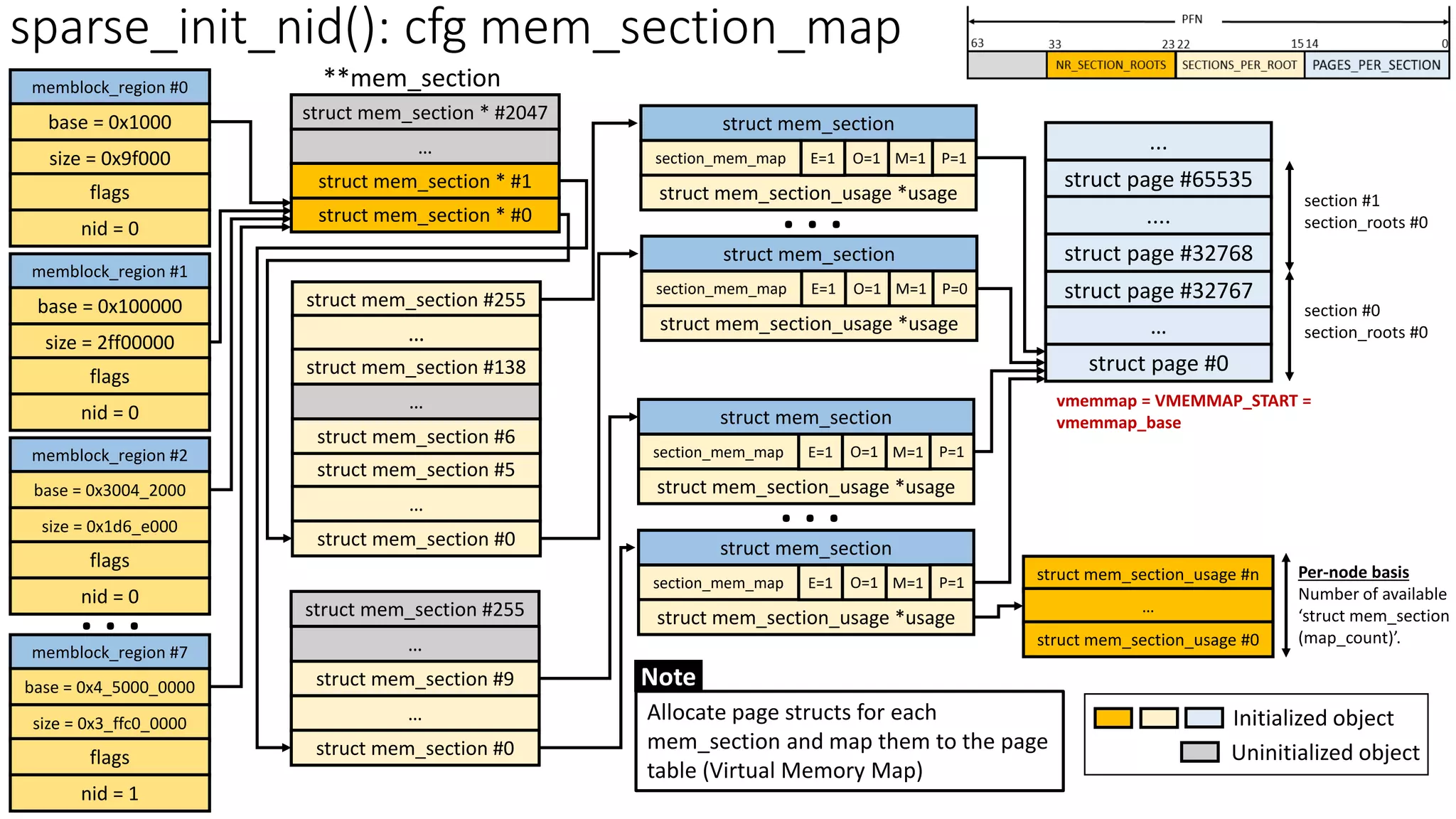

• init stage

✓ paging_init -> zone_sizes_init -> free_area_init -> subsection_map_init -> subsection_mask_set

➢ Set the corresponding bit map for the specific subsection

• Reference stage

✓ pfn_section_valid(struct mem_section *ms, unsigned long pfn)

➢ Users

▪ [mm/page_alloc.c: 5089] free_pages -> virt_addr_valid -> __virt_addr_valid -> pfn_valid -> pfn_section_valid

▪ [drivers/char/mem.c: 416] mmap_kmem -> pfn_valid -> pfn_section_valid ➔ /dev/mem (`man mem`)

▪ …

subsection_map users](https://image.slidesharecdn.com/physicalmemorymodels-220620070231-42aa9f6d/75/Physical-Memory-Models-pdf-35-2048.jpg)
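The set-then-check pattern behind subsection_mask_set and pfn_section_valid can be sketched with a single 64-bit bitmap, one bit per subsection. The `toy_` names and the use of a plain `uint64_t` are assumptions; the kernel uses its generic bitmap helpers on `subsection_map`.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define PAGES_PER_SECTION    32768UL
#define PAGES_PER_SUBSECTION 512UL

/* One bit per subsection: 64 subsections fit in one 64-bit word. */
struct toy_section_usage { uint64_t subsection_map; };

/* Index of the subsection (0..63) that holds pfn inside its section. */
static unsigned int subsection_index(unsigned long pfn)
{
    return (unsigned int)((pfn & (PAGES_PER_SECTION - 1)) / PAGES_PER_SUBSECTION);
}

/* Init stage: mark the subsections covering [pfn, pfn + nr_pages). */
static void toy_subsection_set(struct toy_section_usage *u,
                               unsigned long pfn, unsigned long nr_pages)
{
    unsigned int first, last;

    assert(nr_pages > 0);
    /* This sketch only handles ranges inside a single section. */
    assert(pfn / PAGES_PER_SECTION == (pfn + nr_pages - 1) / PAGES_PER_SECTION);
    first = subsection_index(pfn);
    last = subsection_index(pfn + nr_pages - 1);
    for (unsigned int i = first; i <= last; i++)
        u->subsection_map |= 1ULL << i;
}

/* Reference stage: a PFN is valid only if its subsection bit is set. */
static bool toy_pfn_section_valid(const struct toy_section_usage *u,
                                  unsigned long pfn)
{
    return (u->subsection_map >> subsection_index(pfn)) & 1;
}
```

This lets pfn_valid-style checks reject page frames in a partially populated section at 2MB granularity instead of treating the whole 128MB section as valid.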

![struct mem_section

section_mem_map

struct mem_section_usage *usage

O=1

E=1 P=1

M=1

subsection #63

…

subsection #0

struct mem_section_usage

subsection_map[1] (bitmap)

pageblock_flags[0]

struct page #0

…

struct page #511

…

…

struct page #32767

struct page #32256

subsection

subsection

section

…

…

• Hotplug stage

✓ Add

➢ #A1 [drivers/acpi/acpi_memhotplug.c: 311] acpi_memory_device_add -> acpi_memory_enable_device -> __add_memory -> add_memory_resource -> arch_add_memory -> add_pages -> __add_pages -> sparse_add_section -> section_activate -> fill_subsection_map -> subsection_mask_set

➢ #A2 [drivers/dax/kmem.c: 43] dev_dax_kmem_probe -> add_memory_driver_managed -> add_memory_resource -> same as #A1

✓ Remove

➢ #R1 [drivers/acpi/acpi_memhotplug.c: 311] acpi_memory_device_remove -> __remove_memory -> try_remove_memory -> arch_remove_memory -> __remove_pages -> __remove_section -> sparse_remove_section -> section_deactivate -> clear_subsection_map

➢ #R2 [drivers/dax/kmem.c: 139] dev_dax_kmem_remove -> remove_memory -> try_remove_memory -> same as #R1

subsection_map users

subsection: subsection_map users?](https://image.slidesharecdn.com/physicalmemorymodels-220620070231-42aa9f6d/75/Physical-Memory-Models-pdf-36-2048.jpg)

![pageblock_flags: pageblock migration type

struct mem_section

section_mem_map

struct mem_section_usage *usage

O=1

E=1 P=1

M=1

subsection #63

…

subsection #0

struct mem_section_usage

subsection_map[1] (bitmap)

pageblock_flags[0]

struct page #0

…

struct page #511

…

…

struct page #32767

struct page #32256

subsection

subsection

section

…

…

unsigned long pageblock_flags[4]

4-bit MT . . . 4-bit MT

4-bit MT

4-bit MT . . . 4-bit MT

4-bit MT

4-bit MT . . . 4-bit MT

4-bit MT

4-bit MT . . . 4-bit MT

4-bit MT

[0]

Dynamically allocated

[1]

[2]

[3]

subsection #0: Migration Type

subsection #16: Migration Type

subsection #32: Migration Type

subsection #48: Migration Type

Migration type is configured in setup_arch -> … -> memmap_init_zone](https://image.slidesharecdn.com/physicalmemorymodels-220620070231-42aa9f6d/75/Physical-Memory-Models-pdf-37-2048.jpg)
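The packing shown on this slide, four bits of migration type per 512-page pageblock and 64 pageblocks per section, can be sketched as below. It assumes 64-bit words, so 16 pageblocks share one array element as in the slide's pageblock_flags[4]; the `toy_` names and the numeric type values are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define PAGES_PER_SECTION 32768UL
#define PAGEBLOCK_ORDER   9     /* 512 pages per pageblock */
#define NR_PAGEBLOCK_BITS 4     /* "4-bit MT" per pageblock */
#define PAGEBLOCK_WORDS   4     /* 64 blocks * 4 bits / 64 bits per word */

/* Hypothetical migration type values used only by this sketch. */
#define TOY_MIGRATE_MOVABLE     1u
#define TOY_MIGRATE_RECLAIMABLE 2u

struct toy_section_usage { uint64_t pageblock_flags[PAGEBLOCK_WORDS]; };

/* Bit offset of the 4-bit field for the pageblock that contains pfn. */
static unsigned int pageblock_bit(unsigned long pfn)
{
    unsigned long block = (pfn & (PAGES_PER_SECTION - 1)) >> PAGEBLOCK_ORDER;

    return (unsigned int)block * NR_PAGEBLOCK_BITS;
}

/* Overwrite the 4-bit field for pfn's pageblock with mt. */
static void toy_set_migratetype(struct toy_section_usage *u,
                                unsigned long pfn, unsigned int mt)
{
    unsigned int bit = pageblock_bit(pfn);
    uint64_t mask = 0xFULL << (bit % 64);

    assert(mt < (1u << NR_PAGEBLOCK_BITS));
    u->pageblock_flags[bit / 64] =
        (u->pageblock_flags[bit / 64] & ~mask) | ((uint64_t)mt << (bit % 64));
}

/* Read the 4-bit field for pfn's pageblock. */
static unsigned int toy_get_migratetype(const struct toy_section_usage *u,
                                        unsigned long pfn)
{
    unsigned int bit = pageblock_bit(pfn);

    return (unsigned int)((u->pageblock_flags[bit / 64] >> (bit % 64)) & 0xF);
}
```

Every page in the same 512-page block reads back the same value, and block #16 lands in element [1] of the array, matching the slide's labels.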


![pageblock: set migration type

free_area_init

print zone ranges and early memory node ranges

for_each_mem_pfn_range(..)

print memory range for each memblock

subsection_map_init

mminit_verify_pageflags_layout

setup_nr_node_ids

init_unavailable_mem

for_each_online_node(nid)

free_area_init_node

node_set_state

check_for_memory

get_pfn_range_for_nid

calculate_node_totalpages

pgdat_set_deferred_range

free_area_init_core

free_area_init_core

memmap_init

for (j = 0; j < MAX_NR_ZONES; j++)

memmap_init_zone

subsection_map_init

subsection_mask_set

for (nr = start_sec; nr <= end_sec; nr++)

bitmap_set

calculate arch_zone_{lowest, highest}_possible_pfn[]

for (pfn = start_pfn; pfn < end_pfn;)

set_pageblock_migratetype

__init_single_page

set_pageblock_migratetype

• [System init stage] each pageblock is initialized to MIGRATE_MOVABLE](https://image.slidesharecdn.com/physicalmemorymodels-220620070231-42aa9f6d/75/Physical-Memory-Models-pdf-39-2048.jpg)

![pageblock_flags: pageblock migration type

struct mem_section

section_mem_map

struct mem_section_usage *usage

O=1

E=1 P=1

M=1

subsection #63

…

subsection #0

struct mem_section_usage

subsection_map[1] (bitmap)

pageblock_flags[0]

struct page #0

…

struct page #511

…

…

struct page #32767

struct page #32256

subsection

subsection

section

…

…

unsigned long pageblock_flags[4]

4-bit MT . . . 4-bit MT

4-bit MT

4-bit MT . . . 4-bit MT

4-bit MT

4-bit MT . . . 4-bit MT

4-bit MT

4-bit MT . . . 4-bit MT

4-bit MT

[0]

Dynamically allocated

[1]

[2]

[3]

subsection #0: Migration Type

subsection #16: Migration Type

subsection #32: Migration Type

subsection #48: Migration Type

[CONFIG_HUGETLB_PAGE=y]

pages of subsection = pages of pageblock = 512 pages (order = 9)](https://image.slidesharecdn.com/physicalmemorymodels-220620070231-42aa9f6d/75/Physical-Memory-Models-pdf-41-2048.jpg)

![Memory Model – Sparse Memory (sparsemem wo/ vmemmap)

struct mem_section

page frame

....

page frame

Physical Memory

**mem_section

struct mem_section

struct mem_section

…

struct mem_section *

page frame

....

page frame

....

struct page #0

struct page #n

....

struct page #0

Node #1

(hotplug)

Node #0

…

struct mem_section *

1. [section_mem_map] Dynamic page structure: pre-allocate page structures based on the number of available page frames

✓ See: memblock structure

2. Supports physical memory hotplug

3. Minimum unit: mem_section (PAGES_PER_SECTION = 32768)

✓ Each memory section covers 32768 * 4KB (page size) = 128MB of memory

4. [NUMA]: “struct mem_section” reduces the impact of memory holes

Note

struct page #m+n-1](https://image.slidesharecdn.com/physicalmemorymodels-220620070231-42aa9f6d/75/Physical-Memory-Models-pdf-47-2048.jpg)