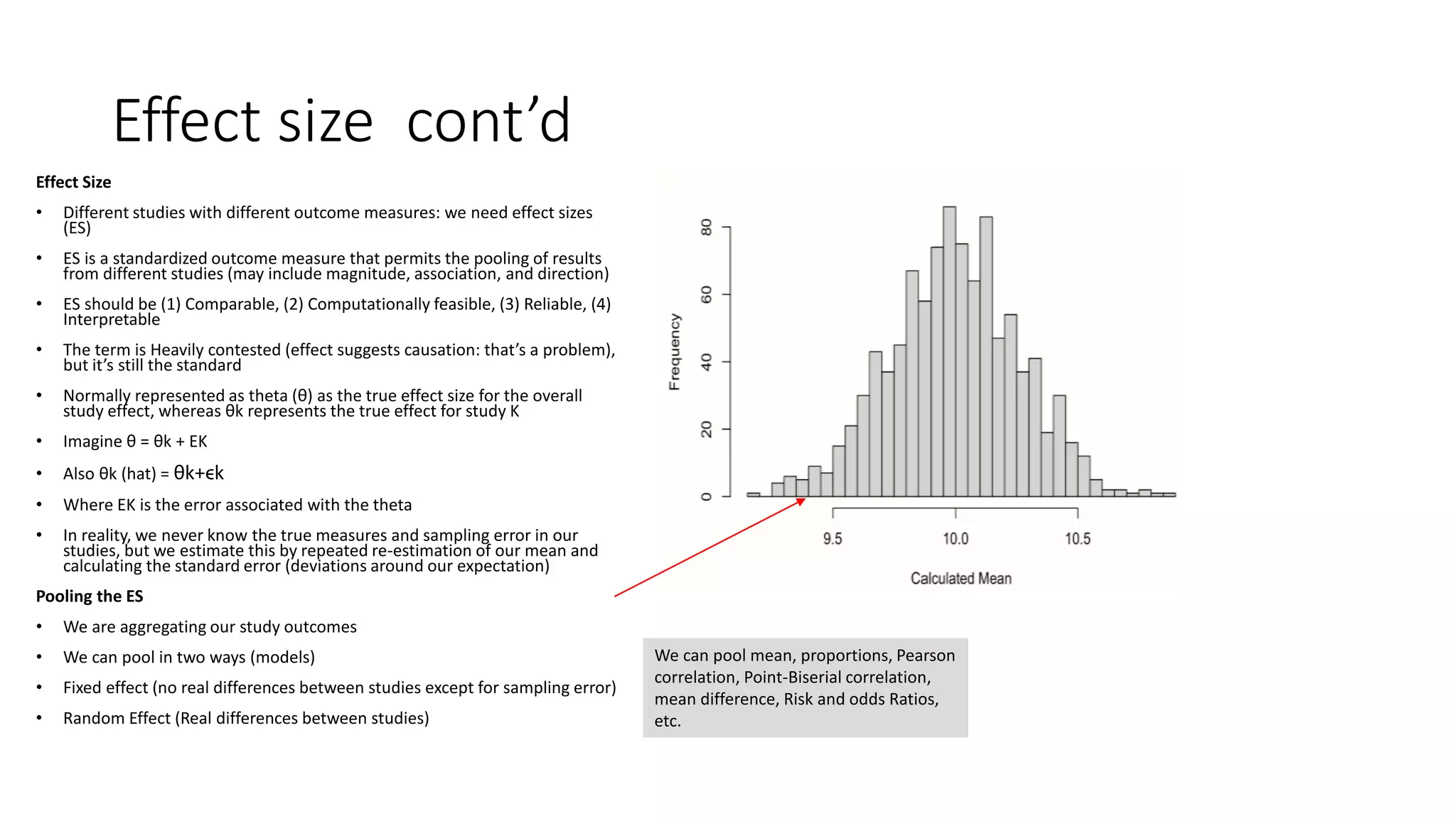

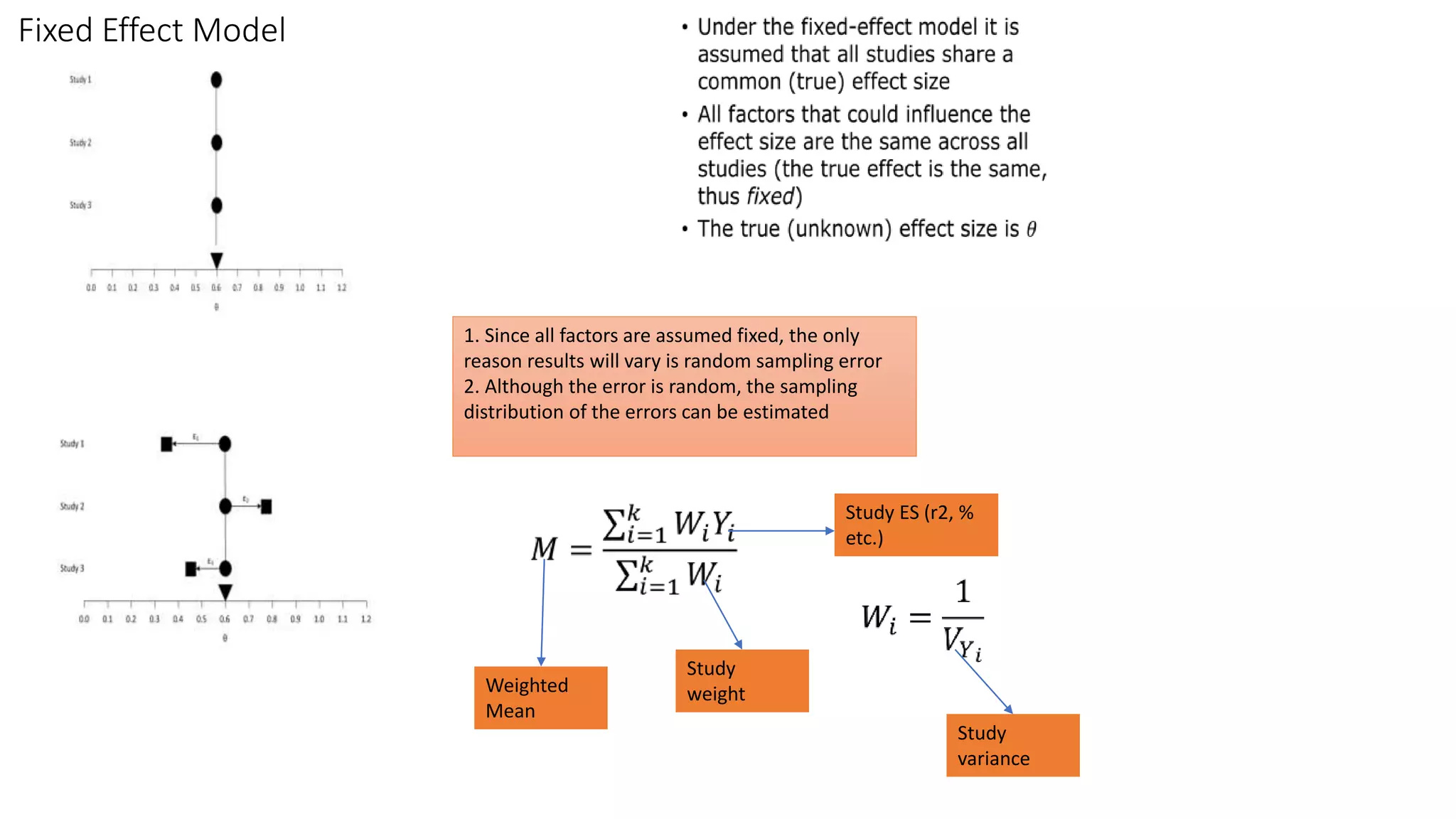

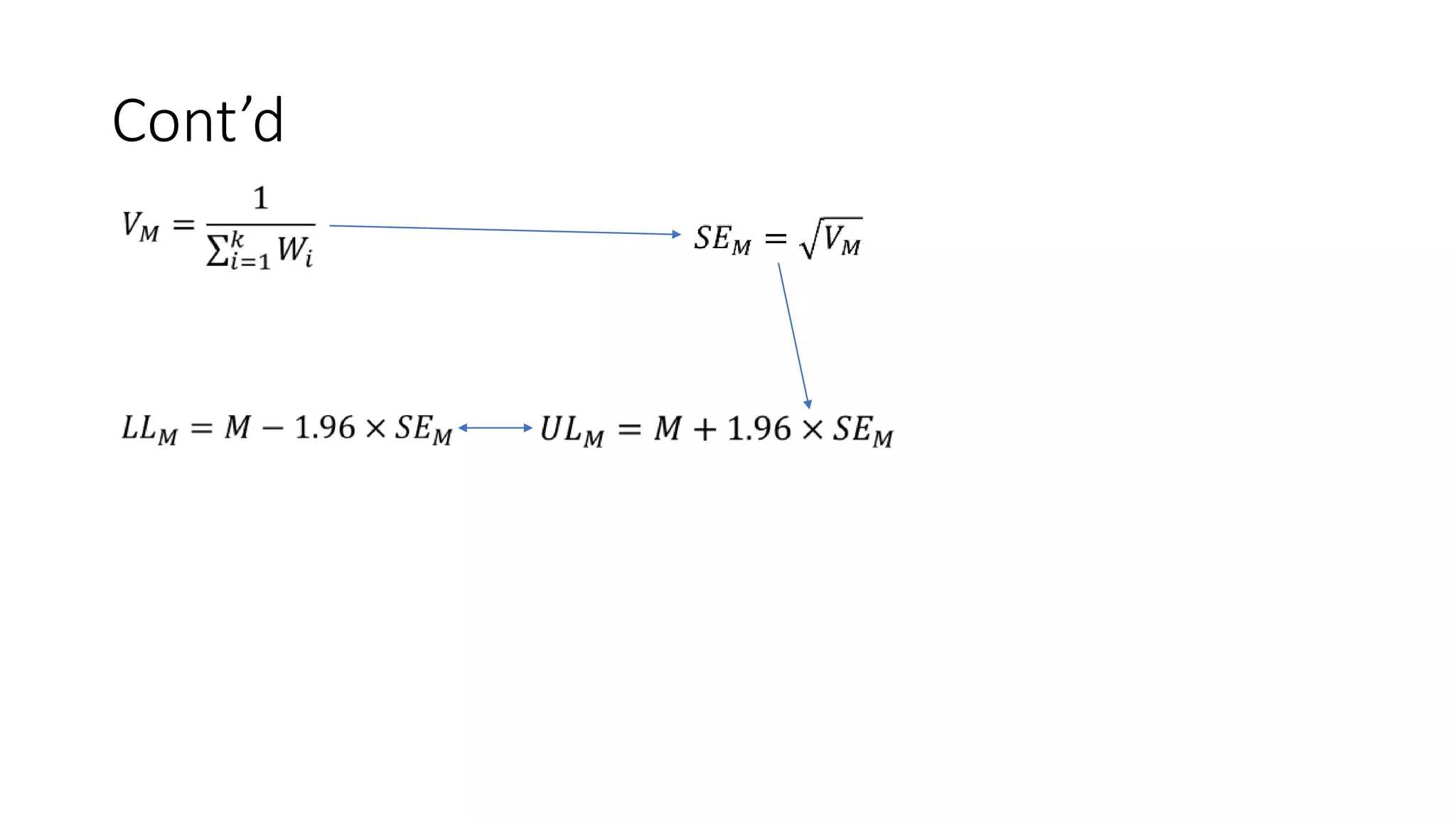

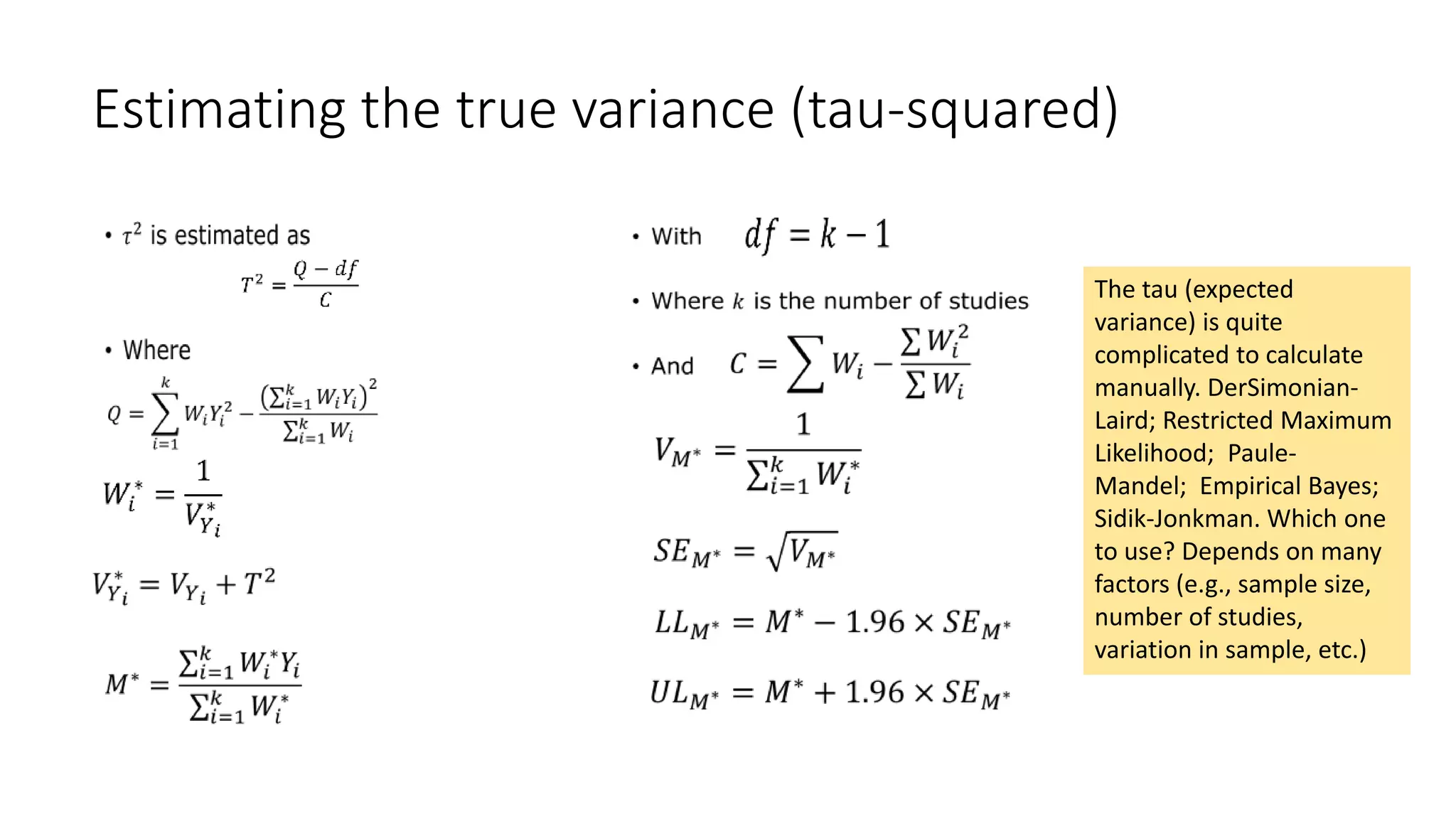

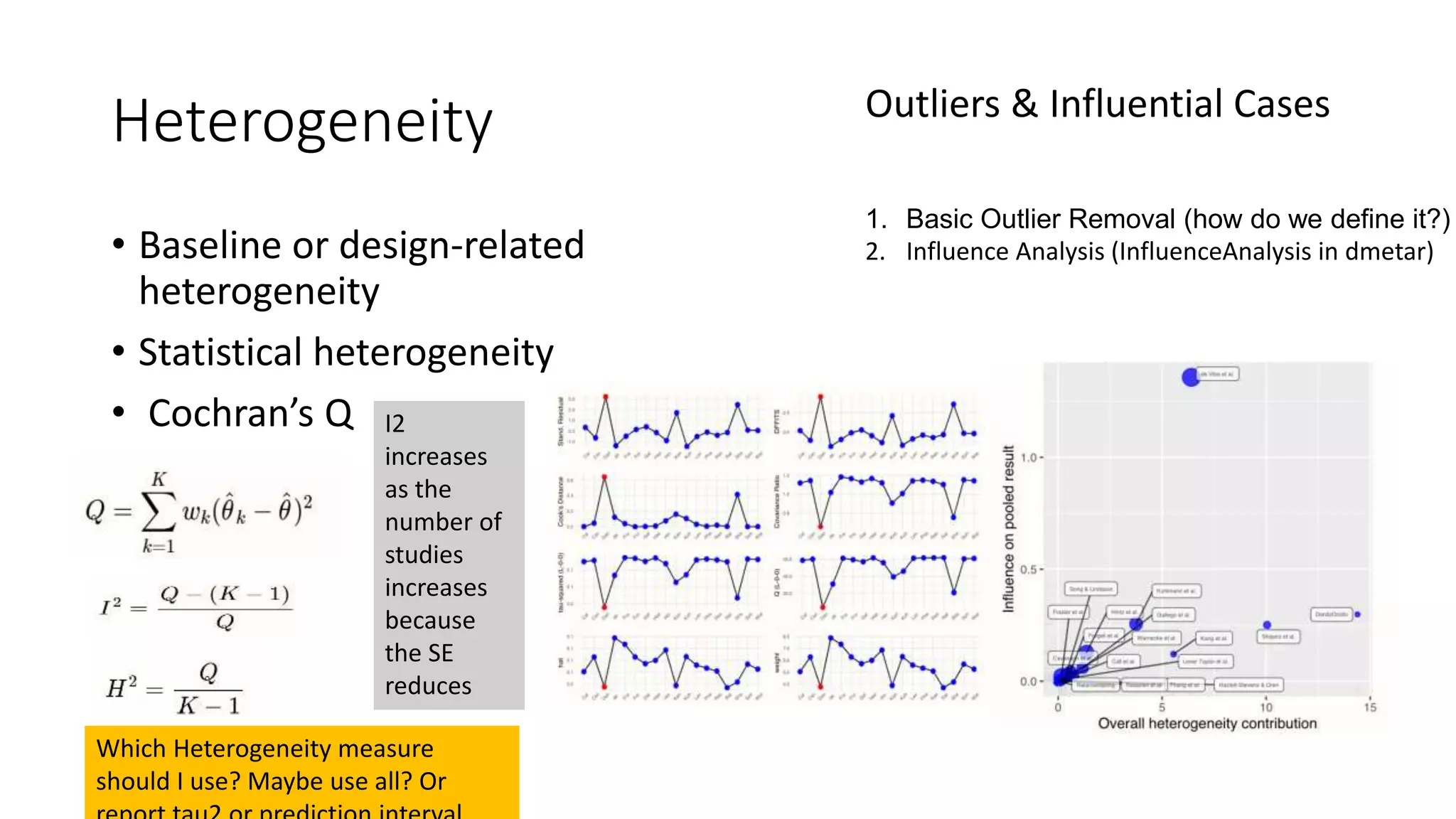

The document introduces meta-analysis, emphasizing its role in statistically synthesizing quantitative results from multiple studies and distinguishing it from systematic reviews. It traces how the concept developed, its advantages and potential pitfalls, and the factors that guide the choice between fixed-effect and random-effects models. It also discusses the importance of effect size and briefly surveys software tools for conducting meta-analyses.