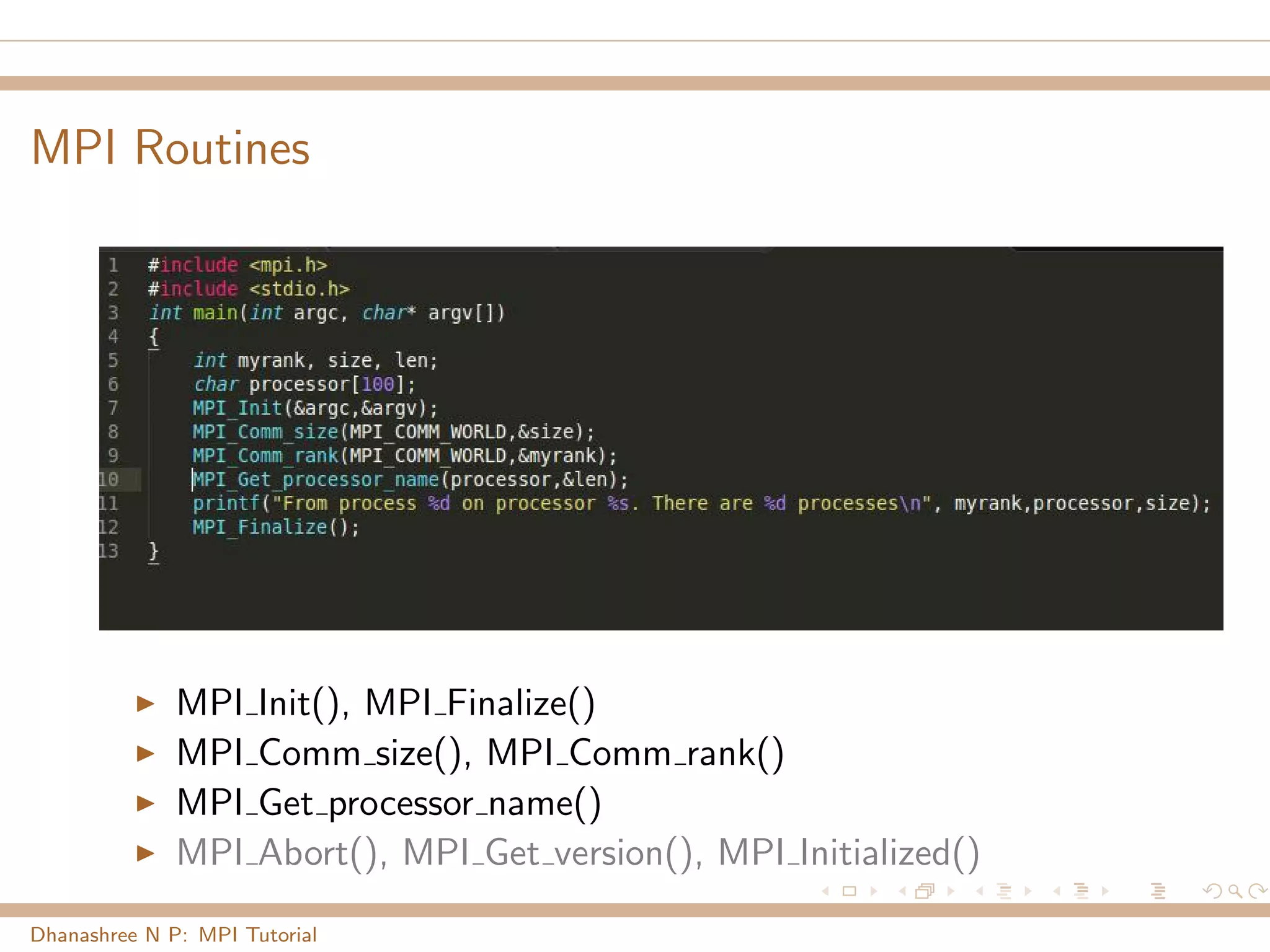

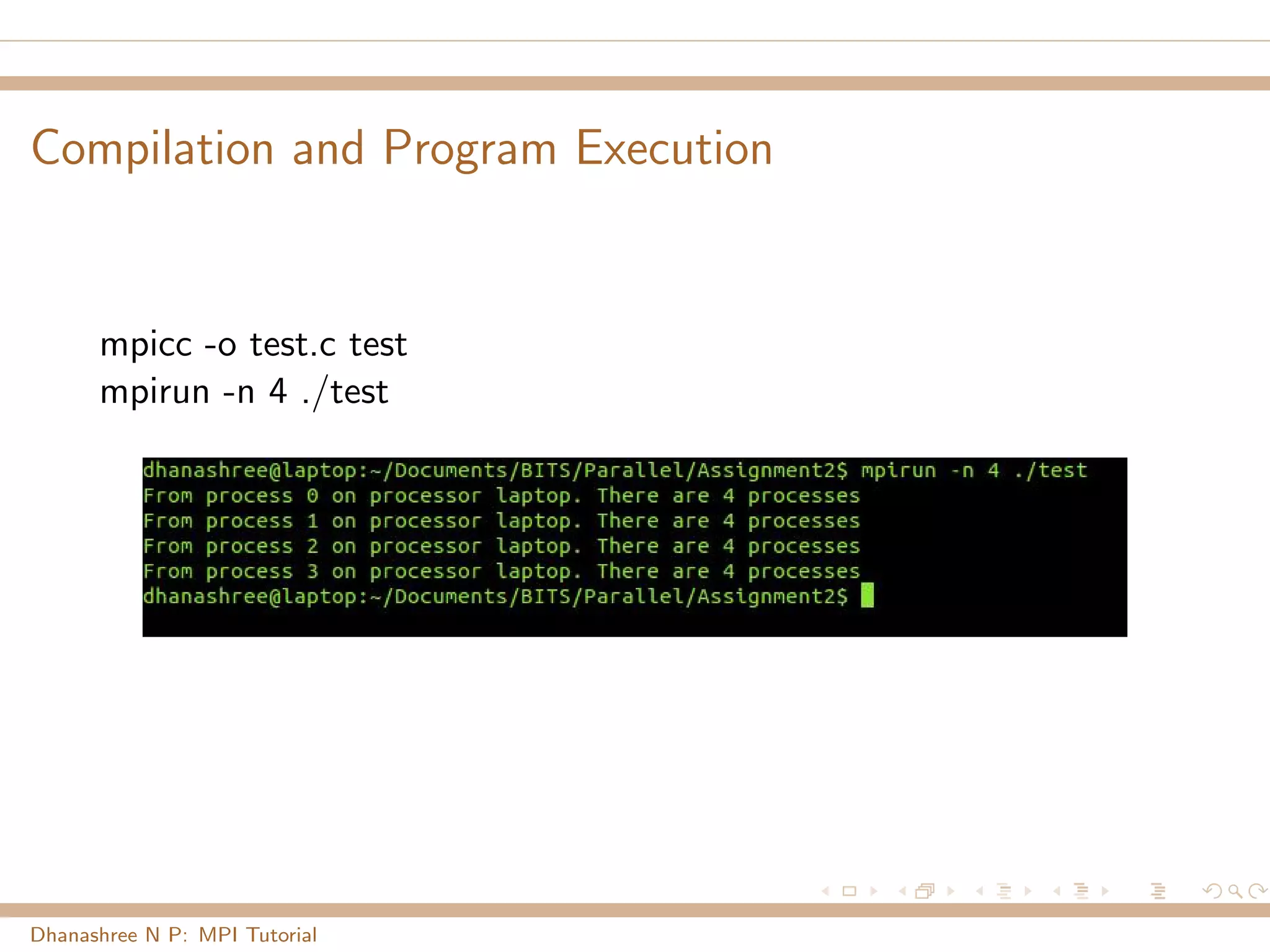

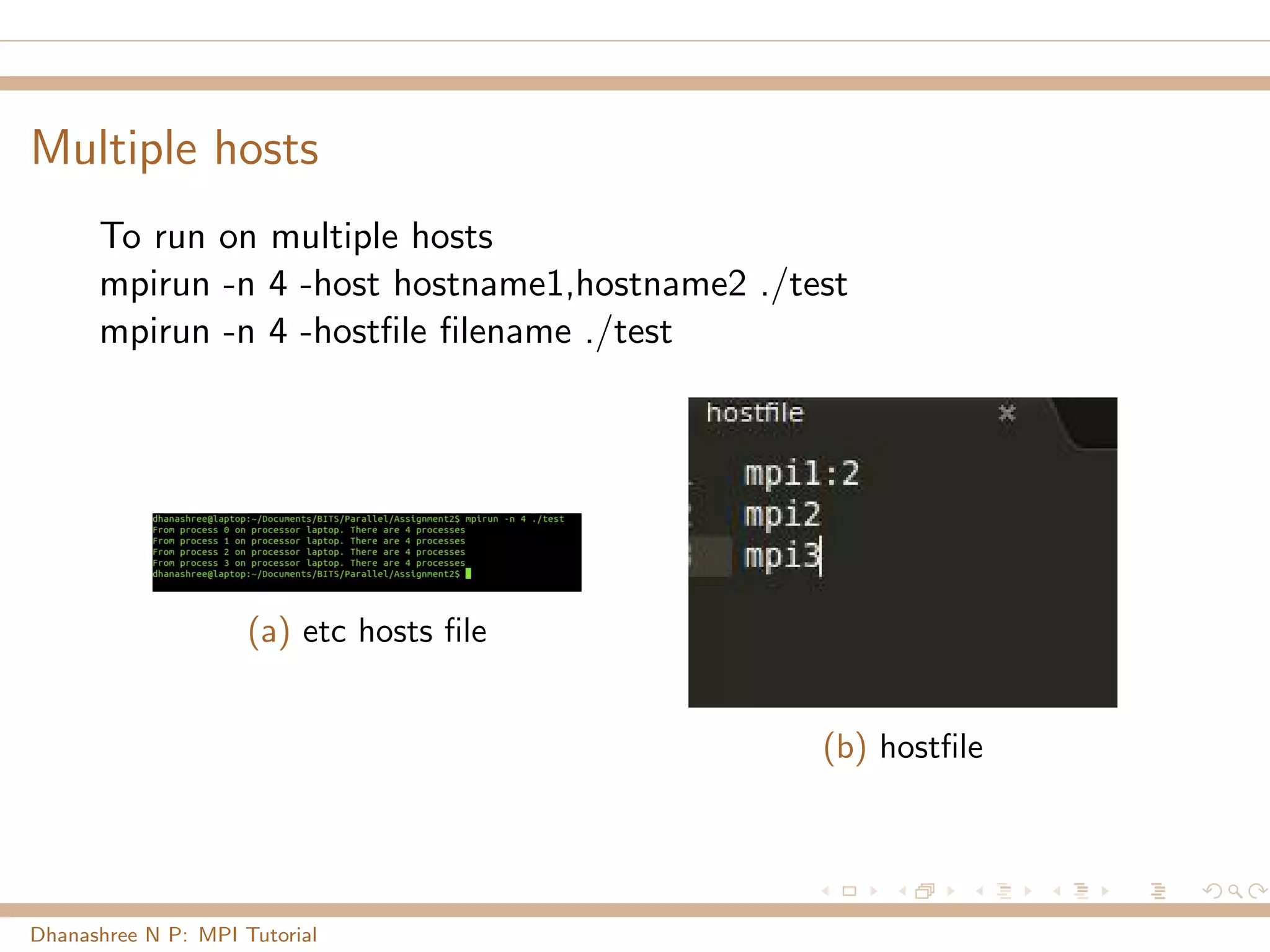

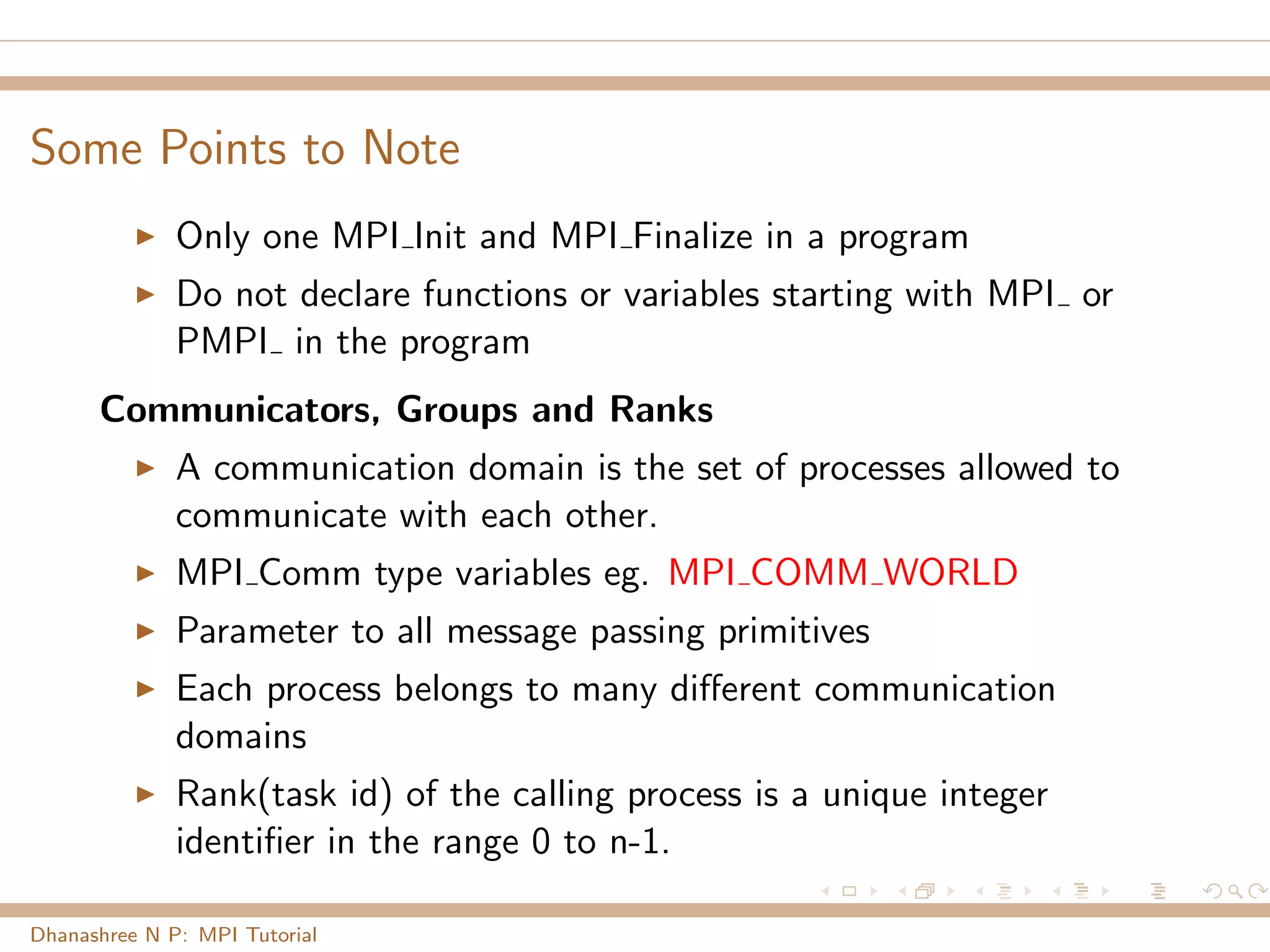

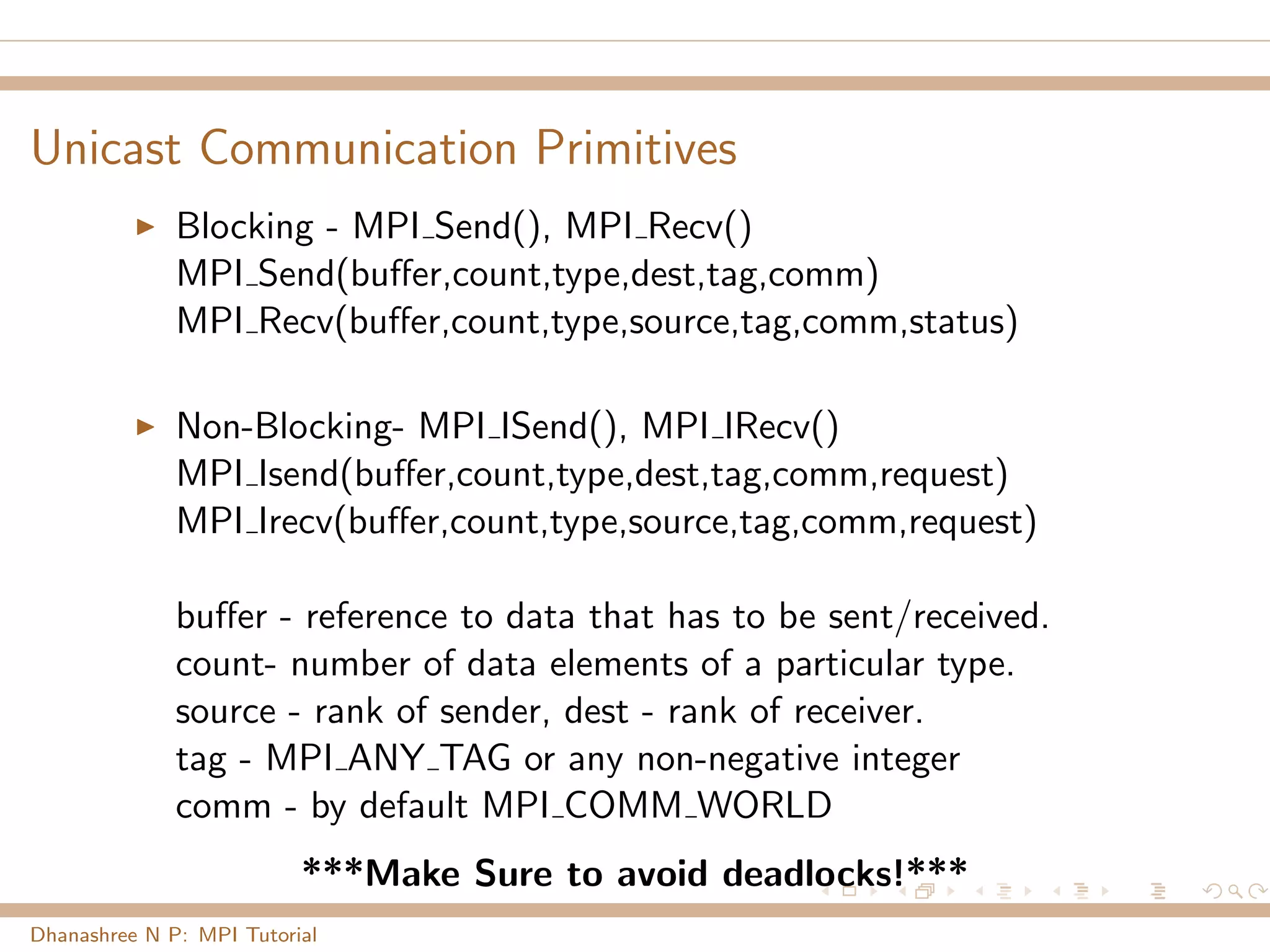

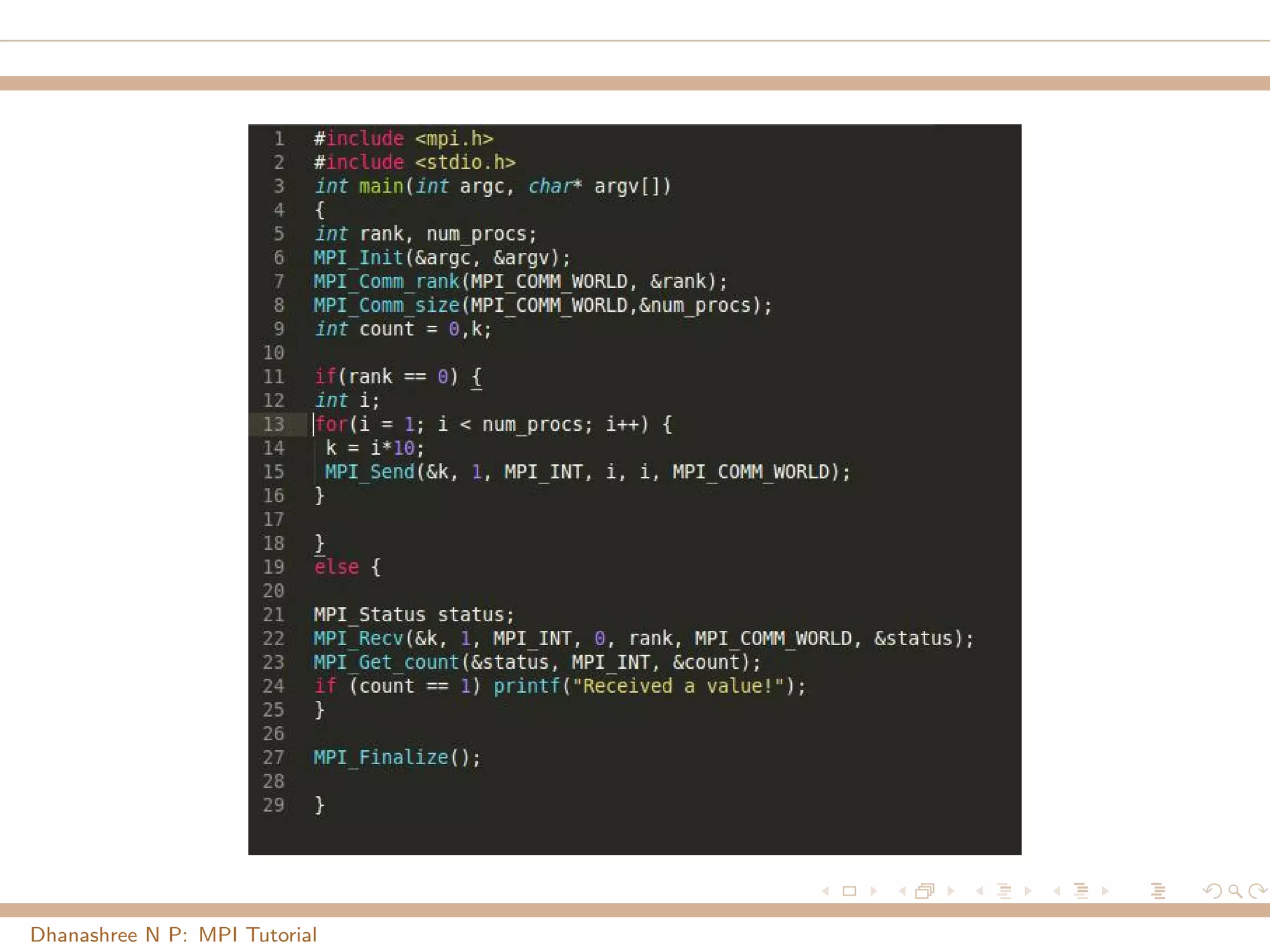

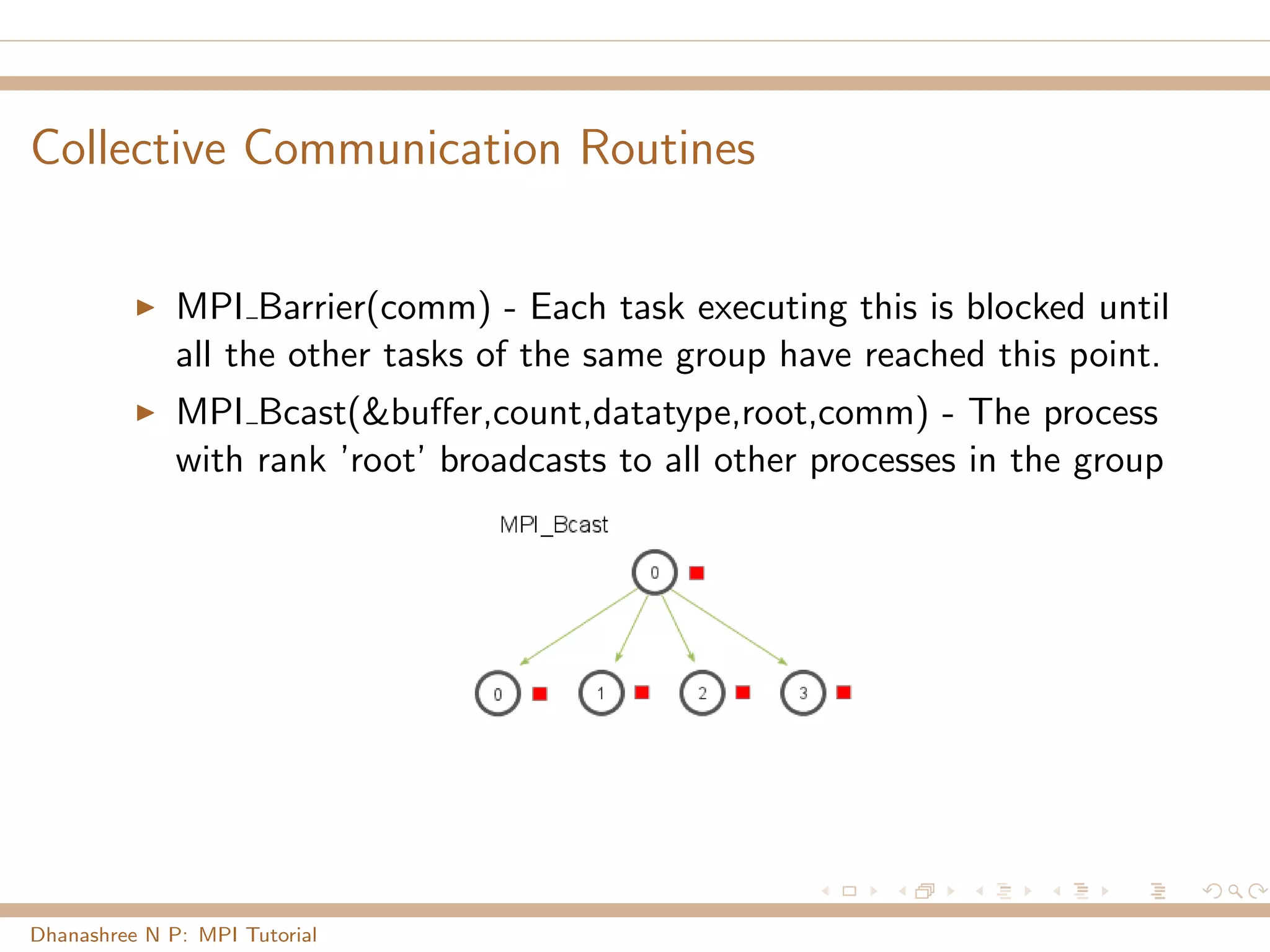

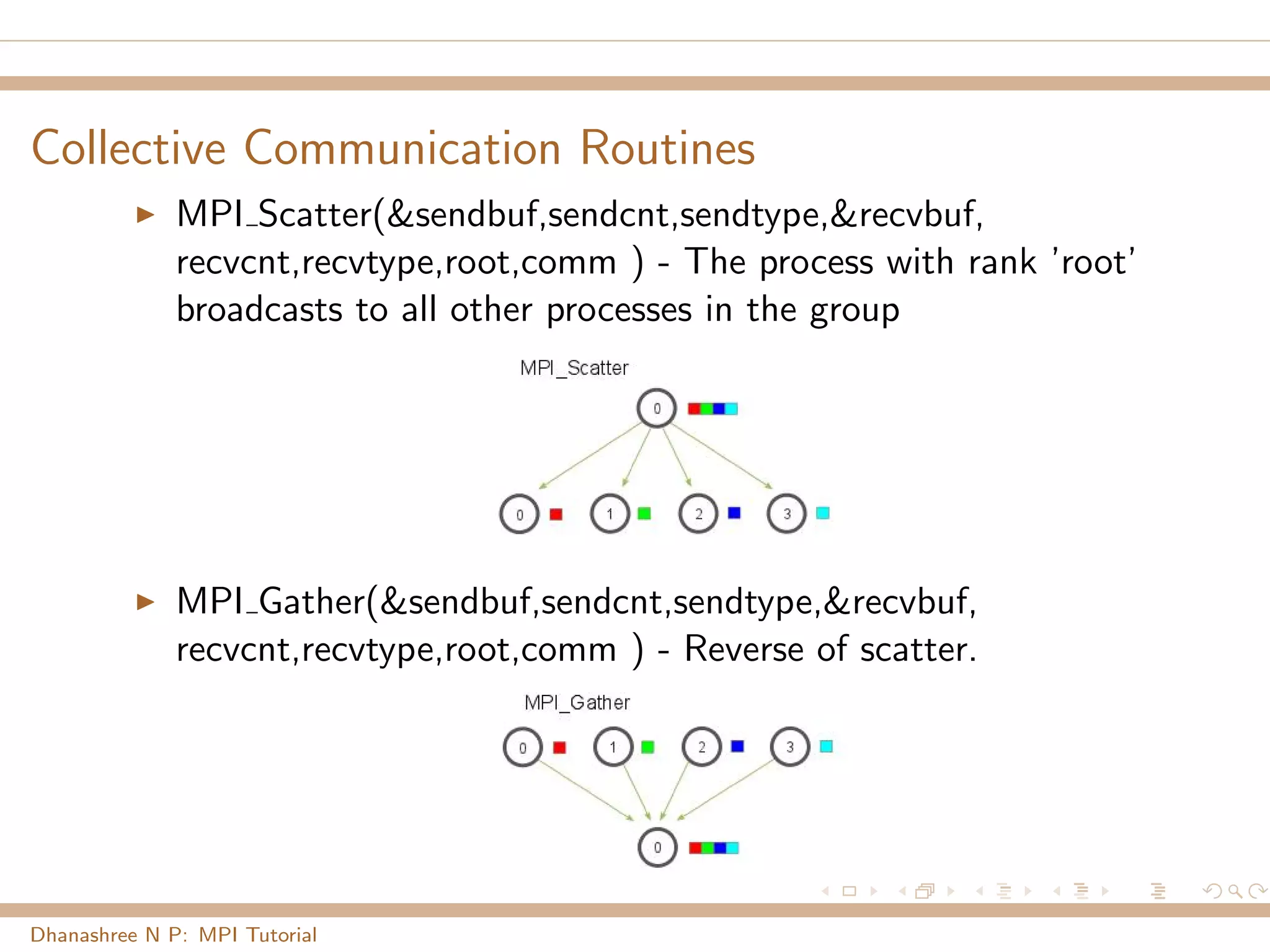

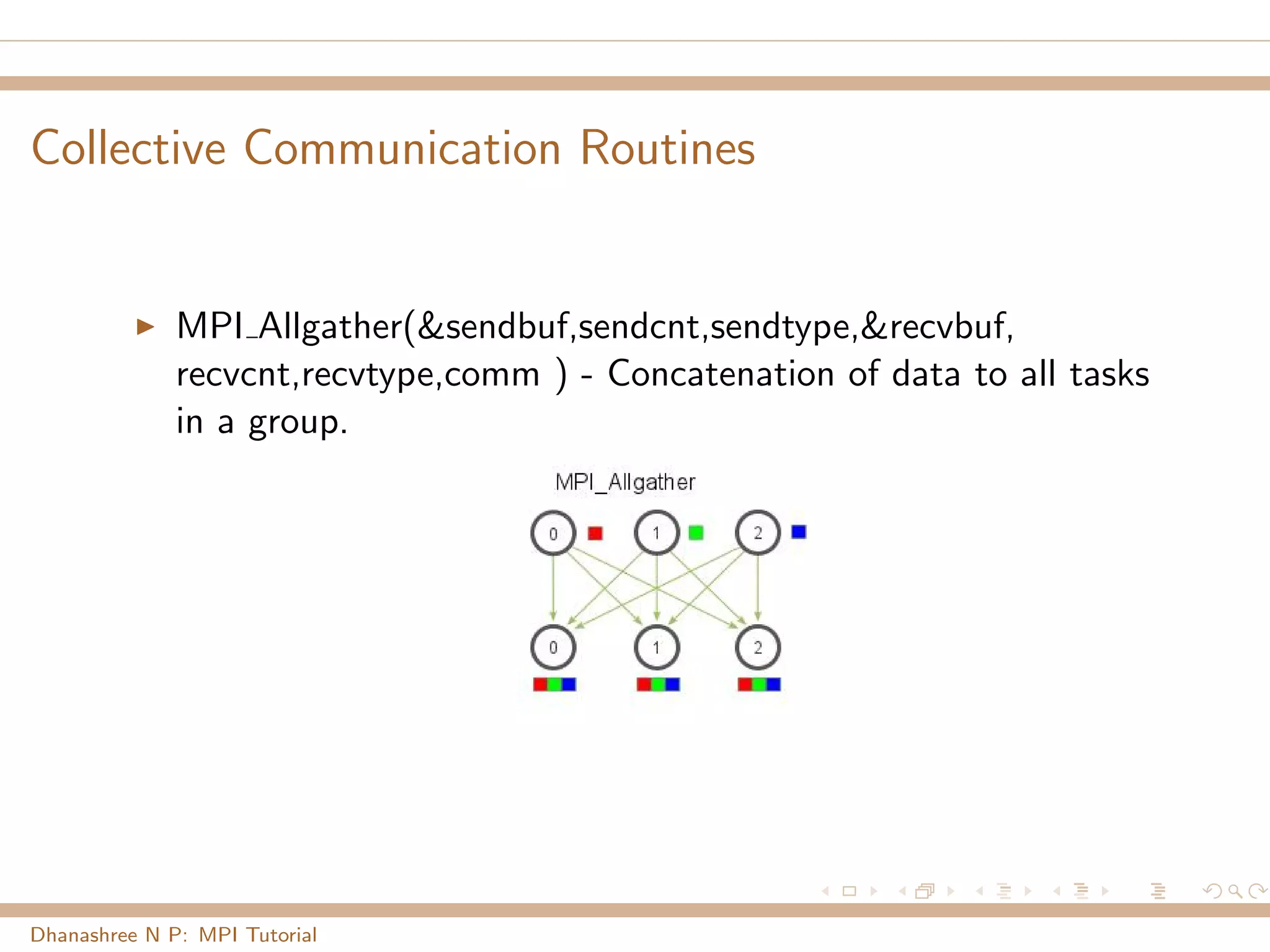

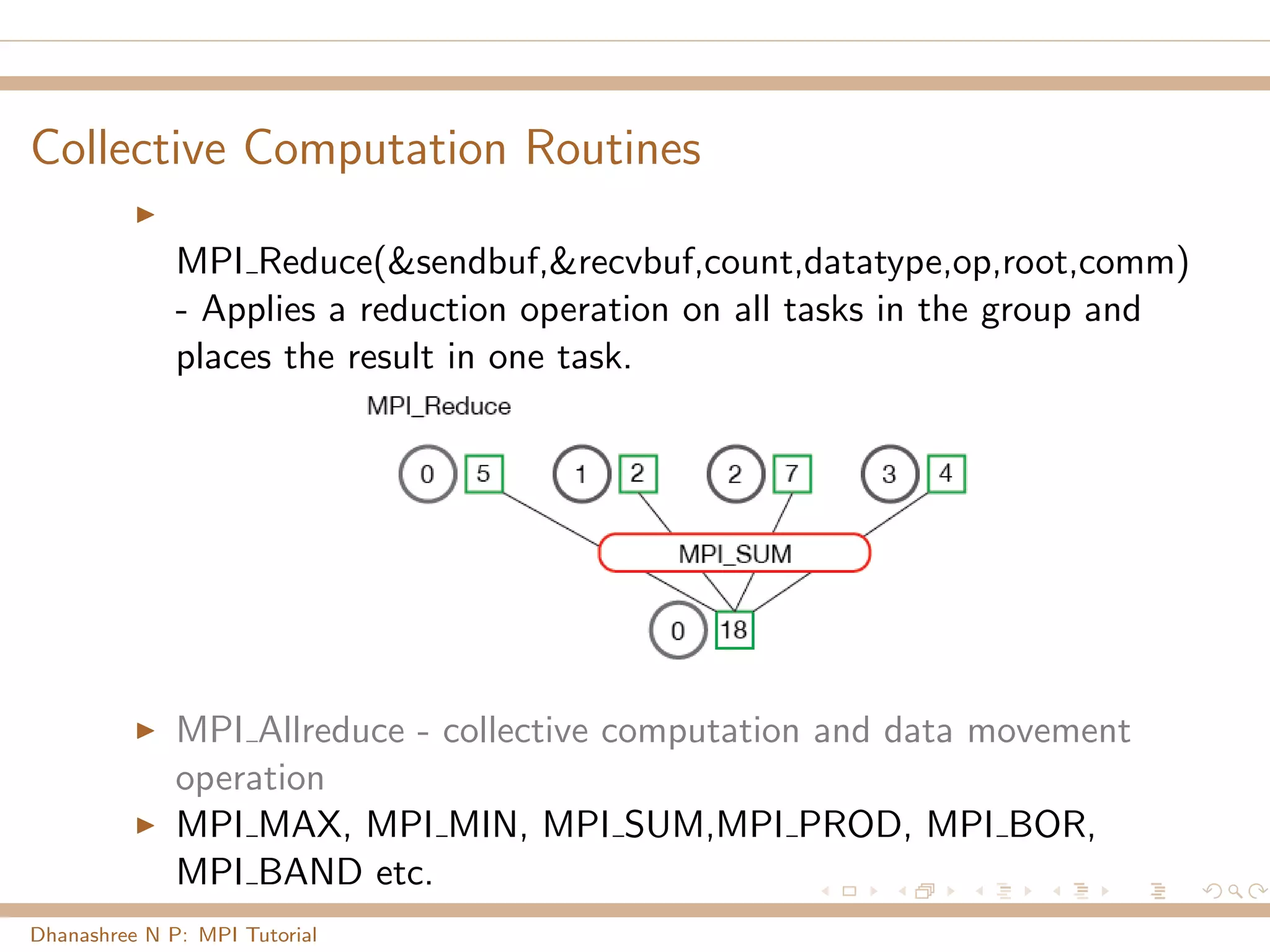

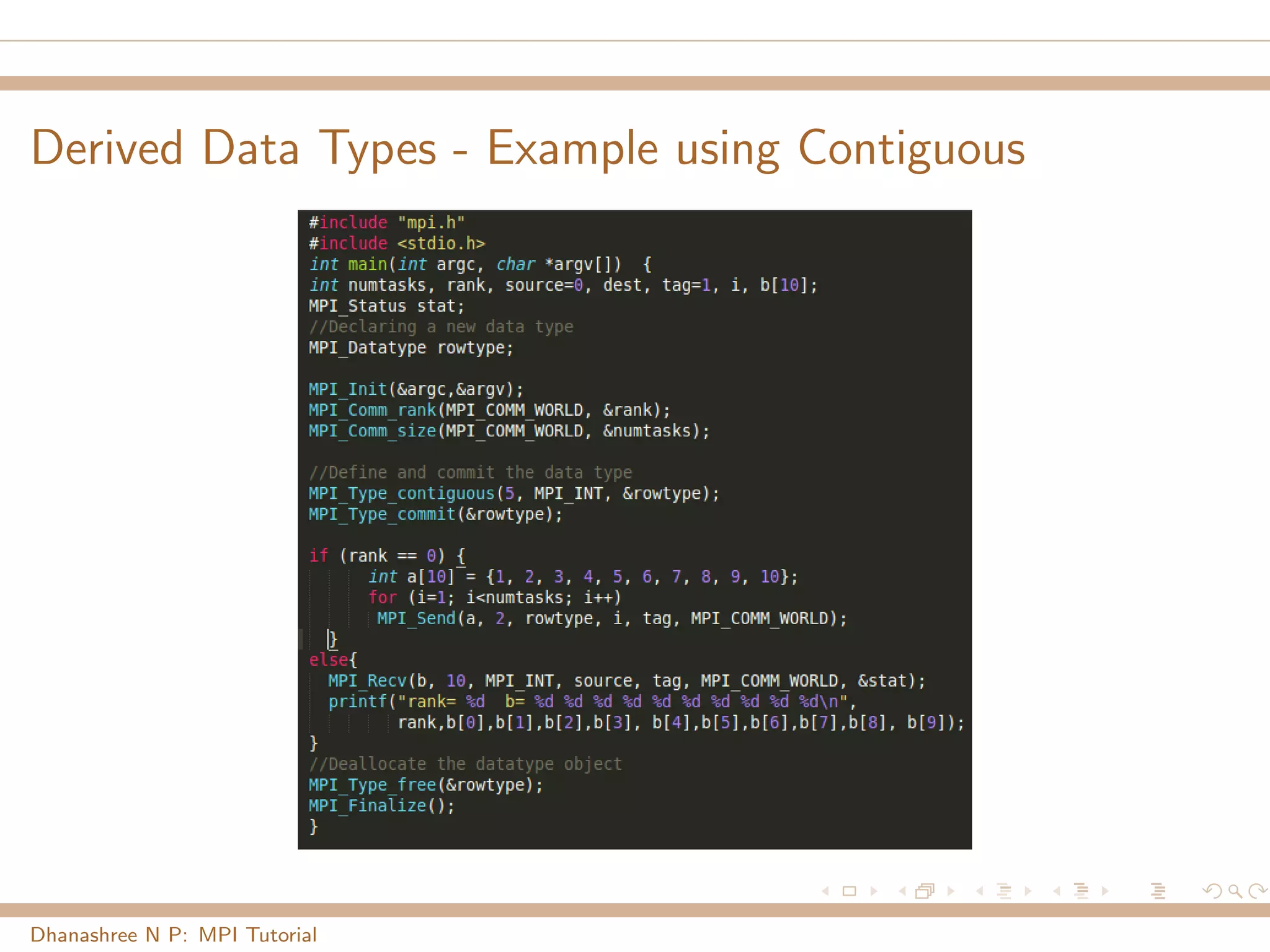

This document provides an overview of MPI (Message Passing Interface), a standard for message passing in parallel programs. It discusses MPI's portability, scalability, and support for C and Fortran. Key concepts covered include the message-passing model, common routines, compilation and execution, communication primitives, collective operations, and data types. The document serves as an introductory tutorial on parallel programming with MPI.