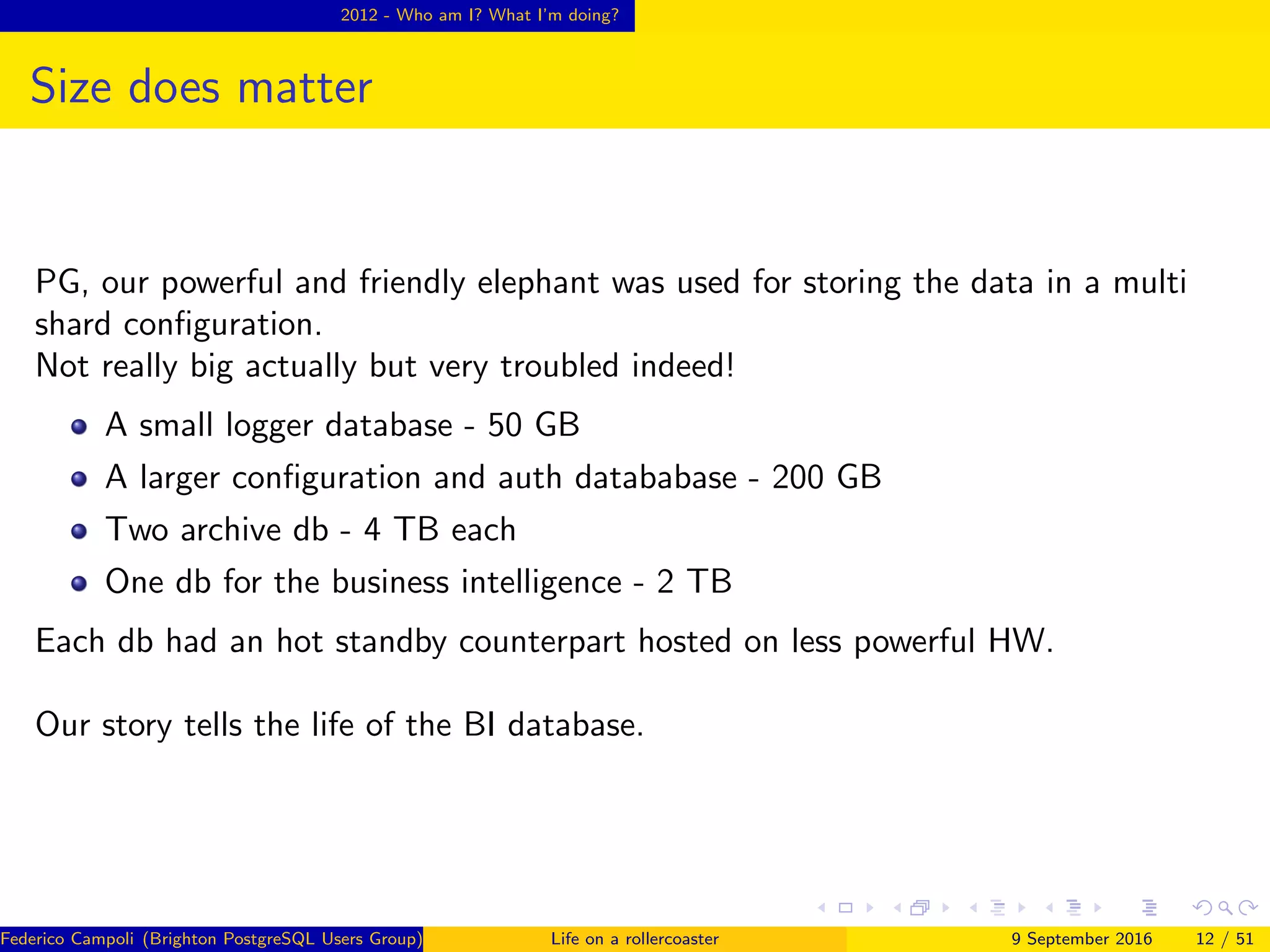

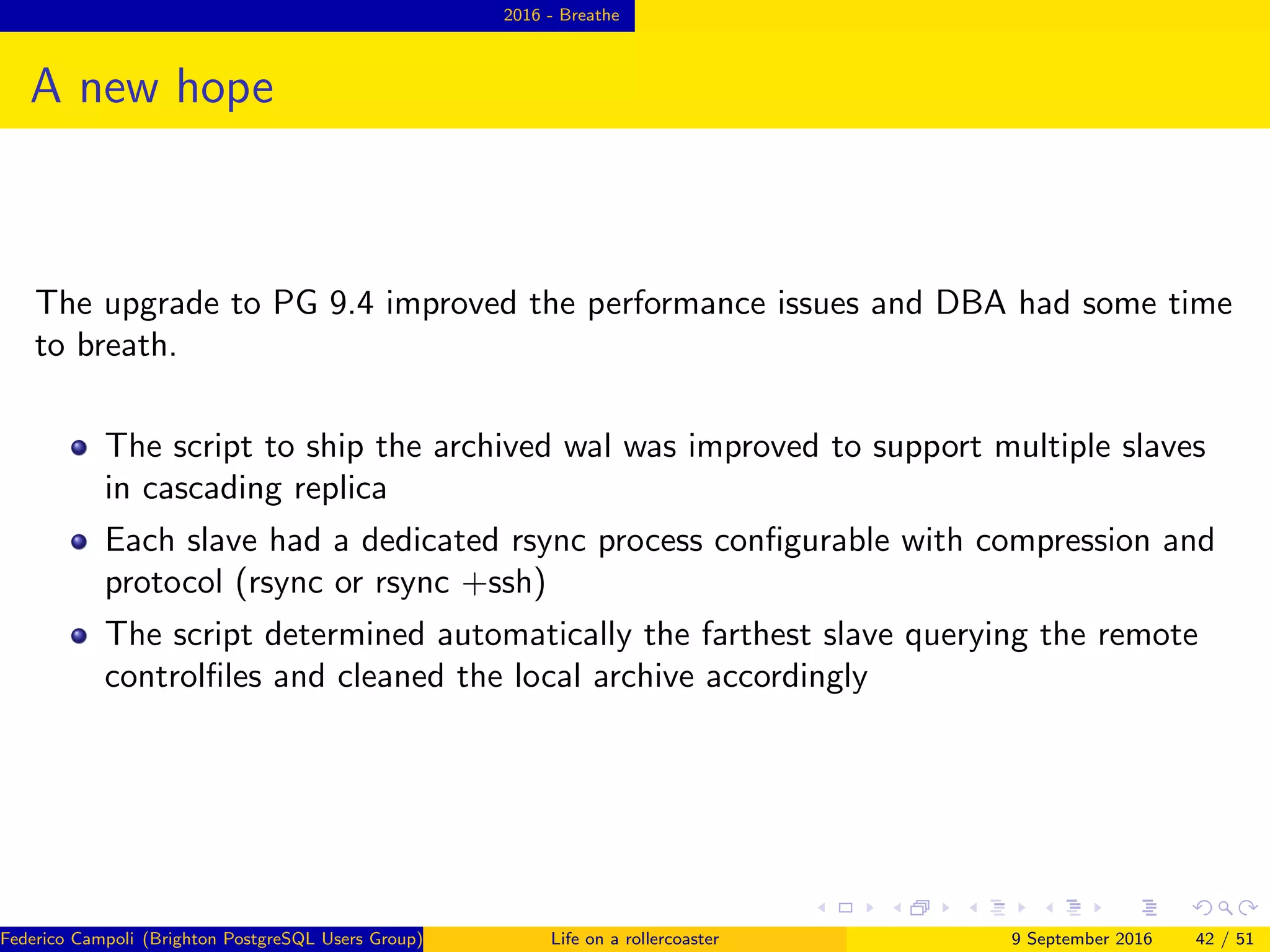

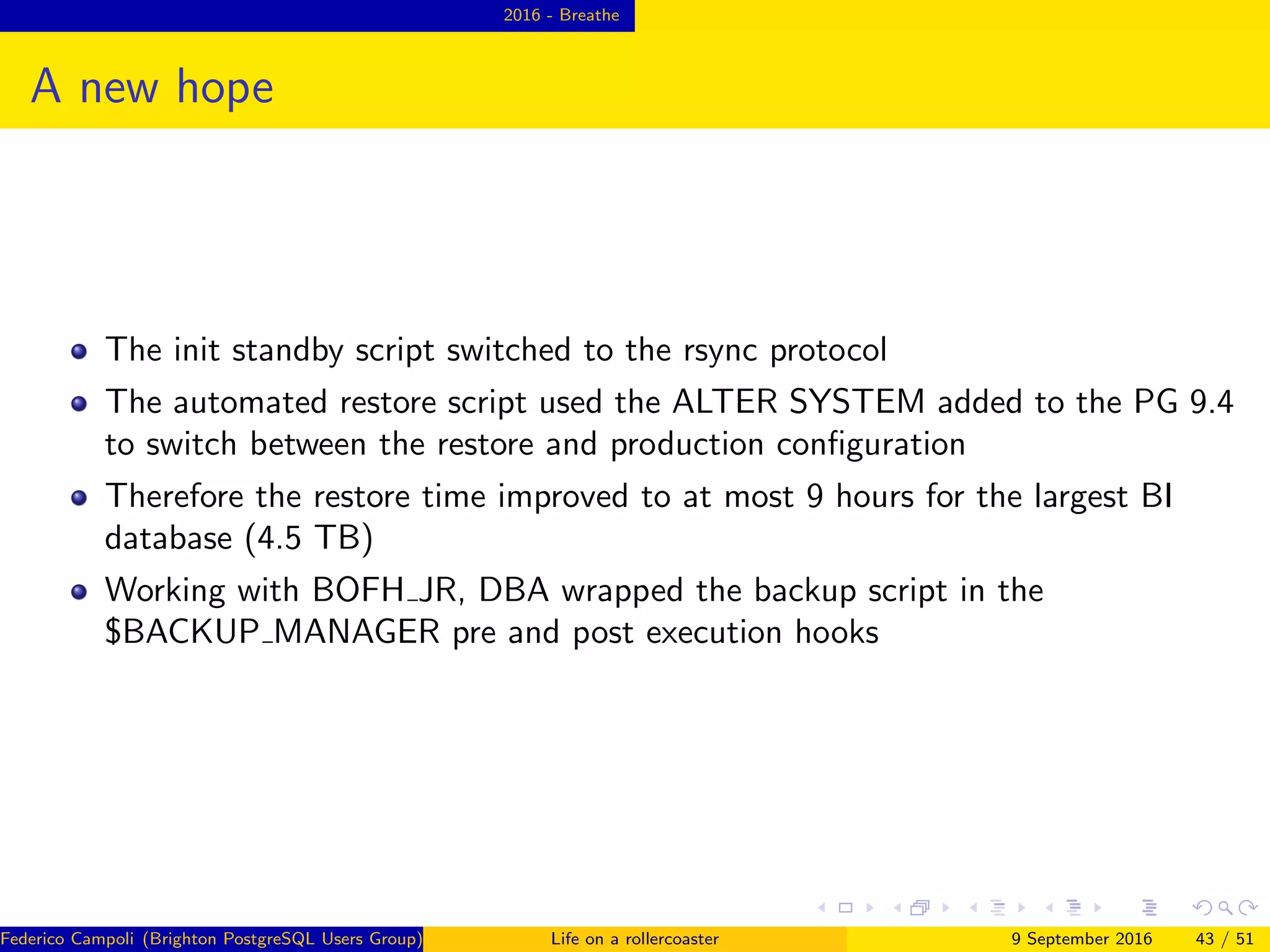

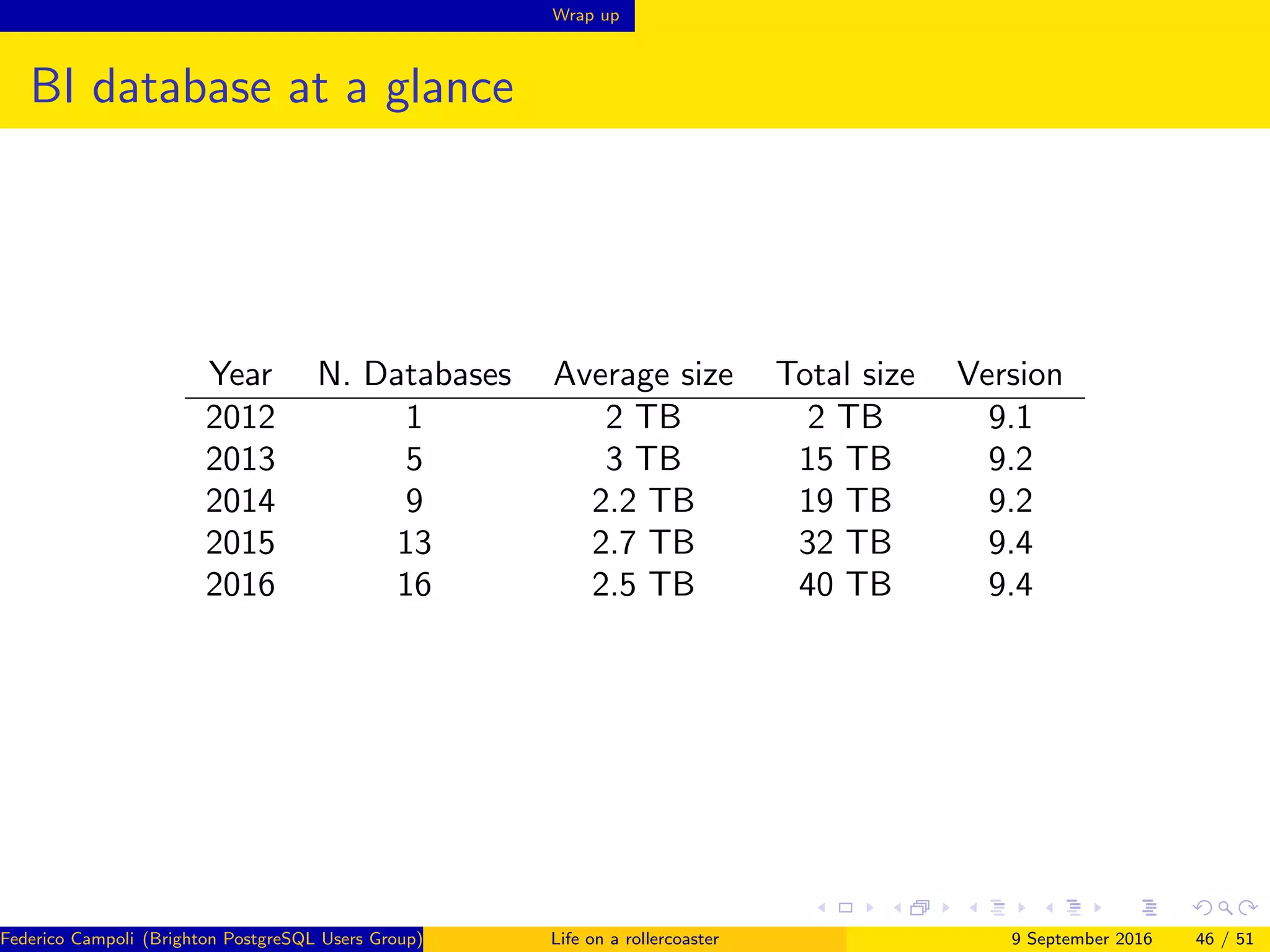

The document chronicles the challenges a DBA faced at a startup, Acme, between 2012 and 2016, and the solutions applied to them, focusing on PostgreSQL's backup and recovery system. It details how problems with database performance, backup duration, and data management evolved over that period, including issues stemming from schema design and server configuration. Over the years, the DBA implements a series of enhancements that improve system performance and efficiency despite initial resistance from management.