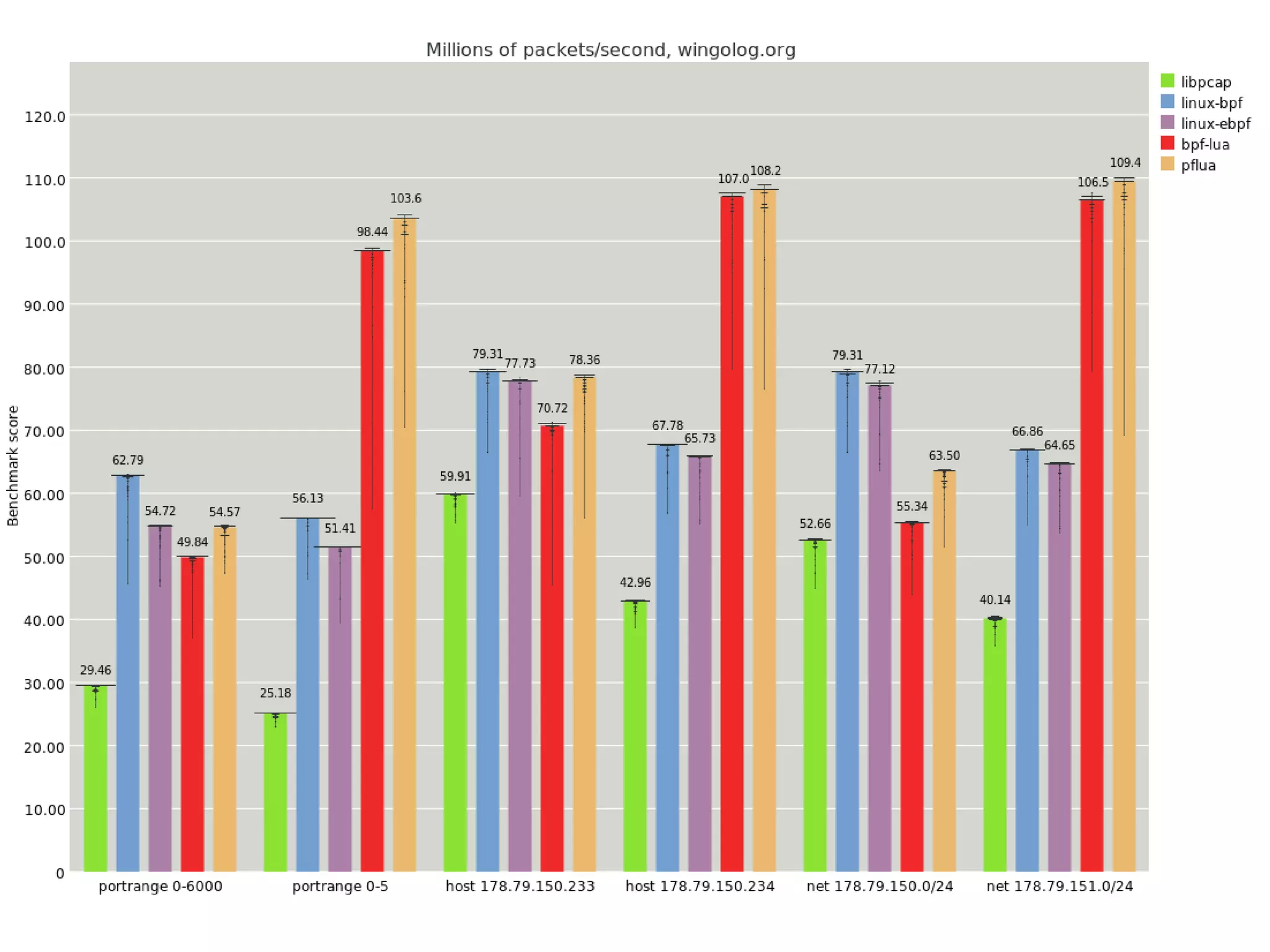

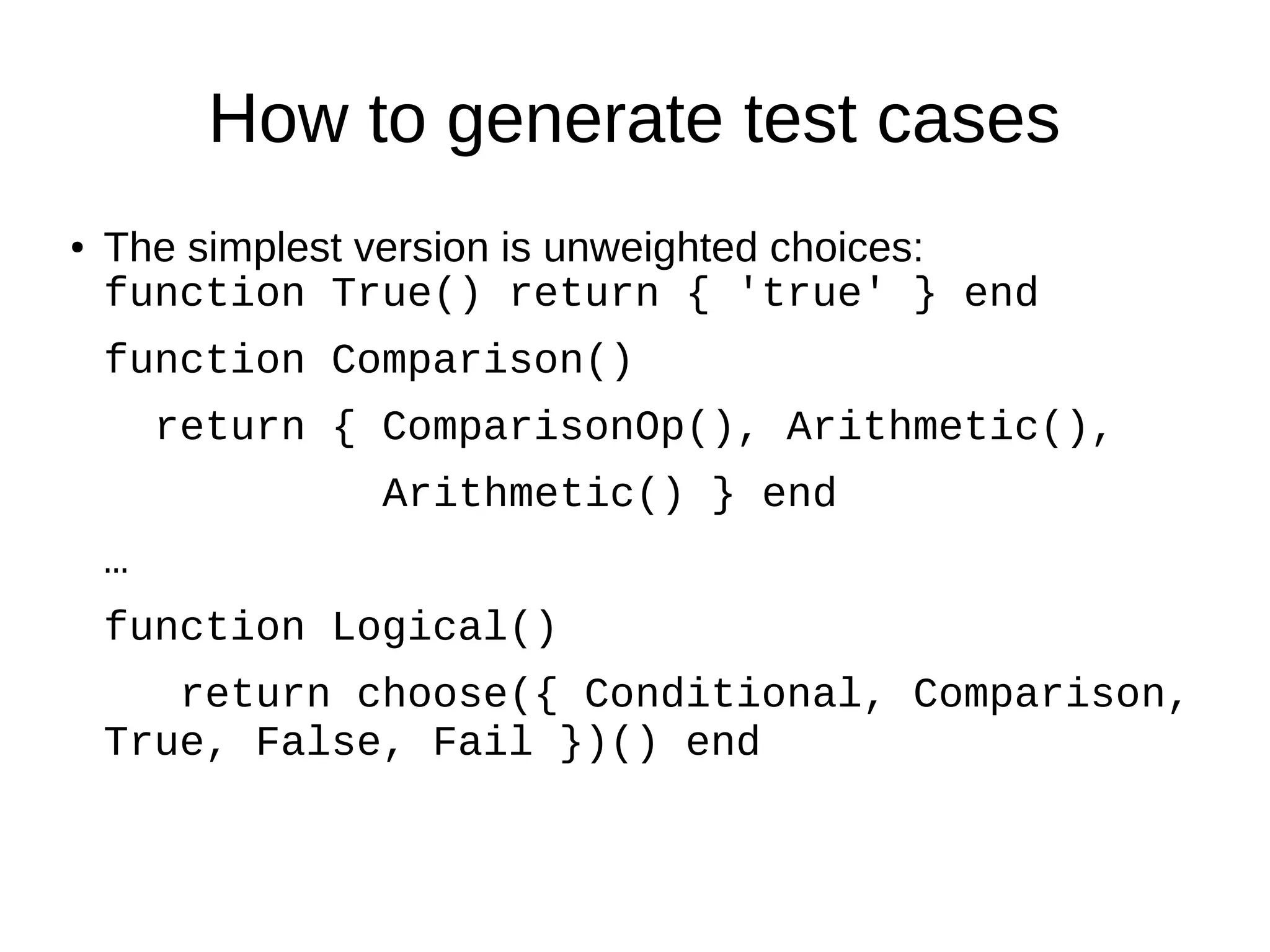

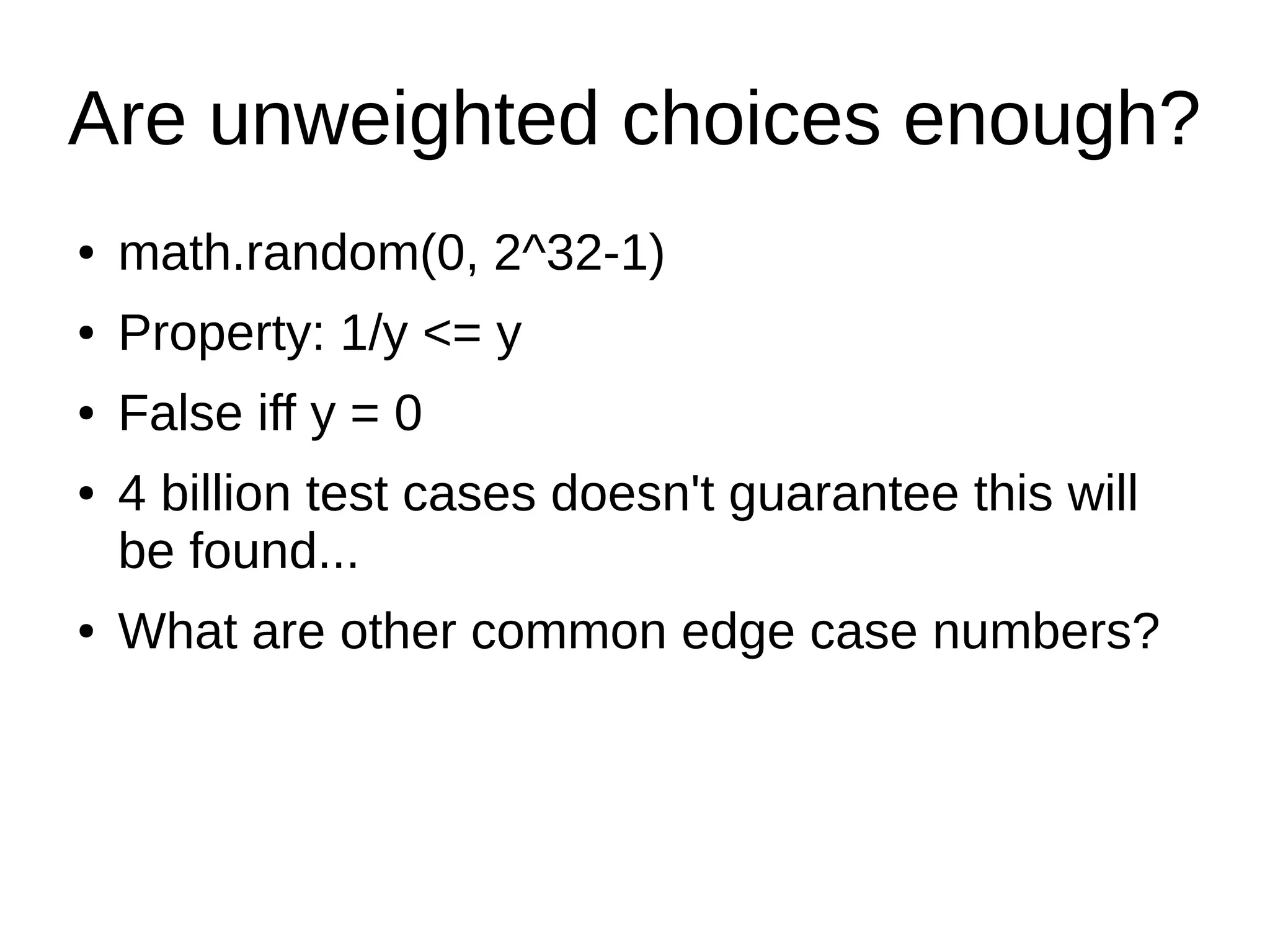

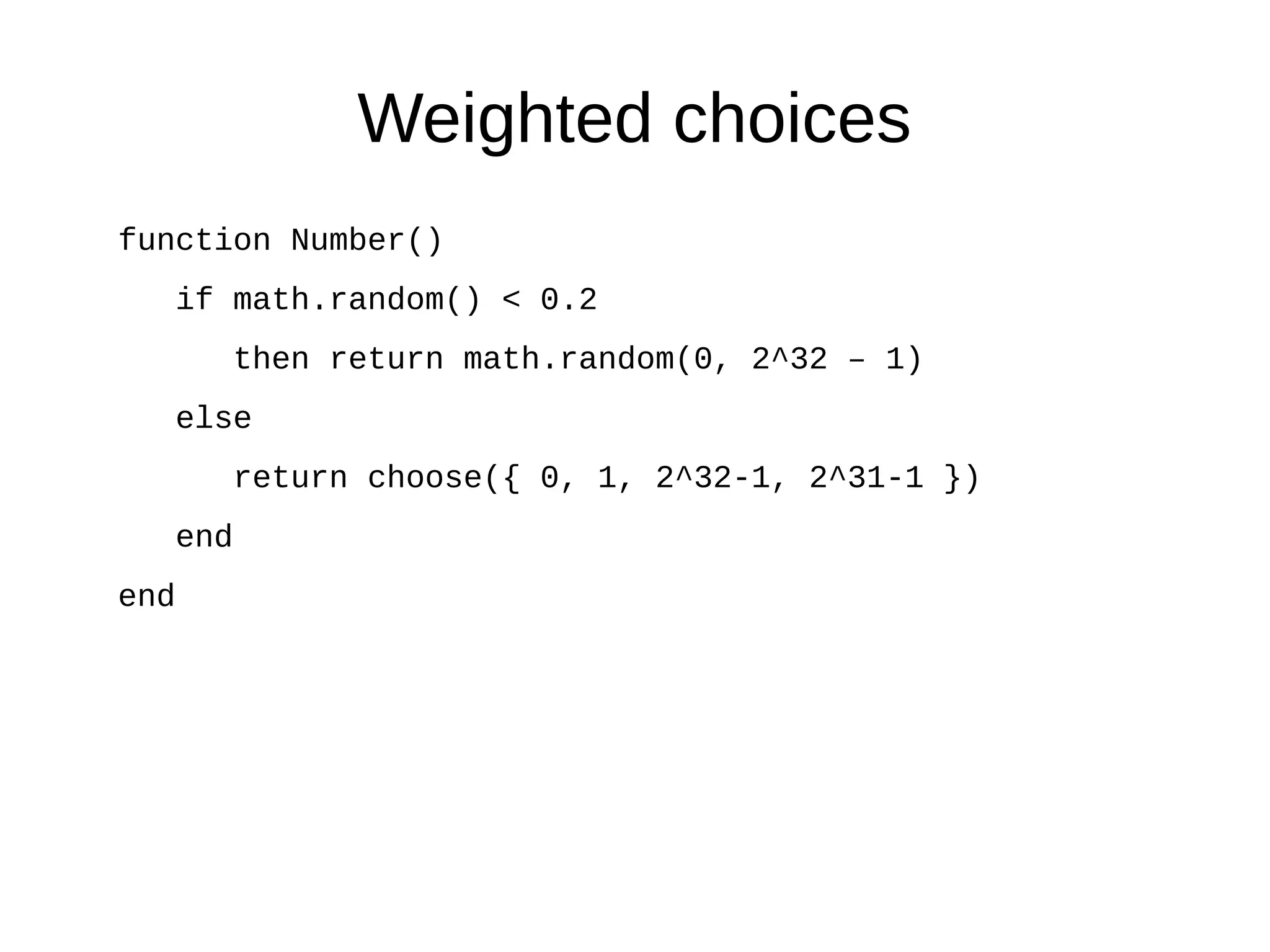

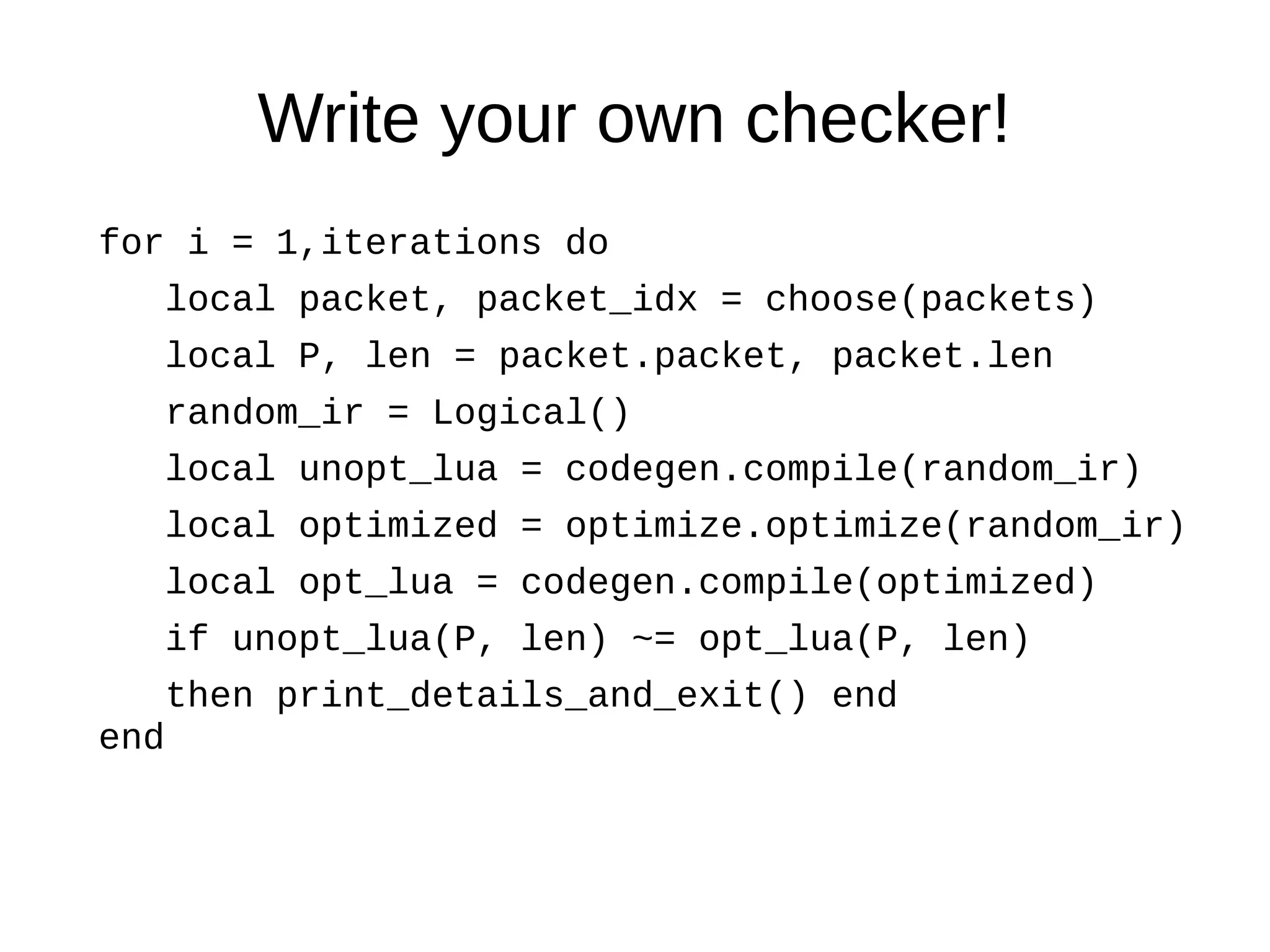

The document discusses property-based testing and argues that it detects bugs more efficiently than manual testing methods, even in seemingly mature software such as pflua, an open-source compiler. It presents a case study in which adding property-based tests to pflua revealed multiple bugs that traditional testing would likely have missed. It also highlights existing tools for property-based testing and encourages adopting the technique to improve software reliability and avoid regressions.
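
To make the idea concrete, here is a minimal sketch of a property-based test for a compiler-style optimizer. It is not taken from pflua: the toy expression language, the `optimize` pass, and every function name below are hypothetical and exist only for illustration. The property checked is that optimizing an expression never changes the value it evaluates to, and the test generates random expressions rather than relying on hand-picked cases.

```python
import random

# Hypothetical toy language: an expression is the variable "x", an int,
# or a tuple (op, left, right). None of this is pflua code.
OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
}

def evaluate(expr, x):
    """Reference interpreter: the behaviour the optimizer must preserve."""
    if expr == "x":
        return x
    if isinstance(expr, int):
        return expr
    op, left, right = expr
    return OPS[op](evaluate(left, x), evaluate(right, x))

def optimize(expr):
    """Toy optimizer: constant folding plus a few algebraic identities."""
    if not isinstance(expr, tuple):
        return expr
    op, left, right = expr
    left, right = optimize(left), optimize(right)
    if isinstance(left, int) and isinstance(right, int):
        return OPS[op](left, right)          # fold constants
    if op == "*" and (left == 0 or right == 0):
        return 0                             # e * 0 == 0
    if op == "*" and right == 1:
        return left                          # e * 1 == e
    if op == "*" and left == 1:
        return right                         # 1 * e == e
    if op in ("+", "-") and right == 0:
        return left                          # e + 0 == e, e - 0 == e
    if op == "+" and left == 0:
        return right                         # 0 + e == e
    return (op, left, right)

def random_expr(rng, depth):
    """Generate a random expression of at most the given depth."""
    if depth == 0 or rng.random() < 0.3:
        return rng.choice(["x", rng.randint(-3, 3)])
    op = rng.choice(sorted(OPS))
    return (op, random_expr(rng, depth - 1), random_expr(rng, depth - 1))

def find_counterexample(trials=1000, seed=0):
    """Property: evaluate(optimize(e), x) == evaluate(e, x) for all e, x.

    Returns a failing (expr, x) pair, or None if every trial passes.
    """
    rng = random.Random(seed)
    for _ in range(trials):
        expr = random_expr(rng, depth=4)
        x = rng.randint(-100, 100)
        if evaluate(optimize(expr), x) != evaluate(expr, x):
            return expr, x
    return None

if __name__ == "__main__":
    print("counterexample:", find_counterexample())
```

If an incorrect rewrite were added to `optimize` (for example, treating `e - e` style patterns carelessly), `find_counterexample` would be expected to return an input that exposes it. Dedicated property-based testing libraries typically add features this sketch omits, such as shrinking a failing input to a smaller one.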