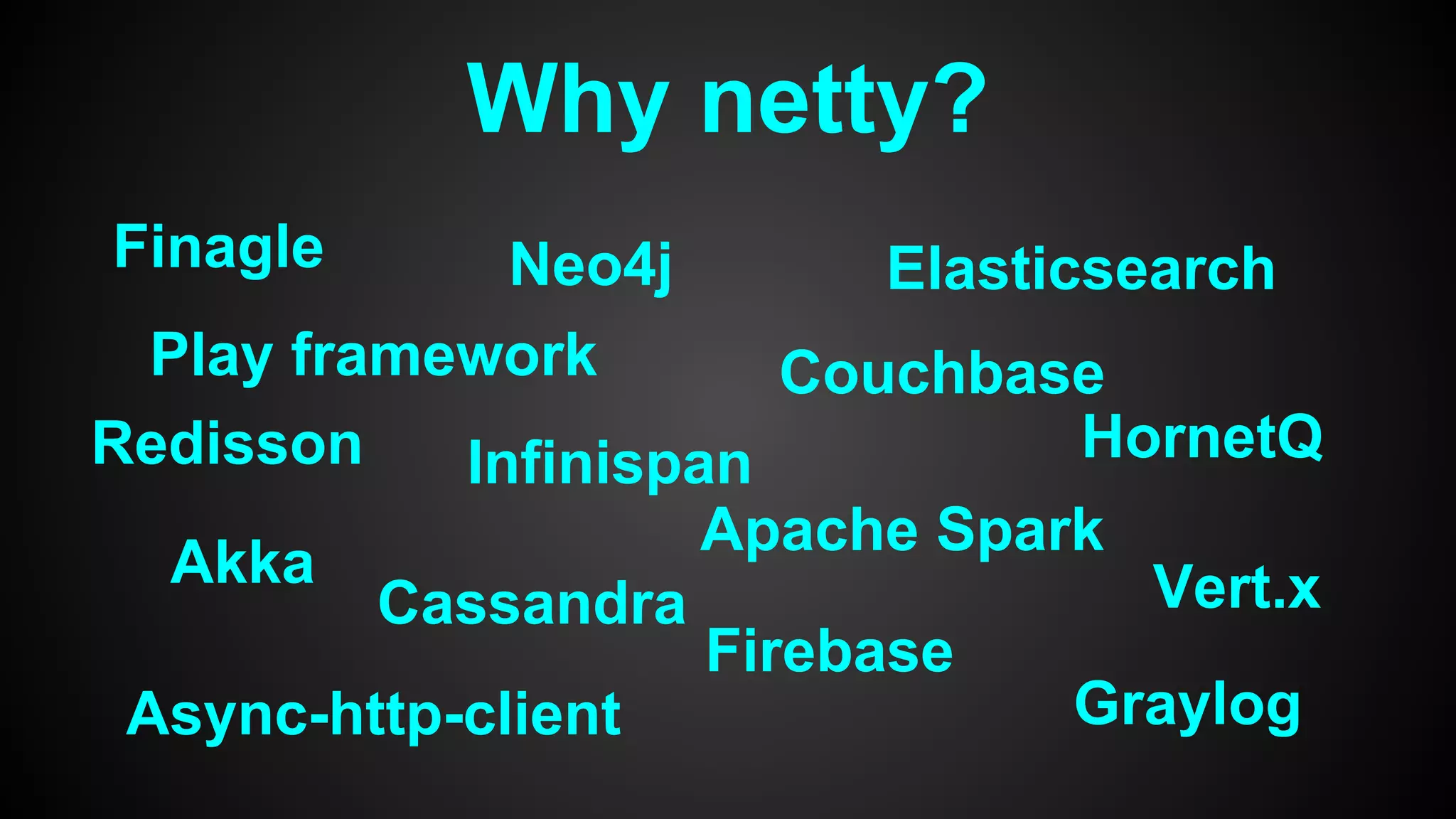

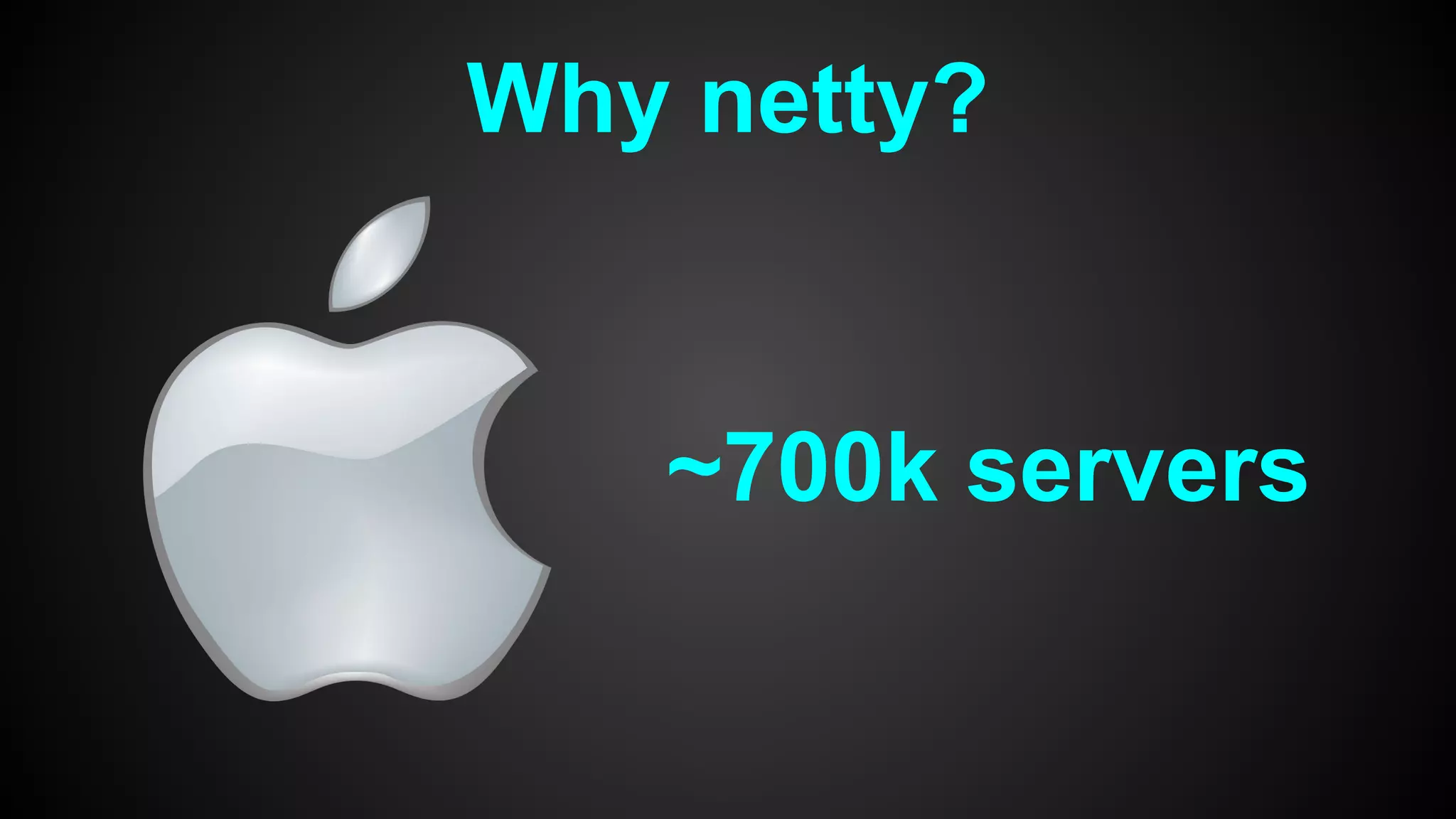

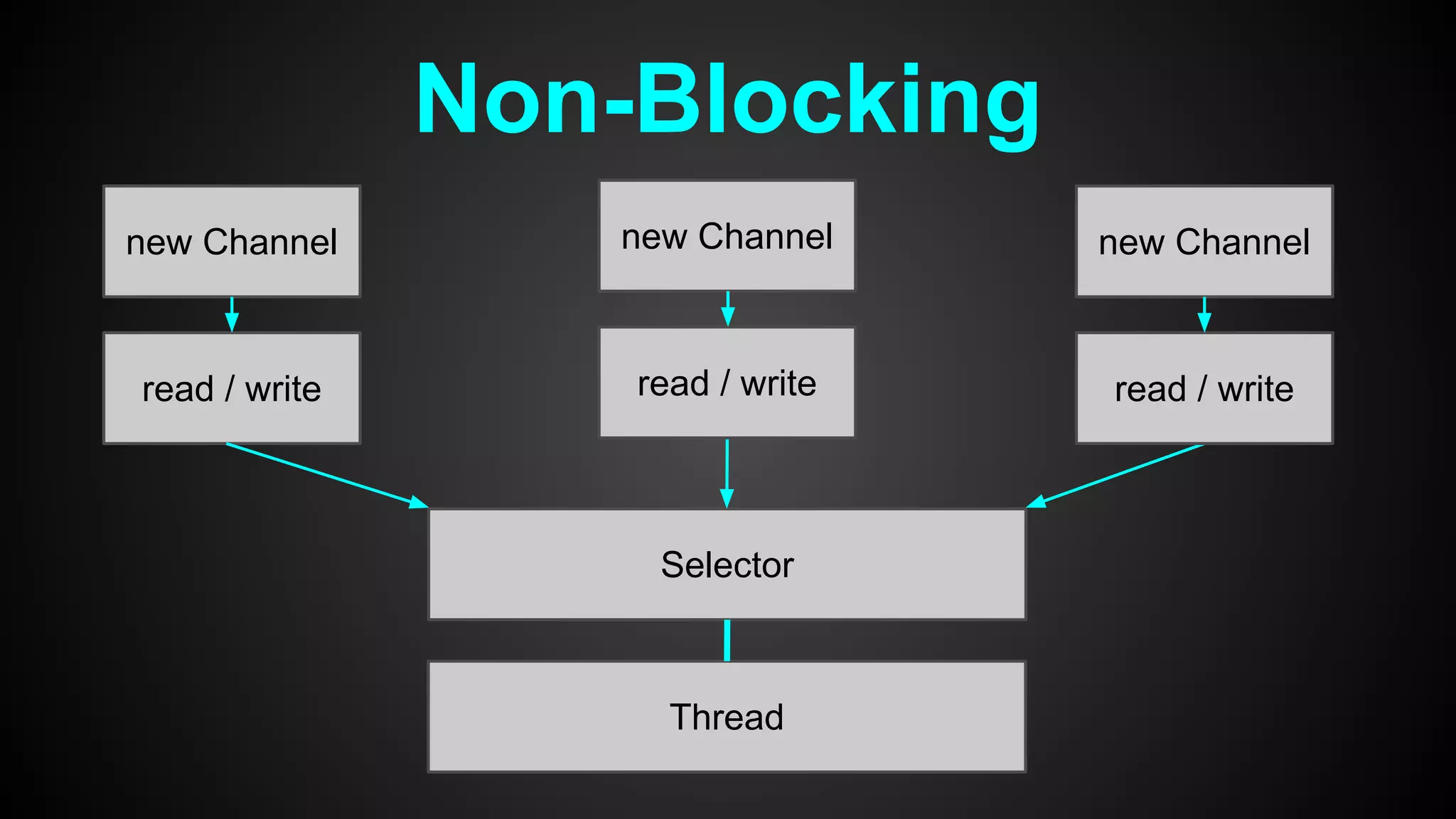

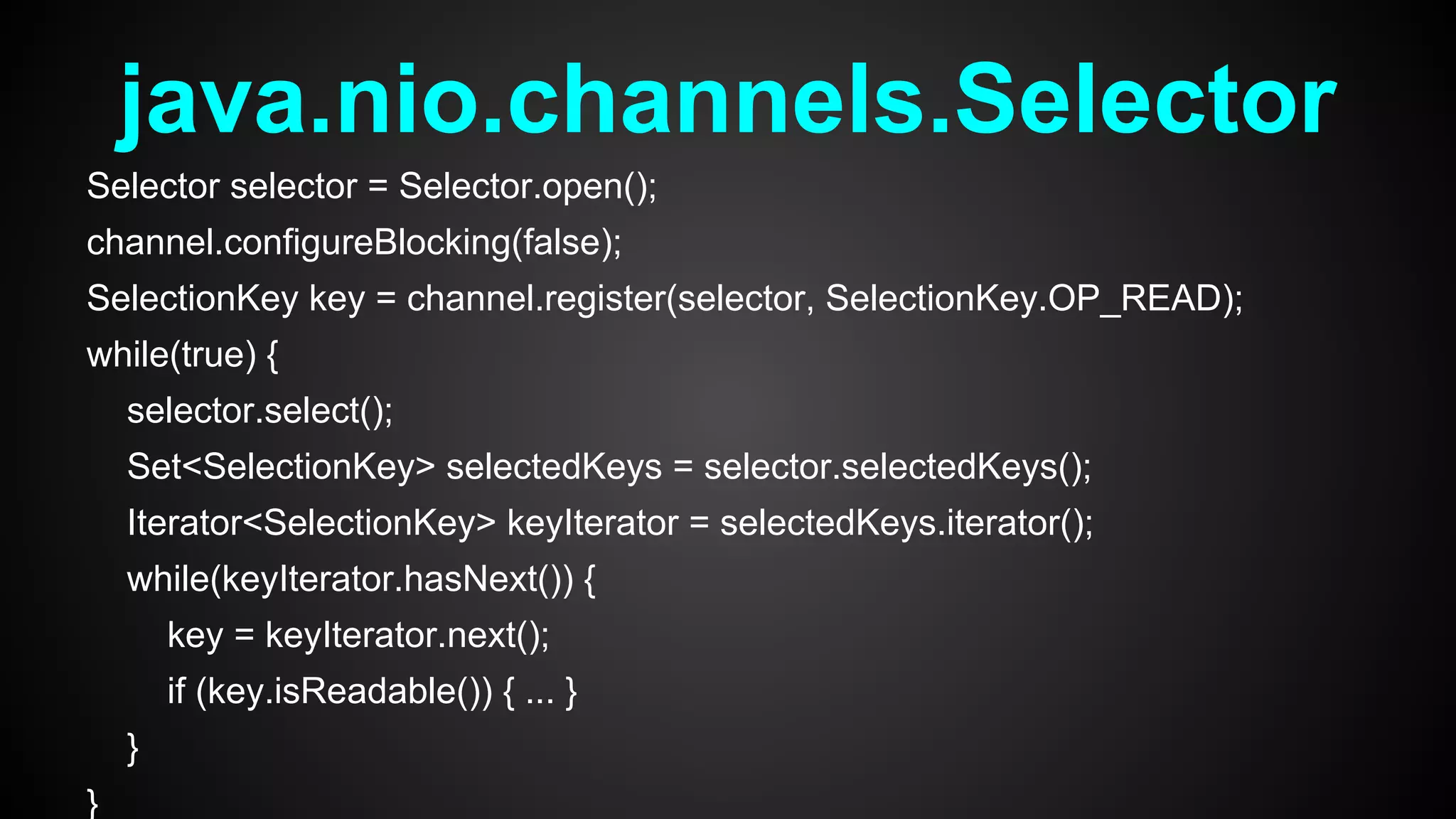

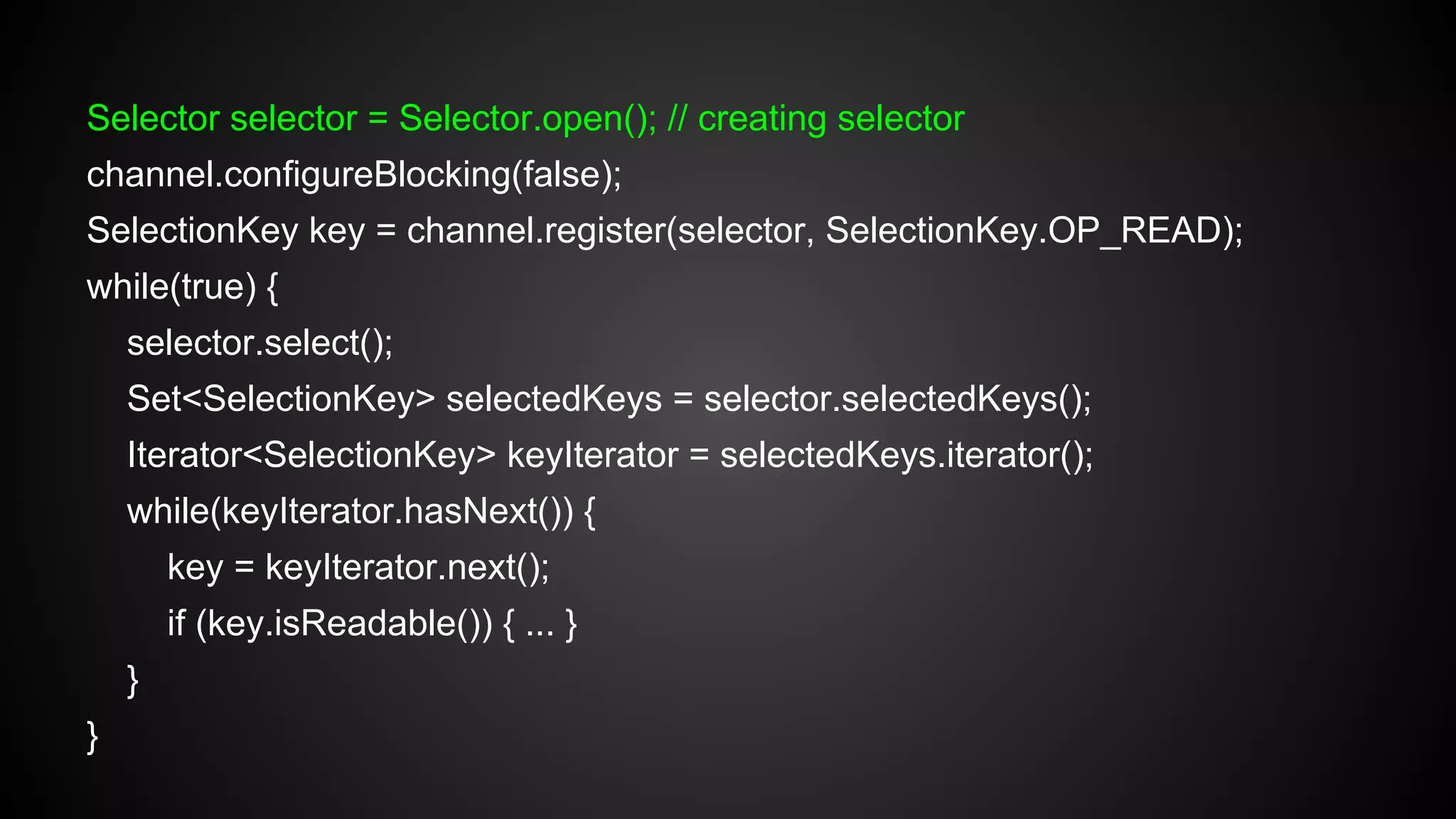

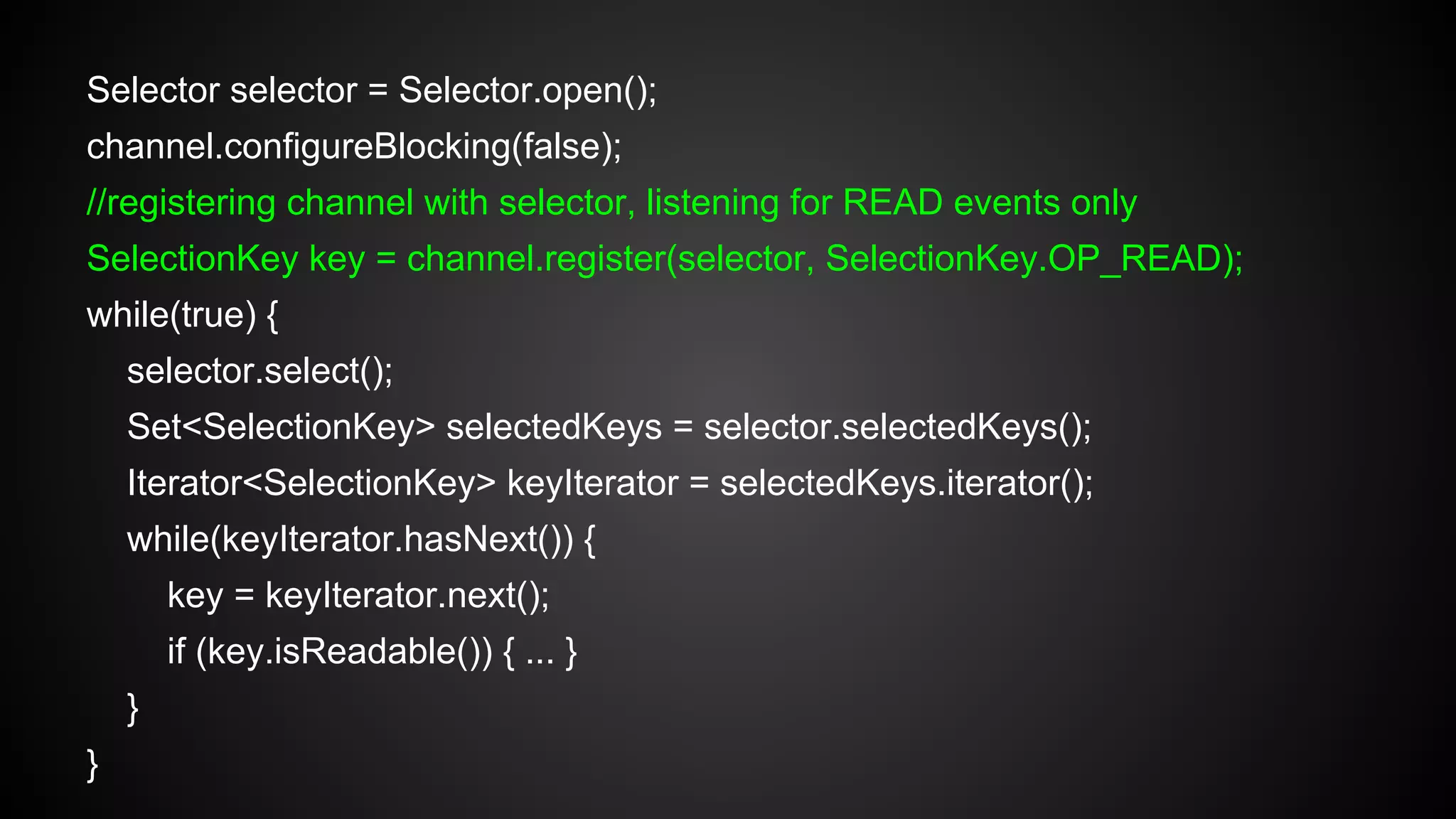

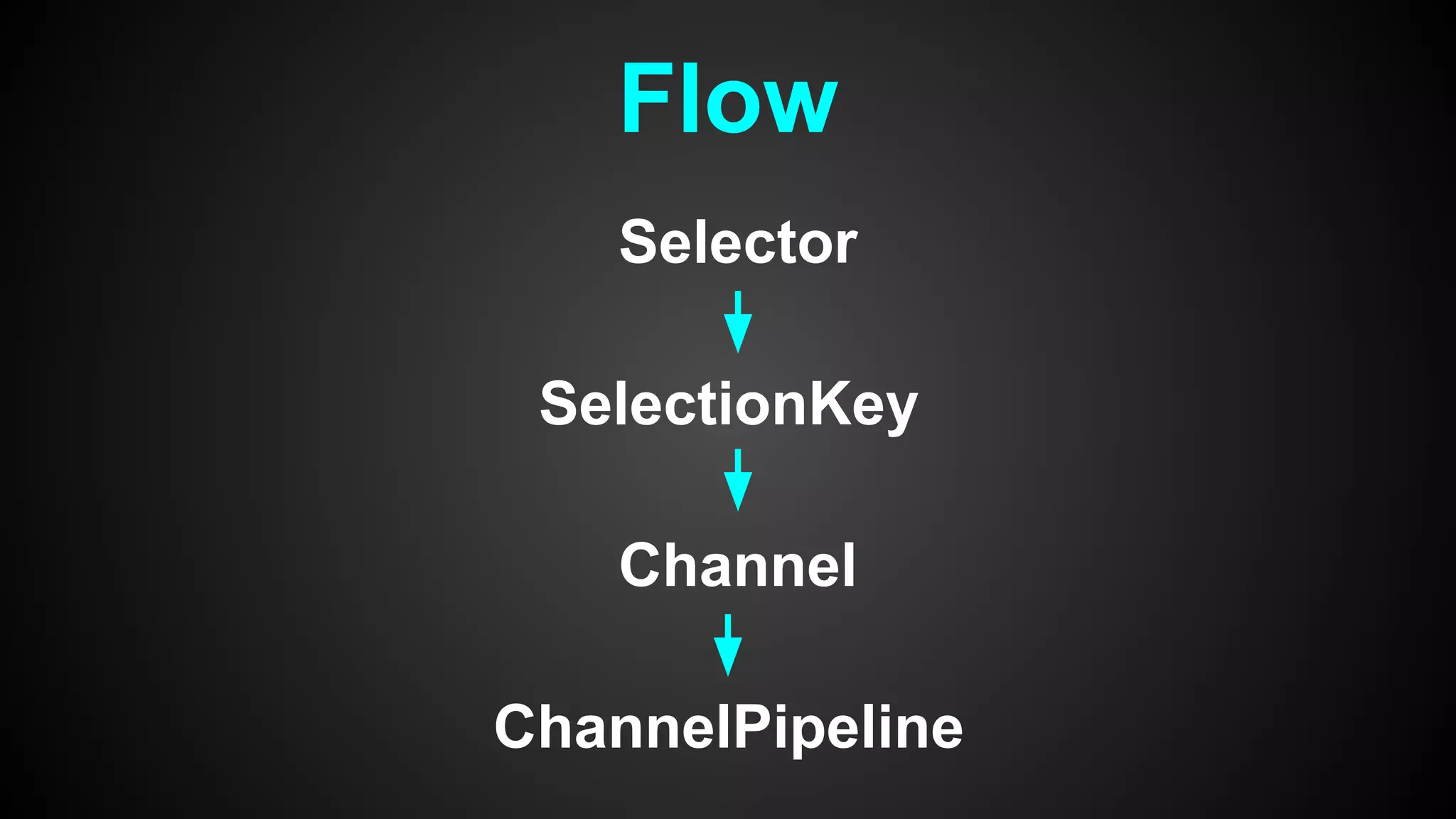

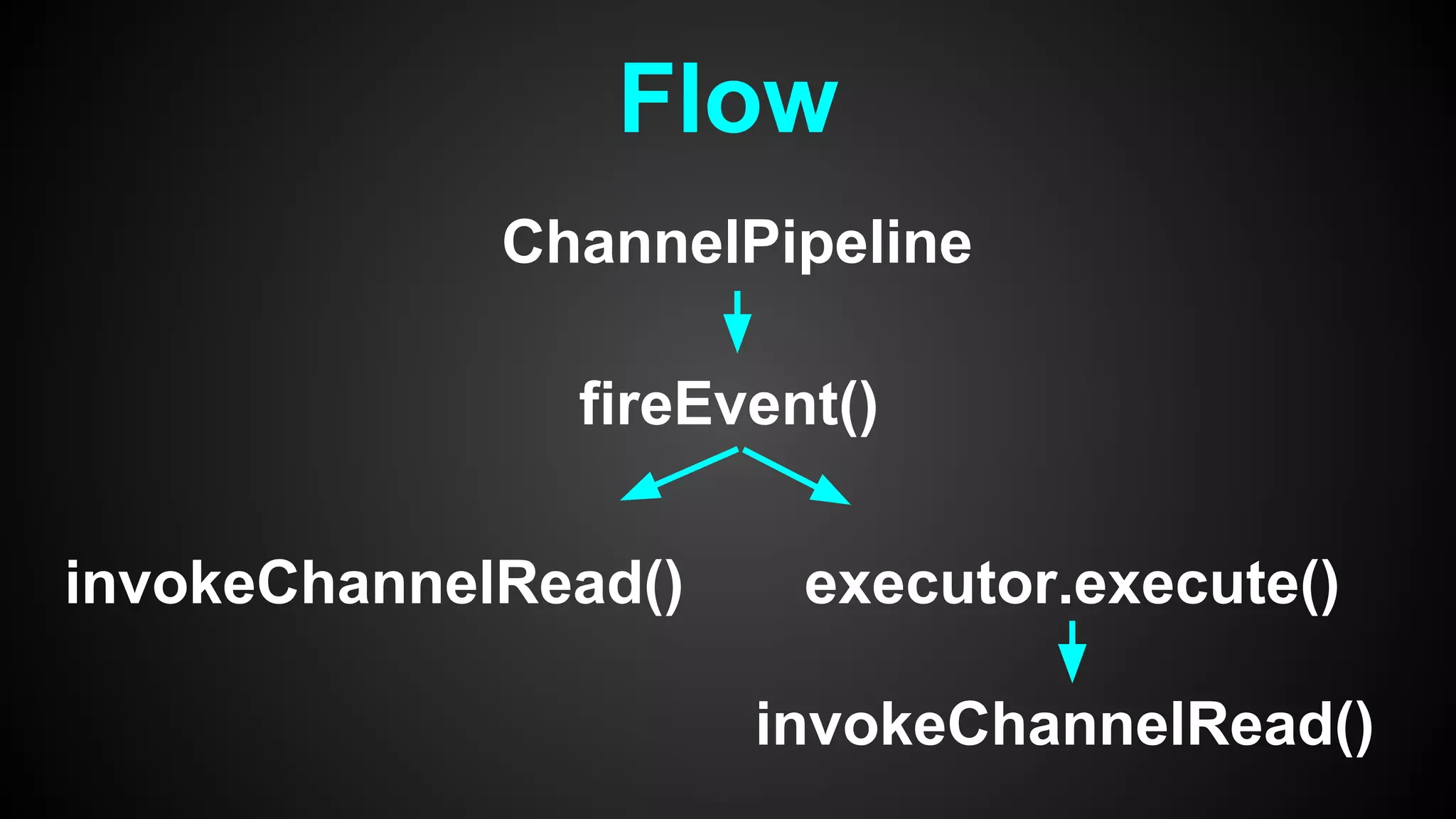

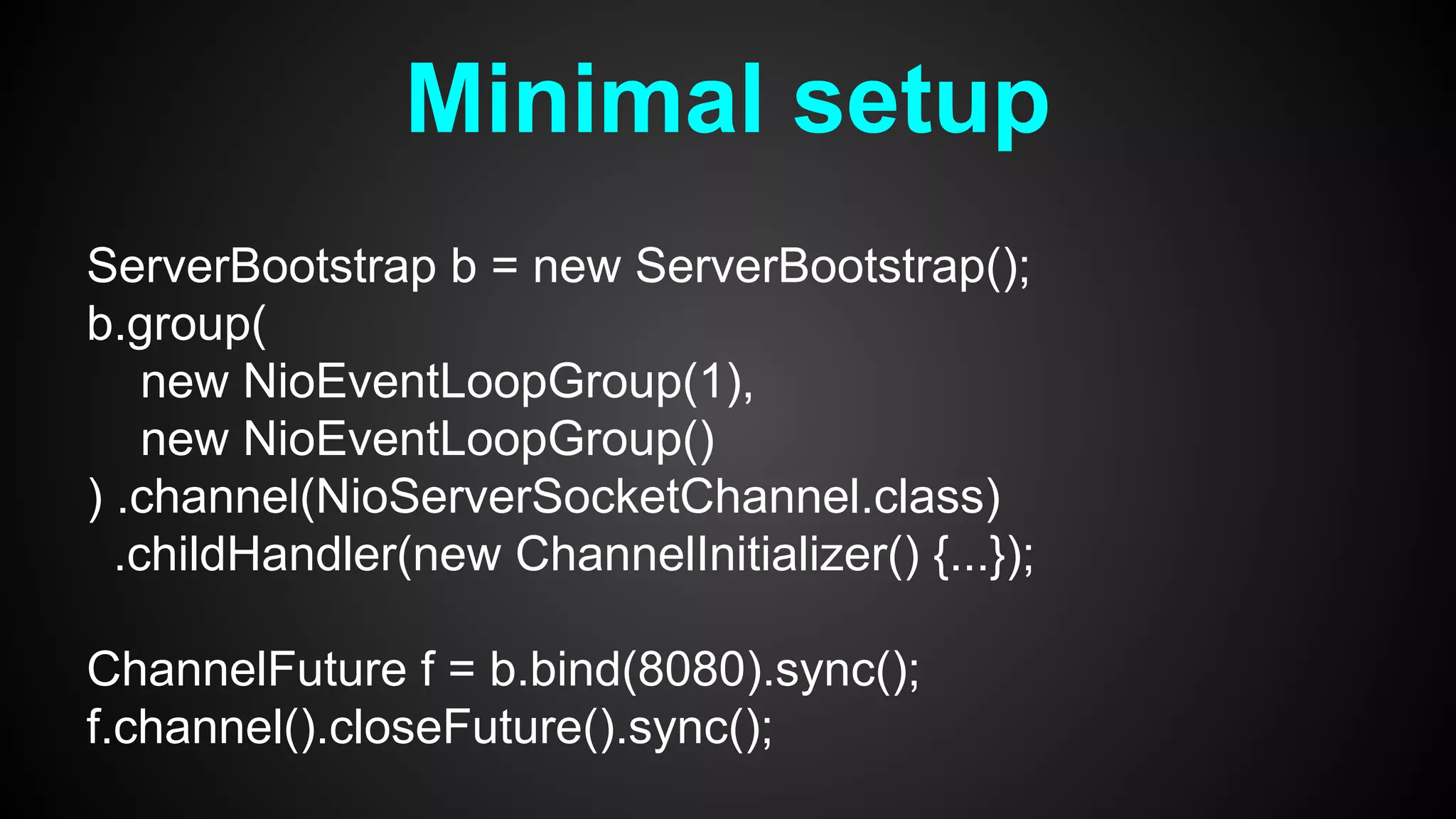

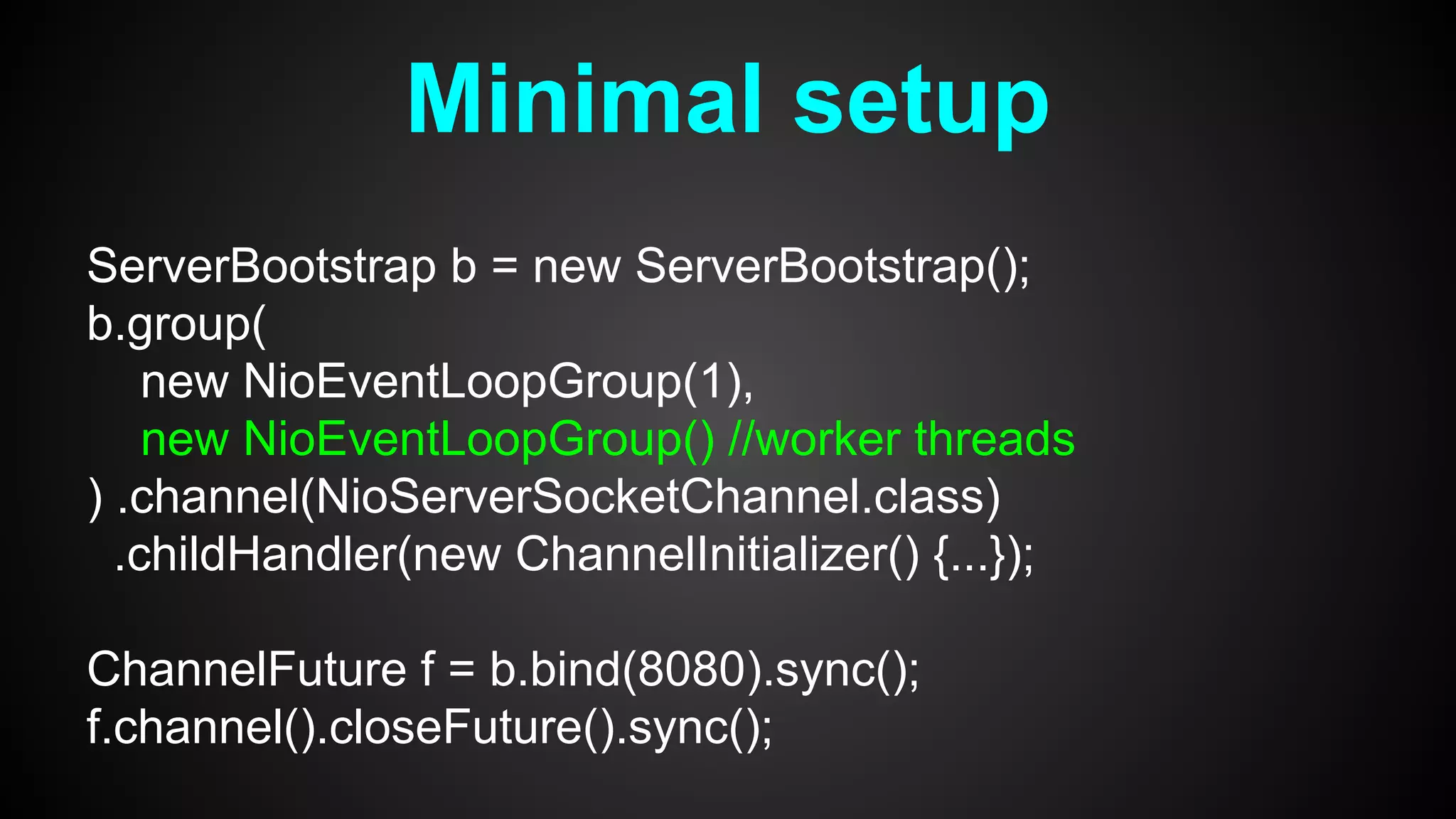

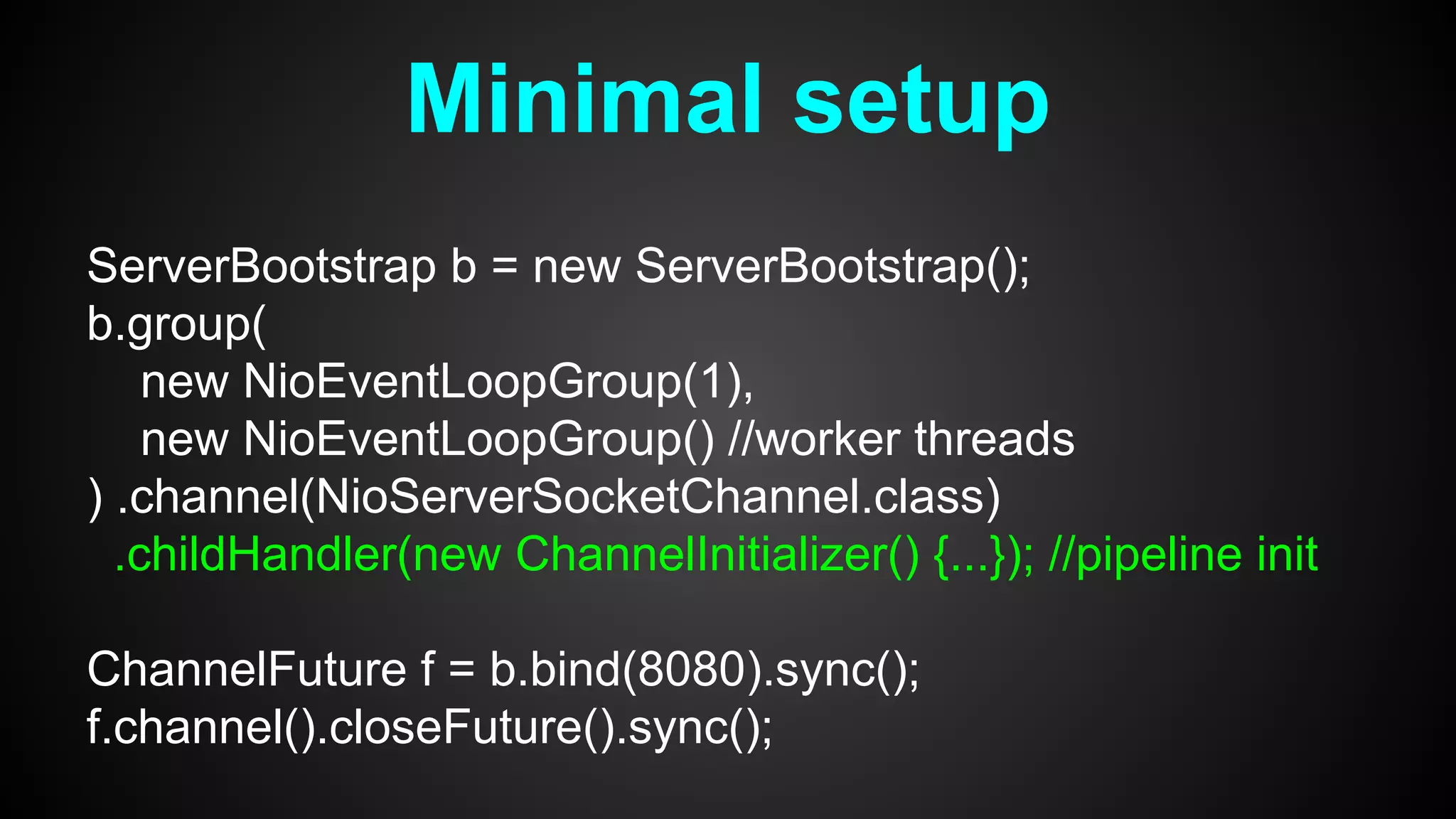

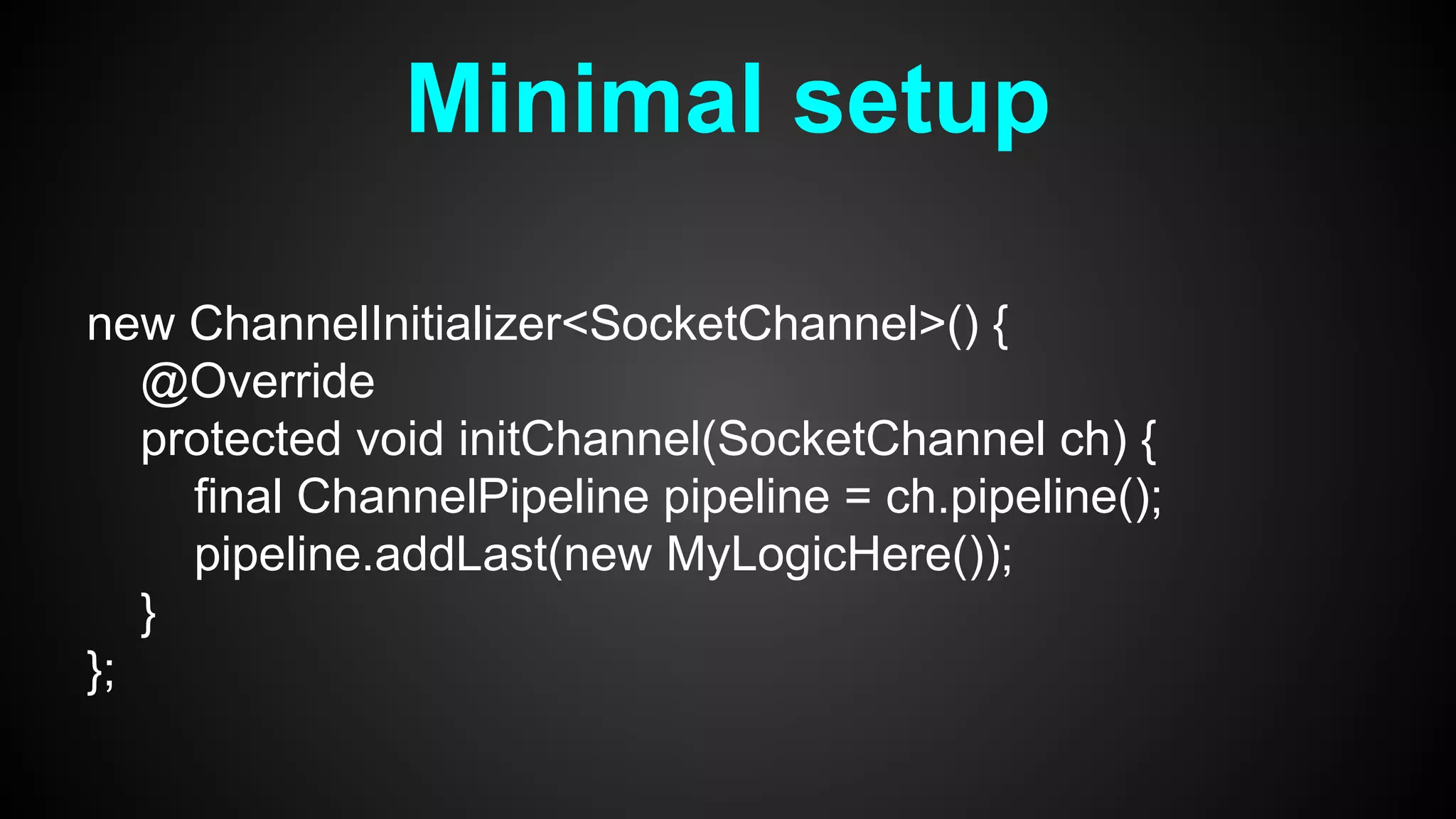

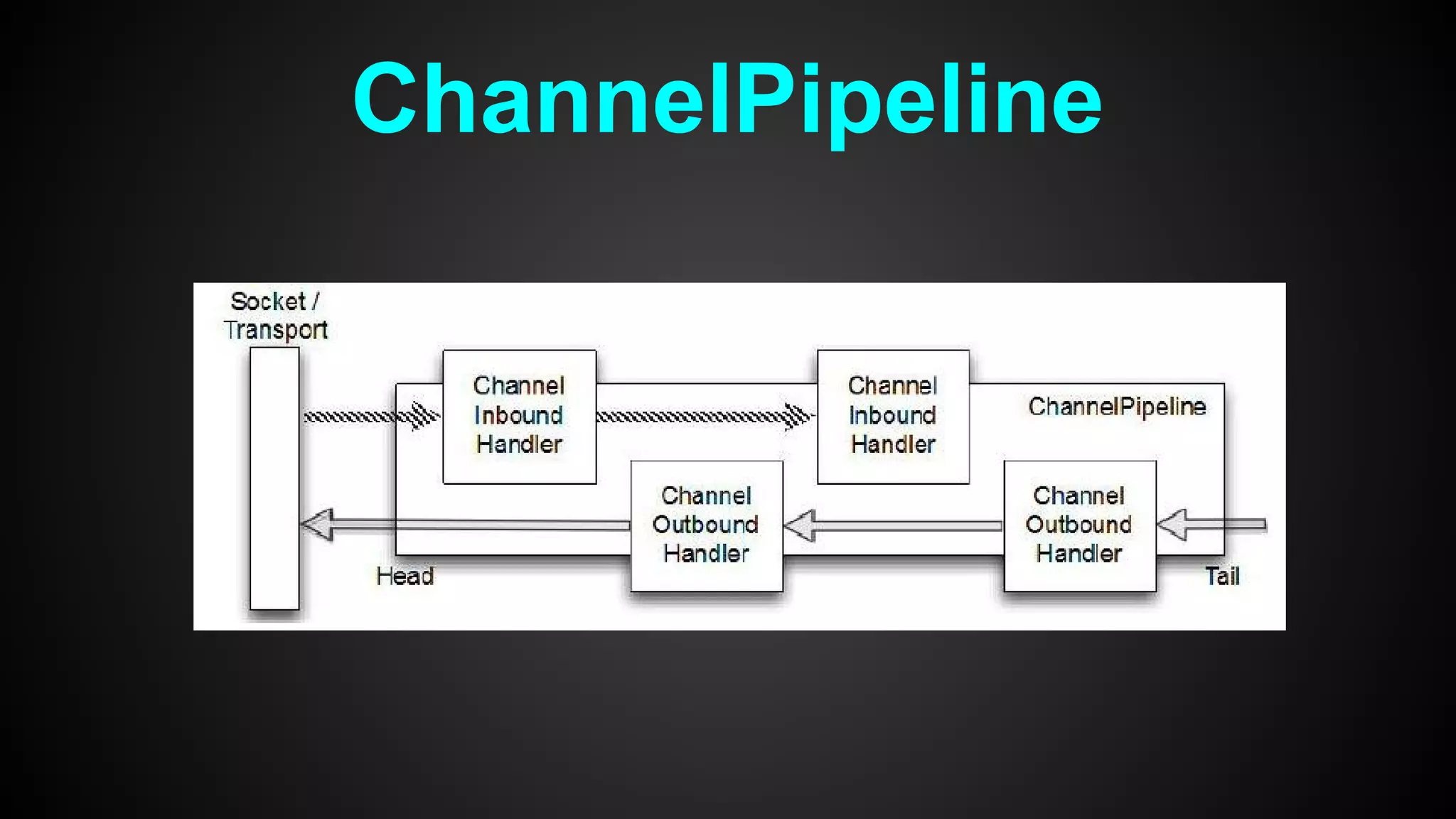

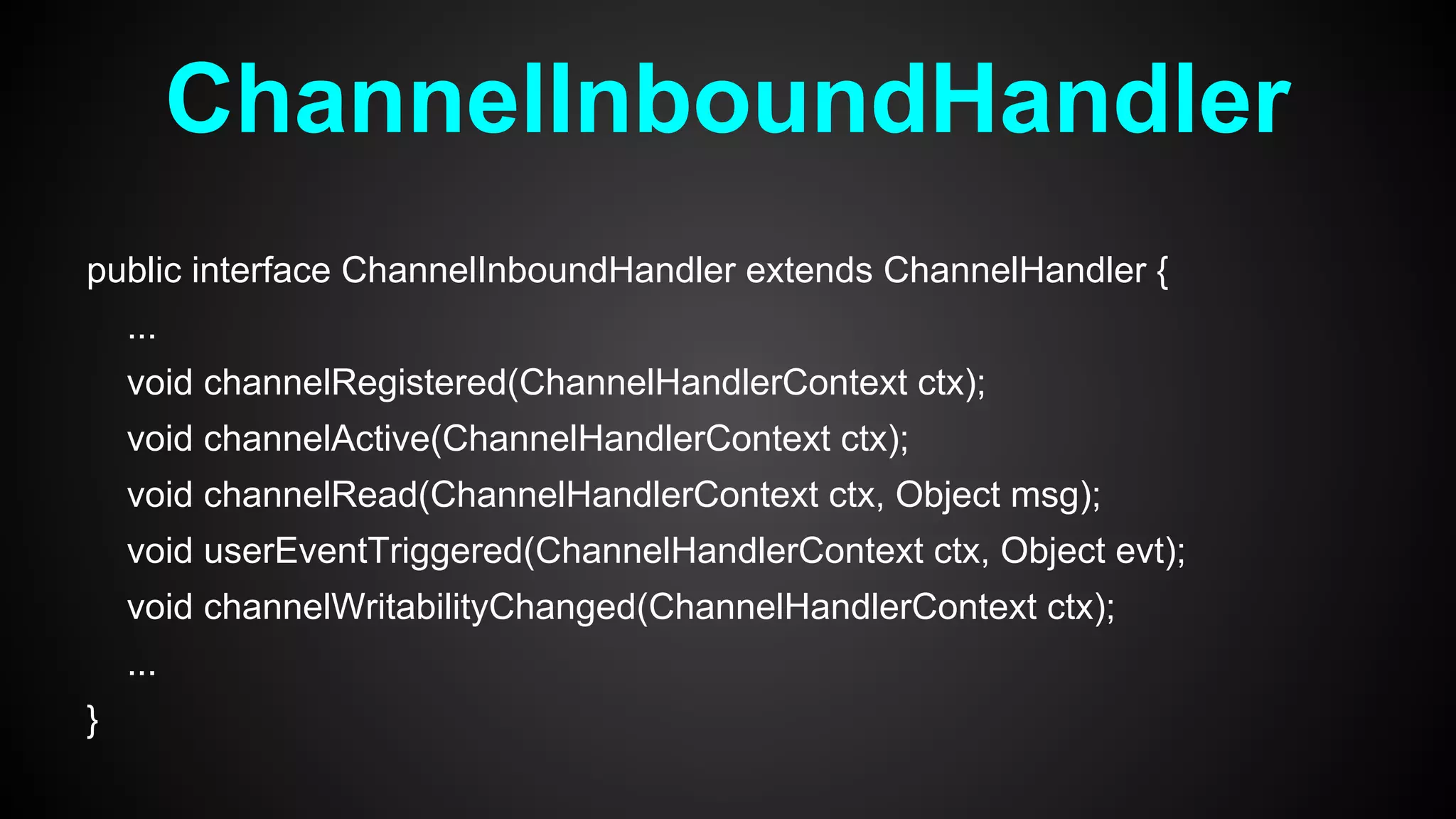

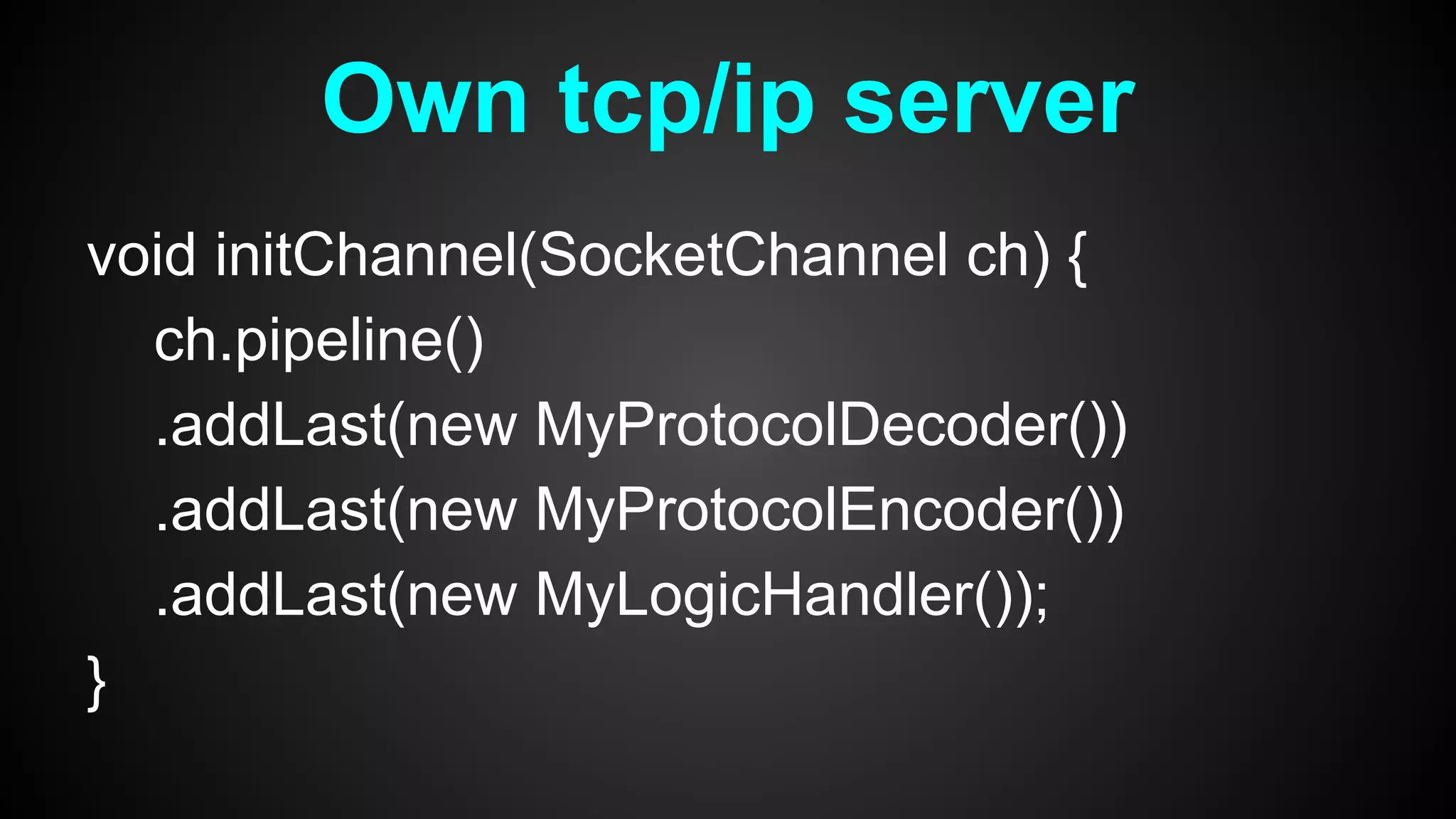

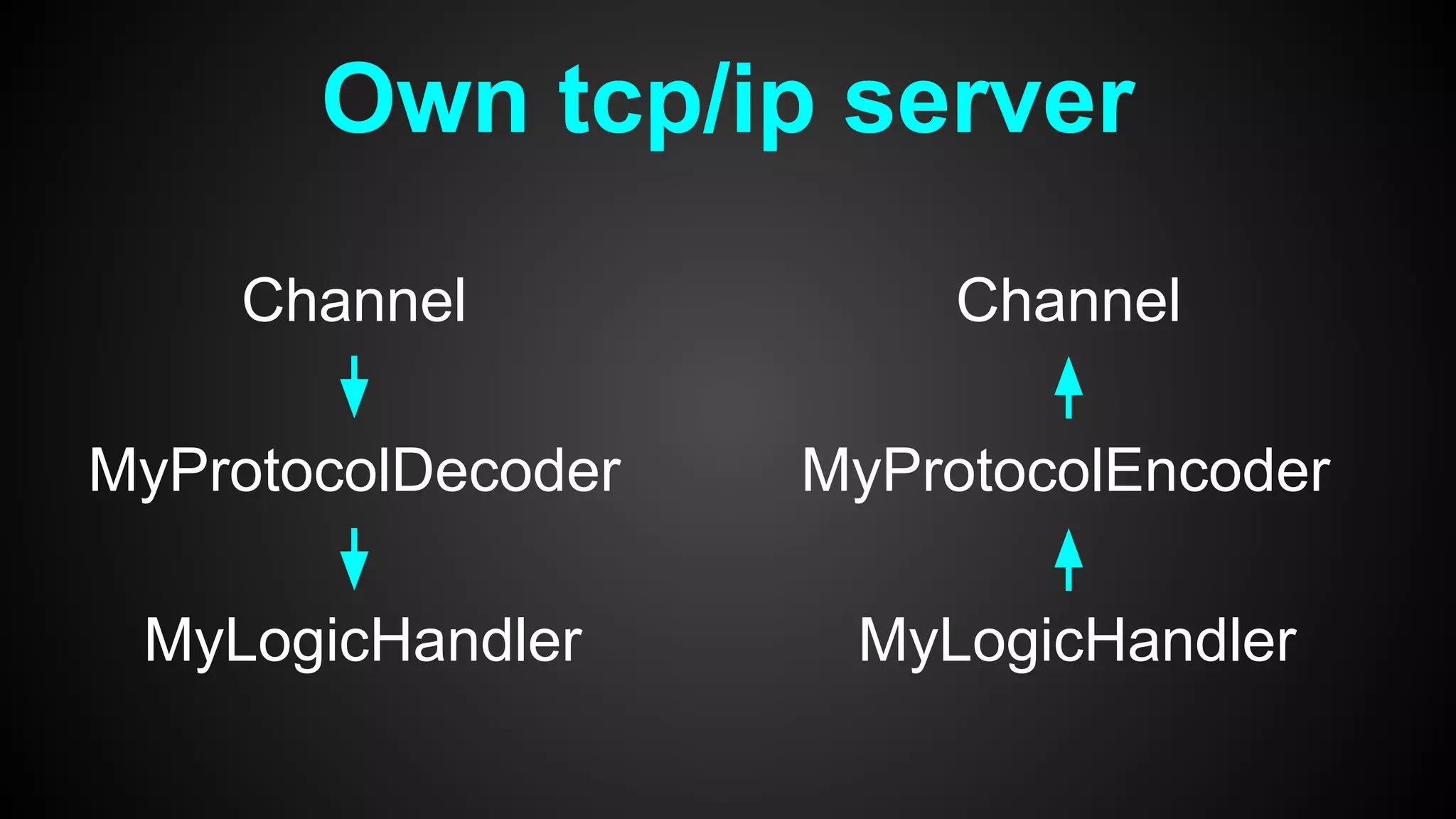

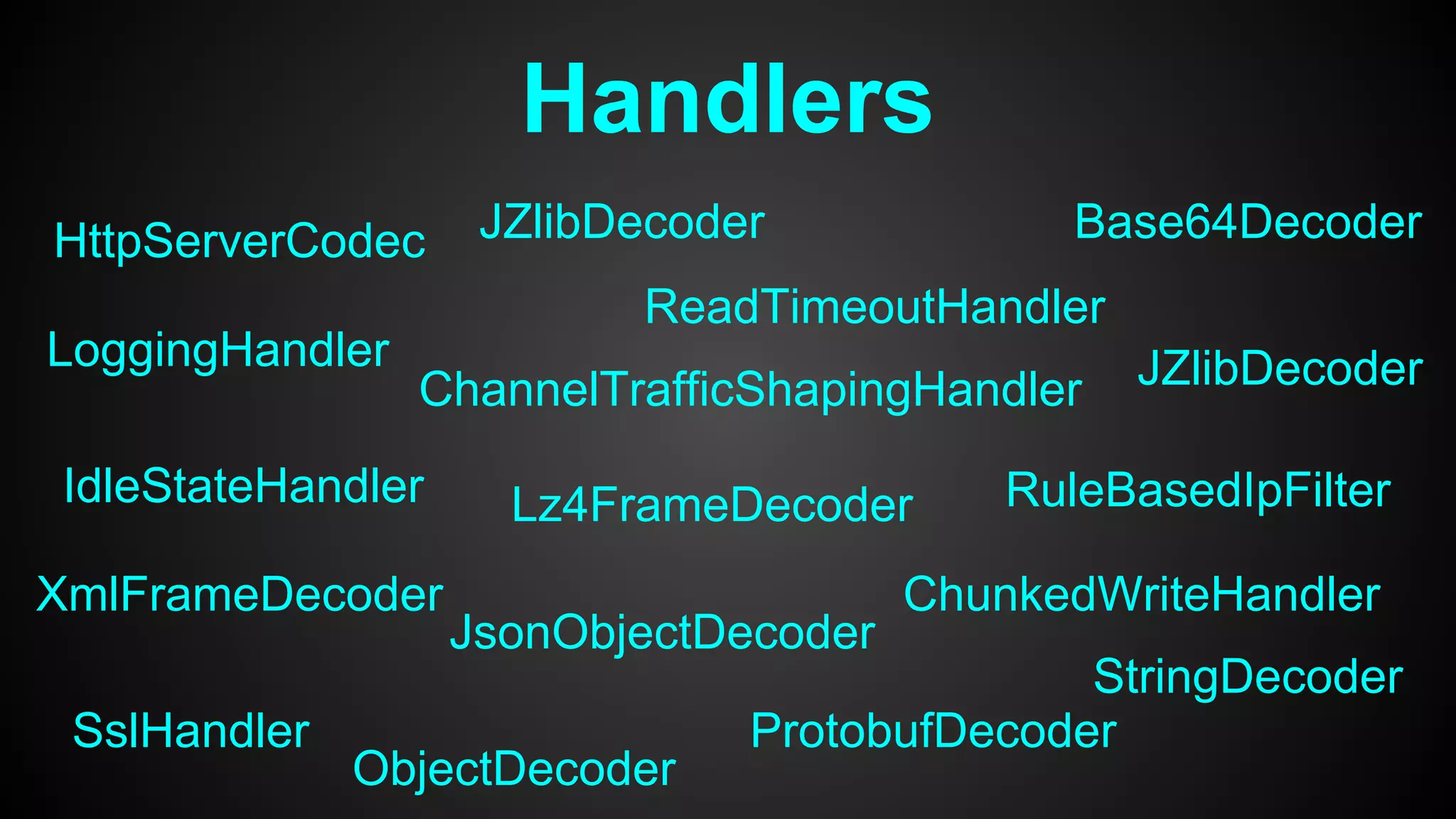

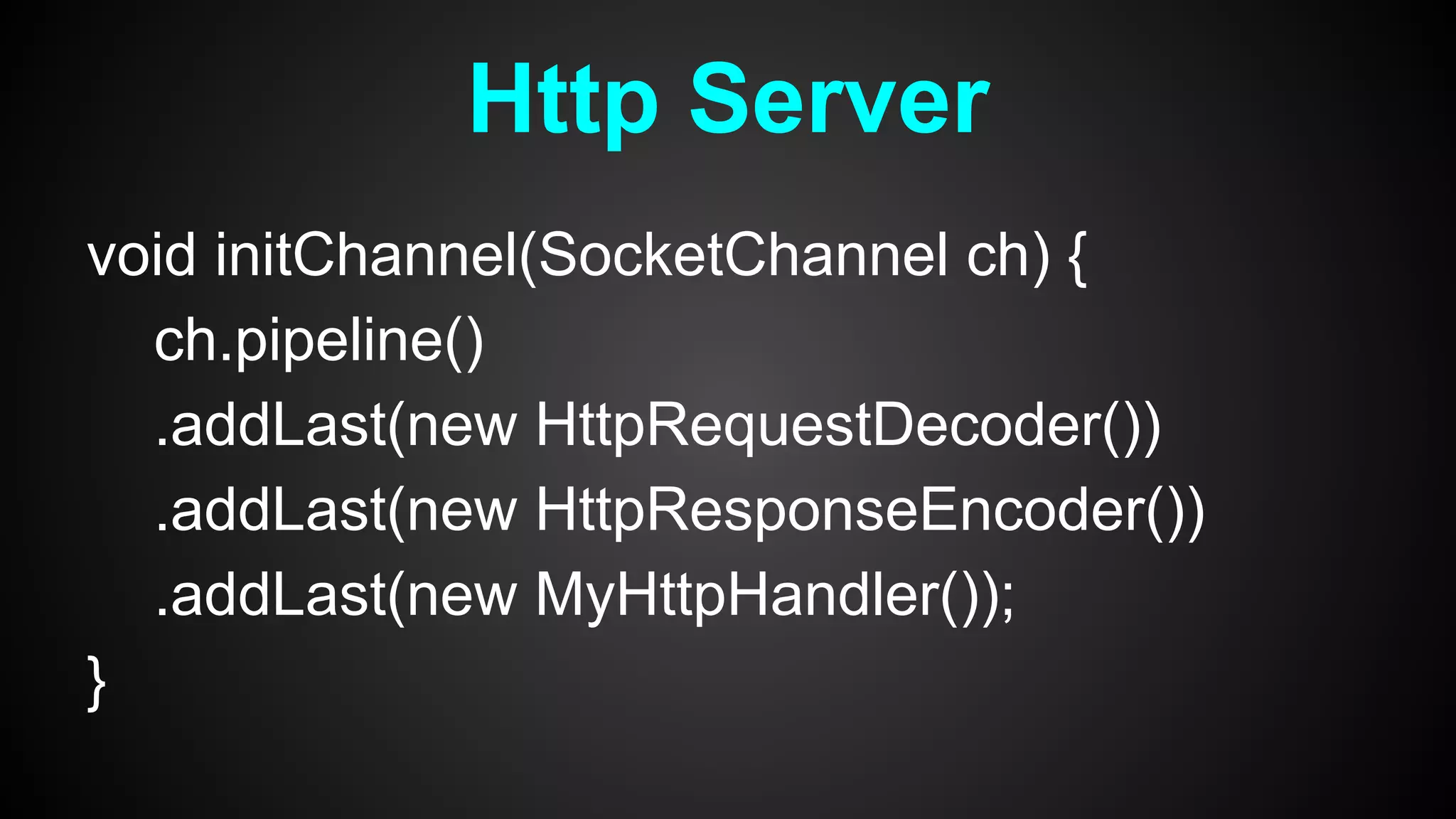

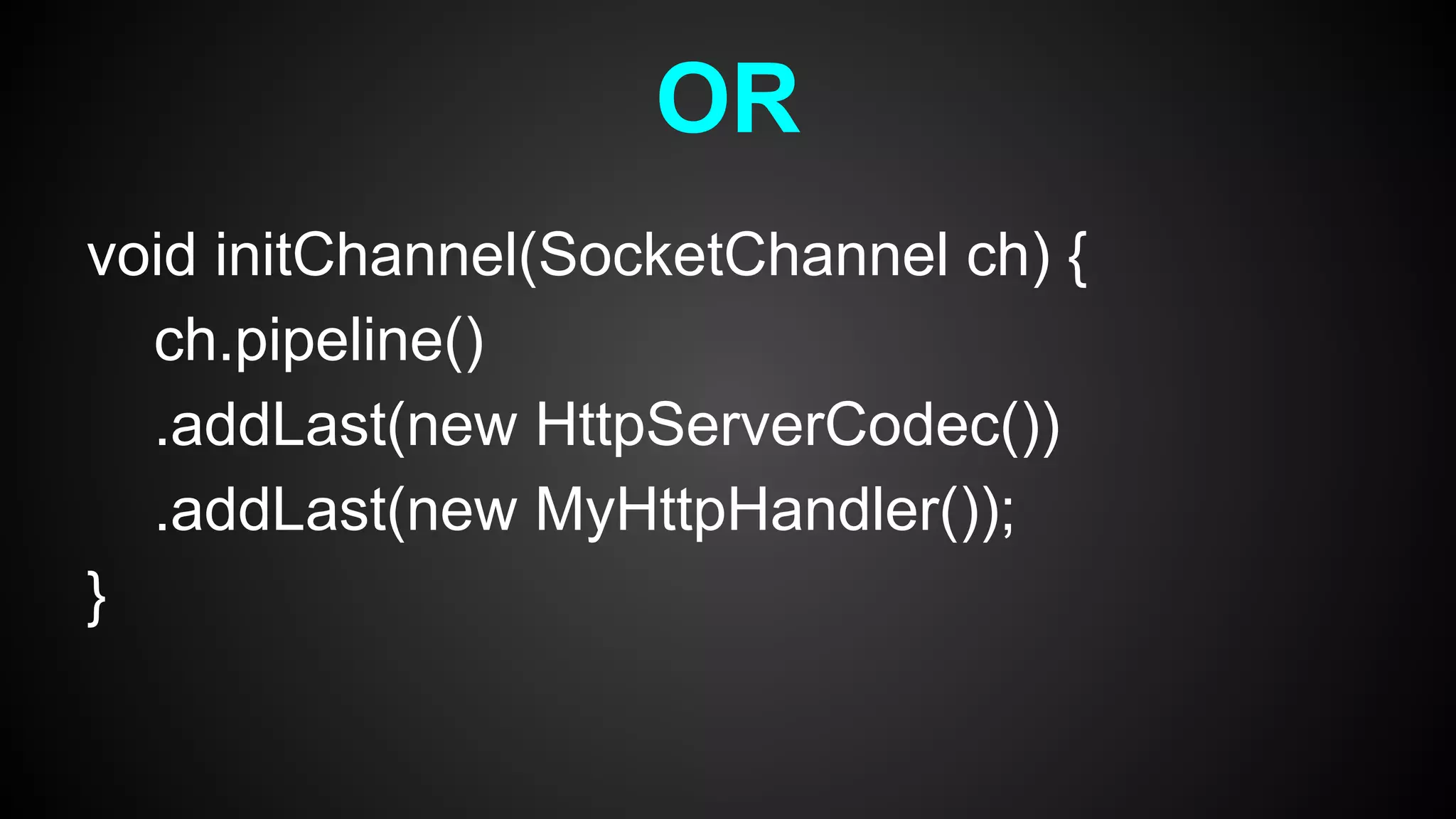

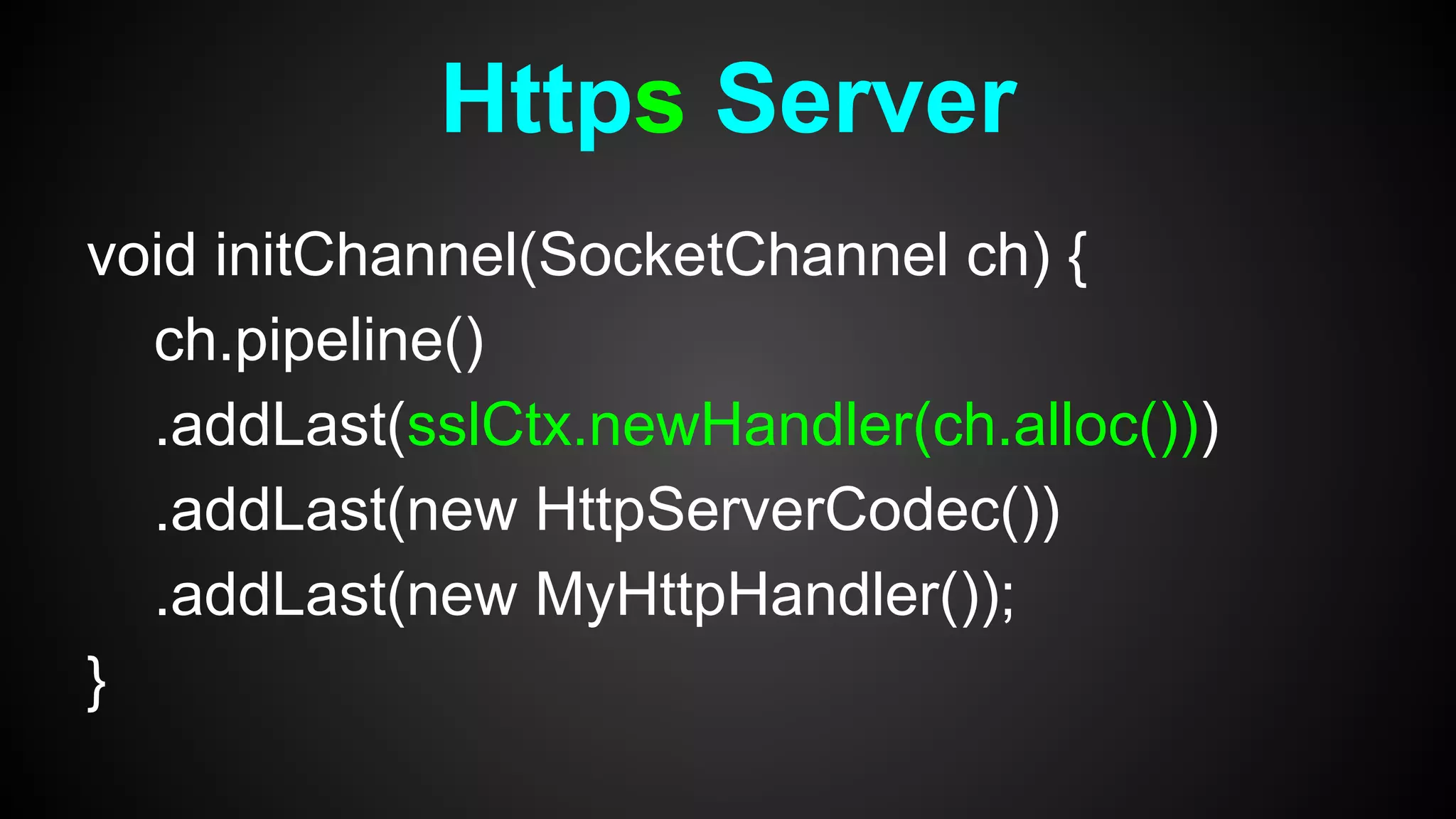

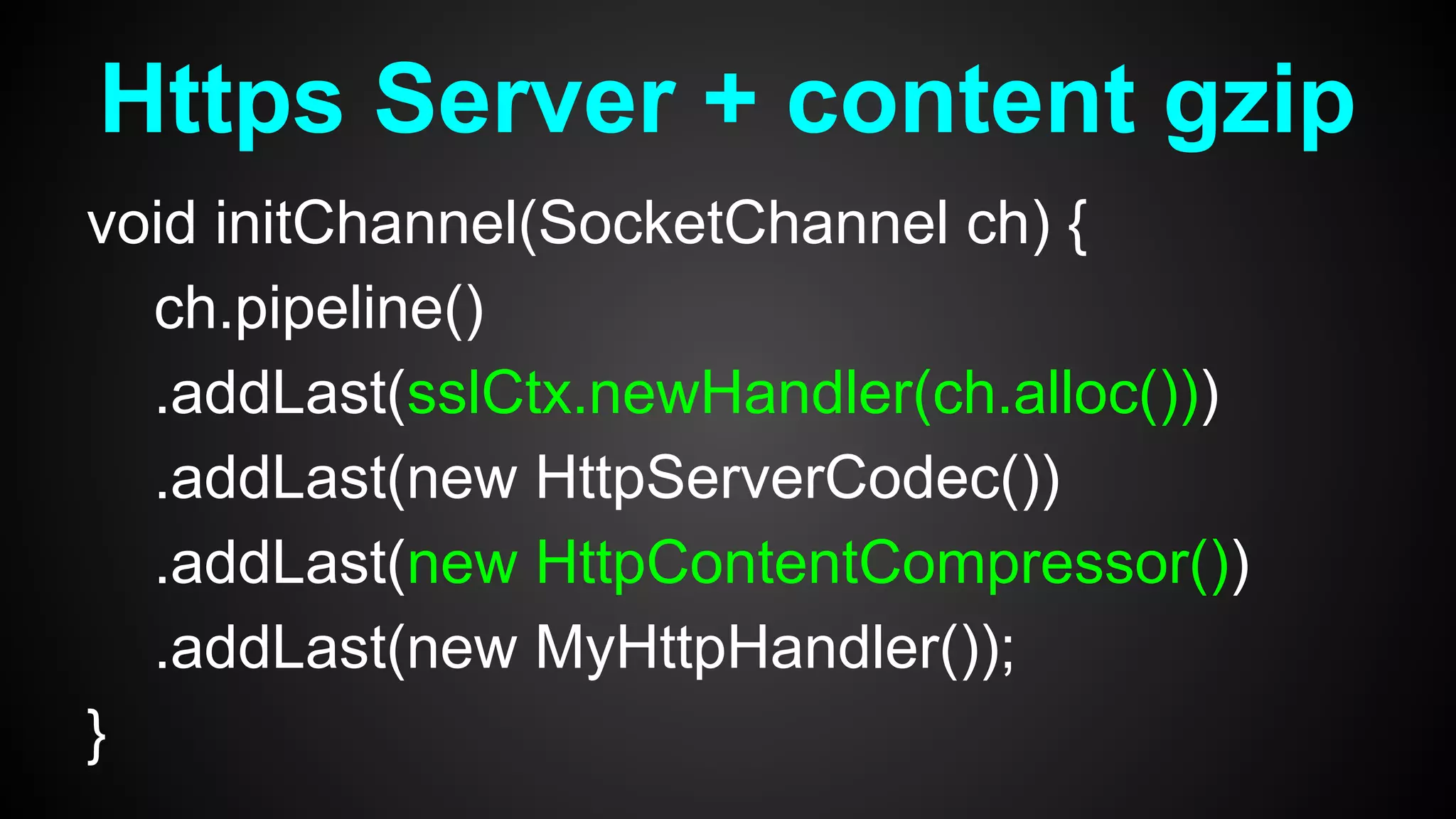

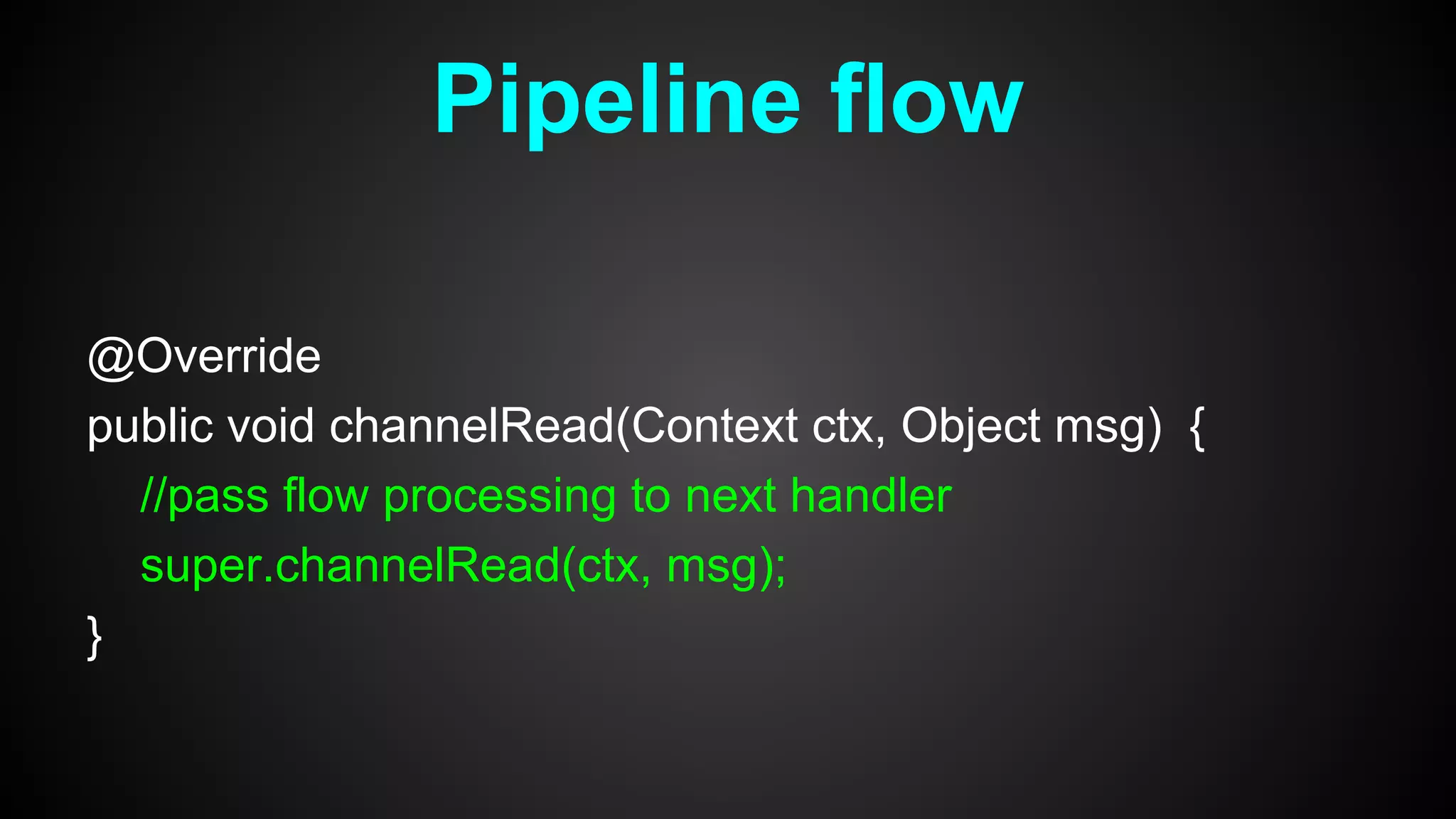

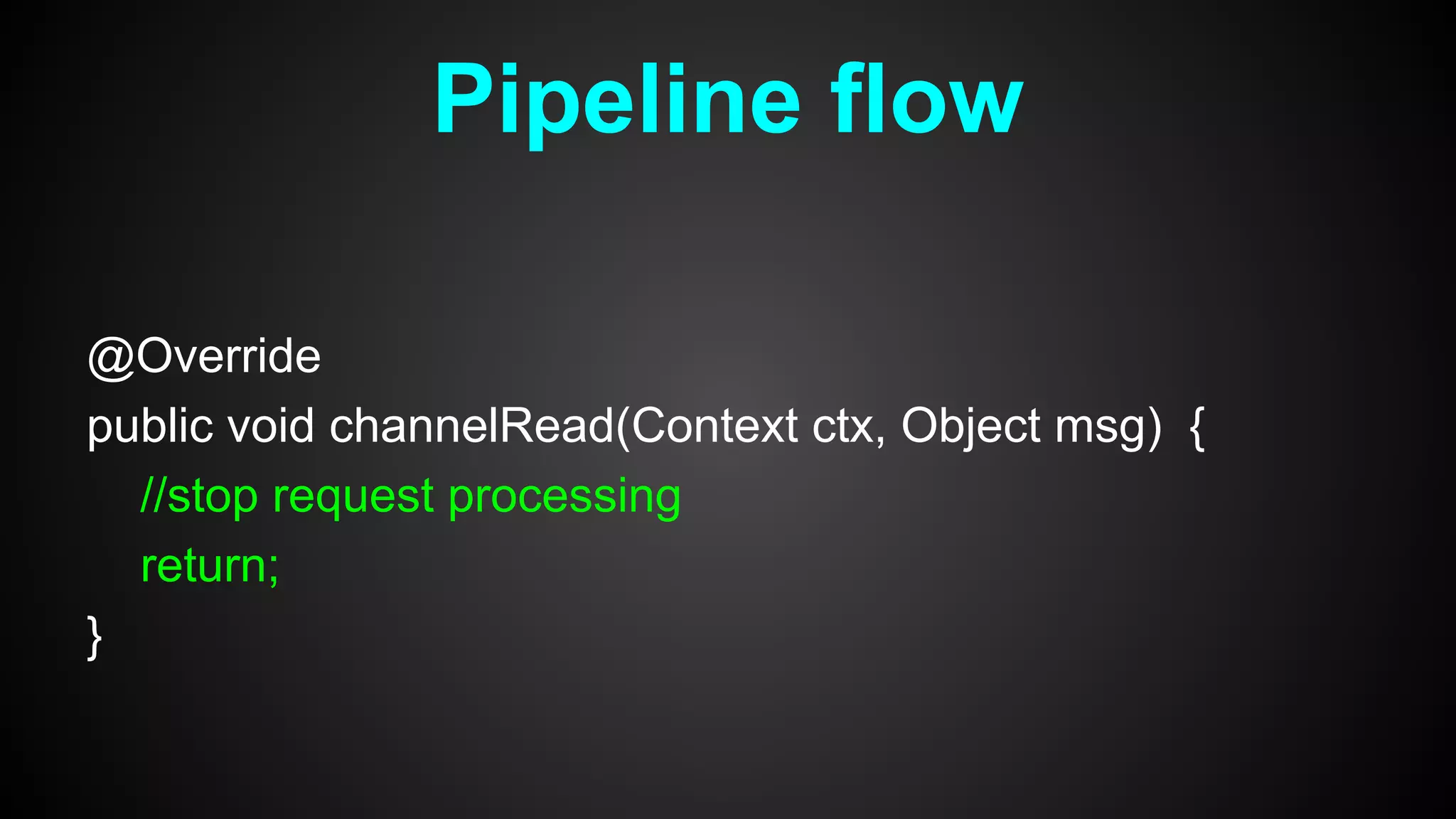

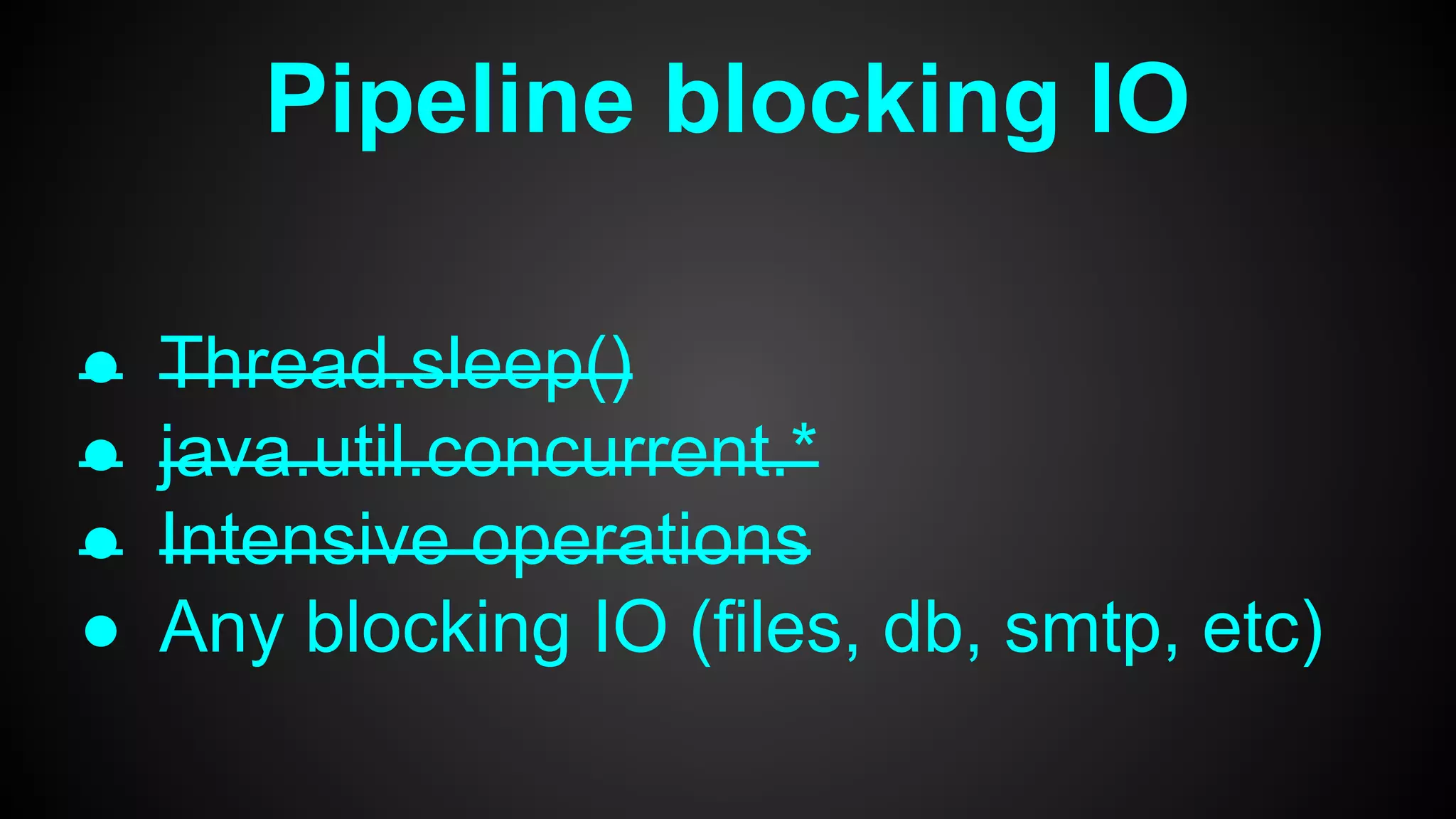

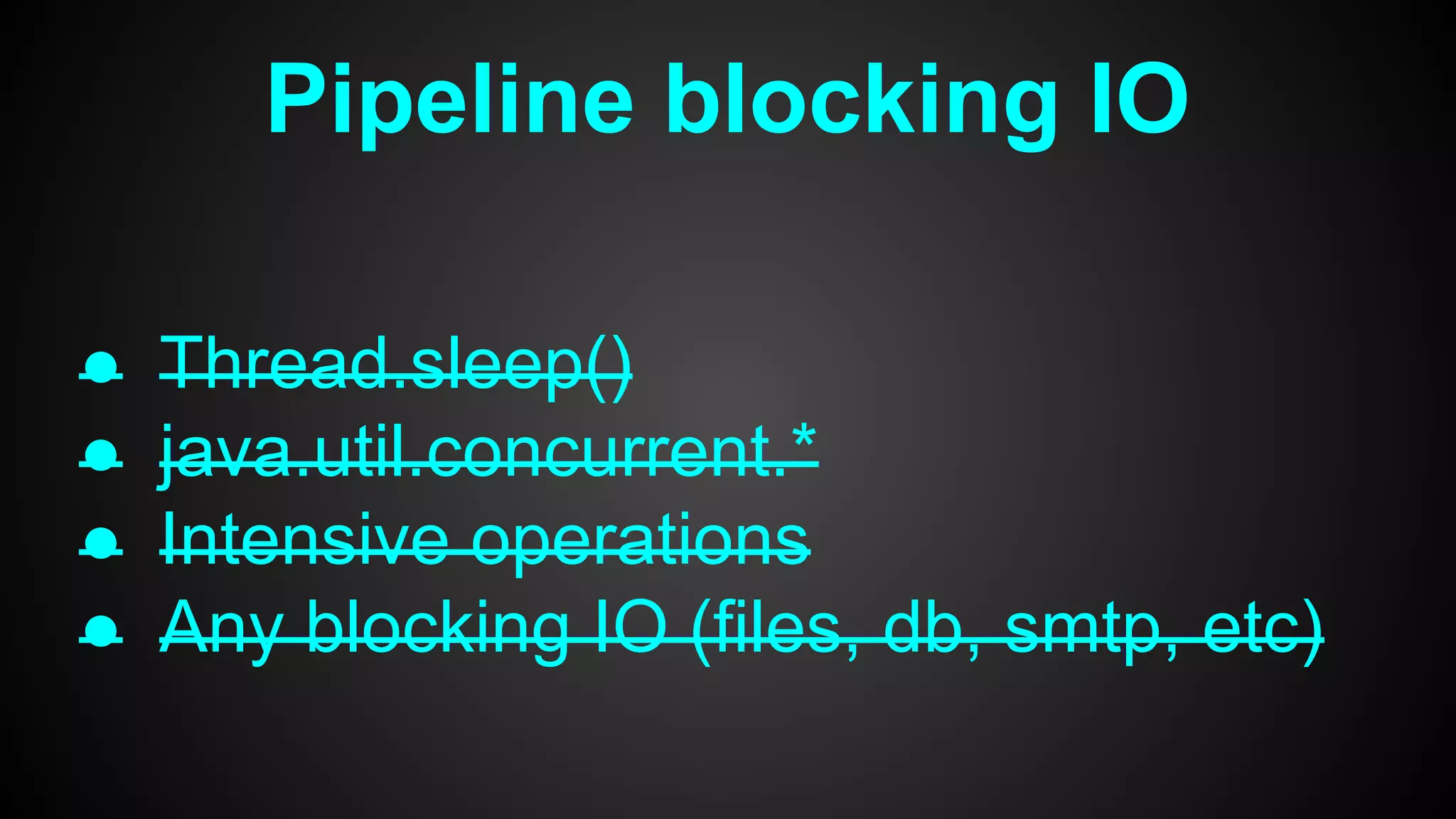

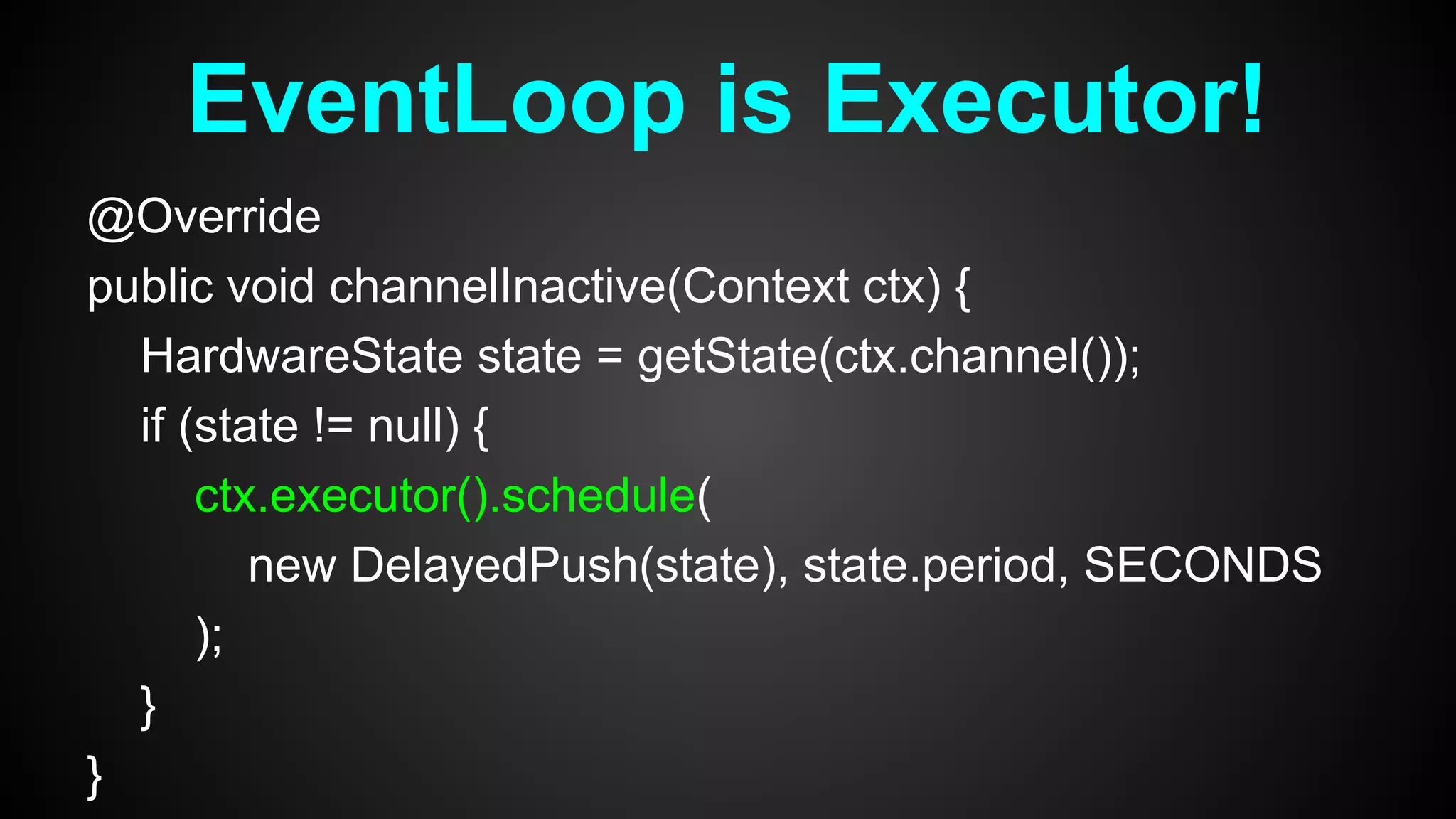

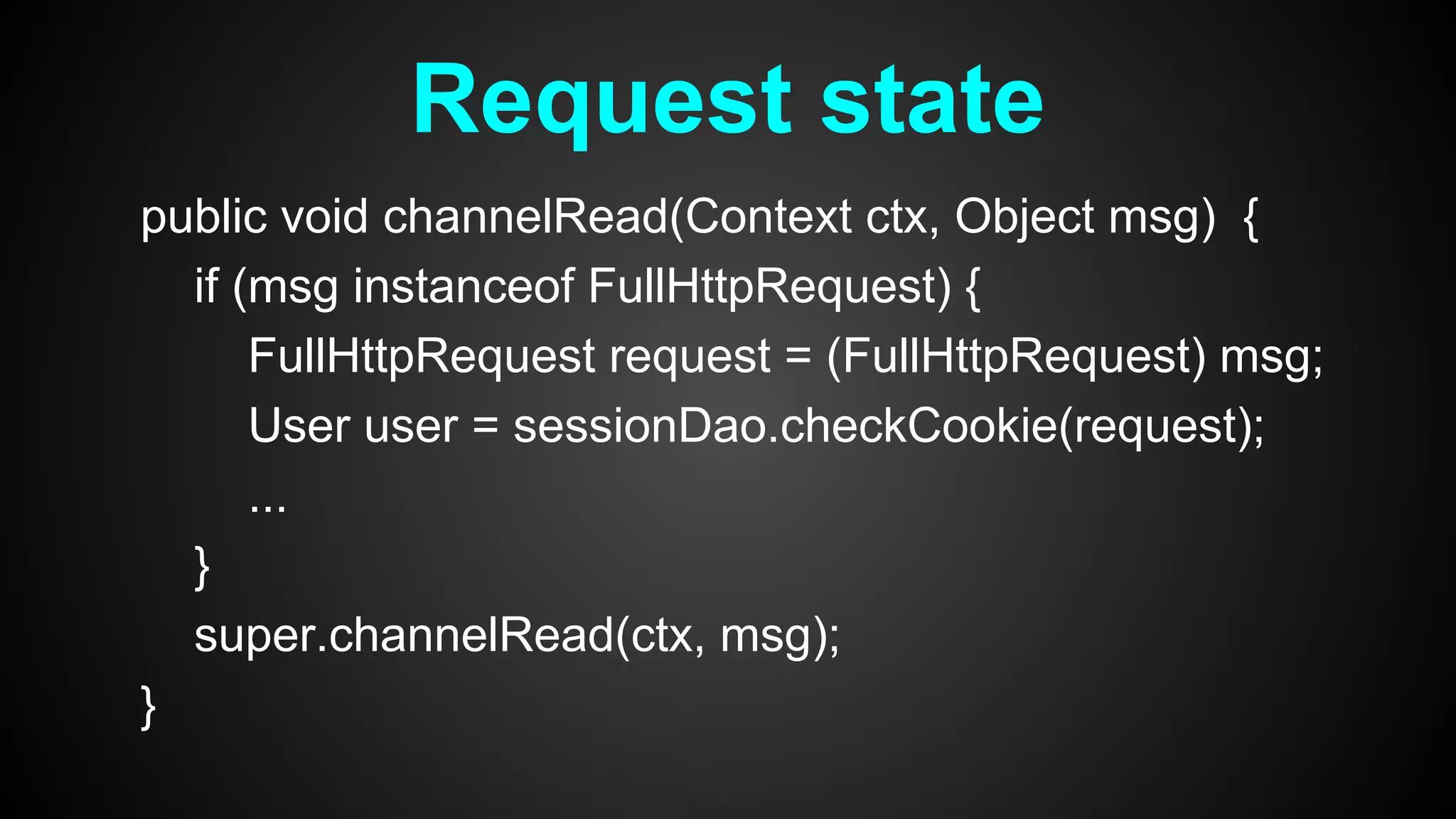

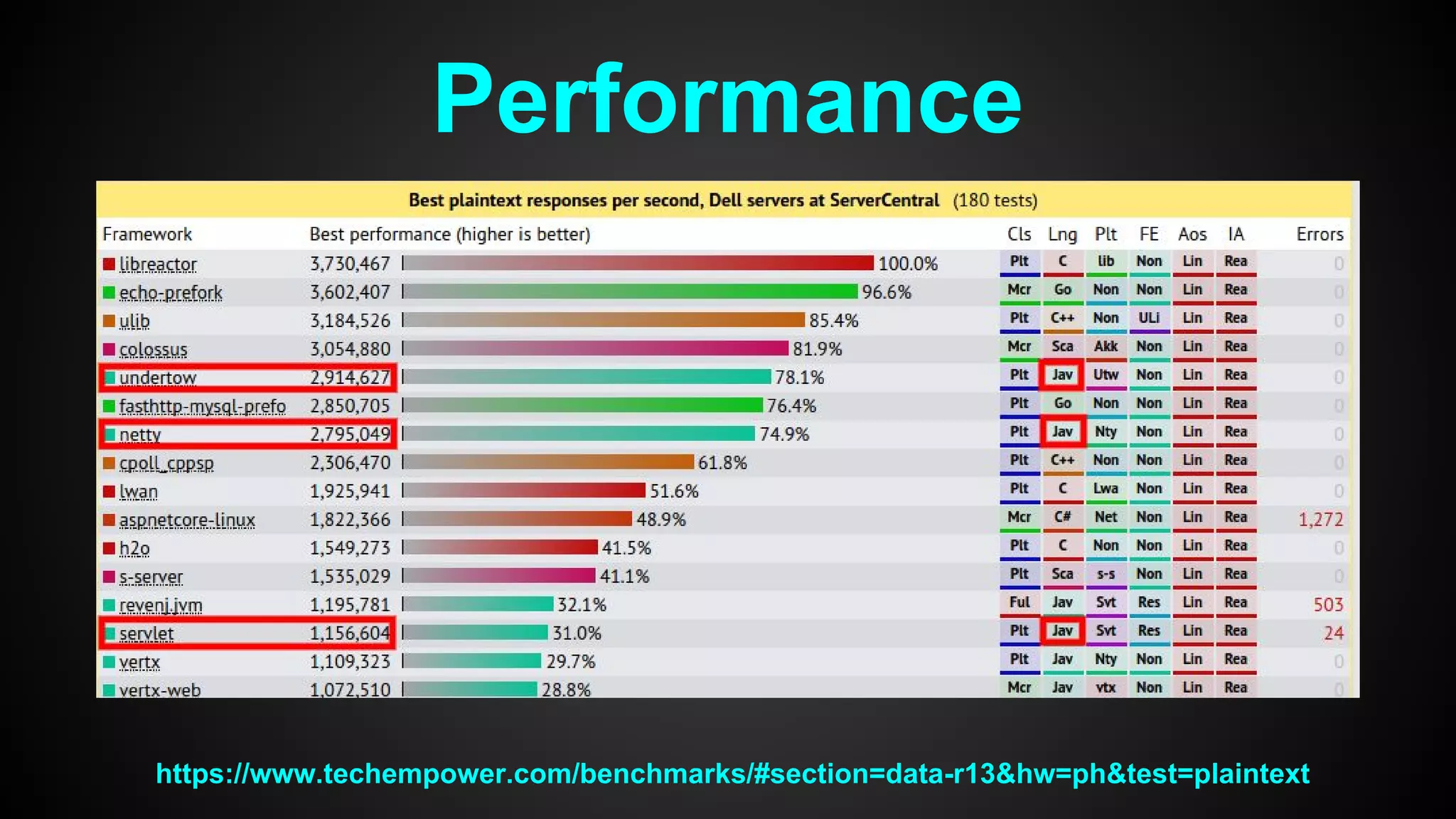

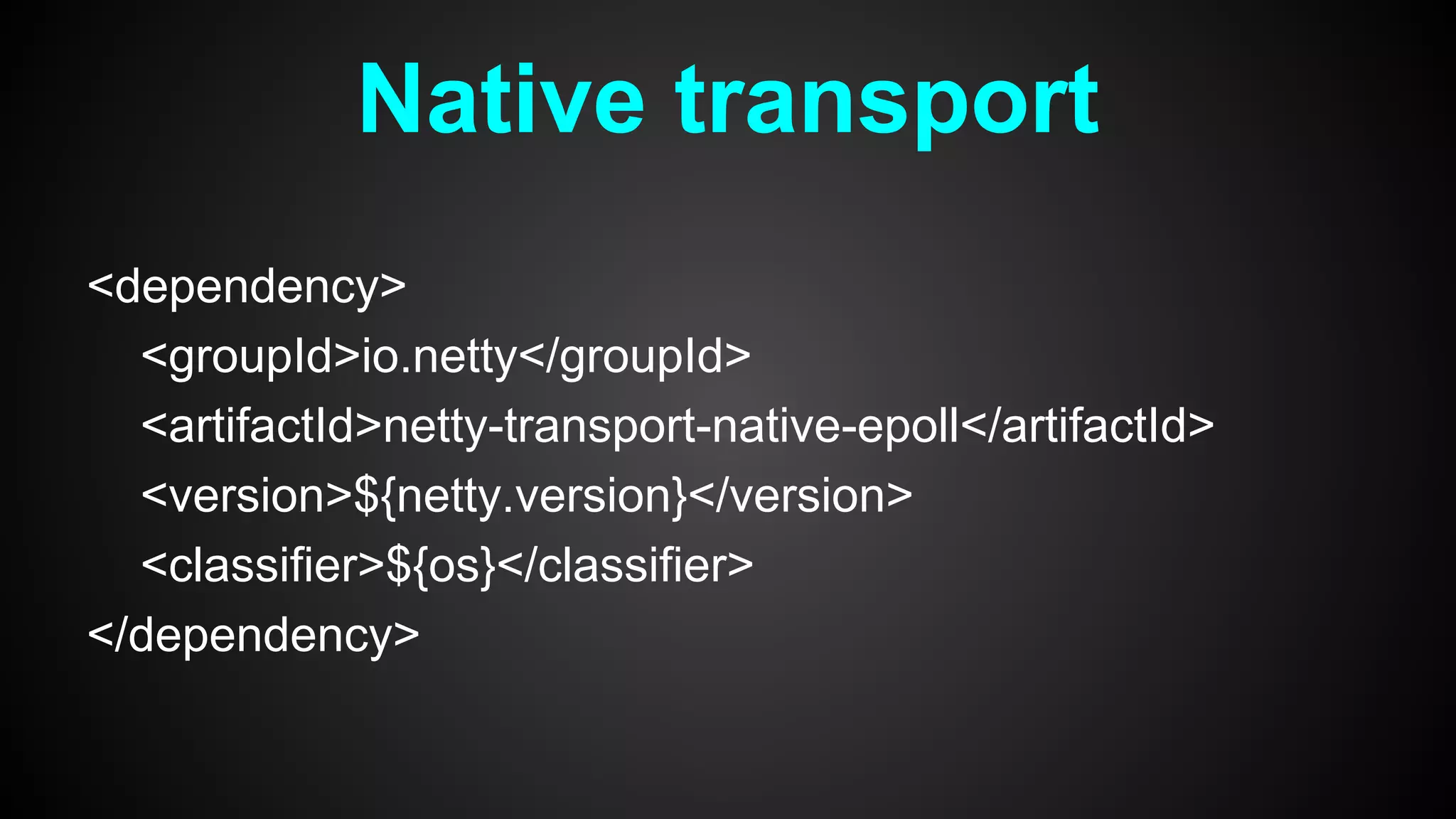

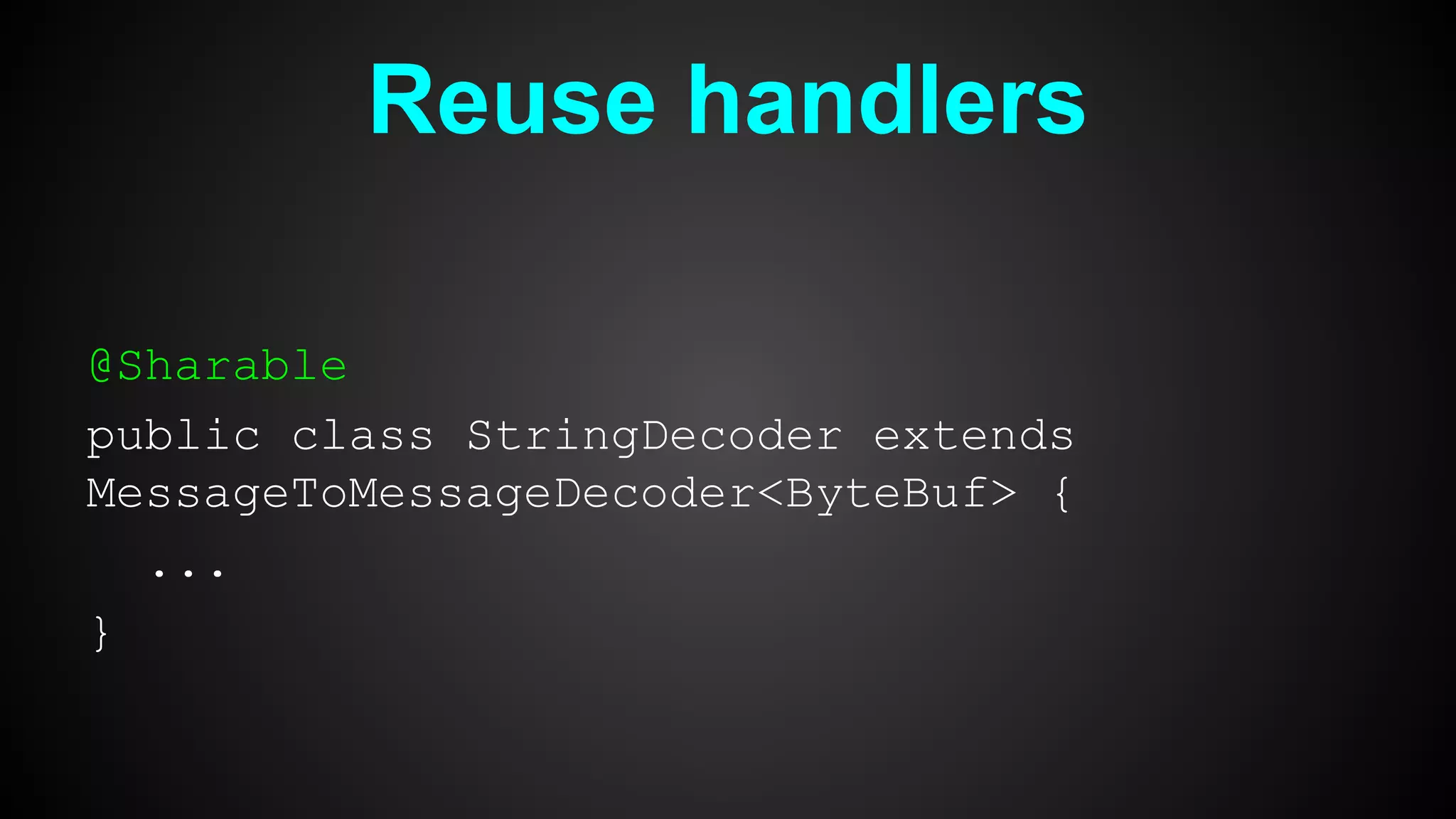

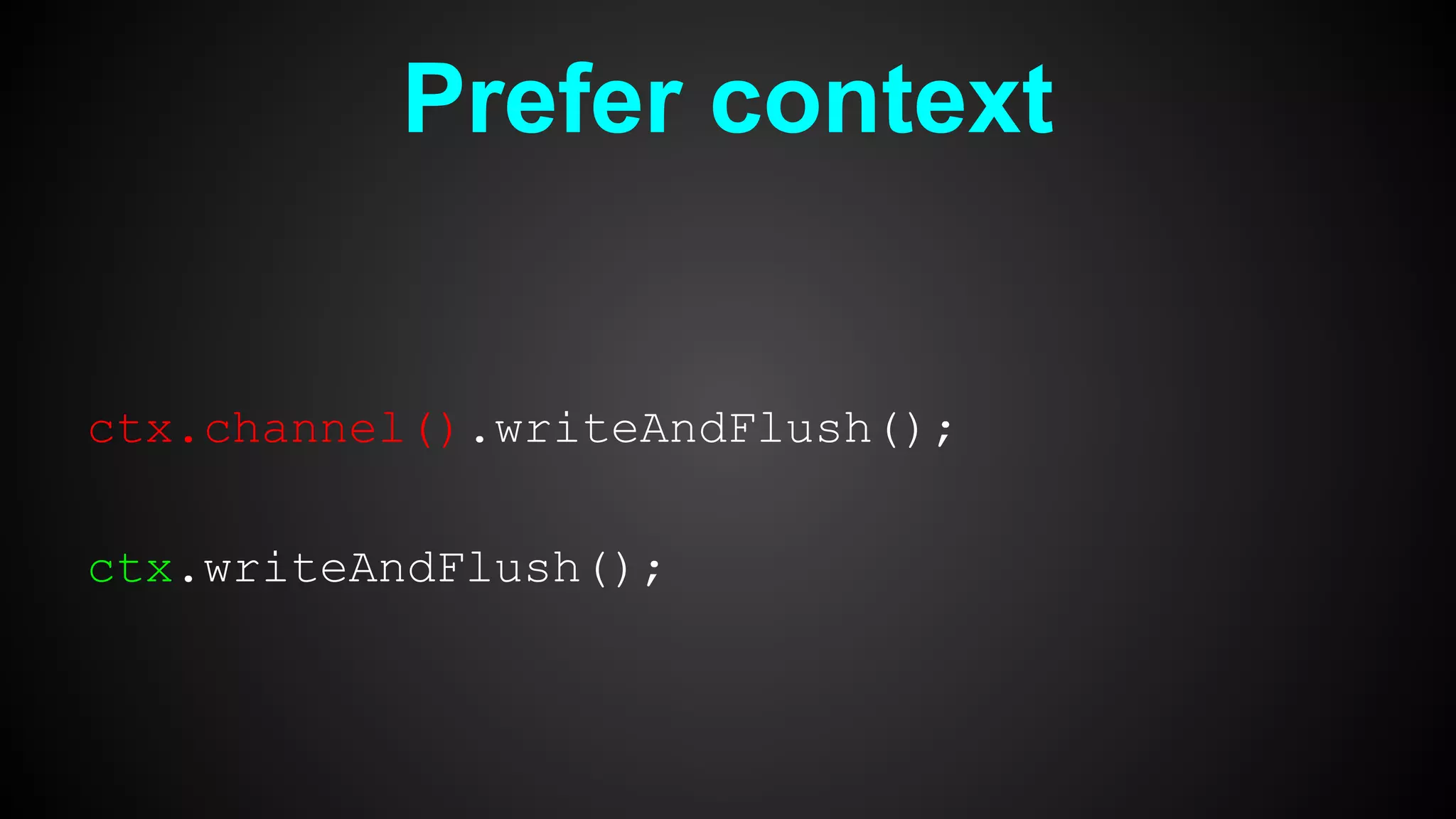

This document discusses using Netty to build high-performance reactive servers. It gives an overview of Netty's features, including non-blocking I/O, channel pipelines, event loops, and performance optimizations, and walks through examples of HTTP and TCP servers built with Netty. Reasons for choosing Netty include its high performance, low garbage-collection overhead, support for a variety of protocols, and full control over the networking layer.
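To make the non-blocking I/O and event-loop ideas concrete, here is a minimal sketch of a single-threaded TCP echo server written against the JDK's own `java.nio` selector API, the primitive that Netty's NIO transport builds on. It is not Netty code and not taken from the slides: the class name `EchoEventLoop` and its methods are hypothetical, and a Netty server would express the same behavior through its bootstrap, event loop groups, and pipeline handlers instead.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.util.ArrayList;
import java.util.Iterator;

/** Hypothetical echo server: one thread and one Selector serve every connection. */
final class EchoEventLoop implements Runnable, AutoCloseable {
    private final Selector selector;
    private final ServerSocketChannel server;
    private volatile boolean running = true;

    EchoEventLoop(int port) throws IOException {
        selector = Selector.open();
        server = ServerSocketChannel.open();
        server.configureBlocking(false);                        // never block the loop thread
        server.bind(new InetSocketAddress("127.0.0.1", port)); // port 0 picks a free port
        server.register(selector, SelectionKey.OP_ACCEPT);
    }

    /** Returns the port the server is actually bound to. */
    int port() throws IOException {
        return ((InetSocketAddress) server.getLocalAddress()).getPort();
    }

    @Override
    public void run() {
        ByteBuffer buf = ByteBuffer.allocate(4096); // one buffer reused for every read
        try {
            while (running) {
                selector.select();                  // wait until some channel is ready
                Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                while (it.hasNext()) {
                    SelectionKey key = it.next();
                    it.remove();
                    if (!key.isValid()) continue;
                    if (key.isAcceptable()) accept();
                    else if (key.isReadable()) echo((SocketChannel) key.channel(), buf);
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            shutdown();
        }
    }

    private void accept() throws IOException {
        SocketChannel client = server.accept();
        if (client != null) {
            client.configureBlocking(false);
            client.register(selector, SelectionKey.OP_READ);
        }
    }

    private static void echo(SocketChannel ch, ByteBuffer buf) throws IOException {
        buf.clear();
        try {
            if (ch.read(buf) < 0) { ch.close(); return; } // peer closed the connection
            buf.flip();
            // Simplification: spin until the bytes are written. A production loop
            // would register OP_WRITE and resume later instead of spinning.
            while (buf.hasRemaining()) ch.write(buf);
        } catch (IOException e) {
            ch.close();                                   // drop a broken connection, keep serving
        }
    }

    private void shutdown() {
        try {
            for (SelectionKey key : new ArrayList<>(selector.keys())) key.channel().close();
            selector.close();
        } catch (IOException ignored) {
            // best-effort cleanup
        }
    }

    /** Asks the loop thread to stop; it closes all channels on its way out. */
    @Override
    public void close() {
        running = false;
        selector.wakeup();
    }
}
```

Running `new Thread(new EchoEventLoop(8080)).start()` would start the loop on port 8080 (an arbitrary choice). The design point this illustrates is that no thread is parked per connection: the single loop thread reacts only to channels that are ready, which is the model Netty wraps behind its event loops and channel pipelines.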