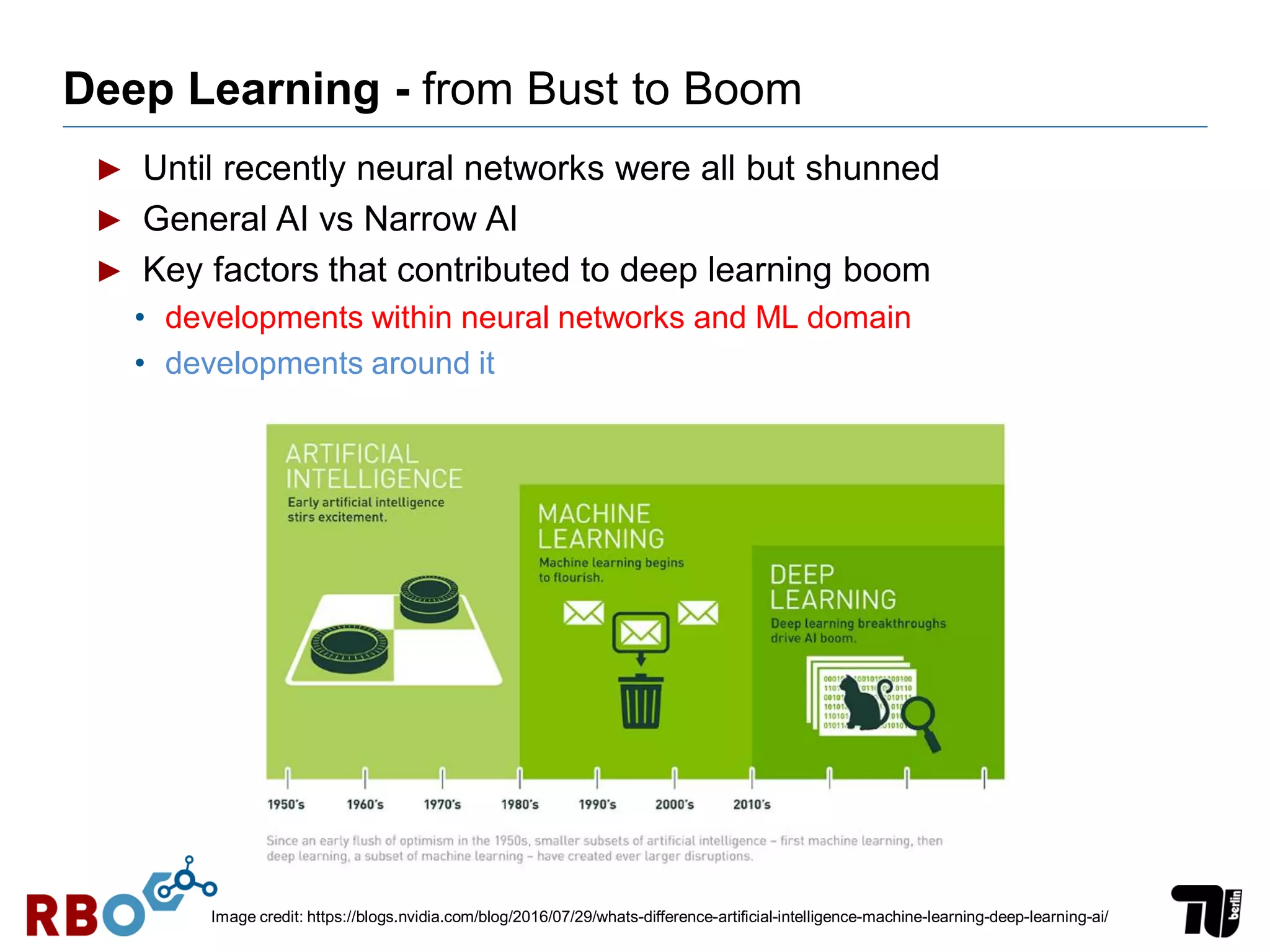

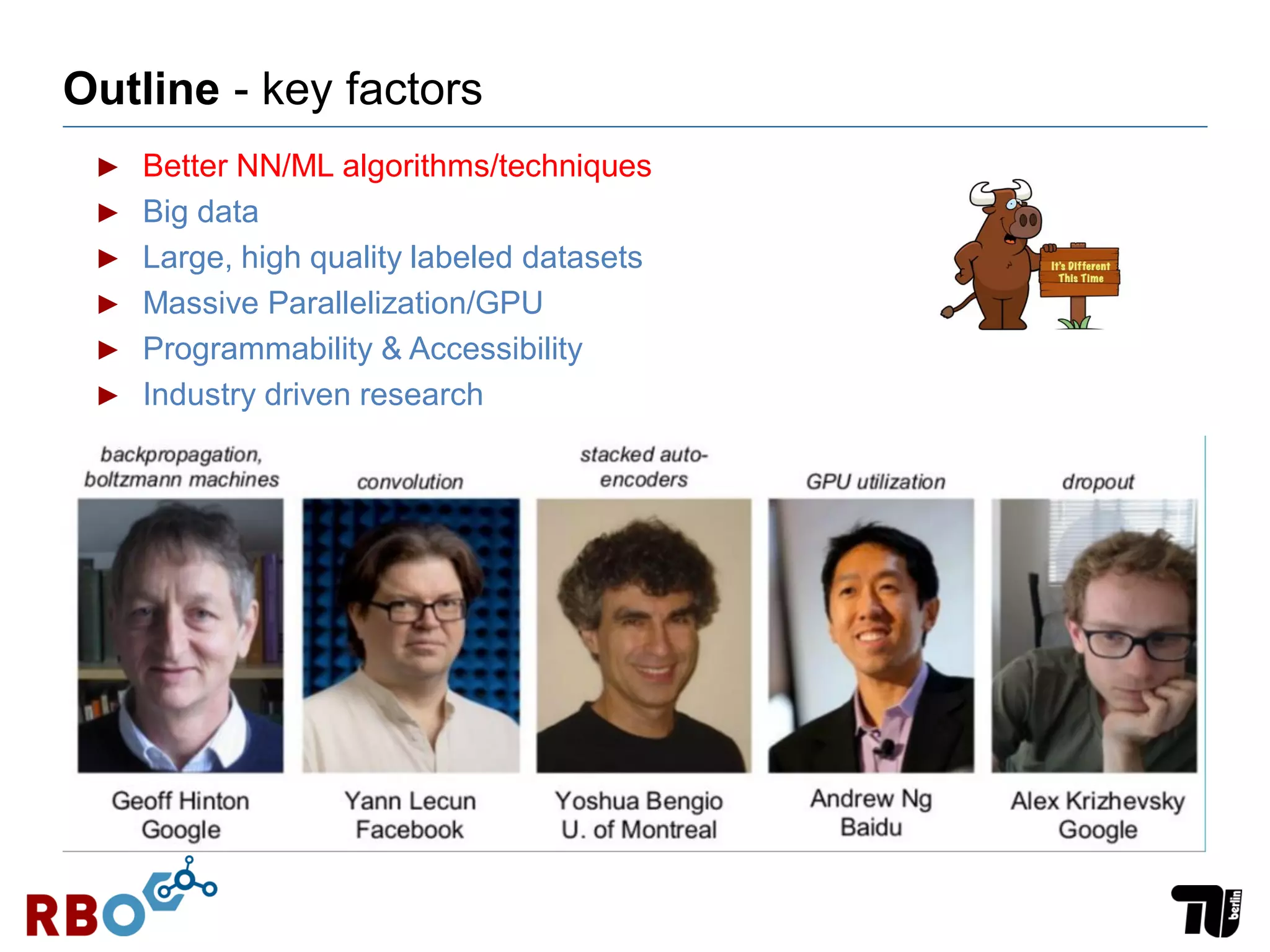

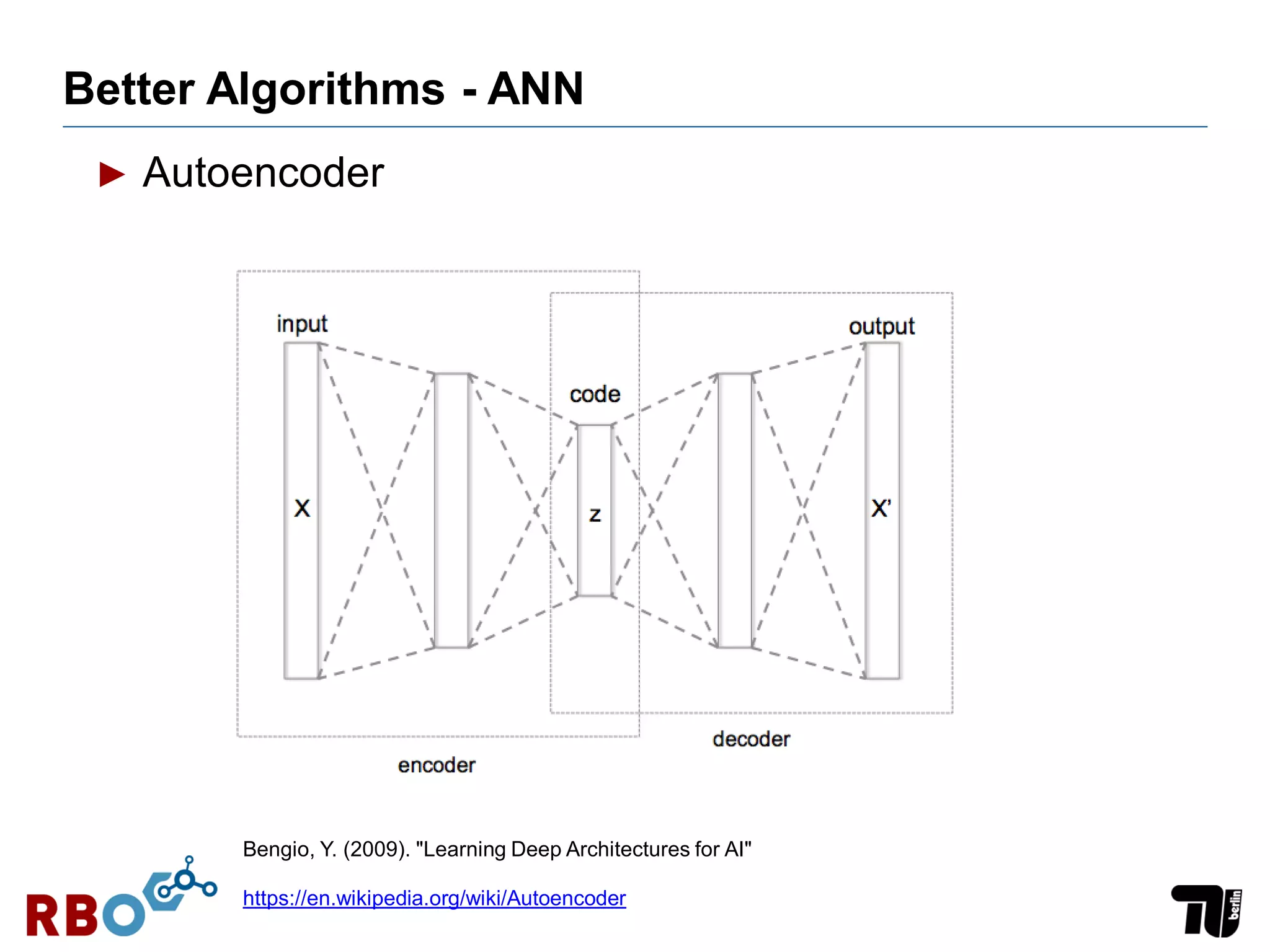

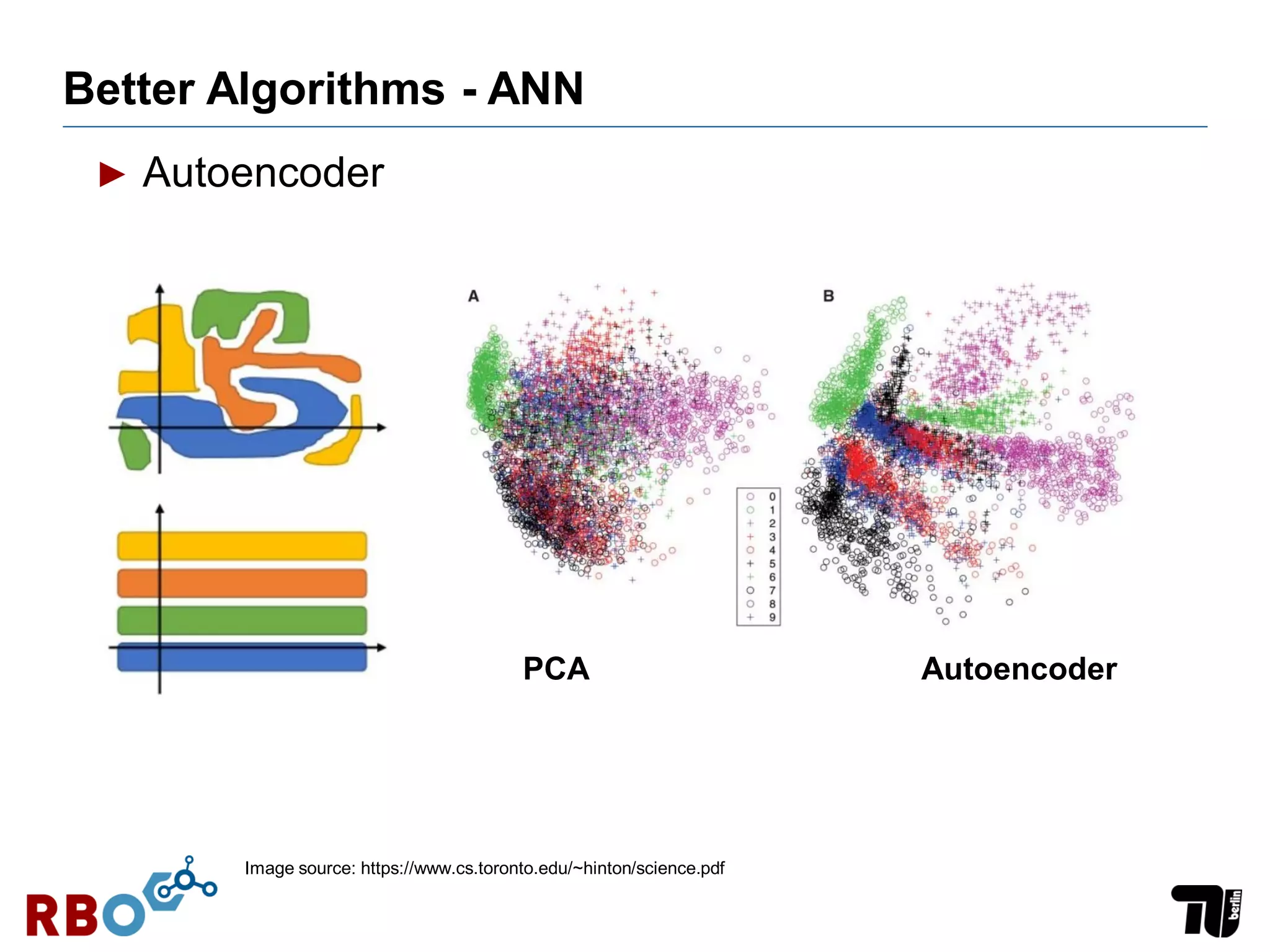

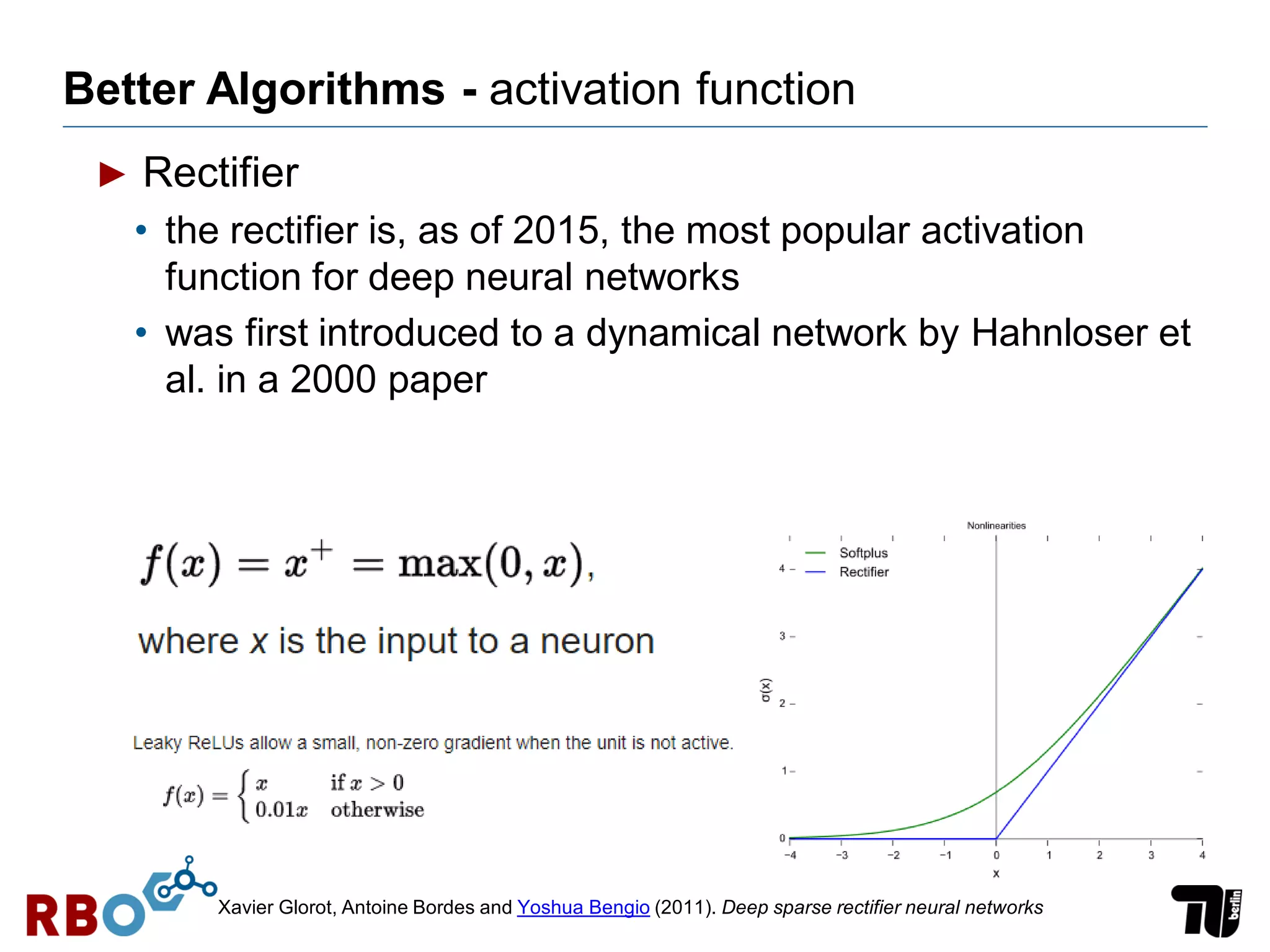

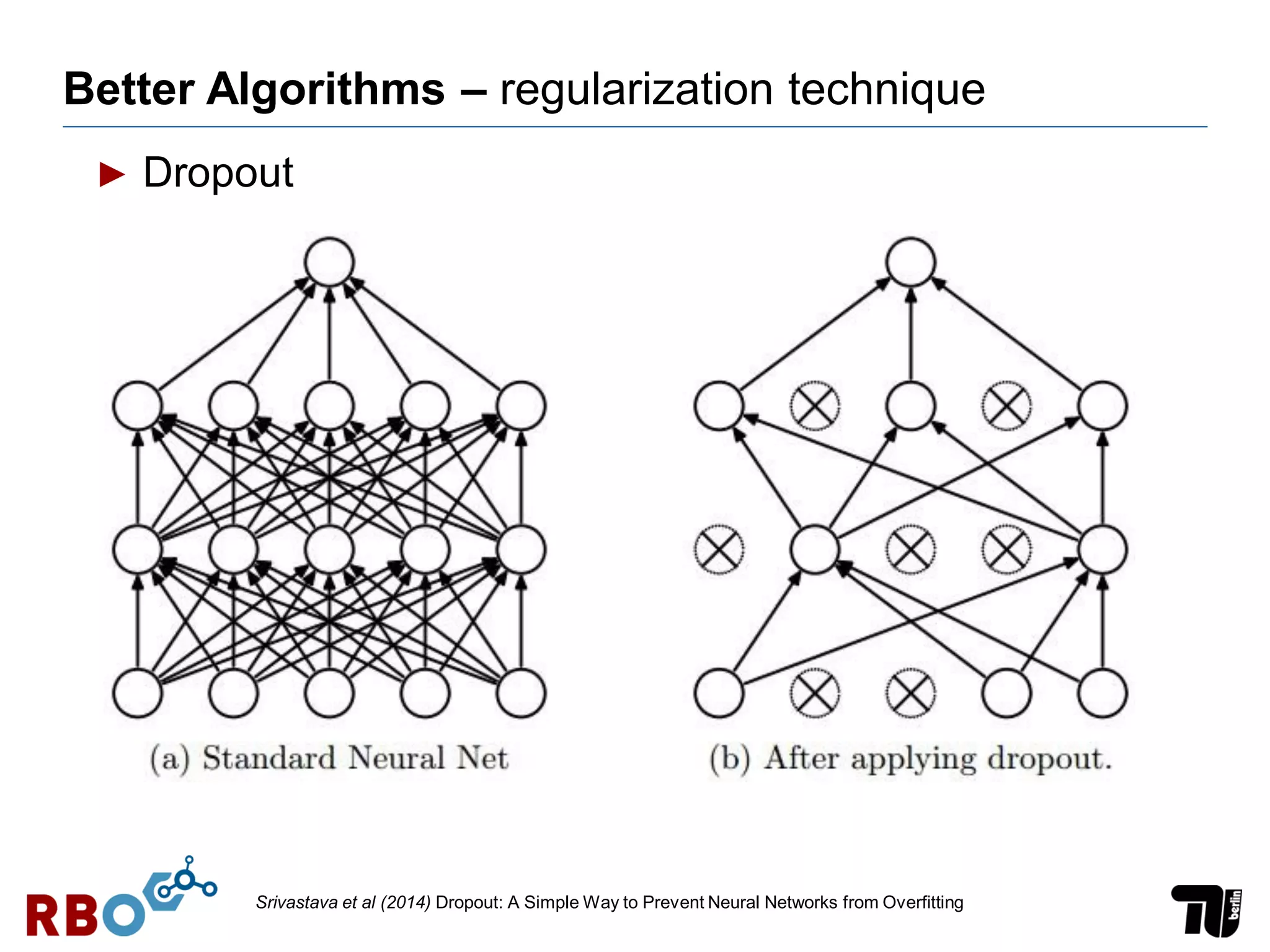

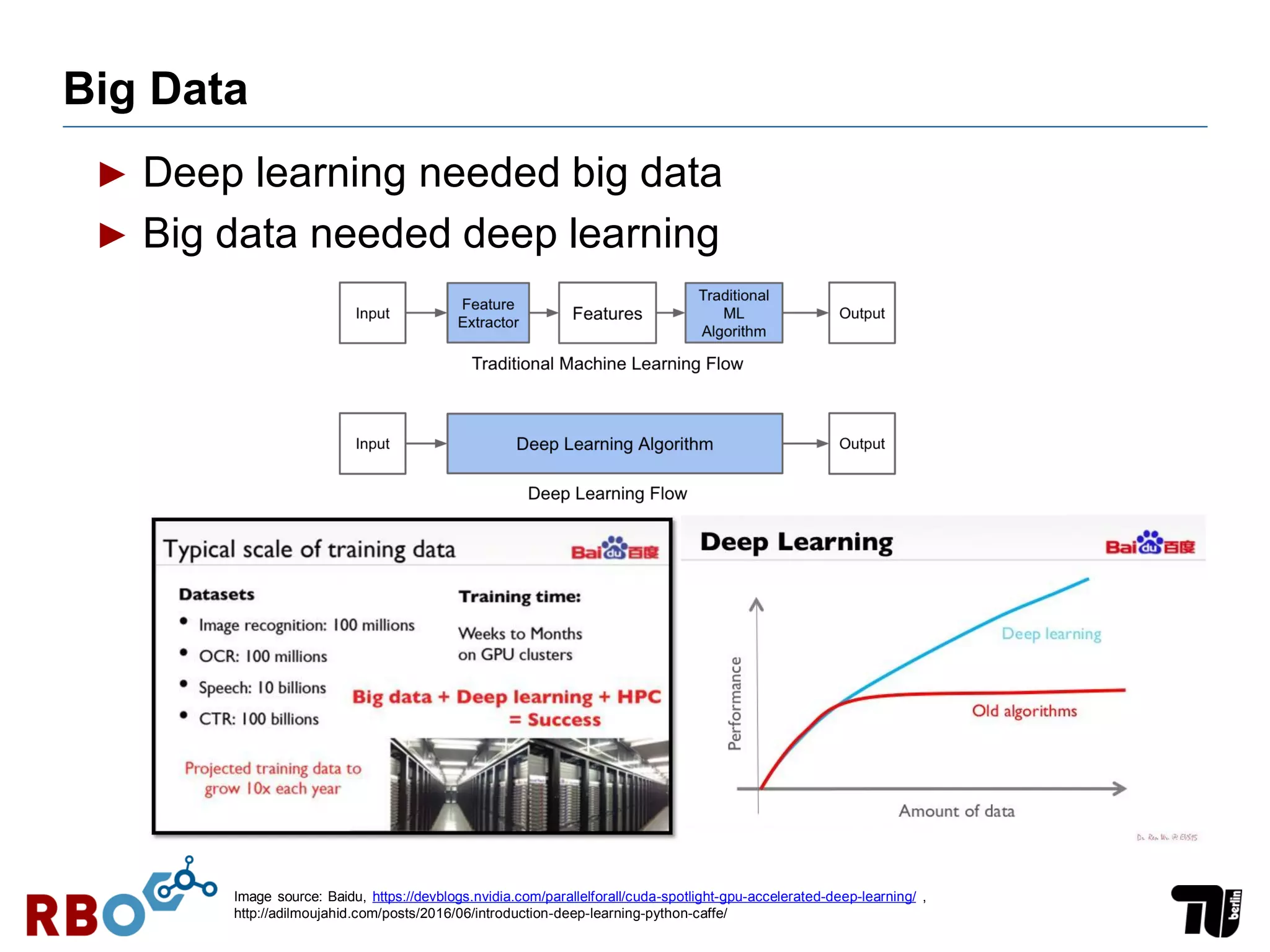

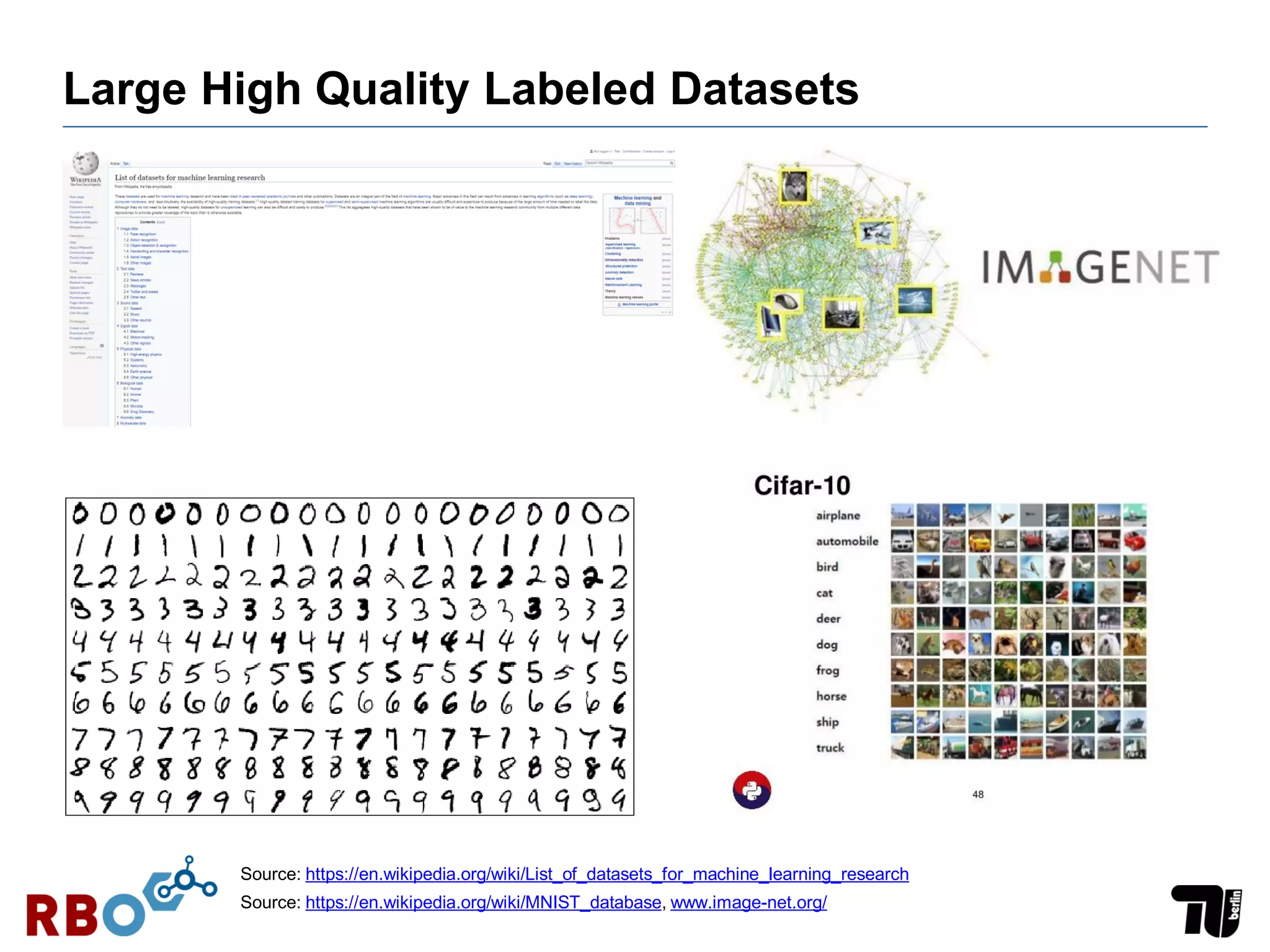

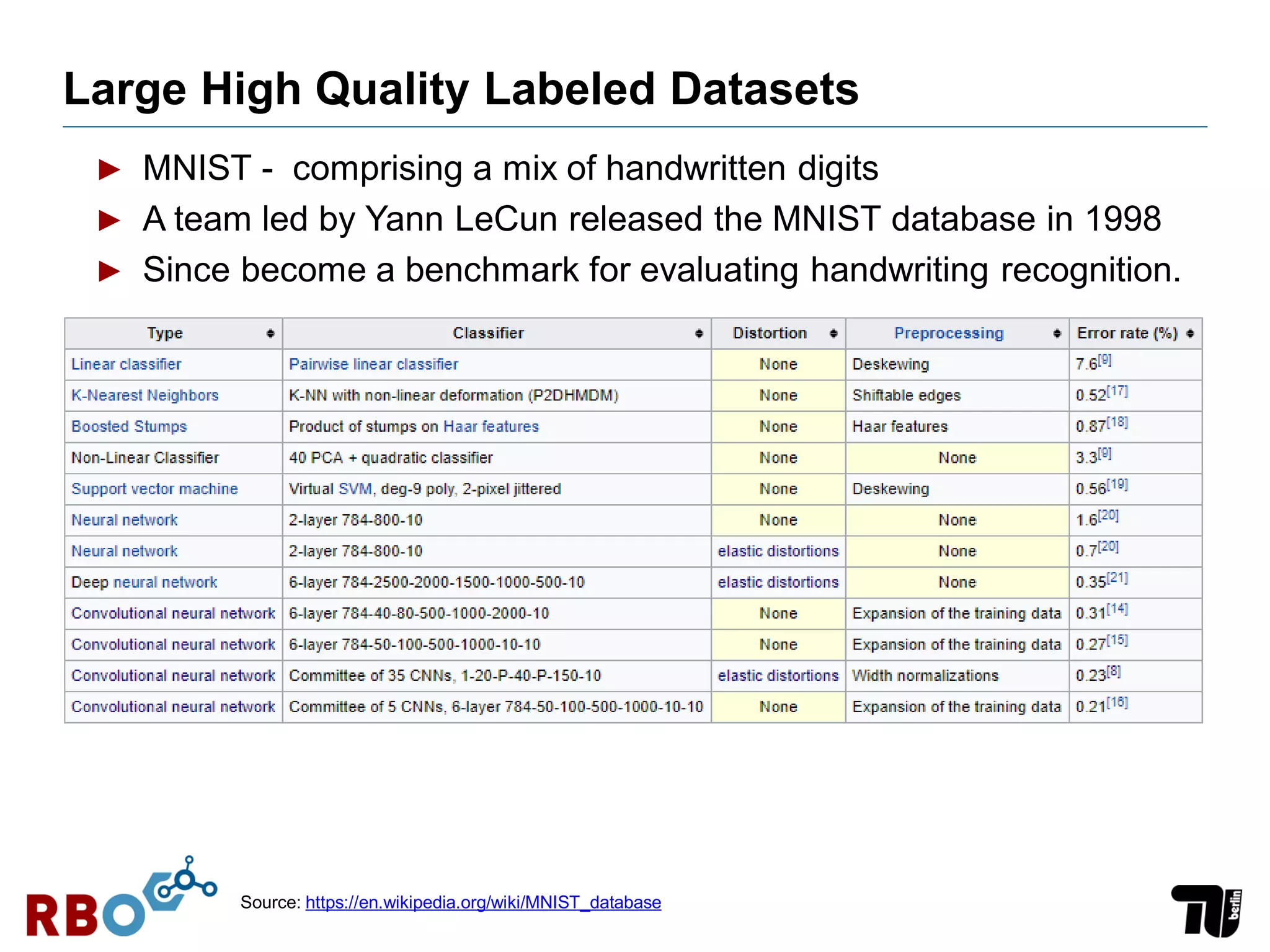

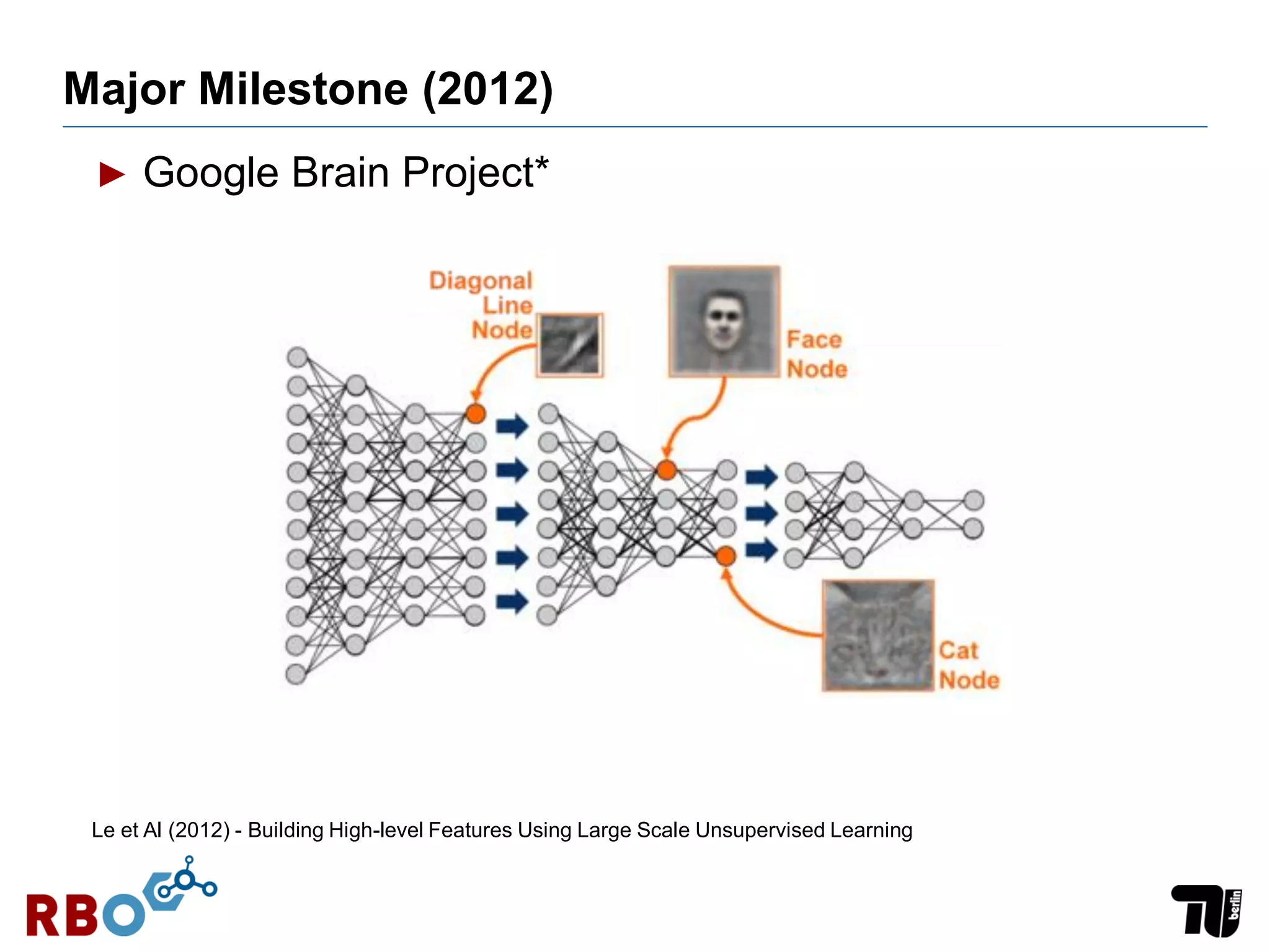

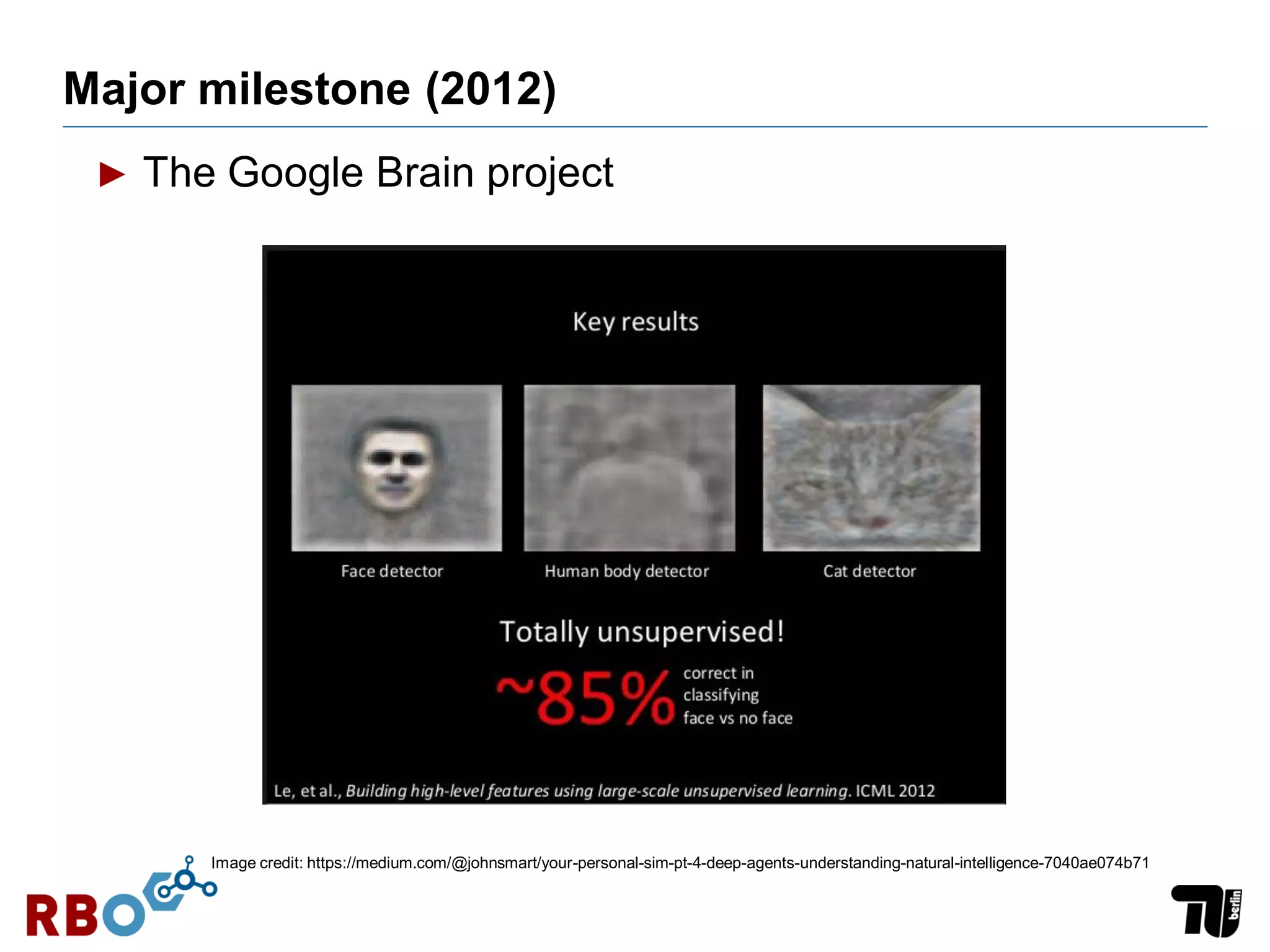

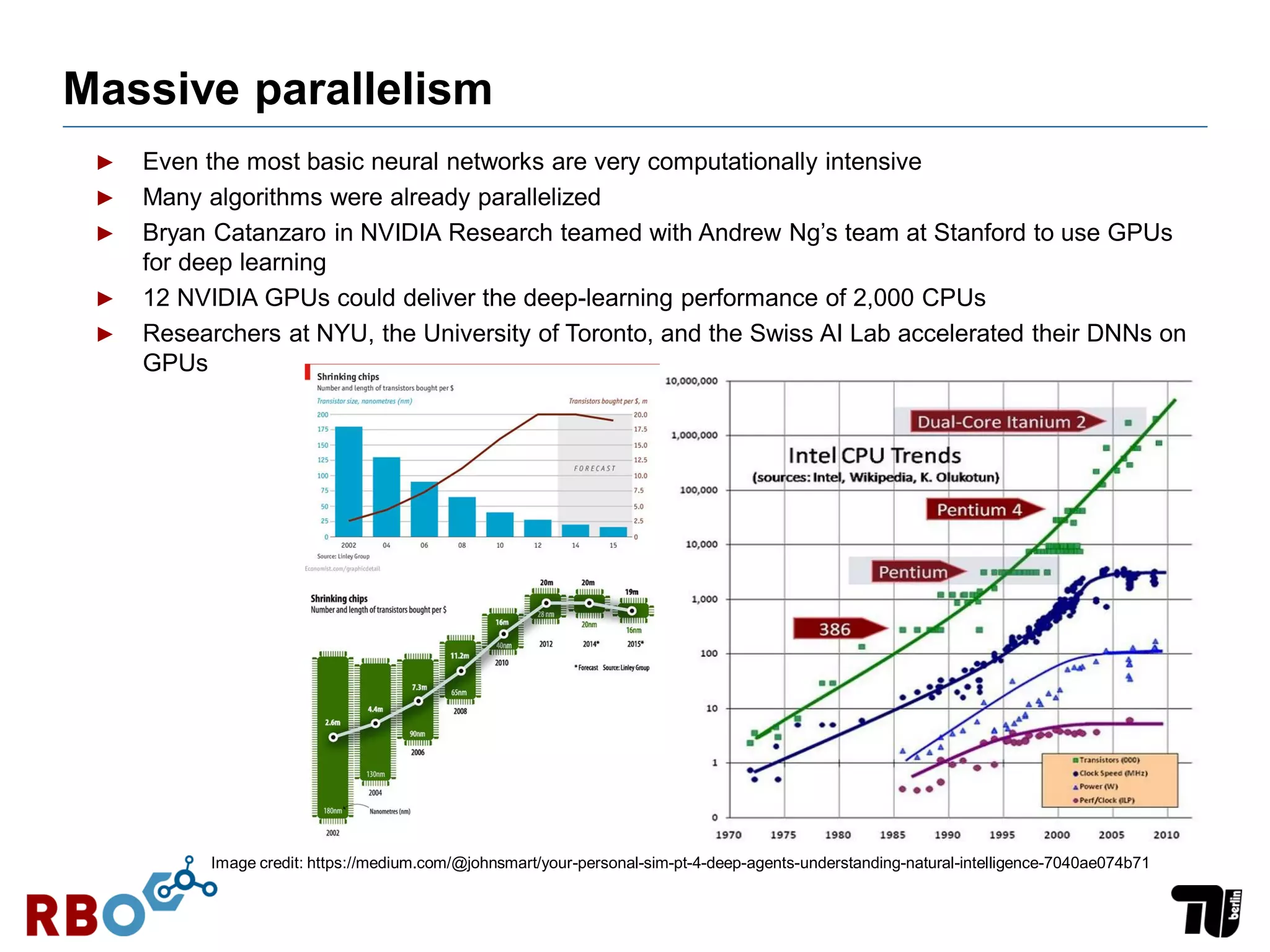

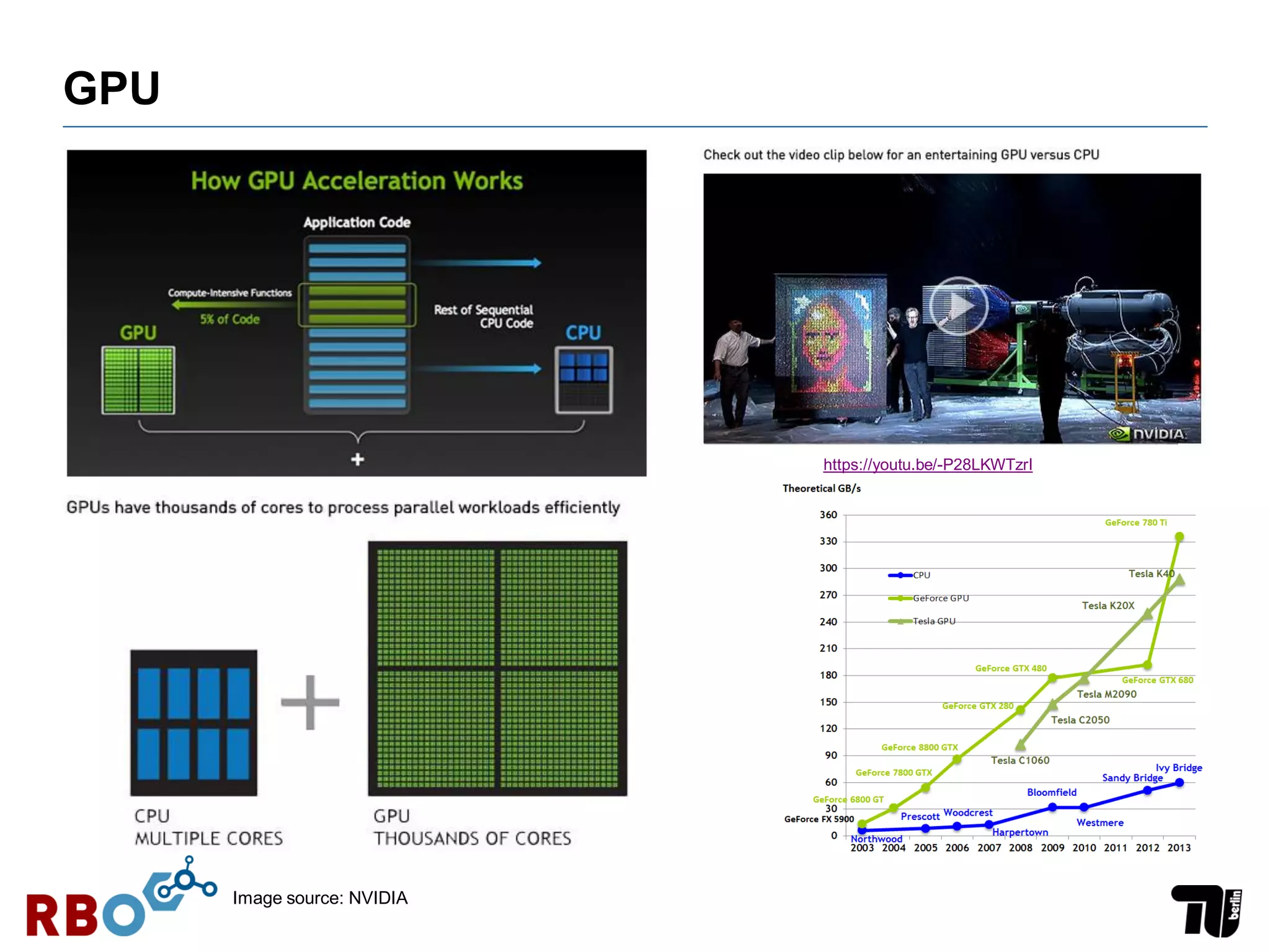

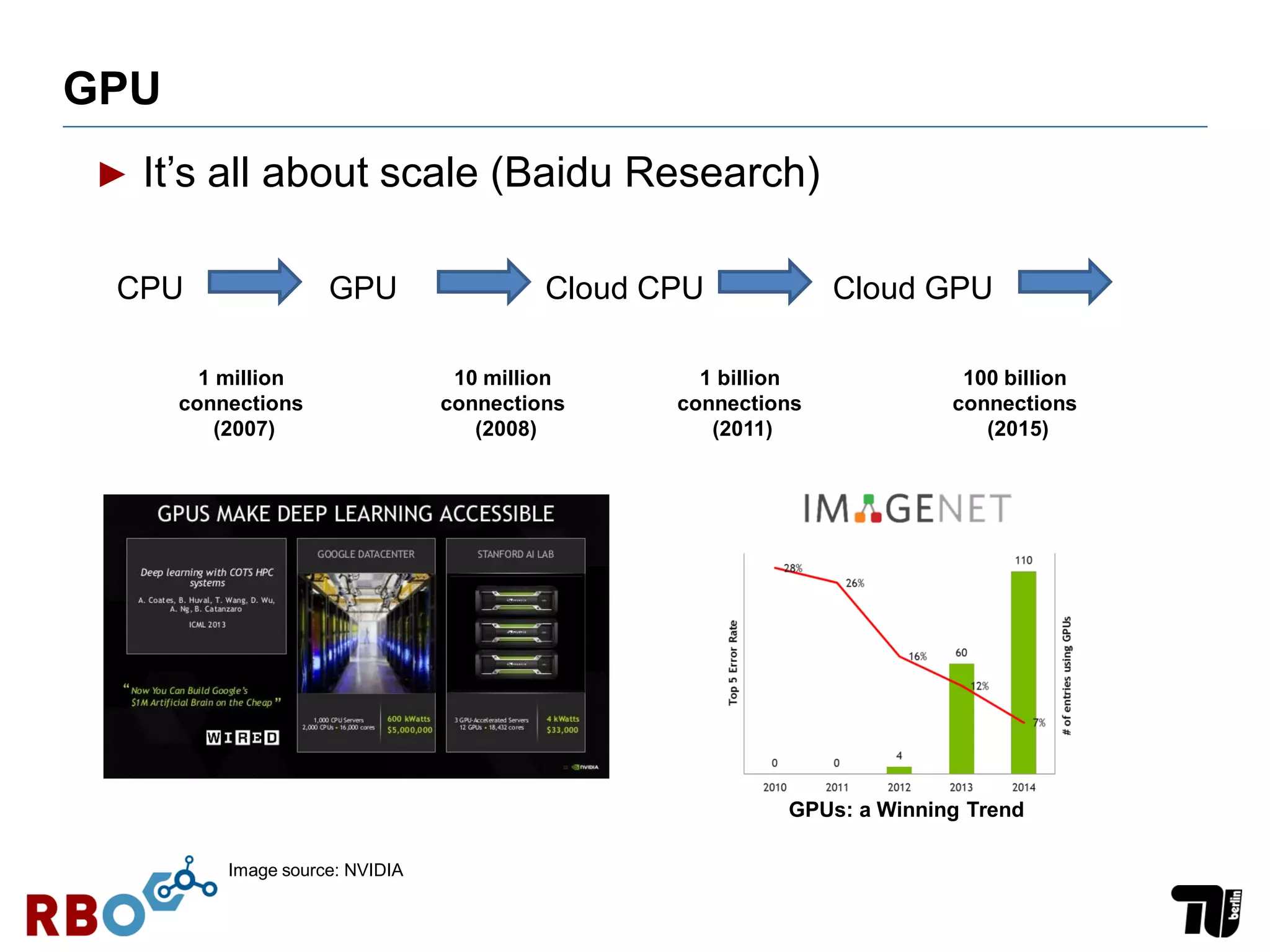

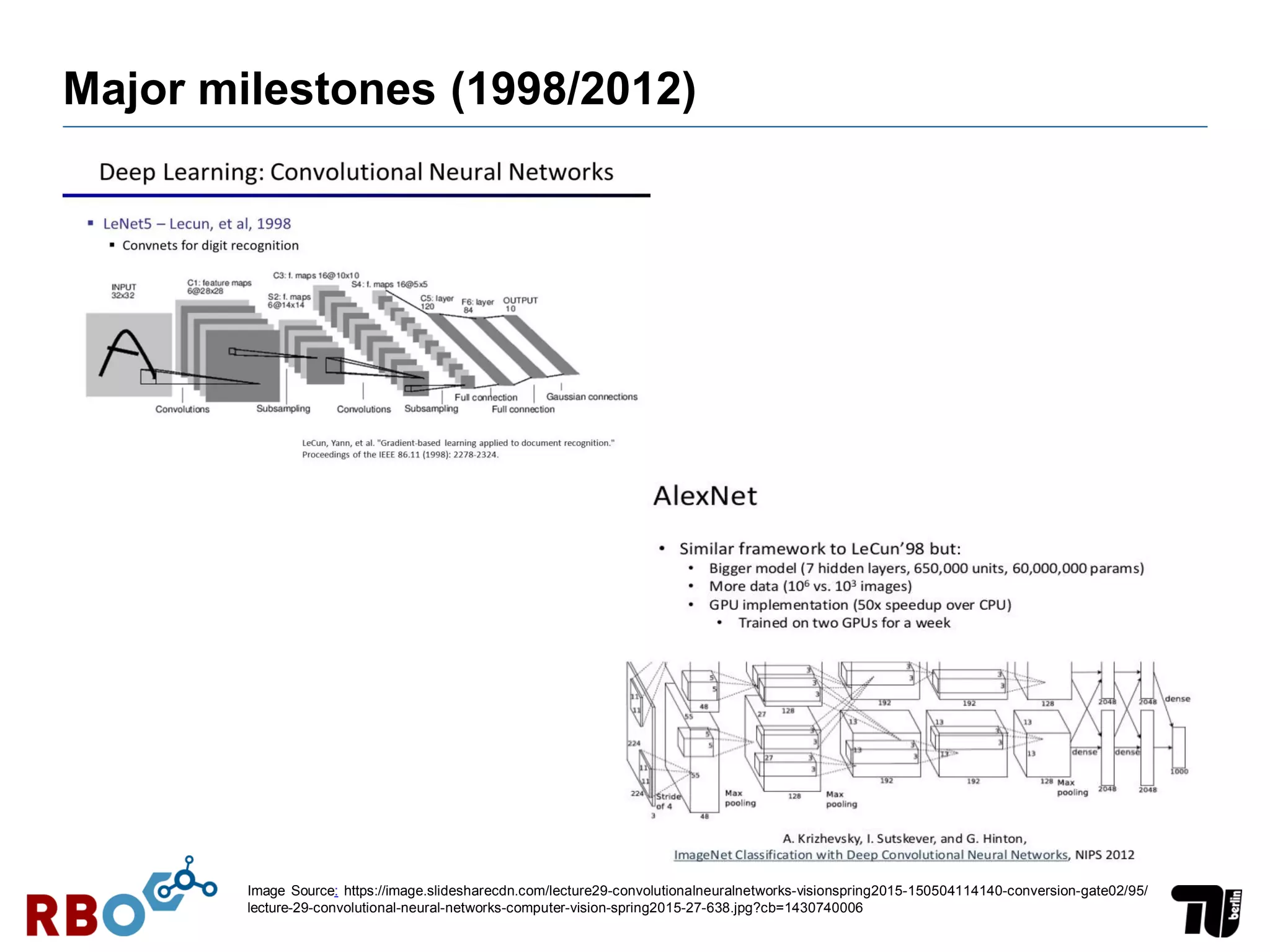

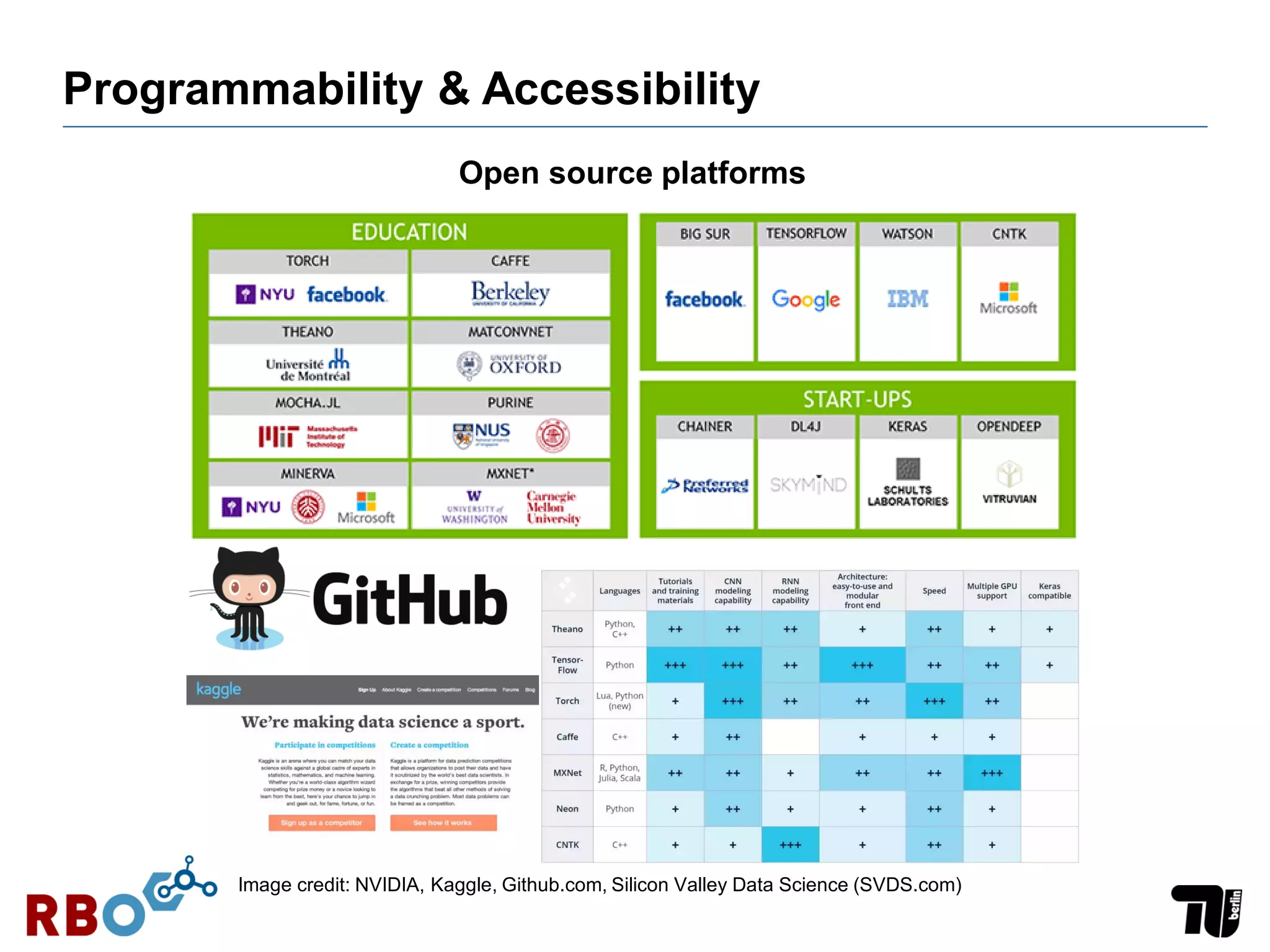

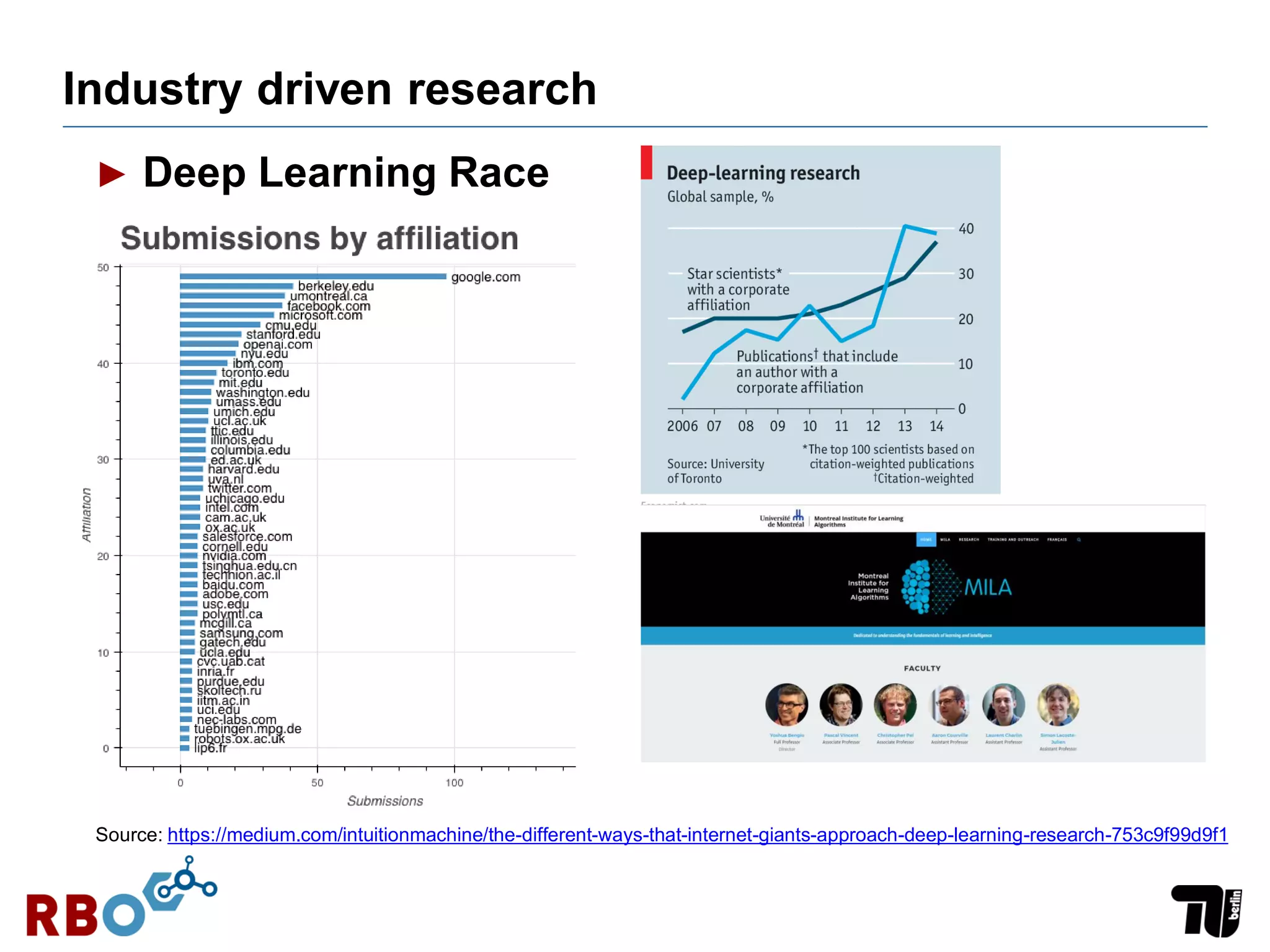

This document discusses the key factors behind the recent boom in deep learning. It identifies four major enablers: better neural network algorithms and techniques, large datasets, massive parallelization on GPUs, and industry investment. In particular, it highlights the availability of large labeled datasets such as ImageNet; developments in CNNs, autoencoders, and other neural network architectures; the use of GPUs for efficient parallel training; and large-scale research at tech companies such as Google as central to recent advances in deep learning.