The document is a tutorial on Torch7, covering installation, basic examples, tensor systems, notable packages like nn, optim, and dp, along with references and data handling techniques. It explains fundamental operations, tensor manipulation, neural network modeling, optimization methods, and command-line argument handling for various preprocessing and data sources. Additionally, it compares Torch7 with Theano, highlighting the ease of coding in C/CUDA as a reason for preference.

![BITensor System (1/6)

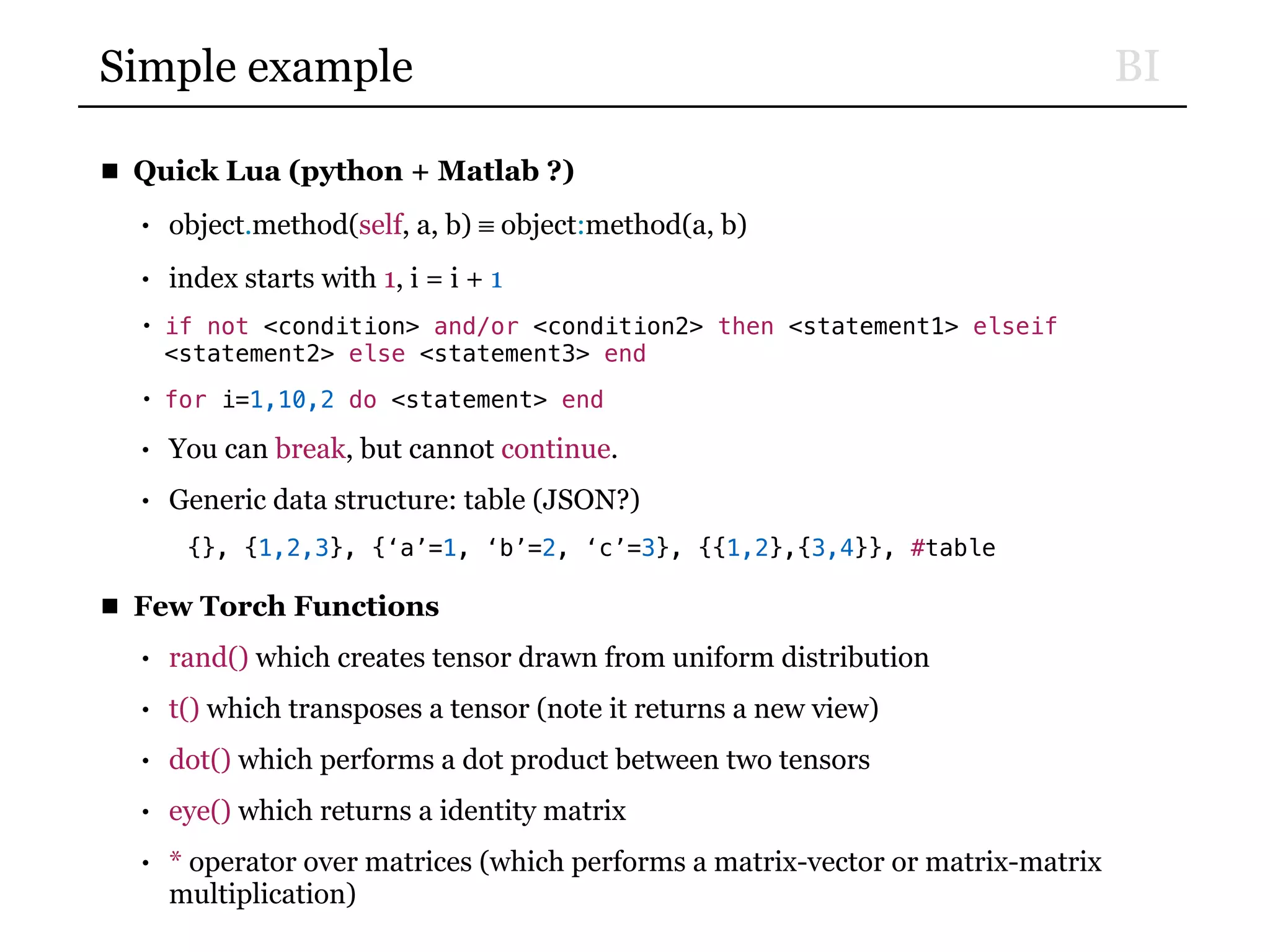

■ Fundamental data class, Tensor

• Handling numeric data

• Serializable (If you want, can save as a file.)

• Tensor interprets a chunk of memory as having dimensions.

■ Size

> x:nDimension()

6

> x:size() —- use x:size(dim) for a specific dimension

4

5

6

2

7

3

[torch.LongStorage of size 6]

■ Access

> x[3][4][5]](https://image.slidesharecdn.com/simplefastandscalabletorch7tutorial-150924024306-lva1-app6891/75/Simple-fast-and-scalable-torch7-tutorial-5-2048.jpg)

![BITensor System (2/6)

■ Memory Contiguous

• It’s a C style, not Fortran.

x = torch.Tensor(4,5)

i = 0

x:apply(function()

i = i + 1

return i

end)

> x

1 2 3 4 5

6 7 8 9 10

11 12 13 14 15

16 17 18 19 20

[torch.DoubleTensor of dimension 4x5]

> x:stride()

5

1 -- element in the last dimension are contiguous!

[torch.LongStorage of size 2]](https://image.slidesharecdn.com/simplefastandscalabletorch7tutorial-150924024306-lva1-app6891/75/Simple-fast-and-scalable-torch7-tutorial-6-2048.jpg)

![BITensor System (3/6)

■ Tensor Types

ByteTensor -- contains unsigned chars

CharTensor -- contains signed chars

ShortTensor -- contains shorts

IntTensor -- contains ints

FloatTensor -- contains floats

DoubleTensor -- contains doubles

■ Most numeric operations are implemented only for FloatTensor and

DoubleTensor (e.g. torch.histc()).

> torch.histc(torch.IntTensor(5))

[string "_RESULT={torch.histc(a)}"]:1: torch.IntTensor does not implement the

torch.histc() function

stack traceback:

[C]: in function 'histc'

[string "_RESULT={torch.histc(a)}"]:1: in main chunk

[C]: in function 'xpcall'

/Users/Calvin/torch/install/share/lua/5.1/trepl/init.lua:630: in function 'repl'

...lvin/torch/install/lib/luarocks/rocks/trepl/scm-1/bin/th:185: in main chunk

[C]: at 0x010e9422f0

torch.setdefaulttensortype(‘torch.FloatTensor')

a = torch.Tensor()

a:type()

a:size(dim)](https://image.slidesharecdn.com/simplefastandscalabletorch7tutorial-150924024306-lva1-app6891/75/Simple-fast-and-scalable-torch7-tutorial-7-2048.jpg)

![BITensor System (4/6)

■ Querying elements

> x[2][3] -- returns row 2, column 3

6

> x[{2,3}] -- another way to return row 2, column 3

6

> x[torch.LongStorage{2,3}] -- yet another way to return row 2, column 3

6

> x[torch.le(x,3)] -- torch.le returns a ByteTensor that acts as a mask

1

2

3

[torch.DoubleTensor of dimension 3]

■ Extracting sub-tensors

[self] narrow(dim, index, size)

[Tensor] sub(dim1s, dim1e ... [, dim4s [, dim4e]])

[Tensor] select(dim, index)

or just using operator [] …](https://image.slidesharecdn.com/simplefastandscalabletorch7tutorial-150924024306-lva1-app6891/75/Simple-fast-and-scalable-torch7-tutorial-8-2048.jpg)

![BITensor System (5/6)

■ Indexing operator []

x = torch.Tensor(5, 6):zero()

> x

0 0 0 0 0 0

0 0 0 0 0 0

0 0 0 0 0 0

0 0 0 0 0 0

0 0 0 0 0 0

[torch.DoubleTensor of dimension 5x6]

x[{ 1,3 }] = 1 -- sets element at

> x (i=1,j=3) to 1

0 0 1 0 0 0

0 0 0 0 0 0

0 0 0 0 0 0

0 0 0 0 0 0

0 0 0 0 0 0

[torch.DoubleTensor of dimension 5x6]

x[{ 2,{2,4} }] = 2 -- sets a slice

> x of 3 elements to 2

0 0 1 0 0 0

0 2 2 2 0 0

0 0 0 0 0 0

0 0 0 0 0 0

0 0 0 0 0 0

[torch.DoubleTensor of dimension 5x6]

x[{ {},4 }] = -1 -- sets the full 4th column to -1

> x

0 0 1 -1 0 0

0 2 2 -1 0 0

0 0 0 -1 0 0

0 0 0 -1 0 0

0 0 0 -1 0 0

[torch.DoubleTensor of dimension 5x6]

x[{ {},2 }] = torch.range(1,5) -- copy a 1D tensor

> x to a slice of x

0 1 1 -1 0 0

0 2 2 -1 0 0

0 3 0 -1 0 0

0 4 0 -1 0 0

0 5 0 -1 0 0

[torch.DoubleTensor of dimension 5x6]

x[torch.lt(x,0)] = -2 -- sets all negative elements

> x to -2 via a bytetensor mask

0 1 1 -2 0 0

0 2 2 -2 0 0

0 3 0 -2 0 0

0 4 0 -2 0 0

0 5 0 -2 0 0

[torch.DoubleTensor of dimension 5x6]](https://image.slidesharecdn.com/simplefastandscalabletorch7tutorial-150924024306-lva1-app6891/75/Simple-fast-and-scalable-torch7-tutorial-9-2048.jpg)

![BITensor System (6/6)

■ And so on …

• See how many functions are the same you’ve used in Matlab!

⋮

[Tensor] gather(dim, index)

[LongTensor] nonzero(tensor)

[result] expand([result,] sizes)

[Tensor] repeatTensor([result,] sizes)

[Tensor] squeeze([dim])

[Tensor] transpose(dim1, dim2)

[Tensor] permute(dim1, dim2, ..., dimn)

[Tensor] unfold(dim, size, step)

⋮](https://image.slidesharecdn.com/simplefastandscalabletorch7tutorial-150924024306-lva1-app6891/75/Simple-fast-and-scalable-torch7-tutorial-10-2048.jpg)

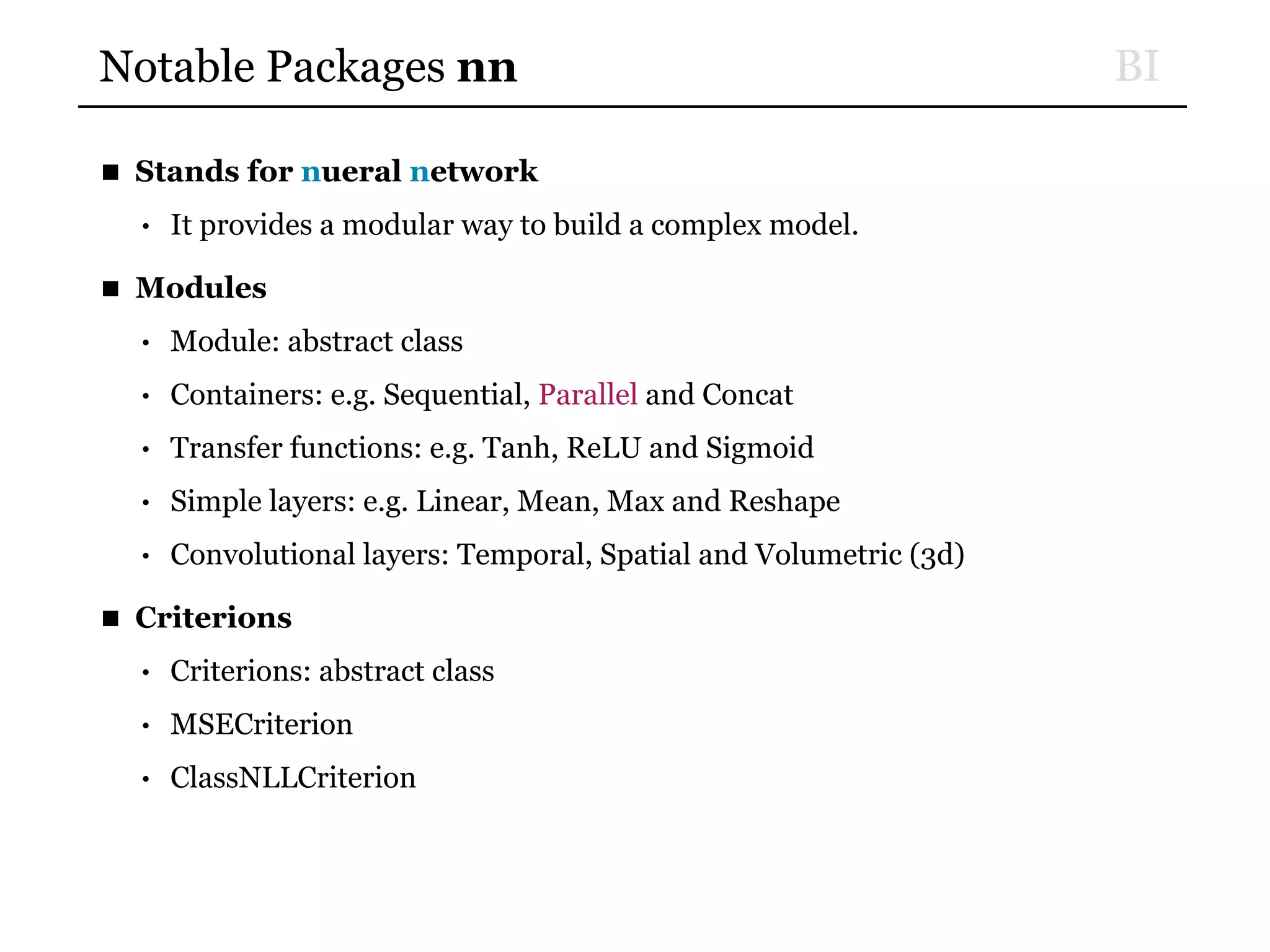

![BINotable Packages nn

■ nn example of manual training (though you gonna not using this)

• Model definition

require "nn"

mlp = nn.Sequential(); -- make a multi-layer perceptron

inputs = 2; outputs = 1; HUs = 20; -- parameters

mlp:add(nn.Linear(inputs, HUs))

mlp:add(nn.Tanh())

mlp:add(nn.Linear(HUs, outputs))

• Loss function

criterion = nn.MSECriterion()

• Training

for i = 1,2500 do

-- random sample

local input= torch.randn(2); -- normally distributed example in 2d

local output= torch.Tensor(1);

if input[1]*input[2] > 0 then -- calculate label for XOR function

output[1] = -1

else output[1] = 1 end

-- feed it to the neural network and the criterion

criterion:forward(mlp:forward(input), output) —- test using mlp:forward(input)

-- train over this example in 3 steps

-- (1) zero the accumulation of the gradients

mlp:zeroGradParameters()

-- (2) accumulate gradients

mlp:backward(input, criterion:backward(mlp.output, output))

-- (3) update parameters with a 0.01 learning rate

mlp:updateParameters(0.01)

end](https://image.slidesharecdn.com/simplefastandscalabletorch7tutorial-150924024306-lva1-app6891/75/Simple-fast-and-scalable-torch7-tutorial-12-2048.jpg)

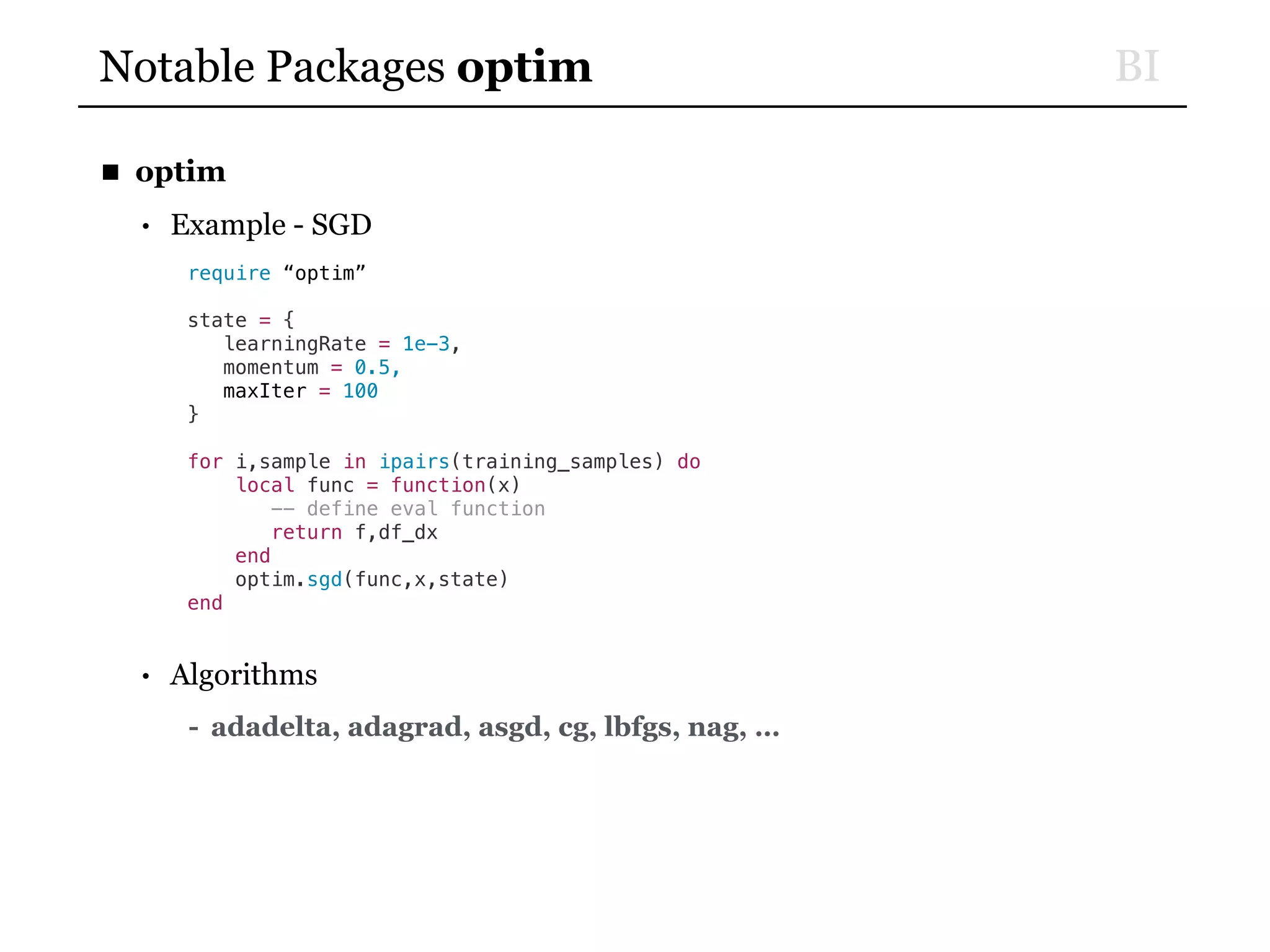

![BINotable Packages dp

■ Command-line Arguments

--[[command line arguments]]--

cmd = torch.CmdLine()

cmd:text()

cmd:text('Image Classification using MLP Training/Optimization')

cmd:text('Example:')

cmd:text('$> th neuralnetwork.lua --batchSize 128 --momentum 0.5')

cmd:text('Options:')

cmd:option('--learningRate', 0.1, 'learning rate at t=0')

cmd:option('--schedule', '{}', 'learning rate schedule')

cmd:option('--hiddenSize', '{200,200}', 'number of hidden units per layer')

⋮

cmd:option('--batchSize', 32, 'number of examples per batch')

cmd:option('--cuda', false, 'use CUDA')

cmd:option('--useDevice', 1, 'sets the device (GPU) to use')

cmd:option('--maxEpoch', 100, 'maximum number of epochs to run')

cmd:option('--dropout', false, 'apply dropout on hidden neurons')

cmd:option('--batchNorm', false, 'use batch normalization. dropout is mostly redundant with this')

cmd:option('--dataset', 'Mnist', 'which dataset to use : Mnist | NotMnist | Cifar10 | Cifar100')

cmd:option('--standardize', false, 'apply Standardize preprocessing')

cmd:option('--zca', false, 'apply Zero-Component Analysis whitening')

cmd:option('--progress', false, 'display progress bar')

cmd:option('--silent', false, 'dont print anything to stdout')

cmd:text()

opt = cmd:parse(arg or {})

opt.schedule = dp.returnString(opt.schedule)

opt.hiddenSize = dp.returnString(opt.hiddenSize)

if not opt.silent then

table.print(opt)

end](https://image.slidesharecdn.com/simplefastandscalabletorch7tutorial-150924024306-lva1-app6891/75/Simple-fast-and-scalable-torch7-tutorial-14-2048.jpg)

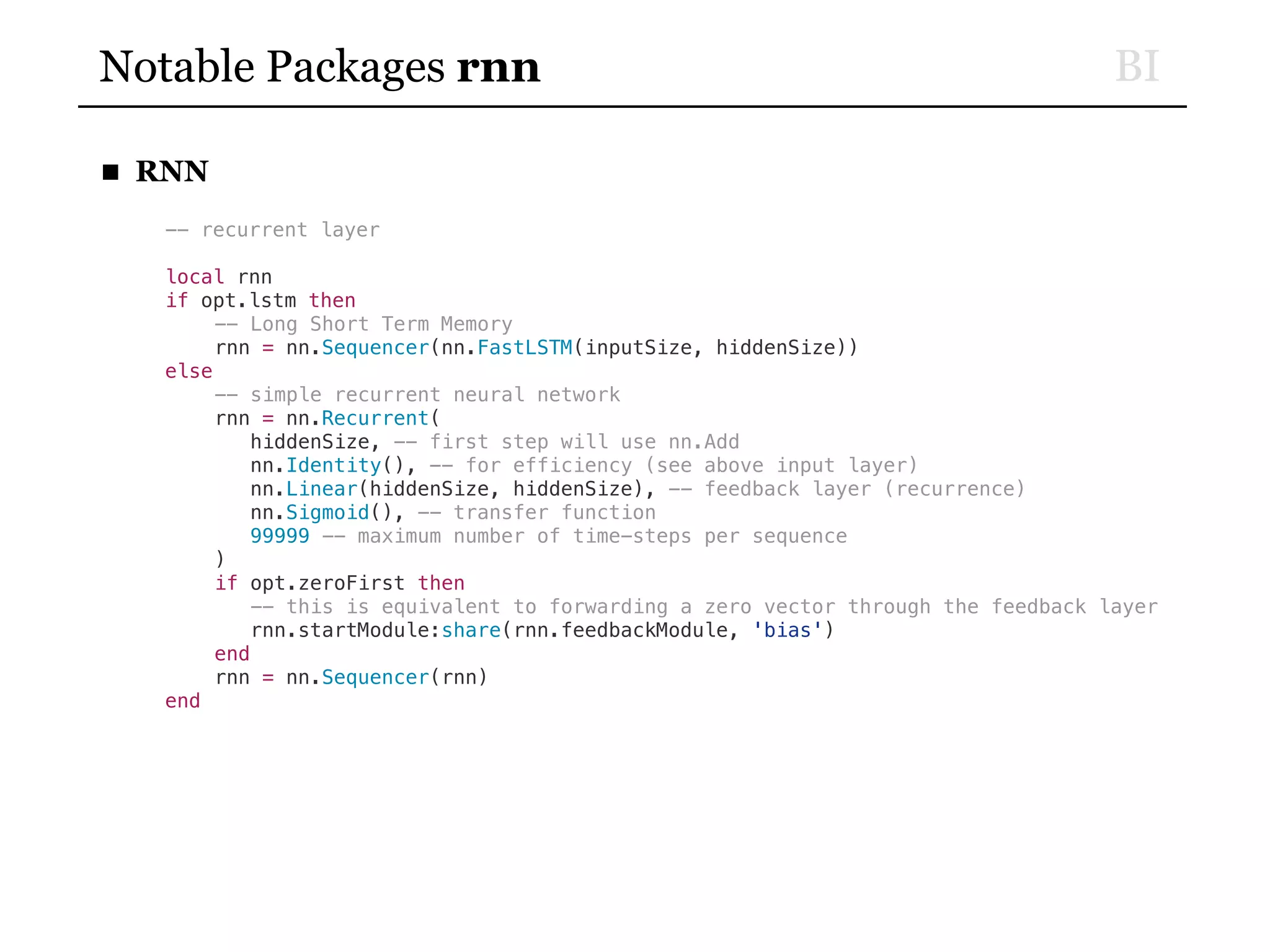

![BINotable Packages dp

■ Preprocess

--[[preprocessing]]--

local input_preprocess = {}

if opt.standardize then

table.insert(input_preprocess, dp.Standardize())

end

if opt.zca then

table.insert(input_preprocess, dp.ZCA())

end

if opt.lecunlcn then

table.insert(input_preprocess, dp.GCN())

table.insert(input_preprocess, dp.LeCunLCN{progress=true})

end

■ DataSource

--[[data]]--

if opt.dataset == 'Mnist' then

ds = dp.Mnist{input_preprocess = input_preprocess}

elseif opt.dataset == 'NotMnist' then

ds = dp.NotMnist{input_preprocess = input_preprocess}

elseif opt.dataset == 'Cifar10' then

ds = dp.Cifar10{input_preprocess = input_preprocess}

elseif opt.dataset == 'Cifar100' then

ds = dp.Cifar100{input_preprocess = input_preprocess}

else

error("Unknown Dataset")

end](https://image.slidesharecdn.com/simplefastandscalabletorch7tutorial-150924024306-lva1-app6891/75/Simple-fast-and-scalable-torch7-tutorial-15-2048.jpg)

![BINotable Packages dp

■ Model of Modules

--[[Model]]--

model = nn.Sequential()

model:add(nn.Convert(ds:ioShapes(), 'bf')) -- to batchSize x nFeature (also type converts)

-- hidden layers

inputSize = ds:featureSize()

for i,hiddenSize in ipairs(opt.hiddenSize) do

model:add(nn.Linear(inputSize, hiddenSize)) -- parameters

if opt.batchNorm then

model:add(nn.BatchNormalization(hiddenSize))

end

model:add(nn.Tanh())

if opt.dropout then

model:add(nn.Dropout())

end

inputSize = hiddenSize

end

-- output layer

model:add(nn.Linear(inputSize, #(ds:classes())))

model:add(nn.LogSoftMax())](https://image.slidesharecdn.com/simplefastandscalabletorch7tutorial-150924024306-lva1-app6891/75/Simple-fast-and-scalable-torch7-tutorial-16-2048.jpg)

![BINotable Packages dp

■ Propagator

--[[Propagators]]--

if opt.lrDecay == 'adaptive' then

ad = dp.AdaptiveDecay{max_wait = opt.maxWait, decay_factor=opt.decayFactor}

elseif opt.lrDecay == 'linear' then

opt.decayFactor = (opt.minLR - opt.learningRate)/opt.saturateEpoch

end

train = dp.Optimizer{

acc_update = opt.accUpdate, loss = nn.ModuleCriterion(nn.ClassNLLCriterion(), nil, nn.Convert()),

epoch_callback = function(model, report) -- called every epoch

-- learning rate decay

if report.epoch > 0 then

if opt.lrDecay == 'adaptive' then

opt.learningRate = opt.learningRate*ad.decay

ad.decay = 1

elseif opt.lrDecay == 'schedule' and opt.schedule[report.epoch] then

opt.learningRate = opt.schedule[report.epoch]

elseif opt.lrDecay == 'linear' then

opt.learningRate = opt.learningRate + opt.decayFactor

end

opt.learningRate = math.max(opt.minLR, opt.learningRate)

if not opt.silent then

print("learningRate", opt.learningRate)

end

end

end,

callback = function(model, report) -- called every batch

if opt.accUpdate then

model:accUpdateGradParameters(model.dpnn_input, model.output, opt.learningRate)

else

model:updateGradParameters(opt.momentum) -- affects gradParams

model:updateParameters(opt.learningRate) -- affects params

end

model:maxParamNorm(opt.maxOutNorm) -- affects params

model:zeroGradParameters() -- affects gradParams

end,

feedback = dp.Confusion(), sampler = dp.ShuffleSampler{batch_size = opt.batchSize}, progress = opt.progress

}

valid = dp.Evaluator{

feedback = dp.Confusion(), sampler = dp.Sampler{batch_size = opt.batchSize}

}

test = dp.Evaluator{

feedback = dp.Confusion(), sampler = dp.Sampler{batch_size = opt.batchSize}

}](https://image.slidesharecdn.com/simplefastandscalabletorch7tutorial-150924024306-lva1-app6891/75/Simple-fast-and-scalable-torch7-tutorial-17-2048.jpg)

![BINotable Packages dp

■ Experiment

--[[Experiment]]--

xp = dp.Experiment{

model = model,

optimizer = train,

validator = valid,

tester = test,

observer = {

dp.FileLogger(),

dp.EarlyStopper{

error_report = {'validator','feedback','confusion','accuracy'},

maximize = true,

max_epochs = opt.maxTries

}

},

random_seed = os.time(),

max_epoch = opt.maxEpoch

}](https://image.slidesharecdn.com/simplefastandscalabletorch7tutorial-150924024306-lva1-app6891/75/Simple-fast-and-scalable-torch7-tutorial-18-2048.jpg)

![BINotable Packages dp

■ Running the Experiment

--[[GPU or CPU]]--

if opt.cuda then

require 'cutorch'

require 'cunn'

cutorch.setDevice(opt.useDevice)

xp:cuda()

end

--[[Experiment]]--

xp:run(ds)

■ Loading the saved Experiment

require 'dp'

require 'cuda' -- if you used cmd-line argument --cuda

xp = torch.load("/home/nicholas/save/xps:1432747515:1.dat")

model = xp:model()

print(torch.type(model))

nn.Serial

model = model.module

print(torch.type(model))

nn.Sequential](https://image.slidesharecdn.com/simplefastandscalabletorch7tutorial-150924024306-lva1-app6891/75/Simple-fast-and-scalable-torch7-tutorial-19-2048.jpg)

![BIQ2: Is the # of dimmensions unlimited?

■ Torch7 supports an unlimited multi-dimmensional Tensor matrix.

• The number of dimensions is unlimited that can be created using

LongStorage with more dimensions.

--- creation of a 4D-tensor 4x5x6x2

z = torch.Tensor(4,5,6,2)

--- for more dimensions, (here a 6D tensor) one can do:

s = torch.LongStorage(6)

--- assigning lengths for each dimension, not values

s[1] = 4; s[2] = 5; s[3] = 6; s[4] = 2; s[5] = 7; s[6] = 3;

x = torch.Tensor(s)

> x:nDimension()

6

> x:size()

4

5

6

2

7

3

[torch.LongStorage of size 6]](https://image.slidesharecdn.com/simplefastandscalabletorch7tutorial-150924024306-lva1-app6891/75/Simple-fast-and-scalable-torch7-tutorial-24-2048.jpg)