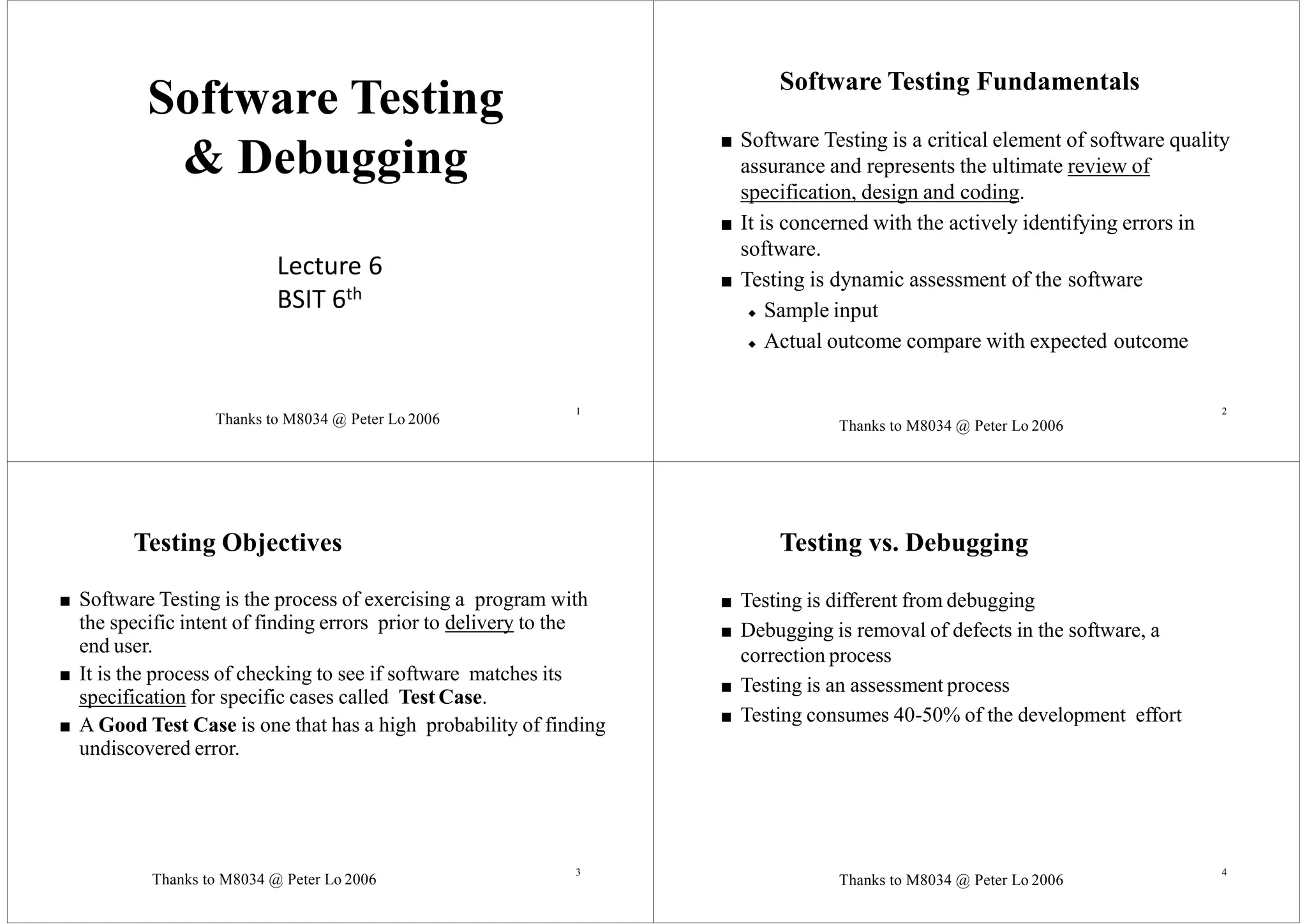

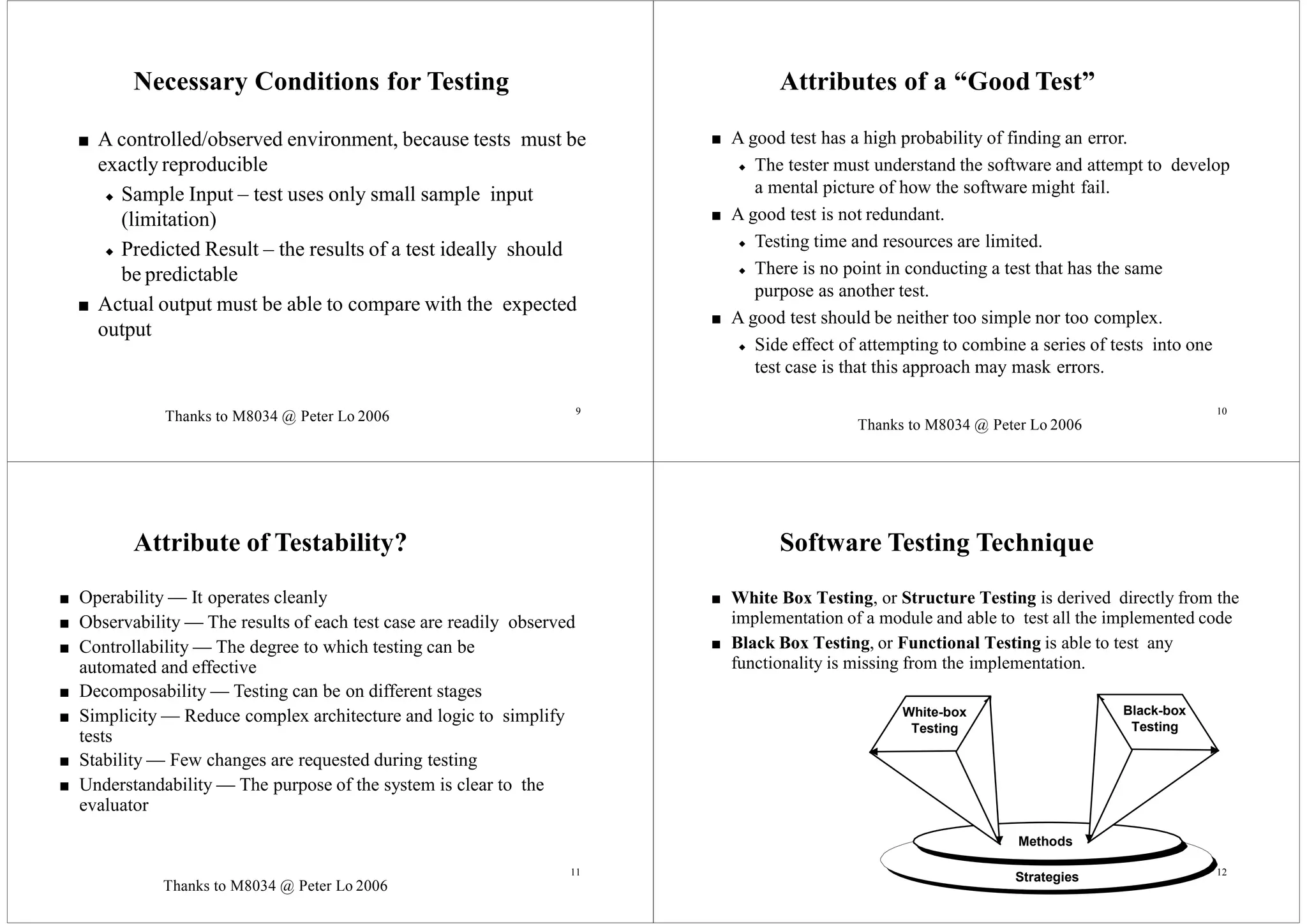

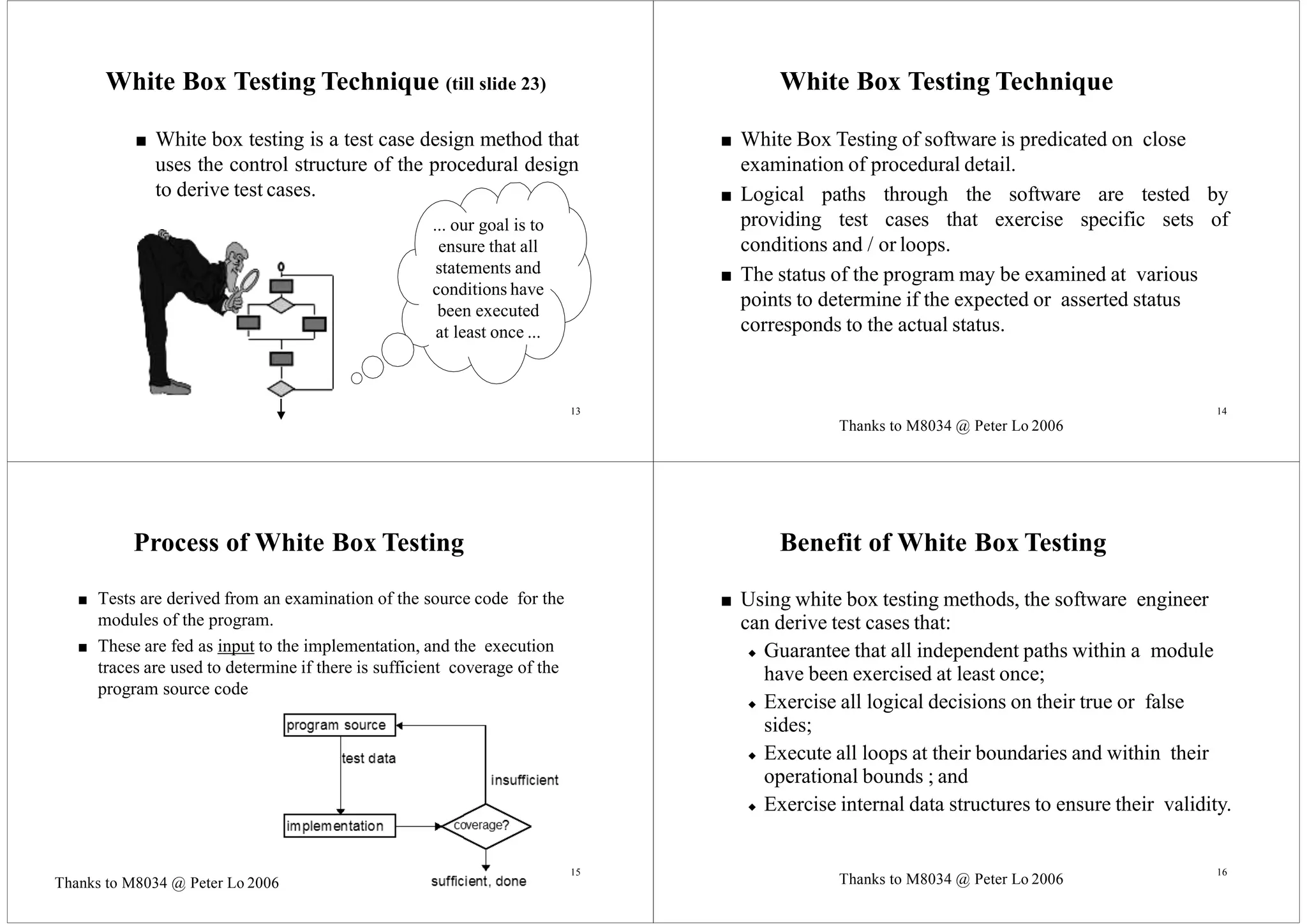

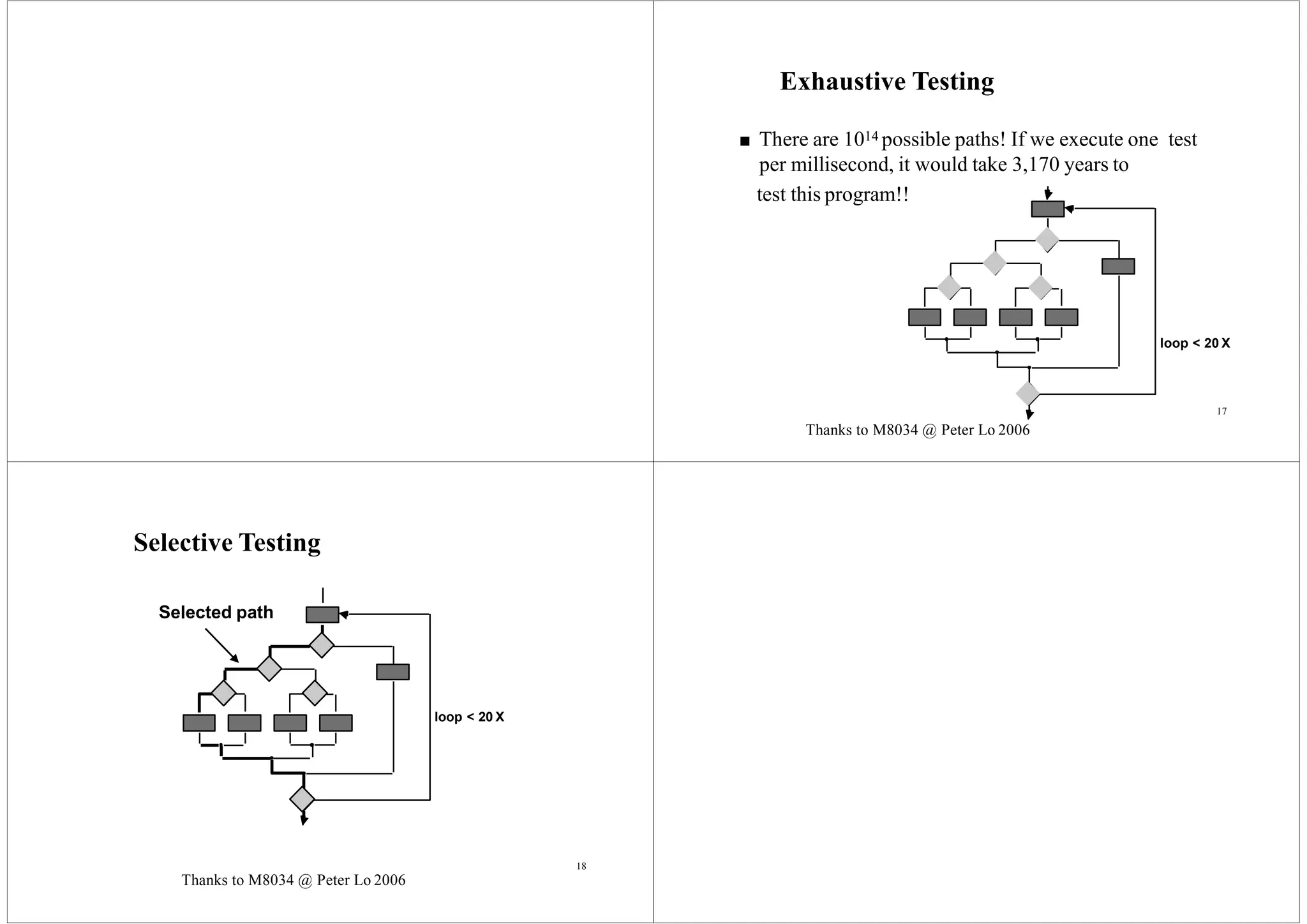

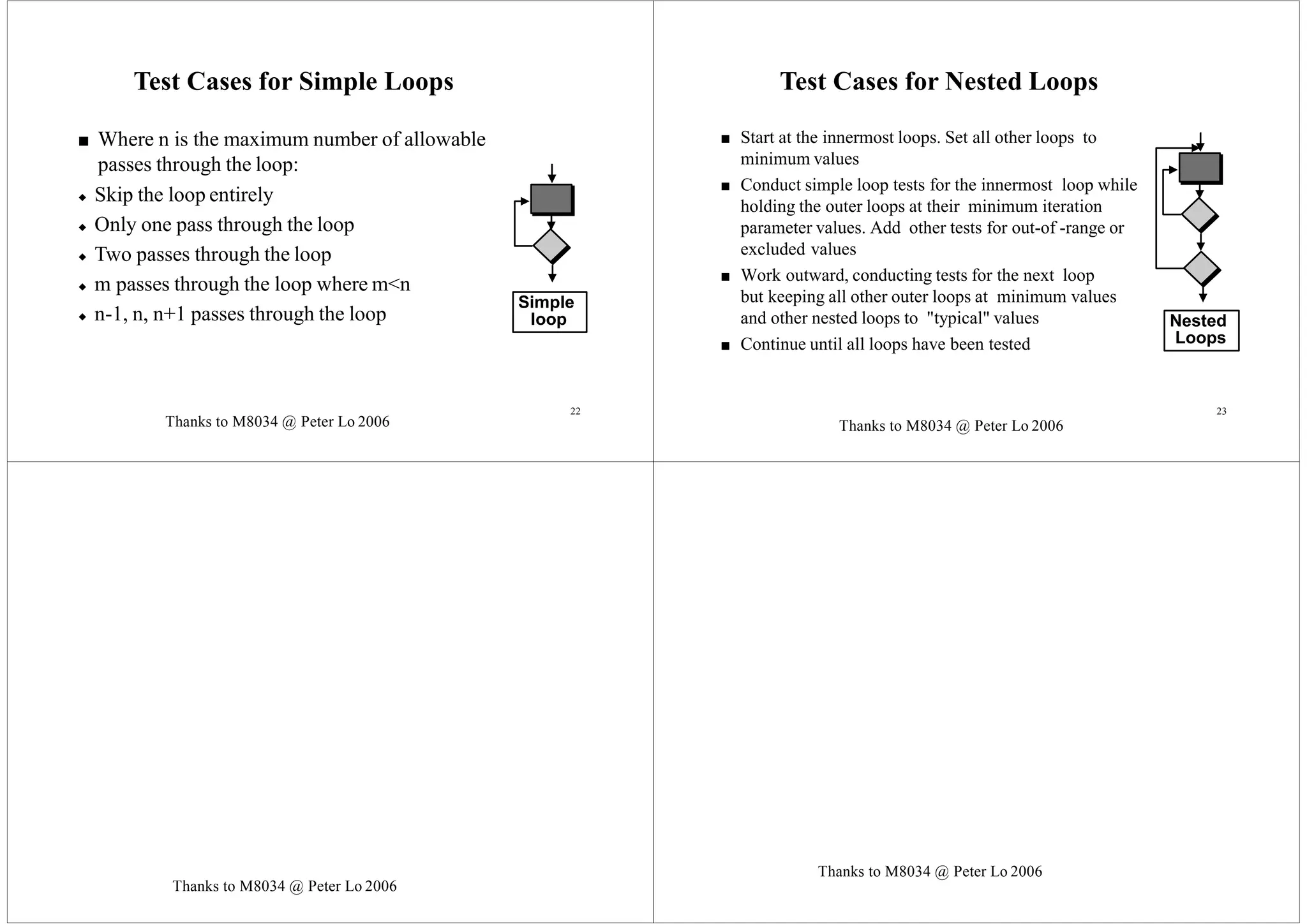

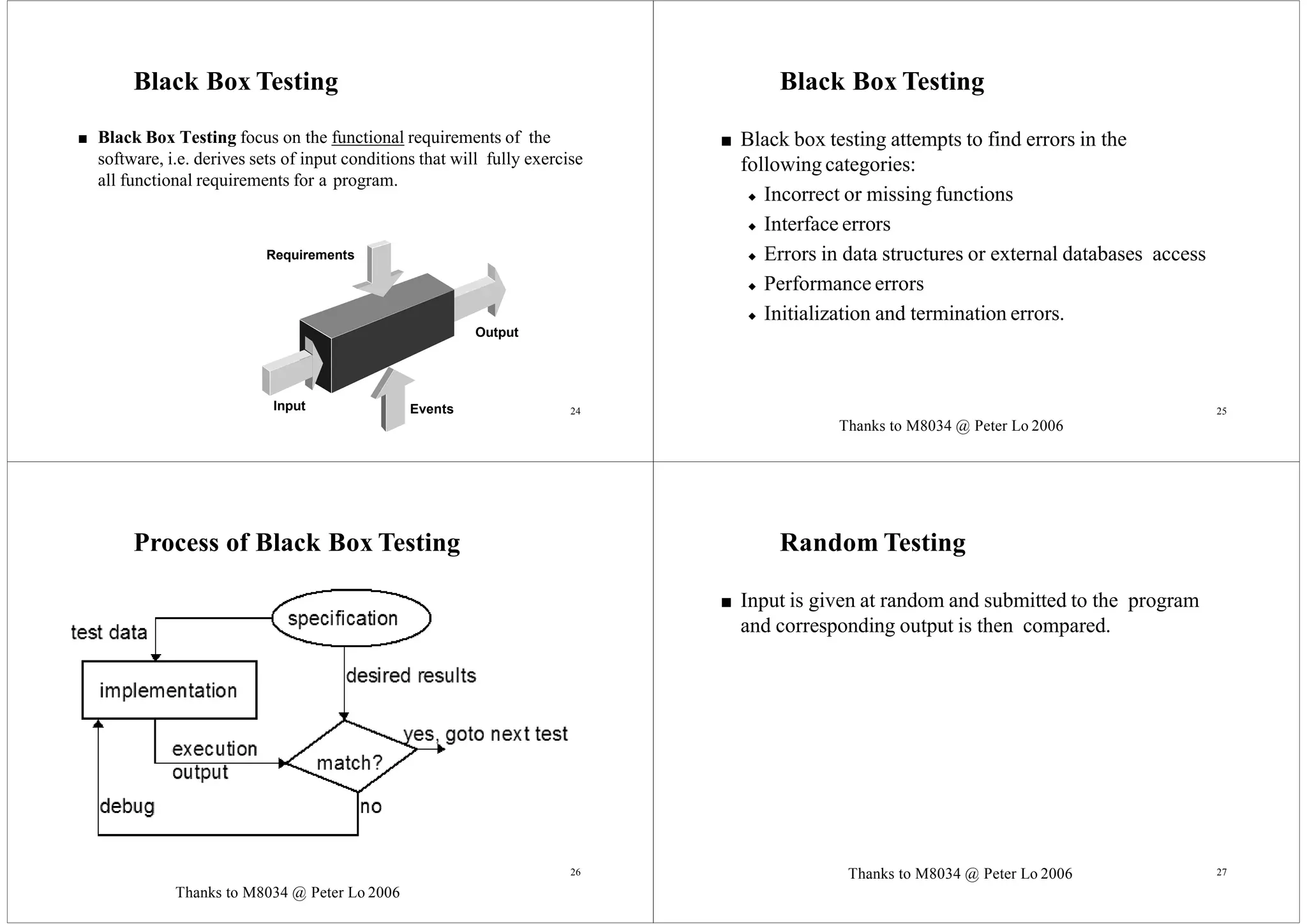

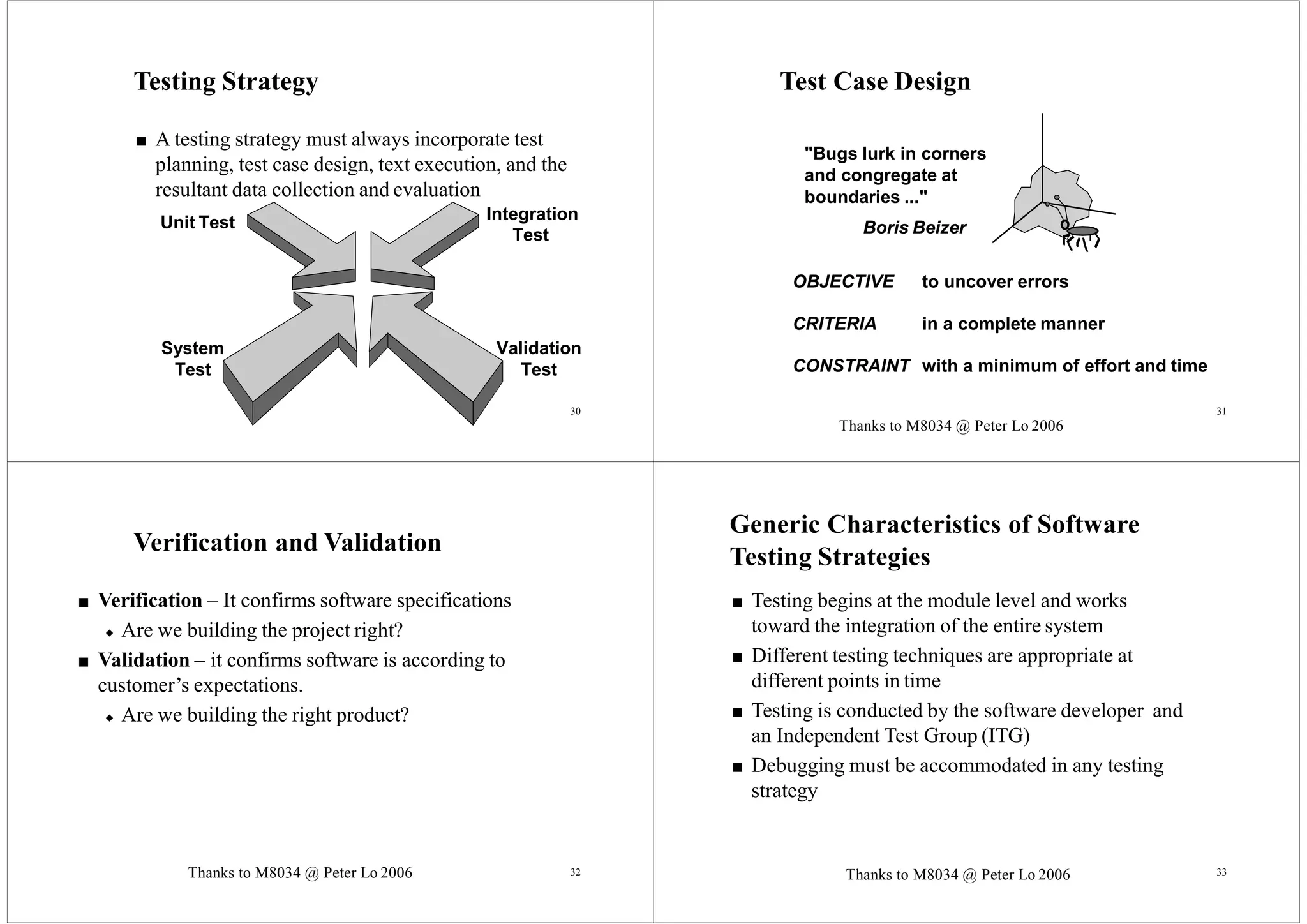

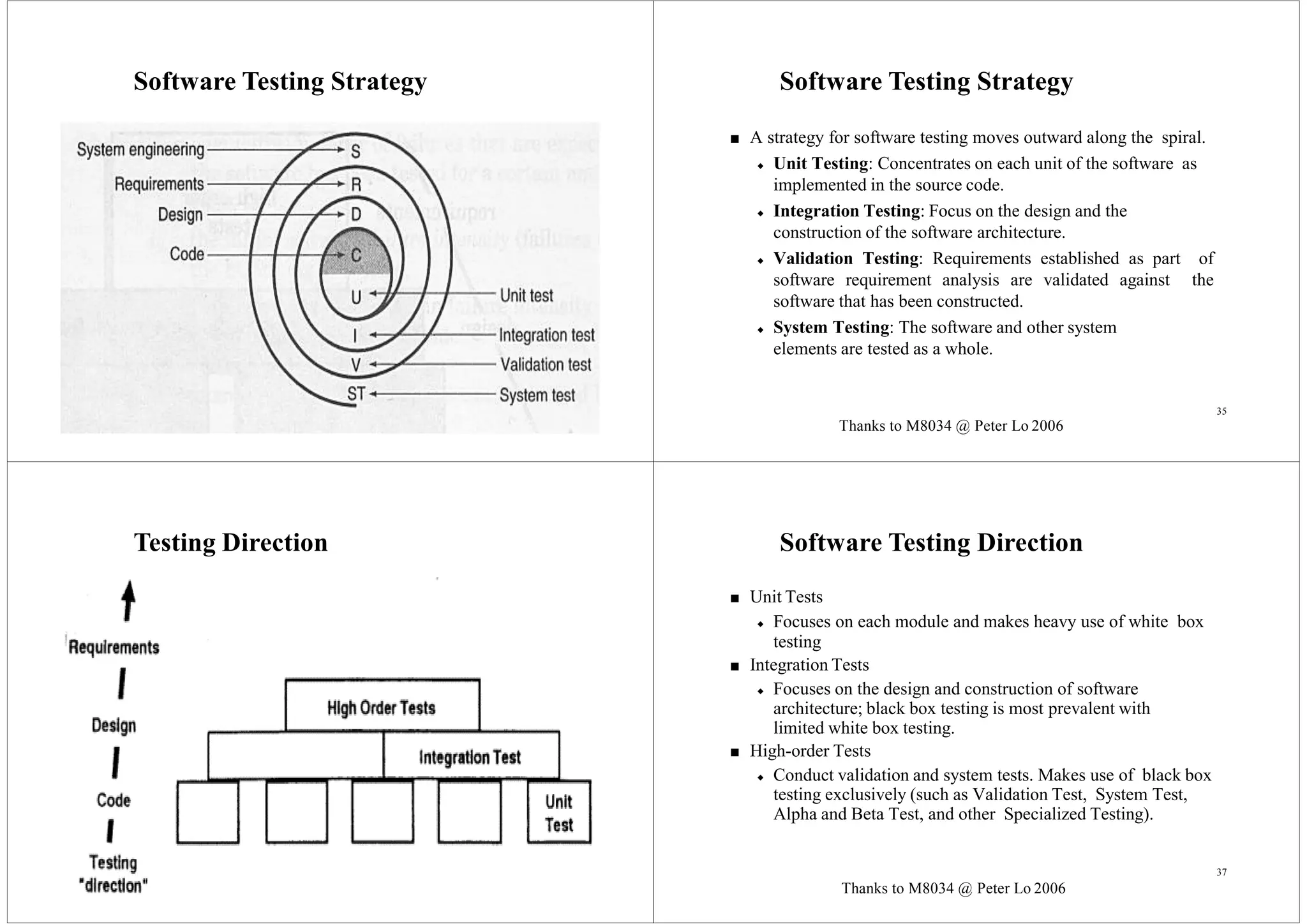

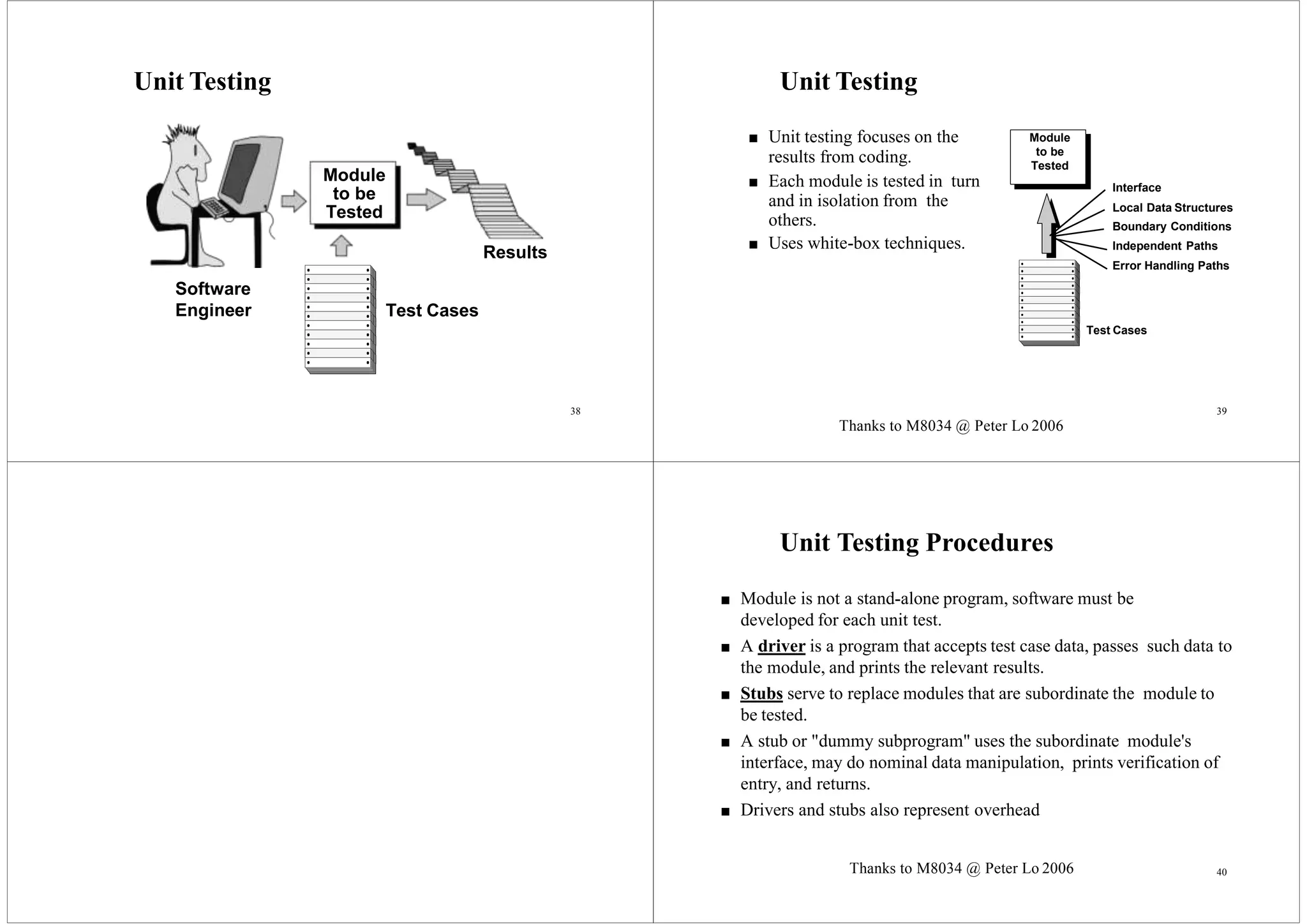

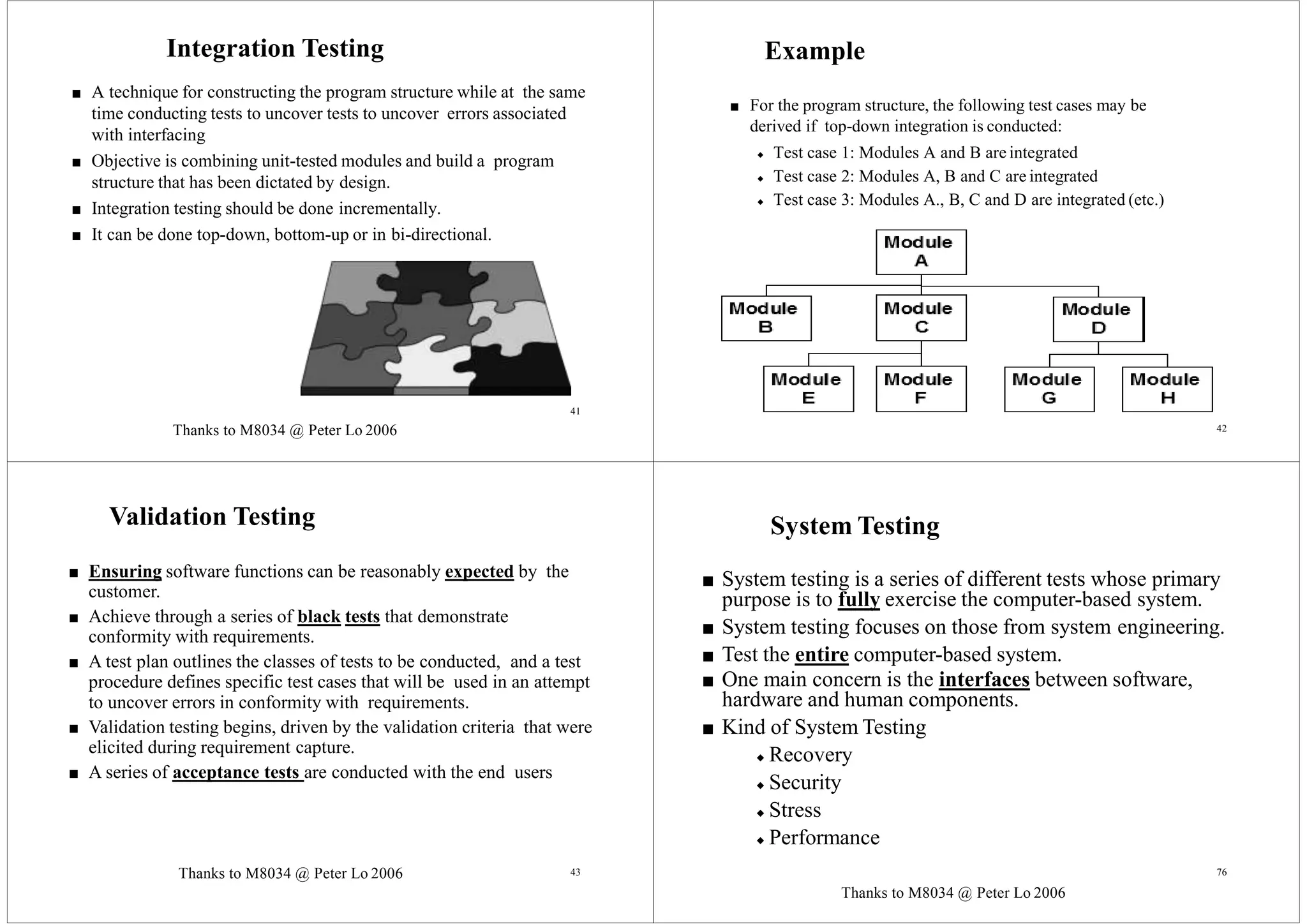

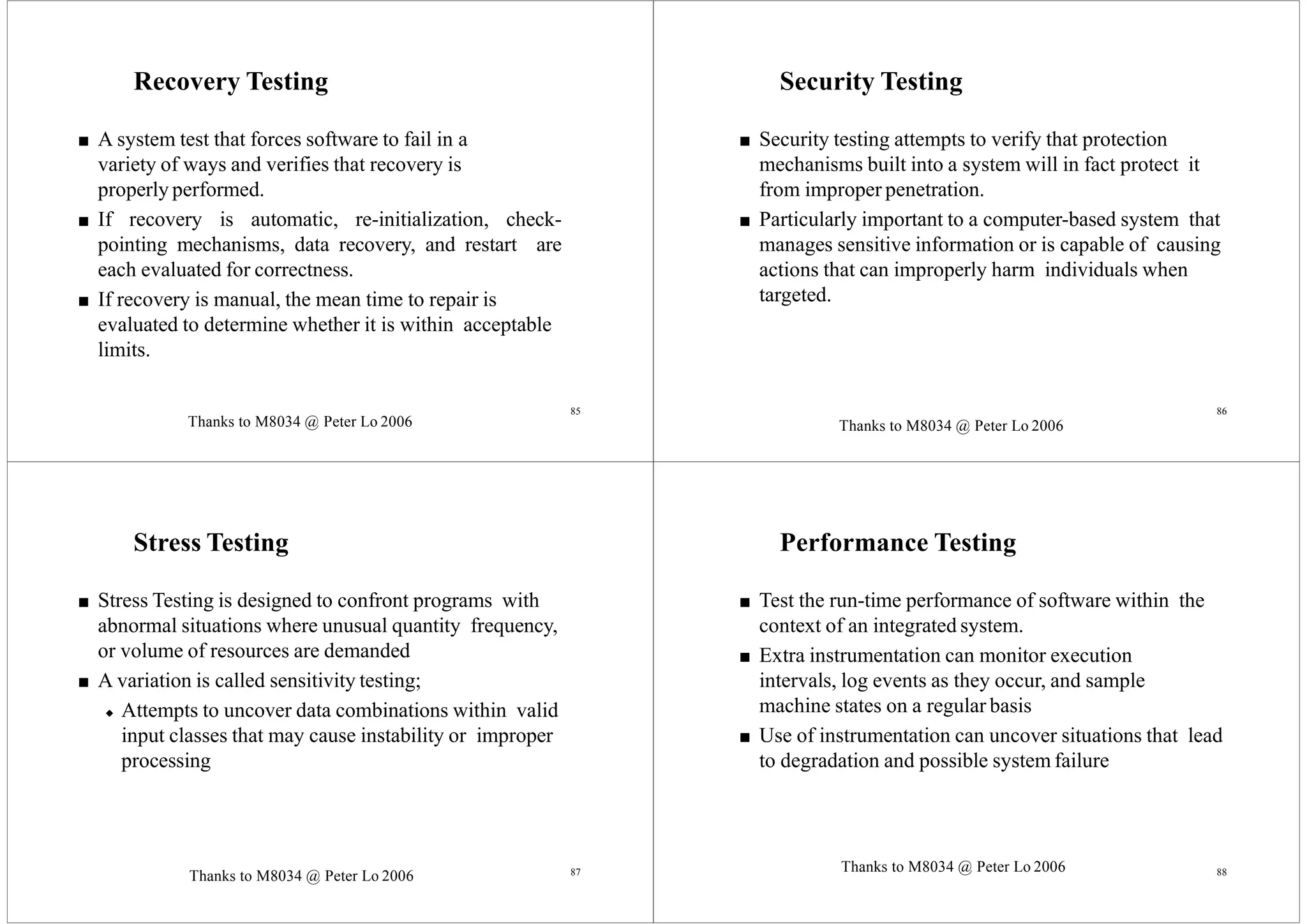

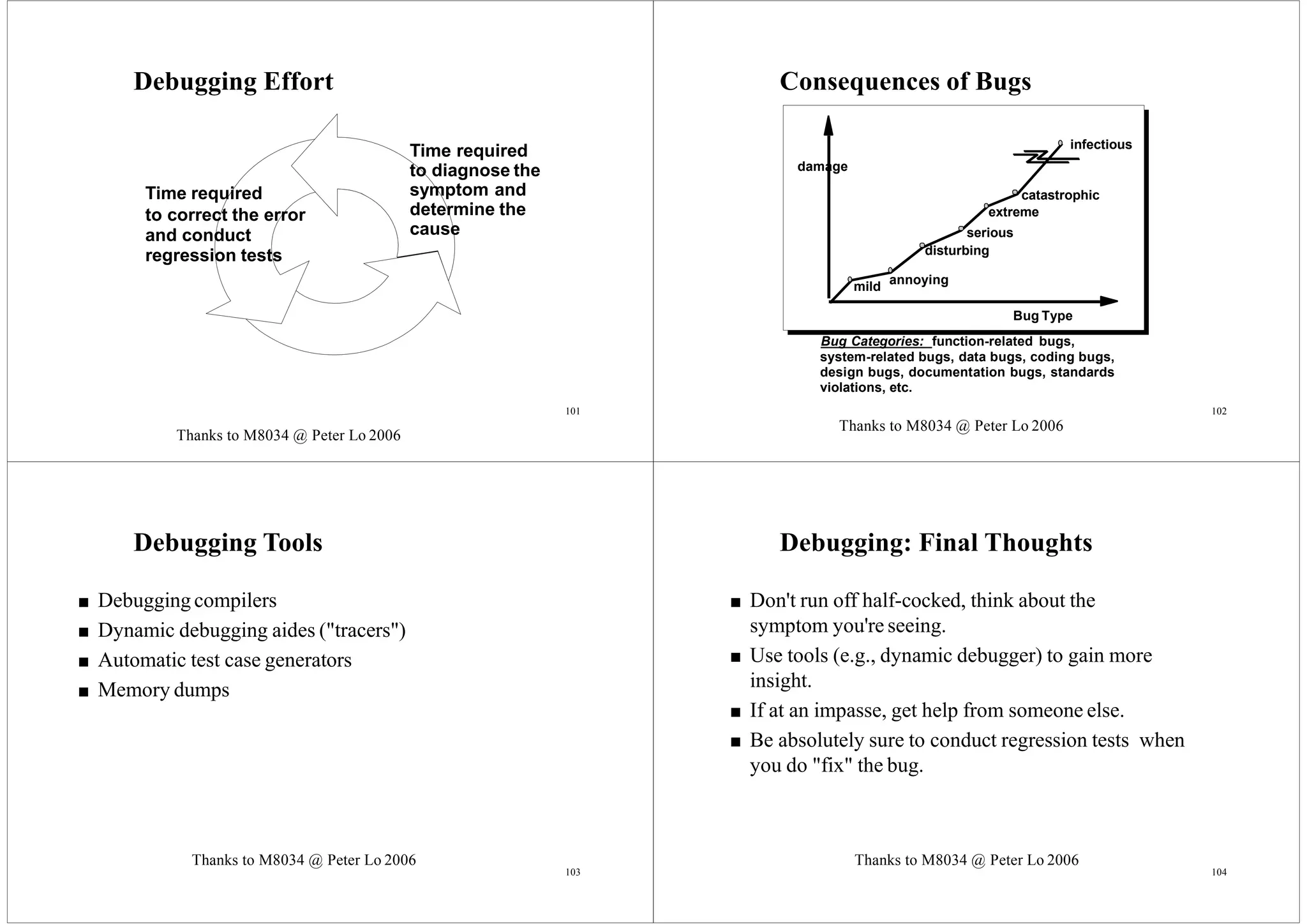

This document gives an overview of software testing and debugging. It states two objectives of testing: finding errors before delivery and checking that the software matches its specification. It distinguishes testing, which is an assessment process, from debugging, which is the removal of defects. It then covers white box, black box, unit, integration, validation, and system testing, giving the objectives, process, and examples for each technique. It concludes with a discussion of debugging and the debugging process.
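To make the distinction between black box and white box testing concrete at the unit level, here is a minimal sketch in Python. The function `classify_triangle` and both test classes are hypothetical illustrations and are not taken from the slides. The black box tests are derived only from the stated behaviour, while the white box tests are chosen by reading the code so that each branch is exercised.

```python
import unittest


def classify_triangle(a, b, c):
    """Hypothetical unit under test: classify a triangle by its side lengths."""
    if a <= 0 or b <= 0 or c <= 0:
        raise ValueError("side lengths must be positive")
    if a + b <= c or a + c <= b or b + c <= a:
        return "not a triangle"
    if a == b == c:
        return "equilateral"
    if a == b or b == c or a == c:
        return "isosceles"
    return "scalene"


class BlackBoxTests(unittest.TestCase):
    """Cases derived from the specification only, without reading the code."""

    def test_equilateral(self):
        self.assertEqual(classify_triangle(2, 2, 2), "equilateral")

    def test_scalene(self):
        self.assertEqual(classify_triangle(3, 4, 5), "scalene")

    def test_rejects_non_positive_side(self):
        with self.assertRaises(ValueError):
            classify_triangle(0, 1, 1)


class WhiteBoxTests(unittest.TestCase):
    """Cases chosen from the code structure so every condition is exercised."""

    def test_each_isosceles_condition(self):
        # One input per equality check: a == b, b == c, a == c.
        for sides in [(2, 2, 3), (3, 2, 2), (2, 3, 2)]:
            self.assertEqual(classify_triangle(*sides), "isosceles")

    def test_each_inequality_condition(self):
        # One input per triangle-inequality check, in the order written.
        for sides in [(1, 2, 3), (1, 3, 2), (3, 1, 2)]:
            self.assertEqual(classify_triangle(*sides), "not a triangle")
```

Saved as a module, these tests can be run with `python -m unittest`. A failing test only reports that a defect exists. Locating and removing the defect is the separate debugging step described in the summary.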