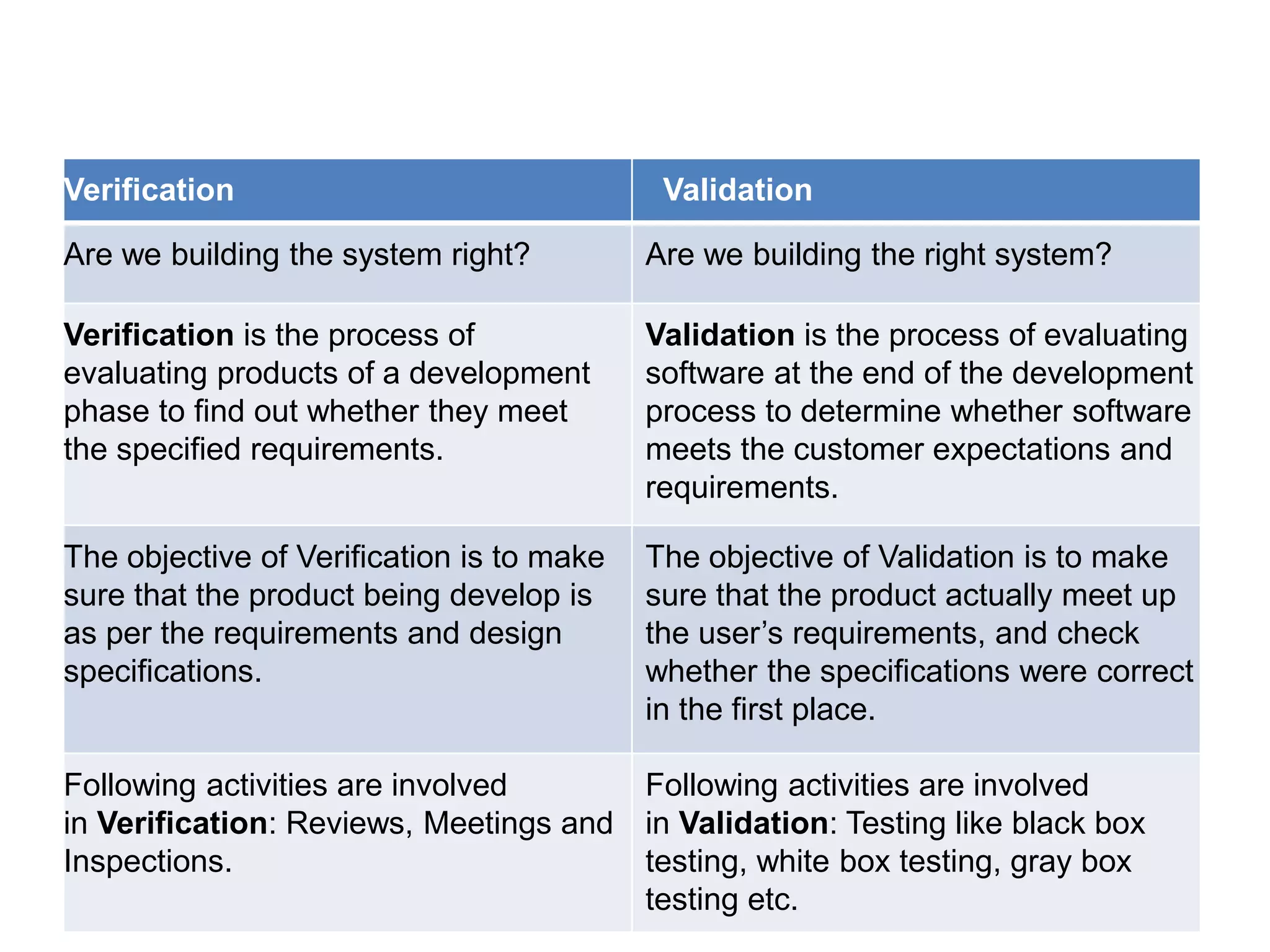

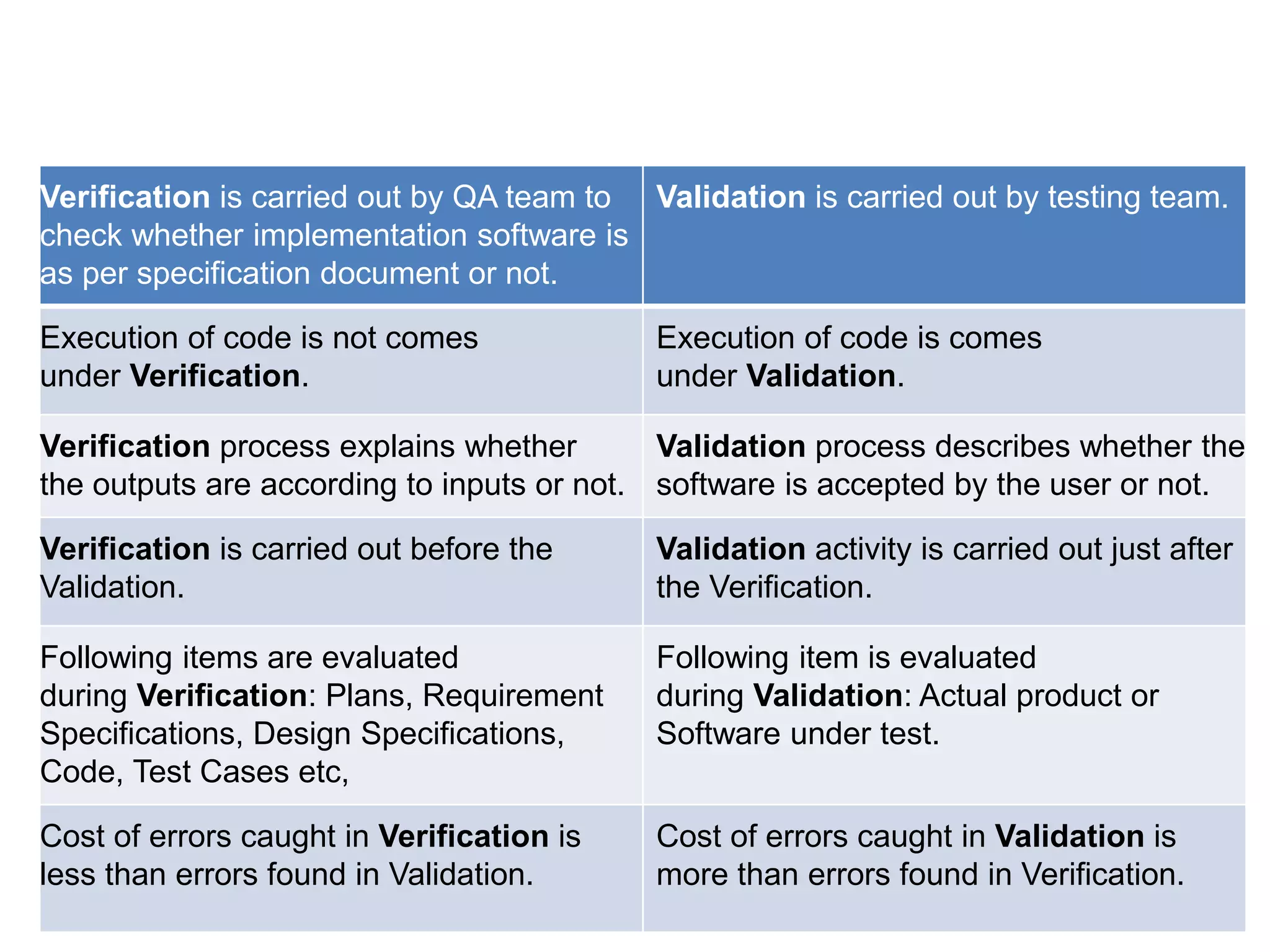

This document covers software testing terminology, principles, and project phases. It defines errors, faults, and failures and explains how they relate to one another. It also covers software quality metrics and quality attributes such as correctness, reliability, and maintainability. It outlines twelve principles of software testing, covering test planning, invalid and unexpected inputs, regression testing, and the integration of testing into the development lifecycle. Finally, it describes the phases of a software project: requirements gathering, planning, design, development, and testing.
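The relationship between a fault and a failure, and the role of invalid-input and regression tests, can be illustrated with a small sketch. It assumes the common usage of these terms, since this summary does not restate the document's own definitions: an error is a human mistake, a fault is the resulting defect in the code, and a failure is the incorrect behavior observed when that fault is executed. The function names (`is_leap_year_faulty`, `is_leap_year`, `run_checks`) are hypothetical and chosen only for illustration.

```python
def is_leap_year_faulty(year):
    # Fault (illustrative): the "divisible by 400" rule was forgotten.
    return year % 4 == 0 and year % 100 != 0


def is_leap_year(year):
    # Corrected version that also rejects invalid input explicitly.
    if not isinstance(year, int) or isinstance(year, bool) or year < 1:
        raise ValueError("year must be a positive integer")
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def run_checks():
    results = []

    # The fault exists in the code, but these inputs do not trigger it,
    # so no failure is observed.
    if is_leap_year_faulty(2024) and not is_leap_year_faulty(1900):
        results.append("latent")
    else:
        results.append("unexpected")

    # The year 2000 is a leap year; the faulty version returns False,
    # which is an observable failure.
    if is_leap_year_faulty(2000) is False:
        results.append("failure")
    else:
        results.append("no failure")

    # Regression test: the input that exposed the fault is kept in the
    # suite so the defect cannot silently return.
    results.append("fixed" if is_leap_year(2000) else "regressed")

    # Invalid-input test: the function should reject a non-positive year.
    try:
        is_leap_year(-4)
        results.append("accepted")
    except ValueError:
        results.append("rejected")

    return results


if __name__ == "__main__":
    print(run_checks())
```

The sketch keeps the faulty and corrected versions side by side only to show that a fault can stay hidden until a specific input executes it; in a real project the failing input would simply be added to the existing test suite.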