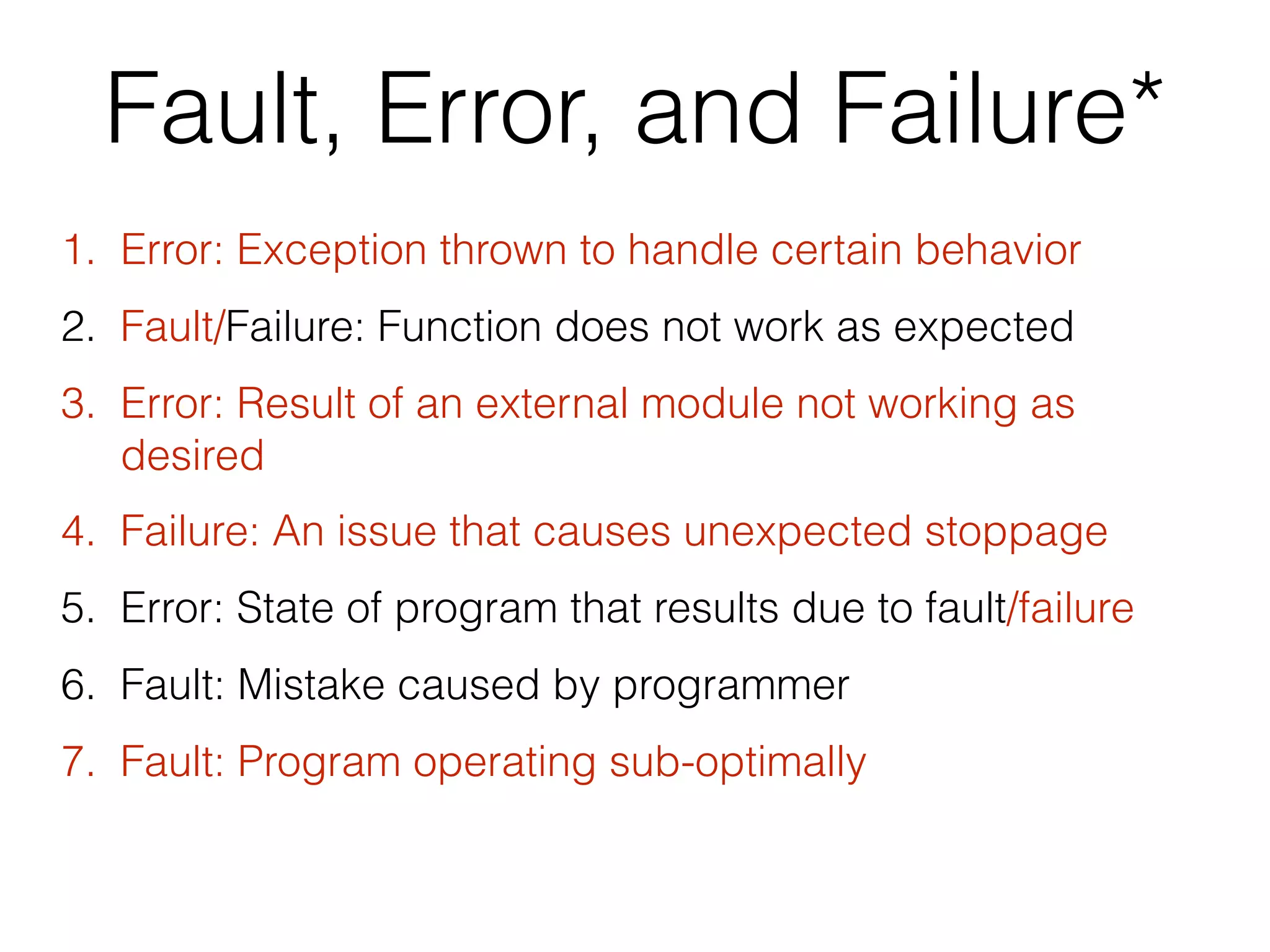

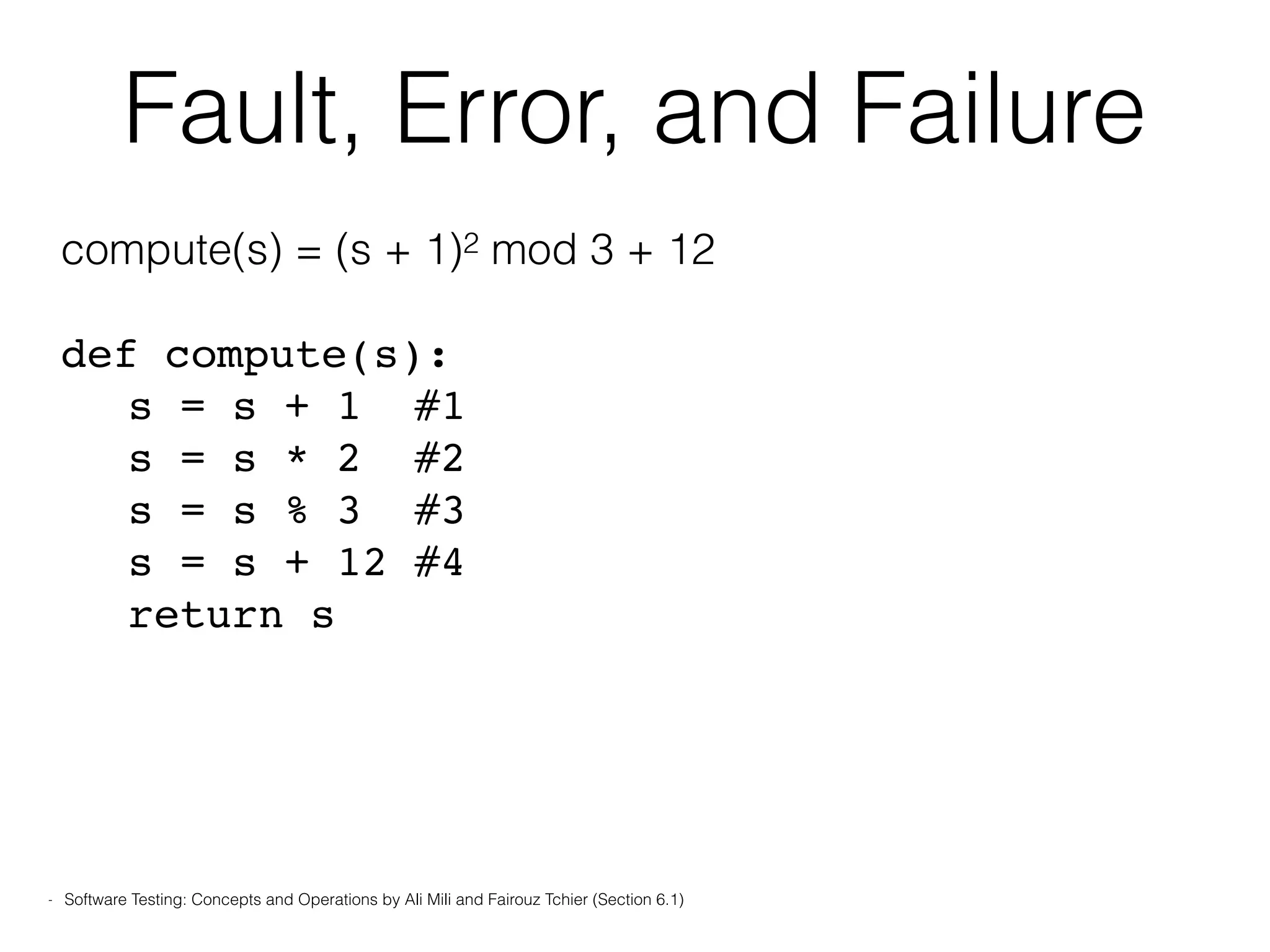

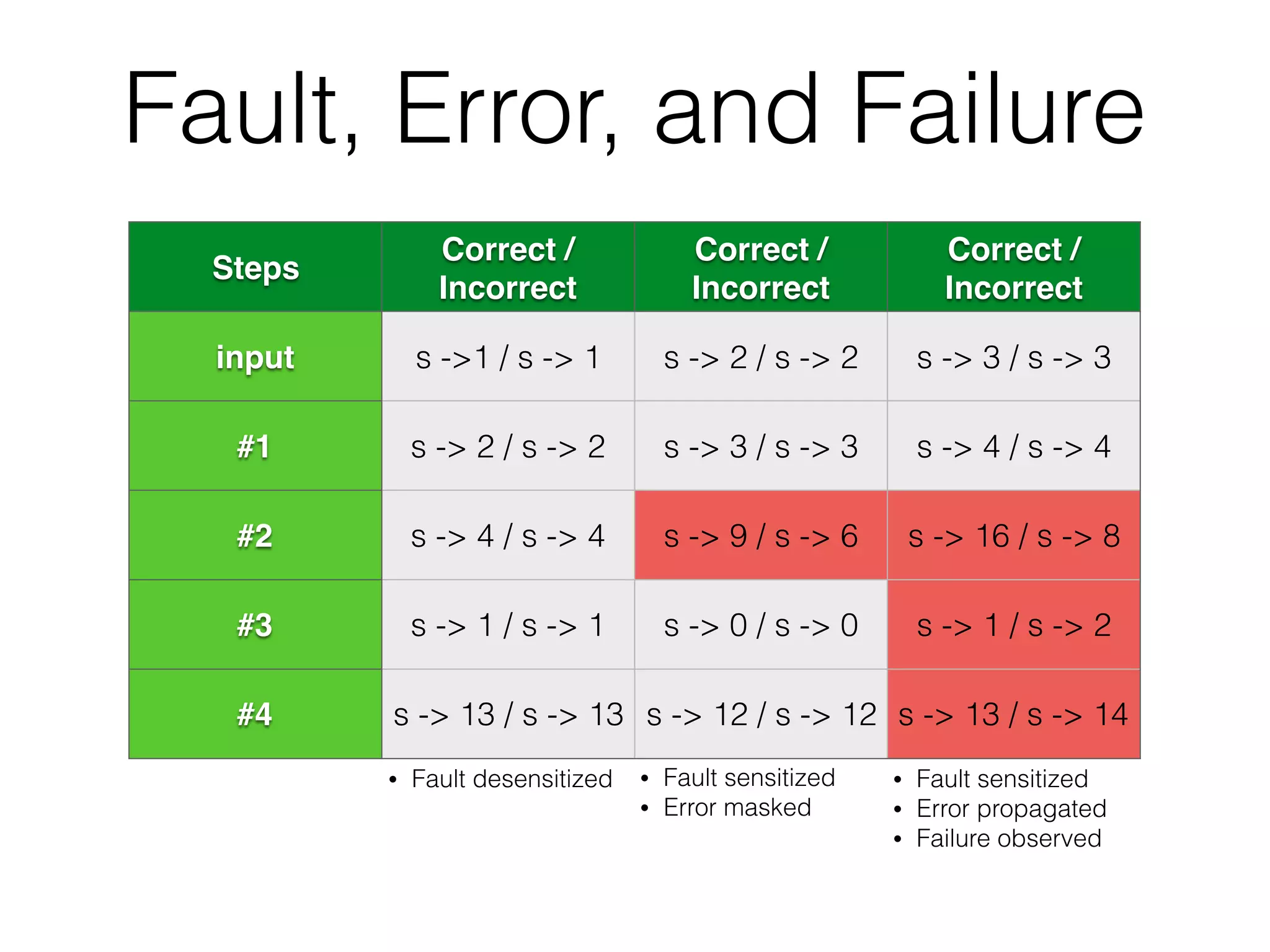

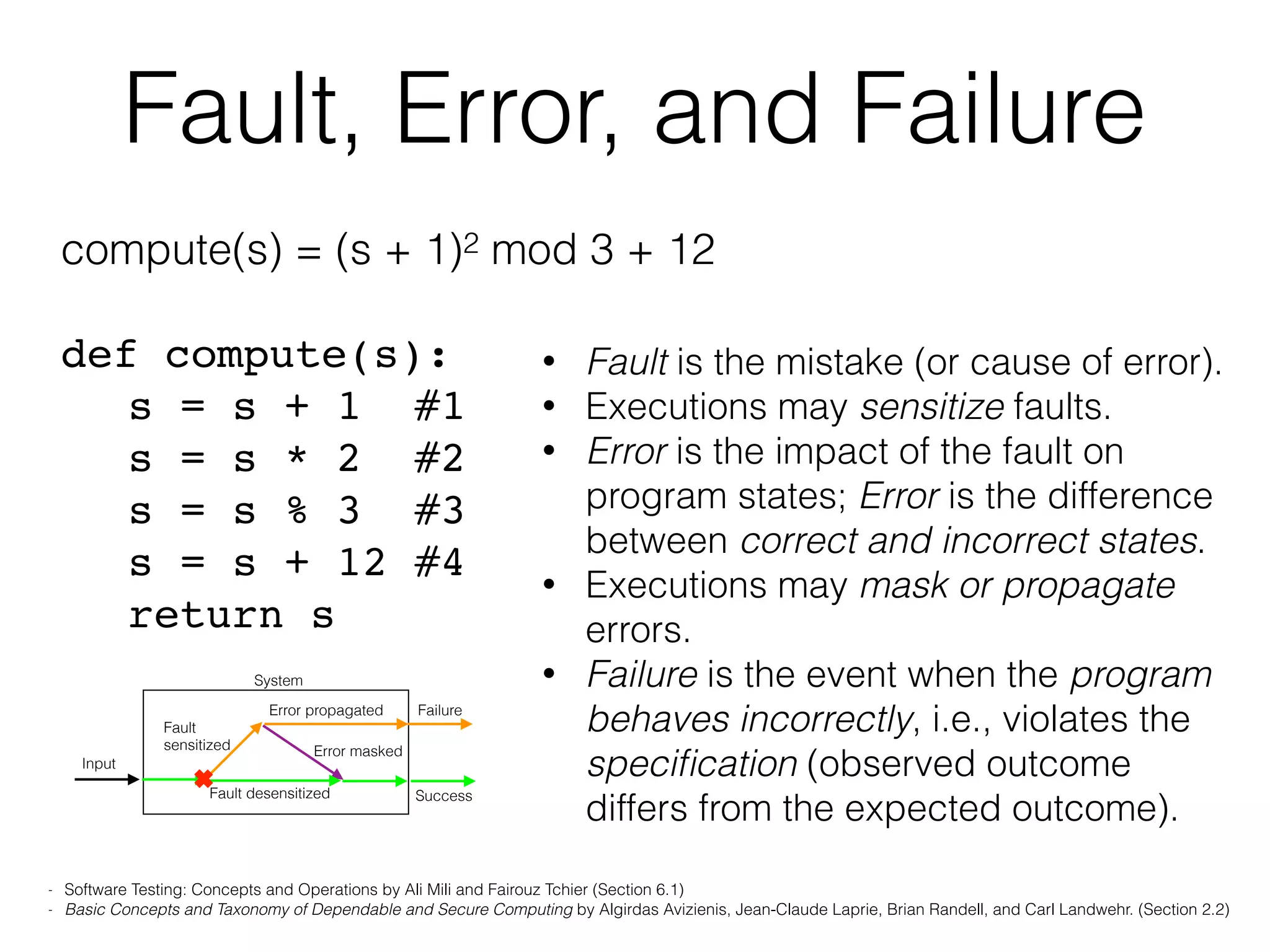

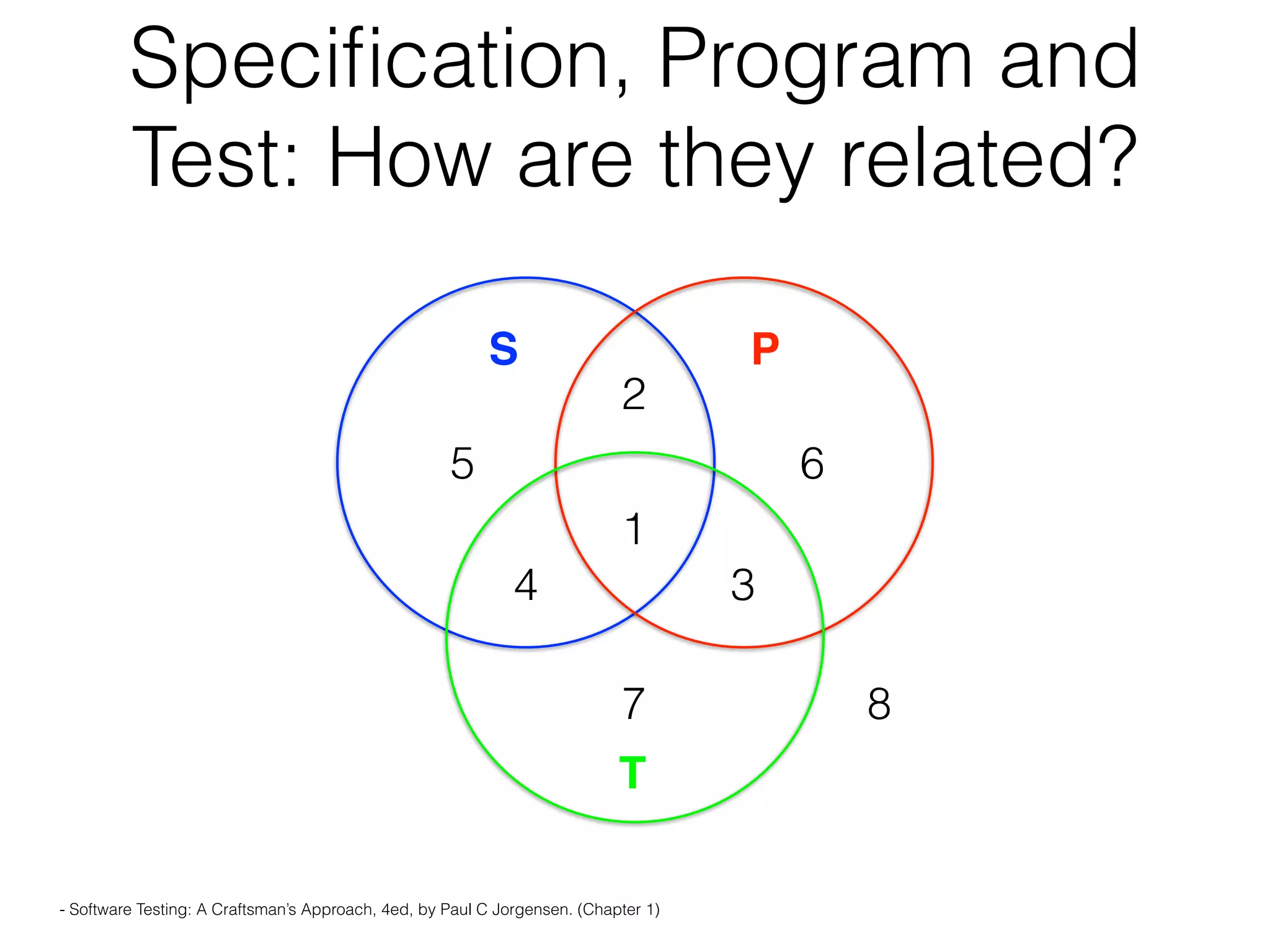

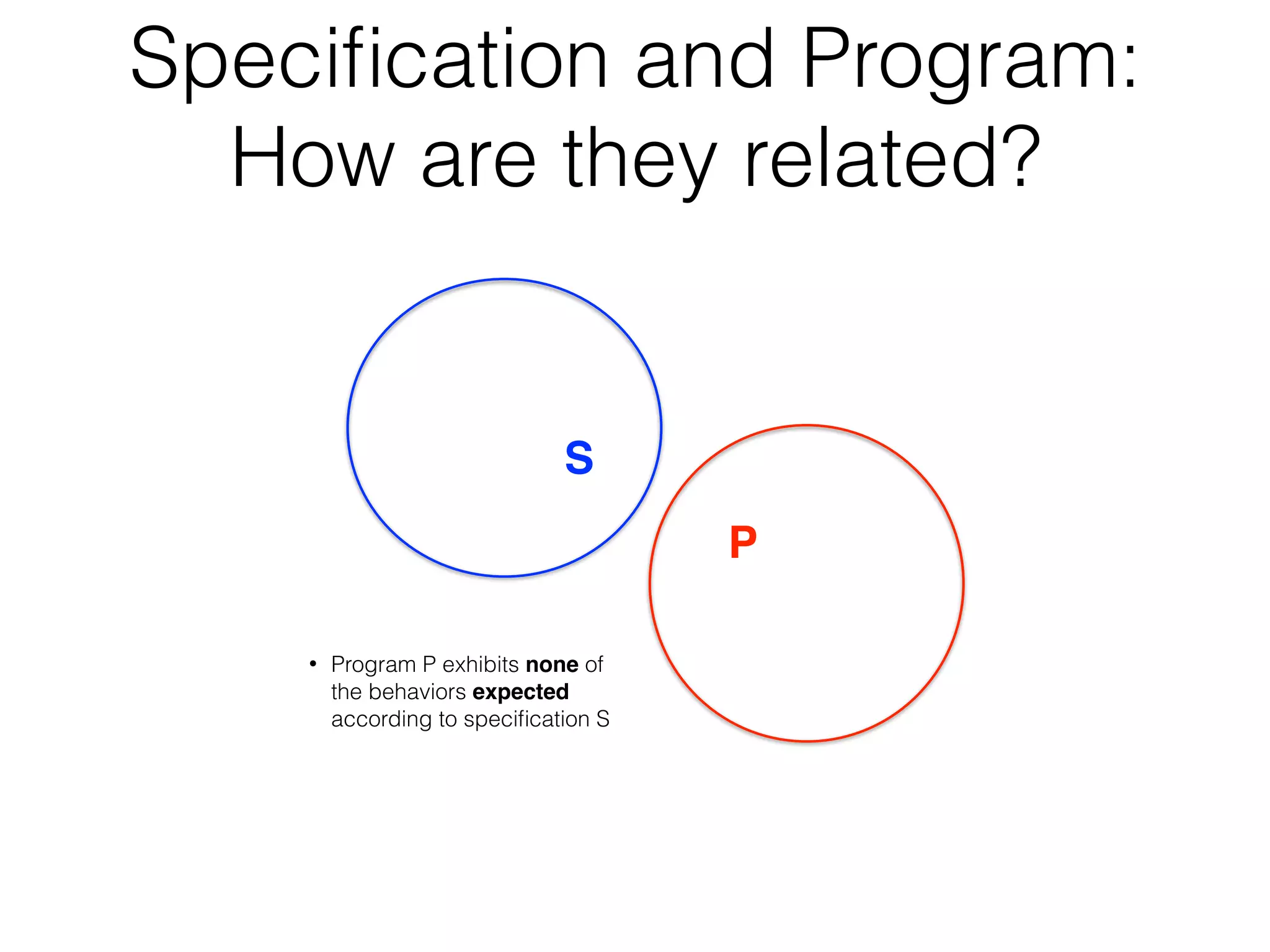

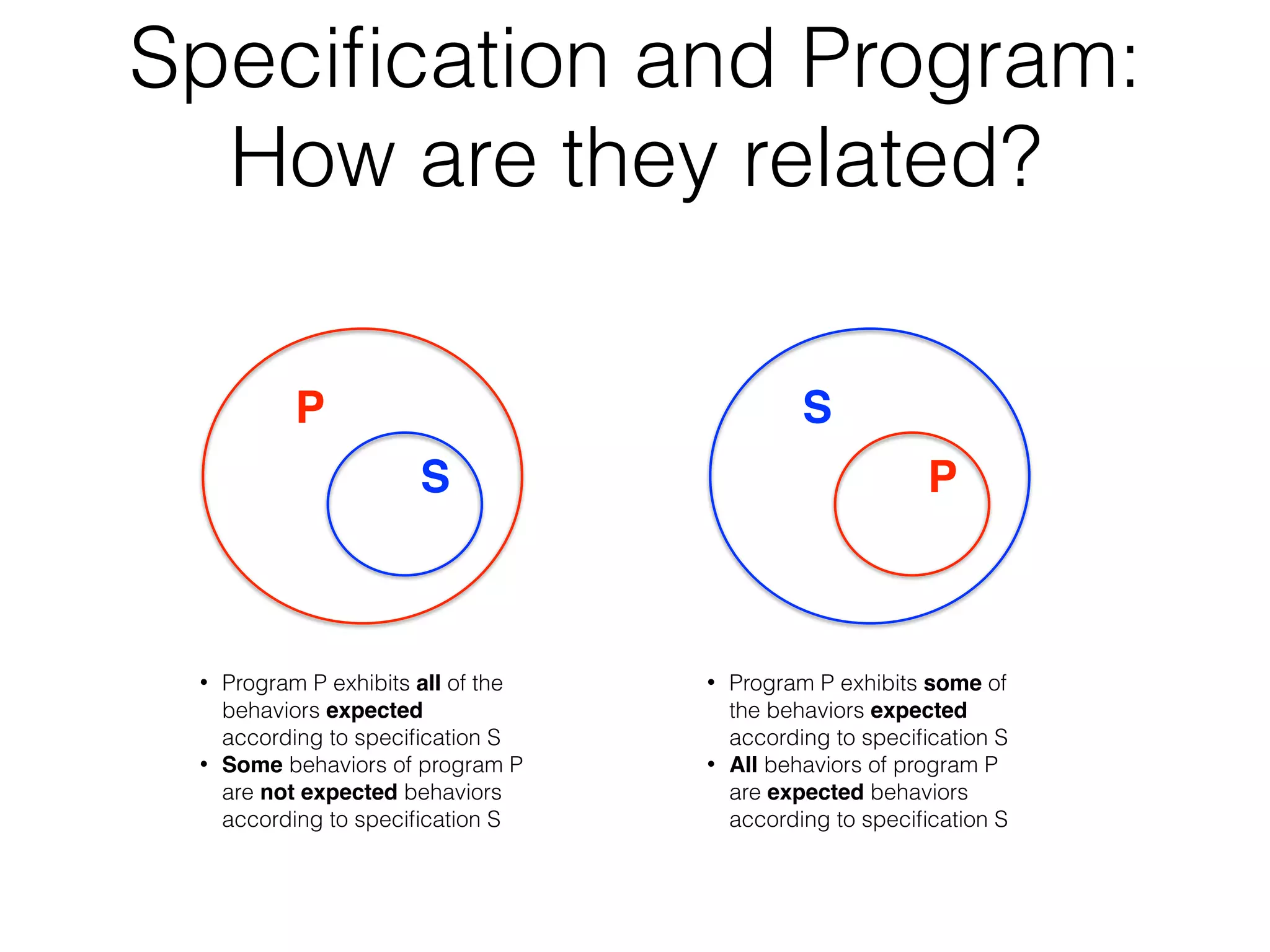

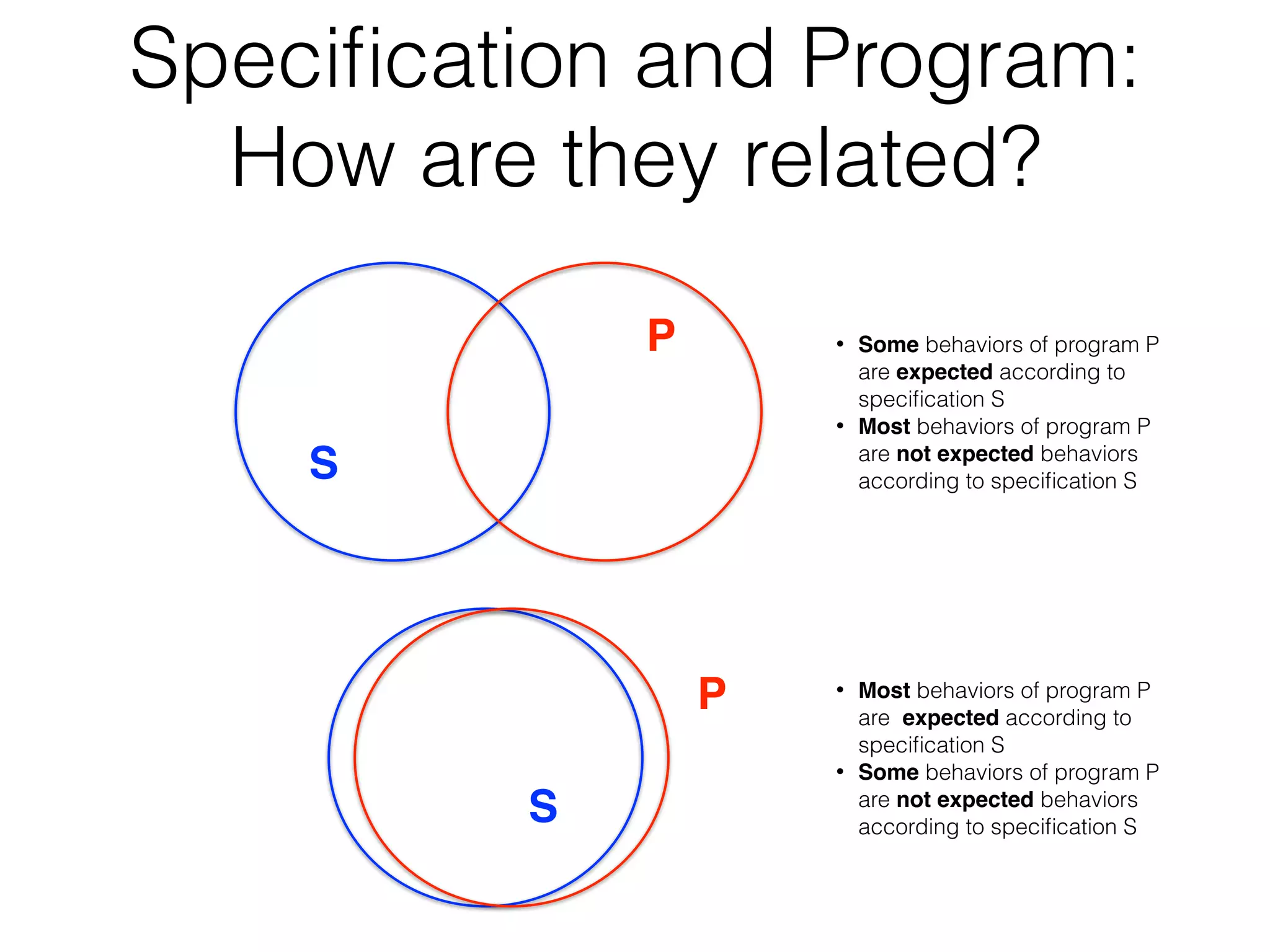

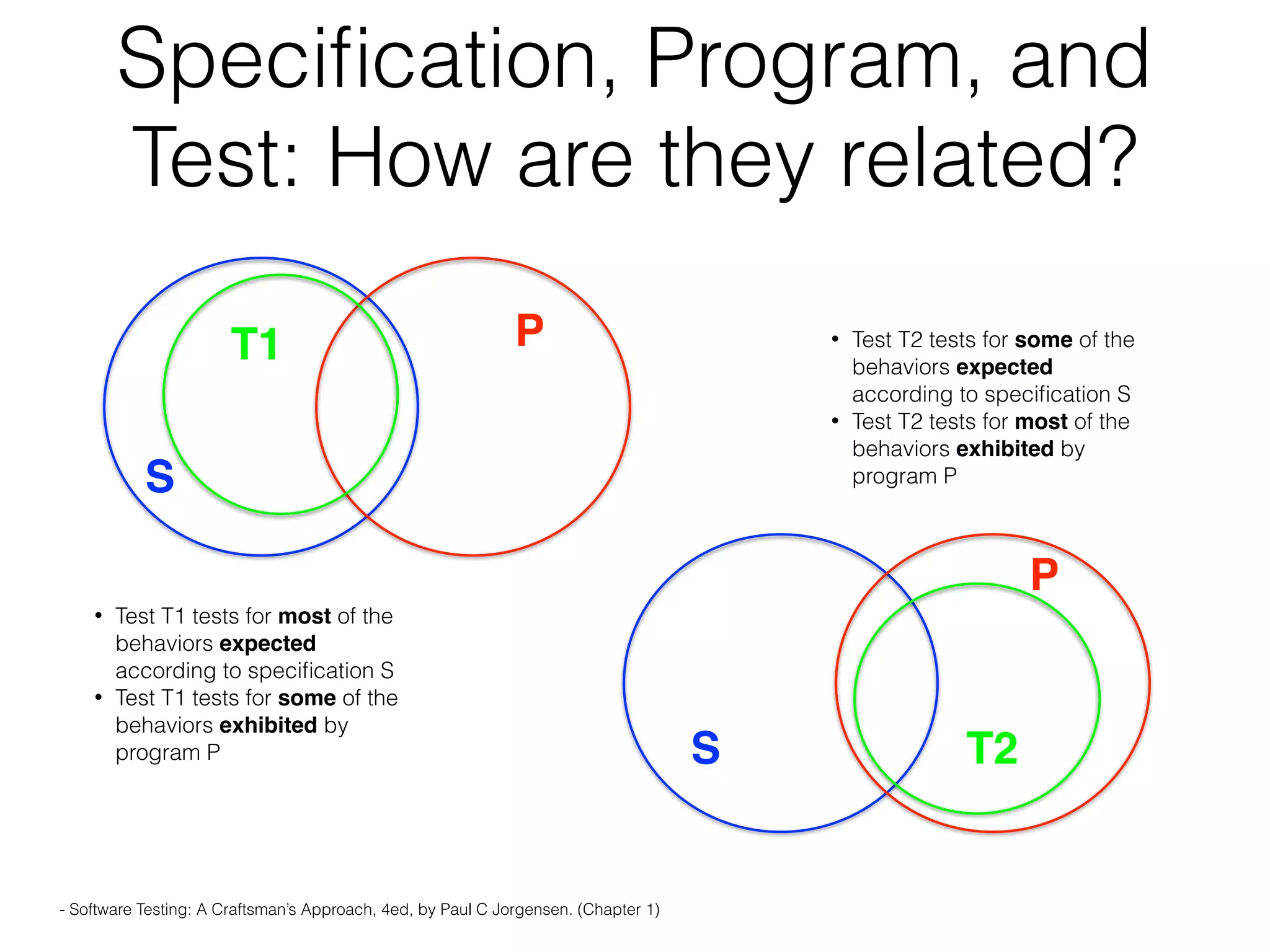

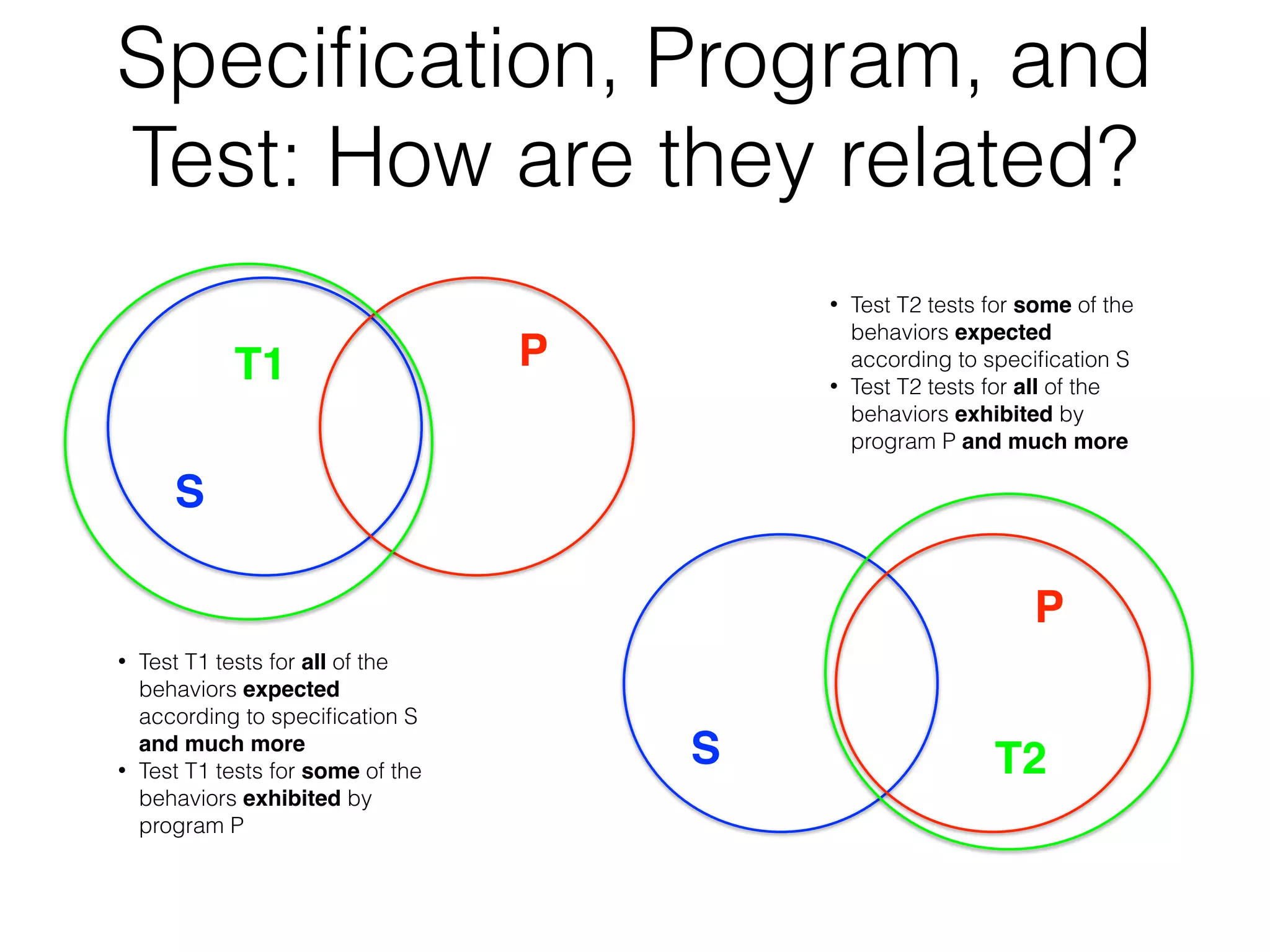

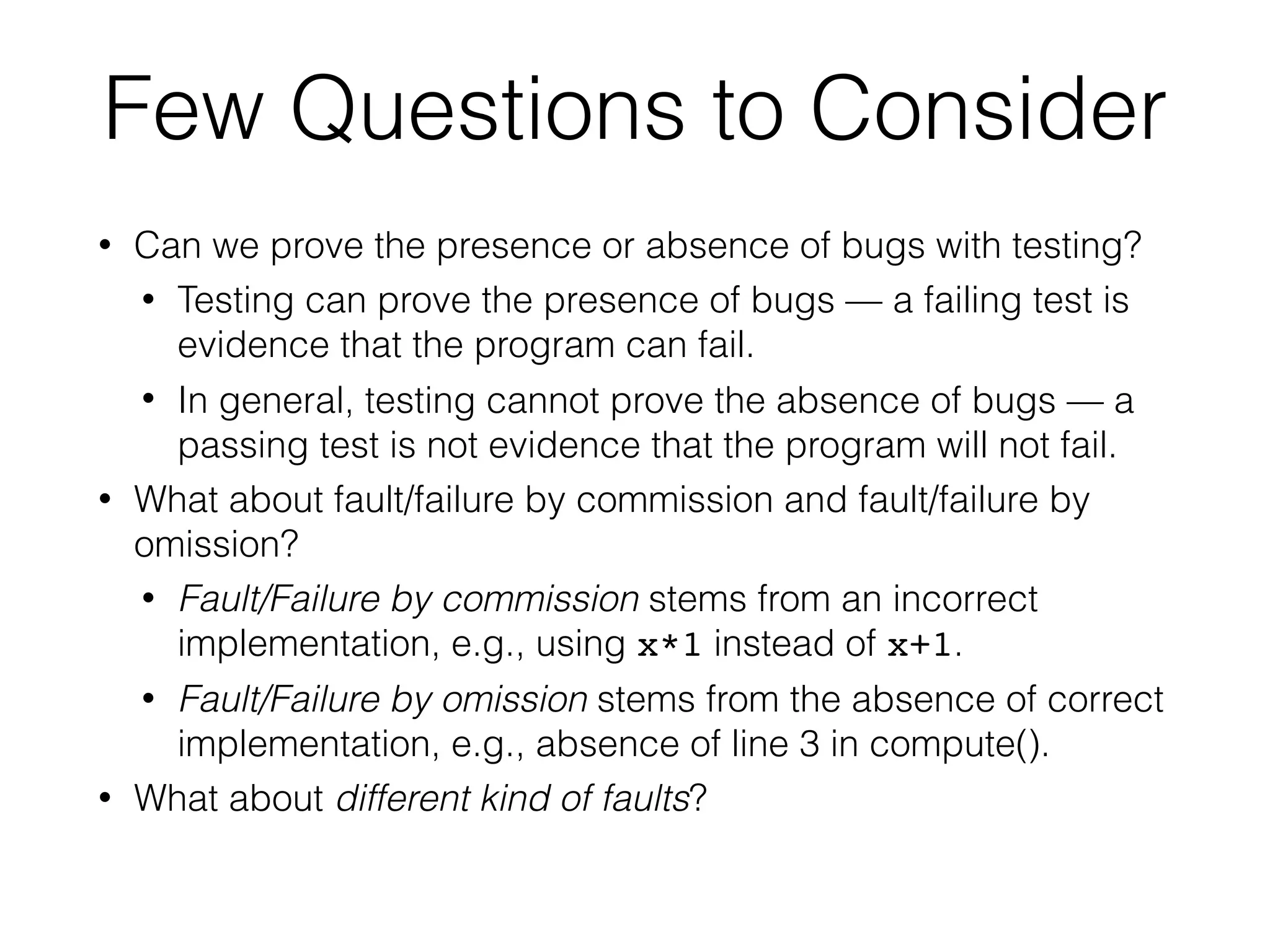

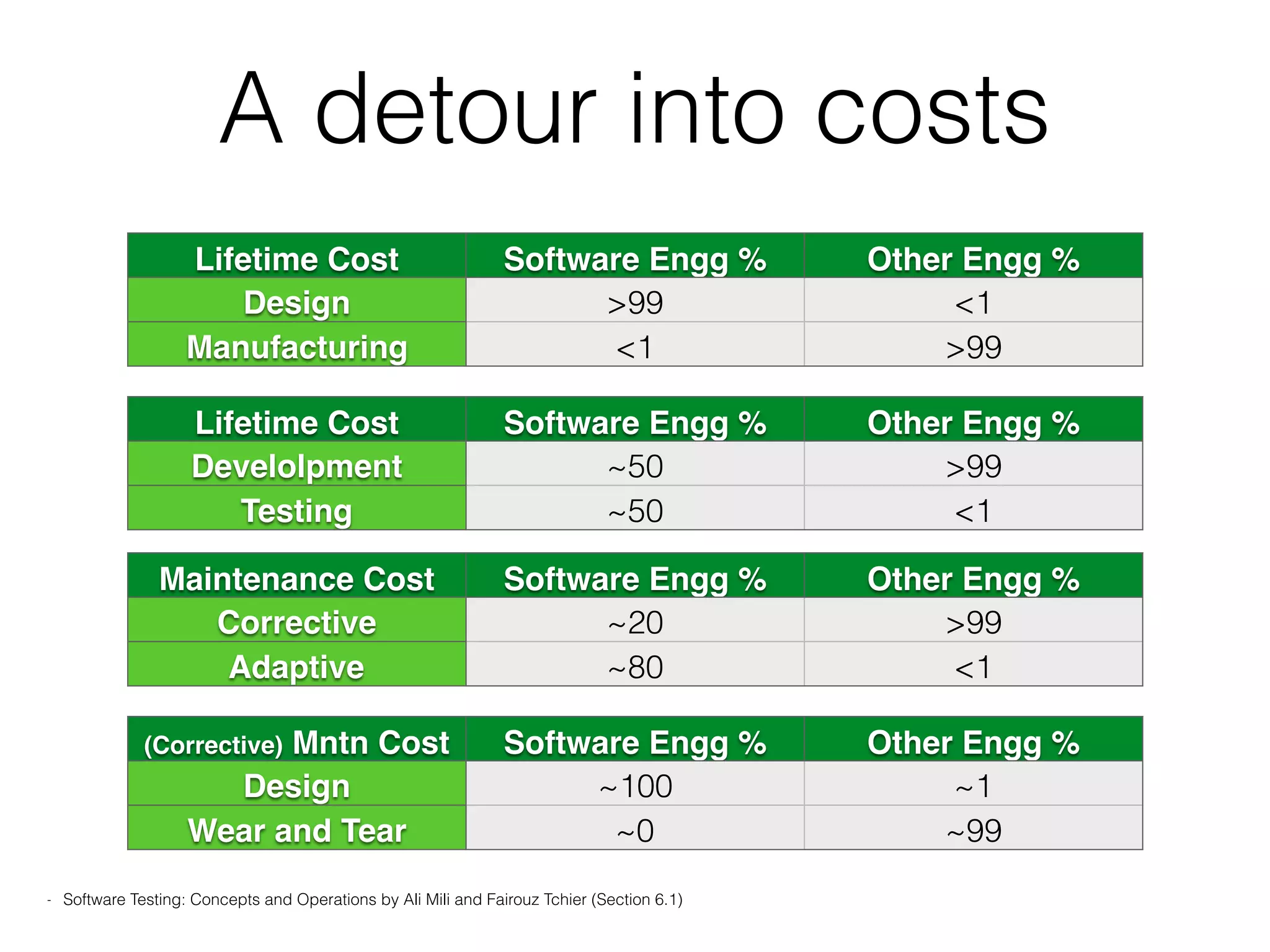

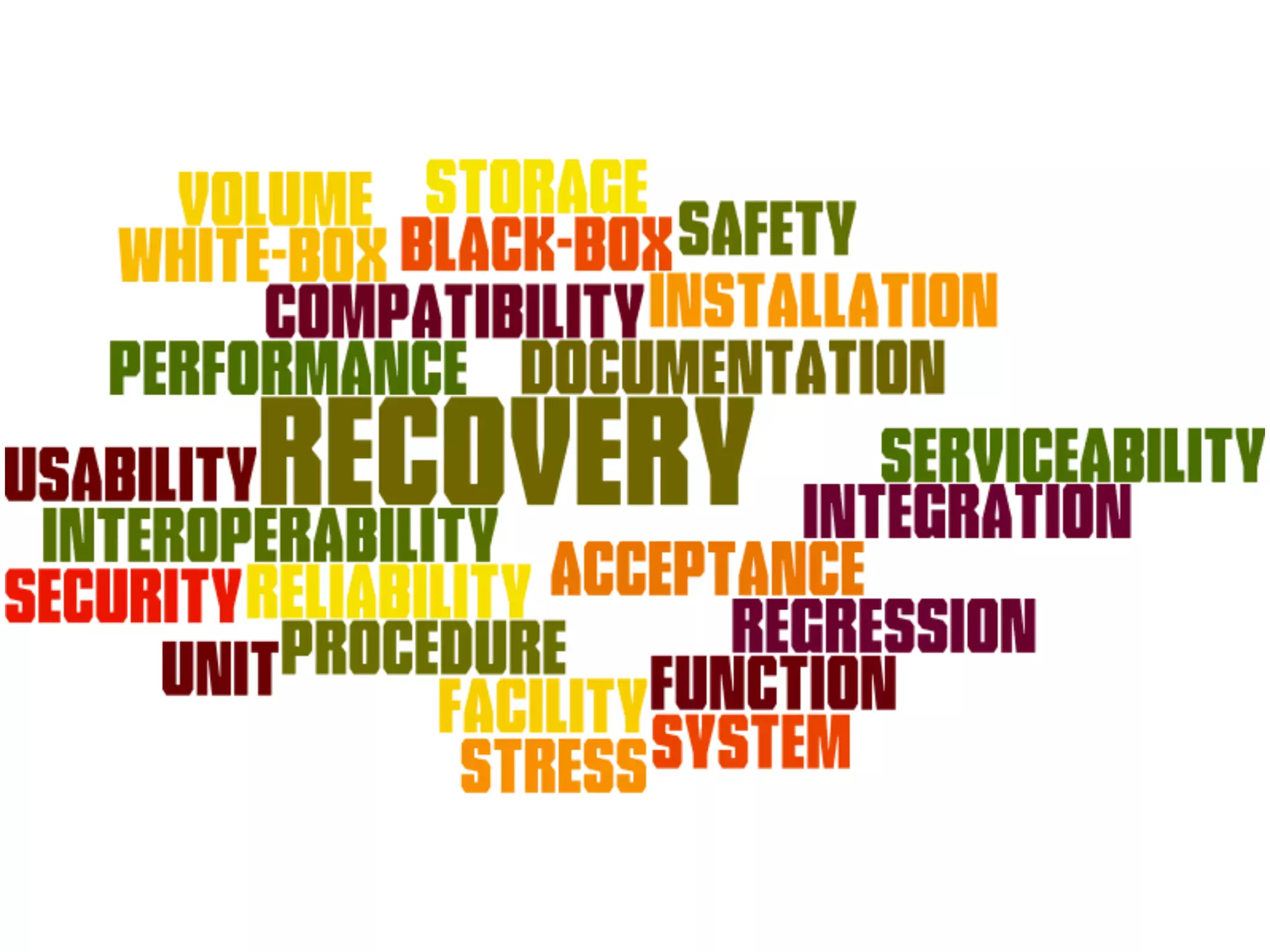

The document covers fundamental concepts of software testing: its purpose, the main types of testing, and the relationship between the specification, the program, and the tests. It defines testing, presents a taxonomy of testing approaches, and contrasts methodologies such as black-box testing (tests derived from the specification) and white-box testing (tests derived from the program's internal structure). It also walks through the testing levels, from unit testing to acceptance testing, and stresses that effective testing practices are central to ensuring software quality and reliability.
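To make the specification/program/test relationship and the black-box versus white-box distinction concrete, here is a minimal sketch using Python's standard `unittest` module. The function `abs_value` and its one-line specification ("return |x|") are hypothetical illustrations, not taken from the slides. The black-box tests are chosen from the specification alone, with one value per input class (negative, zero, positive). The white-box tests are chosen by reading the code so that each branch of `if x < 0` runs at least once.

```python
import unittest


def abs_value(x: int) -> int:
    """Hypothetical program under test. Specification: return |x|."""
    if x < 0:
        return -x
    return x


class BlackBoxTests(unittest.TestCase):
    # Derived only from the specification, without looking at the code:
    # one representative value per input class (negative, zero, positive).
    def test_negative(self):
        self.assertEqual(abs_value(-5), 5)

    def test_zero(self):
        self.assertEqual(abs_value(0), 0)

    def test_positive(self):
        self.assertEqual(abs_value(7), 7)


class WhiteBoxTests(unittest.TestCase):
    # Derived from the code's structure: one input per branch of `if x < 0`,
    # so both the true and the false branch are executed (branch coverage).
    def test_true_branch(self):
        self.assertEqual(abs_value(-1), 1)

    def test_false_branch(self):
        self.assertEqual(abs_value(1), 1)
```

Saved as a module, these tests can be run with `python -m unittest <module_name>`. Both test classes happen to pass against this tiny function. For larger programs, however, the two approaches tend to expose different faults: specification-based tests catch missing behavior, and structure-based tests catch unexercised code paths.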