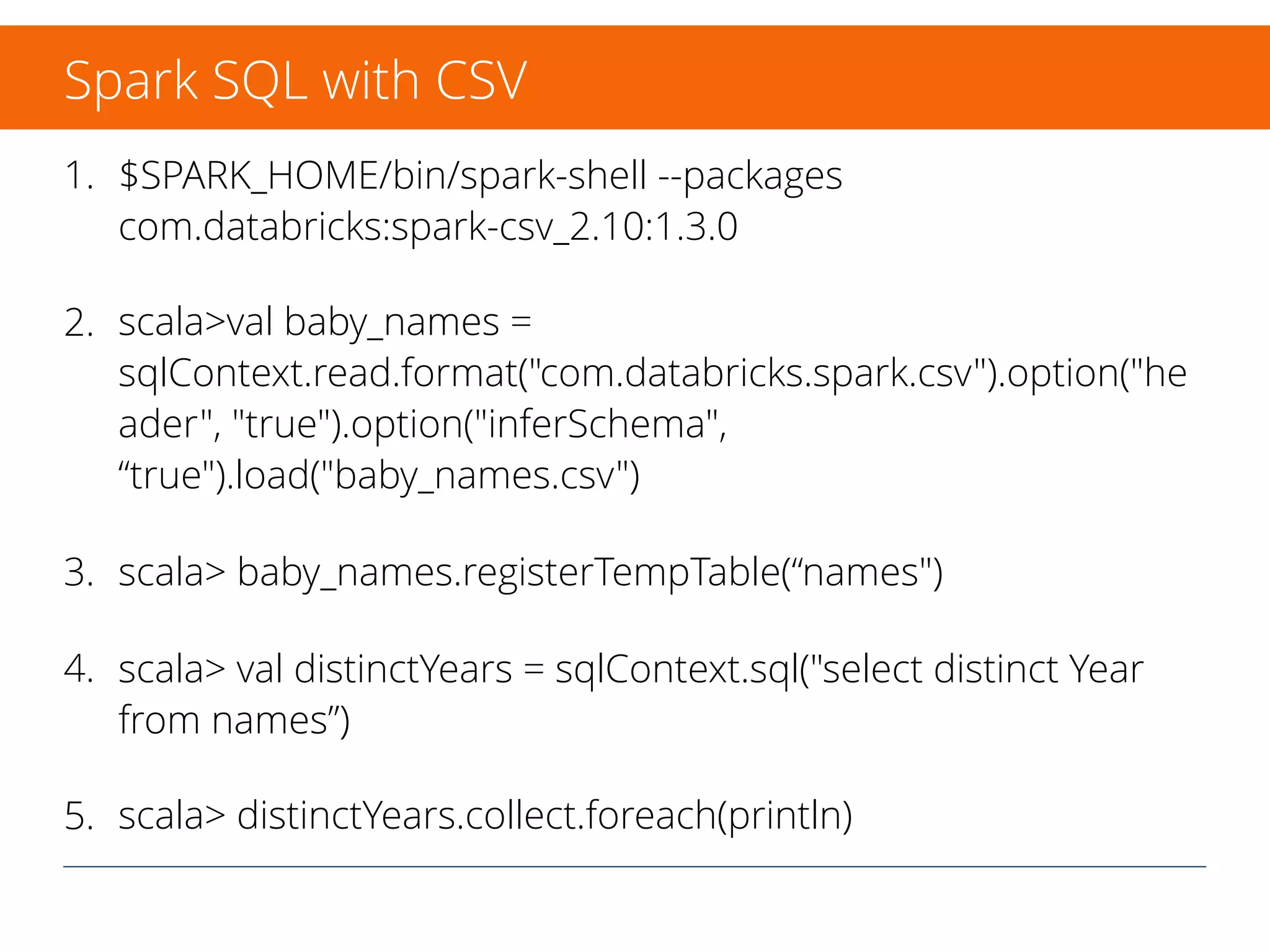

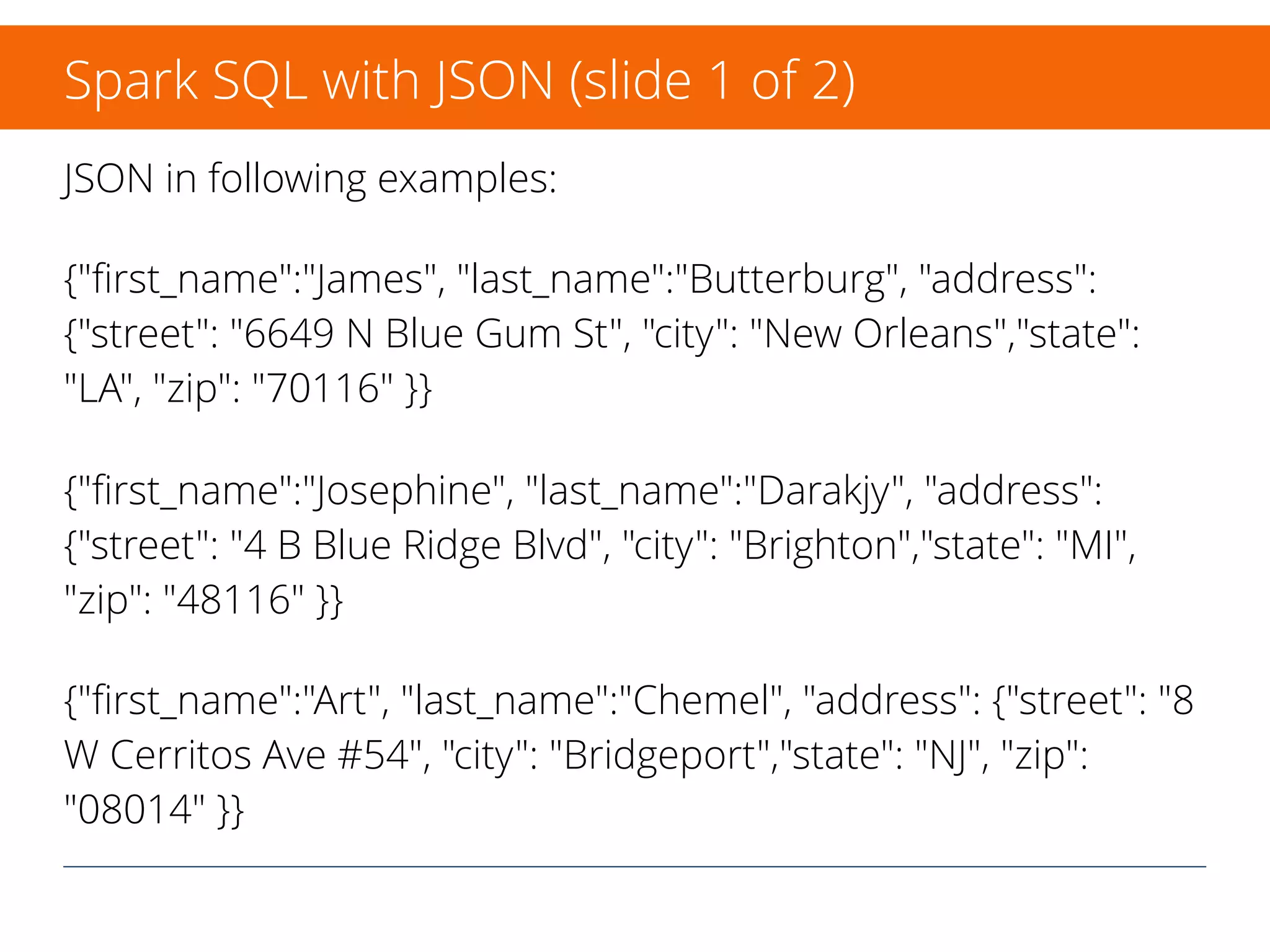

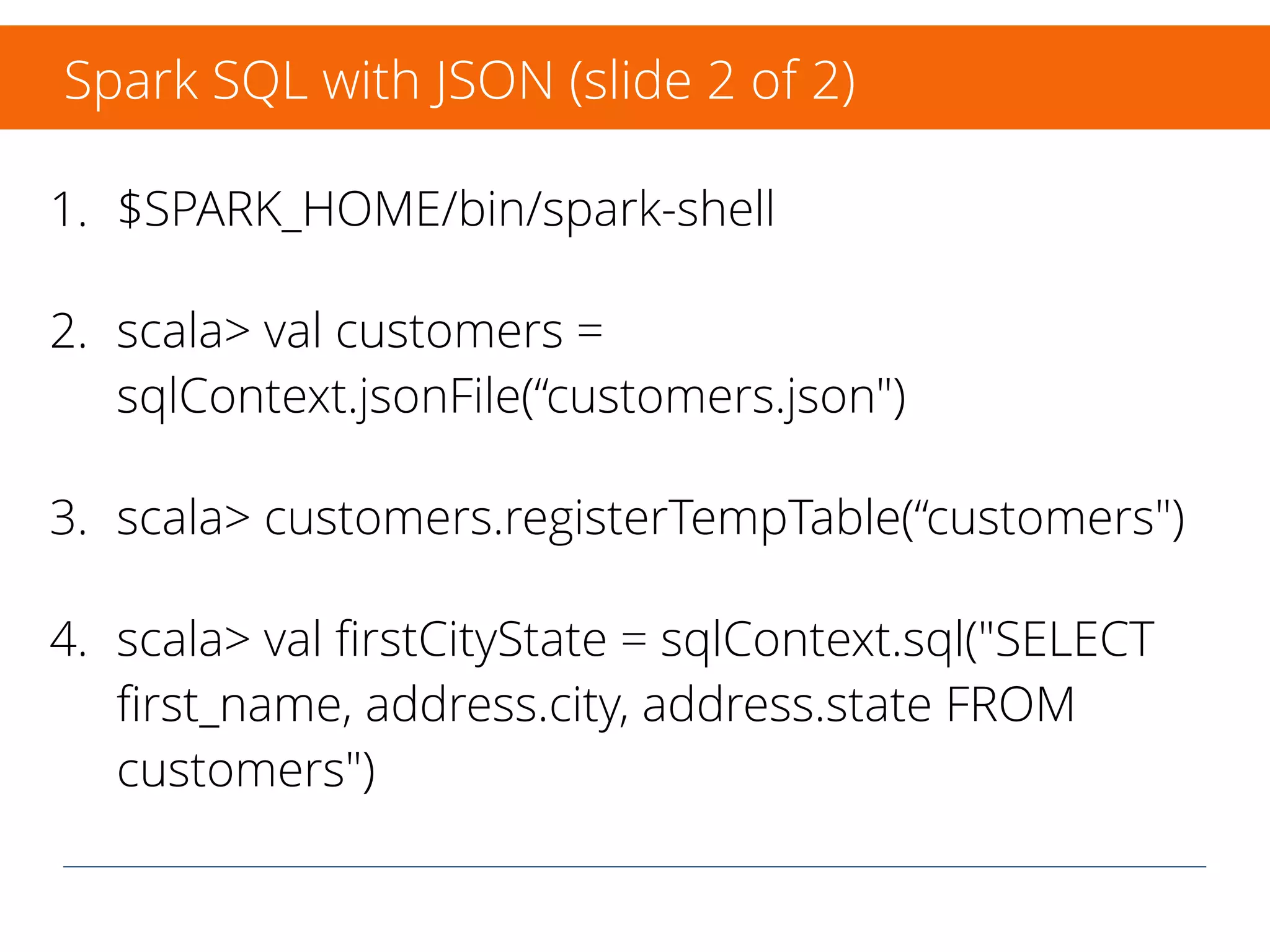

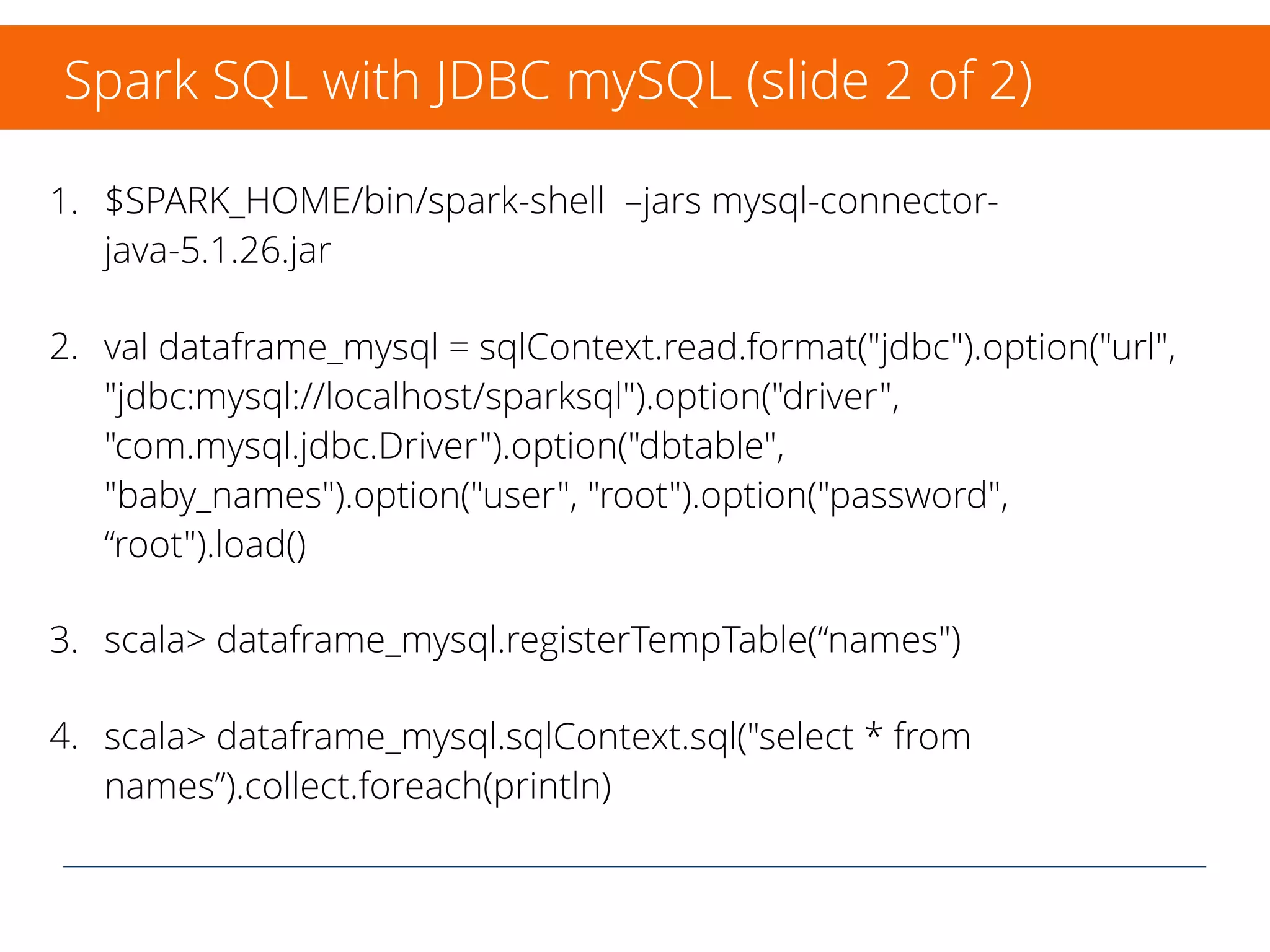

The document provides an overview of Spark SQL, Spark's module for querying structured data with SQL or the DataFrame API from several programming languages. It includes code examples for working with DataFrames and for reading CSV, JSON, and MySQL data through Spark SQL, and it points readers to additional resources for learning more about Spark.