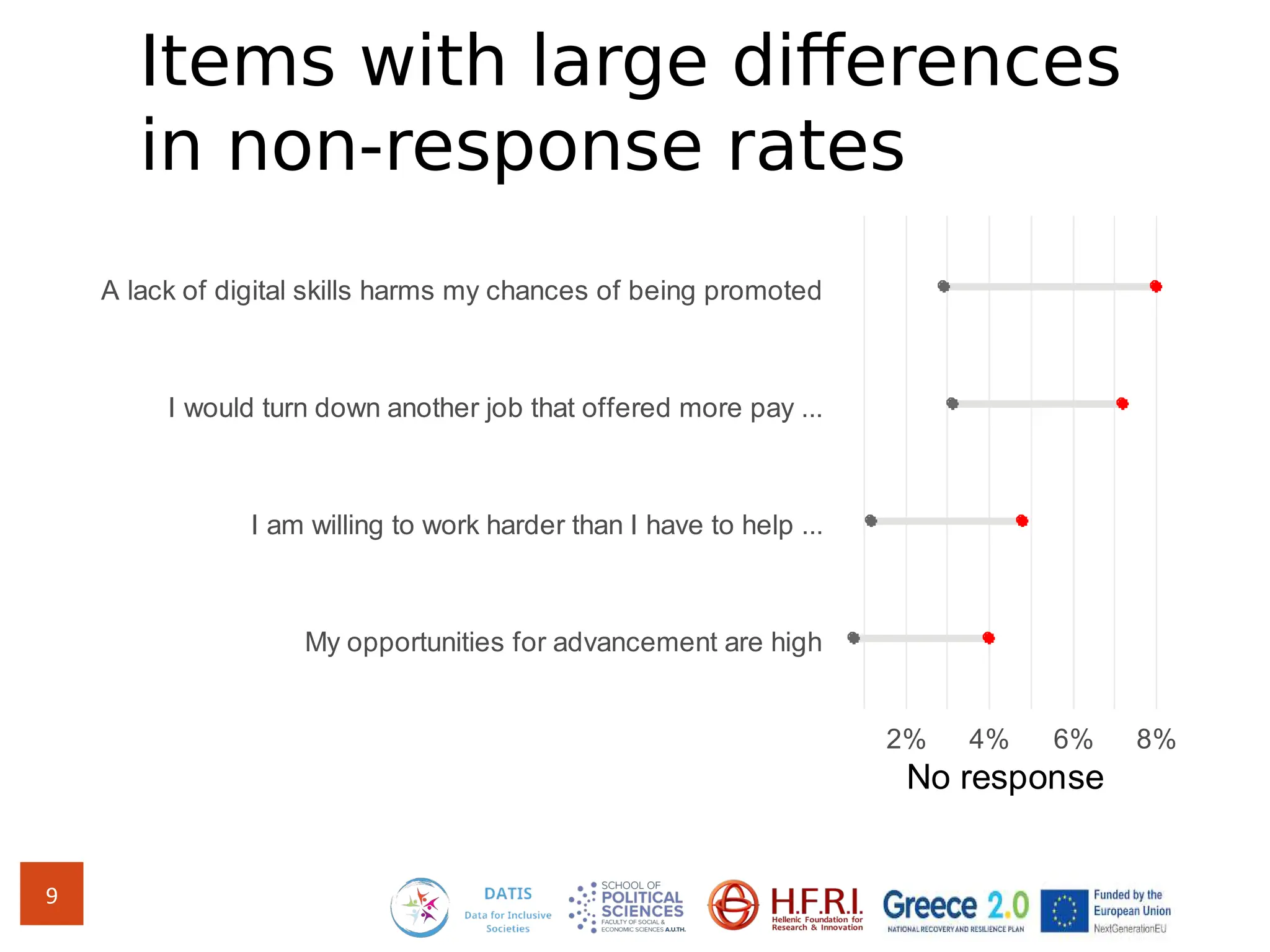

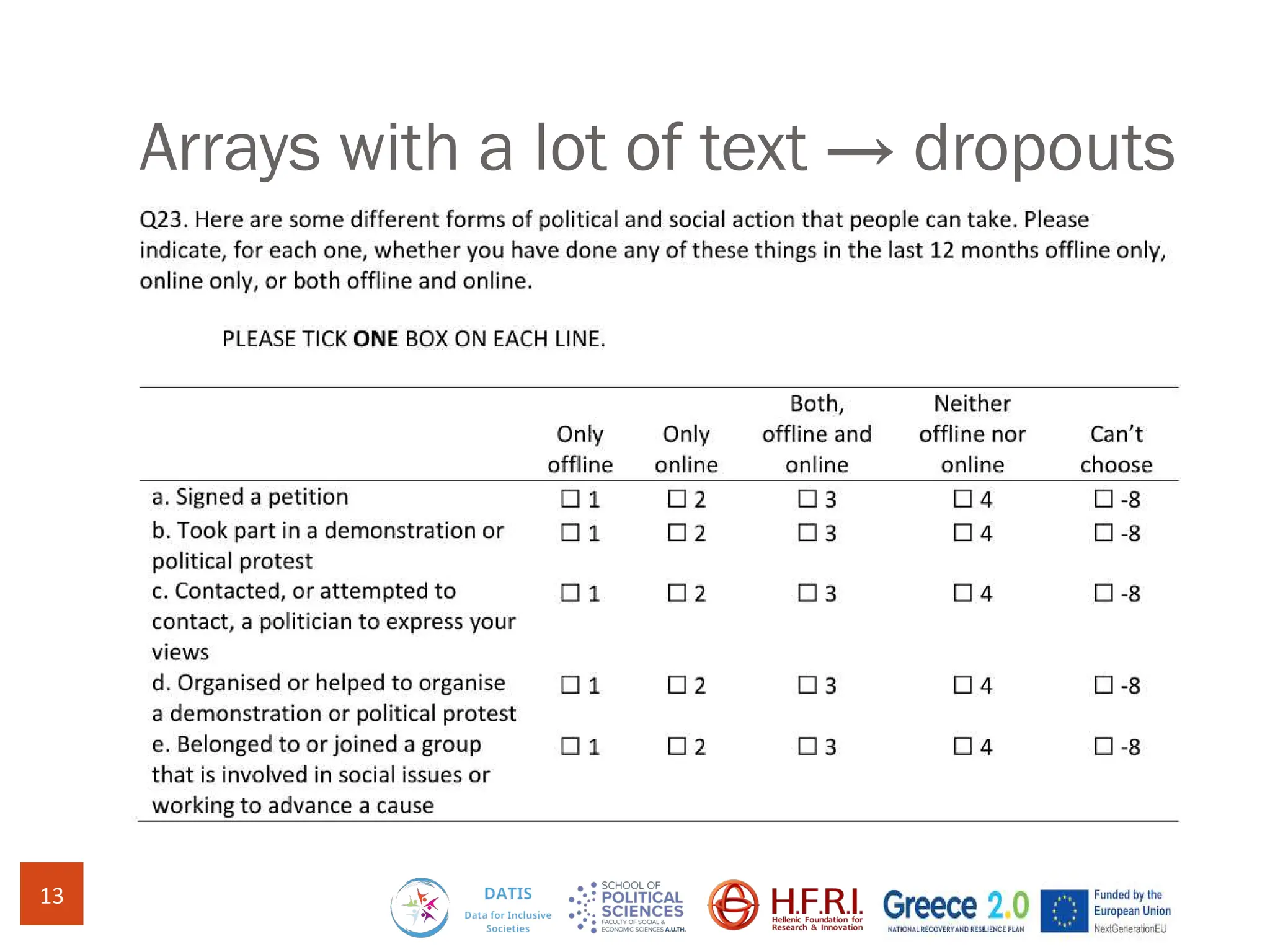

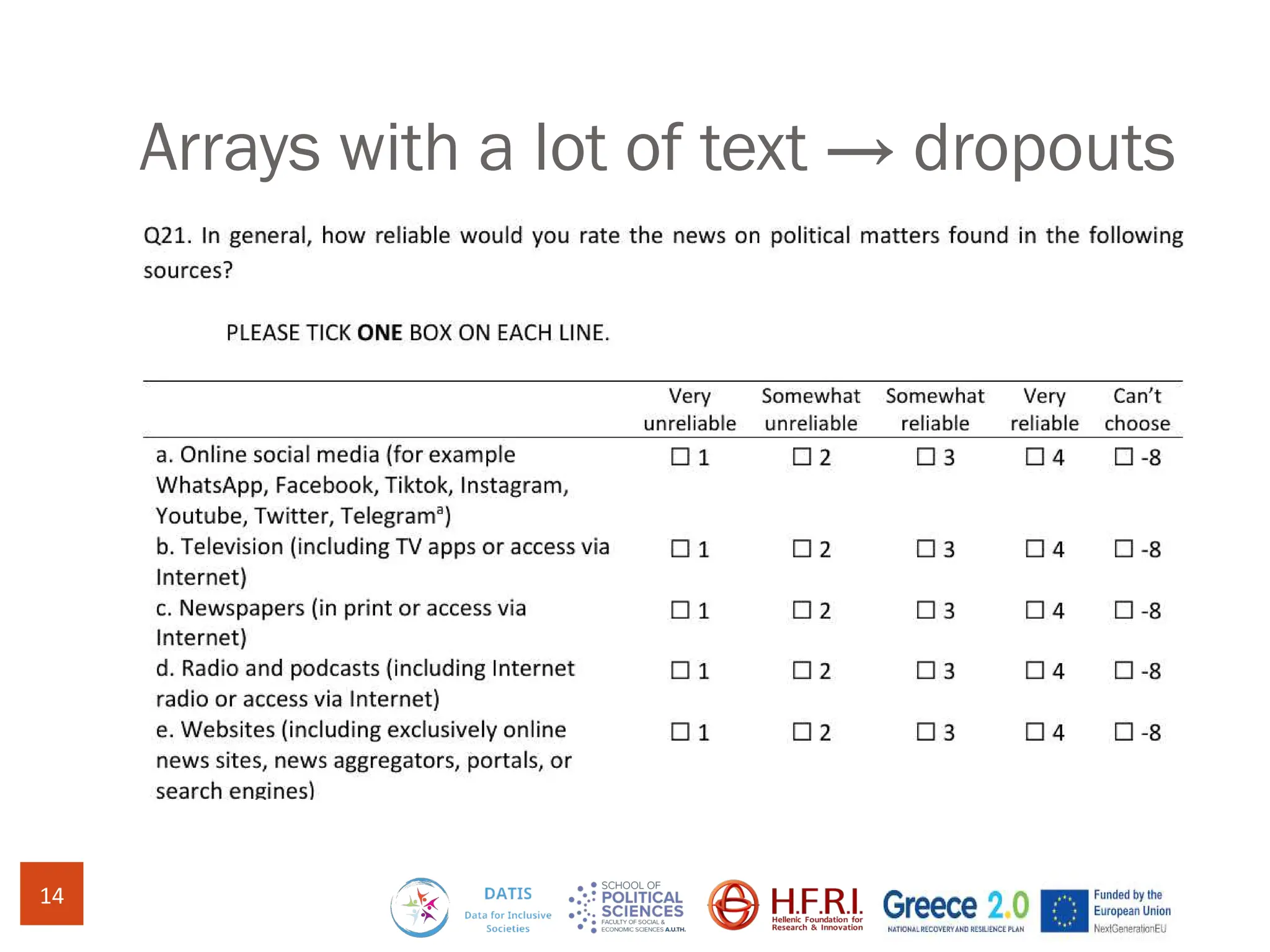

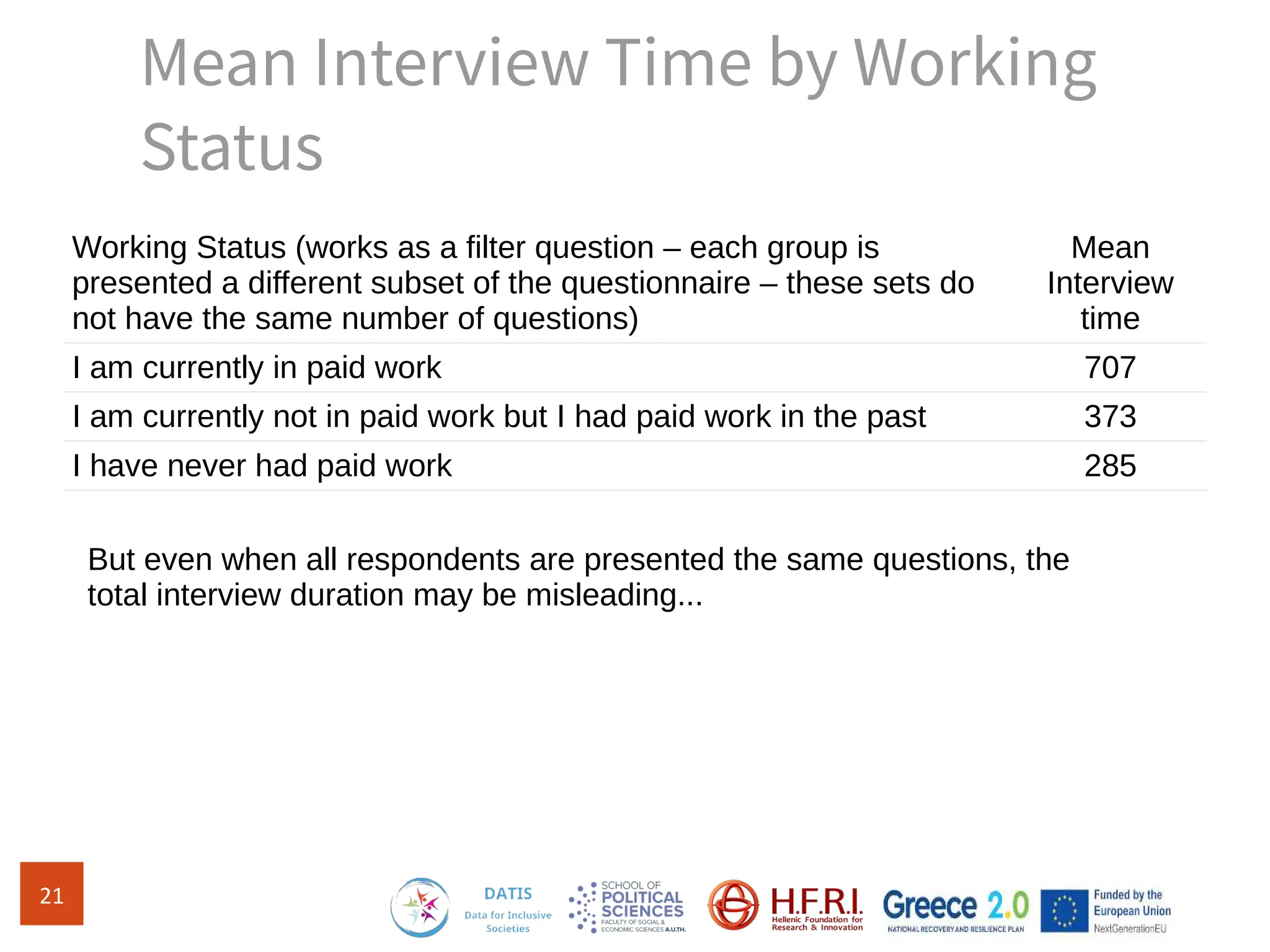

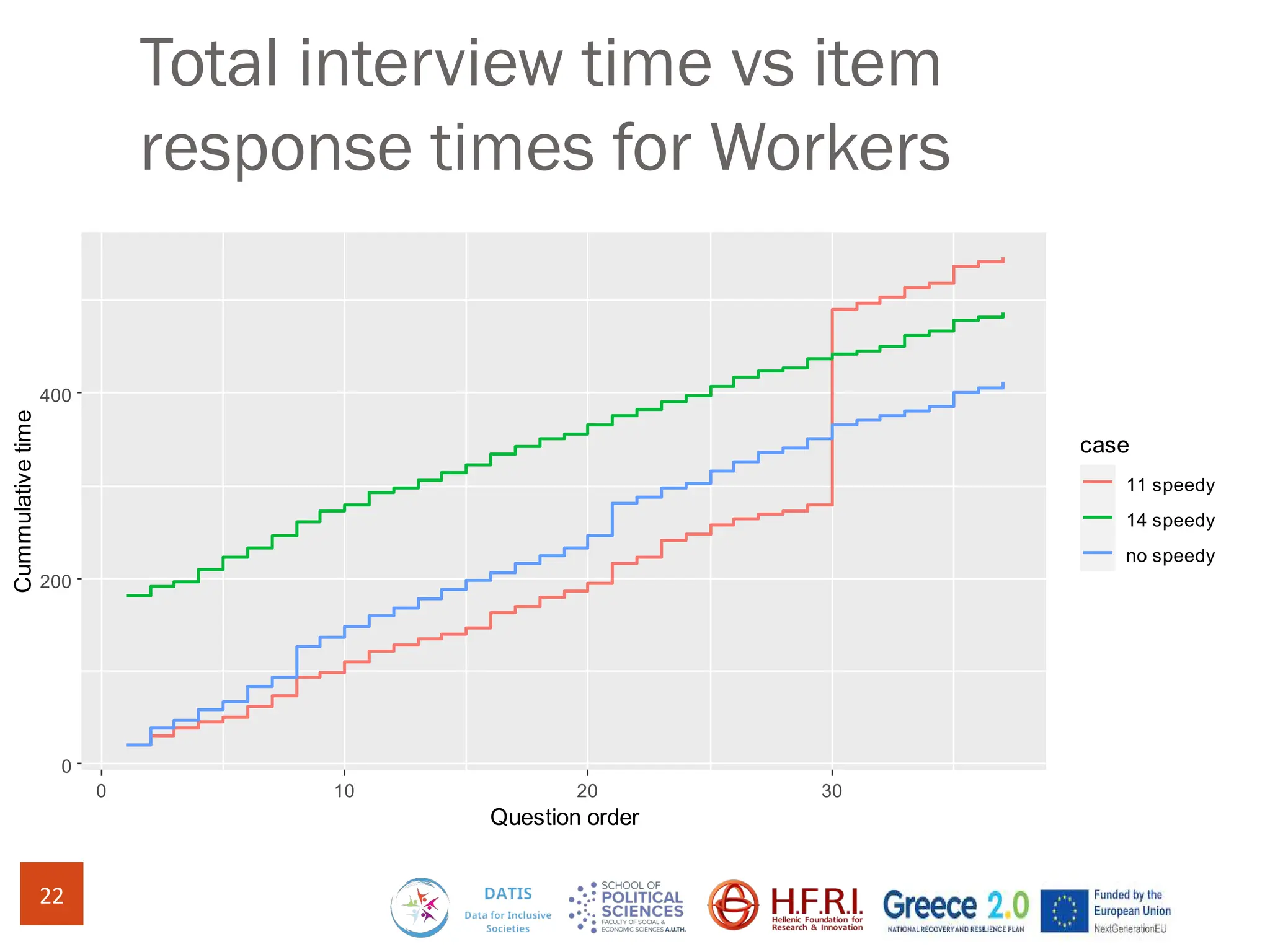

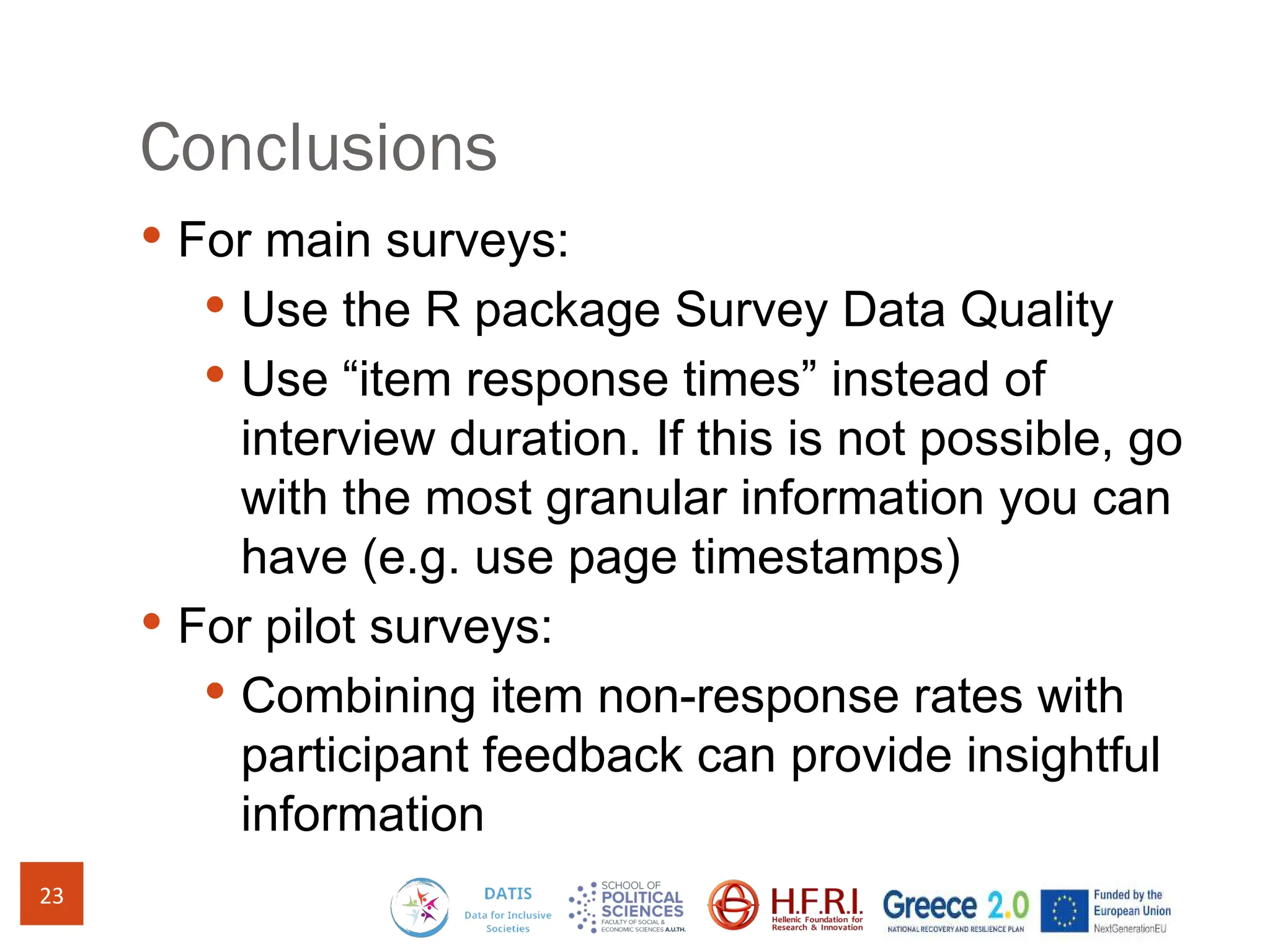

The document discusses methods for assessing data quality in two surveys conducted in Greece: the ISSP 2025 pilot survey and the ISSP 2024 main survey. The key methods are analyzing item non-response rates, participant feedback, and dropout rates to identify potential problems in the questionnaires. It also introduces an R package for addressing data quality and highlights the importance of tailoring survey questions to different respondent categories, such as self-employed individuals.
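As a rough illustration of two of the indicators named above, the following Python sketch computes per-item non-response rates and a simple dropout rate. It is not the R package mentioned in the document. The item names, the toy responses, and the function names are all hypothetical. It also assumes that a missing answer is stored as `None` and that a dropout is any respondent whose final answers are all missing.

```python
from typing import Optional

# Hypothetical toy data: one dict per respondent, None marks a missing answer.
ITEMS = ["q1", "q2", "q3", "q4"]
RESPONSES = [
    {"q1": 3, "q2": 1, "q3": 4, "q4": 2},
    {"q1": 2, "q2": None, "q3": 5, "q4": 1},
    {"q1": 4, "q2": None, "q3": None, "q4": None},
    {"q1": 1, "q2": 2, "q3": None, "q4": None},
]


def item_nonresponse_rates(rows, items):
    """Share of respondents with no answer, computed separately per item."""
    n = len(rows)
    return {item: sum(r.get(item) is None for r in rows) / n for item in items}


def dropout_point(row, items) -> Optional[str]:
    """First item of the trailing run of missing answers.

    Returns None when the last item was answered, i.e. the respondent
    reached the end of the questionnaire (assumed definition of completion).
    """
    first_missing = None
    for item in reversed(items):
        if row.get(item) is None:
            first_missing = item
        else:
            break
    return first_missing


def dropout_rate(rows, items):
    """Share of respondents who stopped answering before the last item."""
    return sum(dropout_point(r, items) is not None for r in rows) / len(rows)


if __name__ == "__main__":
    print(item_nonresponse_rates(RESPONSES, ITEMS))
    print([dropout_point(r, ITEMS) for r in RESPONSES])
    print(dropout_rate(RESPONSES, ITEMS))
```

In this simplified version, answers missing because a respondent dropped out also count toward item non-response. A real analysis would probably separate the two, for example by computing item non-response only among respondents who reached the item. Items with unusually high rates, or items where many respondents drop out, would be the candidates for closer review.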