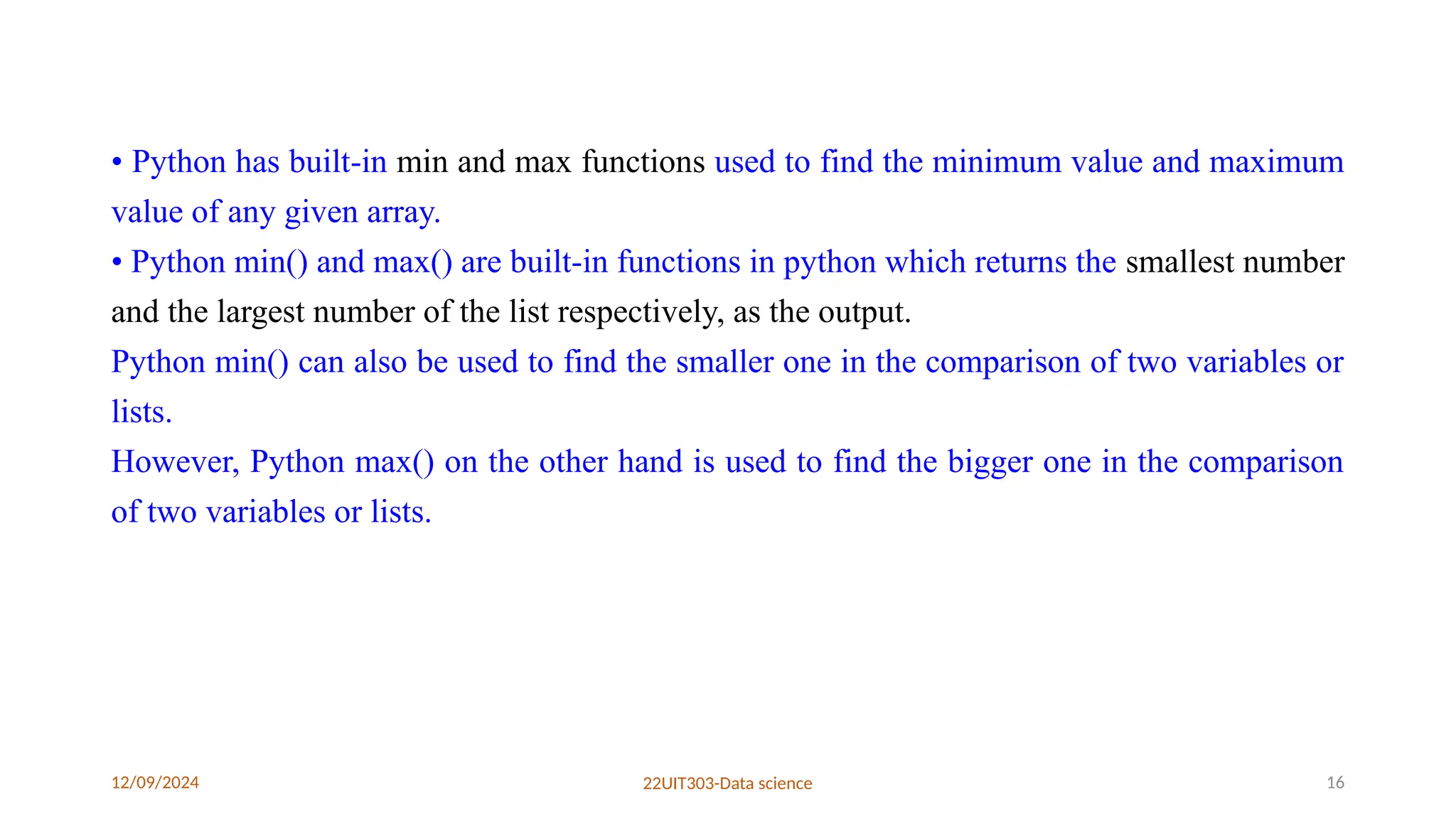

This unit covers data science essentials, focusing on data wrangling and on the Python libraries NumPy and Pandas for data manipulation. It outlines the data wrangling process (discovering, structuring, cleaning, enriching, validating, and publishing data) that prepares data for effective decision-making. It also introduces Python programming, highlighting the language's features and advantages, and shows how built-in functions perform aggregations and computations on arrays.
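The six wrangling steps can be sketched with Pandas as below. This is a minimal illustration under stated assumptions, not the course's own method: the CSV data, the column names, and the specific operation chosen for each step (for example, filling a missing value with the column mean) are hypothetical choices made for the example.

```python
import io

import pandas as pd

# Hypothetical raw data: inconsistent capitalisation and one missing temperature
RAW_CSV = """city,temp_c,date
Chennai,31,2024-12-01
Madurai,,2024-12-01
chennai,29,2024-12-02
Coimbatore,27,2024-12-02
"""

# 1. Discover: load the data and inspect its shape and column types
df = pd.read_csv(io.StringIO(RAW_CSV))
print(df.shape)
print(df.dtypes)

# 2. Structure: parse the date strings into a proper datetime column
df["date"] = pd.to_datetime(df["date"])

# 3. Clean: normalise city names and fill the missing temperature with the mean
df["city"] = df["city"].str.title()
df["temp_c"] = df["temp_c"].fillna(df["temp_c"].mean())

# 4. Enrich: derive a new column (Fahrenheit) from an existing one
df["temp_f"] = df["temp_c"] * 9 / 5 + 32

# 5. Validate: no missing values remain and temperatures are in a plausible range
assert df.notna().all().all()
assert df["temp_c"].between(-50, 60).all()

# 6. Publish: export the cleaned table (here to a CSV string instead of a file)
published = df.to_csv(index=False)
print(published)
```

In practice the validation rules and the strategy for missing values depend on the dataset; mean imputation is only one option.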

![12/09/2024 22UIT303-Data science 14

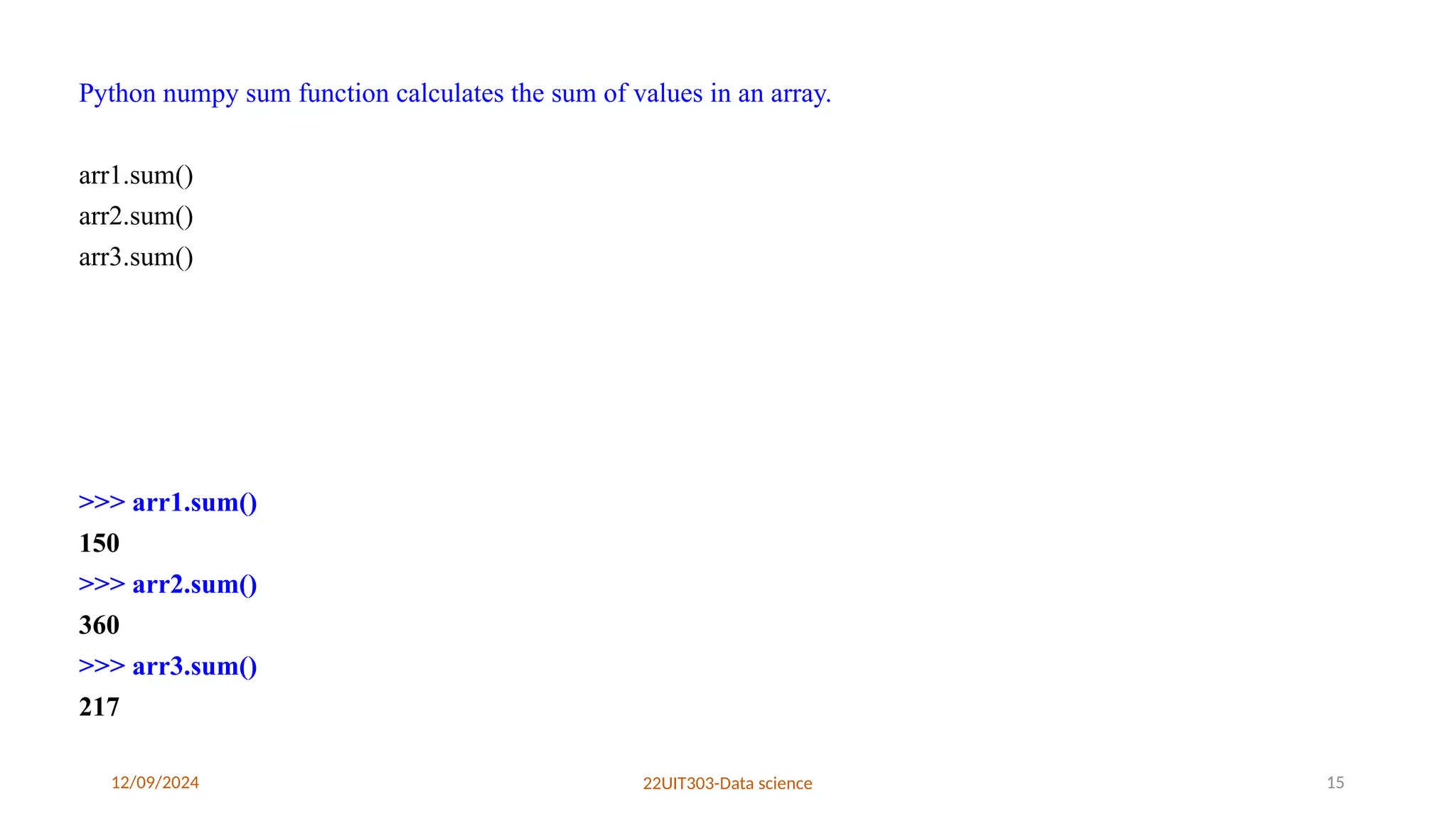

Example

>>> import numpy as np

>>> arr1 = np.array([10, 20, 30, 40, 50])

>>> arr1

array([10, 20, 30, 40, 50])

>>> arr2 = np.array([[0, 10, 20], [30, 40, 50], [60, 70, 80]])

>>> arr2

array([[0, 10, 20]

[30, 40, 50]

[60, 70, 80]])

>>> arr3 = np.array([[14, 6, 9, -12, 19, 72], [-9, 8, 22, 0, 99, -11]])

>>> array3

array([[14, 6, 9, -12, 19, 72])

[-9, 8, 22, 0, 99, -11]])](https://image.slidesharecdn.com/unit-4-241209094246-e09bf06a/75/UNIT-IV-II-IT-Python-Libraries-for-Data-Wrangling-14-2048.jpg)
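The aggregation functions mentioned in the overview can be sketched on the 2-D array `arr3` from the example above. This is a minimal illustration using standard NumPy methods (`sum`, `min`, `max`, `mean`); the choice of which aggregations to show is an assumption, not taken from the slides.

```python
import numpy as np

# Same 2-D array as in the slide example: 2 rows x 6 columns
arr3 = np.array([[14, 6, 9, -12, 19, 72], [-9, 8, 22, 0, 99, -11]])

# Aggregate over every element of the array
total = arr3.sum()      # 217
smallest = arr3.min()   # -12
largest = arr3.max()    # 99

# Aggregate along an axis:
# axis=0 collapses the rows, giving one result per column
# axis=1 collapses the columns, giving one result per row
col_sums = arr3.sum(axis=0)    # values 5, 14, 31, -12, 118, 61
row_means = arr3.mean(axis=1)  # 108/6 and 109/6

print(total, smallest, largest)
print(col_sums)
print(row_means)
```

These methods run in compiled code over the whole array, which is why they are generally preferred to looping over elements in plain Python.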