De Mod 5 Deploy Workloads With Databricks Workflows

270 views, 27 pages

This document introduces Databricks Workflows, a fully managed, cloud-based, general-purpose task orchestration service. It describes key features such as orchestrating diverse workloads across data, analytics, and AI using notebooks, SQL, and custom code. Workflows supports building reliable data and AI workflows on any cloud, with deep platform integration and proven reliability. The document presents common workflow patterns (sequence, funnel, and fan-out) along with an example workflow. It also covers creating workflow jobs with tasks and schedules, as well as monitoring, debugging, and navigating workflow runs.
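The three patterns named in the summary can be pictured as small task dependency graphs. The following is a minimal sketch in plain Python, not the Databricks API: the task names are hypothetical, and the standard-library `graphlib` module stands in for the orchestrator to show which tasks could run together at each step.

```python
from graphlib import TopologicalSorter

# Each pattern maps a task name to the set of tasks it depends on.
# All task names here are hypothetical, chosen only for illustration.
PATTERNS = {
    # Sequence: each task waits for the previous one.
    "sequence": {"bronze": set(), "silver": {"bronze"}, "gold": {"silver"}},
    # Funnel: several independent tasks feed a single downstream task.
    "funnel": {"orders": set(), "customers": set(), "joined": {"orders", "customers"}},
    # Fan-out: one upstream task feeds several independent tasks.
    "fan_out": {"ingest": set(), "report": {"ingest"}, "features": {"ingest"}},
}


def run_stages(deps):
    """Group tasks into stages; every task in a stage has all dependencies met."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        stages.append(ready)
        sorter.done(*ready)
    return stages


if __name__ == "__main__":
    for name, deps in PATTERNS.items():
        print(name, run_stages(deps))
```

In this sketch a stage with more than one task marks where an orchestrator would be free to run tasks in parallel: the first stage of the funnel and the second stage of the fan-out.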

Uploaded by Jaya Bharathi

Copyright: © All Rights Reserved

0% found this document useful (0 votes)

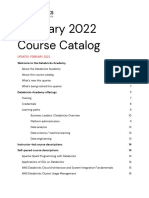

270 views27 pagesDe Mod 5 Deploy Workloads With Databricks Workflows

This document provides an introduction to Databricks Workflows, which is a fully-managed cloud-based general-purpose task orchestration service. It describes key features of Workflows such as orchestrating diverse workloads across data, analytics and AI using notebooks, SQL, and custom code. Workflows enables building reliable data and AI workflows on any cloud with deep platform integration and proven reliability. Common workflow patterns like sequence, funnel, and fan-out are presented along with an example workflow. The document also covers creating workflow jobs with tasks and schedules, as well as monitoring, debugging and navigating workflow runs.

Uploaded by

Jaya BharathiCopyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PDF, TXT or read online on Scribd

/ 27