Unit 1 Notes Wordgcr

Uploaded by

petri.successUnit 1 Notes Wordgcr

Uploaded by

petri.successAD3351 DAA Unit 1 Notes

Studocu is not sponsored or endorsed by any college or university

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.1

UNIT I INTRODUCTION

1.1 NOTION OF AN ALGORITHM

An algorithm is a sequence of unambiguous instructions for solving a problem, i.e., for

obtaining a required output for any legitimate input in a finite amount of time.

Problem to be solved

Algorithm

Input Computer Program Output

FIGURE 1.1 The notion of the algorithm.

It is a step by step procedure with the input to solve the problem in a finite amount of time

to obtain the required output.

The notion of the algorithm illustrates some important points:

The non-ambiguity requirement for each step of an algorithm cannot be compromised.

The range of inputs for which an algorithm works has to be specified carefully.

The same algorithm can be represented in several different ways.

There may exist several algorithms for solving the same problem.

Algorithms for the same problem can be based on very different ideas and can solve the

problem with dramatically different speeds.

Characteristics of an algorithm:

Input: Zero / more quantities are externally

supplied. Output: At least one quantity is produced.

Definiteness: Each instruction is clear and unambiguous.

Finiteness: If the instructions of an algorithm is traced then for all cases the algorithm must

terminates after a finite number of steps.

Efficiency: Every instruction must be very basic and runs in short time.

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.2

Steps for writing an algorithm:

1. An algorithm is a procedure. It has two parts; the first part is head and the second part is

body.

2. The Head section consists of keyword Algorithm and Name of the algorithm with

parameter list. E.g. Algorithm name1(p1, p2,…,p3)

The head section also has the following:

//Problem Description:

//Input:

//Output:

3. In the body of an algorithm various programming constructs like if, for, while and some

statements like assignments are used.

4. The compound statements may be enclosed with { and } brackets. if, for, while can be

closed by endif, endfor, endwhile respectively. Proper indention is must for block.

5. Comments are written using // at the beginning.

6. The identifier should begin by a letter and not by digit. It contains alpha numeric letters

after first letter. No need to mention data types.

7. The left arrow “←” used as assignment operator. E.g. v←10

8. Boolean operators (TRUE, FALSE), Logical operators (AND, OR, NOT) and Relational

operators (<,<=, >, >=,=, ≠, <>) are also used.

9. Input and Output can be done using read and write.

10. Array[], if then else condition, branch and loop can be also used in algorithm.

Example:

The greatest common divisor(GCD) of two nonnegative integers m and n (not-both-zero),

denoted gcd(m, n), is defined as the largest integer that divides both m and n evenly, i.e., with a

remainder of zero.

Euclid’s algorithm is based on applying repeatedly the equality gcd(m, n) = gcd(n, m mod n),

where m mod n is the remainder of the division of m by n, until m mod n is equal to 0. Since gcd(m,

0) = m, the last value of m is also the greatest common divisor of the initial m and n.

gcd(60, 24) can be computed as follows:gcd(60, 24) = gcd(24, 12) = gcd(12, 0) = 12.

Euclid’s algorithm for computing gcd(m, n) in simple steps

Step 1 If n = 0, return the value of m as the answer and stop; otherwise, proceed to Step 2.

Step 2 Divide m by n and assign the value of the remainder to r.

Step 3 Assign the value of n to m and the value of r to n. Go to Step 1.

Euclid’s algorithm for computing gcd(m, n) expressed in pseudocode

ALGORITHM Euclid_gcd(m, n)

//Computes gcd(m, n) by Euclid’s algorithm

//Input: Two nonnegative, not-both-zero integers m and n

//Output: Greatest common divisor of m and n

while n ≠ 0 do

r ←m mod n

m←n

n←r

return m

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.3

1.2 FUNDAMENTALS OF ALGORITHMIC PROBLEM SOLVING

A sequence of steps involved in designing and analyzing an algorithm is shown in the figure

below.

FIGURE 1.2 Algorithm design and analysis process.

(i) Understanding the Problem

This is the first step in designing of algorithm.

Read the problem’s description carefully to understand the problem statement completely.

Ask questions for clarifying the doubts about the problem.

Identify the problem types and use existing algorithm to find solution.

Input (instance) to the problem and range of the input get fixed.

(ii) Decision making

The Decision making is done on the following:

(a) Ascertaining the Capabilities of the Computational Device

In random-access machine (RAM), instructions are executed one after another (The

central assumption is that one operation at a time). Accordingly, algorithms

designed to be executed on such machines are called sequential algorithms.

In some newer computers, operations are executed concurrently, i.e., in parallel.

Algorithms that take advantage of this capability are called parallel algorithms.

Choice of computational devices like Processor and memory is mainly based on

space and time efficiency

(b) Choosing between Exact and Approximate Problem Solving

The next principal decision is to choose between solving the problem exactly or

solving it approximately.

An algorithm used to solve the problem exactly and produce correct result is called

an exact algorithm.

If the problem is so complex and not able to get exact solution, then we have to

choose an algorithm called an approximation algorithm. i.e., produces an

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.4

approximate answer. E.g., extracting square roots, solving nonlinear equations, and

evaluating definite integrals.

(c) Algorithm Design Techniques

An algorithm design technique (or “strategy” or “paradigm”) is a general approach

to solving problems algorithmically that is applicable to a variety of problems from

different areas of computing.

Algorithms+ Data Structures = Programs

Though Algorithms and Data Structures are independent, but they are combined

together to develop program. Hence the choice of proper data structure is required

before designing the algorithm.

Implementation of algorithm is possible only with the help of Algorithms and Data

Structures

Algorithmic strategy / technique / paradigm are a general approach by which

many problems can be solved algorithmically. E.g., Brute Force, Divide and

Conquer, Dynamic Programming, Greedy Technique and so on.

(iii) Methods of Specifying an Algorithm

There are three ways to specify an algorithm. They are:

a. Natural language

b. Pseudocode

c. Flowchart

Algorithm Specification

Natural Language Pseudocode Flowchart

FIGURE 1.3 Algorithm Specifications

Pseudocode and flowchart are the two options that are most widely used nowadays for specifying

algorithms.

a. Natural Language

It is very simple and easy to specify an algorithm using natural language. But many times specification

of algorithm by using natural language is not clear and thereby we get brief specification.

Example: An algorithm to perform addition of two numbers.

Step 1: Read the first number, say a.

Step 2: Read the first number, say b.

Step 3: Add the above two numbers and store the result in c.

Step 4: Display the result from c.

Such a specification creates difficulty while actually implementing it. Hence many programmers prefer

to have specification of algorithm by means of Pseudocode.

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.5

b. Pseudocode

Pseudocode is a mixture of a natural language and programming language constructs.

Pseudocode is usually more precise than natural language.

For Assignment operation left arrow “←”, for comments two slashes “//”,if condition, for,

while loops are used.

ALGORITHM Sum(a,b)

//Problem Description: This algorithm performs addition of two numbers

//Input: Two integers a and b

//Output: Addition of two integers

c←a+b

return c

This specification is more useful for implementation of any language.

c. Flowchart

In the earlier days of computing, the dominant method for specifying algorithms was a flowchart,

this representation technique has proved to be inconvenient.

Flowchart is a graphical representation of an algorithm. It is a a method of expressing an algorithm

by a collection of connected geometric shapes containing descriptions of the algorithm’s steps.

Symbols Example: Addition of a and b

Start Start state

Start

Transition / Assignment

Input the value of a

Processing / Input read Input and

Input the value of

Output

b

Condition / Decision c=a+b

Display the value of

Flow connectivity

c Stop

Stop Stop state

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.6

FIGURE 1.4 Flowchart symbols and Example for two integer addition.

(iv) Proving an Algorithm’s Correctness

Once an algorithm has been specified then its correctness must be proved.

An algorithm must yields a required result for every legitimate input in a finite amount of

time.

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.7

For example, the correctness of Euclid’s algorithm for computing the greatest common

divisor stems from the correctness of the equality gcd(m, n) = gcd(n, m mod n).

A common technique for proving correctness is to use mathematical induction because an

algorithm’s iterations provide a natural sequence of steps needed for such proofs.

The notion of correctness for approximation algorithms is less straightforward than it is for

exact algorithms. The error produced by the algorithm should not exceed a predefined

limit.

(v) Analyzing an Algorithm

For an algorithm the most important is efficiency. In fact, there are two kinds of algorithm

efficiency. They are:

Time efficiency, indicating how fast the algorithm runs, and

Space efficiency, indicating how much extra memory it uses.

The efficiency of an algorithm is determined by measuring both time efficiency and space

efficiency.

So factors to analyze an algorithm are:

Time efficiency of an algorithm

Space efficiency of an algorithm

Simplicity of an algorithm

Generality of an algorithm

(vi) Coding an Algorithm

The coding / implementation of an algorithm is done by a suitable programming language

like C, C++, JAVA.

The transition from an algorithm to a program can be done either incorrectly or very

inefficiently. Implementing an algorithm correctly is necessary. The Algorithm power

should not reduced by inefficient implementation.

Standard tricks like computing a loop’s invariant (an expression that does not change its

value) outside the loop, collecting common subexpressions, replacing expensive

operations by cheap ones, selection of programming language and so on should be known to

the programmer.

Typically, such improvements can speed up a program only by a constant factor, whereas a

better algorithm can make a difference in running time by orders of magnitude. But once

an algorithm is selected, a 10–50% speedup may be worth an effort.

It is very essential to write an optimized code (efficient code) to reduce the burden of

compiler.

1.3 IMPORTANT PROBLEM TYPES

The most important problem types are:

(i). Sorting

(ii). Searching

(iii). String processing

(iv). Graph problems

(v). Combinatorial problems

(vi). Geometric problems

(vii). Numerical problems

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.8

(i) Sorting

The sorting problem is to rearrange the items of a given list in nondecreasing (ascending)

order.

Sorting can be done on numbers, characters, strings or records.

To sort student records in alphabetical order of names or by student number or by student

grade-point average. Such a specially chosen piece of information is called a key.

An algorithm is said to be in-place if it does not require extra memory, E.g., Quick sort.

A sorting algorithm is called stable if it preserves the relative order of any two equal

elements in its input.

(ii) Searching

The searching problem deals with finding a given value, called a search key, in a given set.

E.g., Ordinary Linear search and fast binary search.

(iii) String processing

A string is a sequence of characters from an alphabet.

Strings comprise letters, numbers, and special characters; bit strings, which comprise zeros

and ones; and gene sequences, which can be modeled by strings of characters from the four-

character alphabet {A, C, G, T}. It is very useful in bioinformatics.

Searching for a given word in a text is called string matching

(iv) Graph problems

A graph is a collection of points called vertices, some of which are connected by line

segments called edges.

Some of the graph problems are graph traversal, shortest path algorithm, topological sort,

traveling salesman problem and the graph-coloring problem and so on.

(v) Combinatorial problems

These are problems that ask, explicitly or implicitly, to find a combinatorial object such as a

permutation, a combination, or a subset that satisfies certain constraints.

A desired combinatorial object may also be required to have some additional property such

s a maximum value or a minimum cost.

In practical, the combinatorial problems are the most difficult problems in computing.

The traveling salesman problem and the graph coloring problem are examples of

combinatorial problems.

(vi) Geometric problems

Geometric algorithms deal with geometric objects such as points, lines, and polygons.

Geometric algorithms are used in computer graphics, robotics, and tomography.

The closest-pair problem and the convex-hull problem are comes under this category.

(vii) Numerical problems

Numerical problems are problems that involve mathematical equations, systems of

equations, computing definite integrals, evaluating functions, and so on.

The majority of such mathematical problems can be solved only approximately.

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.9

1.4 FUNDAMENTALS OF THE ANALYSIS OF ALGORITHM EFFICIENCY

The efficiency of an algorithm can be in terms of time and space. The algorithm efficiency

can be analyzed by the following ways.

a. Analysis Framework.

b. Asymptotic Notations and its properties.

c. Mathematical analysis for Recursive algorithms.

d. Mathematical analysis for Non-recursive algorithms.

1.5 Analysis Framework

There are two kinds of efficiencies to analyze the efficiency of any algorithm. They are:

Time efficiency, indicating how fast the algorithm runs, and

Space efficiency, indicating how much extra memory it uses.

The algorithm analysis framework consists of the following:

Measuring an Input’s Size

Units for Measuring Running Time

Orders of Growth

Worst-Case, Best-Case, and Average-Case Efficiencies

(i) Measuring an Input’s Size

An algorithm’s efficiency is defined as a function of some parameter n indicating the

algorithm’s input size. In most cases, selecting such a parameter is quite straightforward.

For example, it will be the size of the list for problems of sorting, searching.

For the problem of evaluating a polynomial p(x) = anxn + . . . + a0 of degree n, the size of

the parameter will be the polynomial’s degree or the number of its coefficients, which is

larger by 1 than its degree.

In computing the product of two n × n matrices, the choice of a parameter indicating an

input size does matter.

Consider a spell-checking algorithm. If the algorithm examines individual characters of its

input, then the size is measured by the number of characters.

In measuring input size for algorithms solving problems such as checking primality of a

positive integer n. the input is just one number.

The input size by the number b of bits in the n’s binary representation is b=(log2 n)+1.

(ii) Units for Measuring Running Time

Some standard unit of time measurement such as a second, or millisecond, and so on can be

used to measure the running time of a program after implementing the algorithm.

Drawbacks

Dependence on the speed of a particular computer.

Dependence on the quality of a program implementing the algorithm.

The compiler used in generating the machine code.

The difficulty of clocking the actual running time of the program.

So, we need metric to measure an algorithm’s efficiency that does not depend on these

extraneous factors.

One possible approach is to count the number of times each of the algorithm’s operations

is executed. This approach is excessively difficult.

The most important operation (+, -, *, /) of the algorithm, called the basic operation.

Computing the number of times the basic operation is executed is easy. The total running time is

determined by basic operations count.

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.10

(iii) Orders of Growth

A difference in running times on small inputs is not what really distinguishes efficient

algorithms from inefficient ones.

For example, the greatest common divisor of two small numbers, it is not immediately clear

how much more efficient Euclid’s algorithm is compared to the other algorithms, the

difference in algorithm efficiencies becomes clear for larger numbers only.

For large values of n, it is the function’s order of growth that counts just like the Table 1.1,

which contains values of a few functions particularly important for analysis of algorithms.

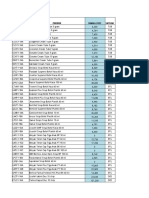

TABLE 1.1 Values (approximate) of several functions important for analysis of algorithms

n √𝑛 log2n n n log2n n2 n3 2n n!

1 1 0 1 0 1 1 2 1

2 1.4 1 2 2 4 4 4 2

4 2 2 4 8 16 64 16 24

8 2.8 3 8 2.4•101 64 5.1•102 2.6•102 4.0•104

10 3.2 3.3 10 3.3•101 102 103 103 3.6•106

16 4 4 16 6.4•101 2.6•102 4.1•103 6.5•104 2.1•1013

10 2 10 6.6 10 2

6.6•102 10 4

10 6

1.3•1030 9.3•10157

10 3 31 10 10 3

1.0•10 4

10 6

10 9

104 102 13 104 1.3•105 108 Very big

3.2•102 1012

105 17 105 1.7•106 computation

1010 1015

106 103 20 106 2.0•107 1012 1018

(iv) Worst-Case, Best-Case, and Average-Case Efficiencies

Consider Sequential Search algorithm some search key K

ALGORITHM SequentialSearch(A[0..n - 1], K)

//Searches for a given value in a given array by sequential search

//Input: An array A[0..n - 1] and a search key K

//Output: The index of the first element in A that matches K or -1 if there are no

// matching elements

i ←0

while i < n and A[i] ≠ K do

i ←i + 1

if i < n return

i else return -1

Clearly, the running time of this algorithm can be quite different for the same list size n.

In the worst case, there is no matching of elements or the first matching element can found

at last on the list. In the best case, there is matching of elements at first on the list.

Worst-case efficiency

The worst-case efficiency of an algorithm is its efficiency for the worst case input of size n.

The algorithm runs the longest among all possible inputs of that size.

For the input of size n, the running time is Cworst(n) = n.

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.11

Best case efficiency

The best-case efficiency of an algorithm is its efficiency for the best case input of size n.

The algorithm runs the fastest among all possible inputs of that size n.

In sequential search, If we search a first element in list of size n. (i.e. first element equal to

a search key), then the running time is Cbest(n) = 1

Average case efficiency

The Average case efficiency lies between best case and worst case.

To analyze the algorithm’s average case efficiency, we must make some assumptions about

possible inputs of size n.

The standard assumptions are that

o The probability of a successful search is equal to p (0 ≤ p ≤ 1) and

o The probability of the first match occurring in the ith position of the list is the same

for every i.

Yet another type of efficiency is called amortized efficiency. It applies not to a single run of

an algorithm but rather to a sequence of operations performed on the same data structure.

1.6 ASYMPTOTIC NOTATIONS AND ITS PROPERTIES

Asymptotic notation is a notation, which is used to take meaningful statement about the

efficiency of a program.

The efficiency analysis framework concentrates on the order of growth of an algorithm’s

basic operation count as the principal indicator of the algorithm’s efficiency.

To compare and rank such orders of growth, computer scientists use three notations, they

are:

O - Big oh notation

Ω - Big omega notation

Θ - Big theta notation

Let t(n) and g(n) can be any nonnegative functions defined on the set of natural numbers.

The algorithm’s running time t(n) usually indicated by its basic operation count C(n), and g(n),

some simple function to compare with the count.

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.12

A function t(n) is said to be in O(g(n)), denoted 𝑡 (𝑛) ∈ 𝑂(𝑔(𝑛)), if t (n) is bounded

(i) O - Big oh notation

above by some constant multiple of g(n) for all large n, i.e., if there exist some positive constant c

𝑡 (𝑛) ≤ 𝑐(𝑛) fo𝑟 𝑎𝑙𝑙 𝑛 ≥ 𝑛0.

and some nonnegative integer n0 such that

Where t(n) and g(n) are nonnegative functions defined on the set of natural numbers.

O = Asymptotic upper bound = Useful for worst case analysis = Loose bound

FIGURE 1.5 Big-oh notation: 𝑡 (𝑛) ∈ (𝑔(𝑛)).

Example 2: Prove the assertions 100𝑛 + 5 ∈ (𝑛2).

Proof: 100n + 5 ≤ 100n + n (for all n ≥ 5)

= 101n

≤ 101n2 (Ӭ 𝑛 ≤ 𝑛2)

Since, the definition gives us a lot of freedom in choosing specific values for constants c

Example 3: Prove the assertions 100𝑛 + 5 ∈ (𝑛).

and n0. We have c=101 and n0=5

Proof: 100n + 5 ≤ 100n + 5n (for all n ≥ 1)

= 105n

i.e., 100n + 5 ≤

105n i.e., t(n) ≤

cg(n)

±100𝑛 + 5 ∈ (𝑛) with c=105 and n0=1

A function t(n) is said to be in Ω(g(n)), denoted t(n) ∈ Ω(g(n)), if t(n) is bounded below by

(ii) Ω - Big omega notation

some positive constant multiple of g(n) for all large n, i.e., if there exist some positive constant c

and some nonnegative integer n0 such that

t (n) ≥ cg(n) for all n ≥ n0.

Where t(n) and g(n) are nonnegative functions defined on the set of natural numbers.

Ω = Asymptotic lower bound = Useful for best case analysis = Loose bound

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.13

FIGURE 1.6 Big-omega notation: t (n) ∈ Ω (g(n)).

Example 4: Prove the assertions n3+10n2+4n+2 ∈ Ω (n2).

Proof: n3+10n2+4n+2 ≥ n2 (for all n ≥ 0)

i.e., by definition t(n) ≥ cg(n), where c=1 and n0=0

A function t(n) is said to be in Θ(g(n)), denoted t(n) ∈ Θ(g(n)), if t(n) is bounded both

(iii) Θ - Big theta notation

above and below by some positive constant multiples of g(n) for all large n, i.e., if there exist some

positive constants c1 and c2 and some nonnegative integer n0 such that

c2g(n) ≤ t (n) ≤ c1g(n) for all n ≥ n0.

Where t(n) and g(n) are nonnegative functions defined on the set of natural numbers.

Θ = Asymptotic tight bound = Useful for average case analysis

FIGURE 1.7 Big-theta notation: t (n) ∈ Θ(g(n)).

Prove the assertions 𝑛 (𝑛 − 1) ∈ Θ(𝑛2).

1

Example 5:

2

1 𝑛 (𝑛 − 1) = 1 𝑛2 − 1 𝑛 ≤ 1 𝑛2 for all n ≥ 0.

Proof: First prove the right inequality (the upper bound):

2 2 2 2

1 𝑛 (𝑛 − 1) = 1 𝑛2 − 1 𝑛 ≥ 1 𝑛2 − [1 𝑛] [1 𝑛] for all n ≥ 2.

Second, we prove the left inequality (the lower bound):

2 2 2 2 2 2

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.14

Useful Property Involving the Asymptotic Notations

The following property, in particular, is useful in analyzing algorithms that comprise two

consecutively executed parts.

THEOREM: If t1(n) ∈ O(g1(n)) and t2(n) ∈ O(g2(n)), then t1(n) + t2(n) ∈ O(max{g1(n),

g2(n)}). (The analogous assertions are true for the Ω and Θ notations as well.)

PROOF: The proof extends to orders of growth the following simple fact about four arbitrary real

Since t1(n) ∈ O(g1(n)), there exist some positive constant c1 and some nonnegative integer

numbers a1, b1, a2, b2: if a1 ≤ b1 and a2 ≤ b2, then a1 + a2 ≤ 2 max{b1, b2}.

n1 such that

Similarly, since t2(n) ∈ O(g2(n)),

t1(n) ≤ c1g1(n) for all n ≥ n1.

t2(n) ≤ c2g2(n) for all n ≥ n2.

Let us denote c3 = max{c1, c2} and consider n ≥ max{n1, n2} so that we can use

both inequalities. Adding them yields the following:

t1(n) + t2(n) ≤ c1g1(n) + c2g2(n)

≤ c3g1(n) + c3g2(n)

= c3[g1(n) + g2(n)]

≤ c32 max{g1(n), g2(n)}.

Hence, t1(n) + t2(n) ∈ O(max{g1(n), g2(n)}), with the constants c and n0 required by

the definition O being 2c3 = 2 max{c1, c2} and max{n1, n2}, respectively.

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.15

1.7 MATHEMATICAL ANALYSIS FOR RECURSIVE ALGORITHMS

1.8 General Plan for Analyzing the Time Efficiency of Recursive Algorithms

1. Decide on a parameter (or parameters) indicating an input’s size.

2. Identify the algorithm’s basic operation.

3. Check whether the number of times the basic operation is executed can vary on different

inputs of the same size; if it can, the worst-case, average-case, and best-case efficiencies

must be investigated separately.

4. Set up a recurrence relation, with an appropriate initial condition, for the number of times

the basic operation is executed.

5. Solve the recurrence or, at least, ascertain the order of growth of its solution.

EXAMPLE 1: Compute the factorial function F(n) = n! for an arbitrary nonnegative integer n.

Since n!= 1•. . . . • (n − 1) • n = (n − 1)! • n, for n ≥ 1 and 0!= 1 by definition, we can compute

F(n) = F(n − 1) • n with the following recursive algorithm. (ND 2015)

ALGORITHM F(n)

//Computes n! recursively

//Input: A nonnegative integer n

//Output: The value of n!

if n = 0 return 1

else return F(n − 1) * n

Algorithm analysis

For simplicity, we consider n itself as an indicator of this algorithm’s input size. i.e. 1.

The basic operation of the algorithm is multiplication, whose number of executions we

denote M(n). Since the function F(n) is computed according to the formula F(n) = F(n

−1)•n for n > 0.

The number of multiplications M(n) needed to compute it must satisfy the equality

M(n) = M(n − 1) + 1 for n >

0, M(0) = 0 for n = 0.

Method of backward substitutions

M(n) = M(n − 1) + 1 substitute M(n − 1) = M(n − 2) + 1

= [M(n − 2) + 1]+ 1

= M(n − 2) + 2 substitute M(n − 2) = M(n − 3) + 1

= [M(n − 3) + 1]+ 2

= M(n − 3) + 3

…

= M(n − i) + i

…

= M(n − n) + n

= n.

Therefore M(n)=n

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.16

EXAMPLE 2: consider educational workhorse of recursive algorithms: the Tower of Hanoi

puzzle. We have n disks of different sizes that can slide onto any of three pegs. Consider A

(source), B (auxiliary), and C (Destination). Initially, all the disks are on the first peg in order of

size, the largest on the bottom and the smallest on top. The goal is to move all the disks to the third

peg, using the second one as an auxiliary.

FIGURE 1.8 Recursive solution to the Tower of Hanoi puzzle.

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.17

ALGORITHM TOH(n, A, C, B)

//Move disks from source to destination recursively

//Input: n disks and 3 pegs A, B, and C

//Output: Disks moved to destination as in the source order.

if n=1

Move nth disk directly from A to C

else

TOH(n - 1, A, B, C) // Move top n-1 disks from A to B using C

Move nth disk directly from A to C

TOH(n - 1, B, C, A) // Move top n-1 disks from B to C using A

Algorithm analysis

The number of moves M(n) depends on n only, and we get the following recurrence

equation for it:

M(n) = M(n − 1) + 1+ M(n − 1) for n > 1.

With the obvious initial condition M(1) = 1, we have the following recurrence relation for the

number of moves M(n):

M(n) = 2M(n − 1) + 1 for n > 1,

M(1) = 1.

We solve this recurrence by the same method of backward substitutions:

M(n) = 2M(n − 1) + 1 sub. M(n − 1) = 2M(n − 2) + 1

= 2[2M(n − 2) + 1]+ 1

= 22M(n − 2) + 2 + 1 sub. M(n − 2) = 2M(n − 3) + 1

2

= 2 [2M(n − 3) + 1]+ 2 + 1

= 23M(n − 3) + 22 + 2 + 1 sub. M(n − 3) = 2M(n − 4) + 1

4 3 2

= 2 M(n − 4) + 2 + 2 + 2 + 1

…

= 2iM(n − i) + 2i−1 + 2i−2 + . . . + 2 + 1= 2iM(n − i) + 2i − 1.

…

Since the initial condition is specified for n = 1, which is achieved for i = n − 1,

M(n) = 2n−1M(n − (n − 1)) + 2n−1 – 1 = 2n−1M(1) + 2n−1 − 1= 2n−1 + 2n−1 − 1= 2n − 1.

Thus, we have an exponential time algorithm

EXAMPLE 3: An investigation of a recursive version of the algorithm which finds the number of

binary digits in the binary representation of a positive decimal integer.

ALGORITHM BinRec(n)

//Input: A positive decimal integer n

//Output: The number of binary digits in n’s binary representation

else return BinRec( ہn/2])+ 1

if n = 1 return 1

The number of additions made in computing BinRec(ہn/2]) is A(ہn/2]), plus one more

Algorithm analysis

A(n) = A(ہn/2]) + 1 for n > 1.

addition is made by the algorithm to increase the returned value by 1. This leads to the recurrence

Since the recursive calls end when n is equal to 1 and there are no additions made

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.18

then, the initial condition is A(1) = 0.

The standard approach to solving such a recurrence is to solve it only for n = 2k

A(2k) = A(2k−1) + 1 for k > 0,

A(20) = 0.

backward substitutions

A(2k) = A(2k−1) + 1 substitute A(2k−1) = A(2k−2) + 1

= [A(2k−2) + 1]+ 1= A(2k−2) + 2 substitute A(2k−2) = A(2k−3) + 1

= [A(2k−3) + 1]+ 2 = A(2k−3) + 3 ...

...

= A(2k−i) + i

...

= A(2k−k) + k.

Thus, we end up with A(2k) = A(1) + k = k, or, after returning to the original variable n = 2k and

hence k = log2 n,

A(n) = log2 n ϵ Θ (log2 n).

1.9 MATHEMATICAL ANALYSIS FOR NON-RECURSIVE ALGORITHMS

General Plan for Analyzing the Time Efficiency of Nonrecursive Algorithms

1. Decide on a parameter (or parameters) indicating an input’s size.

2. Identify the algorithm’s basic operation (in the innermost loop).

3. Check whether the number of times the basic operation is executed depends only on the size

of an input. If it also depends on some additional property, the worst-case, average-case,

and, if necessary, best-case efficiencies have to be investigated separately.

4. Set up a sum expressing the number of times the algorithm’s basic operation is executed.

5. Using standard formulas and rules of sum manipulation either find a closed form formula

for the count or at the least, establish its order of growth.

EXAMPLE 1: Consider the problem of finding the value of the largest element in a list of n

numbers. Assume that the list is implemented as an array for simplicity.

ALGORITHM MaxElement(A[0..n − 1])

//Determines the value of the largest element in a given array

//Input: An array A[0..n − 1] of real numbers

//Output: The value of the largest element in A

maxval ←A[0]

for i ←1 to n − 1 do

if A[i]>maxval

maxval←A[i]

return maxval

Algorithm analysis

The measure of an input’s size here is the number of elements in the array, i.e., n.

There are two operations in the for loop’s body:

o The comparison A[i]> maxval and

o The assignment maxval←A[i].

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.19

The comparison operation is considered as the algorithm’s basic operation, because the

comparison is executed on each repetition of the loop and not the assignment.

The number of comparisons will be the same for all arrays of size n; therefore, there is no

need to distinguish among the worst, average, and best cases here.

Let C(n) denotes the number of times this comparison is executed. The algorithm makes

one comparison on each execution of the loop, which is repeated for each value of the

loop’s variable i within the bounds 1 and n − 1, inclusive. Therefore, the sum for C(n) is

calculated as follows:

𝑛−1

(𝑛) = ∑ 1

i=1

i.e., Sum up 1 in repeated n-1 times

(𝑛) = 𝑛 − 1

EXAMPLE 2: Consider the element uniqueness problem: check whether all the Elements in a given

array of n elements are distinct.

ALGORITHM UniqueElements(A[0..n − 1])

//Determines whether all the elements in a given array are distinct

//Input: An array A[0..n − 1]

//Output: Returns “true” if all the elements in A are distinct and “false” otherwise

for i ←0 to n − 2 do

for j ←i + 1 to n − 1 do

if A[i]= A[j ] return false

return true

Algorithm

analysis

The natural measure of the input’s size here is again n (the number of elements in the array).

Since the innermost loop contains a single operation (the comparison of two elements), we

should consider it as the algorithm’s basic operation.

The number of element comparisons depends not only on n but also on whether there are

equal elements in the array and, if there are, which array positions they occupy. We will

limit our investigation to the worst case only.

One comparison is made for each repetition of the innermost loop, i.e., for each value of the

loop variable j between its limits i + 1 and n − 1; this is repeated for each value of the outer

loop, i.e., for each value of the loop variable i between its limits 0 and n − 2.

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.20

EXAMPLE 3: Consider matrix multiplication. Given two n × n matrices A and B, find the time

efficiency of the definition-based algorithm for computing their product C = AB. By definition, C

is an n × n matrix whose elements are computed as the scalar (dot) products of the rows of matrix

A and the columns of matrix B:

where C[i, j ]= A[i, 0]B[0, j]+ . . . + A[i, k]B[k, j]+ . . . + A[i, n − 1]B[n − 1, j] for every pair of

indices 0 ≤ i, j ≤ n − 1.

ALGORITHM MatrixMultiplication(A[0..n − 1, 0..n − 1], B[0..n − 1, 0..n − 1])

//Multiplies two square matrices of order n by the definition-based algorithm

//Input: Two n × n matrices A and B

//Output: Matrix C = AB

for i ←0 to n − 1 do

for j ←0 to n − 1 do

C[i, j ]←0.0

C[i, j ]←C[i, j ]+ A[i, k] ∗ B[k, j]

for k←0 to n − 1 do

return C

Algorithm analysis

An input’s size is matrix order n.

There are two arithmetical operations (multiplication and addition) in the innermost loop.

But we consider multiplication as the basic operation.

Let us set up a sum for the total number of multiplications M(n) executed by the algorithm.

Since this count depends only on the size of the input matrices, we do not have to

investigate the worst-case, average-case, and best-case efficiencies separately.

There is just one multiplication executed on each repetition of the algorithm’s innermost

loop, which is governed by the variable k ranging from the lower bound 0 to the upper

bound n − 1.

Therefore, the number of multiplications made for every pair of specific values of variables

i and j is

The total number of multiplications M(n) is expressed by the following triple sum:

Now, we can compute this sum by using formula (S1) and rule (R1)

.

The running time of the algorithm on a particular machine m, we can do it by the product

Downloaded by Judith Petrizia

AD3351 Design and Analysis of Algorithms Unit I 1.21

EXAMPLE 4 The following algorithm finds the number of binary digits in the binary

representation of a positive decimal integer.

ALGORITHM Binary(n)

//Input: A positive decimal integer n

//Output: The number of binary digits in n’s binary representation

count ←1

while n > 1 do

n←𝖫n/2⏌

count ←count + 1

return count

Algorithm analysis

An input’s size is n.

The loop variable takes on only a few values between its lower and upper limits.

Since the value of n is about halved on each repetition of the loop, the answer should be

about log2 n.

The comparison > 1 will be executed is actually 𝖫 log2 n ⏌ + 1.

The exact formula for the number of times.

1.9 EMPIRICAL ANALYSIS OF ALGORITHMS

General Plan for the Empirical Analysis of Algorithm Time Efficiency

1. Understand the experiment’s purpose.

2. Decide on the efficiency metric M to be measured and the measurement

unit (an operation count vs. a time unit).

3. Decide on characteristics of the input sample (its range, size, and so on).

4. Prepare a program implementing the algorithm (or algorithms) for the experimentation.

5. Generate a sample of inputs.

6. Run the algorithm (or algorithms) on the sample’s inputs and record the data observed.

7. Analyze the data obtained.

1.10 ALGORITHM VISUALIZATION

Algorithm Visualization can be defined as the use of images to convey some useful

information about algorithms. That information can be a visual illustration of an algorithm’s

operation, of its performance on different kinds of inputs, or of its execution speed versus that

of other algorithms for the same problem.

To accomplish this goal, an algorithm visualization uses graphic elements—points, line

segments, two- or three-dimensional bars, and so on—to represent some “interesting events”

in the algorithm’s operation.

There are two principal variations of algorithm visualization:

Static algorithm visualization

Dynamic algorithm visualization, also called algorithm animation

Static algorithm visualization shows an algorithm’s progress through a series of still images.

Algorithm animation, on the other hand, shows a continuous, movie-like presentation of an

algorithm’s operations.

Downloaded by Judith Petrizia

You might also like

- Unit I Introduction: CS640 2 - Design and Analysis of Algorithms - Unit I - 1.1No ratings yetUnit I Introduction: CS640 2 - Design and Analysis of Algorithms - Unit I - 1.120 pages

- Design and Analysis of Algorithms Dr. N. Subhash Chandra: Course ObjectivesNo ratings yetDesign and Analysis of Algorithms Dr. N. Subhash Chandra: Course Objectives137 pages

- Objective: Analysis and Design of AlgorithmsNo ratings yetObjective: Analysis and Design of Algorithms46 pages

- Machine (RAM), Instructions Are Executed One After Another (The CentralNo ratings yetMachine (RAM), Instructions Are Executed One After Another (The Central3 pages

- ADA - BCS401 MOD 1 (Simplified Notes) @vtunetwork100% (2)ADA - BCS401 MOD 1 (Simplified Notes) @vtunetwork24 pages

- DAA UE19CS251 Unit1 Class1 V2 20210109205250No ratings yetDAA UE19CS251 Unit1 Class1 V2 2021010920525023 pages

- Design and Analysis of Algorithm Question Bank For Anna UniversityNo ratings yetDesign and Analysis of Algorithm Question Bank For Anna University21 pages

- International Journal of Antennas and Propagation - 2024 - Prithivirajan - A Compact Highly Isolated Four Element AntennaNo ratings yetInternational Journal of Antennas and Propagation - 2024 - Prithivirajan - A Compact Highly Isolated Four Element Antenna12 pages

- Melancholy in The Medieval World The Christian, Jewish, and Muslim TraditionsNo ratings yetMelancholy in The Medieval World The Christian, Jewish, and Muslim Traditions19 pages

- Biology Form 5 Chapter 2 Subtopic 2.3 Main Organ For TranspirationNo ratings yetBiology Form 5 Chapter 2 Subtopic 2.3 Main Organ For Transpiration16 pages

- Brine Shrimp (Artemia) : Conditions Needed To Survive (Habitat)No ratings yetBrine Shrimp (Artemia) : Conditions Needed To Survive (Habitat)3 pages

- Soft Drink Industry Profitability AnalysisNo ratings yetSoft Drink Industry Profitability Analysis3 pages

- Ranjan Kumar Jaiswal Kotak Mahindra BankNo ratings yetRanjan Kumar Jaiswal Kotak Mahindra Bank6 pages

- Perhitungan Tugas Besar Geometri Jalan Raya (Andre Gunawan 1622201019)No ratings yetPerhitungan Tugas Besar Geometri Jalan Raya (Andre Gunawan 1622201019)77 pages

- Coax Data Sheet - Coaxial Valve: Type MK 15 FK 15No ratings yetCoax Data Sheet - Coaxial Valve: Type MK 15 FK 152 pages

- MCQ Bank For Promotion Test - UDC LDC Assistant DEO DPS Associate StenoNo ratings yetMCQ Bank For Promotion Test - UDC LDC Assistant DEO DPS Associate Steno354 pages

- Reisetter, Matt - Reisetter For Iowa House - 1631 - DR2 - SummaryNo ratings yetReisetter, Matt - Reisetter For Iowa House - 1631 - DR2 - Summary1 page