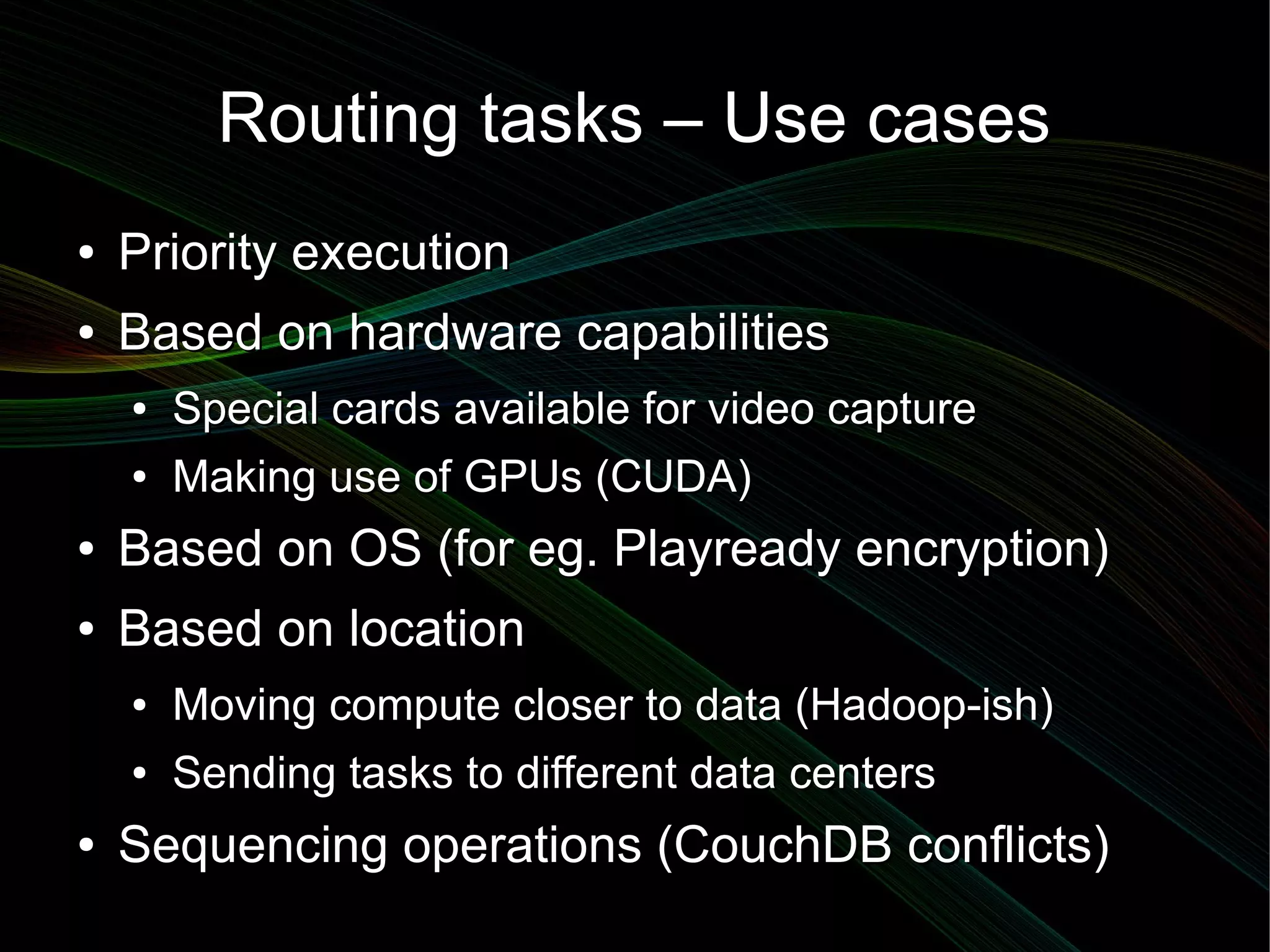

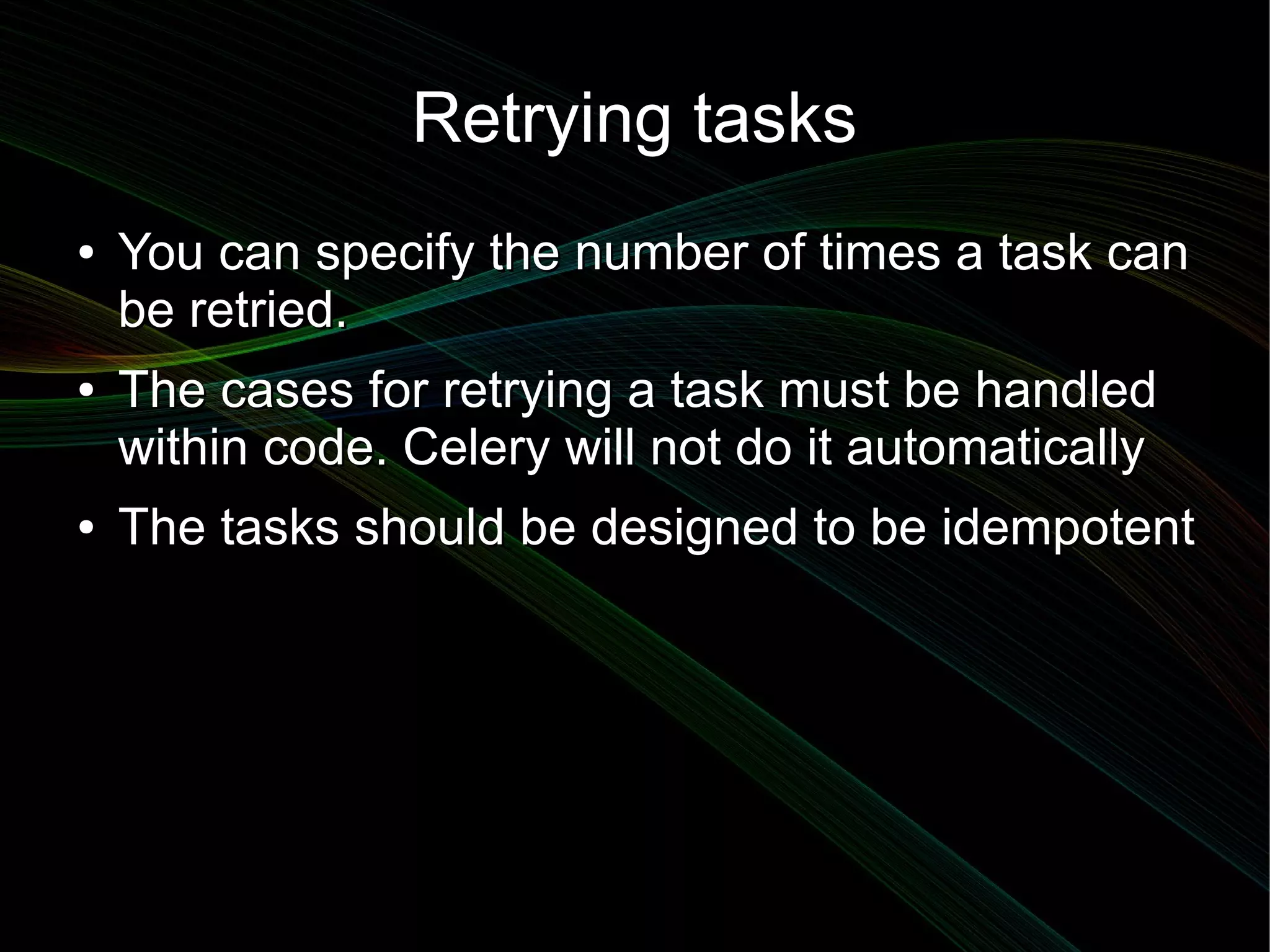

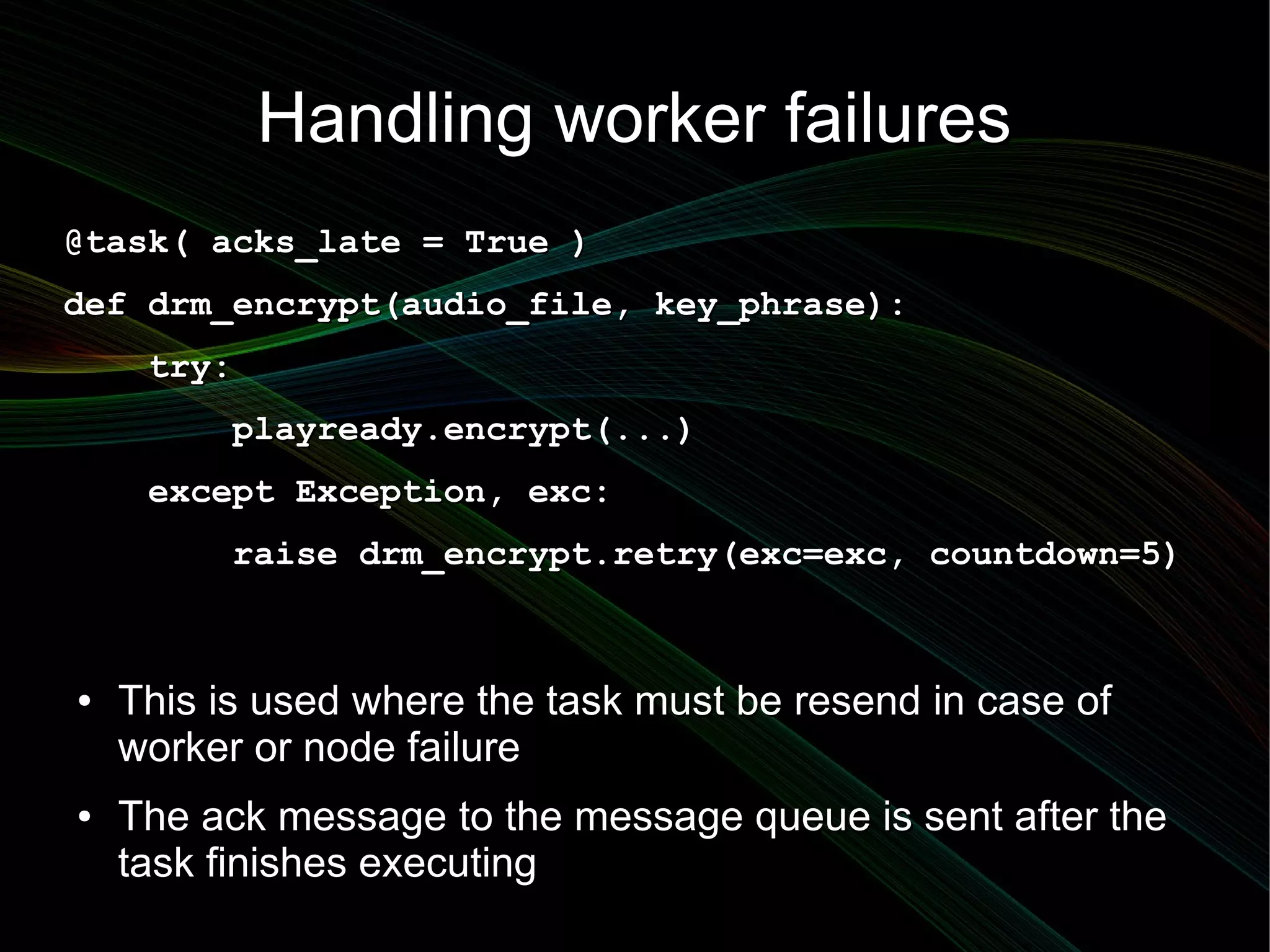

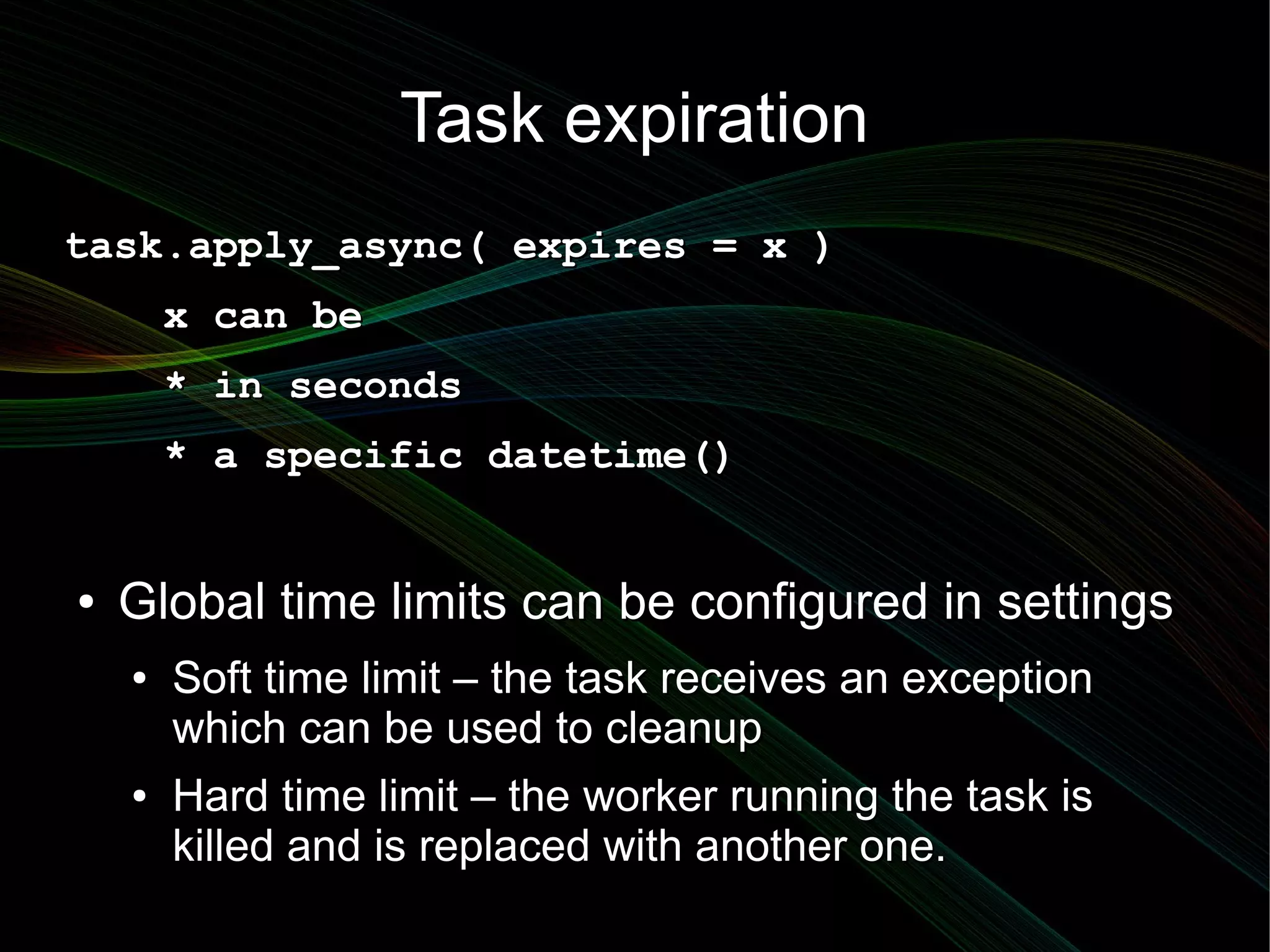

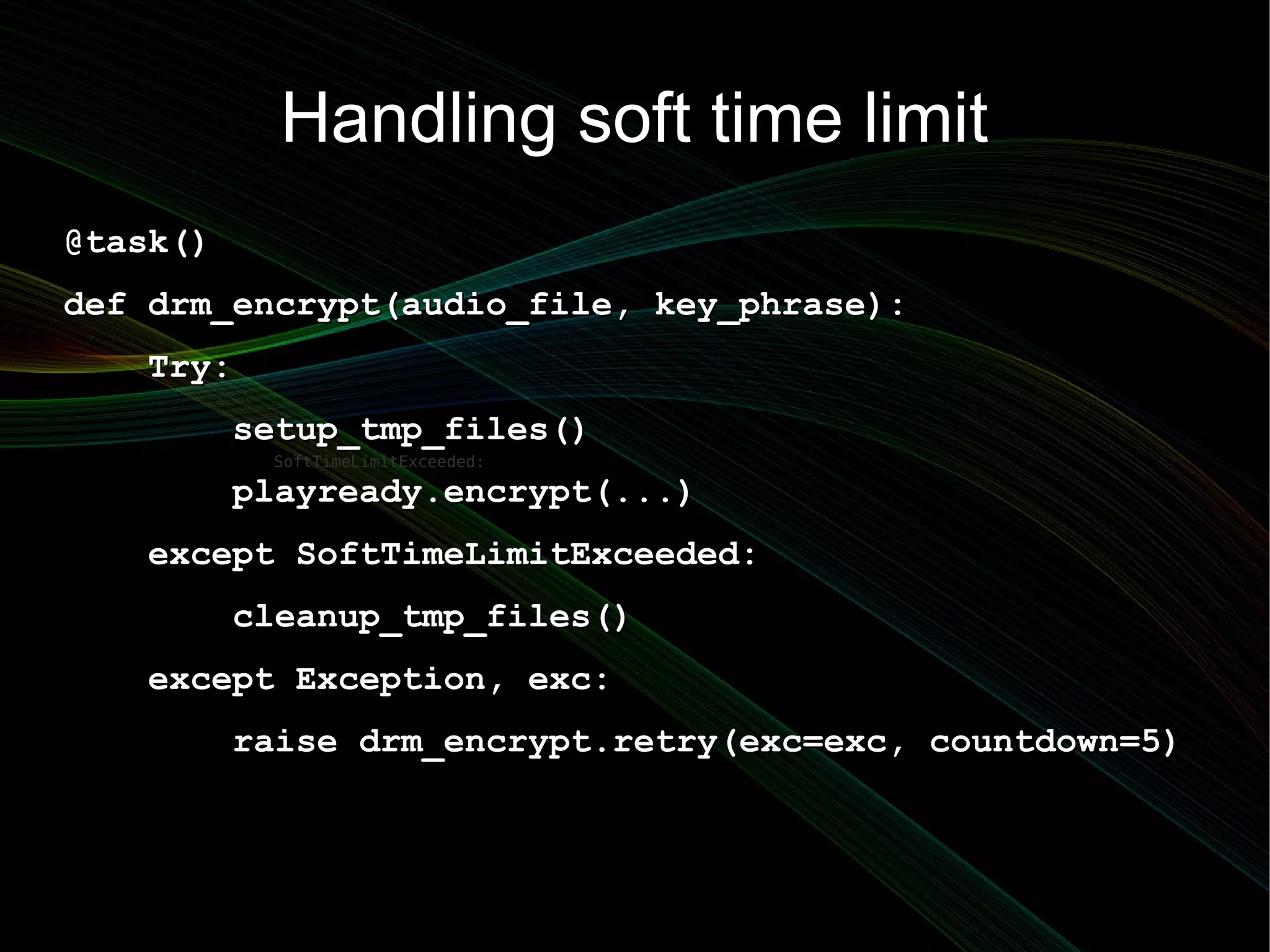

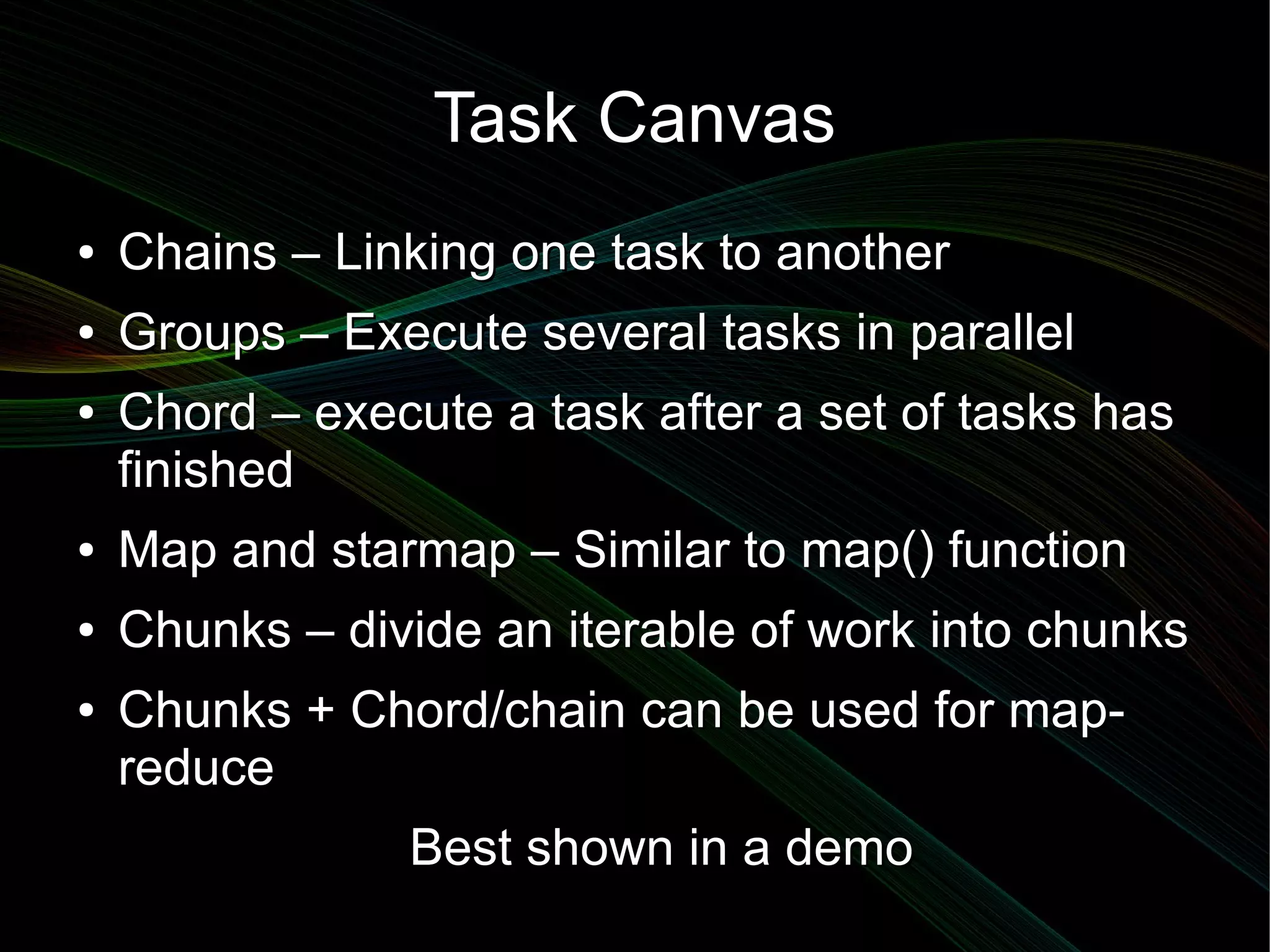

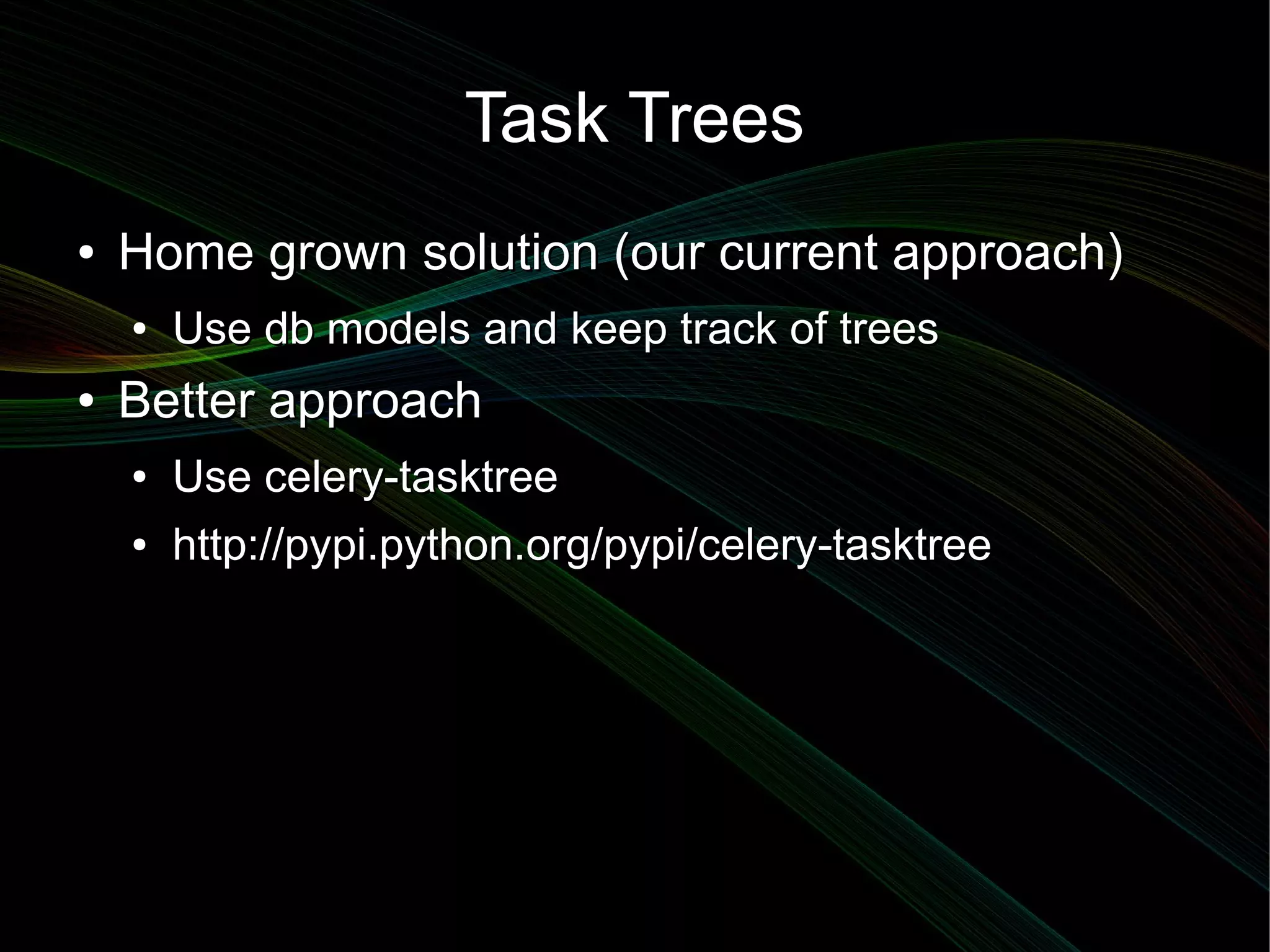

The document gives an overview of advanced task management with Celery, covering asynchronous task processing, task routing, retries, and task coordination. It walks through use cases such as managing long-running jobs and scheduling tasks, and introduces task trees and batch processing. It also covers handling worker failures, revoking and expiring tasks, and the monitoring tools available for Celery deployments.

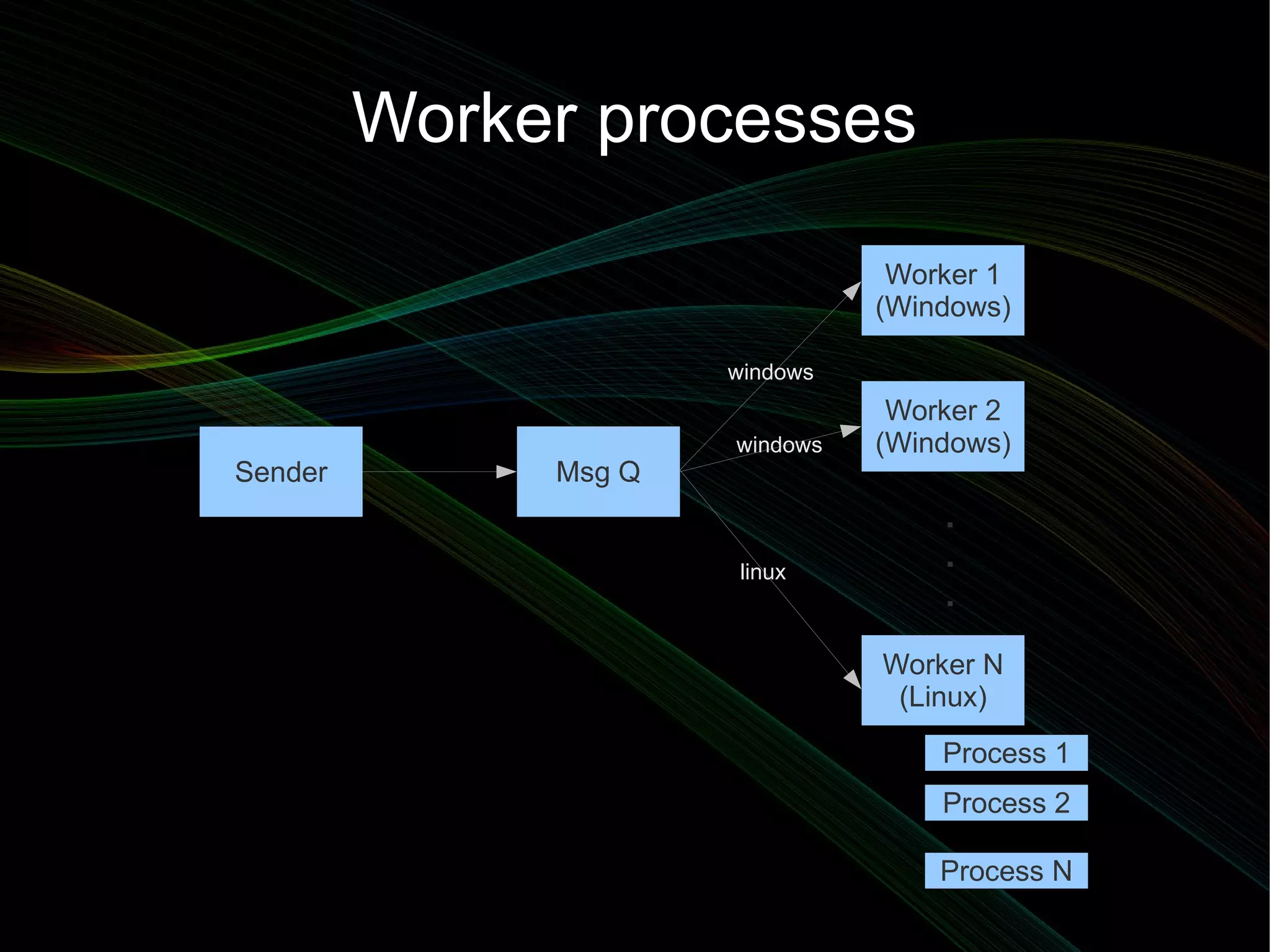

![Sample Code

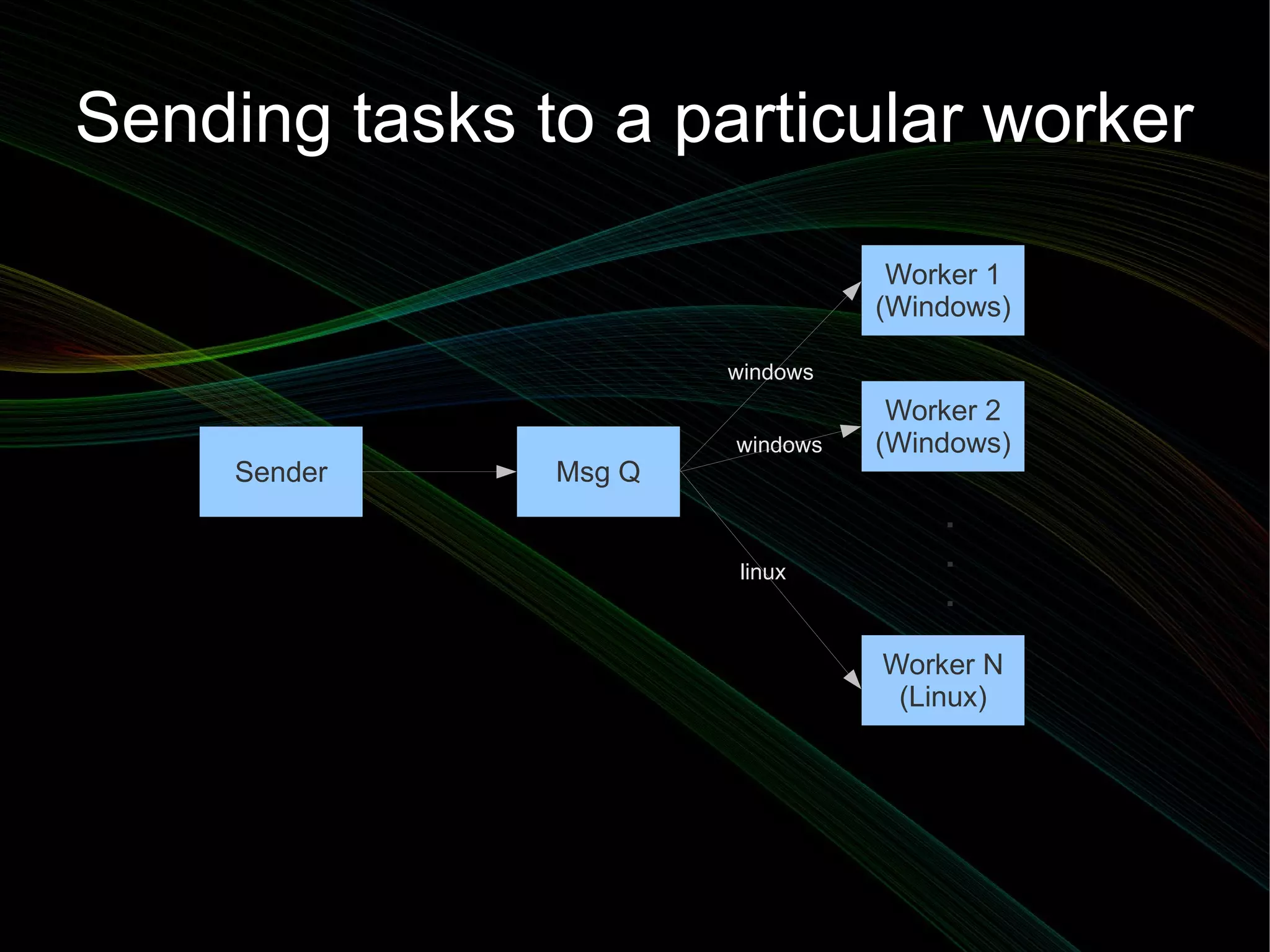

from celery.task import task

@task(queue = 'windows')

def drm_encrypt(audio_file, key_phrase):

...

r = drm_encrypt.apply_async( args = [afile, key],

queue = 'windows' )

#Start celery worker with queues options

$ celery worker -Q windows](https://image.slidesharecdn.com/celery-pycon-2012-121008010028-phpapp01/75/Advanced-task-management-with-Celery-10-2048.jpg)
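Besides setting `queue` on the decorator or on each `apply_async` call, Celery can route tasks from configuration. The fragment below is a minimal sketch of a `celeryconfig.py` module using the lowercase `task_routes` setting (Celery 4.0 and later). It assumes the task is defined in a module named `tasks`, so that its registered name is `tasks.drm_encrypt`; the slide does not say where the task lives.

```python
# celeryconfig.py: a minimal sketch, loaded with app.config_from_object('celeryconfig').
# Assumption: drm_encrypt is defined in tasks.py, so its task name is 'tasks.drm_encrypt'.
task_routes = {
    # Route every drm_encrypt call to the 'windows' queue, so only workers
    # started with `-Q windows` will consume it.
    'tasks.drm_encrypt': {'queue': 'windows'},
}
```

With this in place, a plain `drm_encrypt.delay(afile, key)` would reach the `windows` queue without passing `queue=` on each call.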

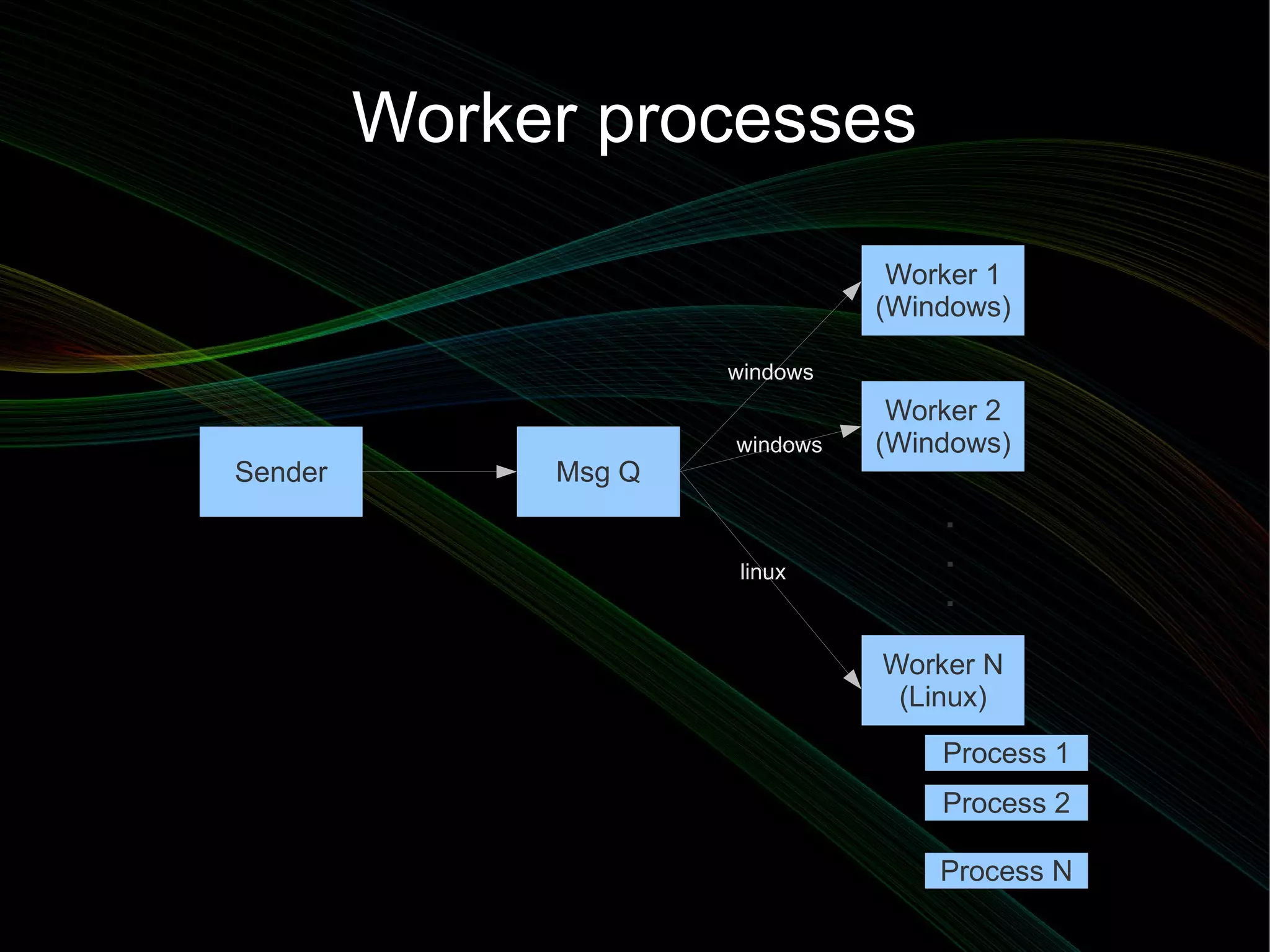

![Task trees](https://image.slidesharecdn.com/celery-pycon-2012-121008010028-phpapp01/75/Advanced-task-management-with-Celery-21-2048.jpg)

```text
[ task 1 ] --- spawns --- [ task 2 ] ---- spawns --> [ task 2_1 ]
    |                                                [ task 2_3 ]
    |
    +------ [ task 3 ] ---- spawns --> [ task 3_1 ]
    |                                  [ task 3_2 ]
    |
    +------ [ task 4 ] ---- links ---> [ task 5 ]
                                            |(spawns)
                                            |
                                            |
            [ task 8 ] <--- links <--- [ task 6 ]
                                            |(spawns)
                                       [ task 7 ]
```
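To revoke, monitor, or wait on a tree like this, you need every task in it, including the ones added through links. The sketch below models the diagram in plain Python with a hypothetical `TaskNode` type (not a Celery API). It reads the vertical line under task 1 as task 1 spawning tasks 2, 3 and 4, and treats a link as a callback that runs after its parent succeeds; `walk` then lists the whole tree depth first.

```python
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class TaskNode:
    """Hypothetical record of one task and the tasks it started."""
    name: str
    spawned: List["TaskNode"] = field(default_factory=list)  # subtasks started inside the task
    linked: List["TaskNode"] = field(default_factory=list)   # callbacks run after the task succeeds


def walk(node: TaskNode) -> Iterator[str]:
    """Yield every task name in the tree, depth first: node, spawned, then linked."""
    yield node.name
    for child in node.spawned + node.linked:
        yield from walk(child)


def build_example_tree() -> TaskNode:
    """Rebuild the task tree from the diagram above."""
    task6 = TaskNode("task 6", spawned=[TaskNode("task 7")], linked=[TaskNode("task 8")])
    task5 = TaskNode("task 5", spawned=[task6])
    task4 = TaskNode("task 4", linked=[task5])
    task3 = TaskNode("task 3", spawned=[TaskNode("task 3_1"), TaskNode("task 3_2")])
    task2 = TaskNode("task 2", spawned=[TaskNode("task 2_1"), TaskNode("task 2_3")])
    return TaskNode("task 1", spawned=[task2, task3, task4])


if __name__ == "__main__":
    # Everything that would have to finish (or be revoked) before task 1's tree is done.
    print(list(walk(build_example_tree())))
```

Tracking links separately from spawned subtasks matters because a linked task such as task 5 only exists once its parent has succeeded, so a snapshot taken early can miss it.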

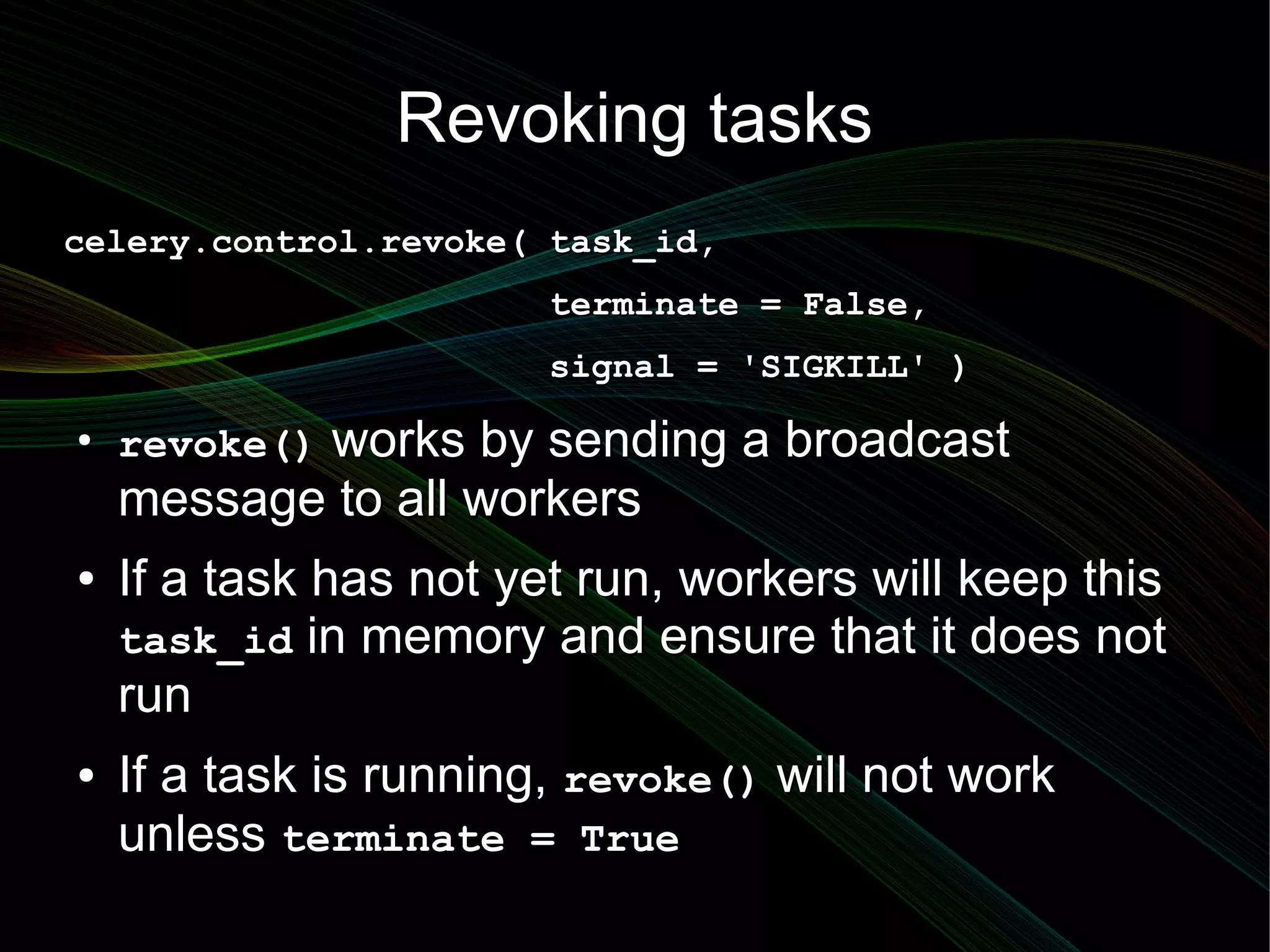

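![Celery Batches](https://image.slidesharecdn.com/celery-pycon-2012-121008010028-phpapp01/75/Advanced-task-management-with-Celery-24-2048.jpg)

```python
# Slide-era imports: celery.contrib.batches was removed in Celery 4.0; the
# third-party celery-batches package provides celery_batches.Batches instead.
from celery.contrib.batches import Batches
from celery.task import task

# Buffer calls and run the task once per 50 requests or every 10 seconds,
# whichever comes first.
@task(base=Batches, flush_every=50, flush_interval=10)
def collect_stats(requests):
    items = {}
    for request in requests:
        item_id = request.kwargs['item_id']
        # Fetch each object once per batch so repeated ids accumulate counts.
        # get_obj is the slide's own helper and is not defined here.
        if item_id not in items:
            items[item_id] = get_obj(item_id)
        items[item_id].count += 1
    # Sync to db

# Each call sends a message that the worker buffers until the next flush.
collect_stats.delay(item_id=45)
collect_stats.delay(item_id=57)
```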
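The buffering that `Batches` performs can be sketched in plain Python. The `BatchBuffer` class and `count_items` helper below are hypothetical, not part of Celery: calls are collected until `flush_every` of them have arrived or `flush_interval` seconds have passed, and the whole batch is then handed to one function. That is what lets `collect_stats` replace one database write per call with one write per batch. Unlike a real worker, which also flushes on a timer, this sketch only checks the interval when a new item arrives.

```python
import time
from collections import Counter
from typing import Callable, List


class BatchBuffer:
    """Hypothetical sketch of flush_every / flush_interval buffering (not Celery's code)."""

    def __init__(self, handler: Callable[[List[dict]], None], flush_every: int = 50,
                 flush_interval: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.handler = handler
        self.flush_every = flush_every
        self.flush_interval = flush_interval
        self.clock = clock  # injectable so tests can control time
        self.buffer: List[dict] = []
        self.last_flush = clock()

    def add(self, **kwargs) -> None:
        # Buffer the call's keyword arguments, like request.kwargs in Batches.
        self.buffer.append(kwargs)
        full = len(self.buffer) >= self.flush_every
        stale = self.clock() - self.last_flush >= self.flush_interval
        if full or stale:
            self.flush()

    def flush(self) -> None:
        # Hand the whole batch to the handler in a single call, then reset.
        if self.buffer:
            batch, self.buffer = self.buffer, []
            self.handler(batch)
        self.last_flush = self.clock()


def count_items(batch: List[dict]) -> Counter:
    """Aggregate one batch into per-item counts (stand-in for the db sync)."""
    return Counter(kw["item_id"] for kw in batch)


if __name__ == "__main__":
    buf = BatchBuffer(lambda batch: print(count_items(batch)), flush_every=2)
    buf.add(item_id=45)
    buf.add(item_id=57)  # the second item fills the buffer and triggers a flush
```

When using the real `Batches` base class, Celery's documentation also notes that the worker's prefetch multiplier must be zero, or large enough that the worker can hold `flush_every` messages at once.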