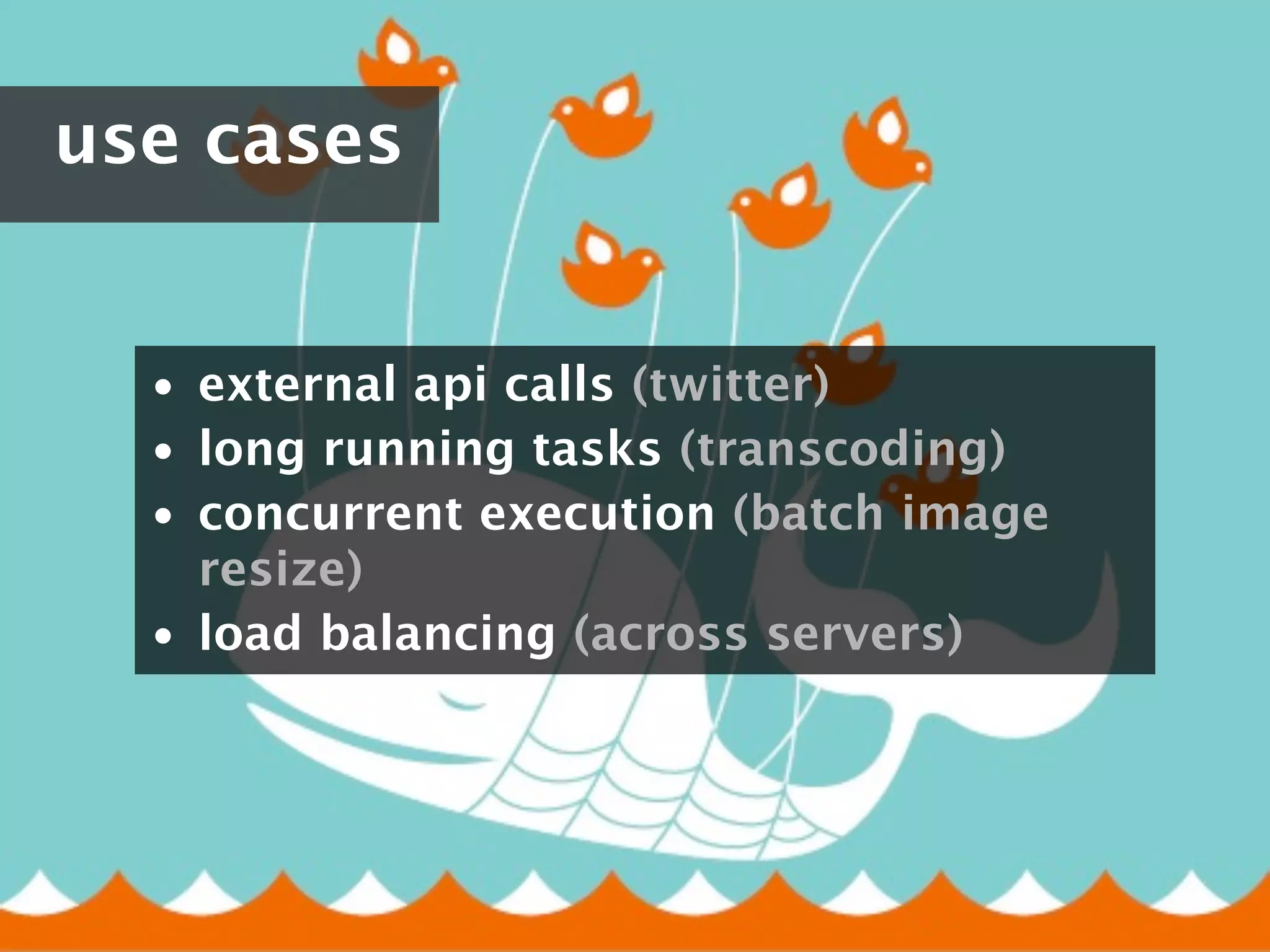

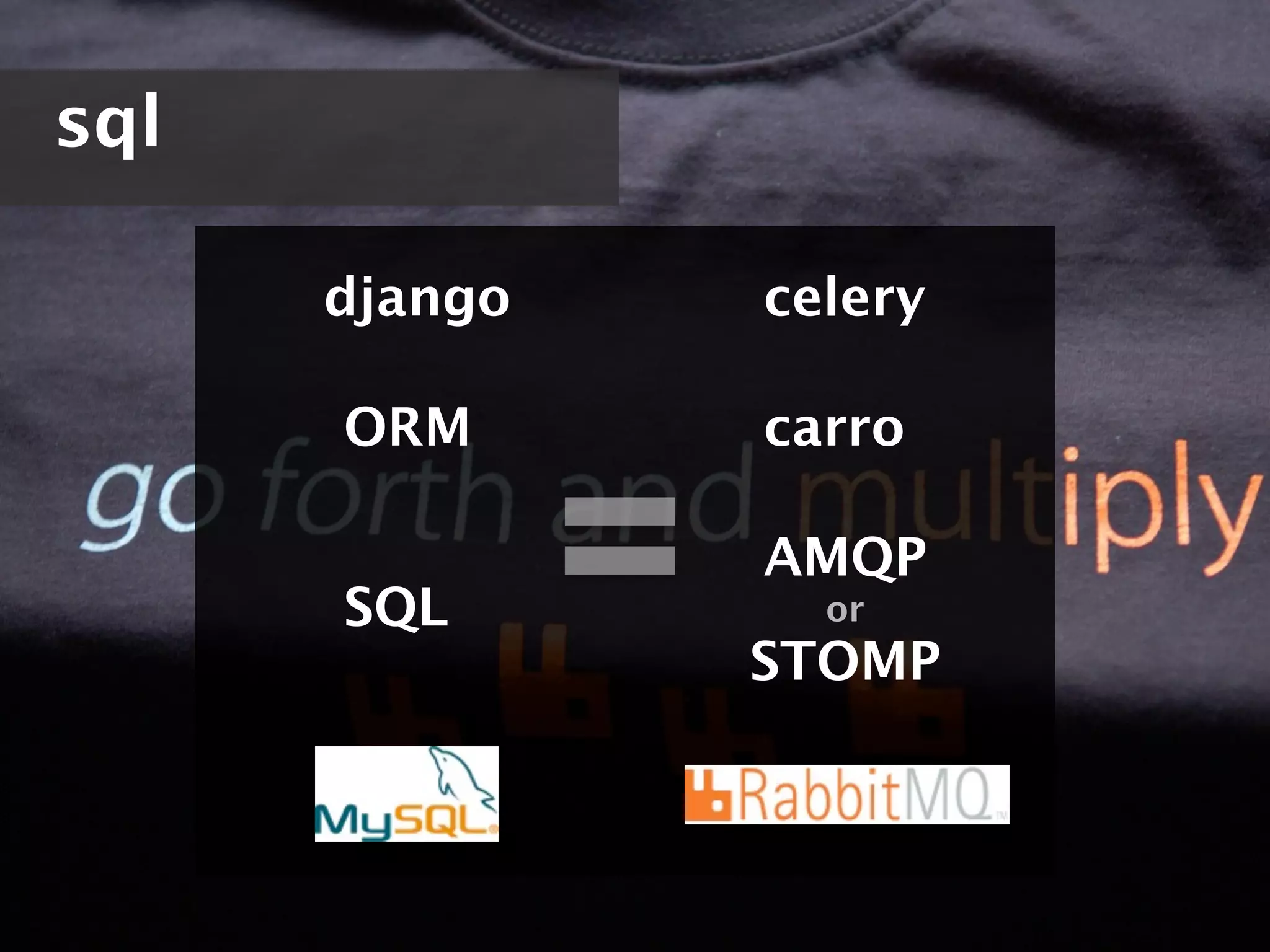

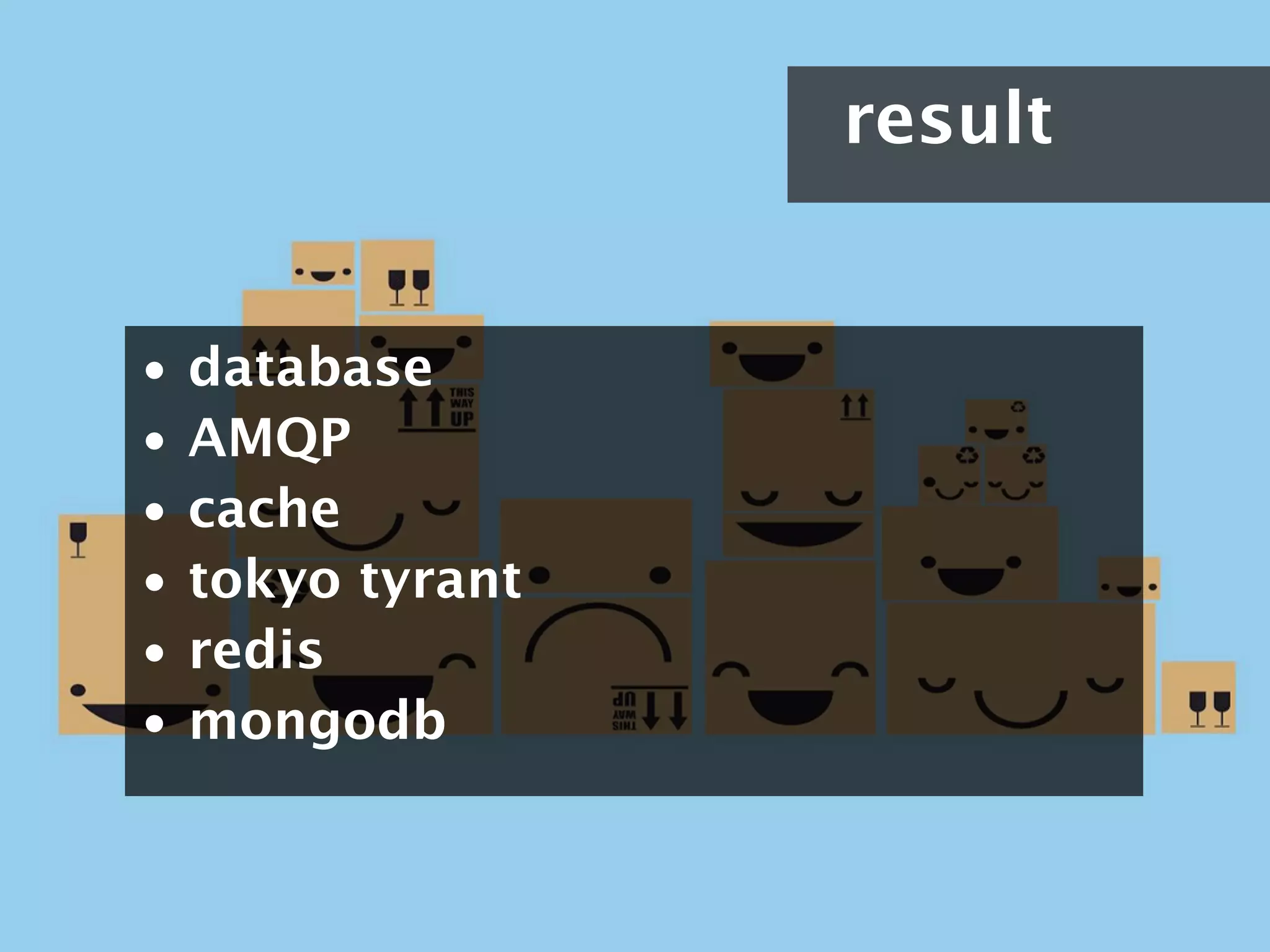

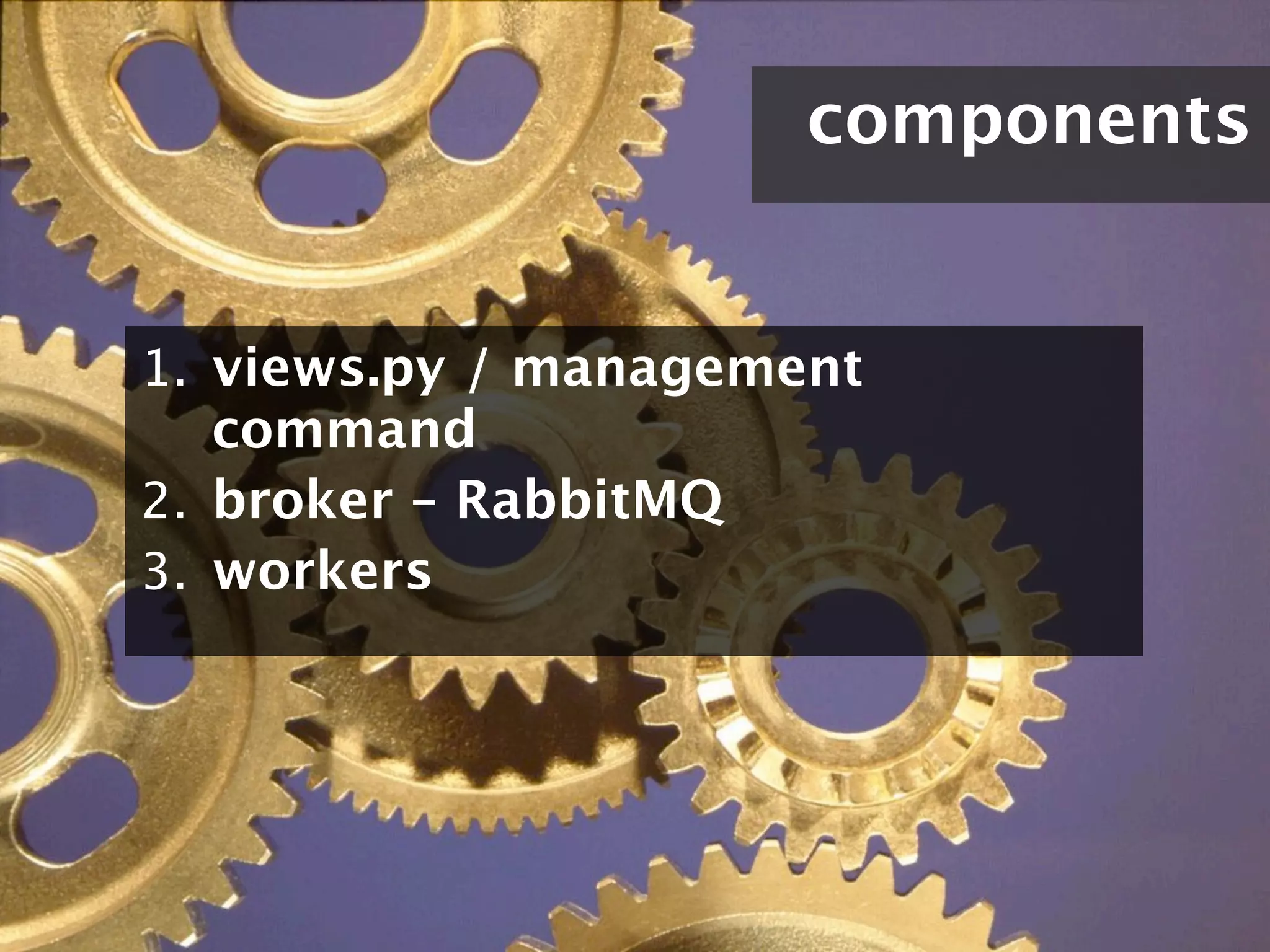

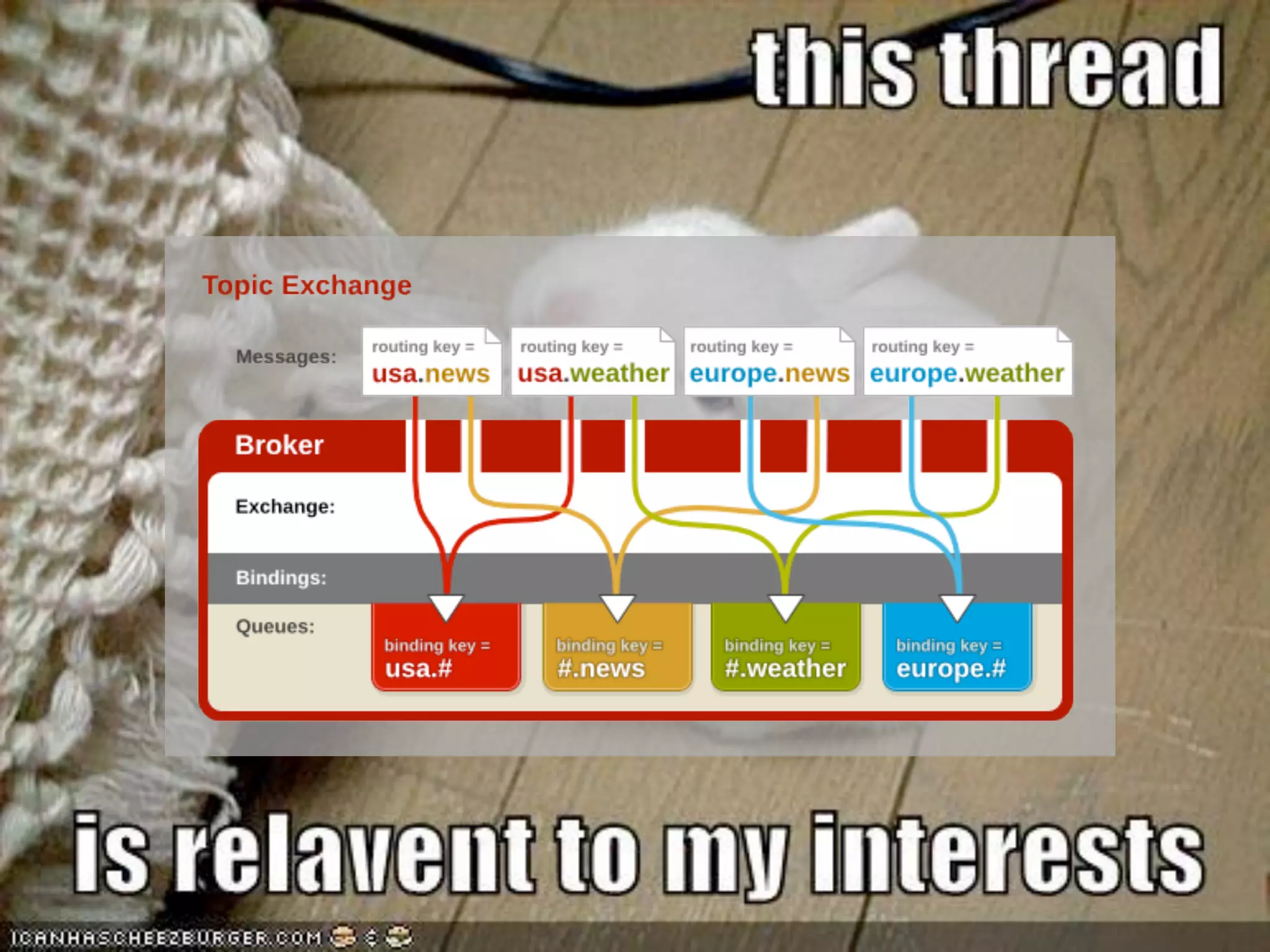

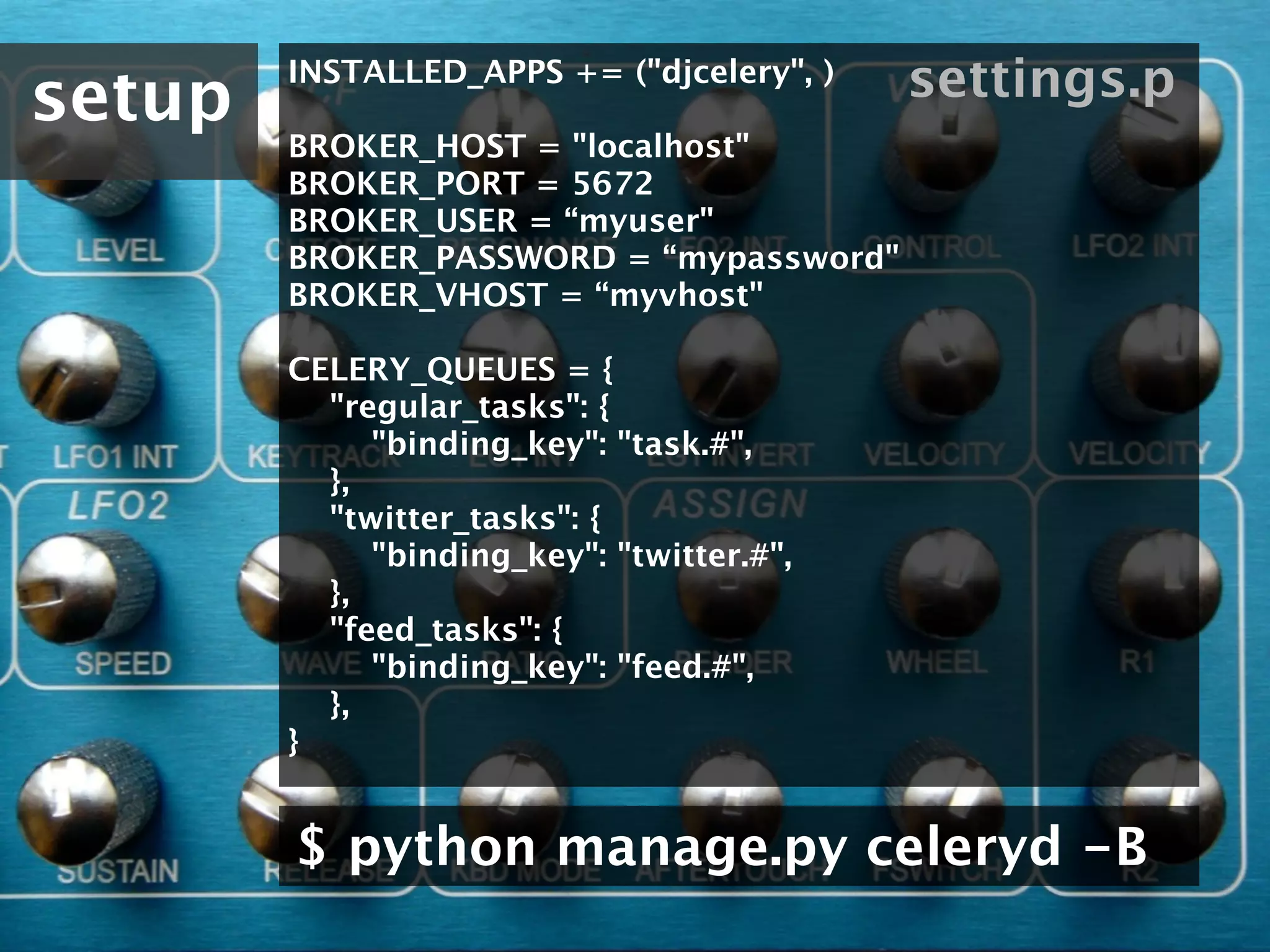

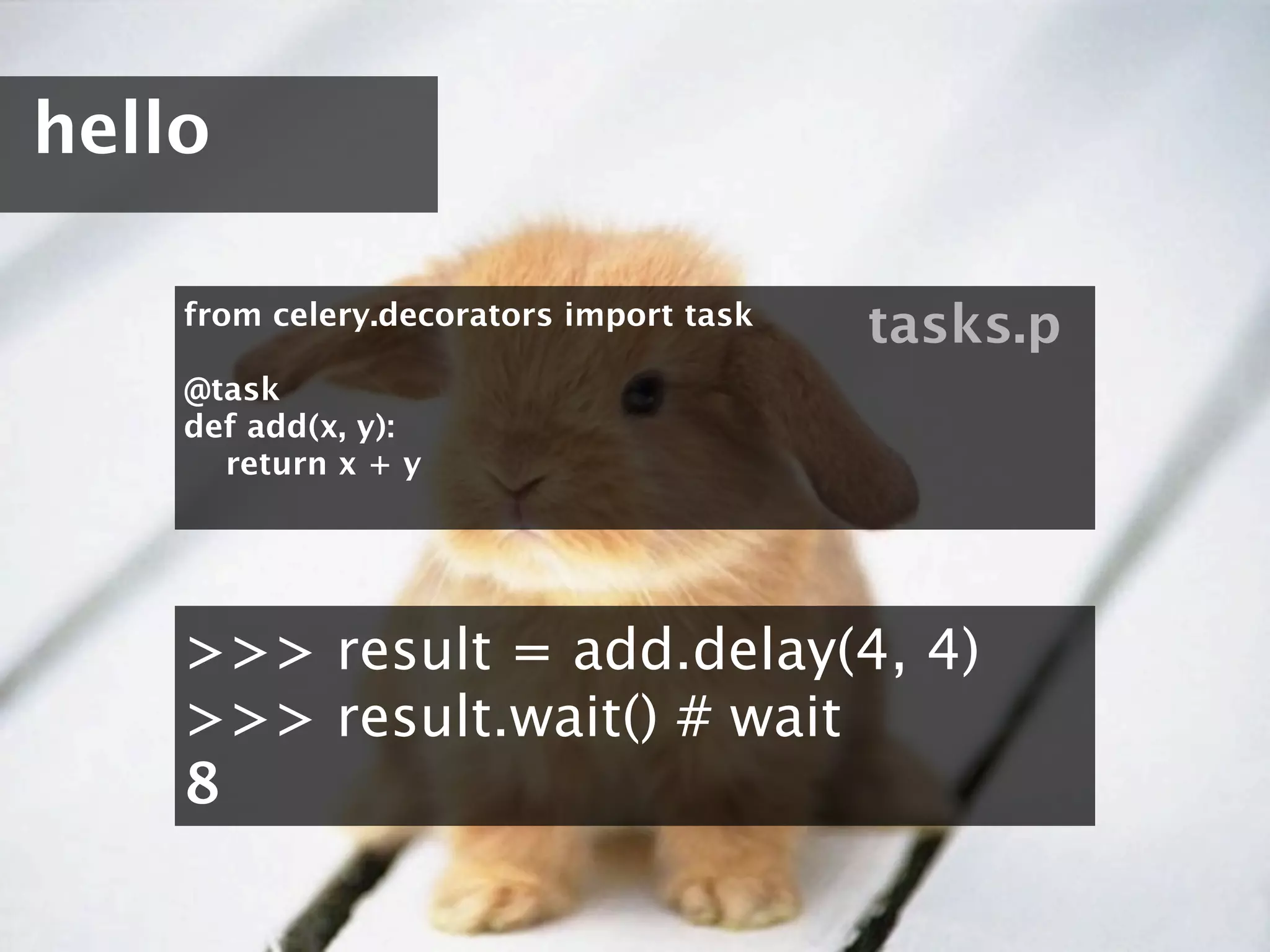

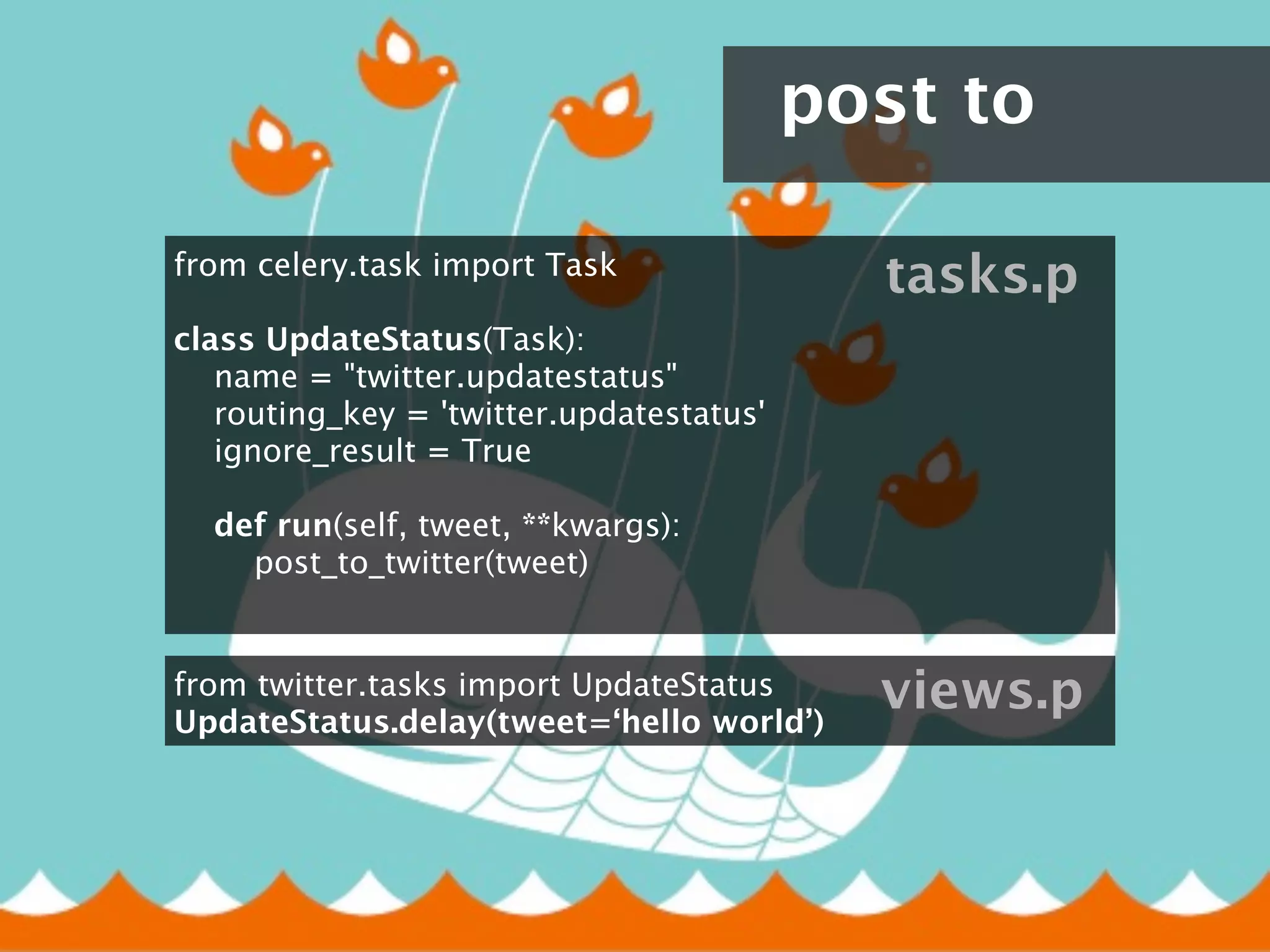

Celery is an open-source asynchronous task queue (job queue) based on distributed message passing. It lets tasks run concurrently in the background, across multiple servers. Common use cases include running long operations such as API calls or image processing without blocking the main process, balancing load across servers, and executing batch jobs concurrently. Celery uses a message broker such as RabbitMQ to queue and schedule tasks asynchronously. Tasks are defined as Python functions and executed by worker processes. The workflow has three steps: define the tasks, add them to the queue from views or management commands, and let the workers process them.
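The define / enqueue / process workflow can be pictured with a small standard-library stand-in. This is a sketch of the pattern only, not Celery's API: an in-process `queue.Queue` plays the role of the broker, a thread plays the worker, and the names `task_queue`, `TASKS`, `task`, `resize_image`, `worker`, and `run_demo` are all hypothetical.

```python
import queue
import threading

task_queue = queue.Queue()   # stands in for the message broker (e.g. RabbitMQ)
TASKS = {}                   # registry: task name -> function
results = []                 # filled by the worker, for demonstration only


def task(func):
    """Register a function as a task and attach a delay() method to it."""
    TASKS[func.__name__] = func

    def delay(*args, **kwargs):
        # Enqueue a message describing the call instead of running it now.
        task_queue.put((func.__name__, args, kwargs))

    func.delay = delay
    return func


@task
def resize_image(name, width):
    # Hypothetical long-running job; here it only builds a string.
    return f"{name} resized to {width}px"


def worker():
    """Pull messages off the queue and execute the matching task."""
    while True:
        message = task_queue.get()
        if message is None:          # sentinel: shut the worker down
            break
        name, args, kwargs = message
        results.append(TASKS[name](*args, **kwargs))


def run_demo():
    results.clear()
    thread = threading.Thread(target=worker)
    thread.start()
    resize_image.delay("cat.png", width=200)   # returns immediately
    resize_image.delay("dog.png", width=100)
    task_queue.put(None)                        # ask the worker to stop
    thread.join()
    return list(results)
```

In a real deployment the queue lives in a separate broker process and the workers run on other machines, so the caller and the worker share nothing but the message.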

![retry / rate](https://image.slidesharecdn.com/djangocelery1-100727052925-phpapp02/75/Django-Celery-19-2048.jpg)

    # tasks.p
    from celery.task import Task

    class UpdateStatus(Task):
        name = "twitter.updatestatus"
        routing_key = 'twitter.updatestatus'
        ignore_result = True           # do not store the return value
        default_retry_delay = 5 * 60   # seconds between retries (5 minutes)
        max_retries = 12               # 12 retries x 5 minutes = 1 hour of retrying
        rate_limit = '10/s'            # at most 10 executions per second

        def run(self, tweet, **kwargs):
            try:
                post_to_twitter(tweet)
            except Exception as exc:
                # If Twitter crashes, retry
                self.retry([tweet], kwargs, exc=exc)

    # views.p
    from twitter.tasks import UpdateStatus

    UpdateStatus.delay(tweet='hello world')
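The retry settings on the slide (`default_retry_delay = 5 * 60`, `max_retries = 12`) can be traced with a plain-Python sketch. It only illustrates the bounded-retry idea under simplifying assumptions: Celery reschedules the task through the broker rather than sleeping inside the worker, and `run_with_retries`, `flaky_post`, and `demo` are hypothetical names, not part of Celery.

```python
import time


def run_with_retries(func, *args, max_retries=12, retry_delay=5 * 60,
                     sleep=time.sleep):
    """Call func(*args); on failure wait retry_delay seconds and try again.

    Re-raises the last exception once max_retries retries have been used.
    """
    attempt = 0
    while True:
        try:
            return func(*args)
        except Exception:
            if attempt >= max_retries:
                raise
            attempt += 1
            sleep(retry_delay)


def demo():
    calls = []
    waits = []

    def flaky_post(tweet):
        # Fails on the first two calls, succeeds on the third.
        calls.append(tweet)
        if len(calls) < 3:
            raise ConnectionError("service unavailable")
        return "posted: " + tweet

    # Record the requested delays instead of actually sleeping.
    result = run_with_retries(flaky_post, "hello world", sleep=waits.append)
    return result, len(calls), waits
```

Passing `sleep` as a parameter keeps the sketch testable without waiting five minutes per retry.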