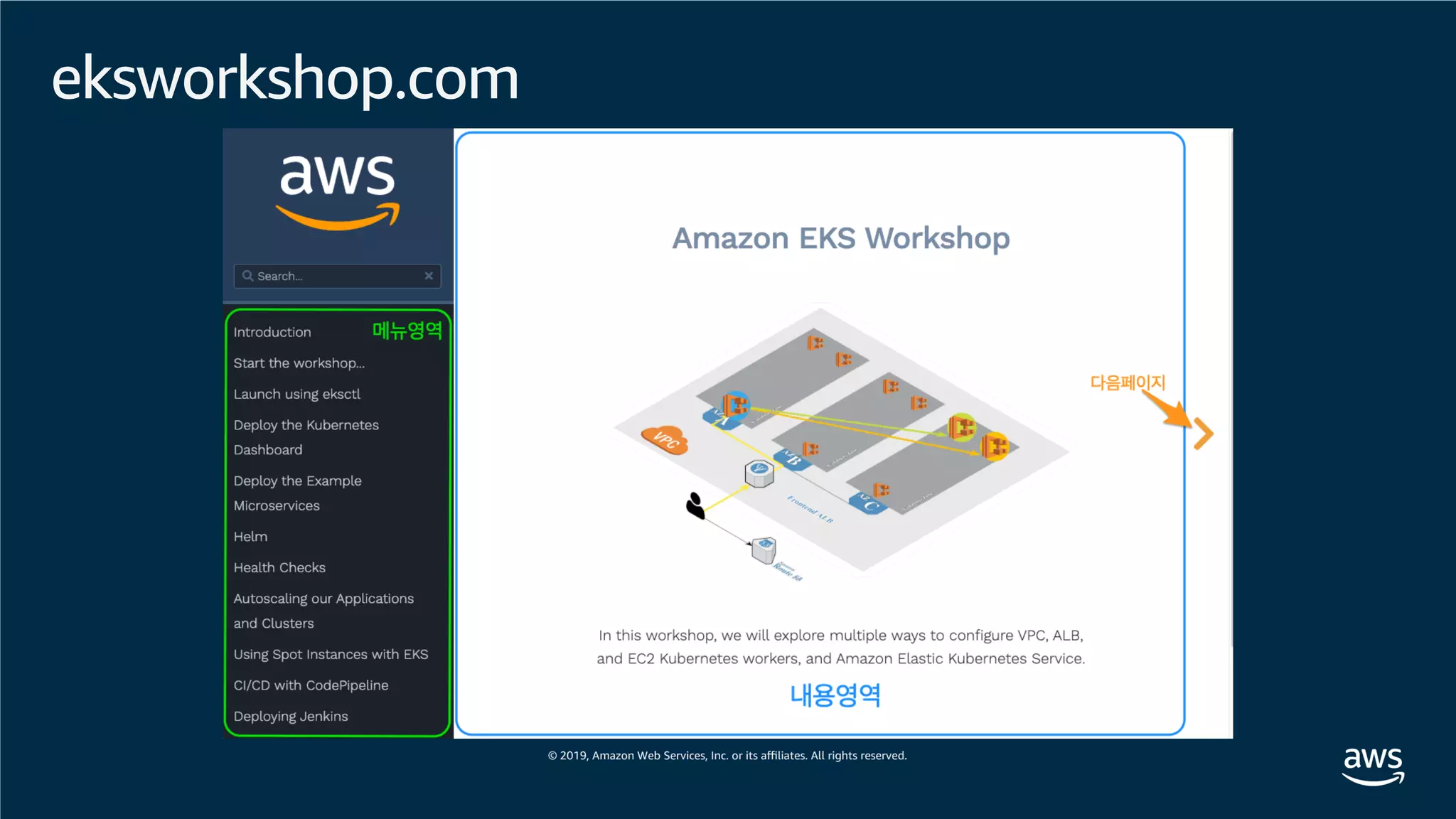

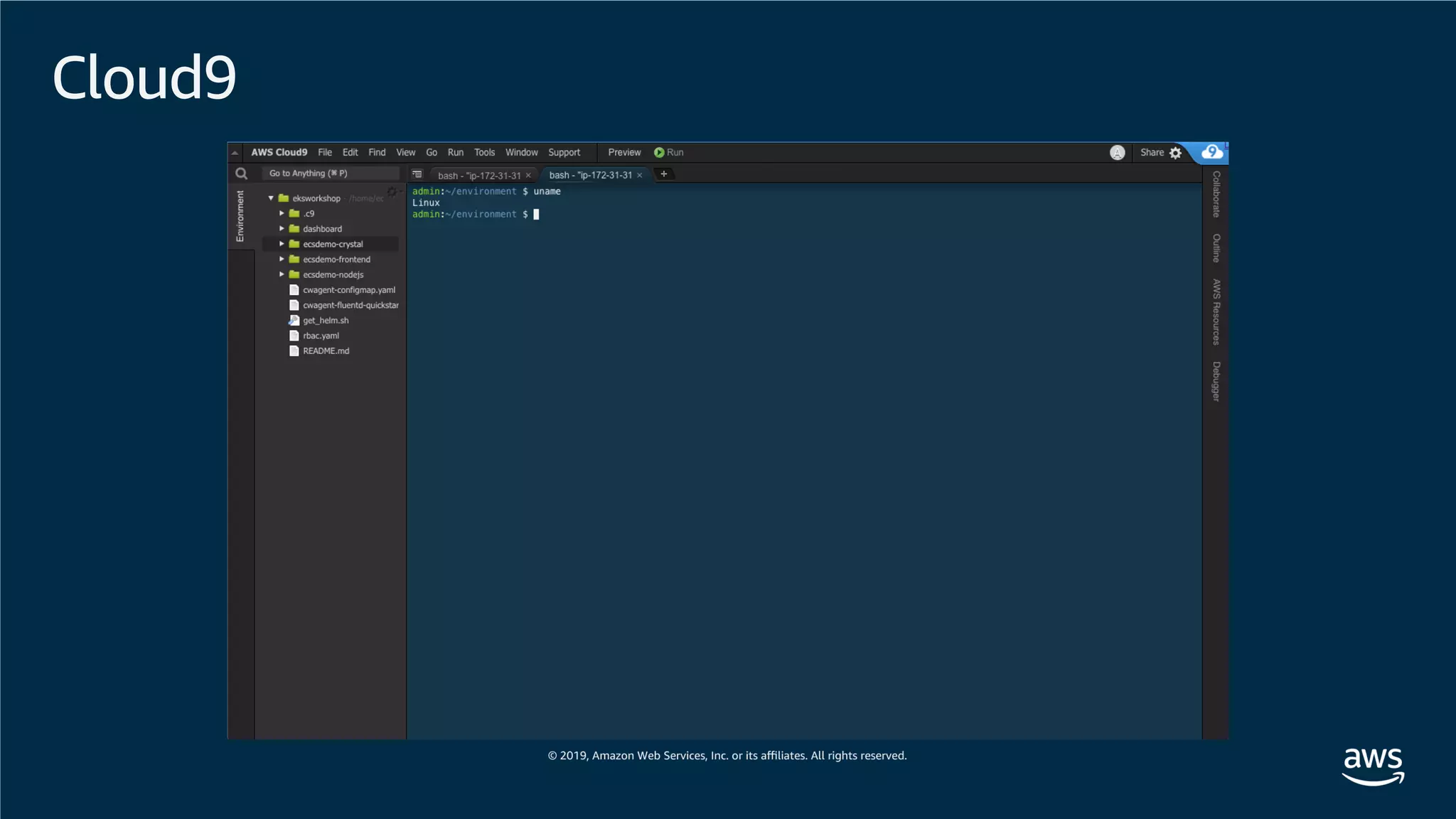

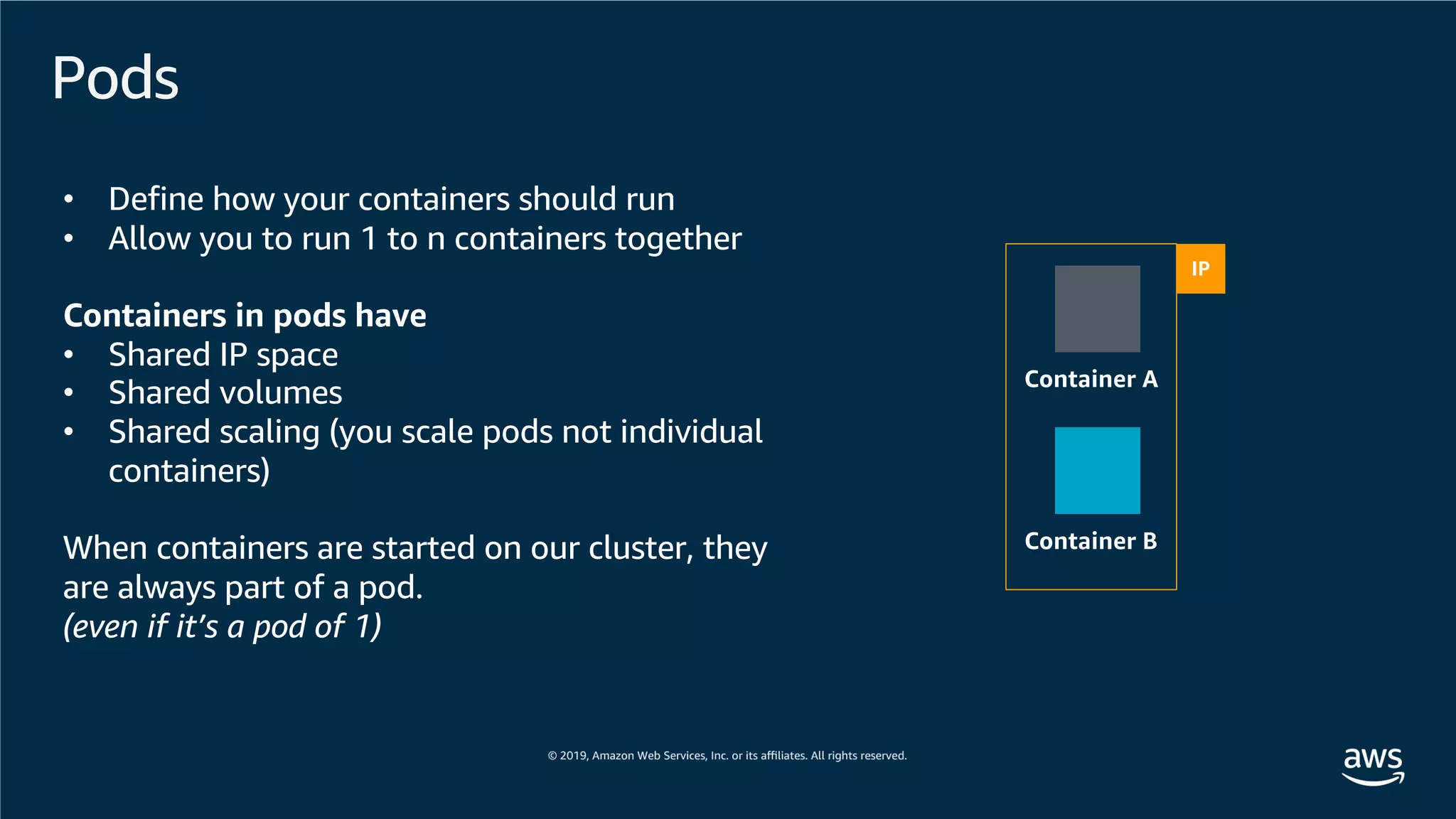

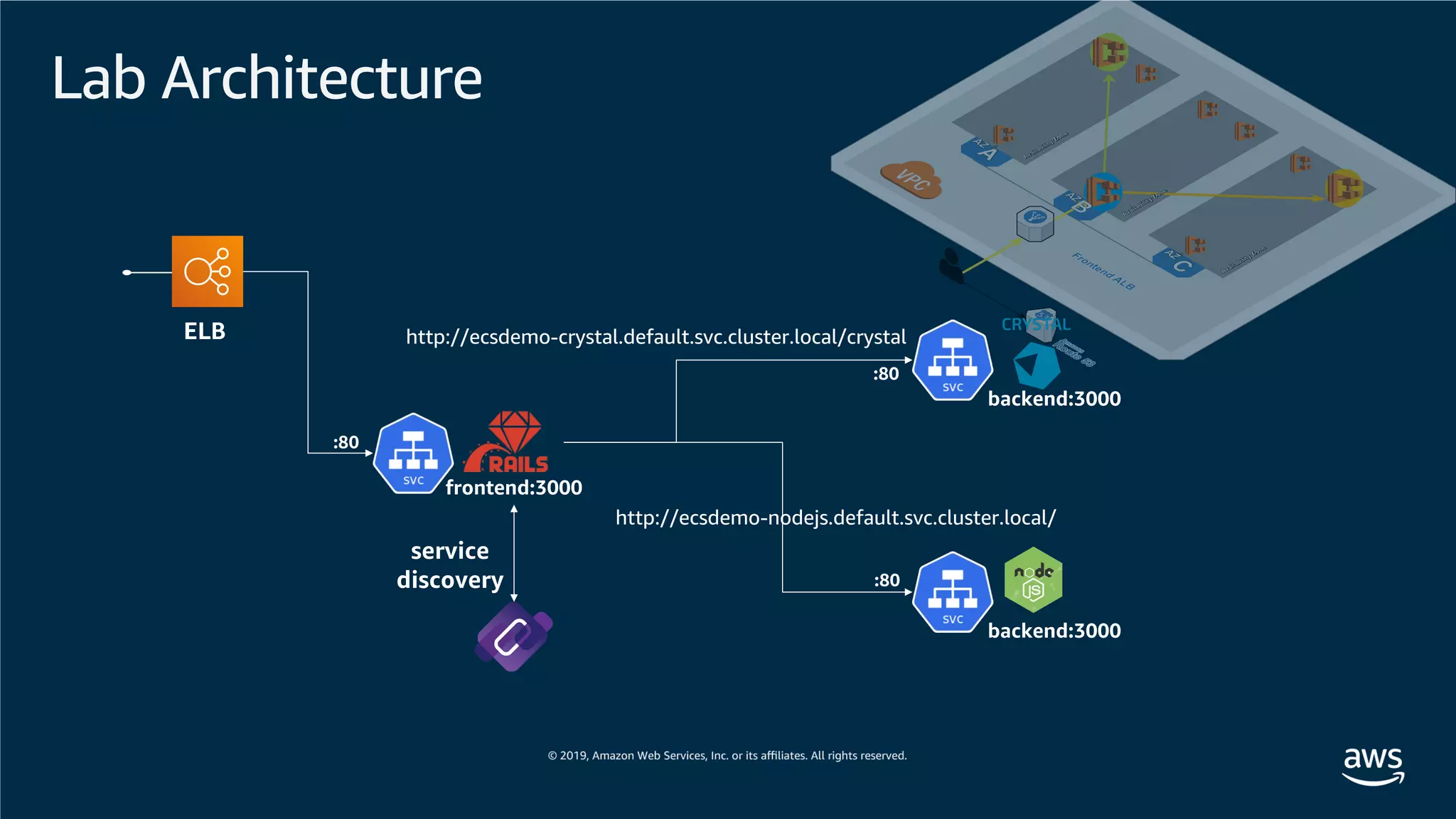

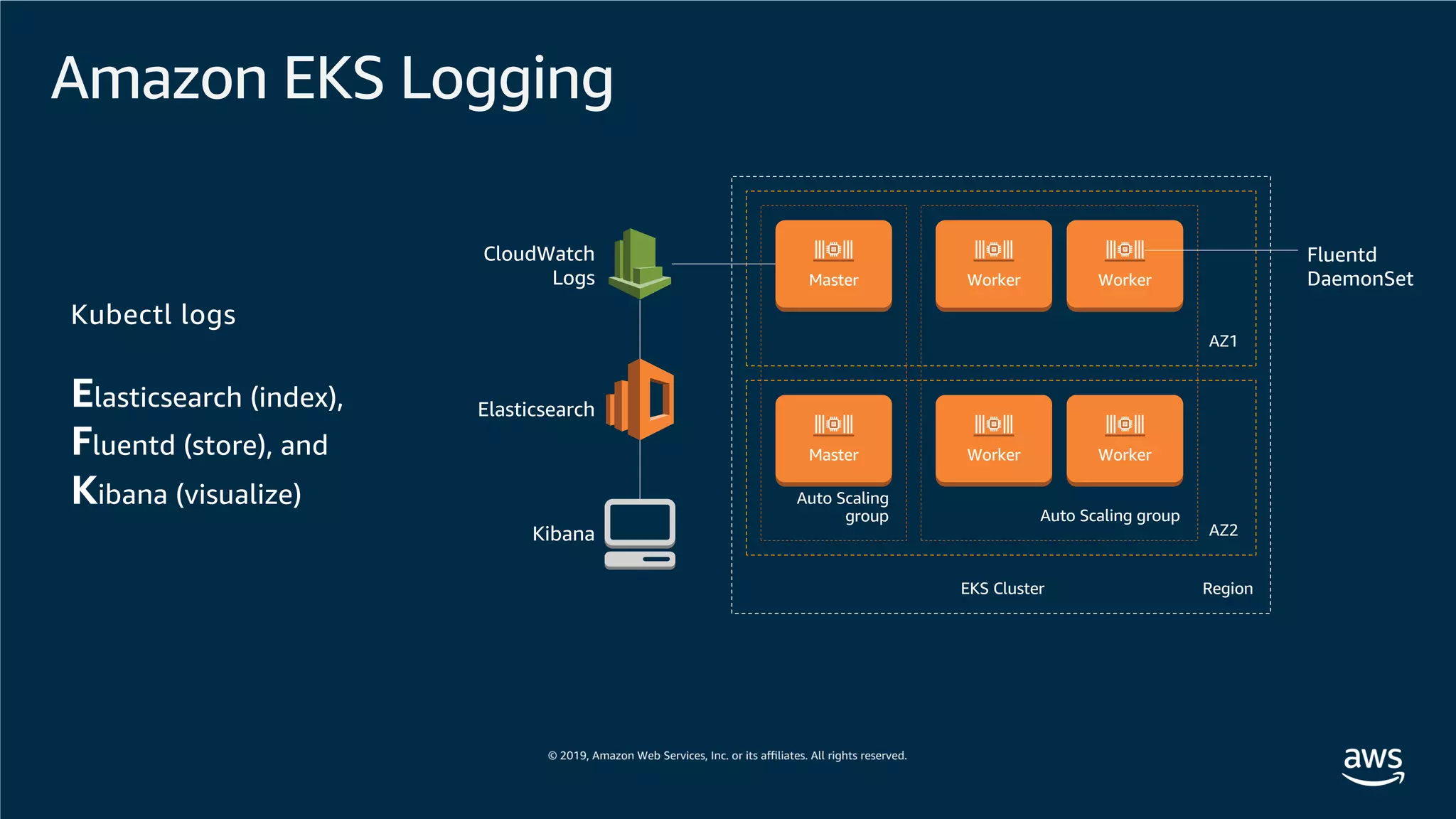

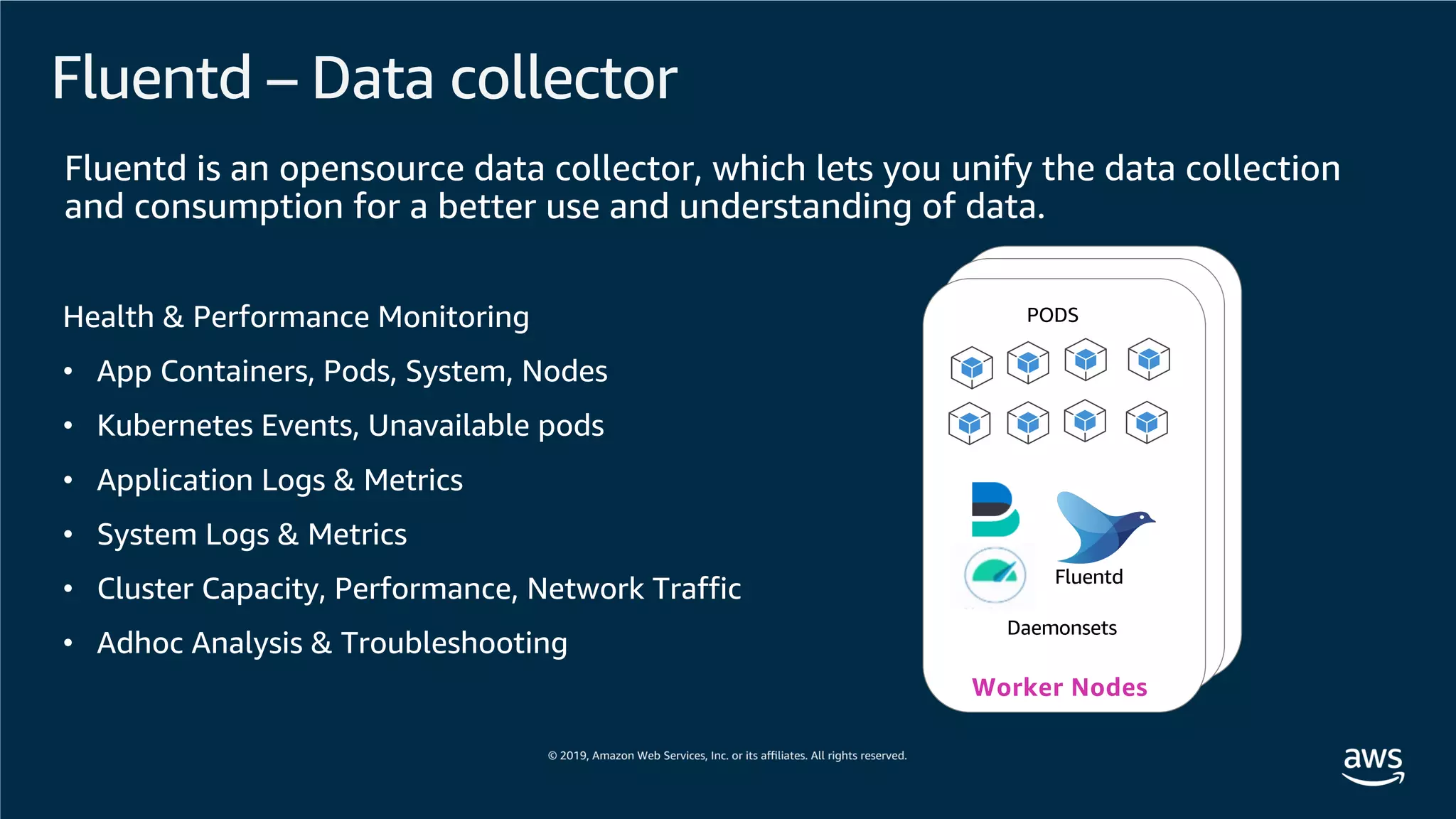

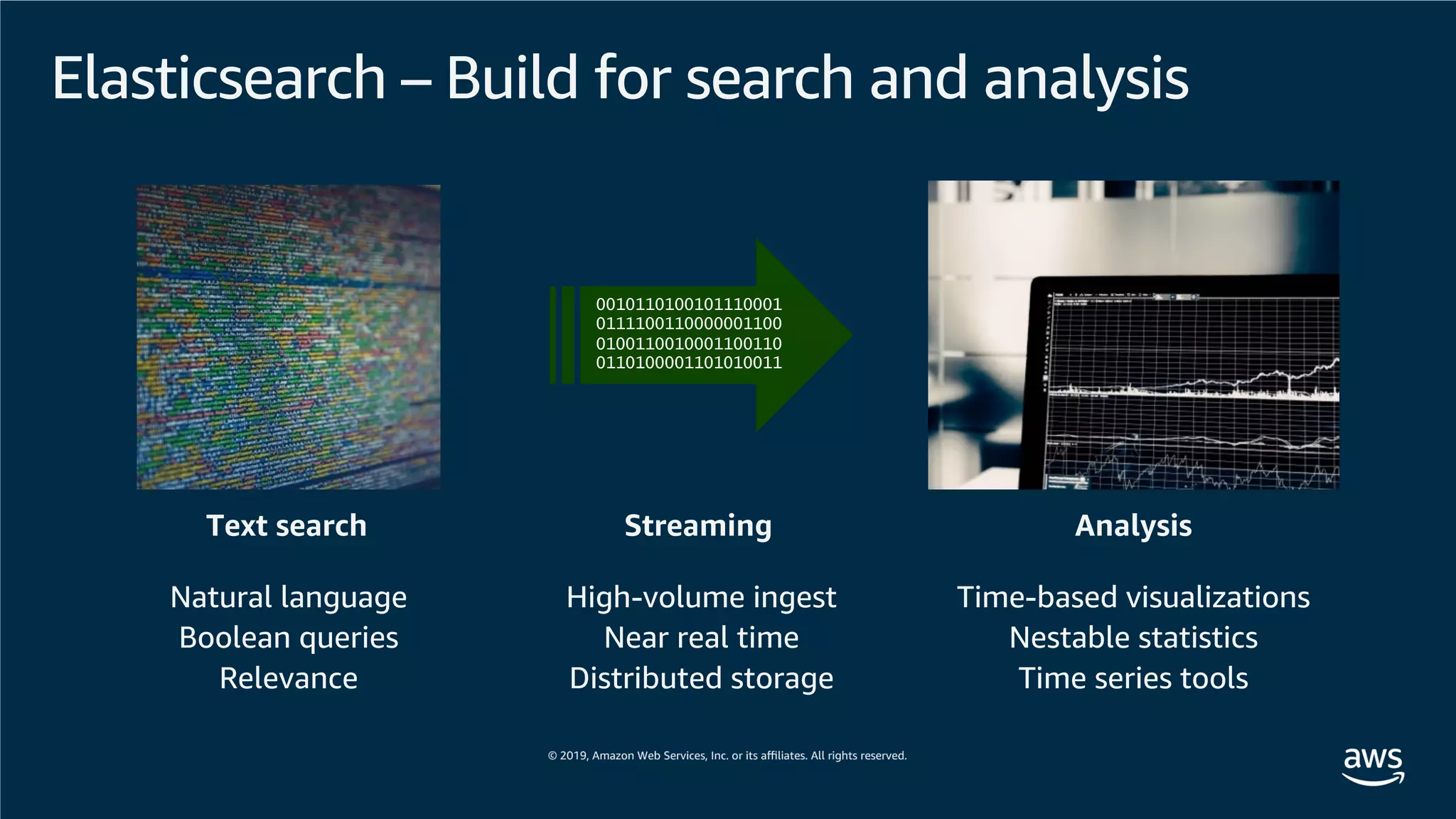

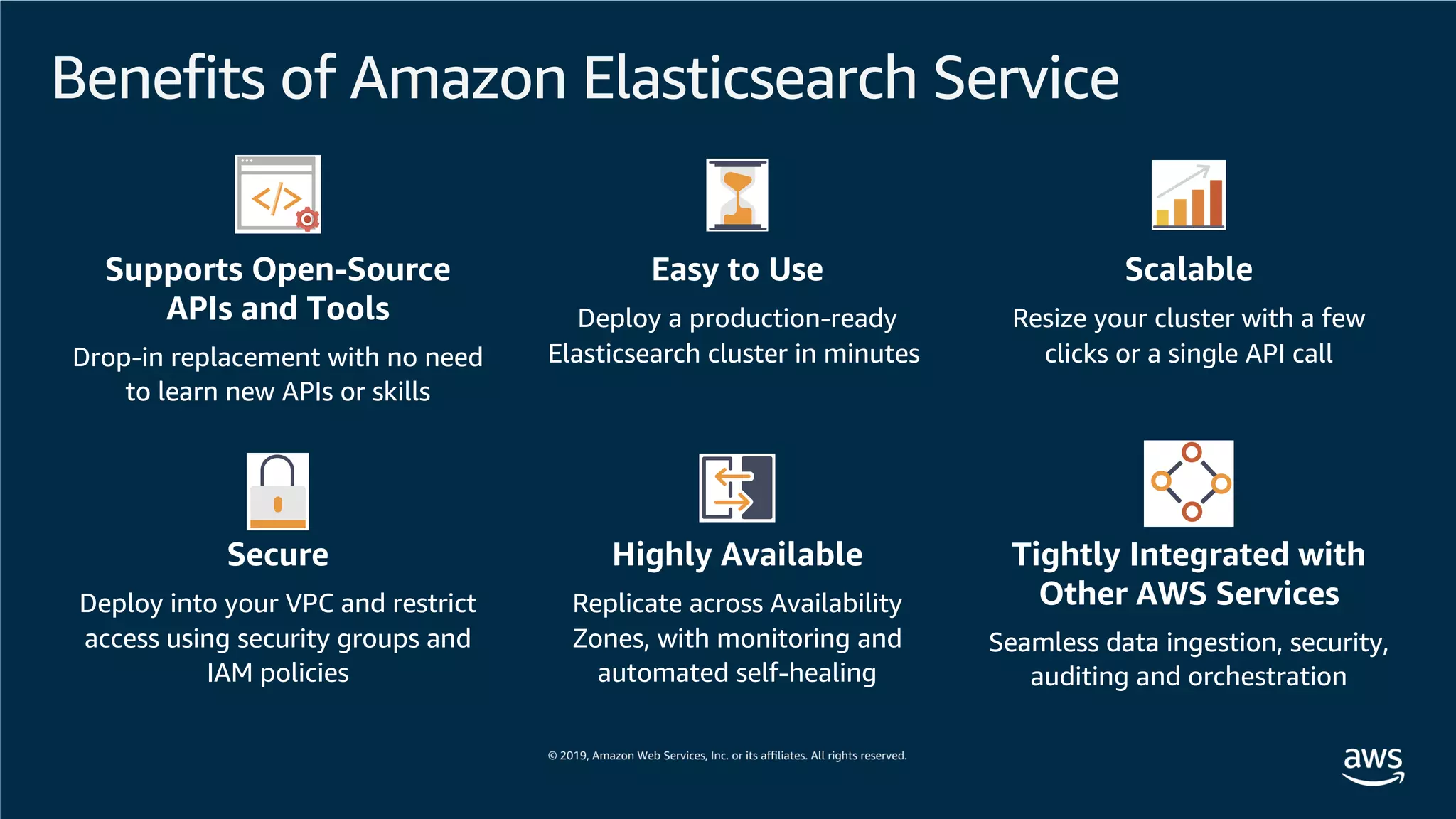

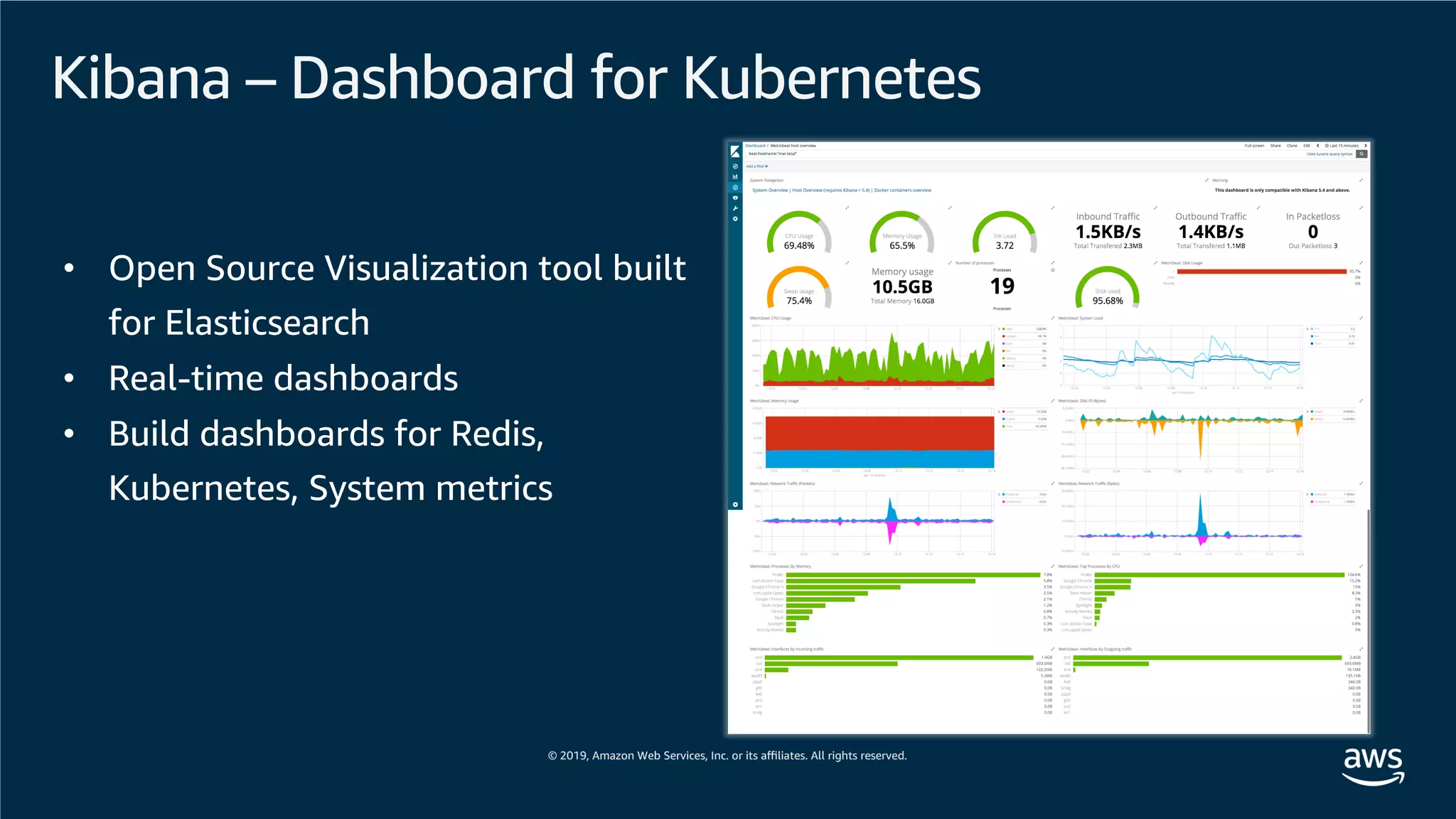

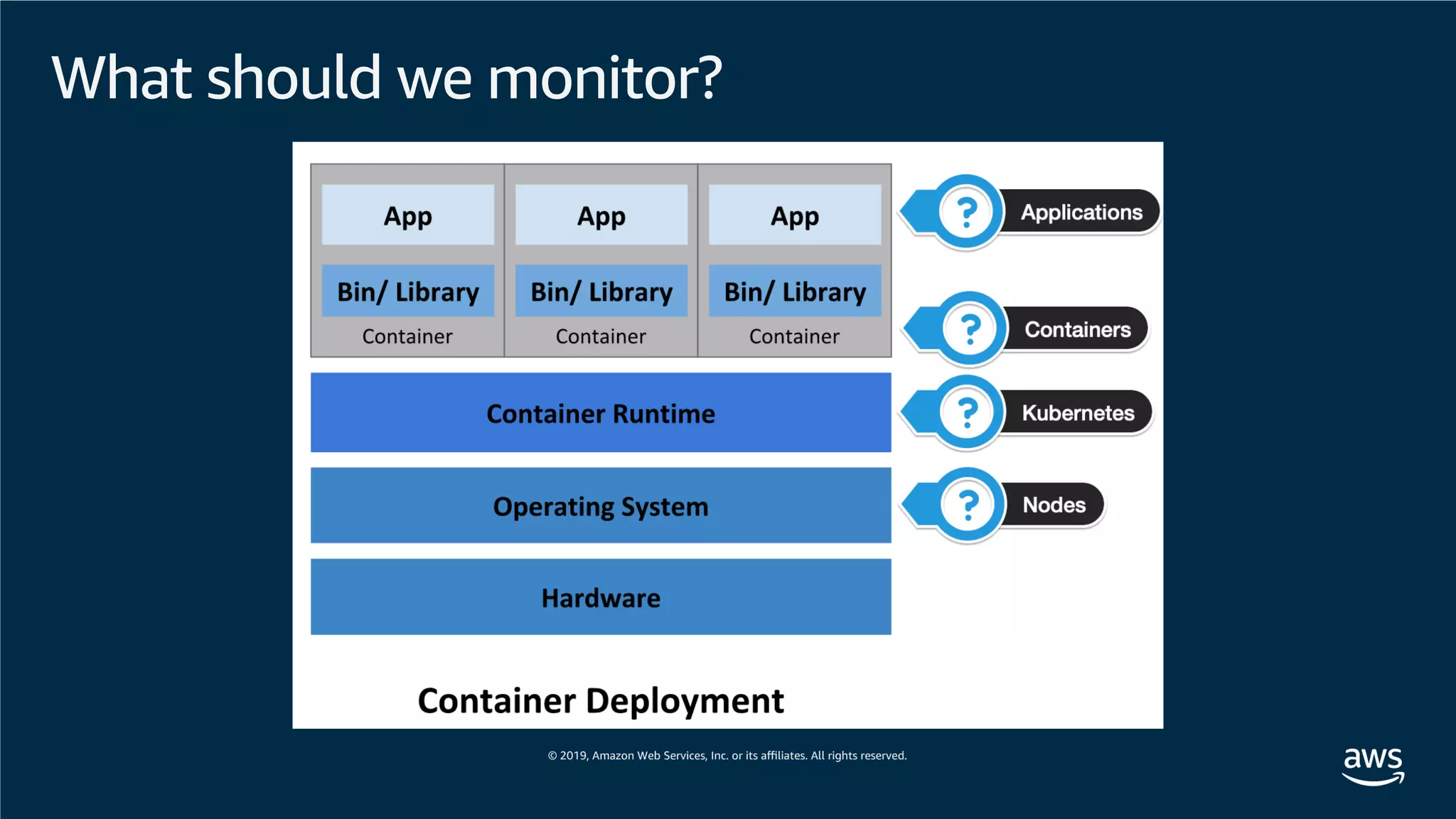

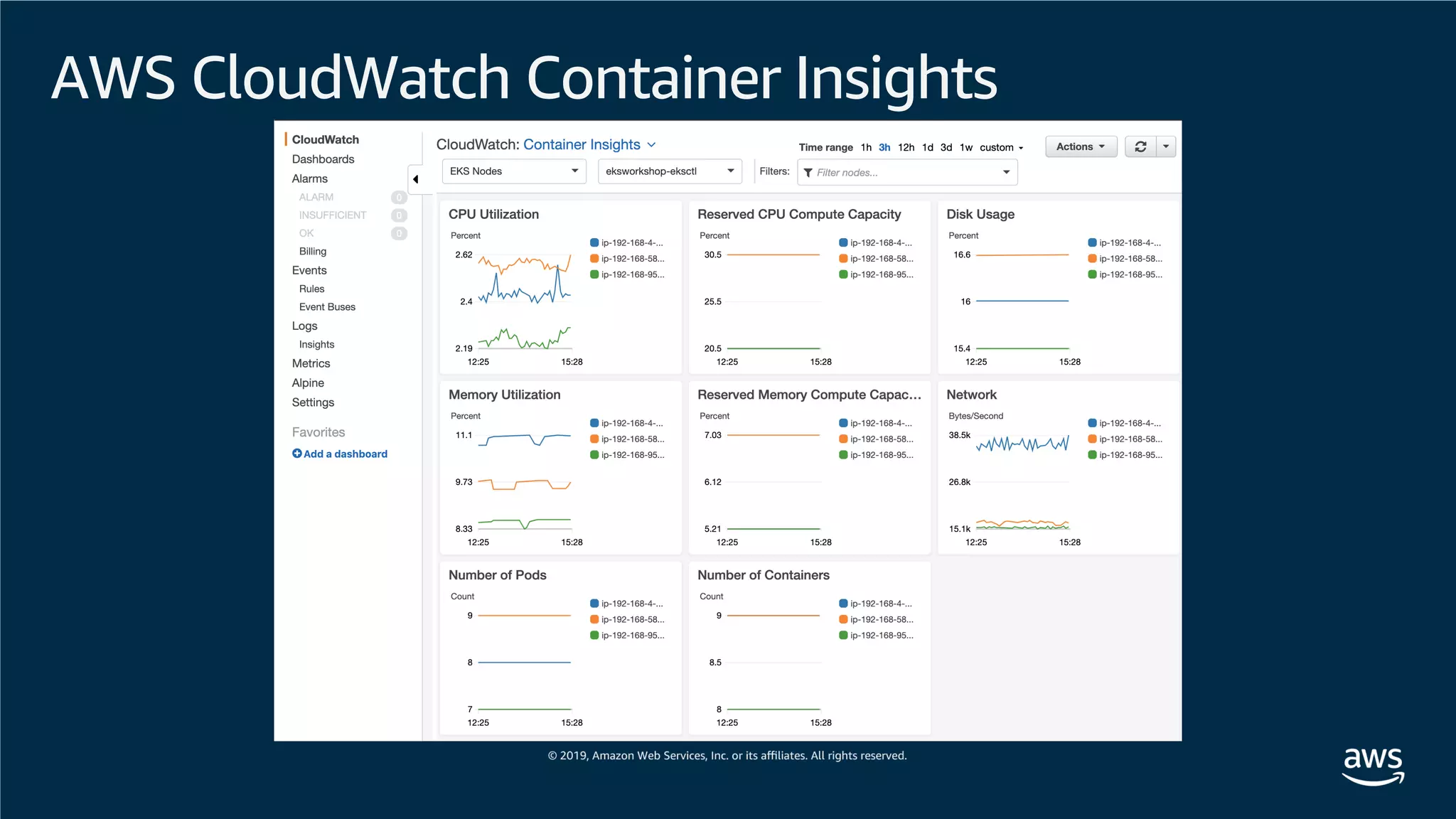

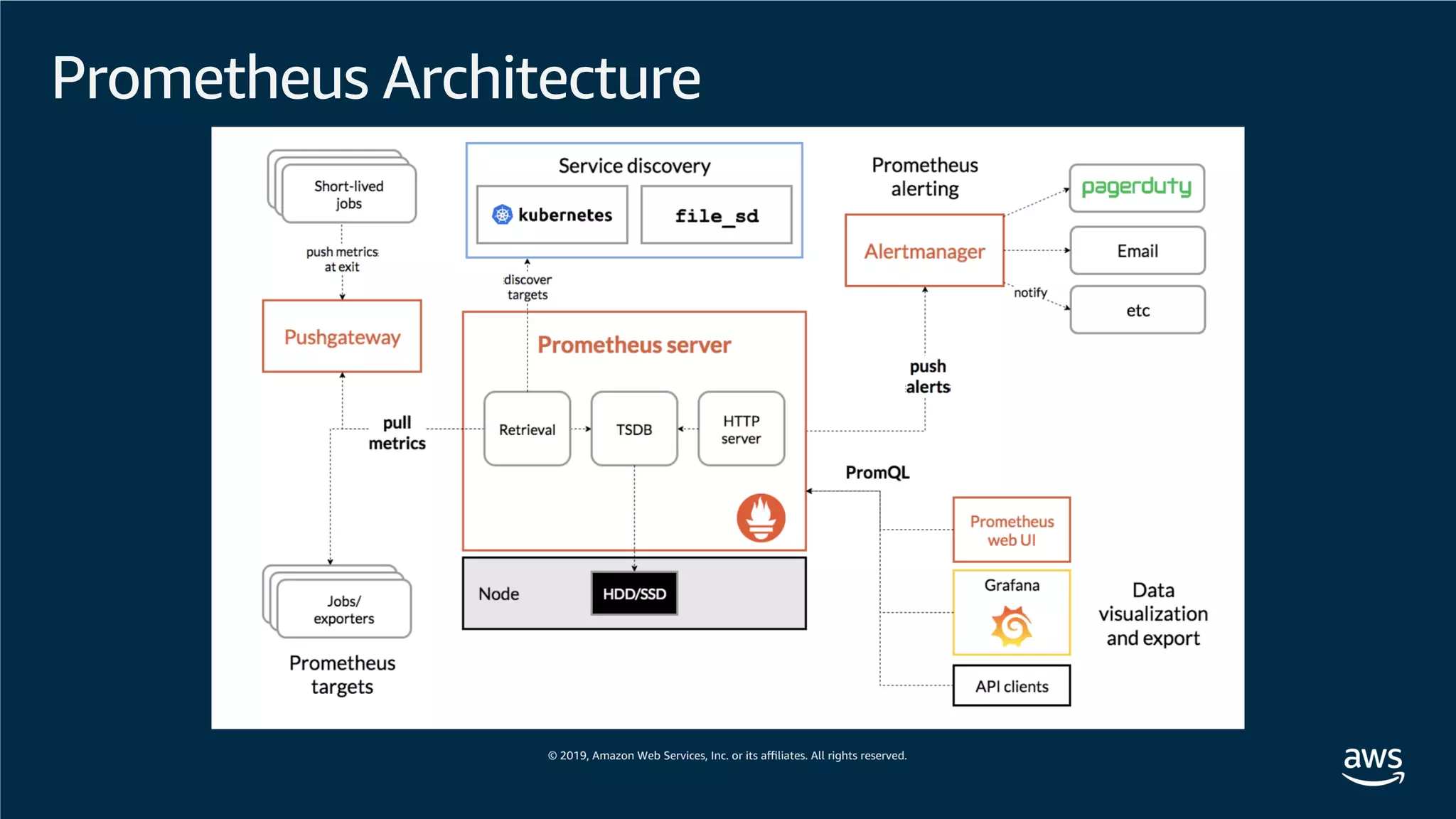

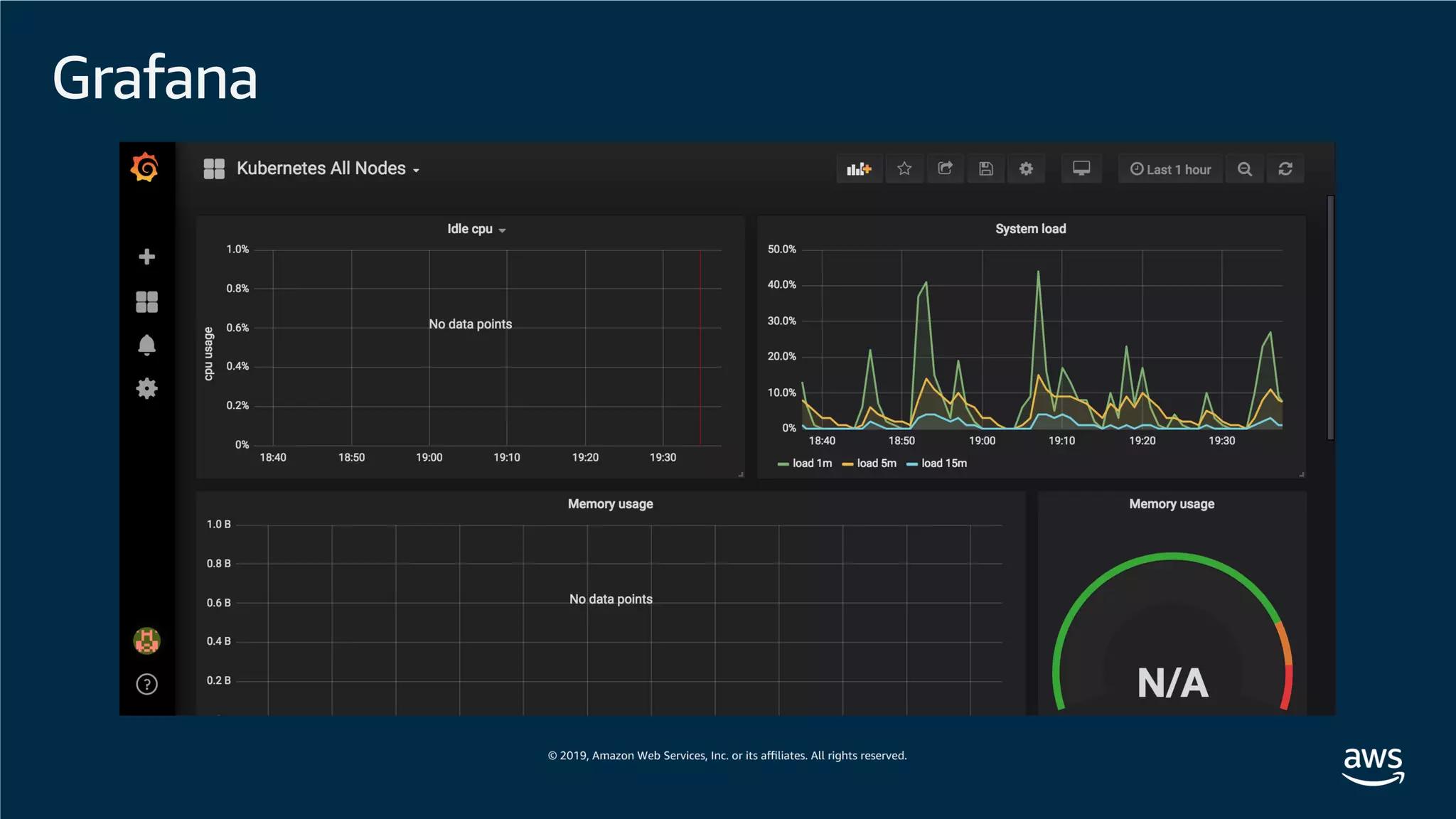

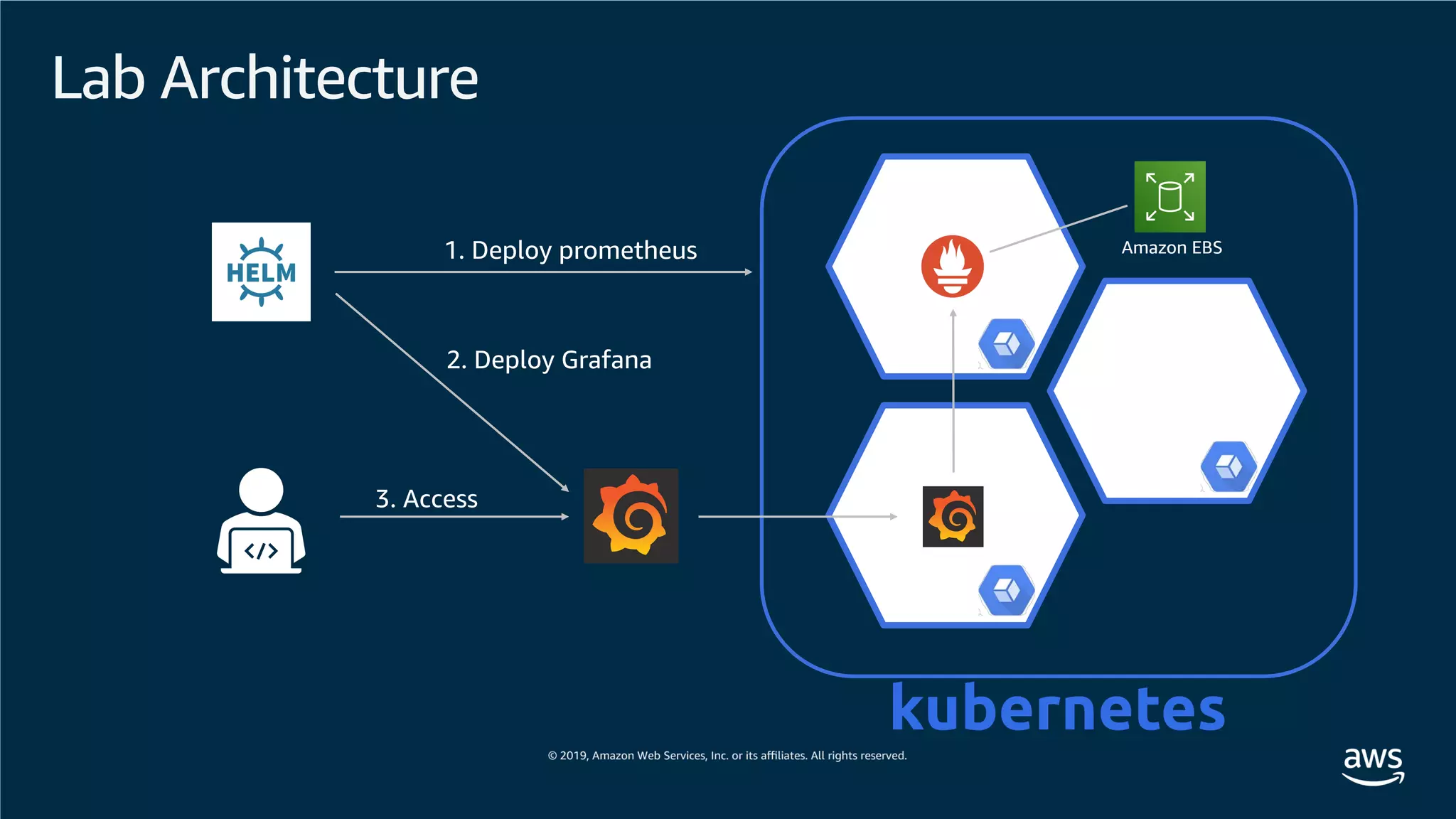

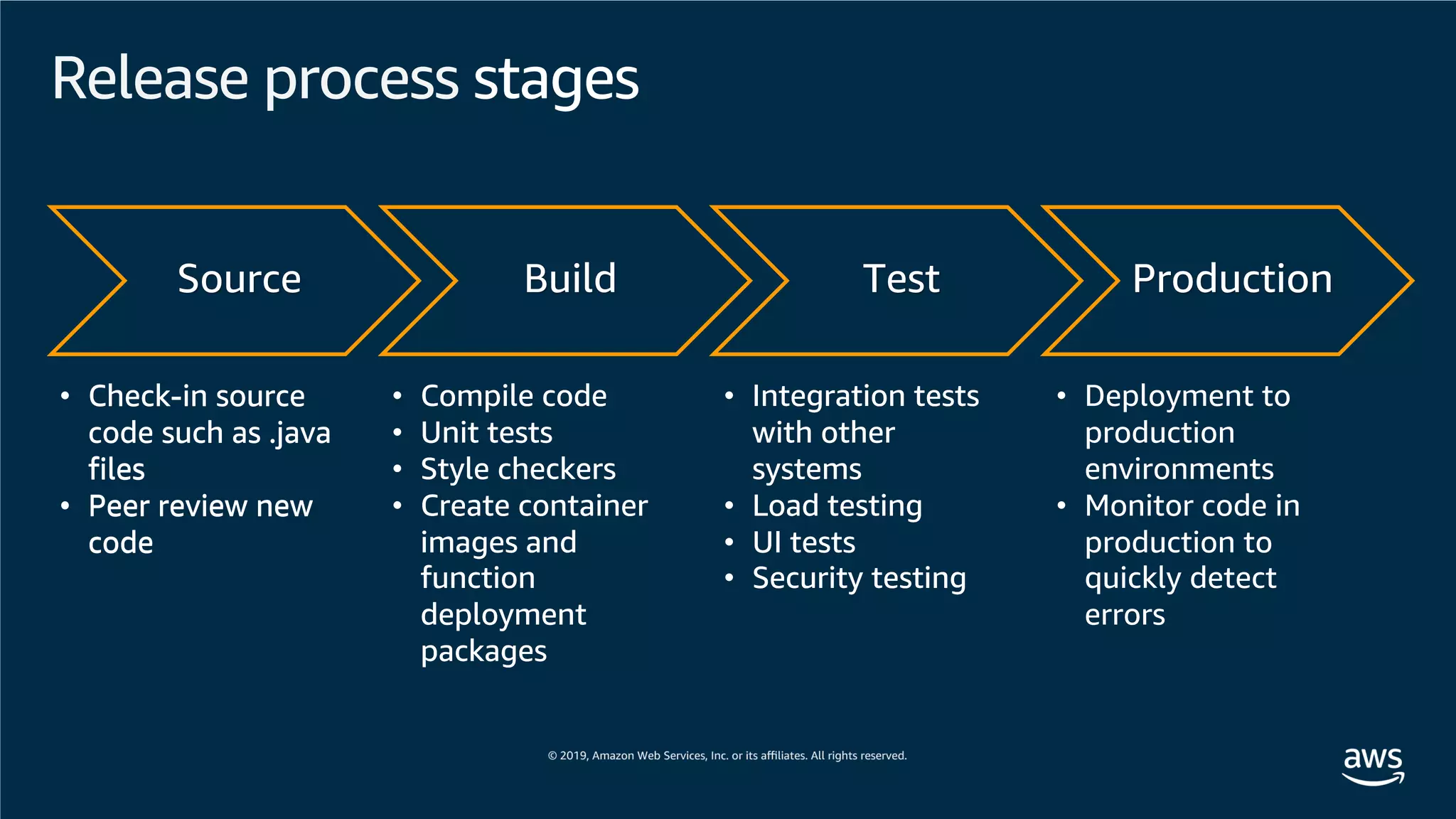

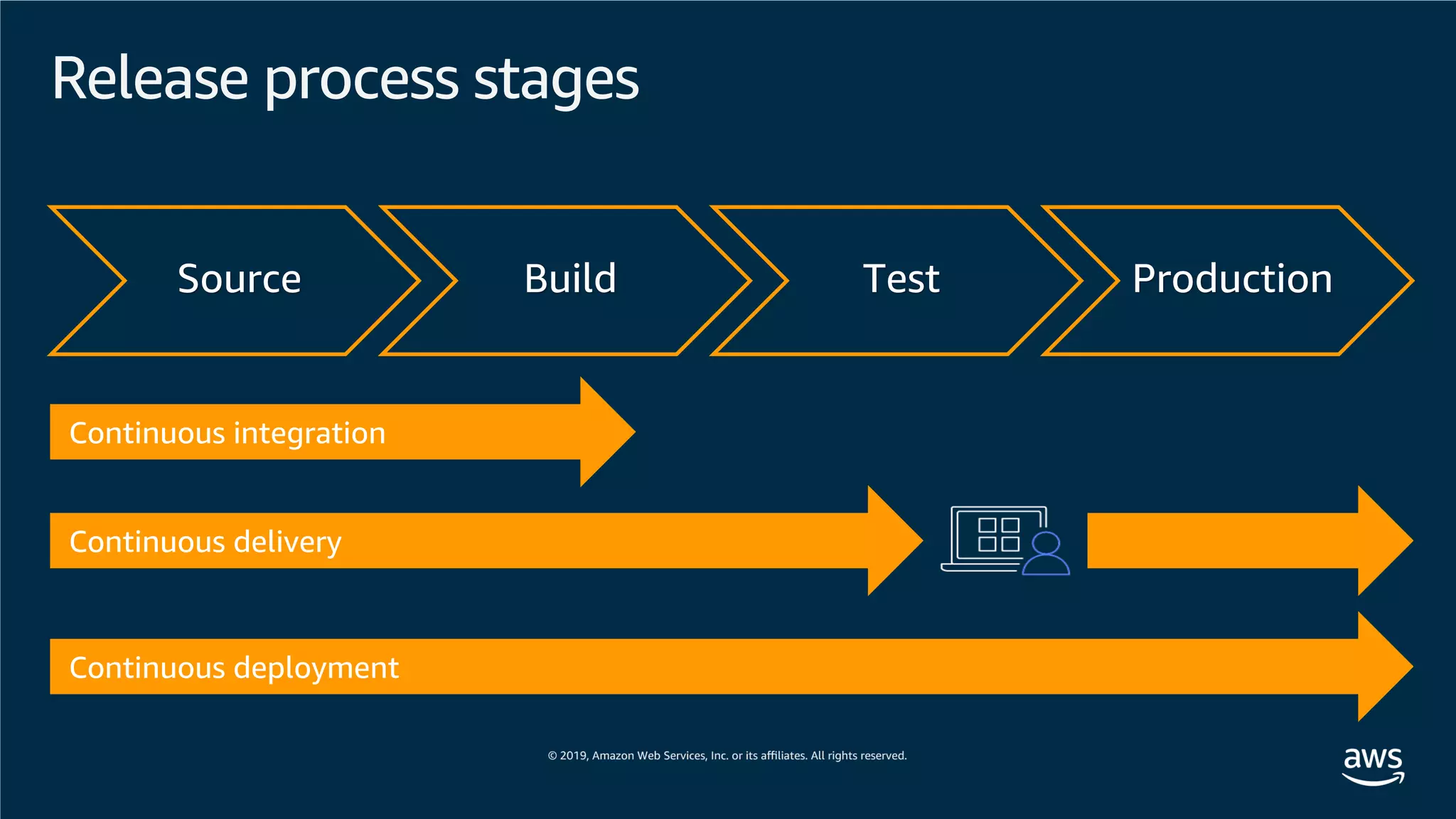

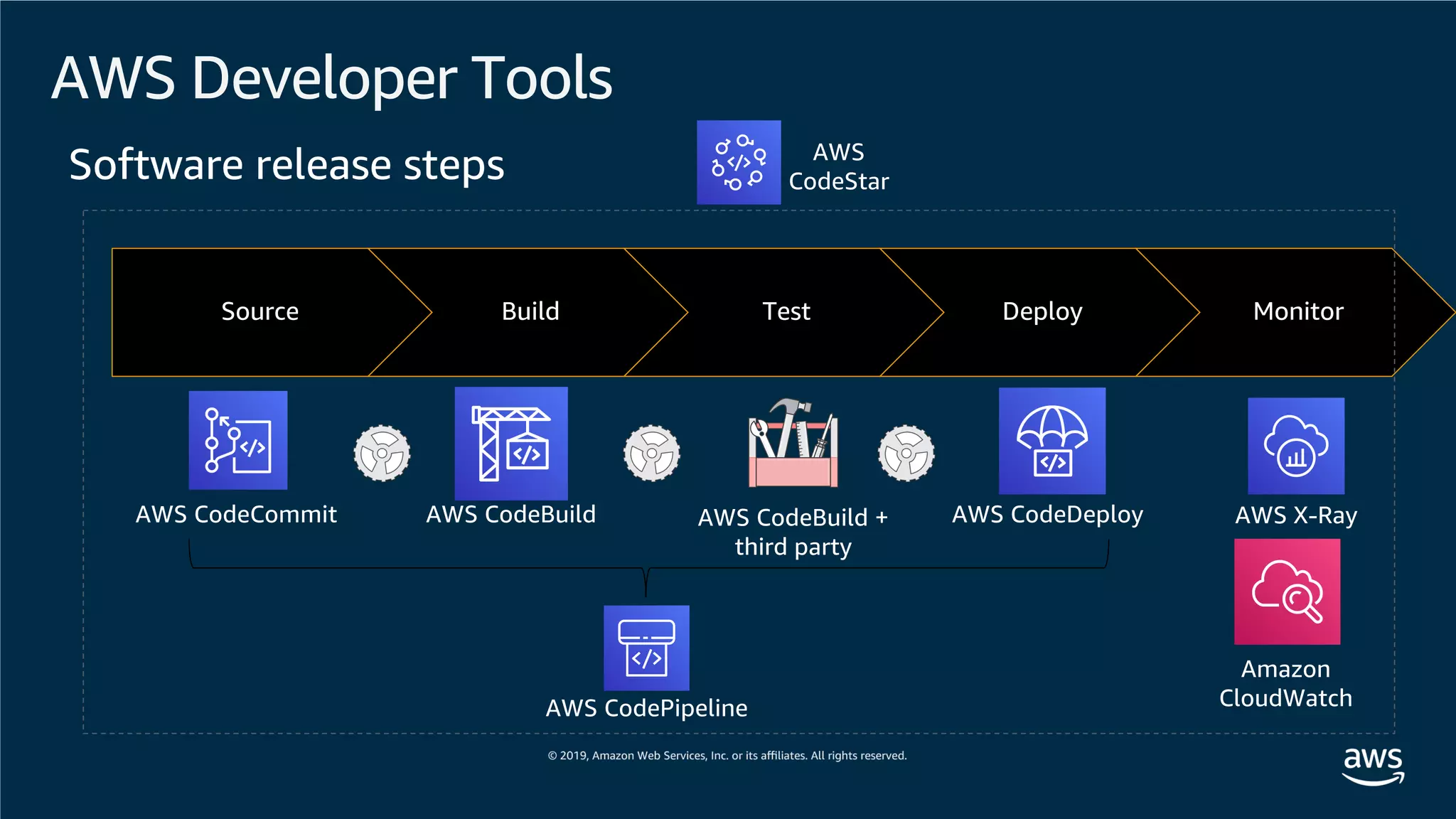

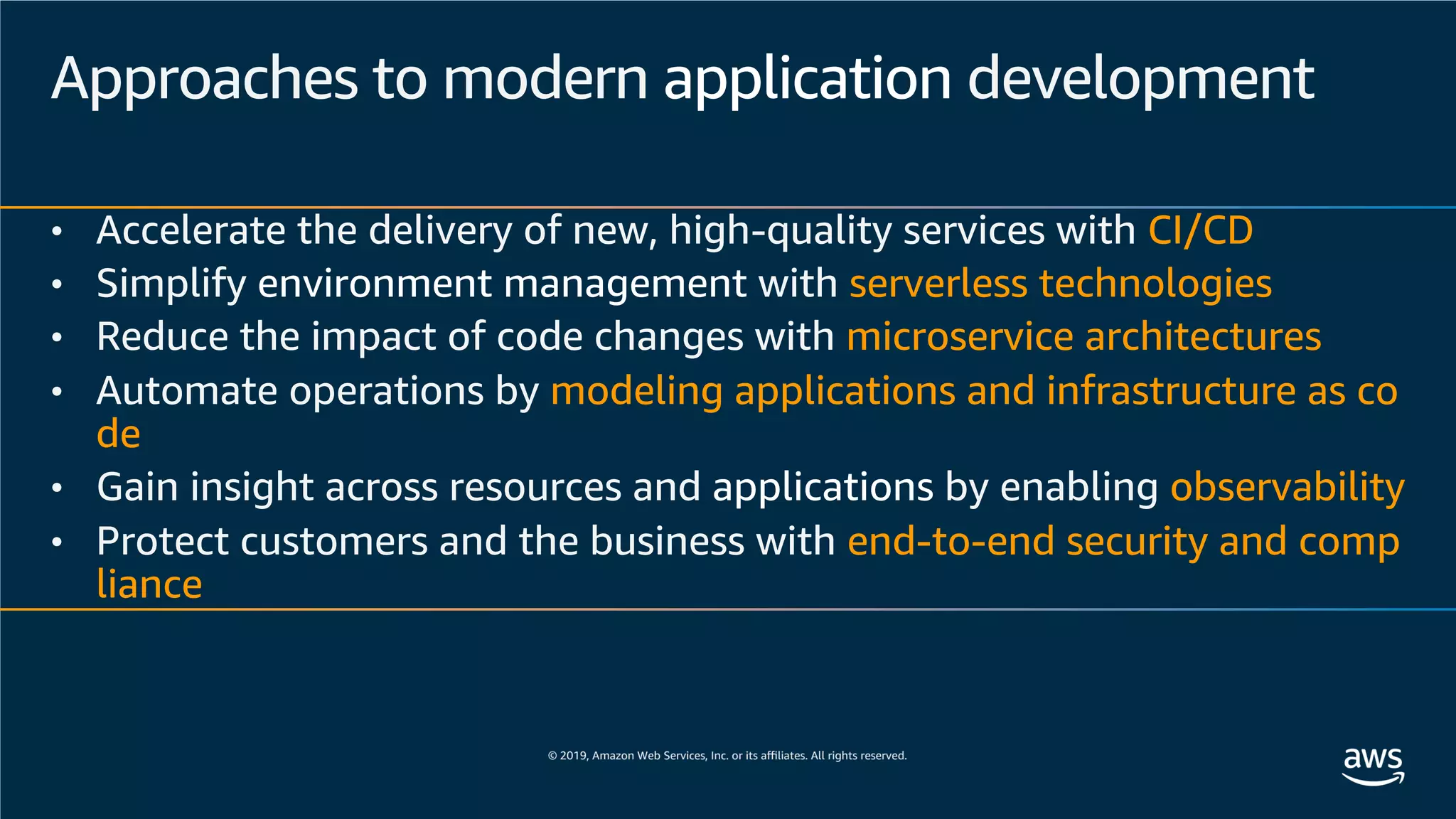

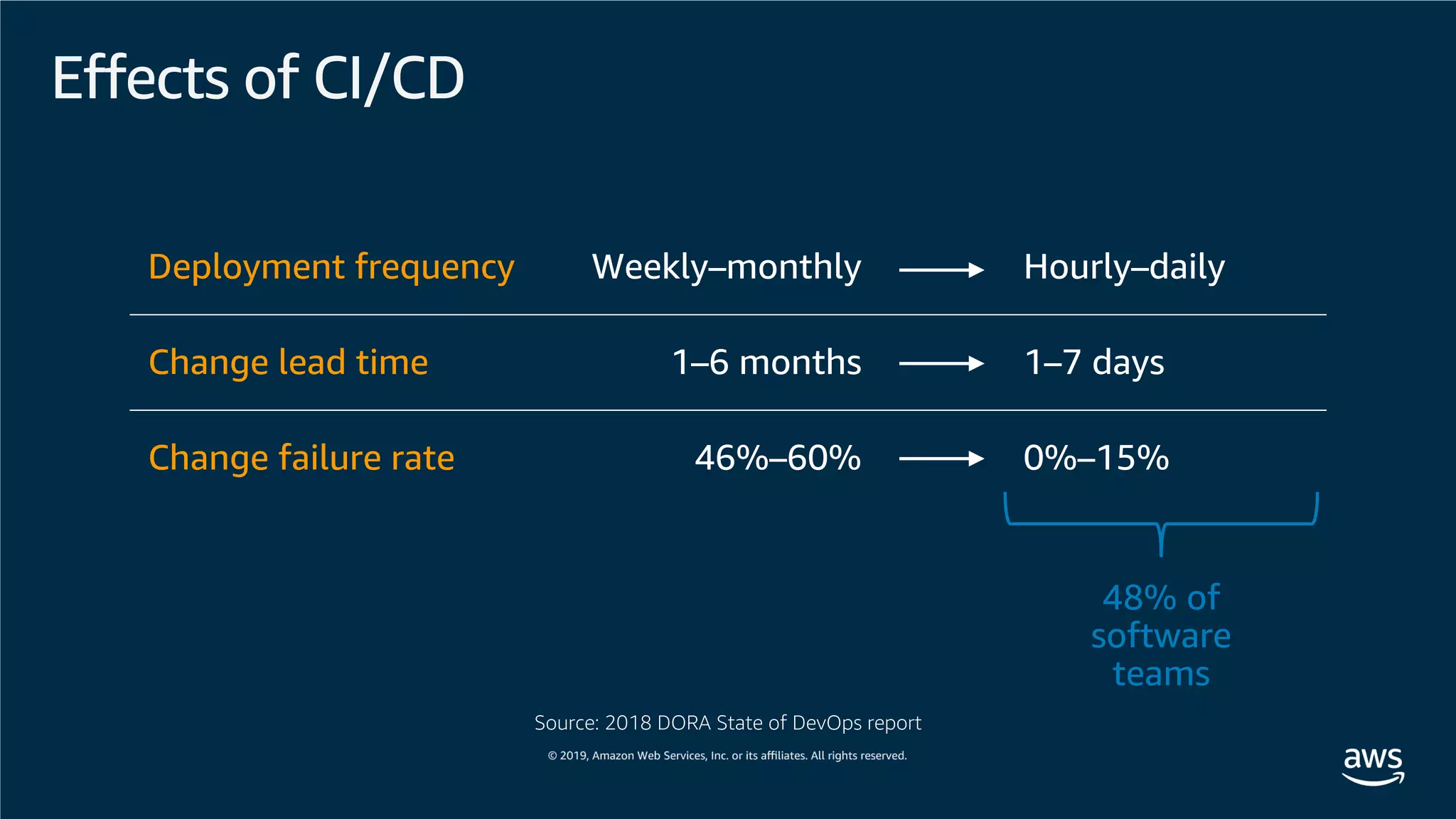

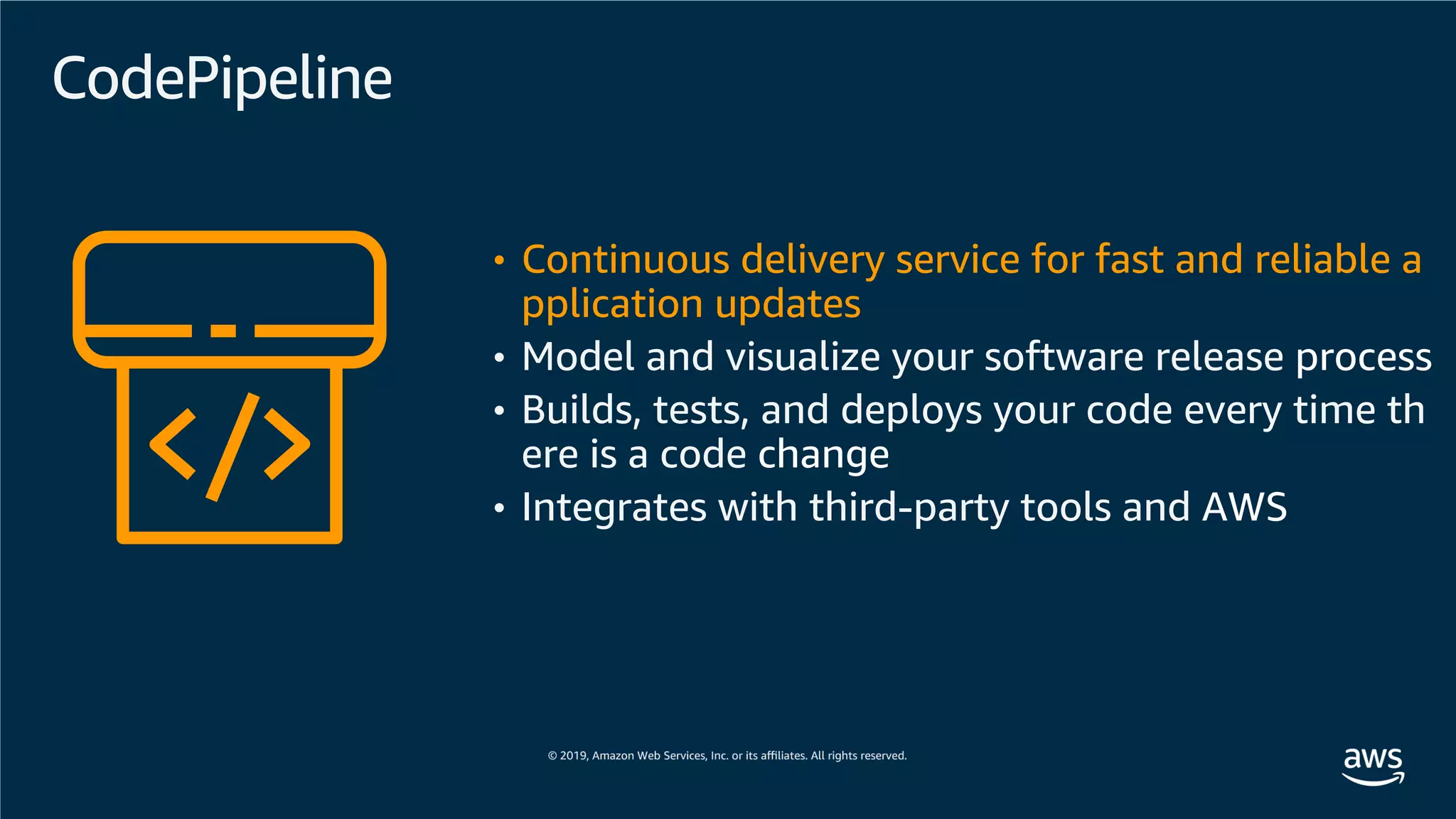

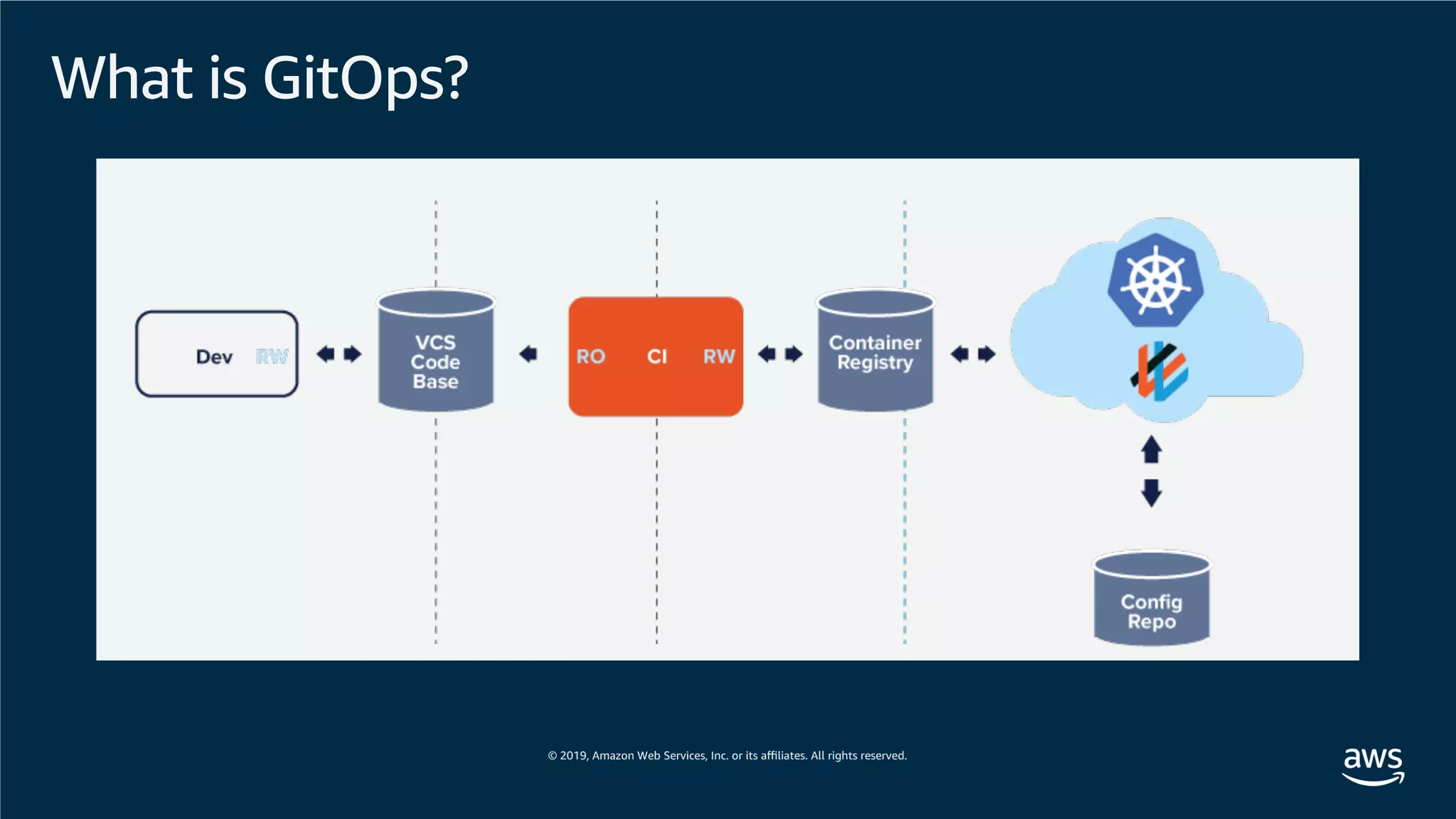

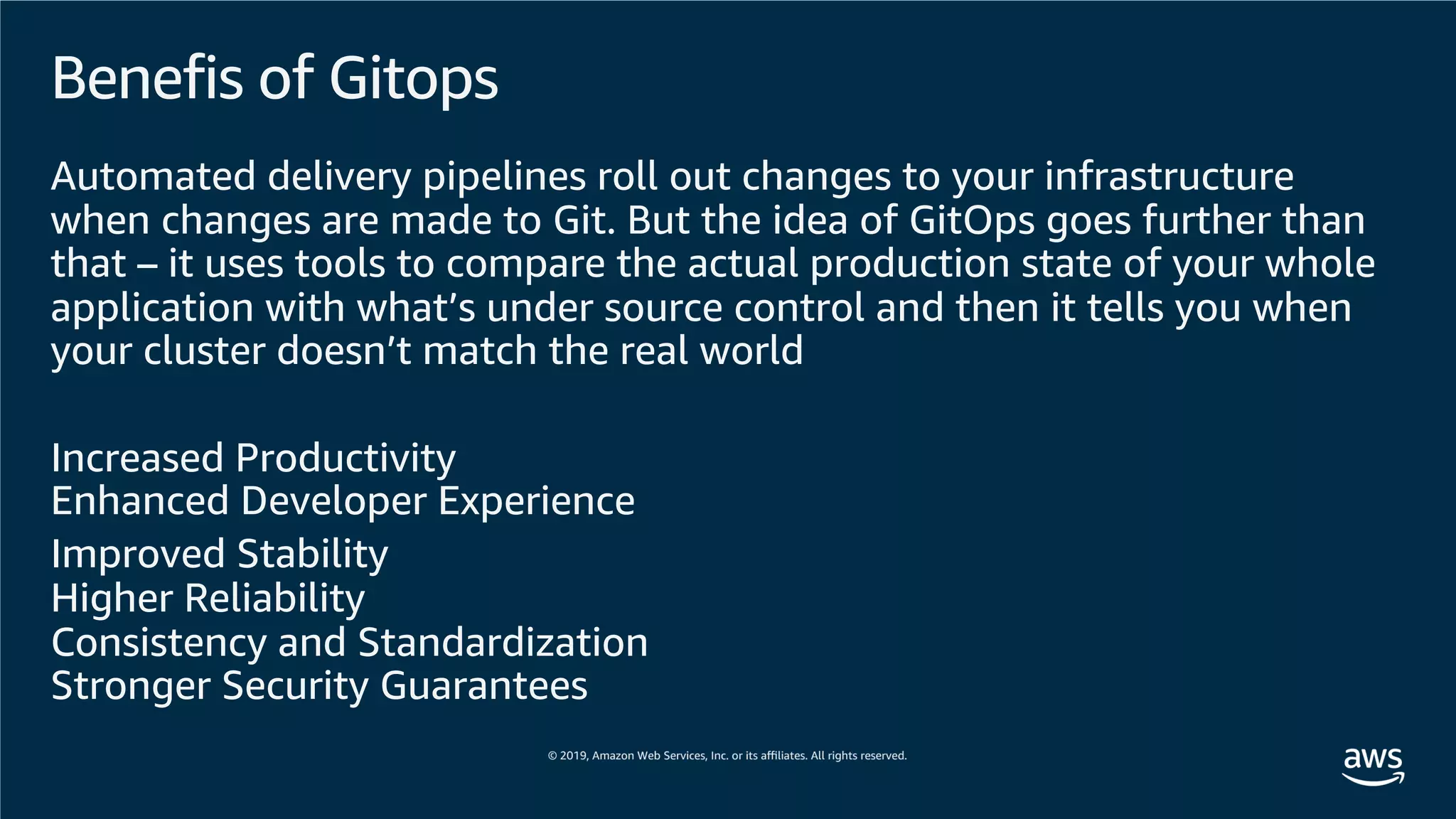

This document provides an overview of an Amazon EKS hands-on workshop. The agenda covers deploying example microservices; logging with Elasticsearch, Fluentd, and Kibana; monitoring with Prometheus and Grafana; and continuous integration/continuous delivery (CI/CD) using GitOps with Weave Flux. Key concepts include Kubernetes pods, services, and deployments; container networking with CNI plugins; observability tools; and CI/CD approaches.

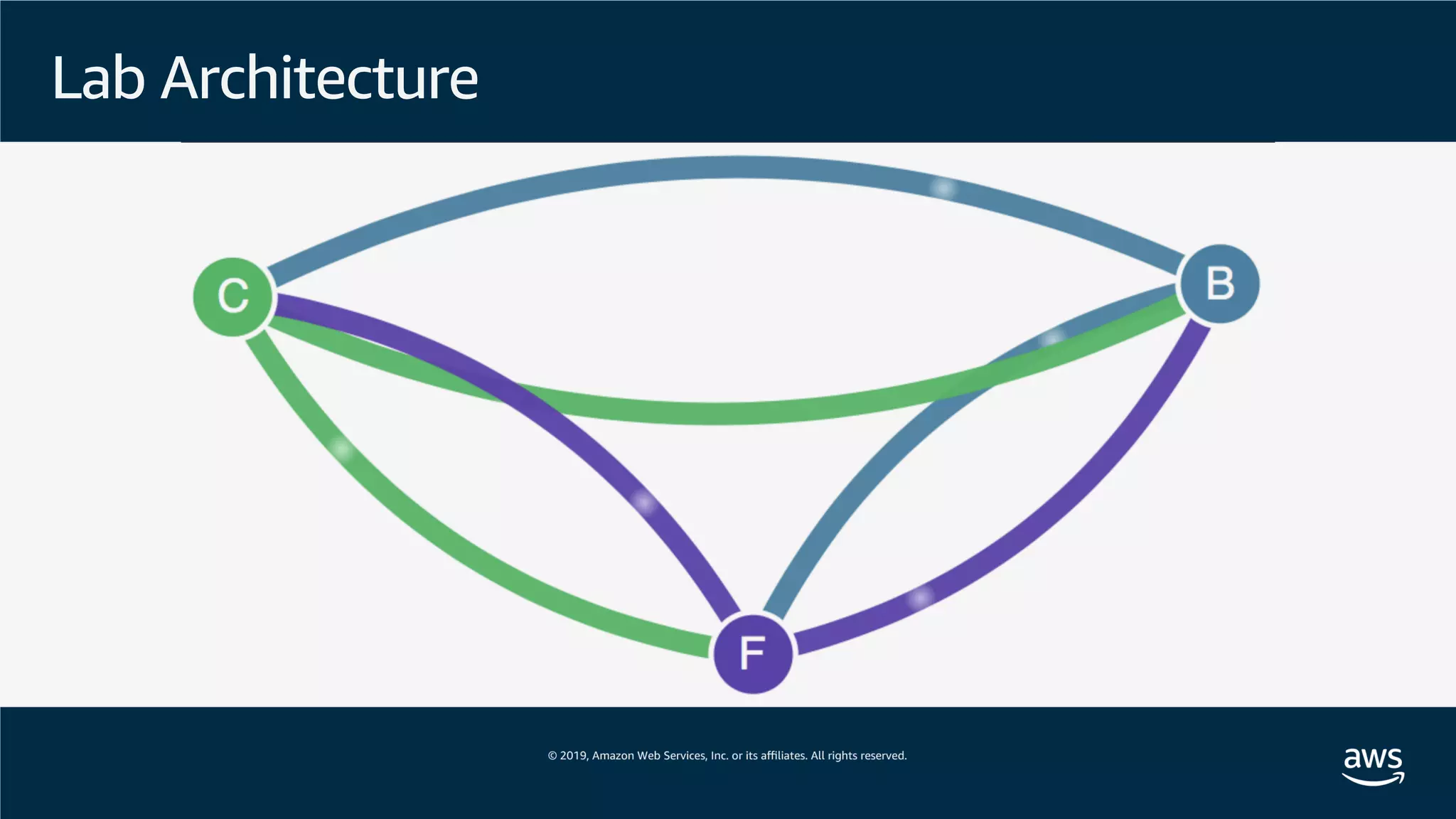

![Lab Architecture

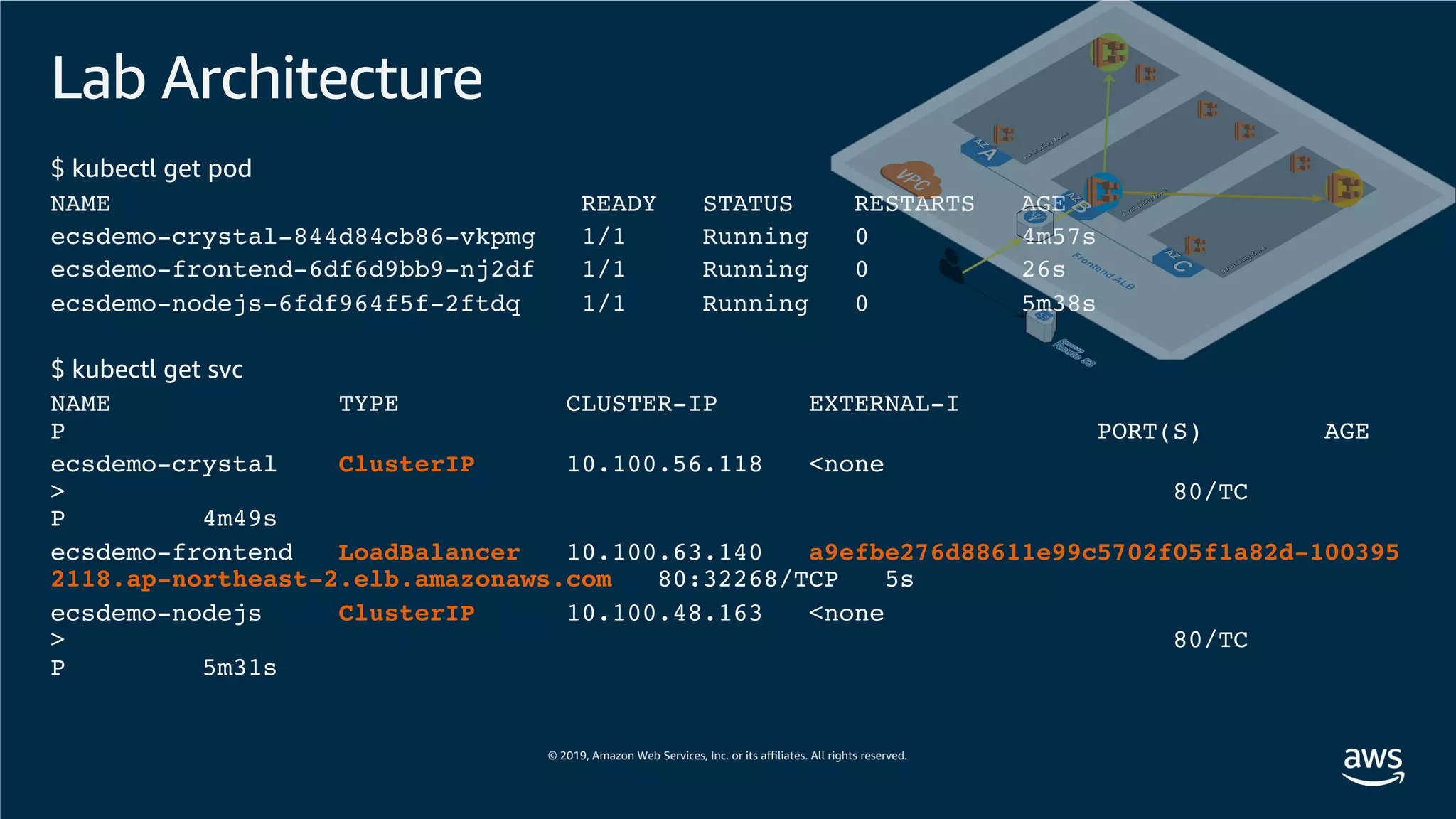

I, [2019-09-17T03:26:03.072922 #1] INFO -- : Started GET "/" for 192.168.233.51 at 2019-09-17 03:26:03 +0000

I, [2019-09-17T03:26:03.075543 #1] INFO -- : Processing by ApplicationController#index as HTML

I, [2019-09-17T03:26:03.081943 #1] INFO -- : uri port is 80

I, [2019-09-17T03:26:03.081977 #1] INFO -- : expanded http://ecsdemo-nodejs.default.svc.cluster.local/ to http://ecsdemo-nodejs

.default.svc.cluster.local/

I, [2019-09-17T03:26:03.089166 #1] INFO -- : uri port is 80

I, [2019-09-17T03:26:03.089197 #1] INFO -- : expanded http://ecsdemo-nodejs.default.svc.cluster.local/ to http://ecsdemo-nodejs

.default.svc.cluster.local/

I, [2019-09-17T03:26:03.092048 #1] INFO -- : uri port is 80

I, [2019-09-17T03:26:03.092078 #1] INFO -- : expanded http://ecsdemo-nodejs.default.svc.cluster.local/ to http://ecsdemo-nodejs

.default.svc.cluster.local/

I, [2019-09-17T03:26:03.121076 #1] INFO -- : uri port is 80

I, [2019-09-17T03:26:03.121120 #1] INFO -- : expanded http://ecsdemo-crystal.default.svc.cluster.local/crystal to http://ecsdem

o-crystal.default.svc.cluster.local/crystal

I, [2019-09-17T03:26:03.128501 #1] INFO -- : uri port is 80

I, [2019-09-17T03:26:03.128538 #1] INFO -- : expanded http://ecsdemo-crystal.default.svc.cluster.local/crystal to http://ecsdem

o-crystal.default.svc.cluster.local/crystal

I, [2019-09-17T03:26:03.135349 #1] INFO -- : uri port is 80

I, [2019-09-17T03:26:03.135382 #1] INFO -- : expanded http://ecsdemo-crystal.default.svc.cluster.local/crystal to http://ecsdem

o-crystal.default.svc.cluster.local/crystal

I, [2019-09-17T03:26:03.146138 #1] INFO -- : Rendered application/index.html.erb within layouts/application (2.5ms)

I, [2019-09-17T03:26:03.146535 #1] INFO -- : Completed 200 OK in 71ms (Views: 6.3ms | ActiveRecord: 0.0ms)](https://image.slidesharecdn.com/awsdevdayseoul2019-amazonekshands-onworkshopv0-190930092336/75/AWS-Dev-Day-Amazon-EKS-28-2048.jpg)
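The URLs in the log above follow the standard Kubernetes in-cluster DNS pattern for a Service, `<service>.<namespace>.svc.<cluster-domain>`. The following is a minimal sketch of how such URLs are formed. The helper names `service_fqdn` and `service_url` are hypothetical and are not part of the workshop code; the `cluster.local` domain and the `default` namespace are taken from the log lines.

```python
def service_fqdn(service: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Build the in-cluster DNS name of a Kubernetes Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def service_url(service: str, namespace: str = "default",
                path: str = "/", port: int = 80) -> str:
    """Build an HTTP URL for a Service (hypothetical helper for illustration)."""
    host = service_fqdn(service, namespace)
    # Port 80 is the HTTP default, so it is omitted from the URL,
    # matching the "uri port is 80" lines in the log.
    netloc = host if port == 80 else f"{host}:{port}"
    return f"http://{netloc}{path}"


print(service_url("ecsdemo-nodejs"))
# http://ecsdemo-nodejs.default.svc.cluster.local/
print(service_url("ecsdemo-crystal", path="/crystal"))
# http://ecsdemo-crystal.default.svc.cluster.local/crystal
```

Because the name resolves to the Service rather than to an individual pod, callers do not need to know which pod ends up serving each request.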

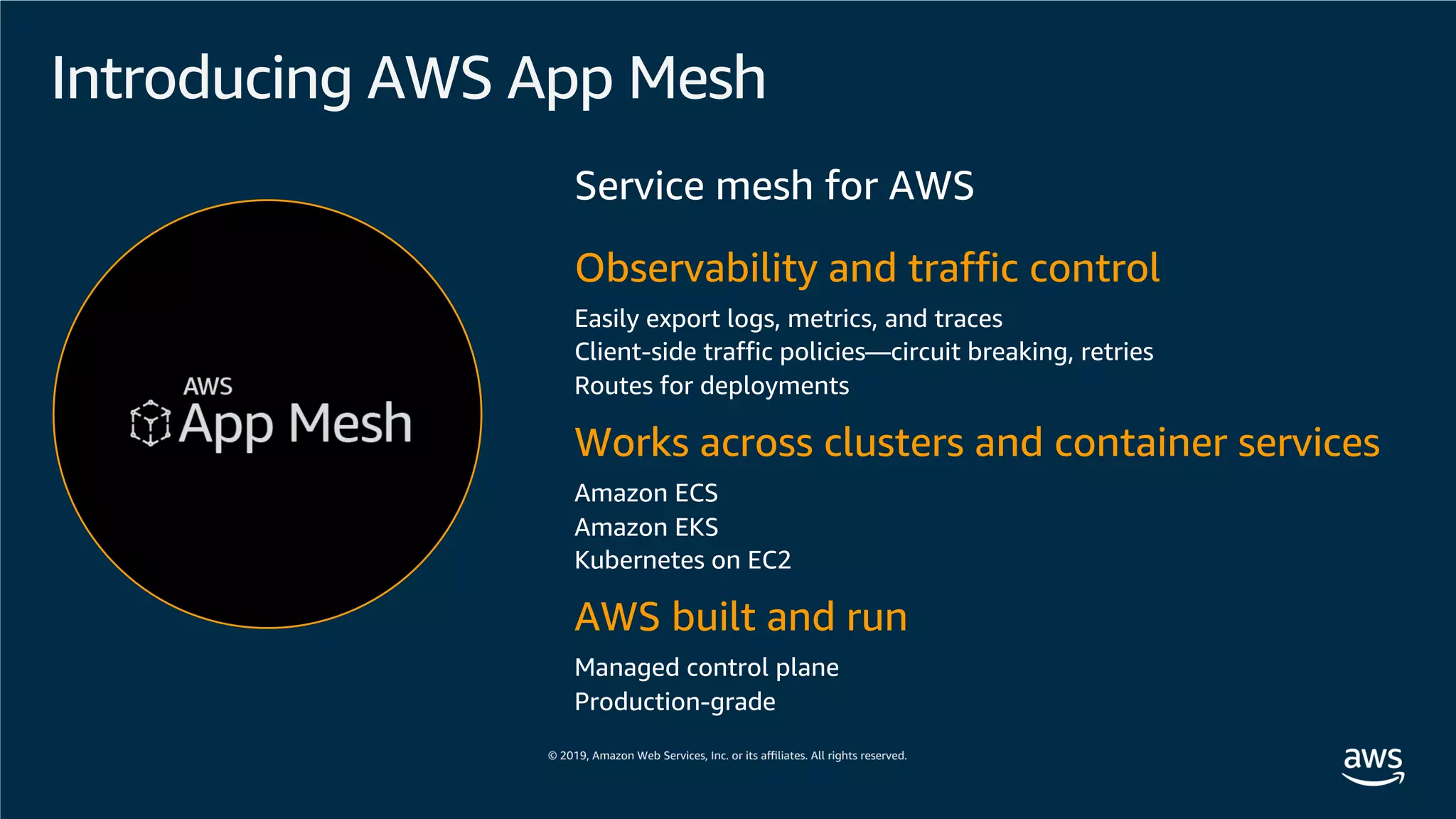

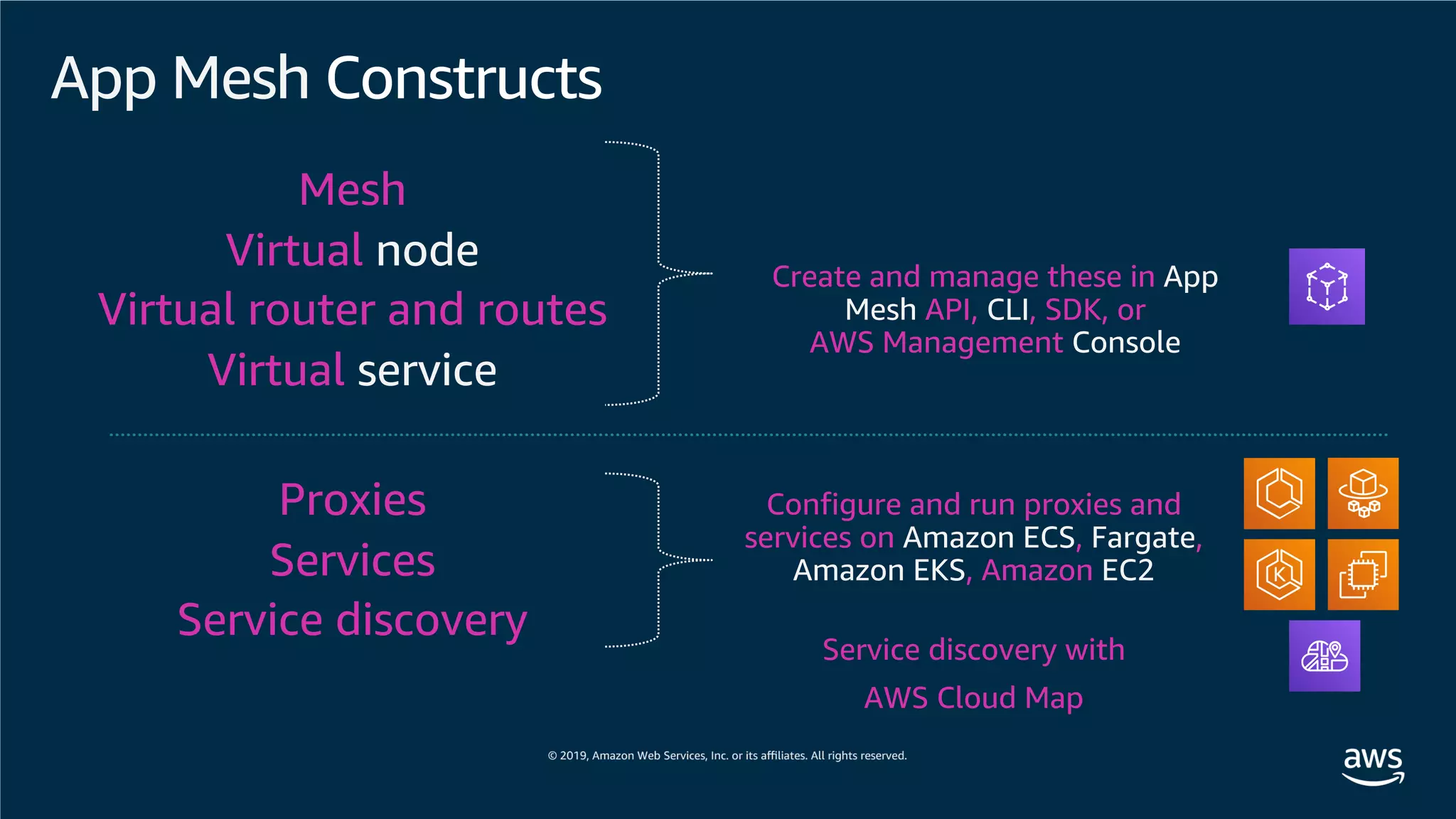

![Connecting microservices: mesh [sample_app] with a virtual router whose HTTP route (prefix: /) targets B and B’; virtual nodes A, B, and B’ each have service discovery, a listener, and backends](https://image.slidesharecdn.com/awsdevdayseoul2019-amazonekshands-onworkshopv0-190930092336/75/AWS-Dev-Day-Amazon-EKS-88-2048.jpg)
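The diagram shows a virtual router with one HTTP route (prefix `/`) whose targets are the virtual nodes B and B’. As a rough illustration of how a route with several targets can split traffic, here is a minimal sketch of prefix matching followed by weighted target selection. It is a simulation only, not the App Mesh API. The class names and the 90/10 weights are assumptions, since the slide does not state any weights.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class WeightedTarget:
    virtual_node: str
    weight: int  # relative weight; the values used below are assumed


@dataclass(frozen=True)
class HttpRoute:
    prefix: str
    targets: tuple

    def matches(self, path: str) -> bool:
        # A prefix route matches any request path that starts with the prefix.
        return path.startswith(self.prefix)

    def pick(self, rng: random.Random) -> str:
        # Choose one virtual node, with probability proportional to its weight.
        nodes = [t.virtual_node for t in self.targets]
        weights = [t.weight for t in self.targets]
        return rng.choices(nodes, weights=weights, k=1)[0]


# Hypothetical 90/10 split between B and B' (weights are not from the slides).
route = HttpRoute(prefix="/", targets=(WeightedTarget("B", 90),
                                       WeightedTarget("B'", 10)))

rng = random.Random(0)  # fixed seed so the simulation is repeatable
counts = {"B": 0, "B'": 0}
for _ in range(1000):
    if route.matches("/"):
        counts[route.pick(rng)] += 1

print(counts)
```

With weights like these, most requests from A would reach B while a small share reaches B’, which is the usual shape of a canary rollout.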