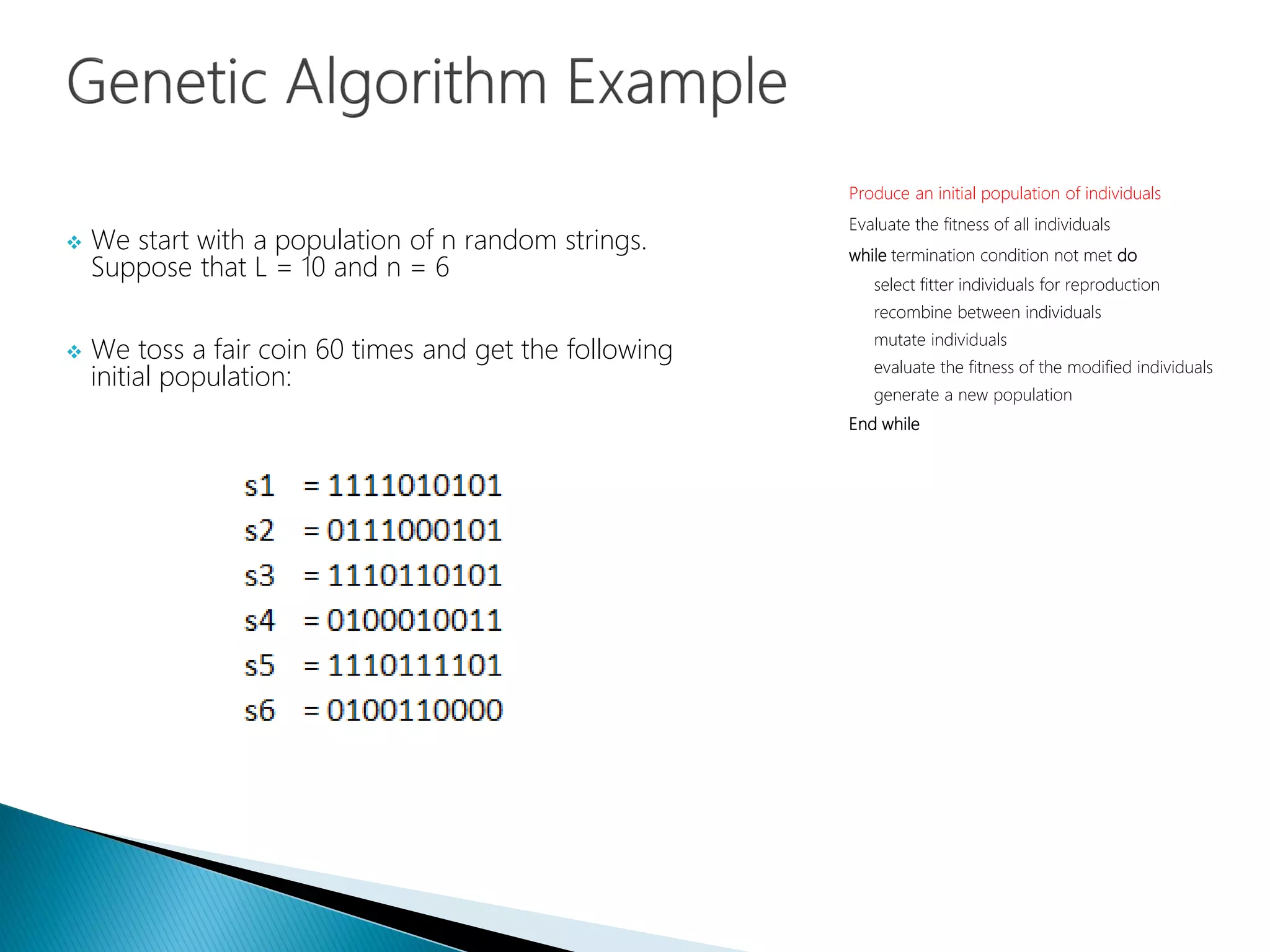

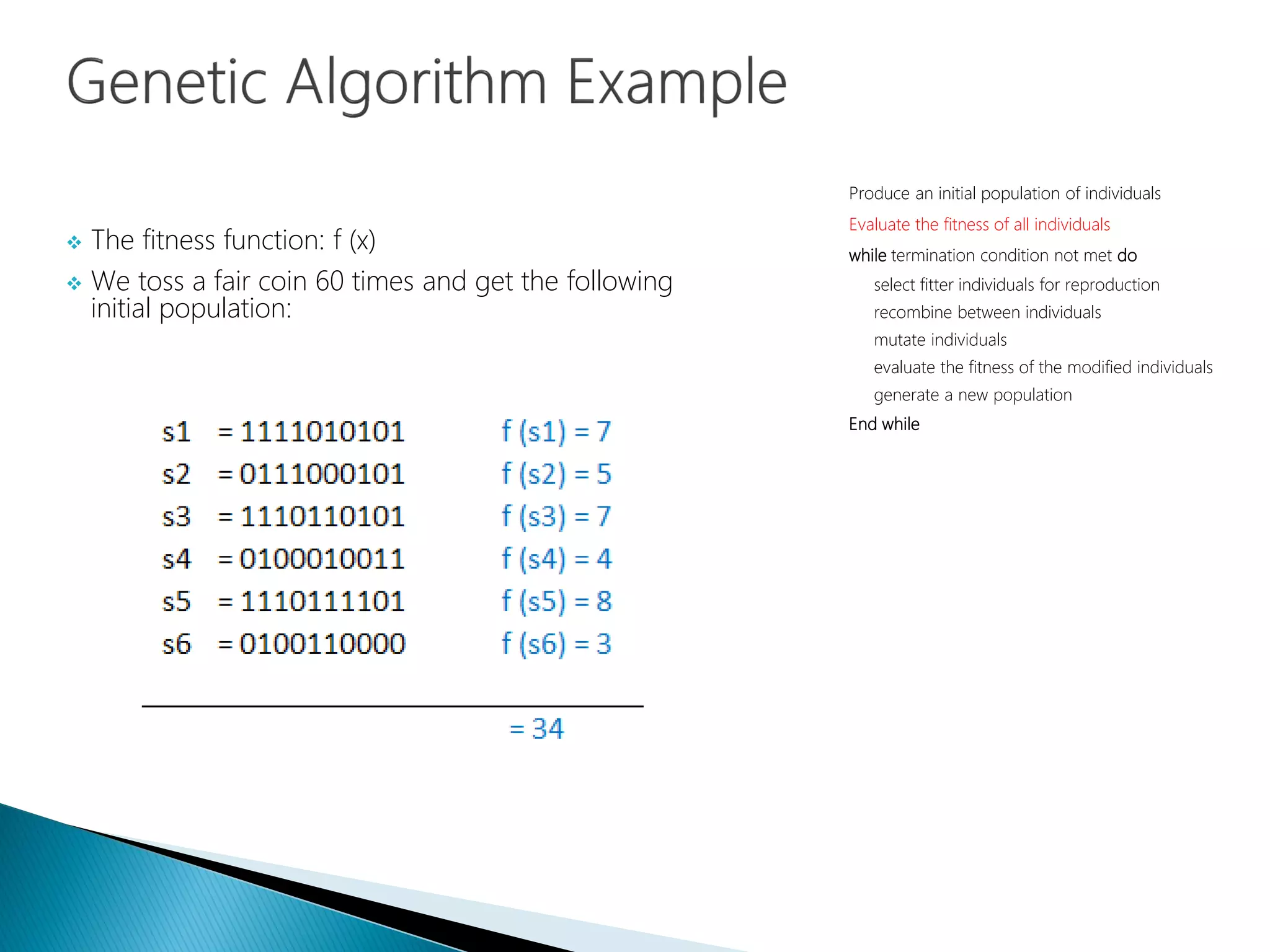

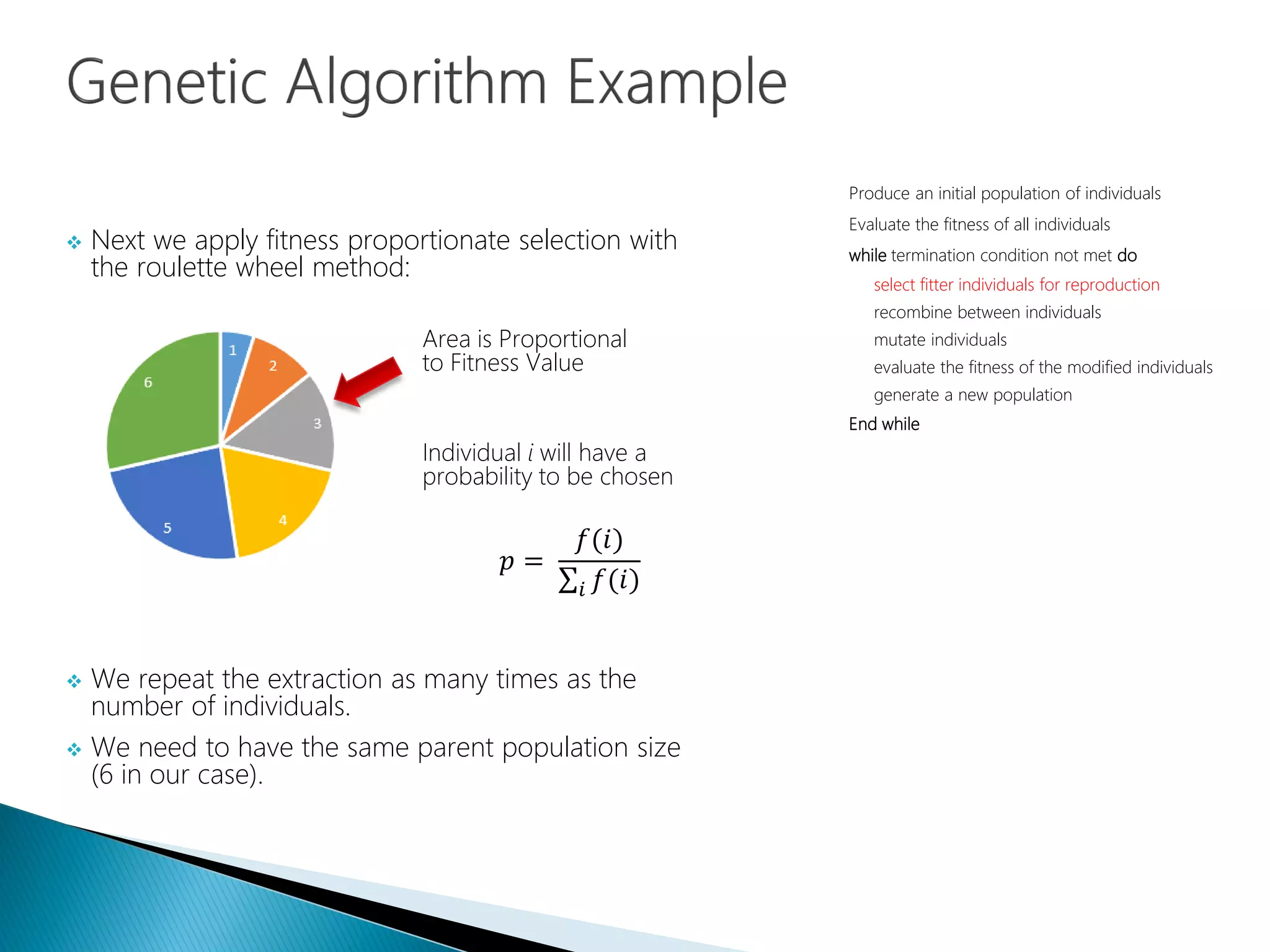

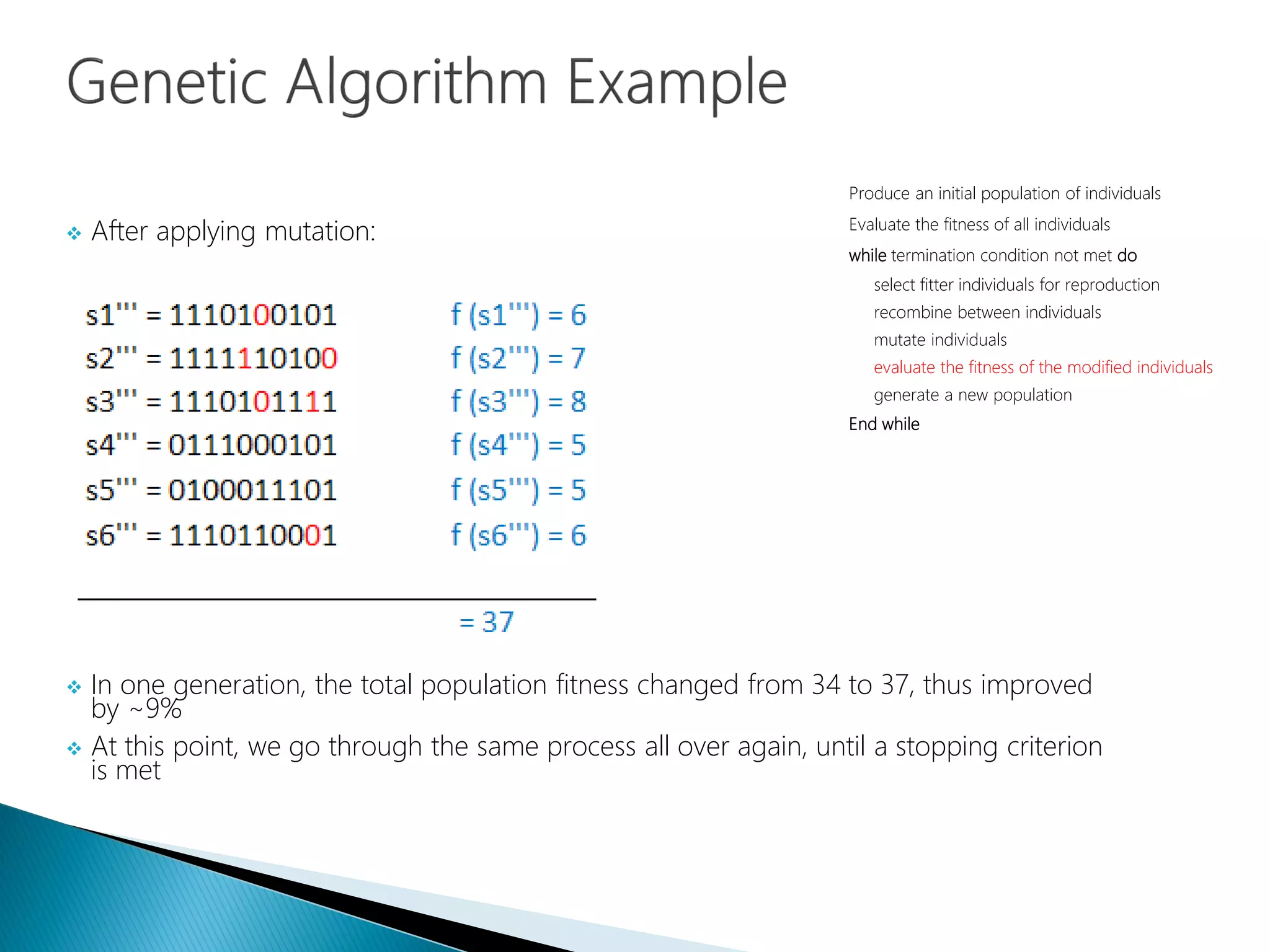

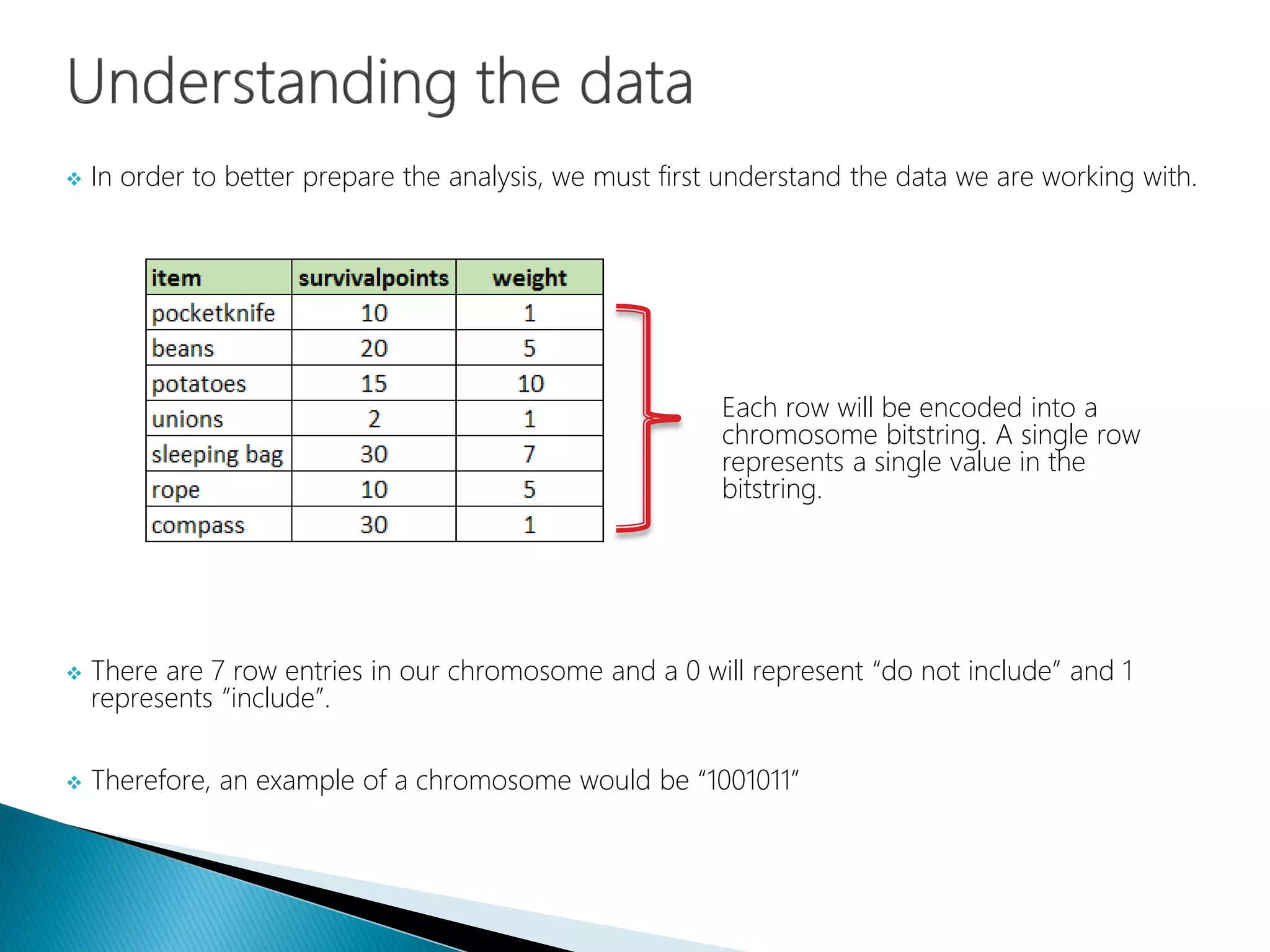

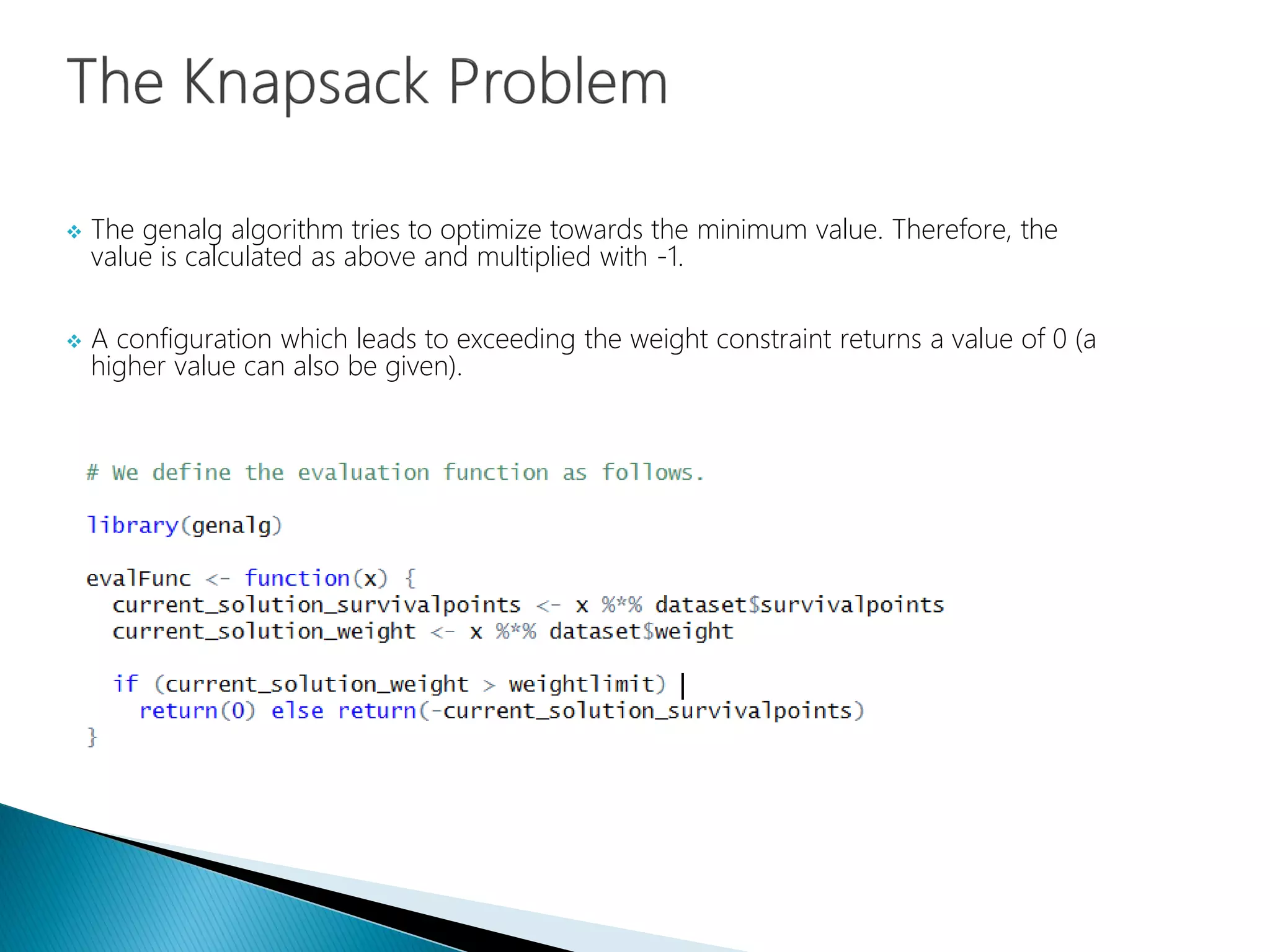

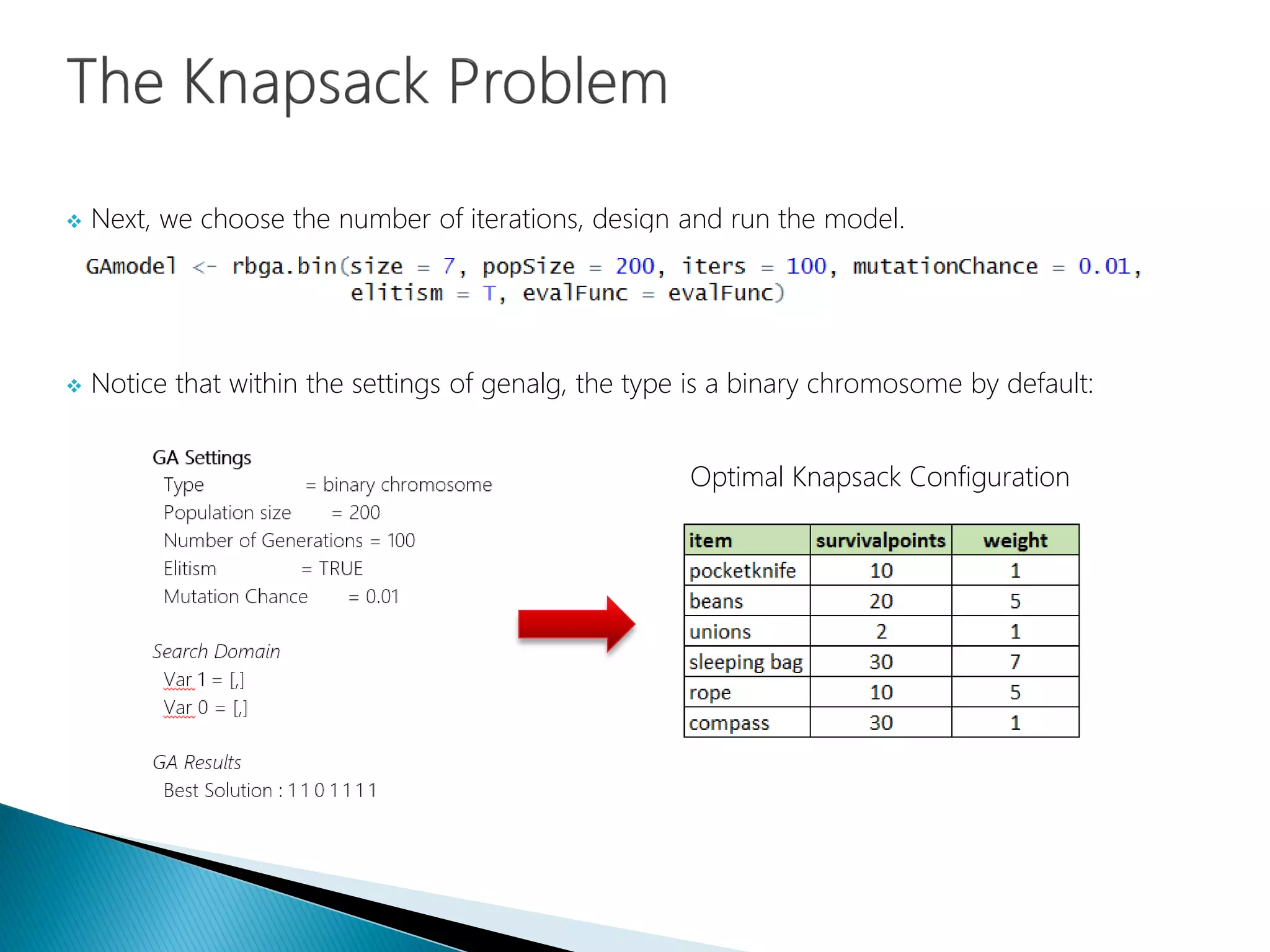

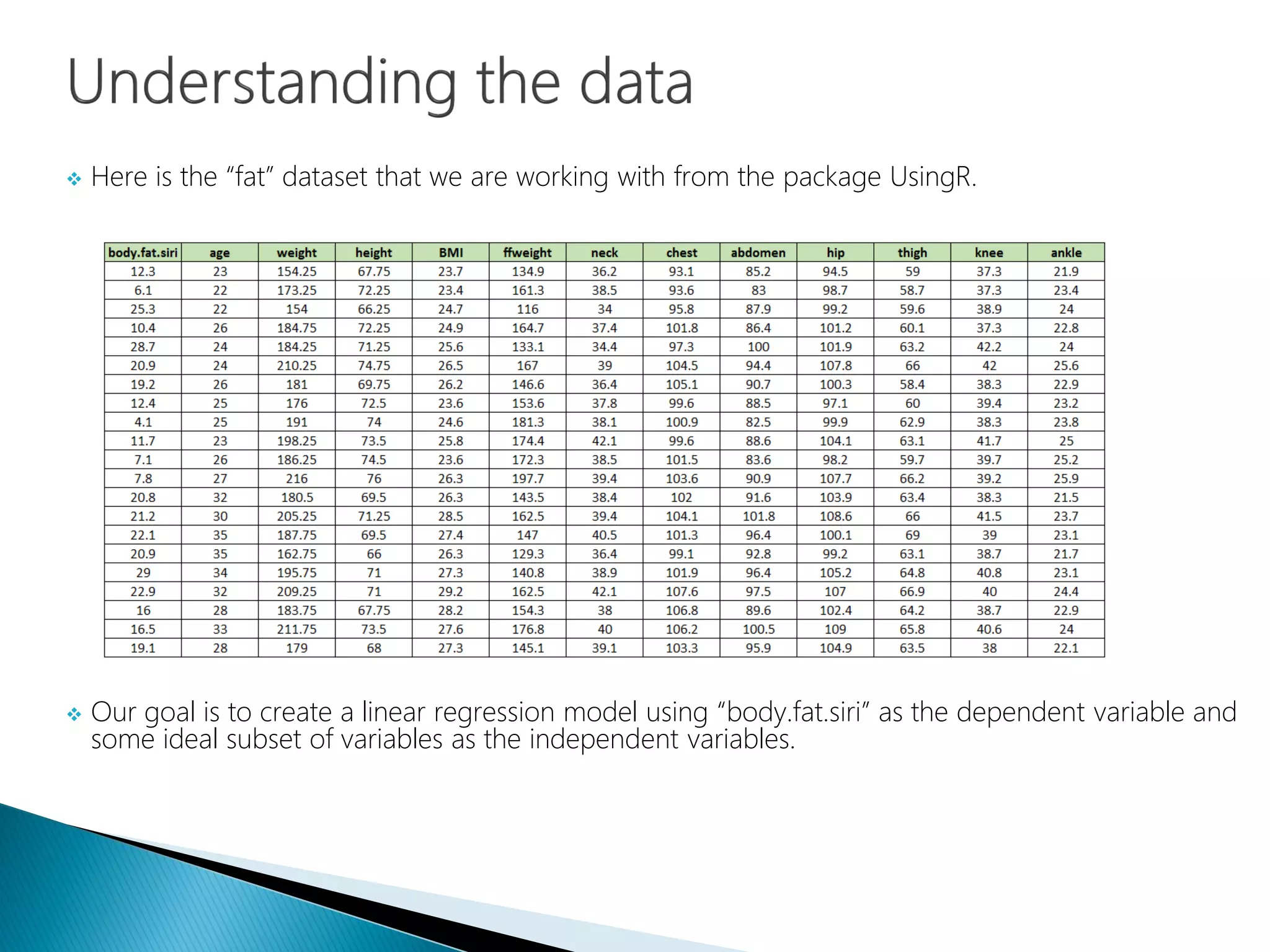

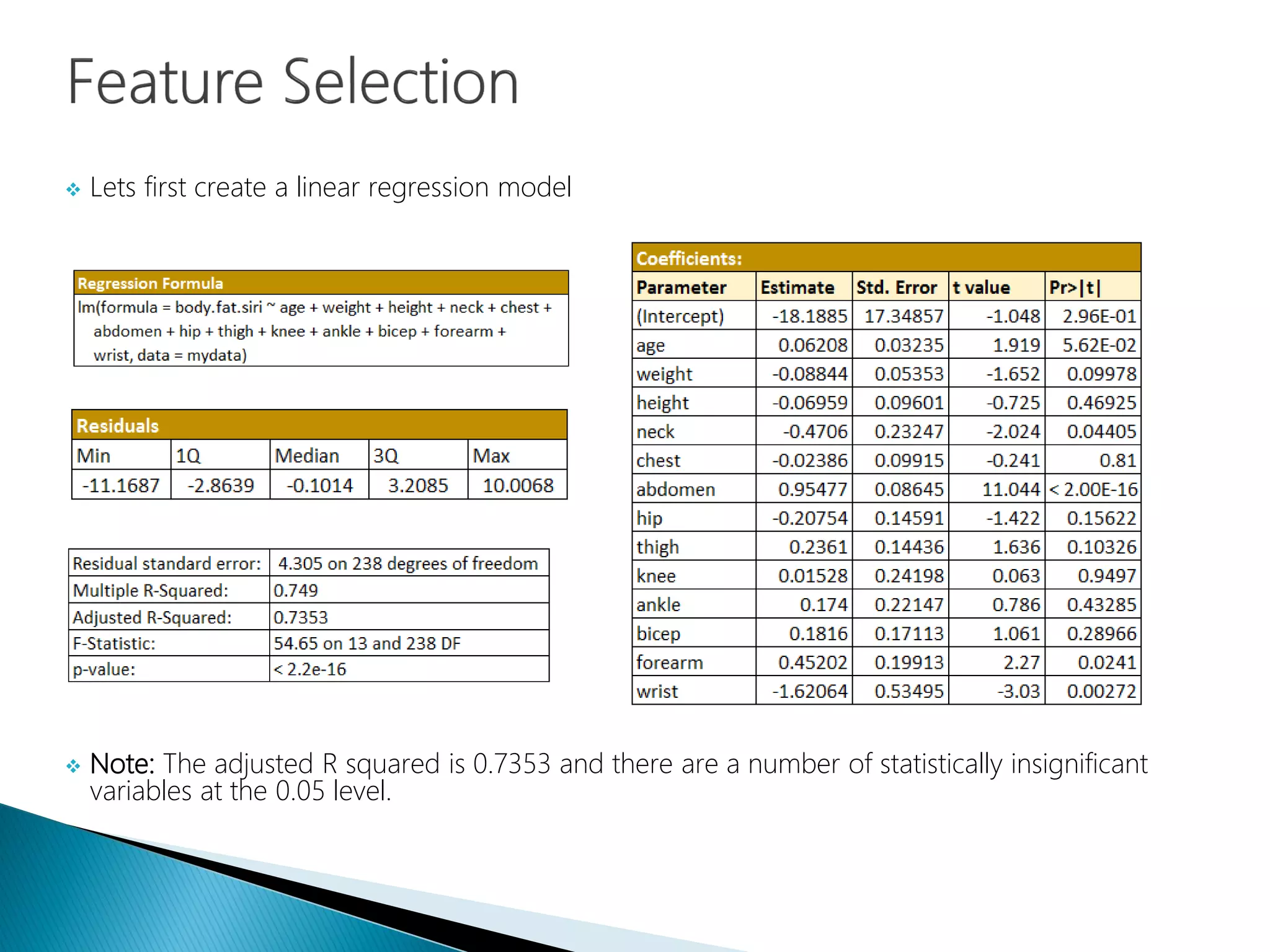

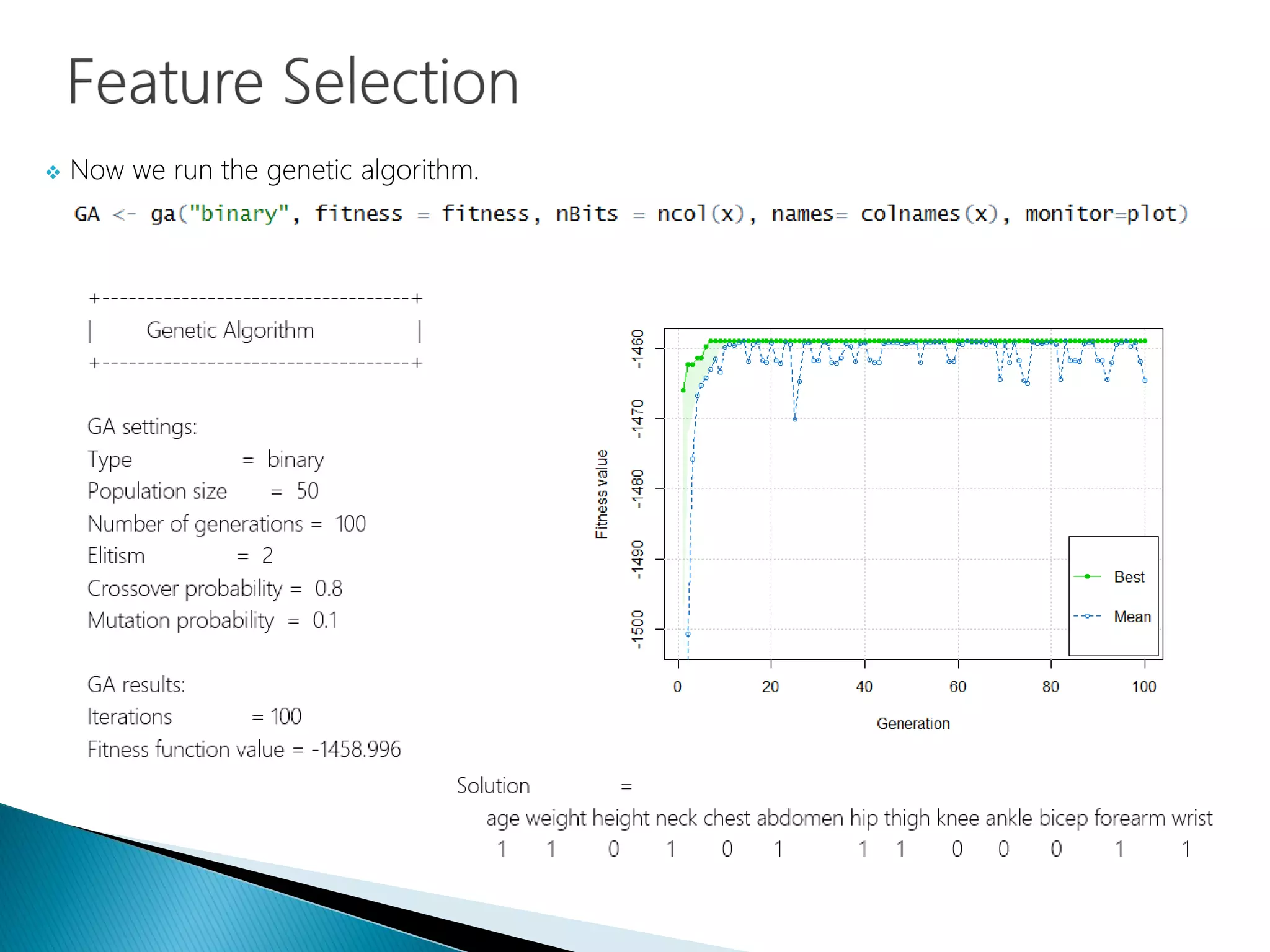

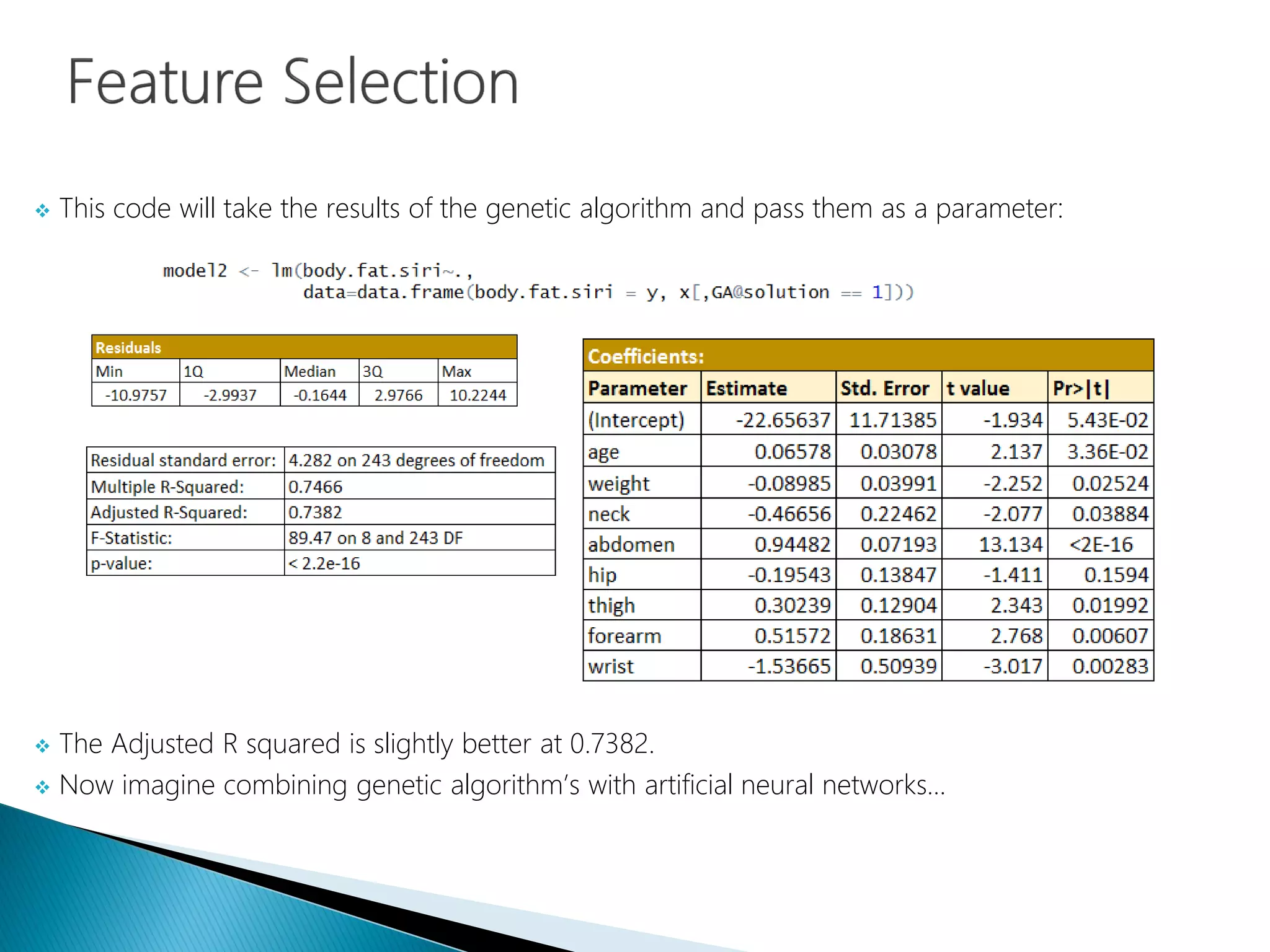

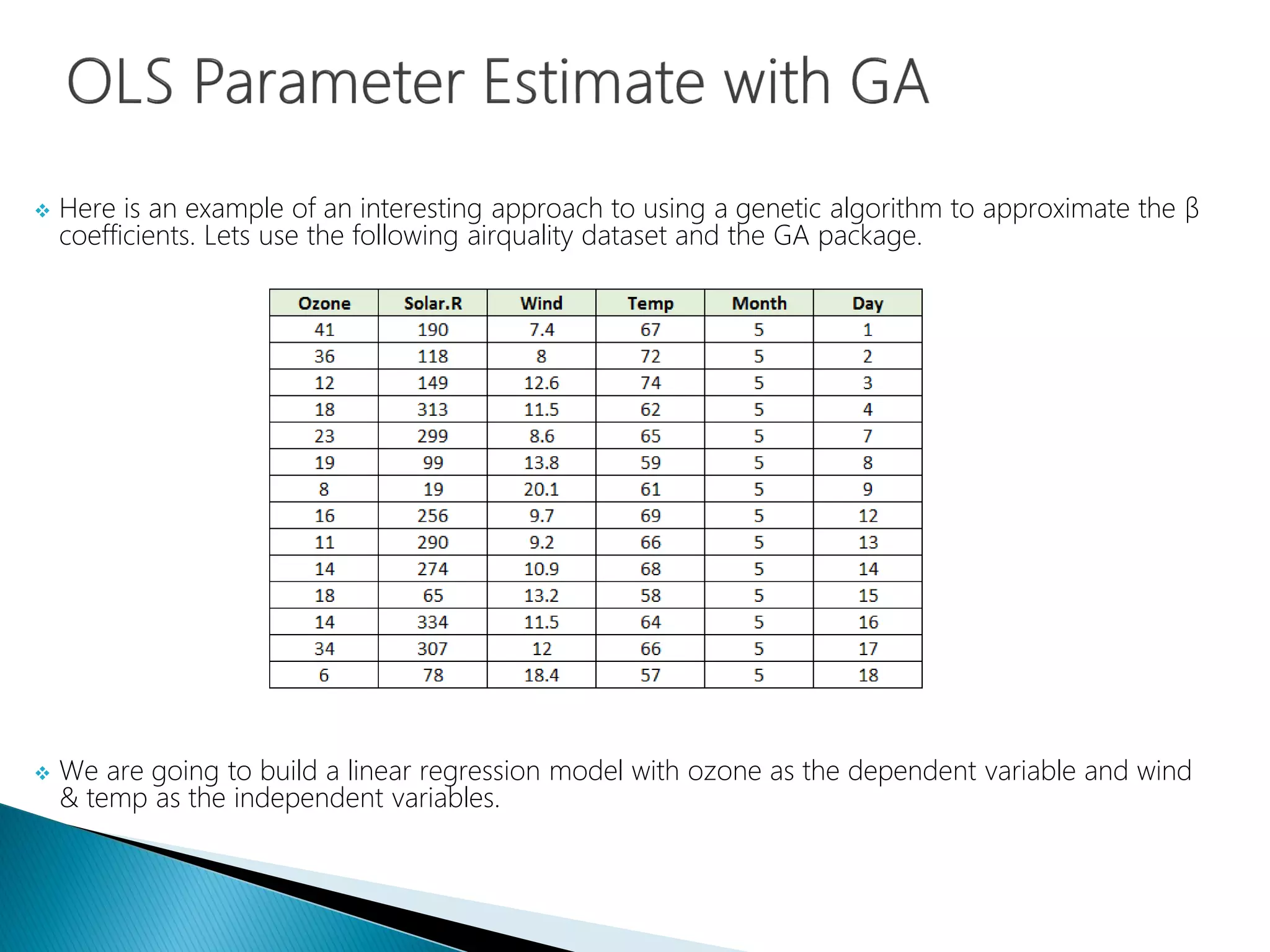

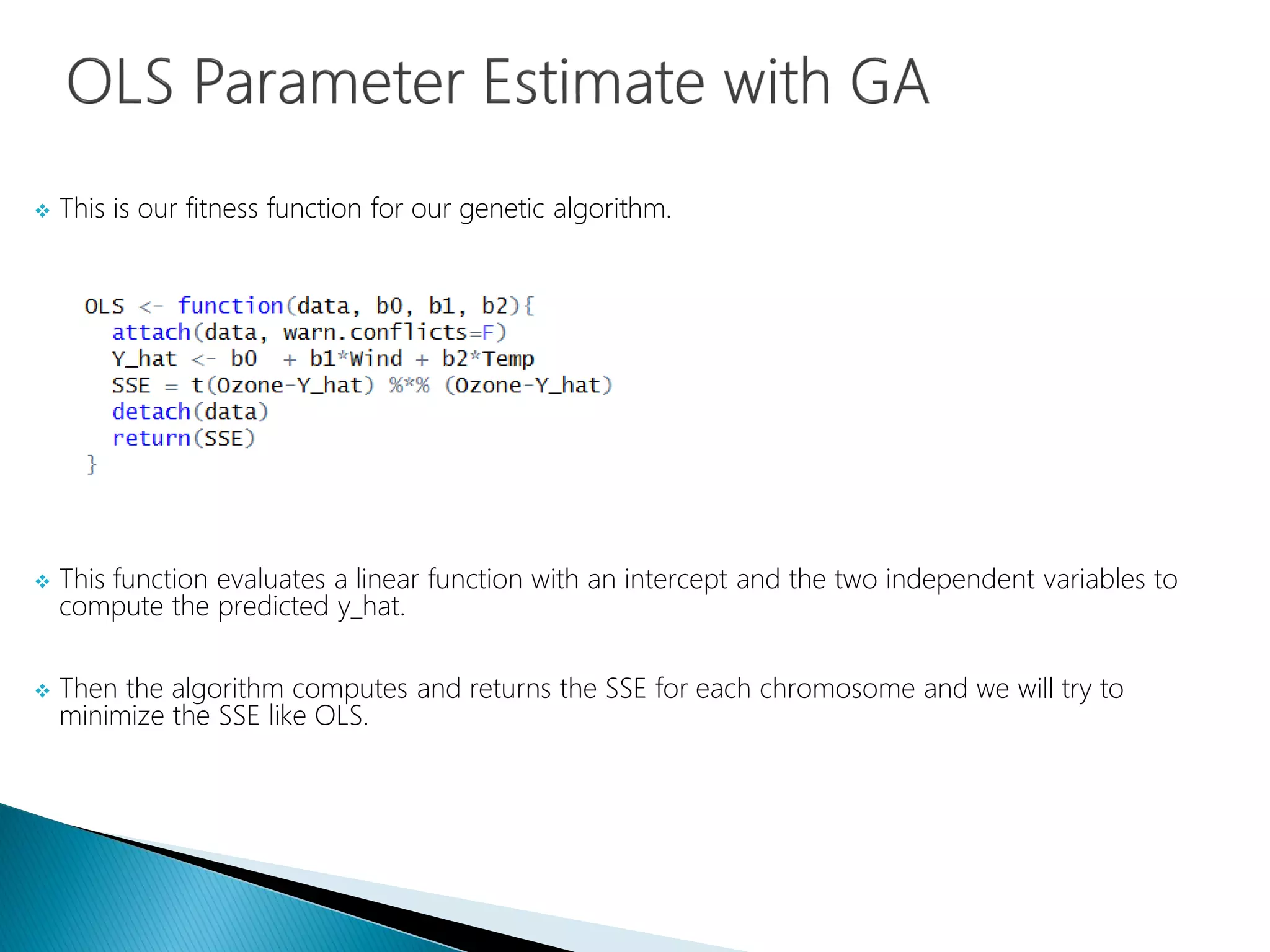

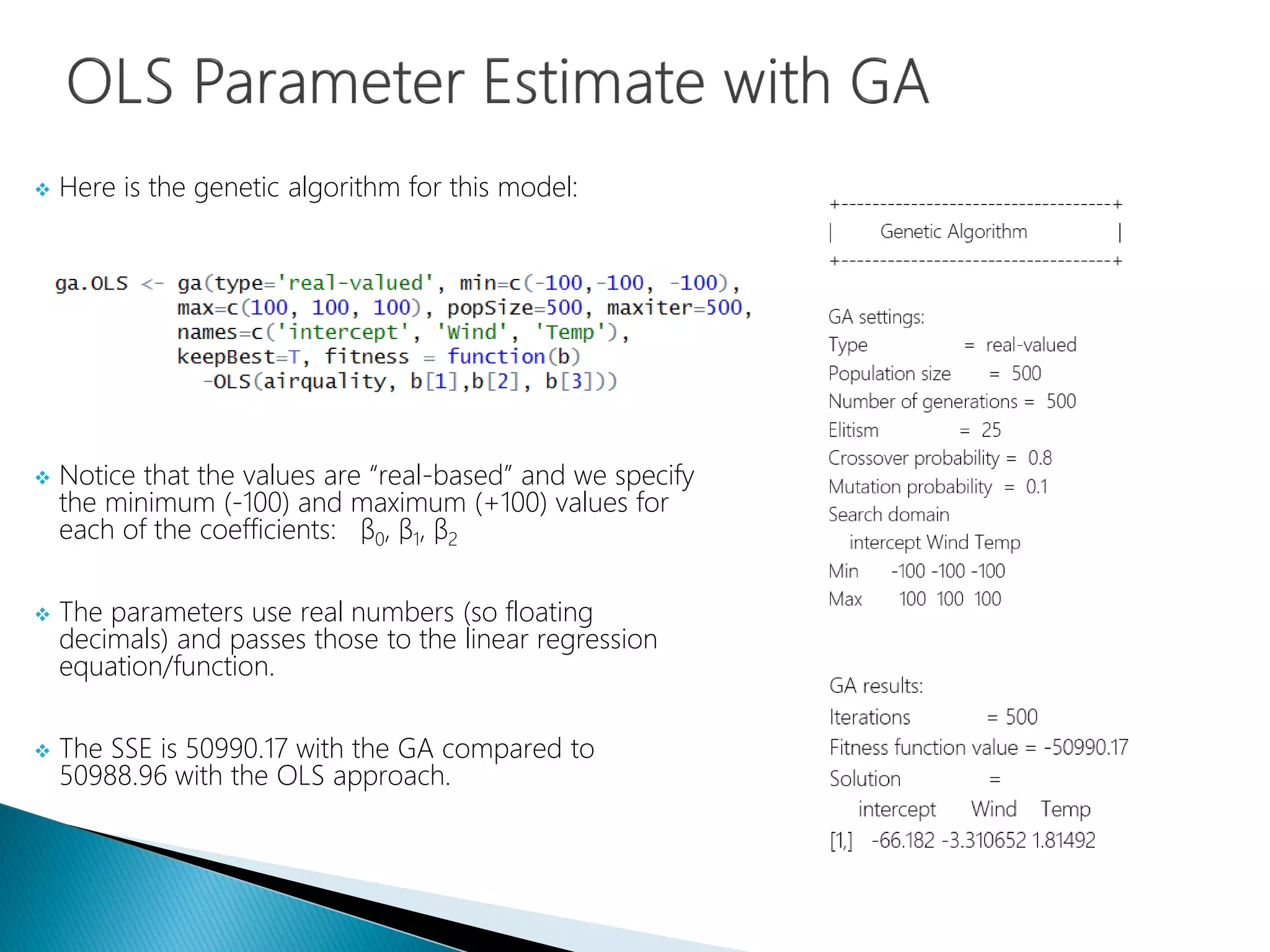

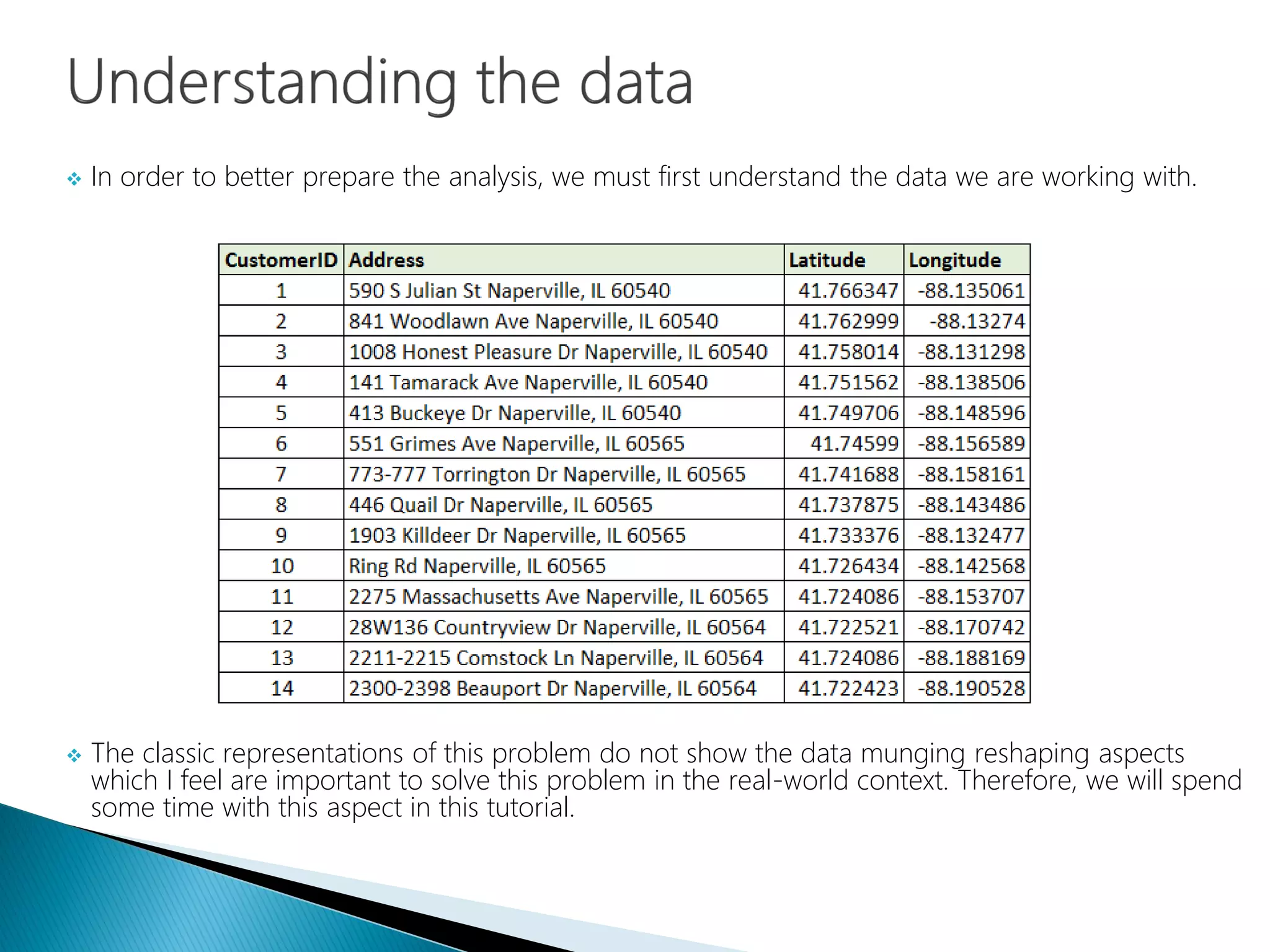

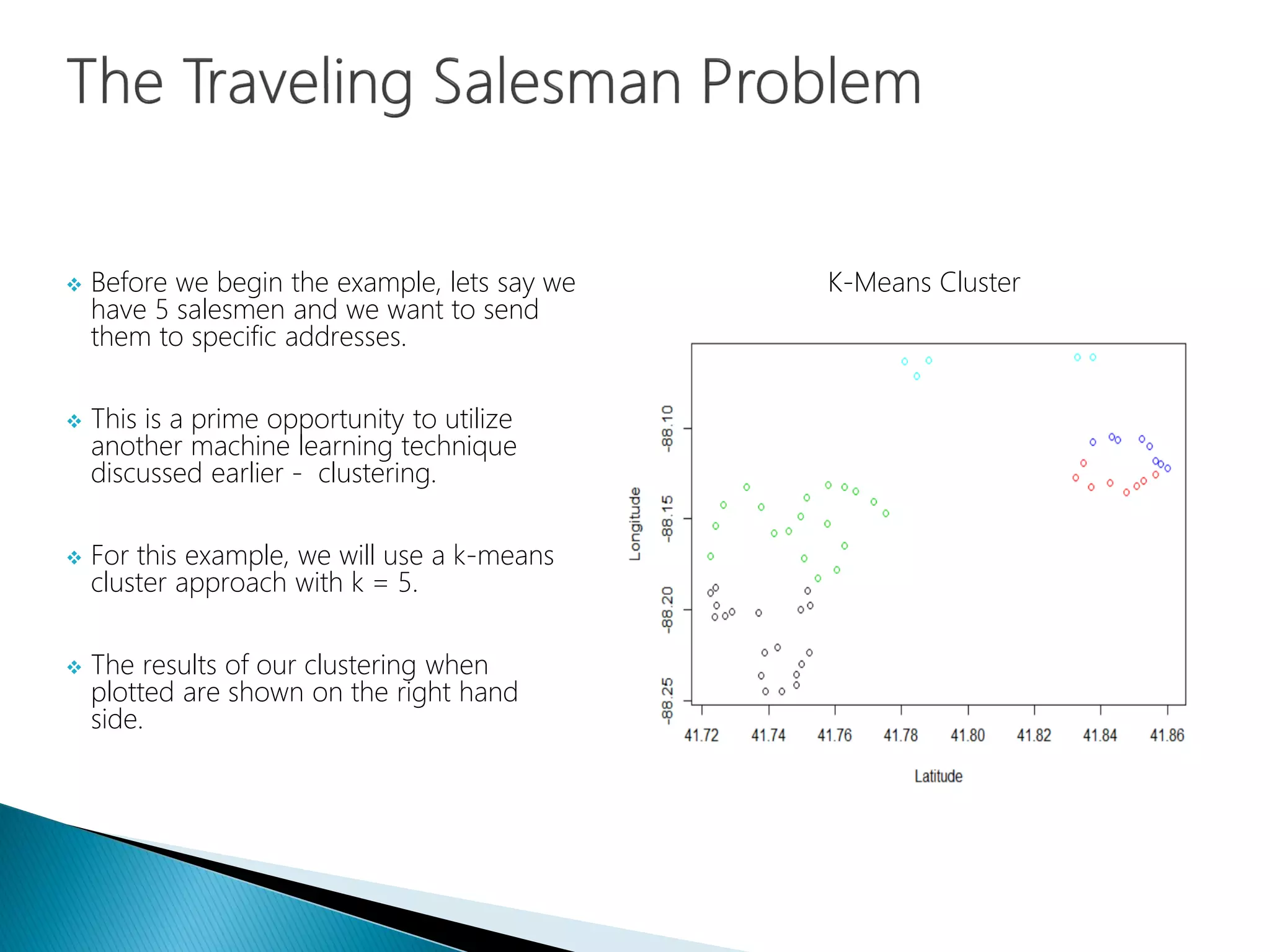

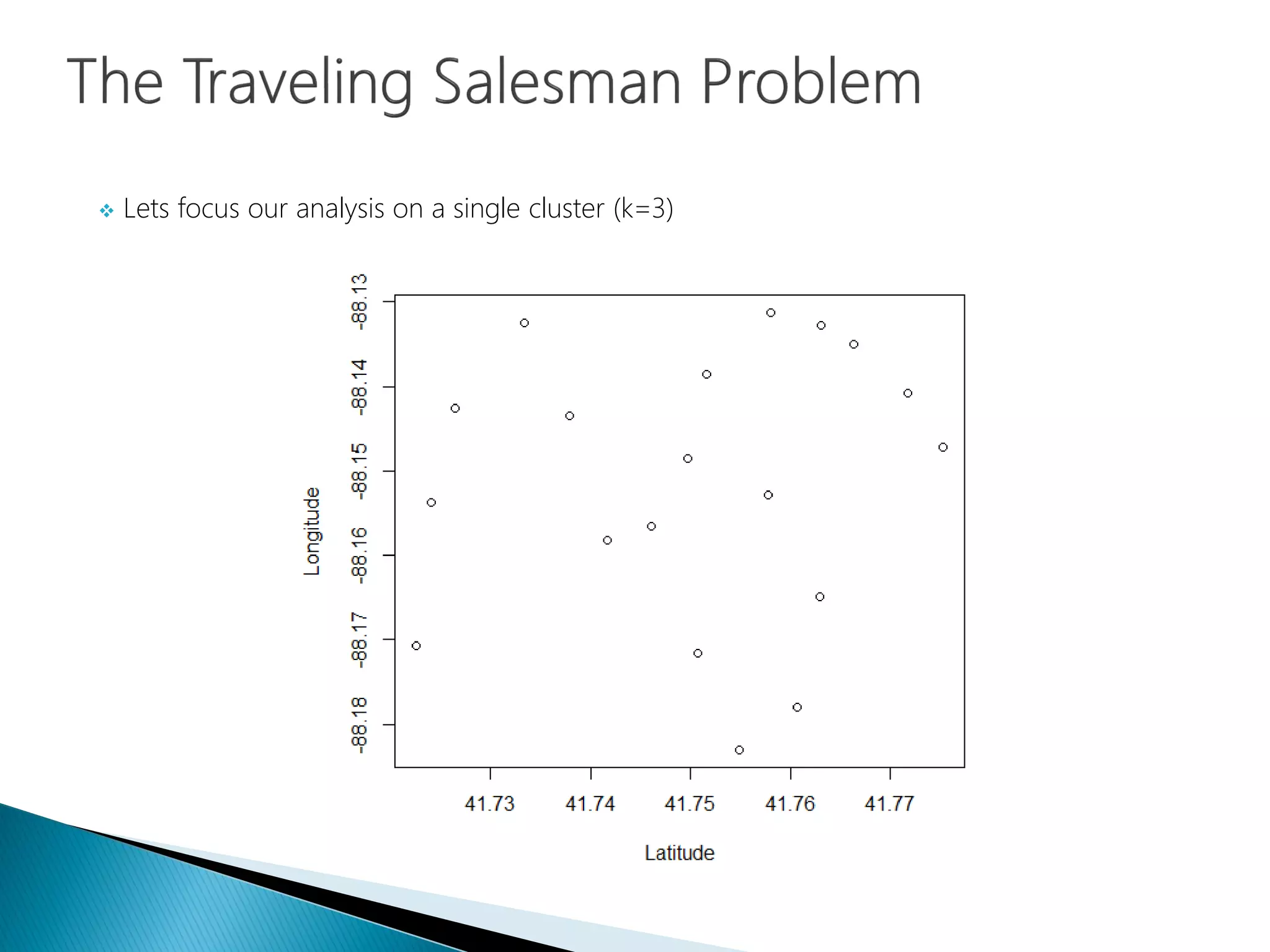

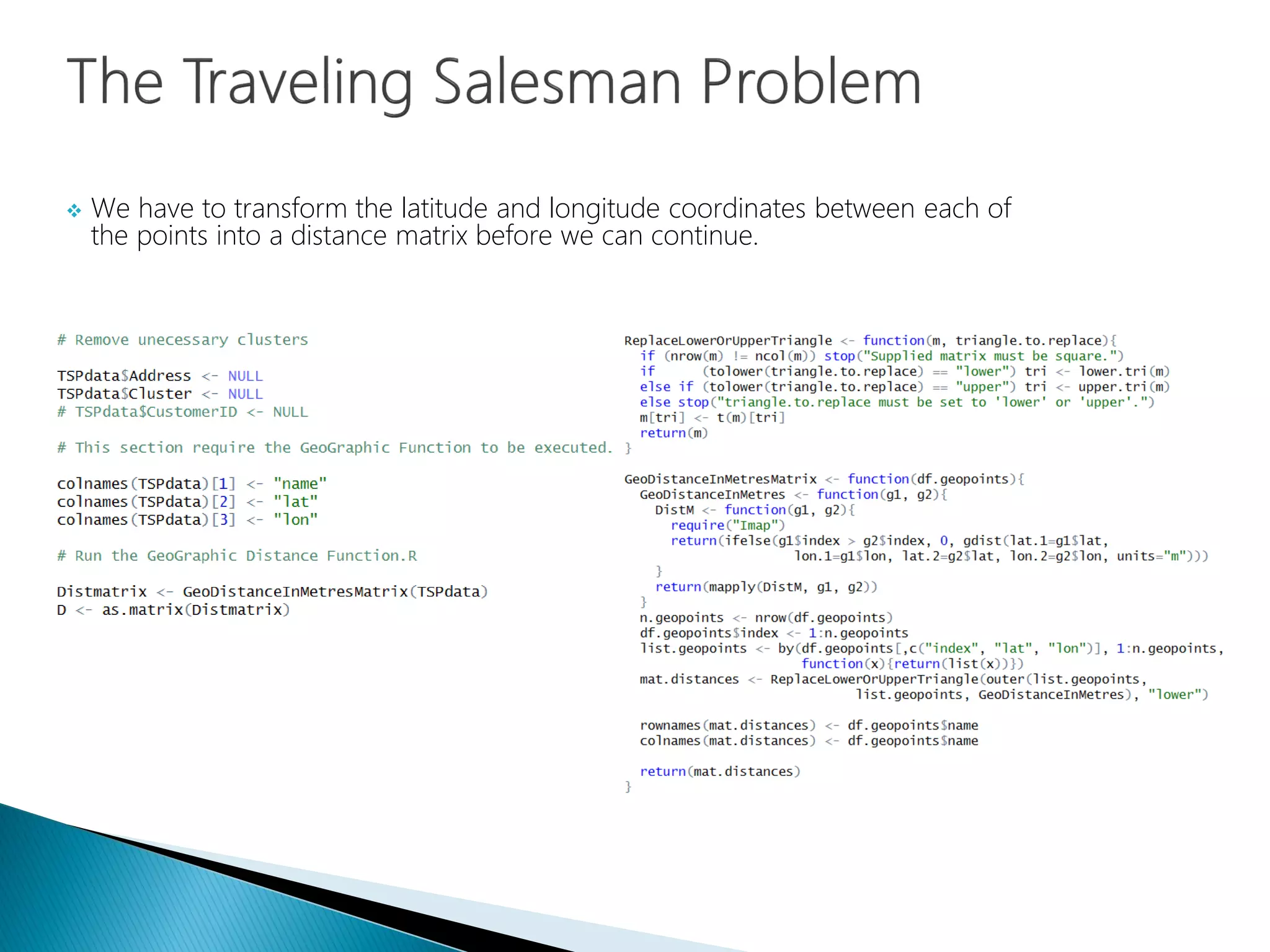

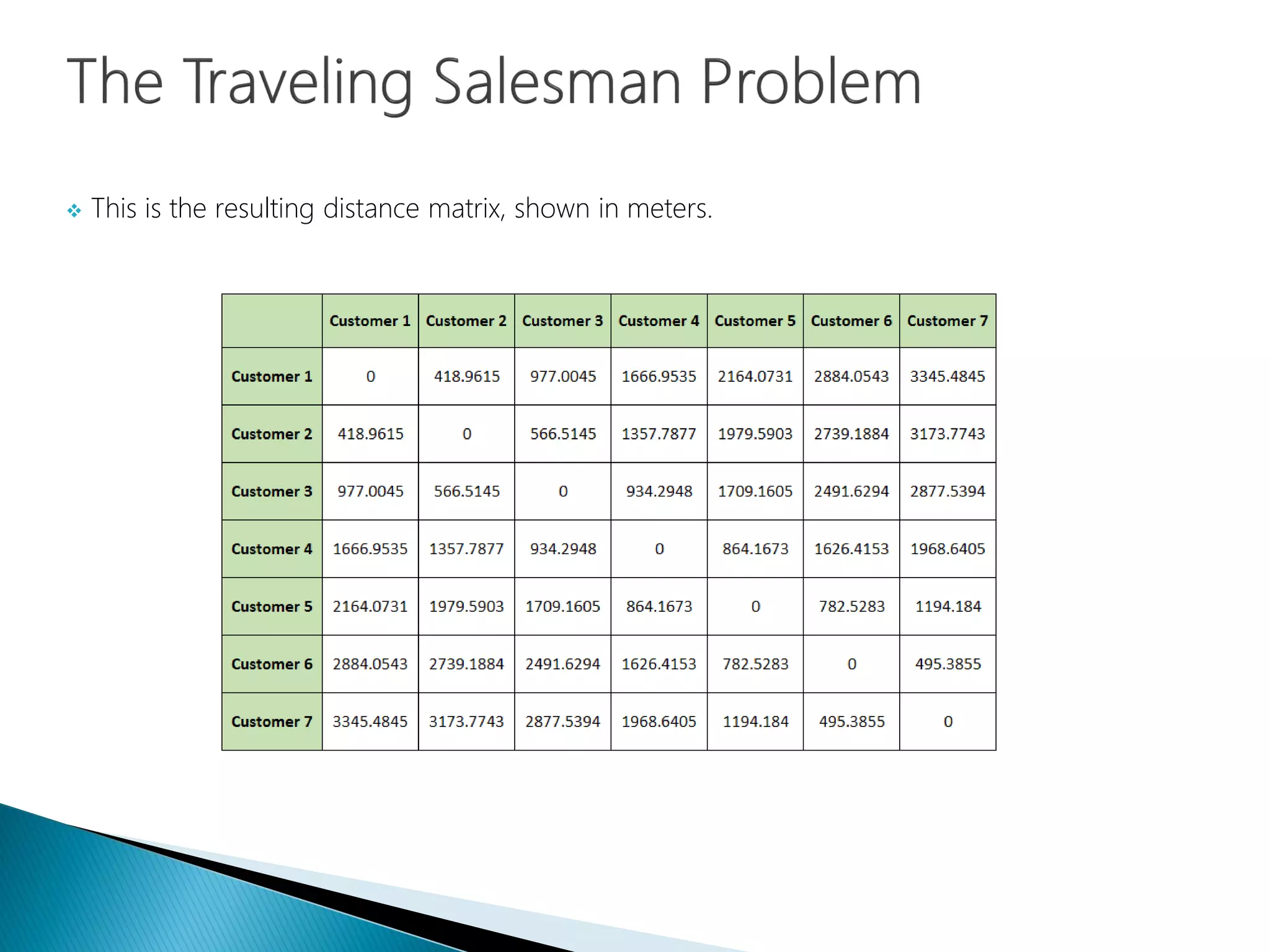

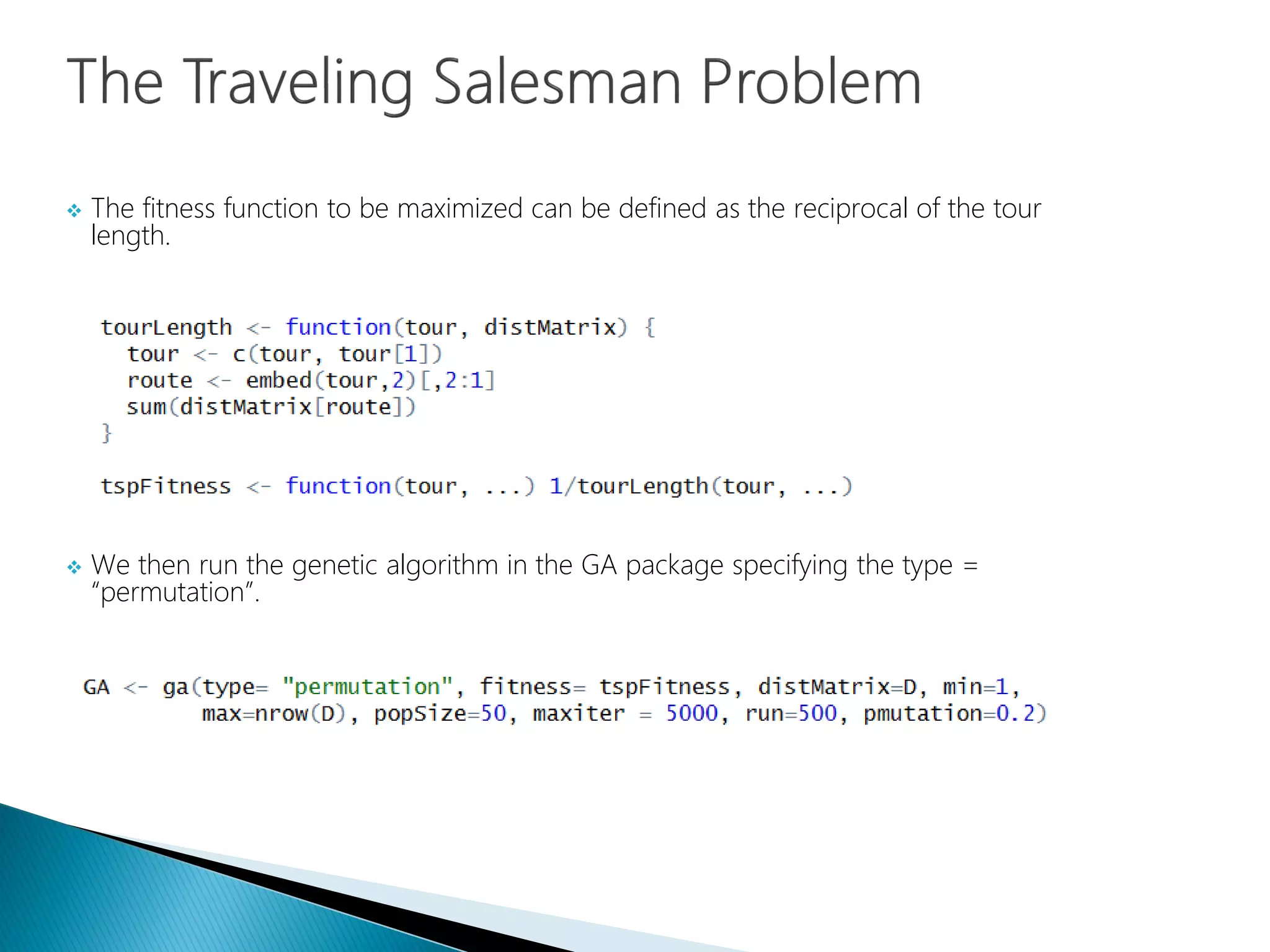

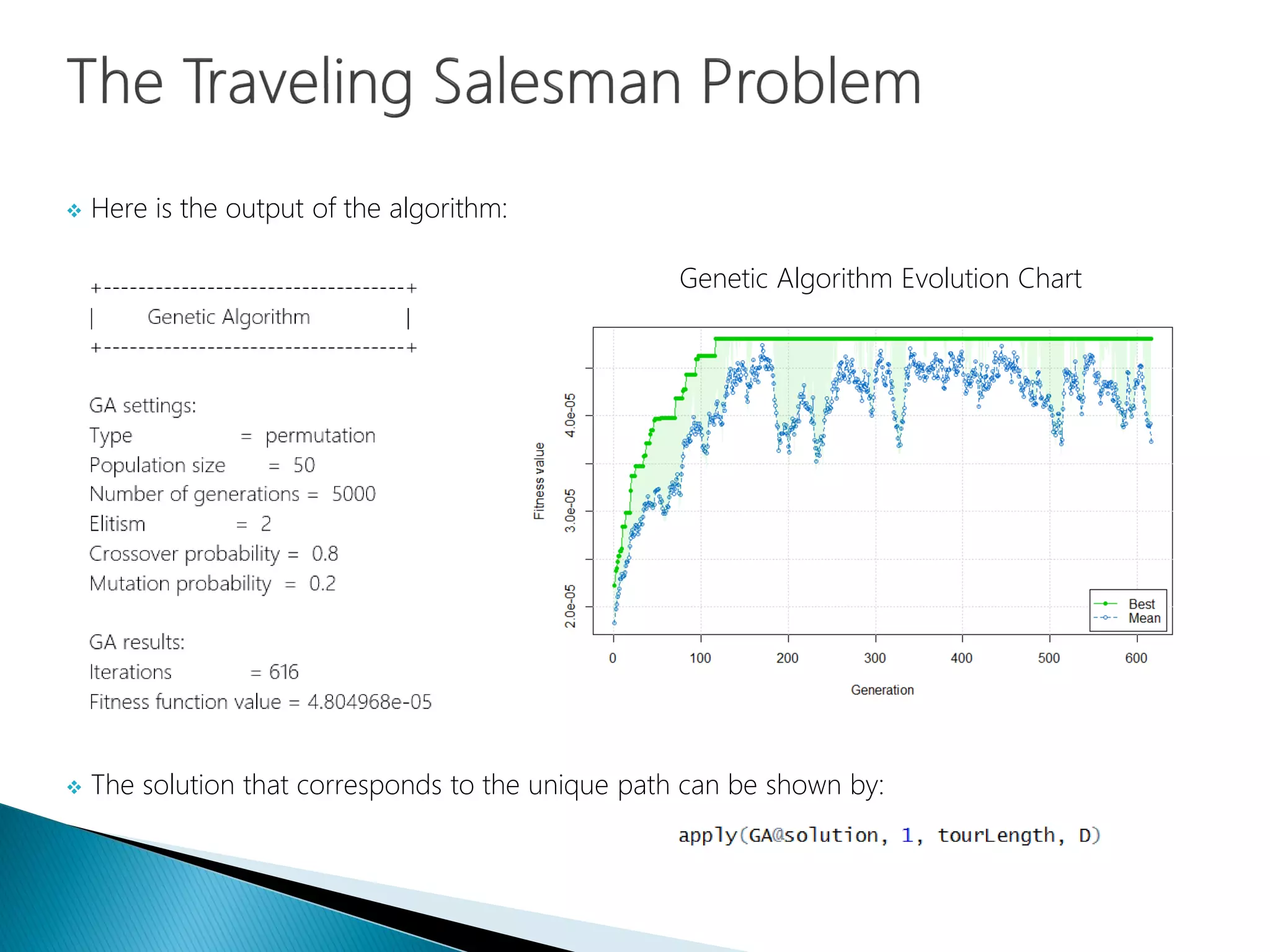

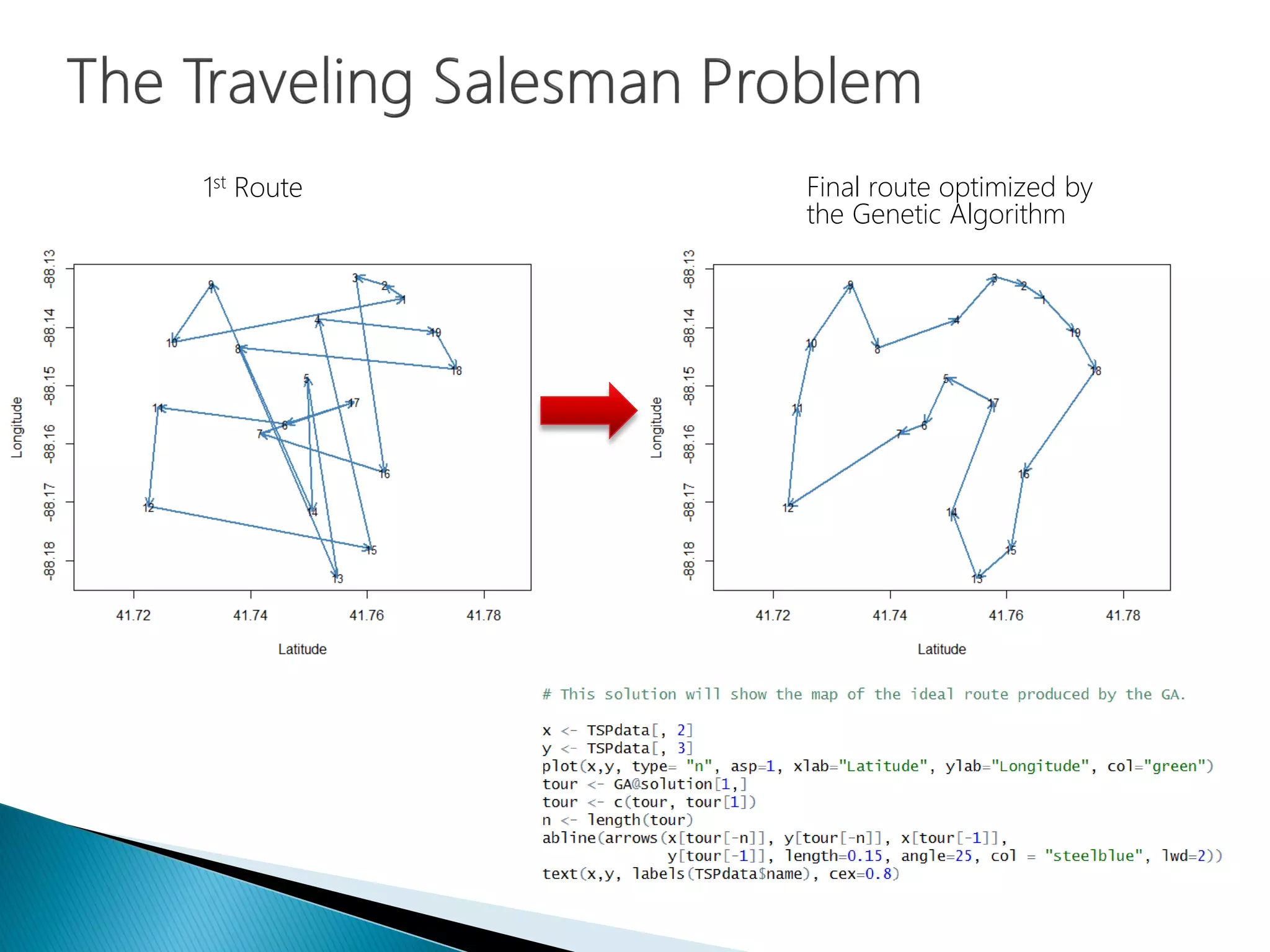

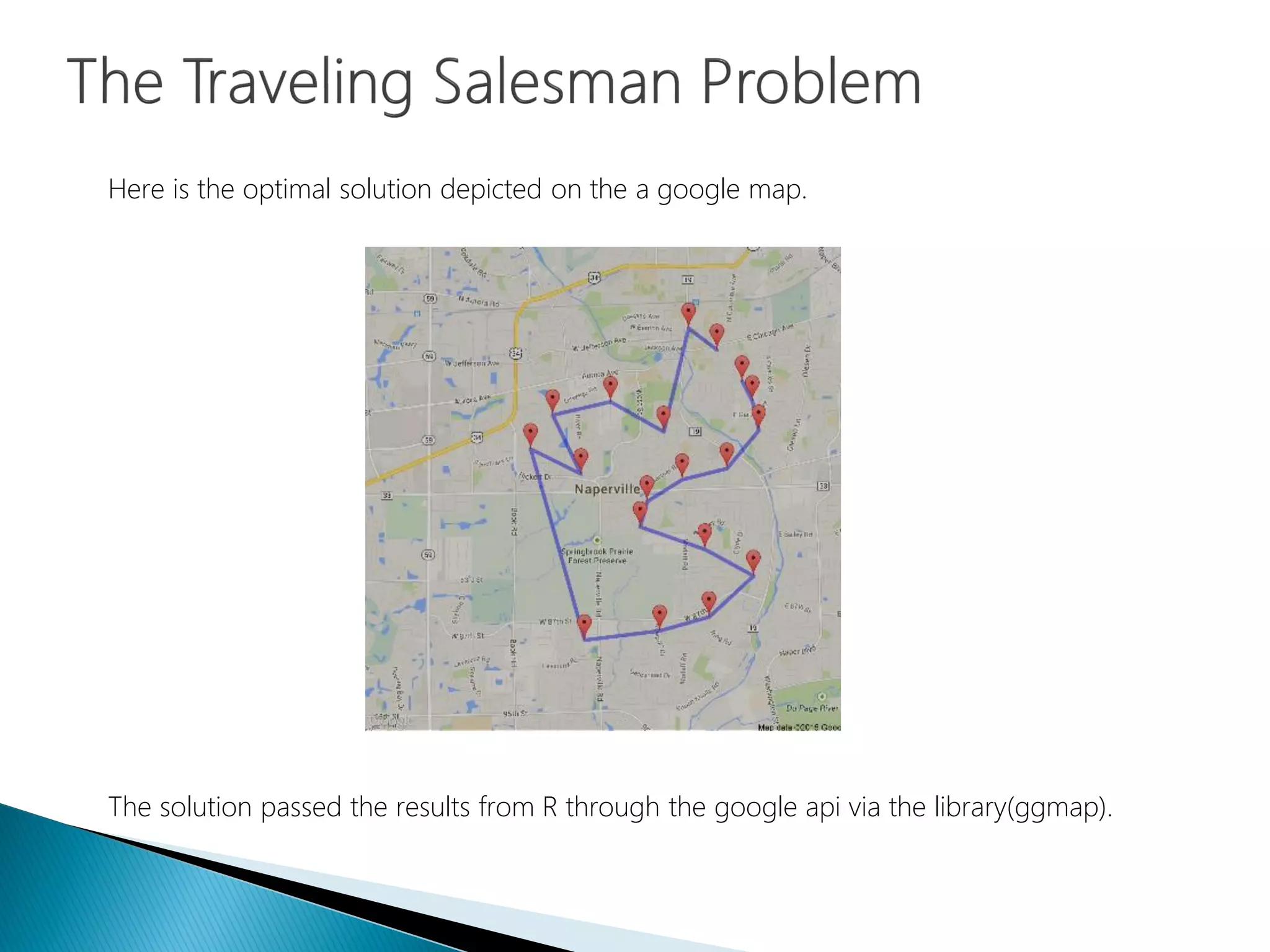

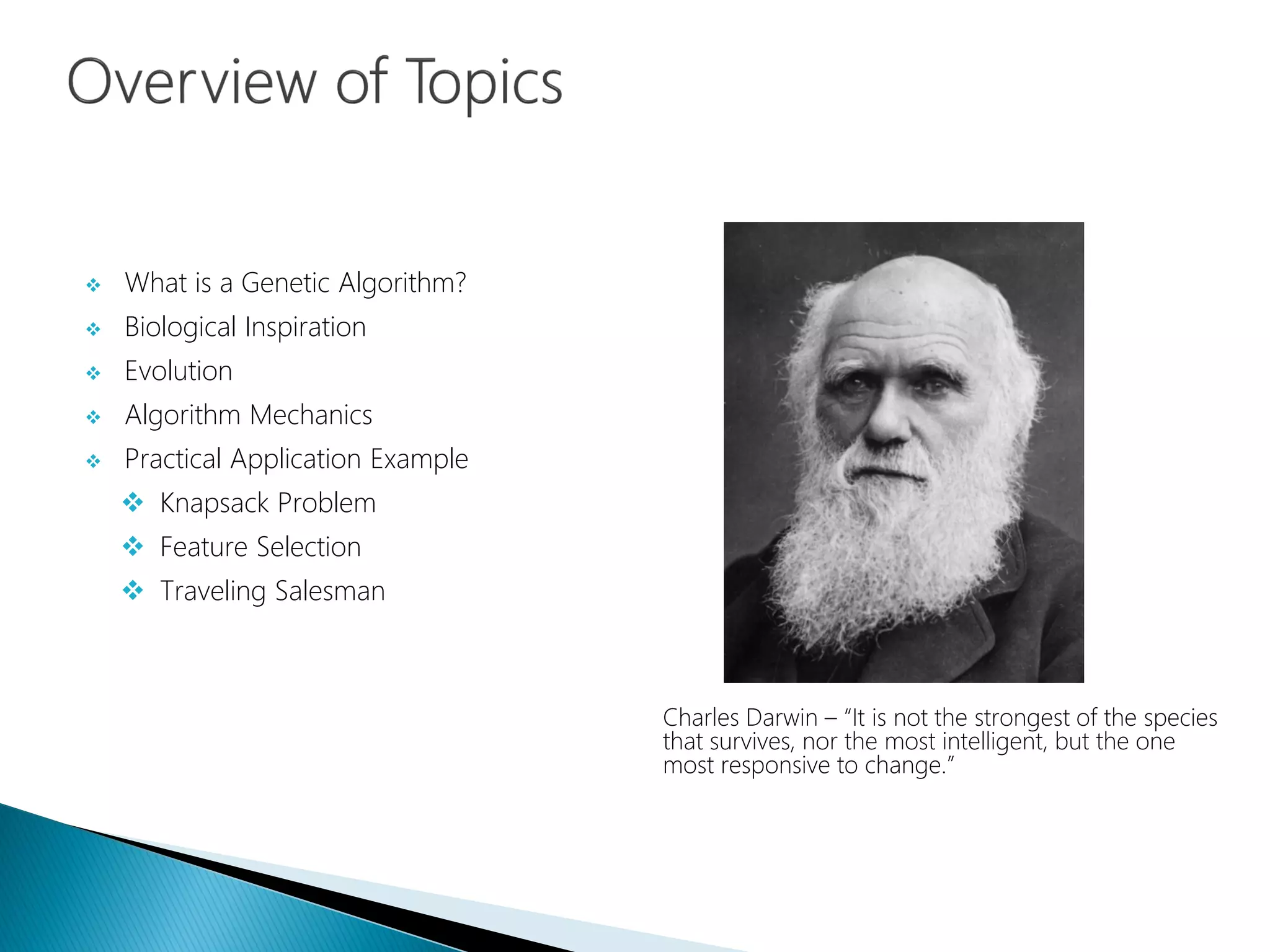

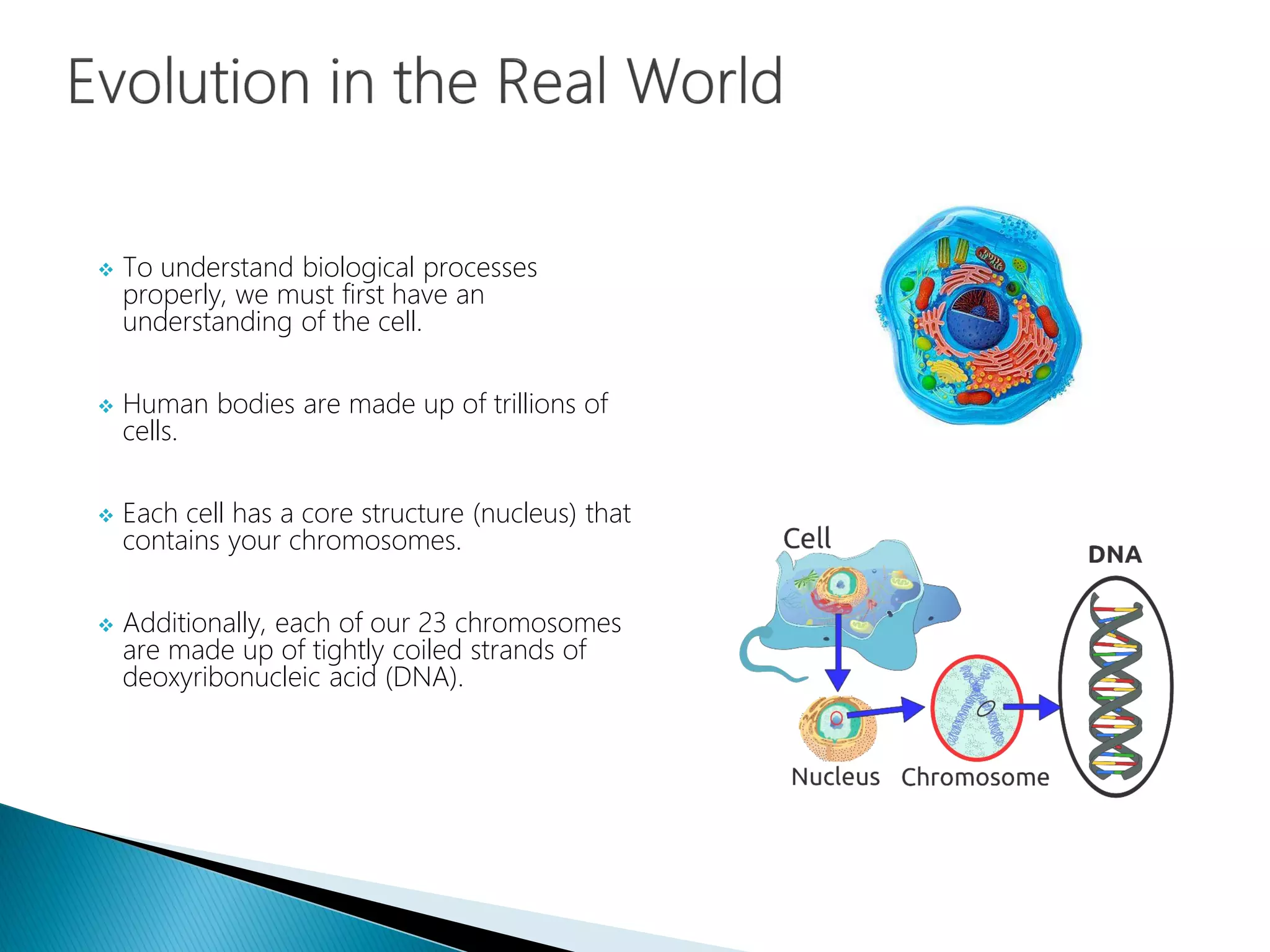

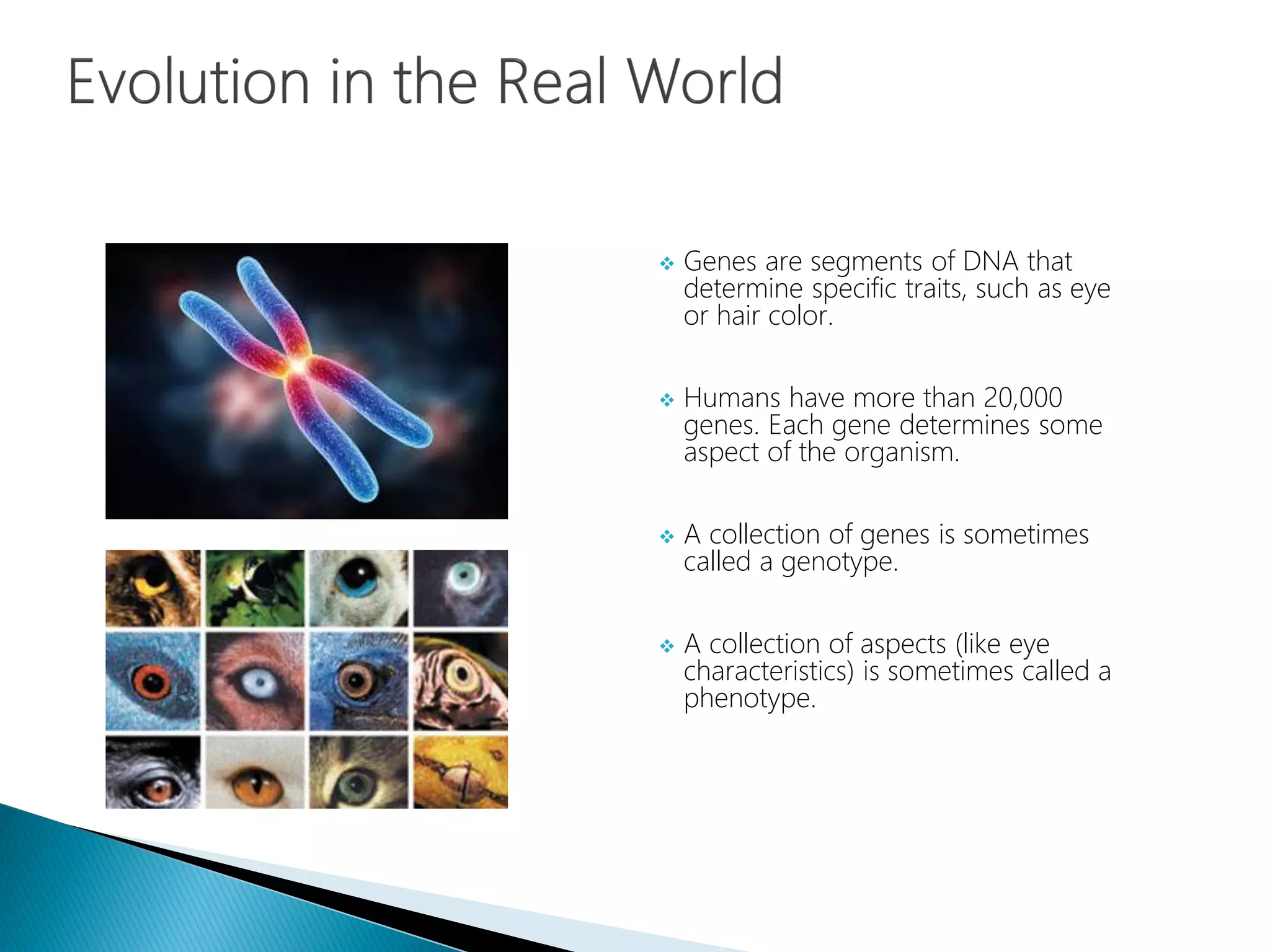

This document discusses genetic algorithms, which are inspired by natural selection and evolution. It covers their components, mechanisms, and applications across various fields. It explores their use in optimization problems such as the knapsack problem and in feature selection for machine learning, showing how they evolve a population of candidate solutions toward better ones. The document also contrasts classical computing with bio-inspired computing, emphasizing the adaptability and efficiency of genetic algorithms in complex problem-solving scenarios.
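The summary mentions applying genetic algorithms to the knapsack problem. Below is a minimal sketch of that idea. It assumes a bitstring encoding (bit `i` = 1 means item `i` is packed), tournament selection, single-point crossover, and bit-flip mutation. These operator choices are illustrative and not necessarily the ones used in the slides. The function name `knapsack_ga` and its parameters are hypothetical.

```python
import random


def knapsack_ga(values, weights, capacity, pop_size=30, generations=100,
                mutation_rate=0.02, seed=0):
    """Sketch of a genetic algorithm for the 0/1 knapsack problem.

    Assumes at least 2 items (needed for single-point crossover) and
    pop_size >= 2 (needed for tournament selection).
    Returns (best_bitstring, best_value).
    """
    rng = random.Random(seed)  # fixed seed makes runs reproducible
    n = len(values)

    def fitness(ind):
        # Total value of packed items; overweight solutions score 0.
        weight = sum(w for w, b in zip(weights, ind) if b)
        if weight > capacity:
            return 0
        return sum(v for v, b in zip(values, ind) if b)

    def tournament(pop):
        # Tournament selection: the fitter of two random individuals wins.
        a, b = rng.sample(pop, 2)
        return a if fitness(a) >= fitness(b) else b

    def crossover(p1, p2):
        # Single-point crossover: prefix of one parent, suffix of the other.
        point = rng.randint(1, n - 1)
        return p1[:point] + p2[point:]

    def mutate(ind):
        # Flip each bit independently with probability mutation_rate.
        return [1 - b if rng.random() < mutation_rate else b for b in ind]

    population = [[rng.randint(0, 1) for _ in range(n)] for _ in range(pop_size)]
    best = [0] * n  # the empty knapsack is always feasible
    for _ in range(generations):
        for ind in population:
            # Only a strictly better (hence feasible) individual replaces best.
            if fitness(ind) > fitness(best):
                best = ind
        # Next generation: select two parents, recombine, then mutate.
        population = [mutate(crossover(tournament(population), tournament(population)))
                      for _ in range(pop_size)]
    return best, fitness(best)


if __name__ == "__main__":
    items_value = [60, 100, 120]
    items_weight = [10, 20, 30]
    print(knapsack_ga(items_value, items_weight, capacity=50))
```

Scoring overweight solutions as 0 is one simple way to handle the capacity constraint. Repairing infeasible bitstrings or applying a graded penalty are common alternatives.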

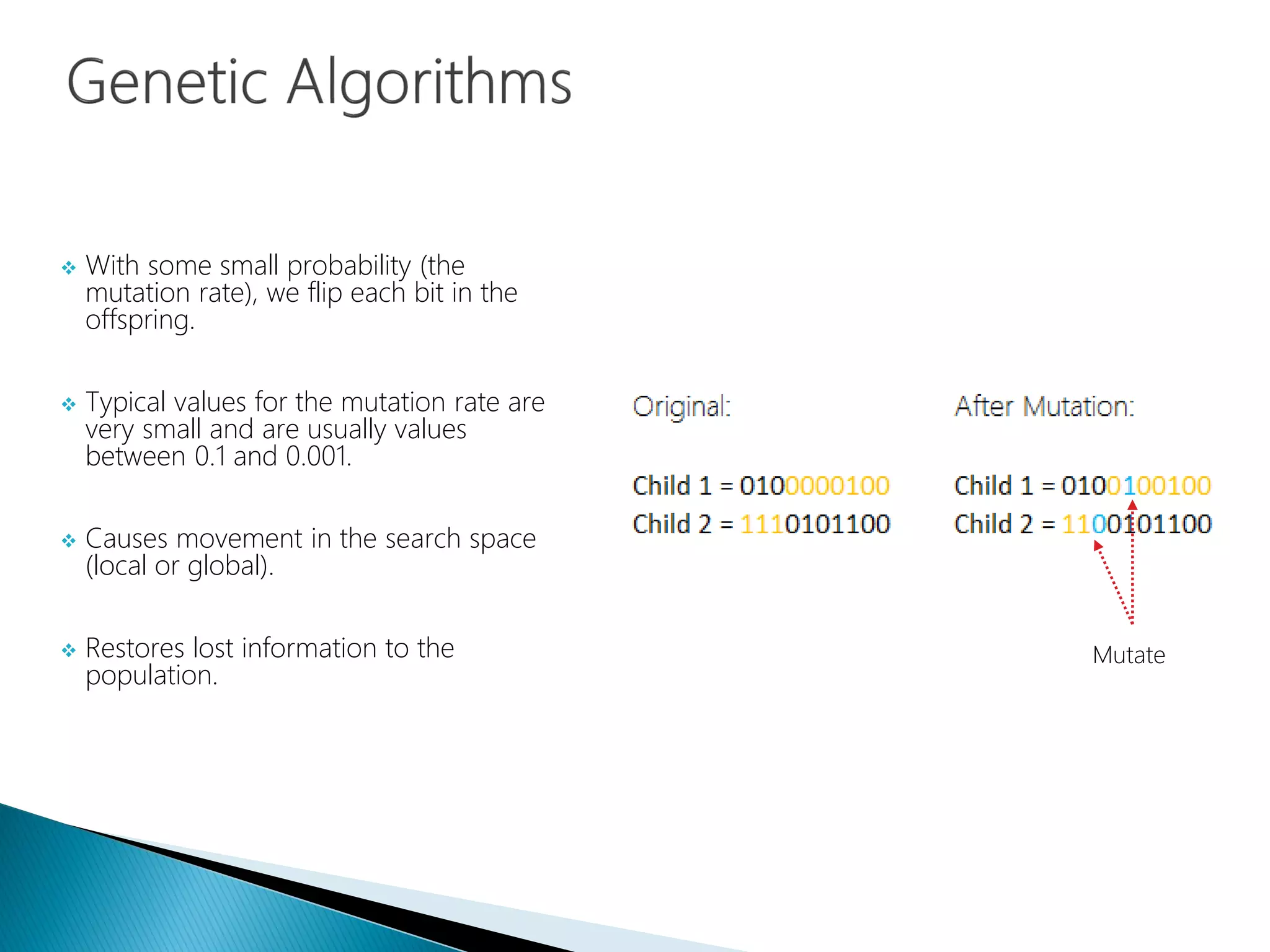

![The set of all possible solutions [0 to 1000] is called the search space or state space. In this example, it's just one number, but it could be many numbers. Genetic algorithms often encode numbers in binary, producing a bitstring that represents a solution. We choose 10 bits, which is enough to represent 0 to 1000.](https://image.slidesharecdn.com/14-150226063005-conversion-gate01/75/Data-Science-Part-XIV-Genetic-Algorithms-22-2048.jpg)
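The slide's point about encoding the search space [0, 1000] as a bitstring can be checked directly. Since 2^10 = 1024 ≥ 1001, ten bits are enough. The sketch below shows one way to encode and decode such solutions. The helper names `encode`, `decode`, and `min_bits` are hypothetical and used only for illustration.

```python
N_BITS = 10  # 2**10 = 1024 distinct values, enough to cover 0..1000


def min_bits(upper):
    """Smallest number of bits that can represent every integer in 0..upper."""
    return max(1, upper.bit_length())


def encode(x, n_bits=N_BITS):
    """Encode a non-negative integer as a fixed-width, zero-padded bitstring."""
    if not 0 <= x < 2 ** n_bits:
        raise ValueError(f"{x} does not fit in {n_bits} bits")
    return format(x, f"0{n_bits}b")


def decode(bits):
    """Decode a bitstring back into an integer."""
    return int(bits, 2)


if __name__ == "__main__":
    print(min_bits(1000))        # 10
    print(encode(1000))          # 1111101000
    print(decode("0000000101"))  # 5
```

Ten bits actually cover 0 to 1023. After crossover or mutation, a decoded value above 1000 falls outside the search space, so a genetic algorithm using this encoding has to reject, repair, or penalize such bitstrings.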