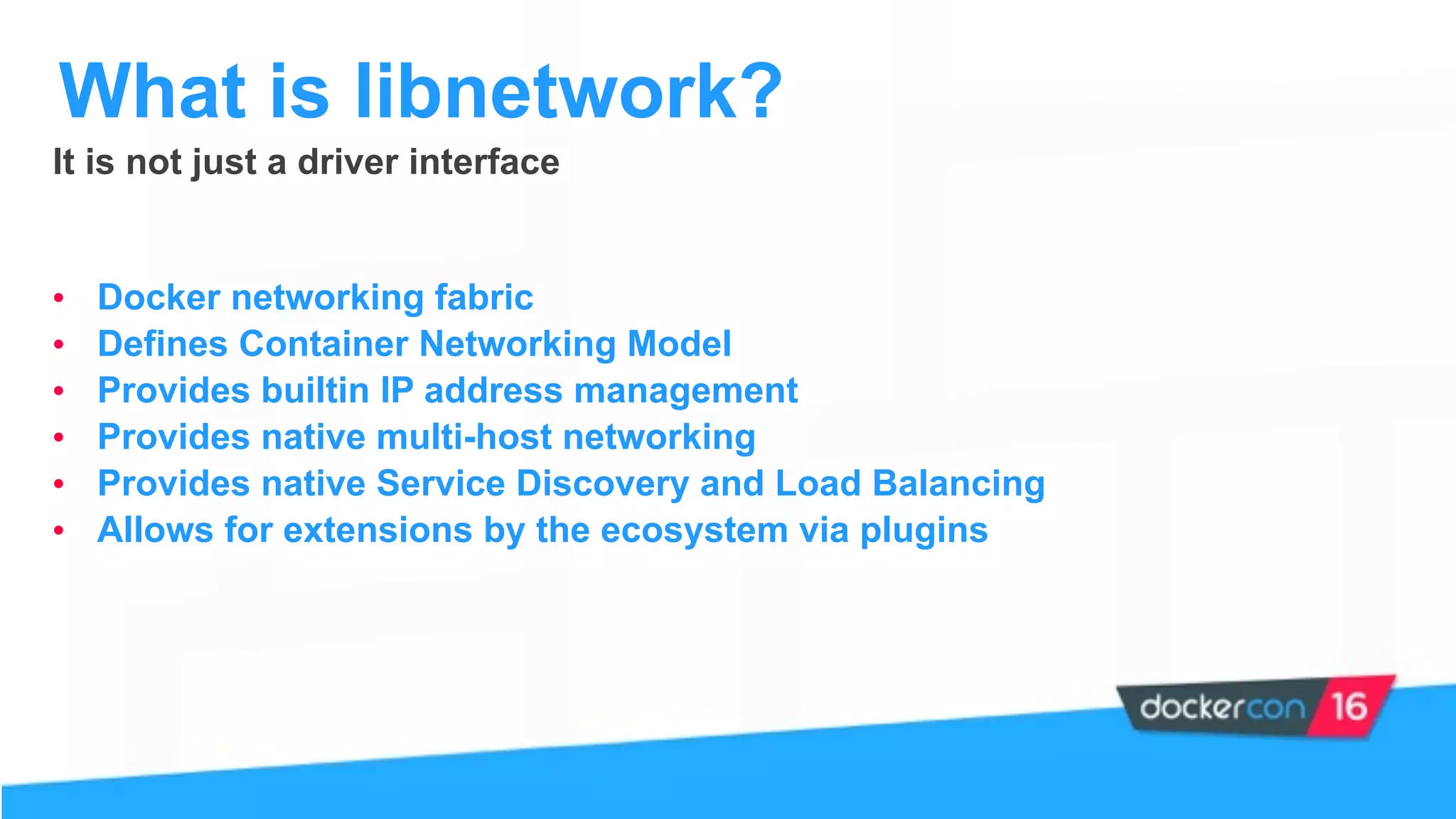

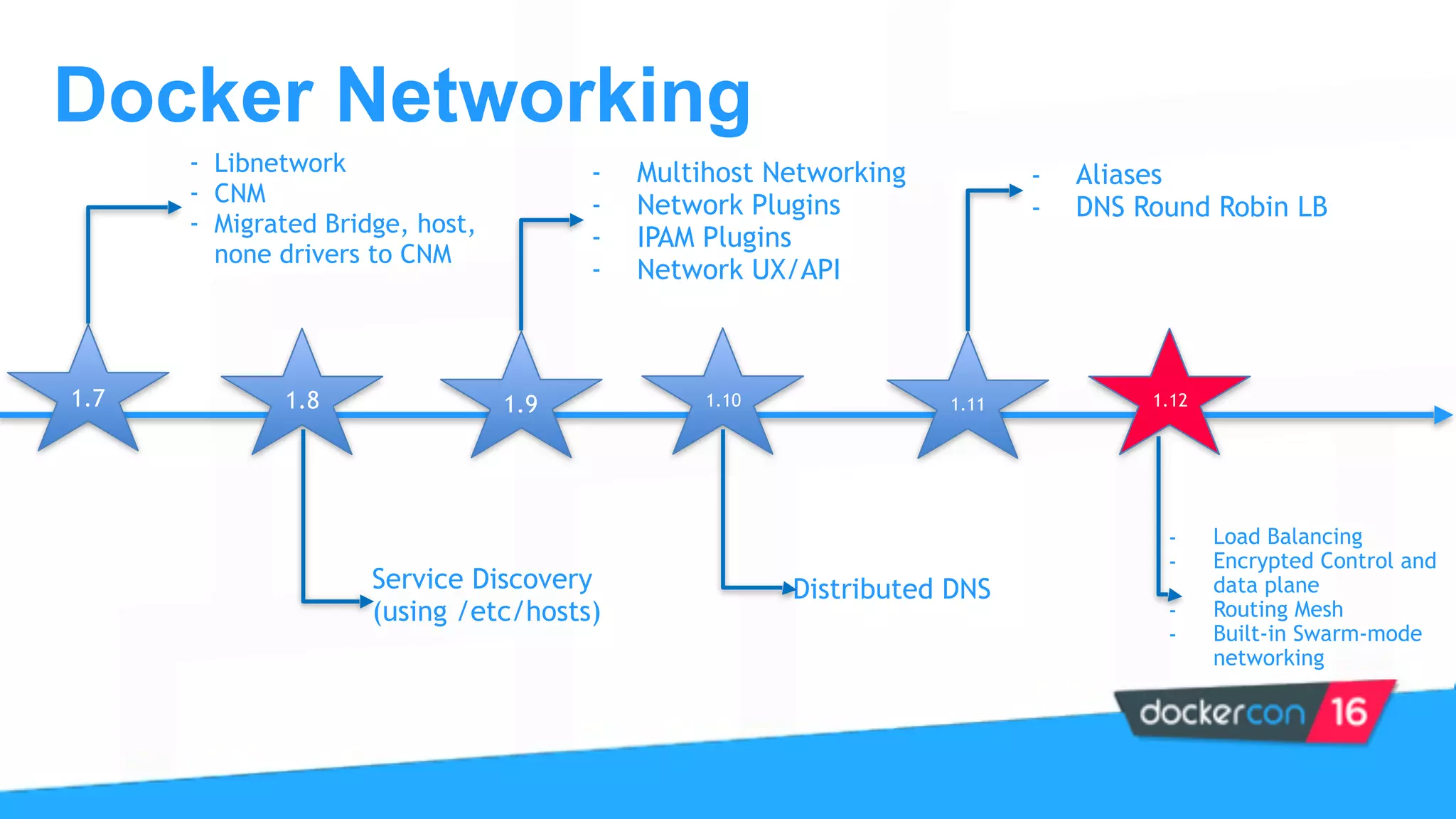

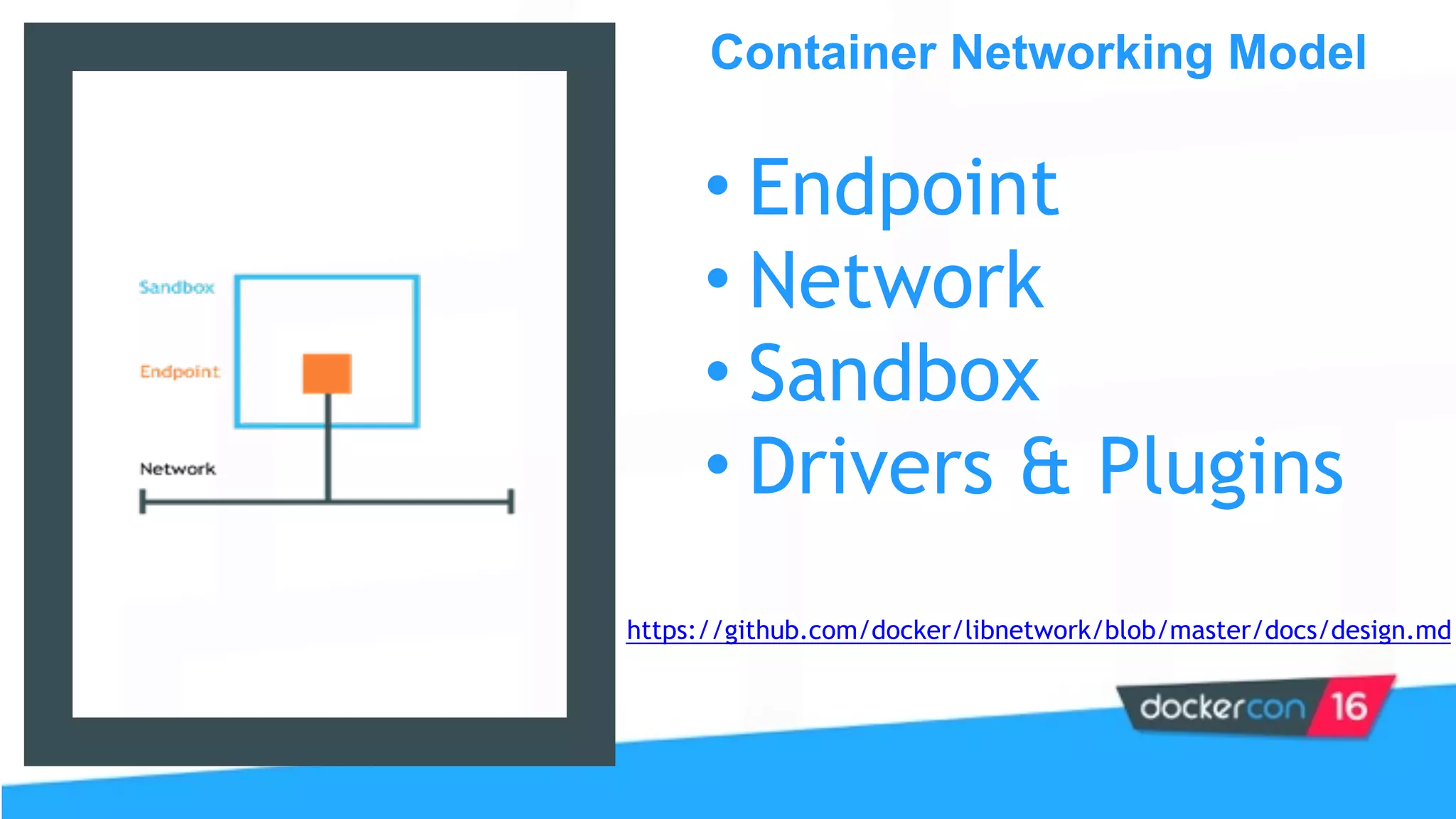

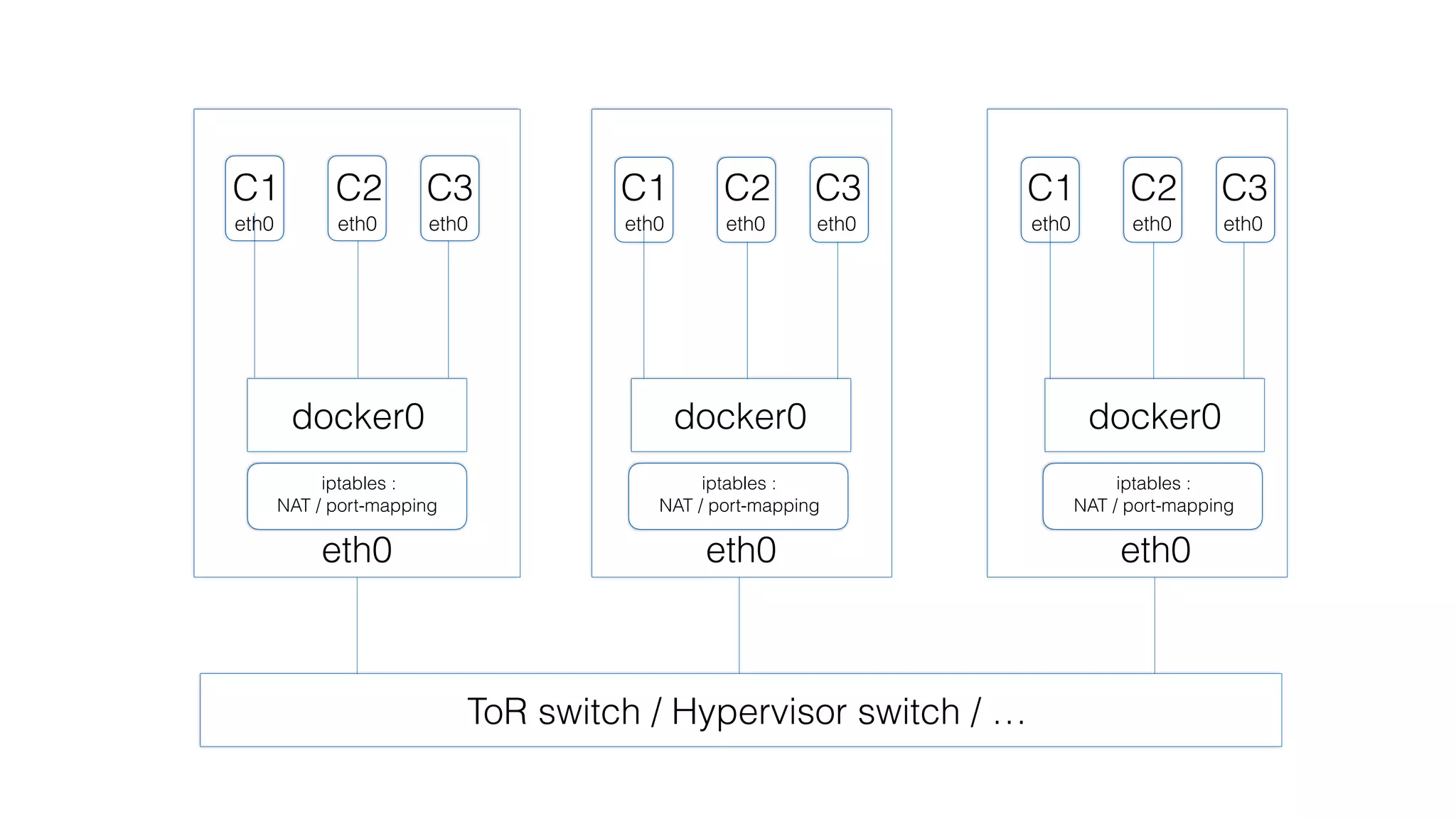

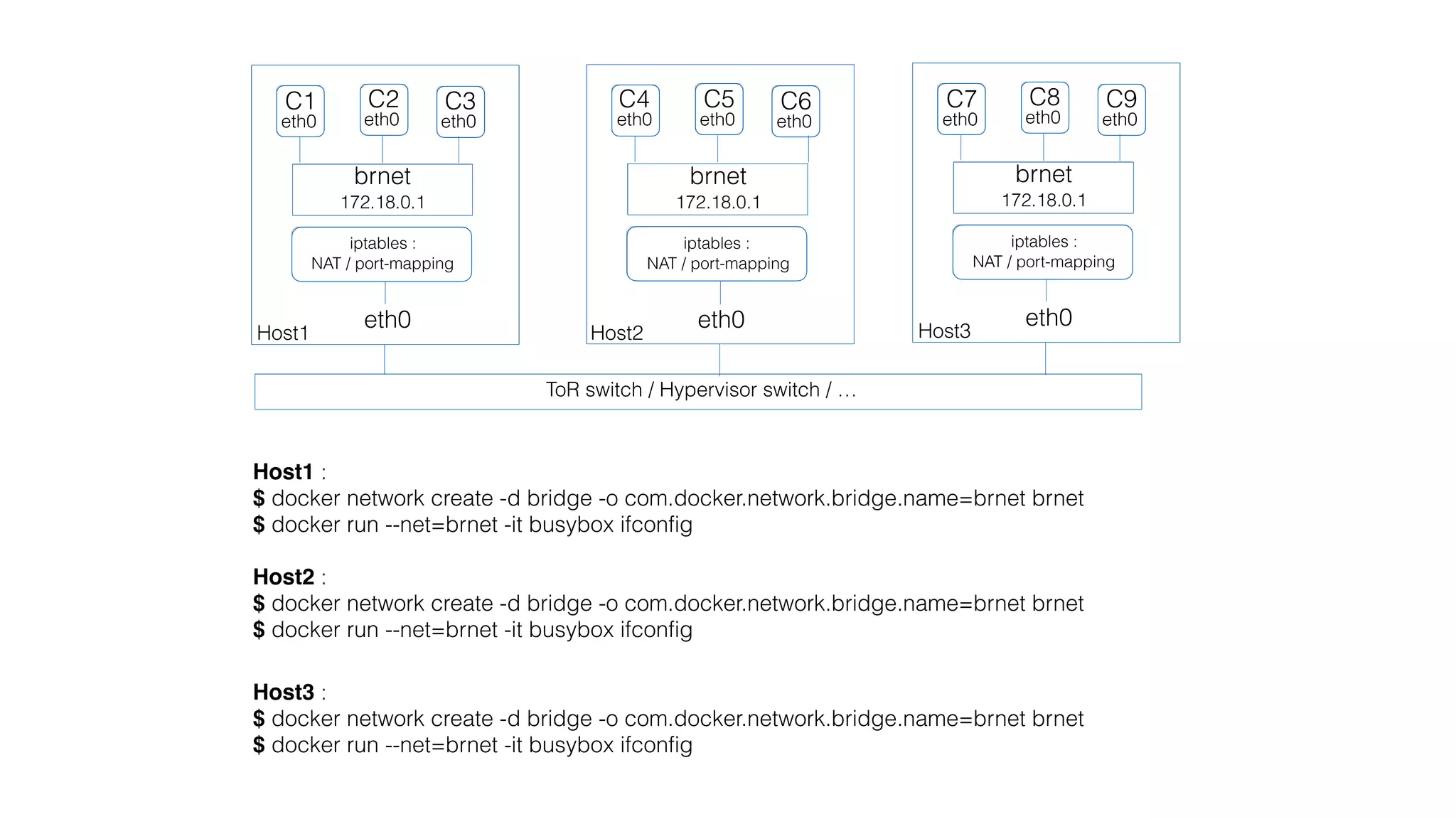

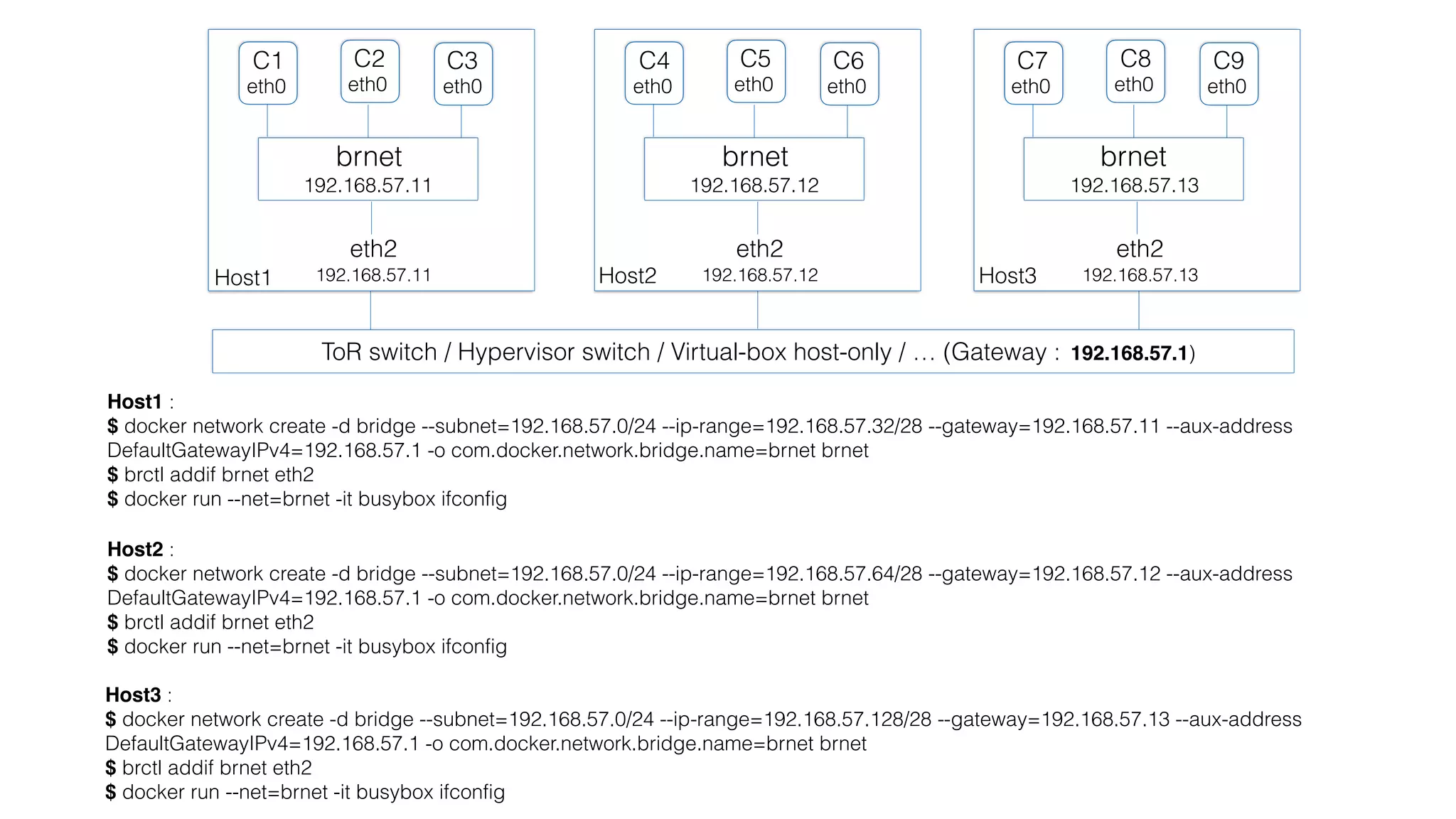

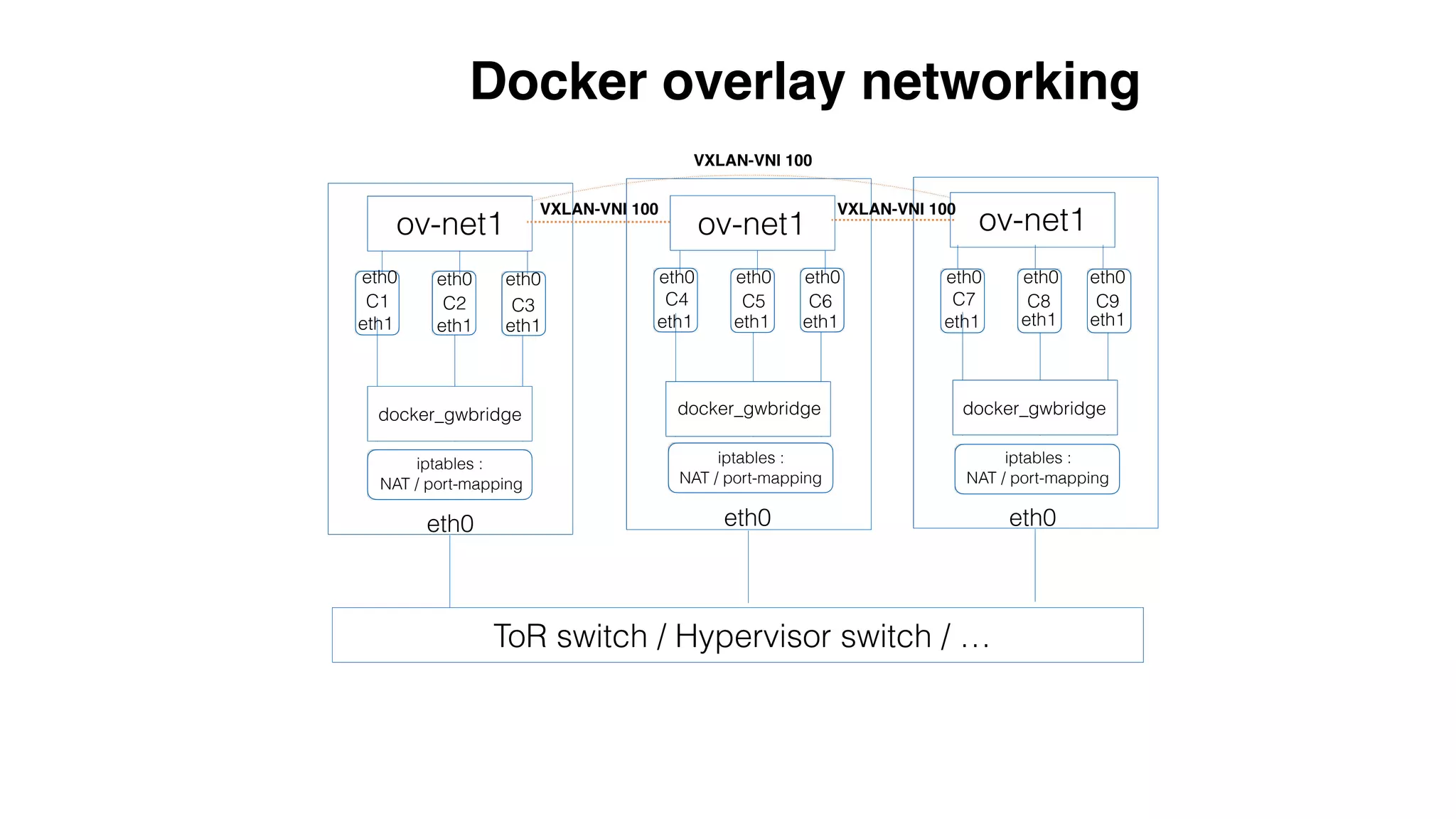

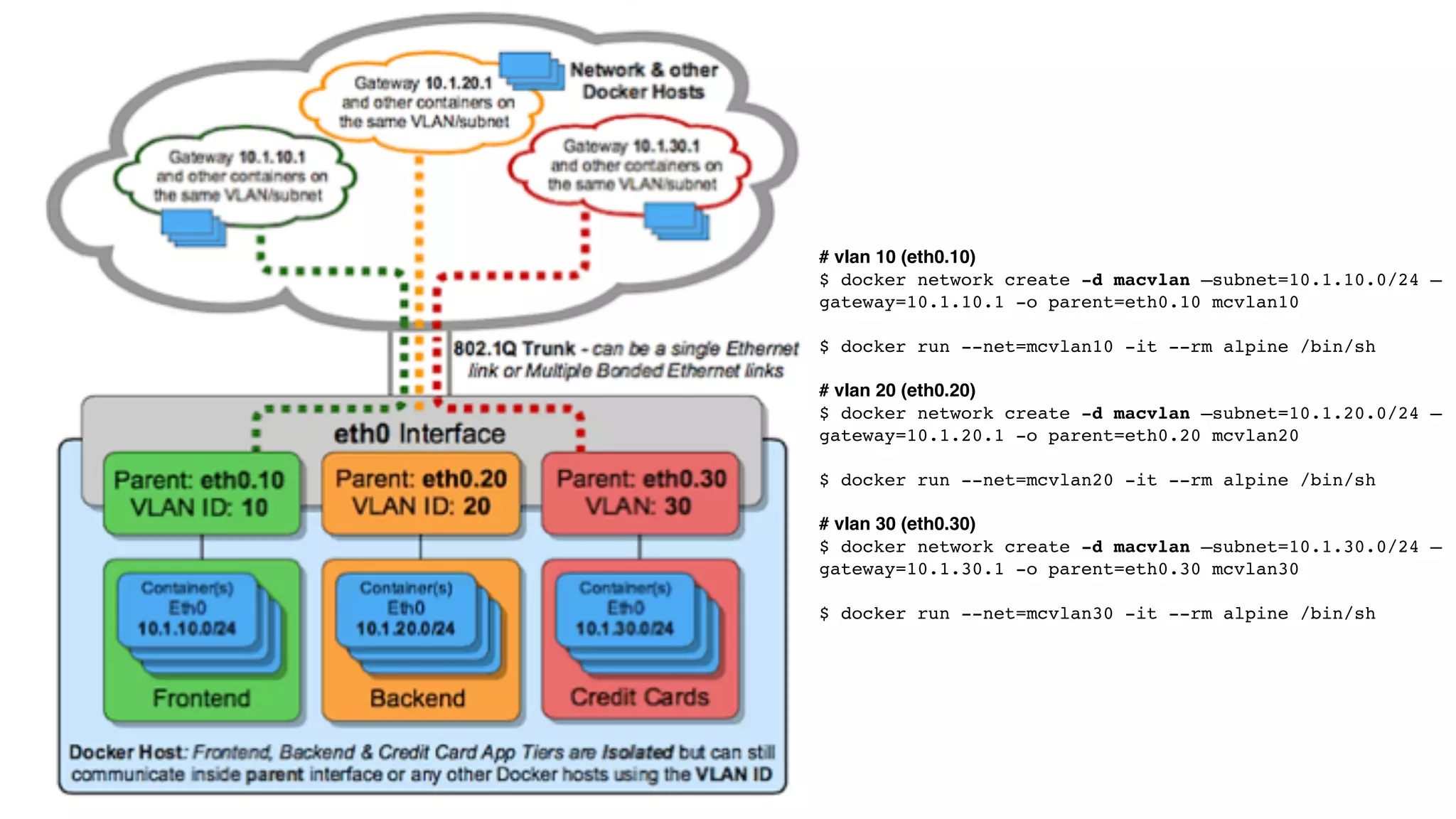

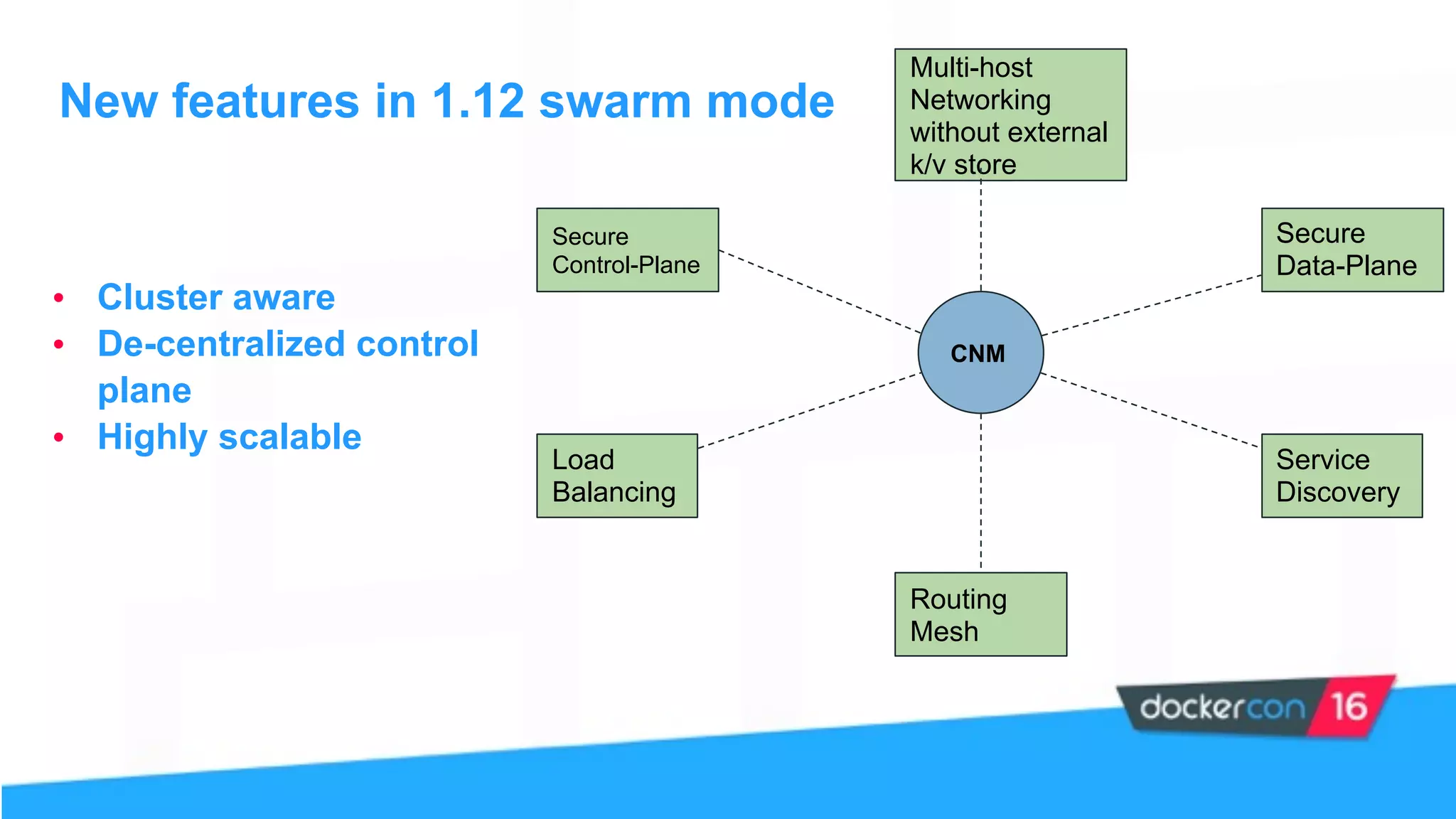

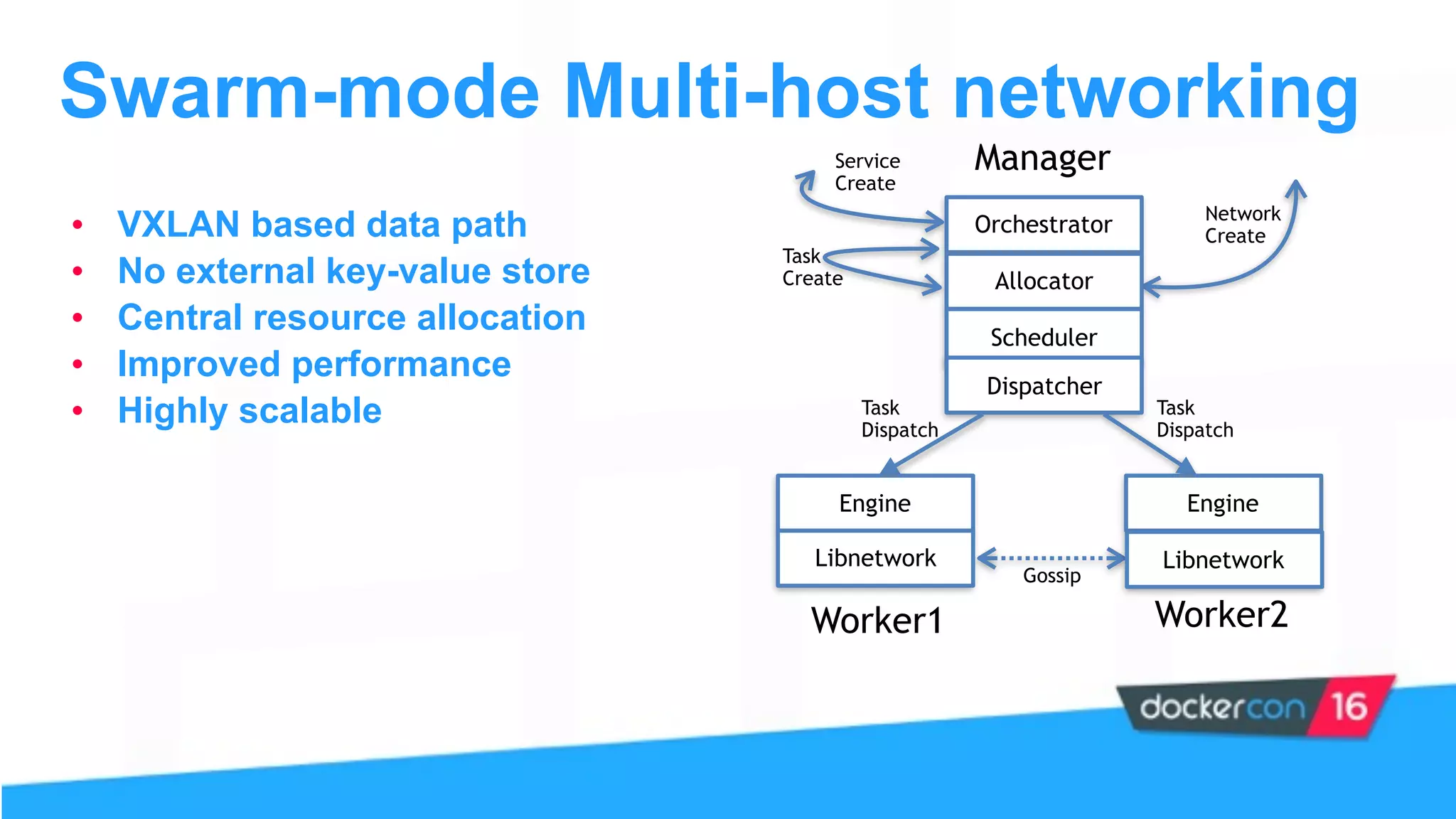

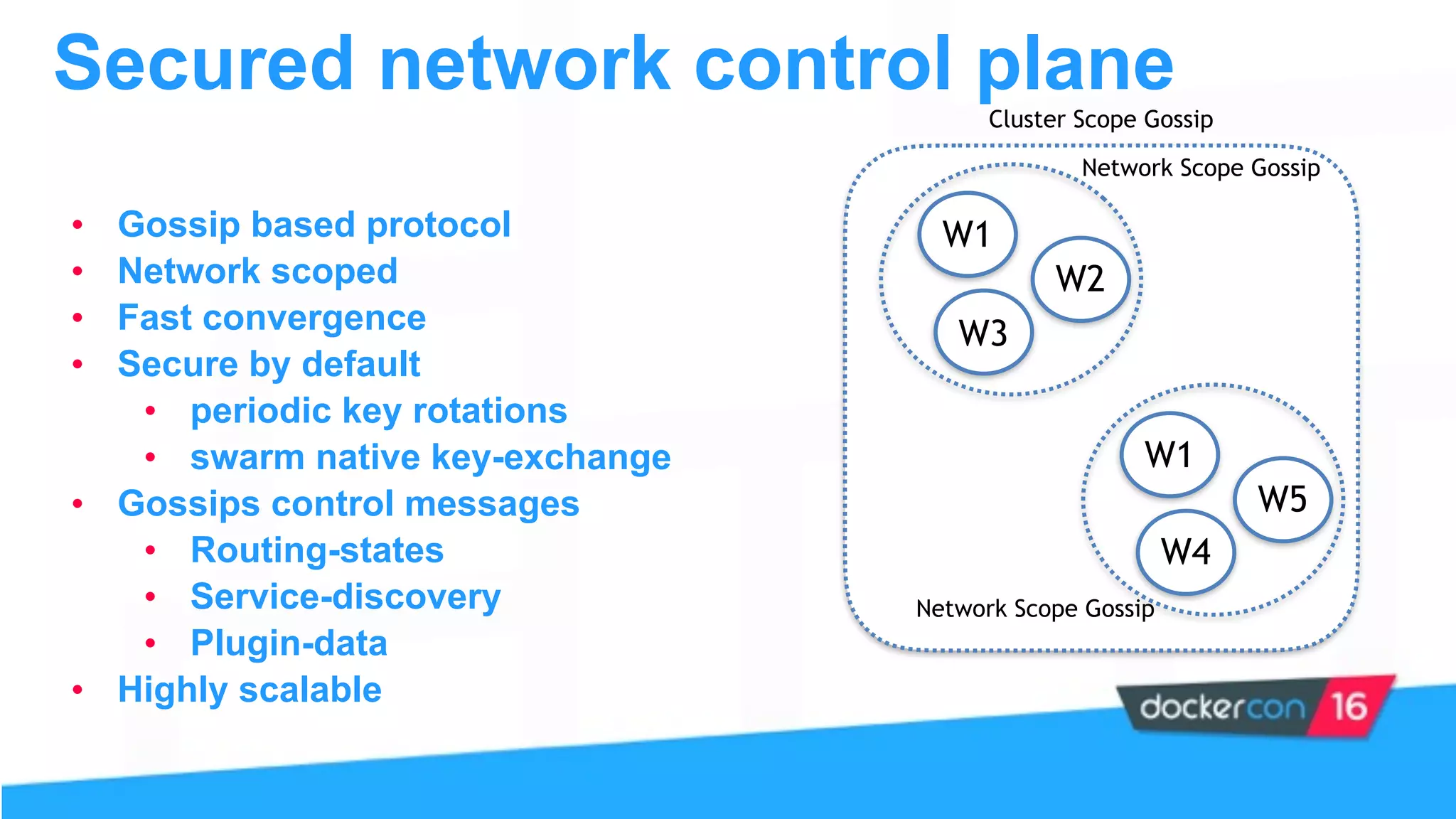

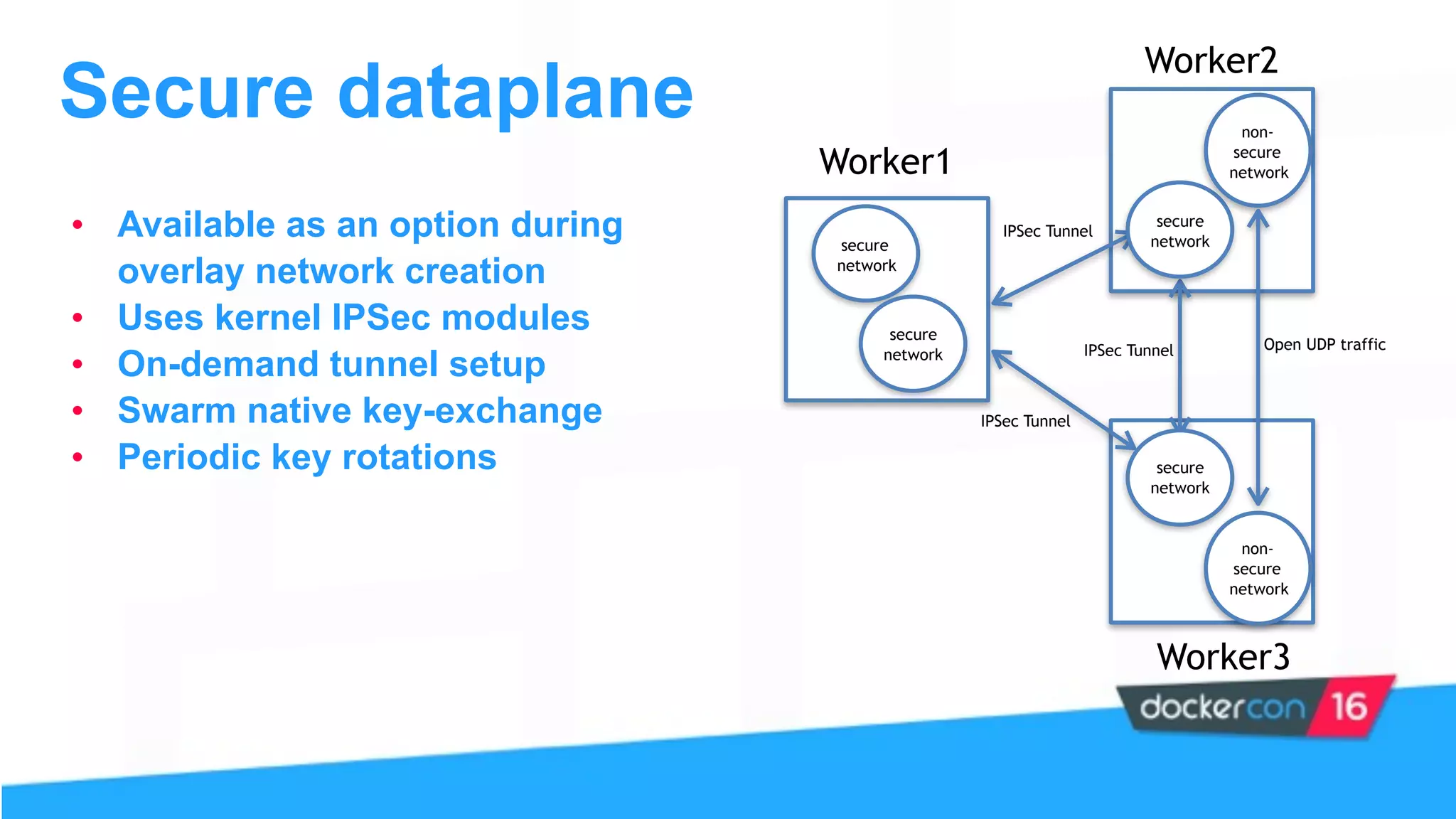

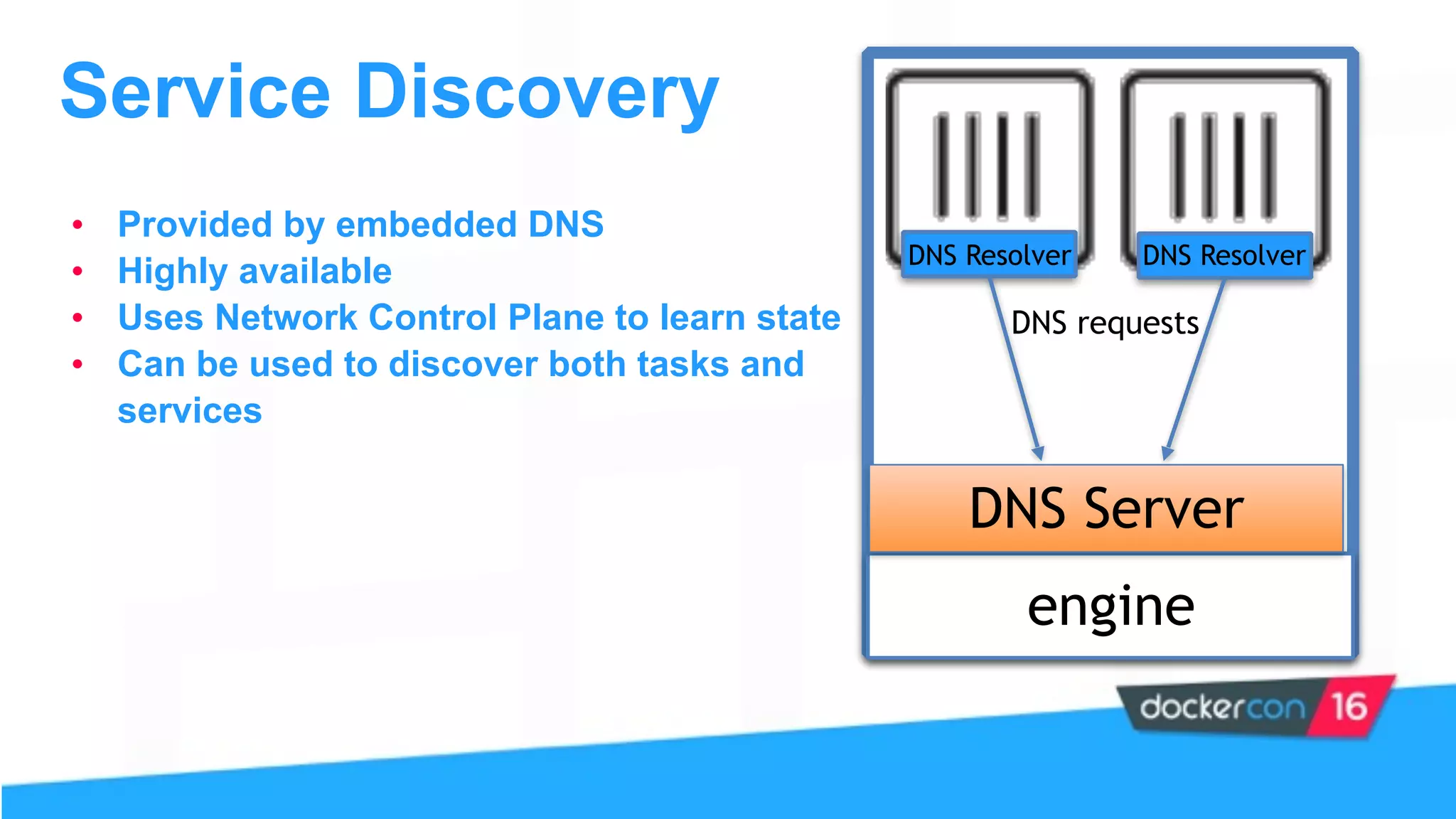

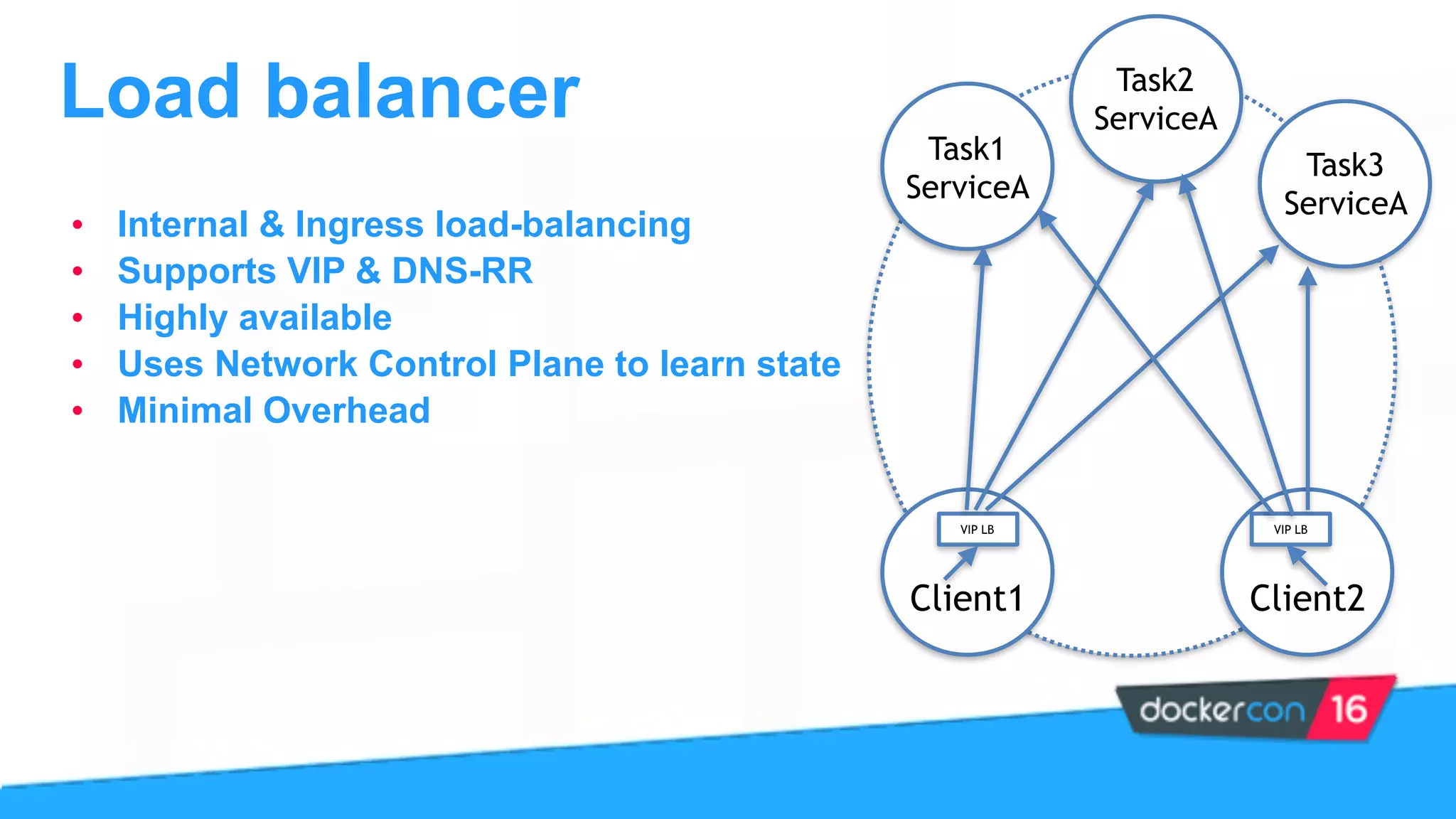

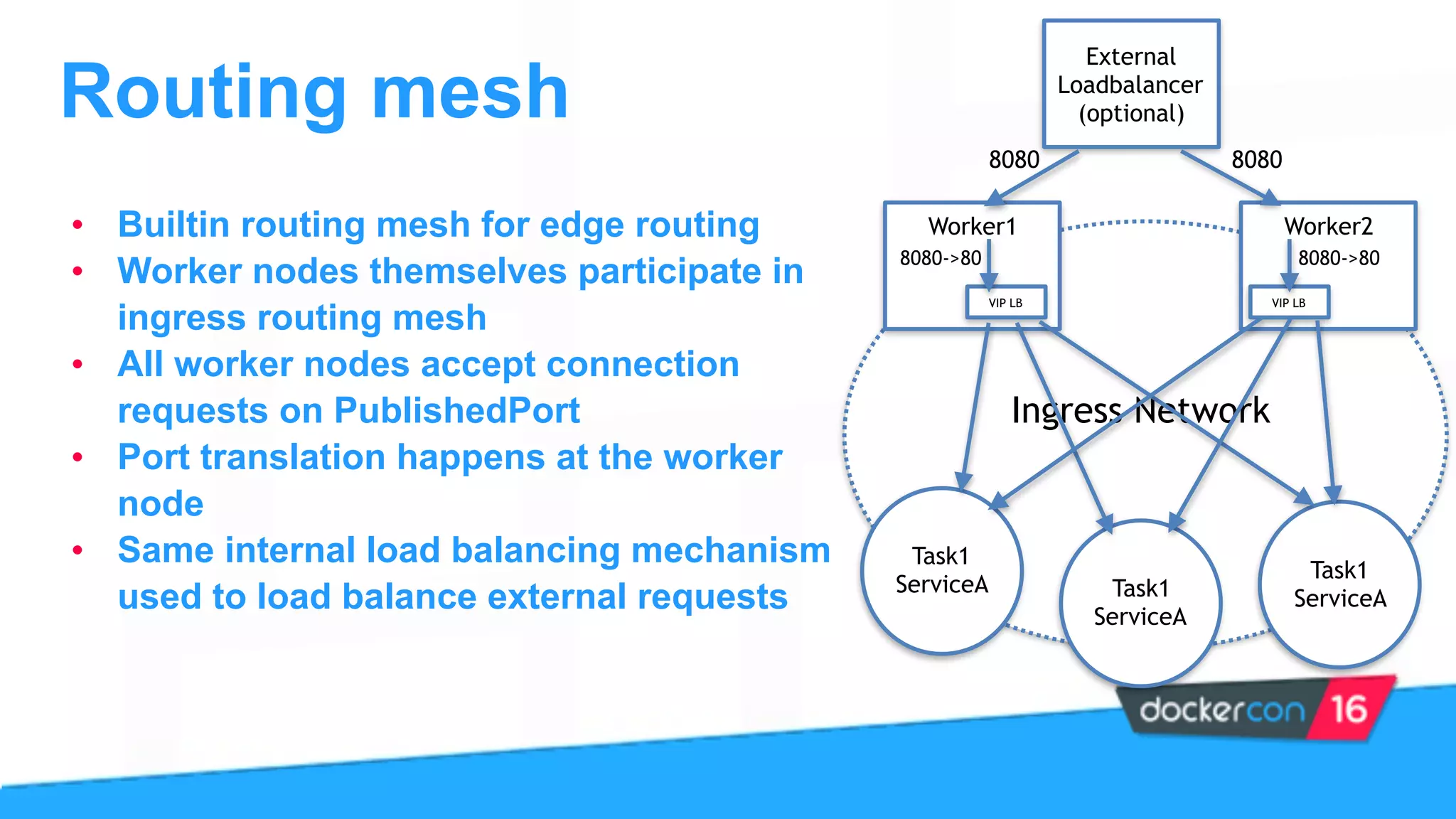

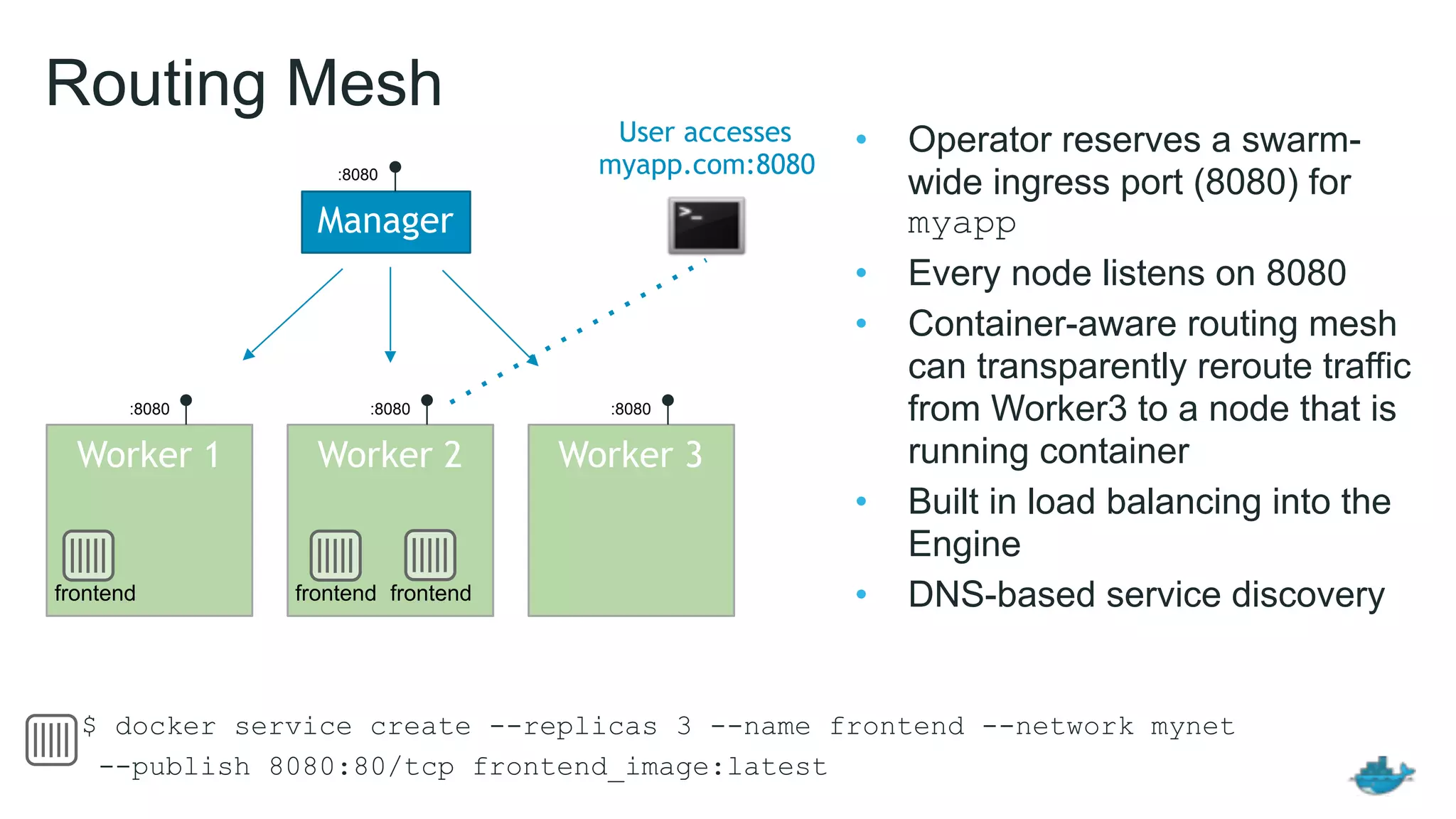

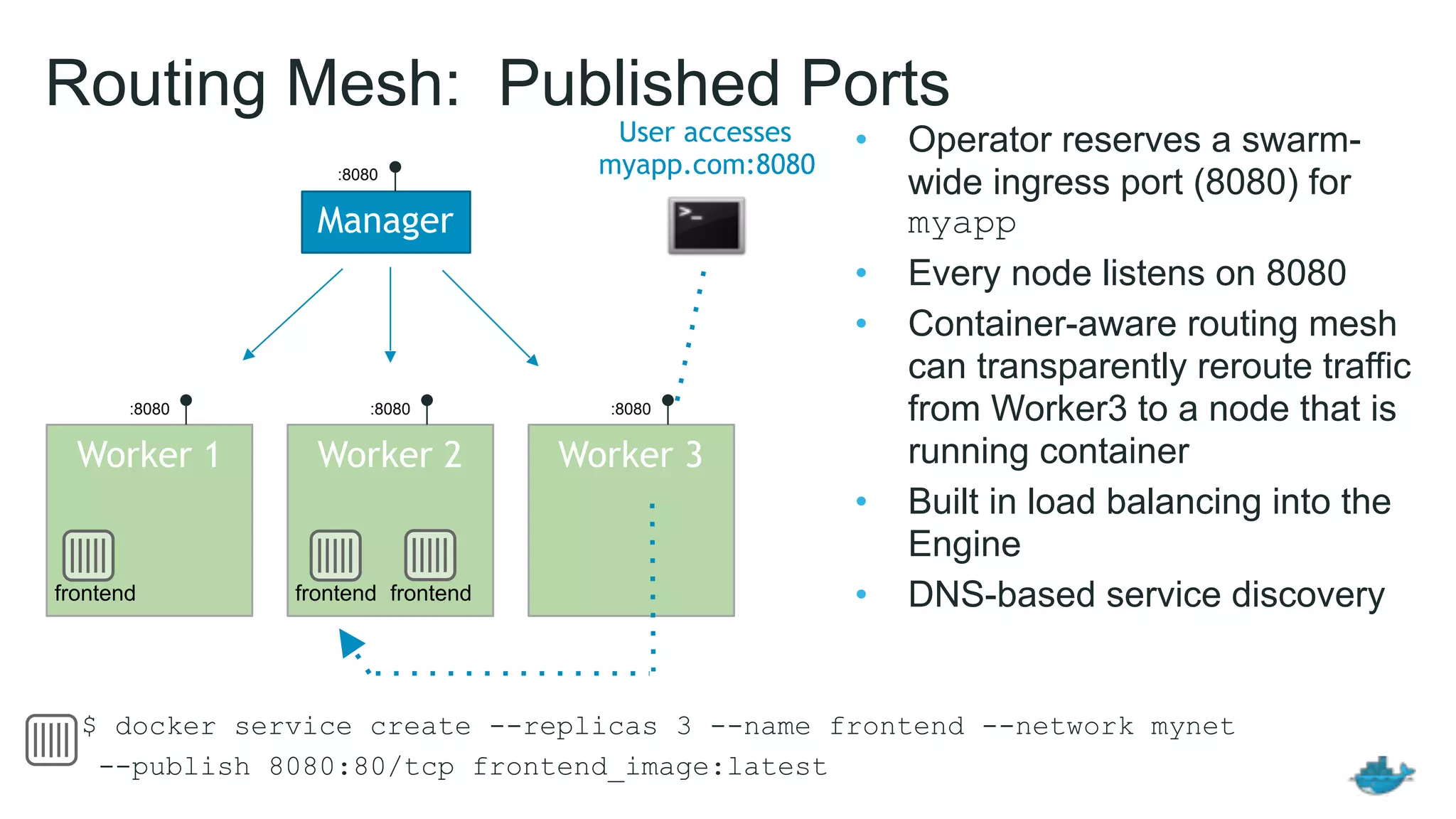

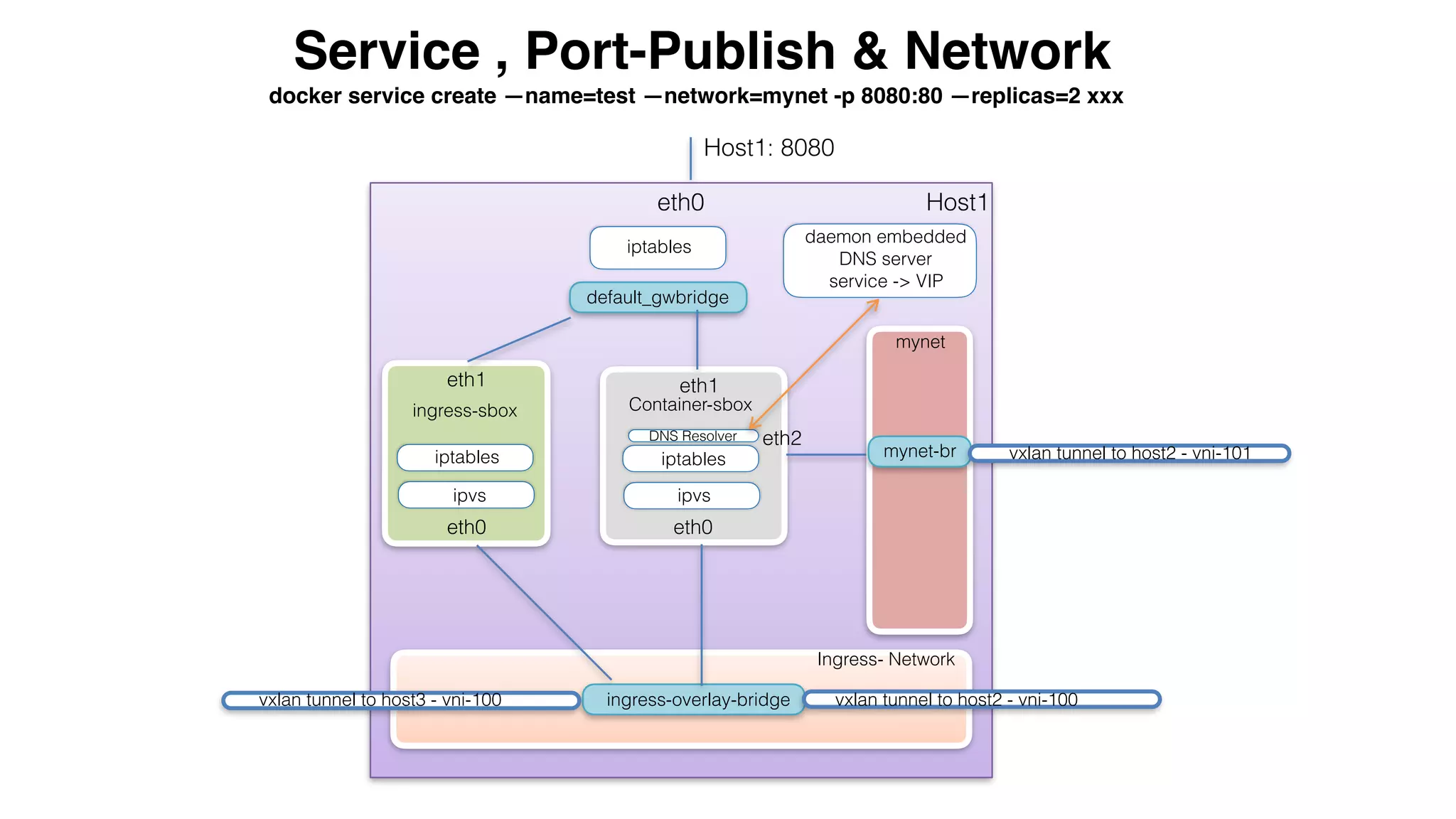

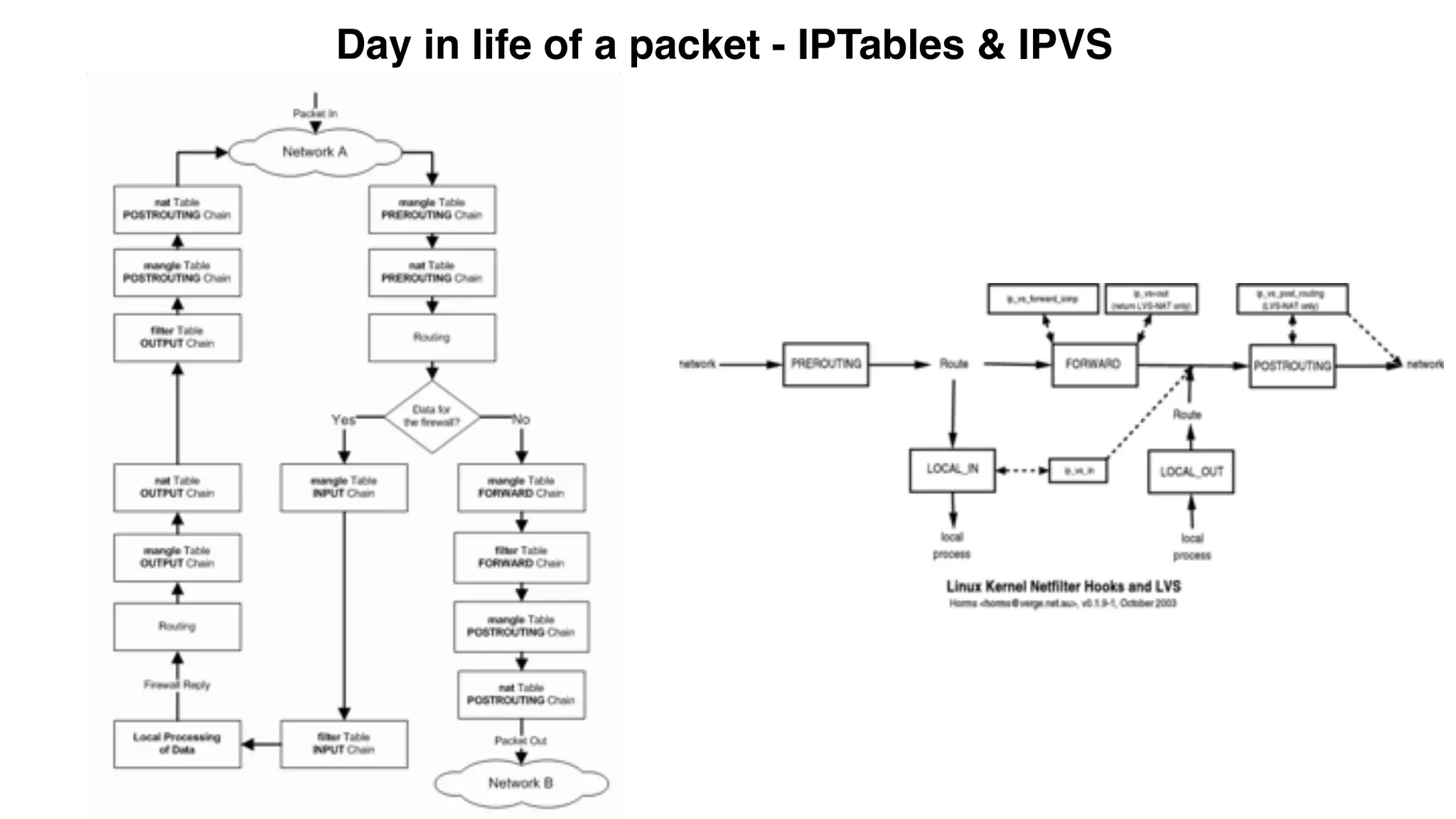

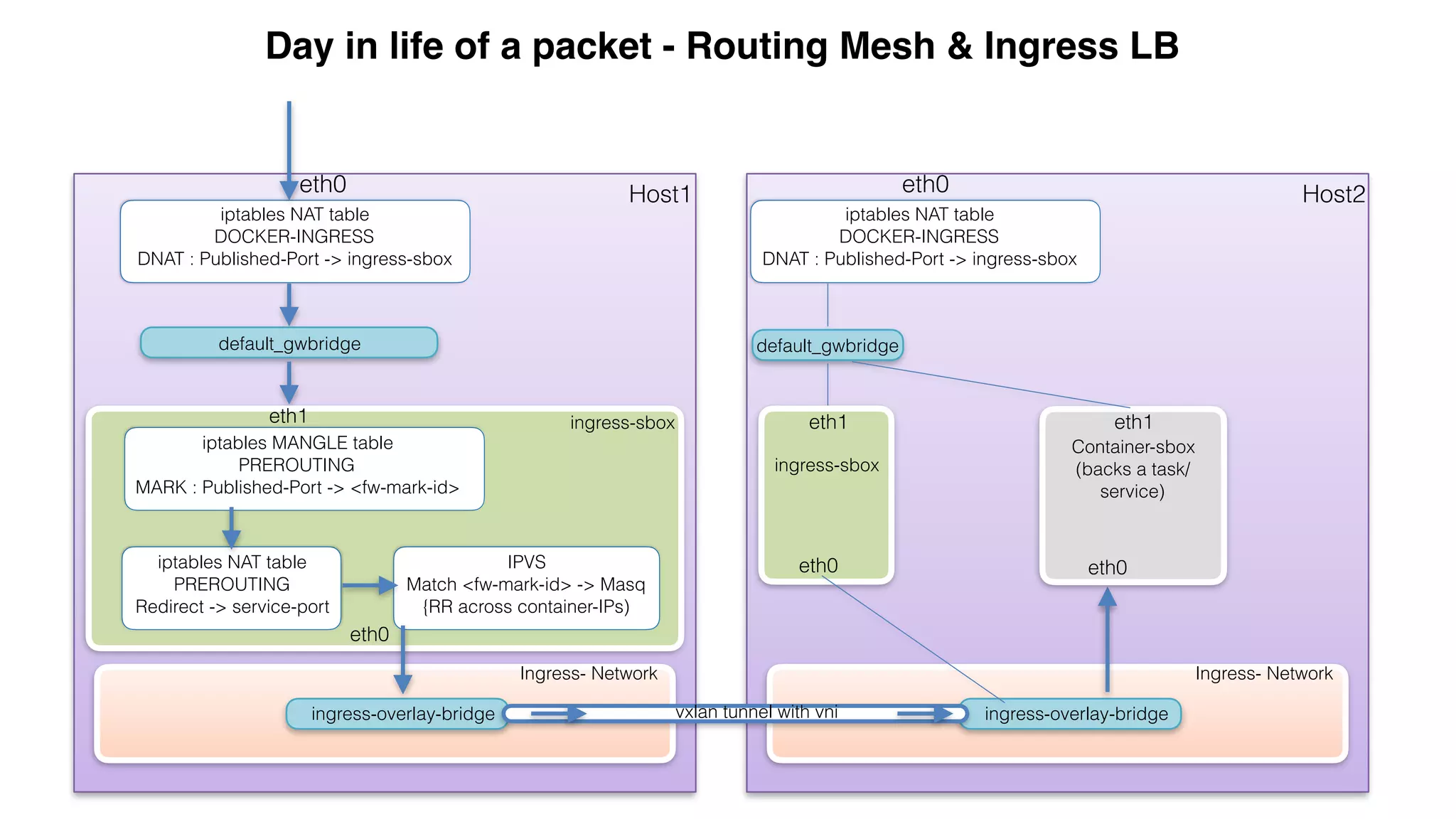

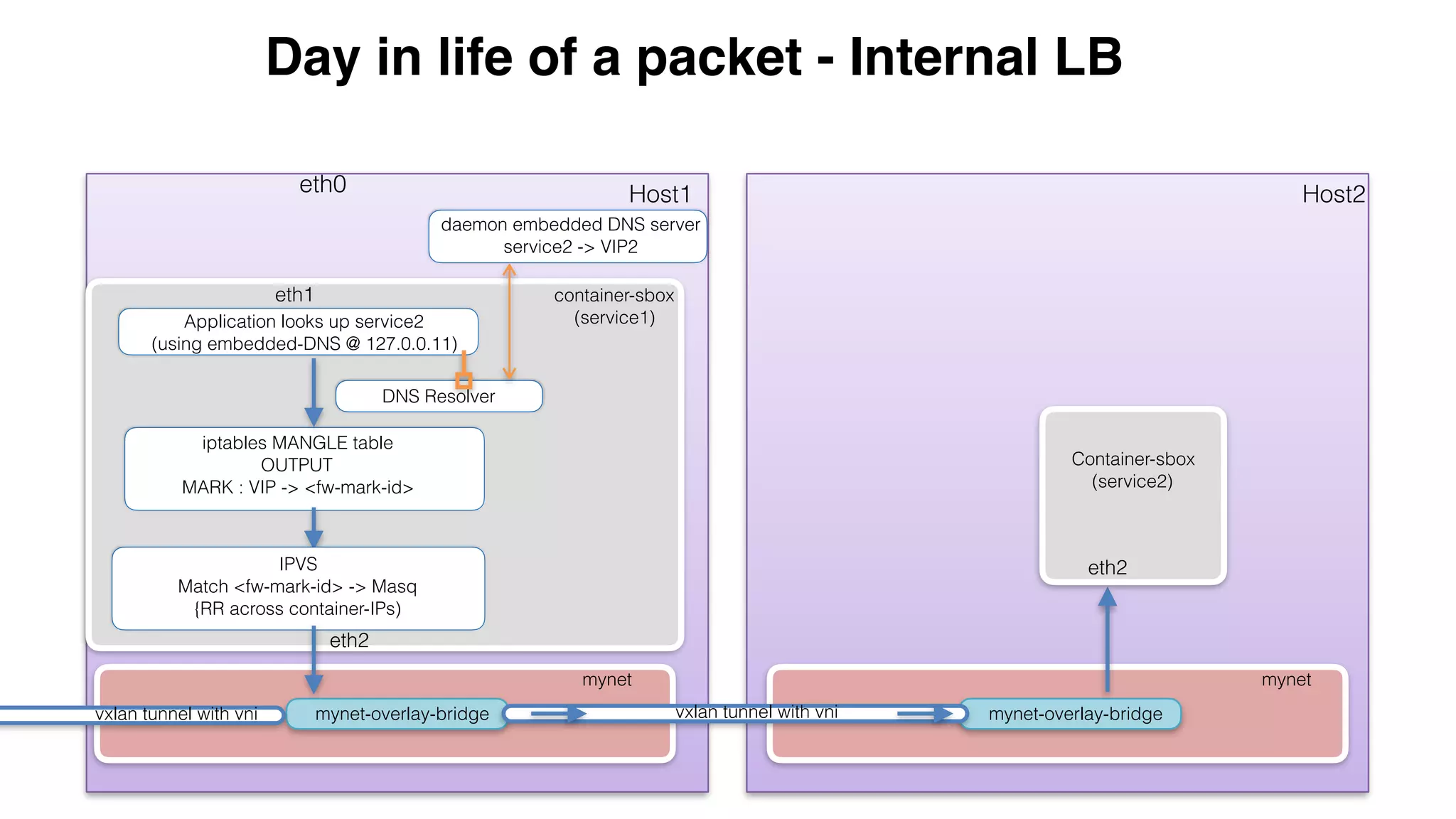

This document gives the overview and agenda for a deep-dive presentation on Docker networking. The presentation covers libnetwork, the Container Networking Model (CNM), multi-host networking, service discovery, and load balancing, along with features introduced in Docker 1.12 such as the routing mesh and secured control and data planes. The agenda walks through three use cases: the default bridge network, user-defined bridge networks, and overlay networks. It also covers network drivers and swarm-mode networking in Docker 1.12, including how the routing mesh and load balancing work.