The document details research on embedded sensing and computational behavior science, focusing on understanding human behavior through various datasets and technologies. It highlights the Sussex-Huawei locomotion and transportation dataset, outlining the dataset's collection process, sensor modalities, and machine learning applications for activity recognition. Additionally, it discusses advancements in gesture recognition, embedded AI, and the potential of deep learning in activity recognition, along with associated ethical considerations and research perspectives.

![Transportation & Locomotion Recognition with the SHL dataset

www.shl-dataset.org

[1] Gjoreski et al., The University of Sussex-Huawei locomotion and transportation dataset for multimodal analytics with mobile devices, IEEE Access, 2018](https://image.slidesharecdn.com/keynote-final-200327143601/75/Embedded-Sensing-and-Computational-Behaviour-Science-8-2048.jpg)

![Transportation & Locomotion Recognition with the SHL dataset

www.shl-dataset.org

[1] Gjoreski et al., The University of Sussex-Huawei locomotion and transportation dataset for multimodal analytics with mobile devices, IEEE Access, 2018

• 2812 hours, 17562 km

• 3 users

• 8 primary annotations

• 23 secondary annotations

• 11 sensor modalities

• 30 data channels

• Smart assistance

• Mobility recognition

• City-scale optimisation

• Well-being

• Road condition

• Traffic condition

• Novel localisation

• Multimodal sensor fusion

Applications](https://image.slidesharecdn.com/keynote-final-200327143601/75/Embedded-Sensing-and-Computational-Behaviour-Science-9-2048.jpg)

![Activity recognition pipeline

• 5 s / frame

• 3.95 million frames

[1] Roggen et al., The adARC pattern analysis architecture for adaptive human activity recognition systems, J. Ambient Intelligence and Humanized Computing 4(2), 2013

[2] Roggen et al., Opportunistic human activity and context recognition, IEEE Computer 46(2), 2013

[3] L. Wang et al., Enabling Reproducible Research in Sensor-Based Transportation Mode Recognition with the Sussex-Huawei Dataset. IEEE Access, 2019

• Feature extraction: 2724 features/frame

• Feature selection: 147 features/frame](https://image.slidesharecdn.com/keynote-final-200327143601/75/Embedded-Sensing-and-Computational-Behaviour-Science-10-2048.jpg)
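The framing, feature extraction and feature selection stages listed on this slide can be illustrated with a minimal sketch. It is not the pipeline from the cited papers: the 100 Hz sampling rate, the four statistics and the function names are assumptions chosen for illustration, and the name-based selection stands in for whatever selection method reduces 2724 features to 147.

```python
from statistics import mean, pstdev


def frame_signal(samples, sample_rate_hz, frame_seconds=5.0):
    """Split a 1-D signal into non-overlapping frames of frame_seconds each.

    Trailing samples that do not fill a whole frame are dropped.
    """
    frame_len = int(sample_rate_hz * frame_seconds)
    n_frames = len(samples) // frame_len
    return [samples[i * frame_len:(i + 1) * frame_len] for i in range(n_frames)]


def frame_features(frame):
    """Compute a few illustrative statistics for one frame (hypothetical feature set)."""
    return {
        "mean": mean(frame),
        "std": pstdev(frame),
        "min": min(frame),
        "max": max(frame),
    }


def select_features(features, keep):
    """Keep only the named features; a stand-in for a real feature-selection step."""
    return {name: features[name] for name in keep}


# Usage: 12 s of a synthetic signal at an assumed 100 Hz gives two 5 s frames.
signal = [float(i % 10) for i in range(1200)]
frames = frame_signal(signal, sample_rate_hz=100)
selected = [select_features(frame_features(f), keep=["mean", "std"]) for f in frames]
```

Each frame yields one feature vector, which is what a downstream classifier would label with one of the transportation modes.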

![1 - Still; 2 - Walk; 3 - Run;

4 - Bike; 5 - Car; 6 - Bus;

7 - Train; 8 - Subway

[1] Wang et al., Enabling Reproducible Research in Sensor-Based Transportation Mode Recognition with the Sussex-Huawei Dataset. IEEE Access, 2019

[2] Roggen et al., The adARC pattern analysis architecture for adaptive human activity recognition systems, J. Ambient Intelligence and Humanized Computing 4(2), 2013

[3] Roggen et al., Opportunistic human activity and context recognition, IEEE Computer 46(2), 2013

[4] Gjoreski et al., Unsupervised Online Activity Discovery Using Temporal Behaviour Assumption, ISWC, 2017

Activity recognition pipeline

• Automation

• Robustness: user-independent, placement-independent, …

• Power-performance trade-offs

• Enhanced pipelines: adaptive [2], opportunistic [3], lifelong learning [4]](https://image.slidesharecdn.com/keynote-final-200327143601/75/Embedded-Sensing-and-Computational-Behaviour-Science-11-2048.jpg)

![SHL Recognition Challenge

2018 – Singapore

• 21 teams

2019 – London

• 15 teams

[1] Wang et al., Summary of the Sussex-Huawei locomotion-transportation recognition challenge, Adjunct Proc. of Ubicomp, 2018

[2] Wang et al., Summary of the Sussex-Huawei locomotion-transportation recognition challenge 2019, Adjunct Proc. of Ubicomp, 2019](https://image.slidesharecdn.com/keynote-final-200327143601/75/Embedded-Sensing-and-Computational-Behaviour-Science-12-2048.jpg)

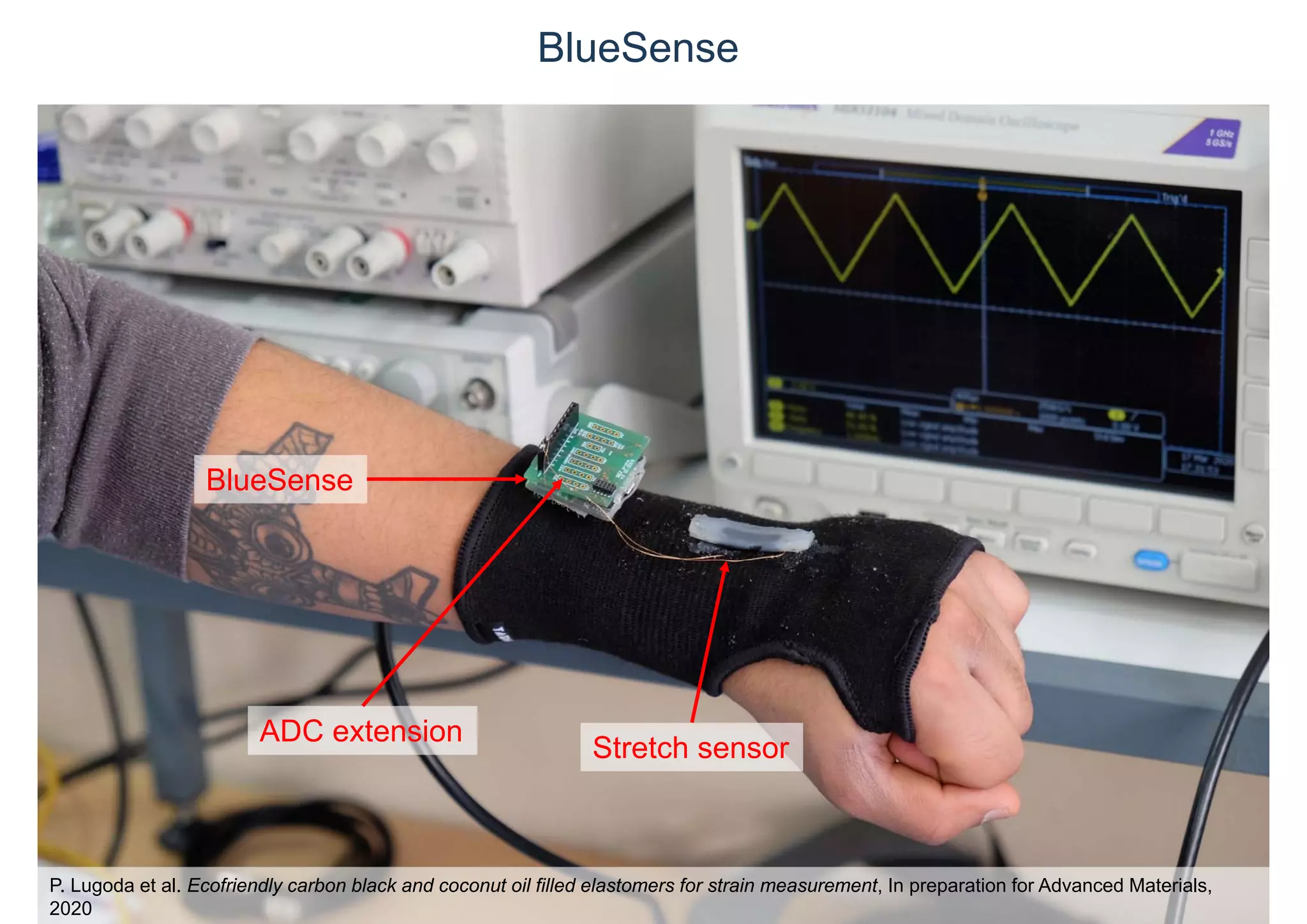

![Embedded Sensing Platforms

[1] Roggen et al., BlueSense - Designing an Extensible Platform for Wearable Motion Sensing, Sensor Research and IoT Applications, Proc. EWSN, 2018

BlueSense2 (AVR), 30mm x 30mm: 9DoF motion, 5 ppm RTC, fuel gauge, AVR 1284p, SDHC, expansion, USB, Bluetooth

BlueSense4 (ARM Cortex M4), 30mm x 30mm: microphone, SDHC, STM32L4, fuel gauge, USB, expansion, power, MPU, EEPROM, Bluetooth, DFU

• 1 kHz motion

• Microphone

• Multimodal

• Logging

• Streaming

• Extensible

Motion ADC

Sound](https://image.slidesharecdn.com/keynote-final-200327143601/75/Embedded-Sensing-and-Computational-Behaviour-Science-14-2048.jpg)

![BlueSense2

[1] Roggen et al., BlueSense - Designing an Extensible Platform for Wearable Motion Sensing, Sensor Research and IoT Applications, Proc. EWSN, 2018

• RTC for synchronous recordings

• True hardware off

• RTC wakeup

• Built-in power sense](https://image.slidesharecdn.com/keynote-final-200327143601/75/Embedded-Sensing-and-Computational-Behaviour-Science-15-2048.jpg)

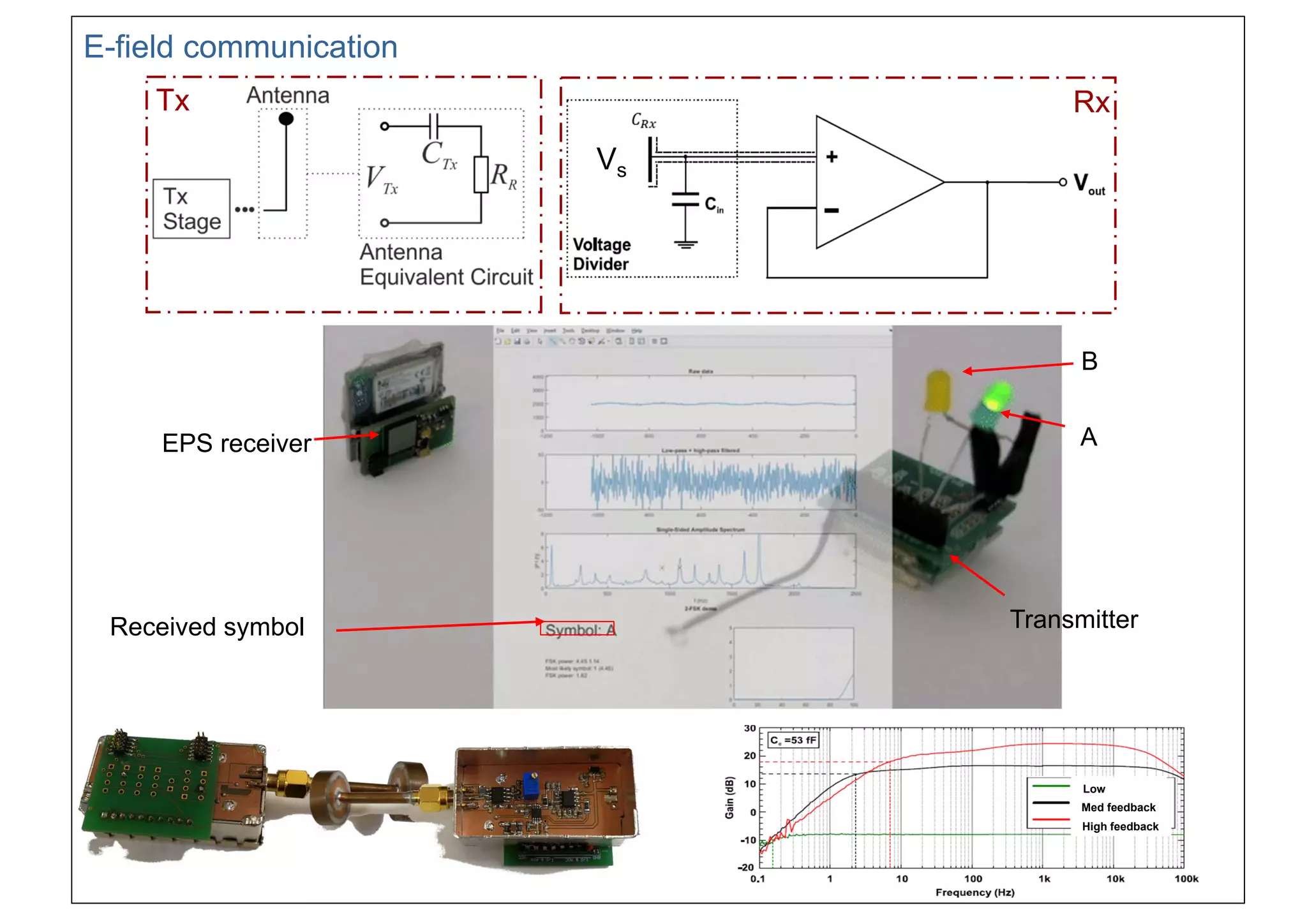

![EPS: Electric Potential Sensing

• Remote electric potential sensing

– Small voltages: e.g. non-contact ECG [2]

– 50/60Hz field

• Capacitively coupled

• Available as an IC [1]

[1] Plessey Semiconductors. PS25254/55 EPIC Ultra High Impedance ECG Sensor. Issue 1

[2] Prance et al., Remote detection of human electrophysiological signals using the electric potential sensor, Applied Physics Letters, 2008](https://image.slidesharecdn.com/keynote-final-200327143601/75/Embedded-Sensing-and-Computational-Behaviour-Science-17-2048.jpg)

![[1] Roggen et al., Electric field phase sensing for wearable orientation and localisation applications, ISWC, 2016

Fingerprinting for localization; relative orientation sensing](https://image.slidesharecdn.com/keynote-final-200327143601/75/Embedded-Sensing-and-Computational-Behaviour-Science-18-2048.jpg)

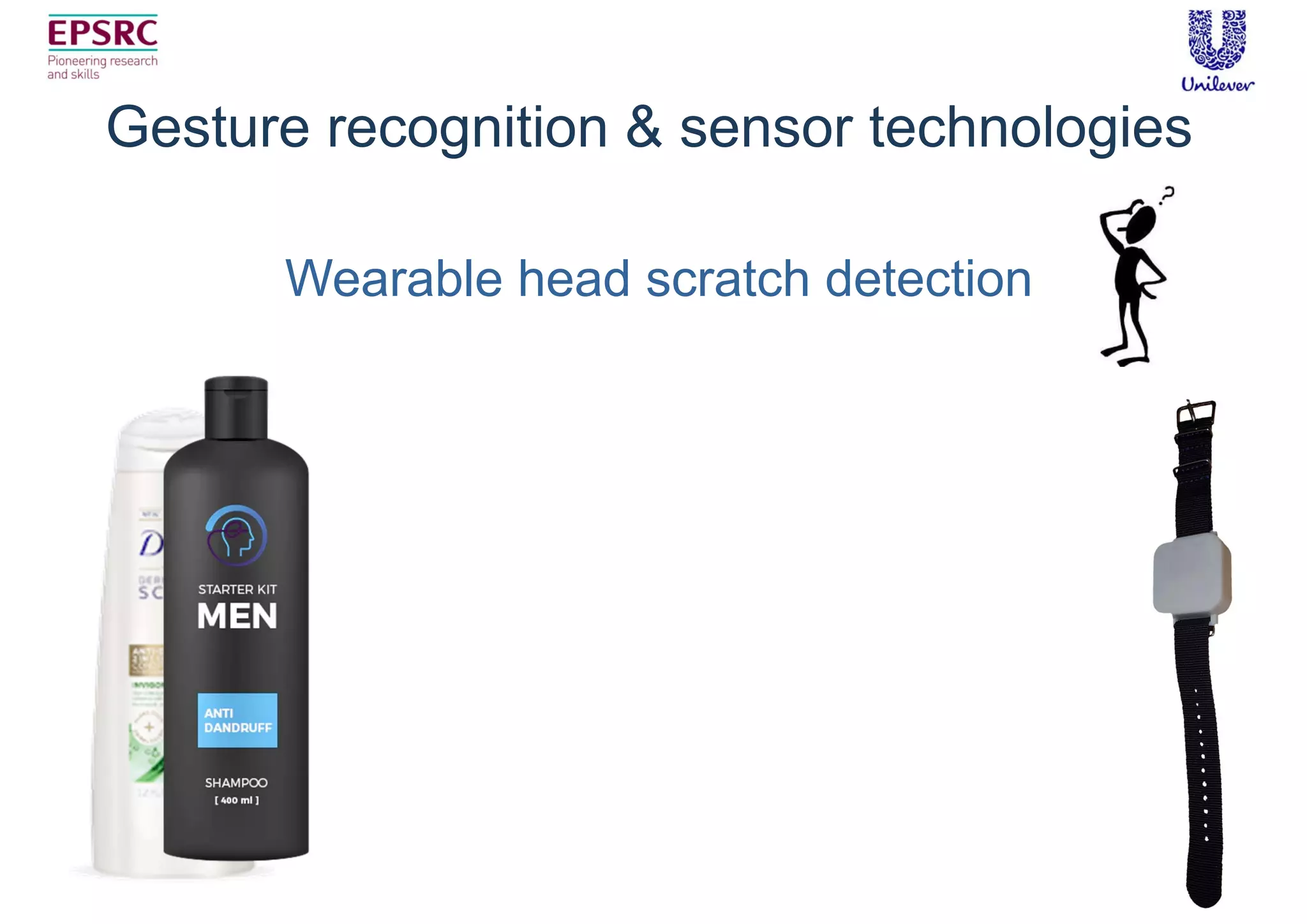

![IMU + EPS

IMU

[1] Pour Yazdan et al., Wearable electric potential sensing: a new modality sensing hair touch and restless leg movement, Proc. Ubicomp, 2016

[2] Jocys et al., Multimodal fusion of IMUs and EPS body worn sensors for scratch recognition. Accepted in Proc. of Pervasive Health, 2019

EPSxyz coordinates

Single IMU: -15pp

+8pp w/ multimodal fusion](https://image.slidesharecdn.com/keynote-final-200327143601/75/Embedded-Sensing-and-Computational-Behaviour-Science-20-2048.jpg)

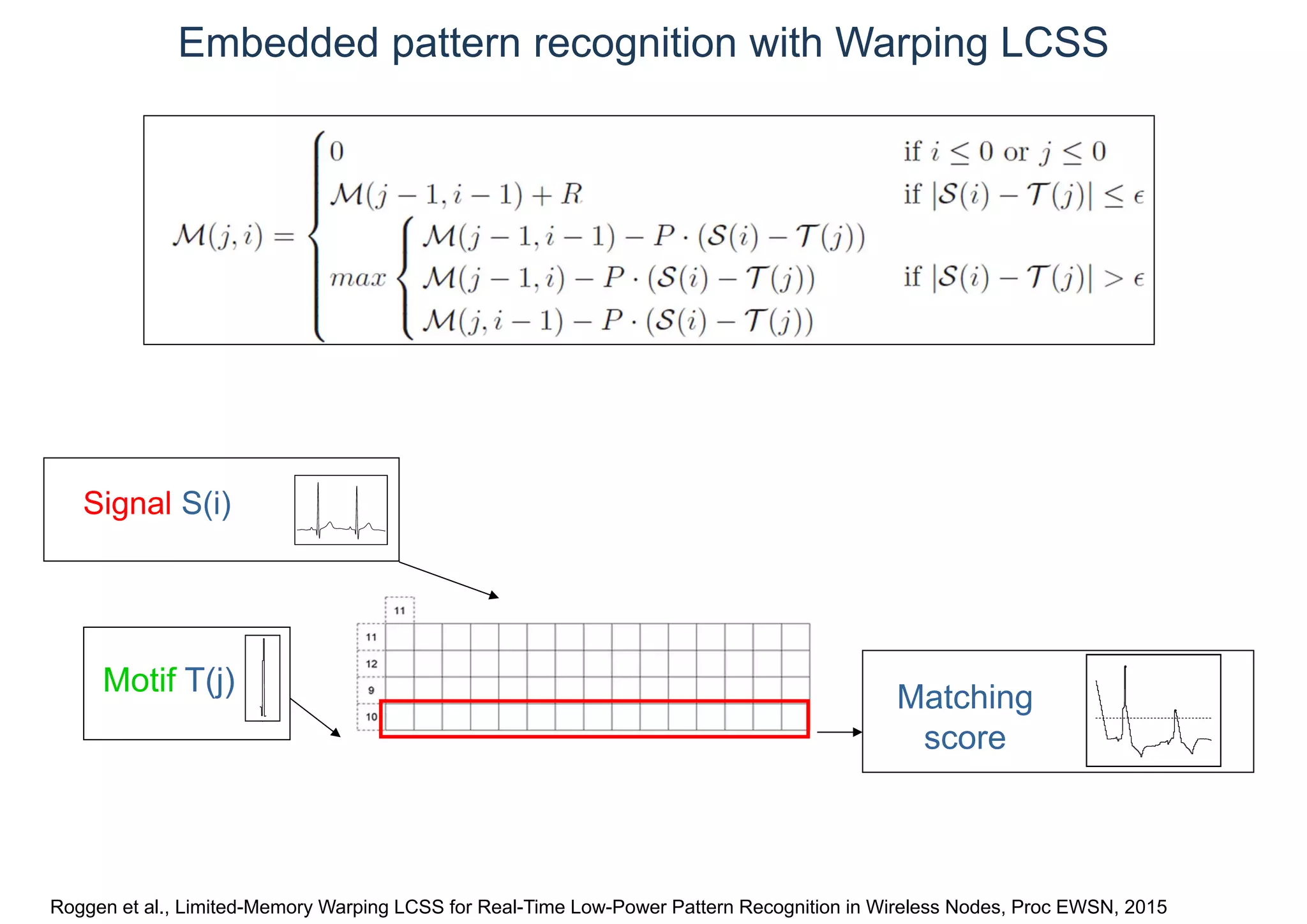

![Sports skill assessment [1,2]

Serve type 1 Serve type 2

[1] Roggen et al., Limited-Memory Warping LCSS for Real-Time Low-Power Pattern Recognition in Wireless Nodes, Proc. EWSN 2015

[2] Ponce Cuspinera et al., Beach Volleyball serve type recognition, Proc ISWC, 2016

[3] Ciliberto et al., Complex Human Gestures Encoding from Wearable Inertial Sensors for Activity Recognition, Proc. EWSN, 2018

](https://image.slidesharecdn.com/keynote-final-200327143601/75/Embedded-Sensing-and-Computational-Behaviour-Science-24-2048.jpg)

![LM-WLCSS training

• Evolutionary optimisation [1]

• CUDA acceleration [2]

[1] Ciliberto et al., WLCSSLearn: Learning Algorithm for Template Matching-based Gesture Recognition Systems, Proc. Joint 8th International Conference on Informatics, Electronics & Vision (ICIEV) and 3rd International Conference on Imaging, Vision & Pattern Recognition (icIVPR), 2019

[2] Ciliberto et al., WLCSSCuda: A CUDA Accelerated Template Matching Method for Gesture Recognition, Proc. ISWC, 2019](https://image.slidesharecdn.com/keynote-final-200327143601/75/Embedded-Sensing-and-Computational-Behaviour-Science-25-2048.jpg)

![Embedded pattern recognition with Warping LCSS

• High-speed: 67 motifs (AVR, 8 mW) and 140 motifs (M4, 10 mW) at 8 MHz

• Low-power: single gesture spotter (AVR) w/ 135 µW

• Suitable for silicon implementation

– Integer representation

– Operations: add, shift, compare

• Initial VHDL / FPGA implementation

[1] Roggen et al., Limited-Memory Warping LCSS for Real-Time Low-Power Pattern Recognition in Wireless Nodes, Proc EWSN, 2015

[2] Ciliberto et al., WLCSSLearn: Learning Algorithm for Template Matching-based Gesture Recognition Systems, Proc. Joint 8th International Conference on Informatics, Electronics & Vision (ICIEV) and 3rd International Conference on Imaging, Vision & Pattern Recognition (icIVPR), 2019

[3] Ciliberto et al., WLCSSCuda: A CUDA Accelerated Template Matching Method for Gesture Recognition, Proc. ISWC, 2019

ARM M4](https://image.slidesharecdn.com/keynote-final-200327143601/75/Embedded-Sensing-and-Computational-Behaviour-Science-26-2048.jpg)
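The slide's points about integer representation and add/shift/compare operations can be illustrated with a simplified, streaming warping-LCSS style recurrence. This is a sketch under assumptions, not the LM-WLCSS implementation from the cited papers: the function name, the parameter values and the use of a left shift to scale the penalty are illustrative choices. Only two score columns are kept in memory, so storage grows with the template length rather than the stream length.

```python
def wlcss_scores(template, stream, epsilon=1, reward=8, penalty_shift=0):
    """Return the template matching score after each incoming sample.

    template, stream: sequences of integers (e.g. quantised sensor symbols).
    epsilon: tolerance below which a sample counts as matching a template element.
    reward: score added on a match.
    penalty_shift: a mismatch costs (distance << penalty_shift); illustrative choice.
    """
    m = len(template)
    prev = [0] * (m + 1)  # scores for the previous stream sample
    scores = []
    for sample in stream:
        cur = [0] * (m + 1)  # cur[0] stays 0: empty template prefix
        for j in range(1, m + 1):
            dist = abs(sample - template[j - 1])
            if dist <= epsilon:
                # Match: extend the diagonal alignment.
                cur[j] = prev[j - 1] + reward
            else:
                # Mismatch: take the best neighbour and subtract a distance-based penalty.
                best = max(prev[j - 1], prev[j], cur[j - 1])
                cur[j] = best - (dist << penalty_shift)
        scores.append(cur[m])
        prev = cur
    return scores


# Usage: a stream identical to the template reaches the maximum score.
template = [0, 5, 10, 5, 0]
scores = wlcss_scores(template, template)
print(scores[-1])  # 40 (5 matched samples x reward 8)
```

A gesture spotter would compare each score against a threshold learned during training and report a detection when the score exceeds it.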

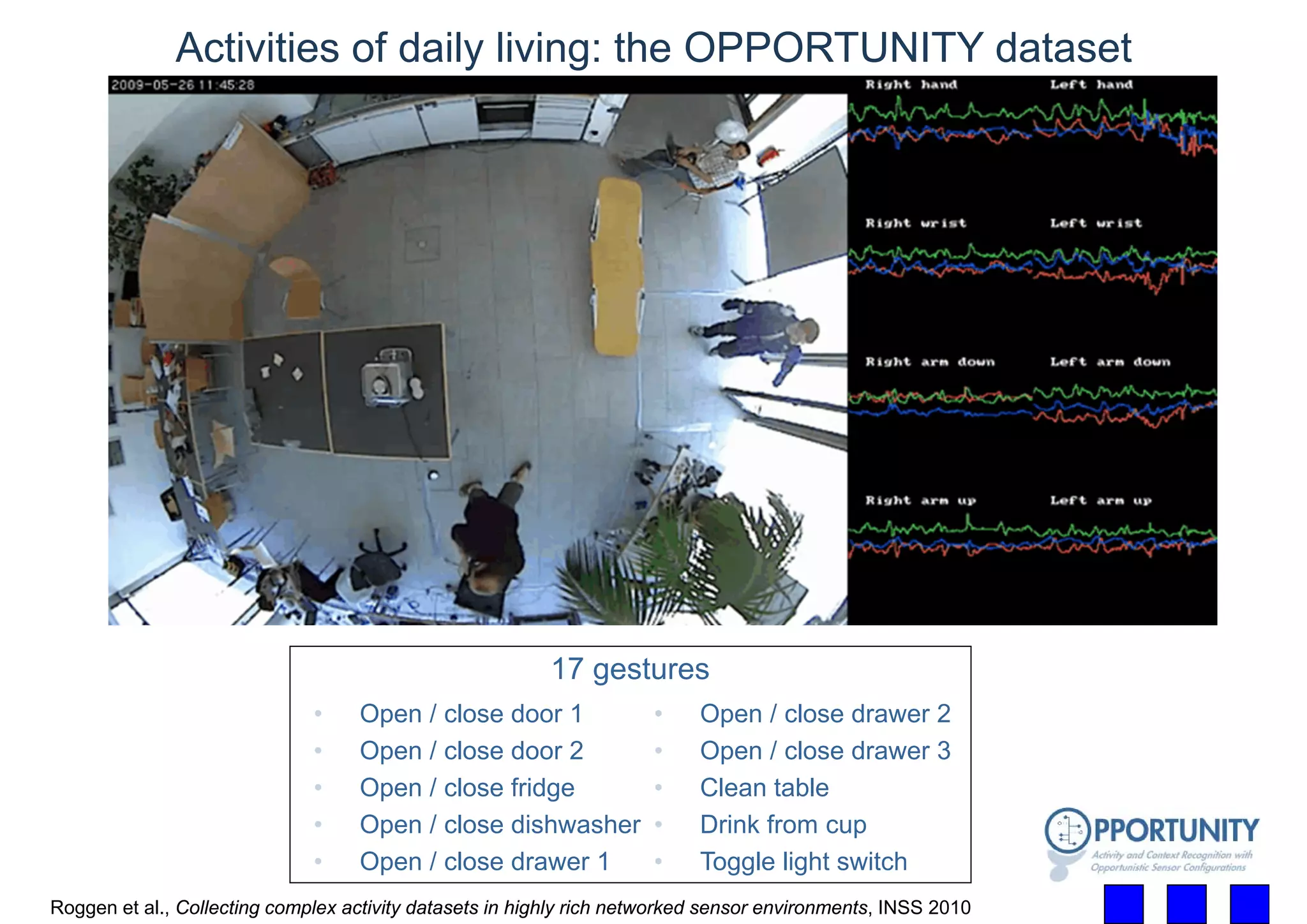

![Is Deep Learning Useful for Activity Recognition?

• Open / close door 1

• Open / close door 2

• Open / close fridge

• Open / close dishwasher

• Open / close drawer 1

• Open / close drawer 2

• Open / close drawer 3

• Clean table

• Drink from cup

• Toggle light switch

[1] Ordonez Morales et al., Deep LSTM recurrent neural networks for multimodal wearable activity recognition, Sensors, 2016

[2] Chavarriaga et al., The Opportunity challenge: A benchmark database for on-body sensor-based activity recognition, Pattern Recognition Letters, 2013

DeepConvLSTM [1]: 0.86 F1 score, +9pp over competing approaches [2]](https://image.slidesharecdn.com/keynote-final-200327143601/75/Embedded-Sensing-and-Computational-Behaviour-Science-29-2048.jpg)
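For readers unfamiliar with the metric quoted above, the following sketch shows how a per-class F1 score and a support-weighted average can be computed. The slide does not state which F1 variant the 0.86 refers to, so the weighted average here is an assumption, and the labels and predictions are made-up toy data.

```python
from collections import Counter


def f1_per_class(y_true, y_pred):
    """F1 = 2*TP / (2*TP + FP + FN), computed separately for every label."""
    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
        denom = 2 * tp + fp + fn
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores


def weighted_f1(y_true, y_pred):
    """Average the per-class F1 scores, weighting each class by its true frequency."""
    support = Counter(y_true)
    scores = f1_per_class(y_true, y_pred)
    return sum(scores[label] * n for label, n in support.items()) / len(y_true)


# Usage with toy labels (not data from the Opportunity benchmark).
y_true = ["door", "door", "fridge", "fridge"]
y_pred = ["door", "fridge", "fridge", "fridge"]
print(f1_per_class(y_true, y_pred))
print(weighted_f1(y_true, y_pred))
```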

![Yes, Deep Learning is Useful for Activity Recognition

Architecture [1]

Architecture optimisation

[1] Ordonez Morales et al., Deep LSTM recurrent neural networks for multimodal wearable activity recognition, Sensors, 2016

[2] Ordonez Morales et al., Deep convolutional feature transfer across mobile activity recognition domains, sensor modalities and locations, ISWC, 2016

Kernel reuse [2]

17% training time reduction

Generic kernels for HAR

Power-performance trade-offs

(Weight quantization / LUT / ablation / TPU)](https://image.slidesharecdn.com/keynote-final-200327143601/75/Embedded-Sensing-and-Computational-Behaviour-Science-30-2048.jpg)
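Weight quantization, one of the power-performance levers named on this slide, can be sketched as follows. This is a generic symmetric 8-bit scheme written for illustration. The slide does not specify the quantization method, so the scheme, the function names and the example weights are all assumptions.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] with one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


# Usage: the reconstruction error of each weight is at most about half a quantization step.
weights = [0.8, -1.0, 0.3, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Storing 8-bit integers instead of 32-bit floats cuts weight memory by a factor of four, at the cost of the bounded rounding error computed above.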